1. orchestrator feature demo:

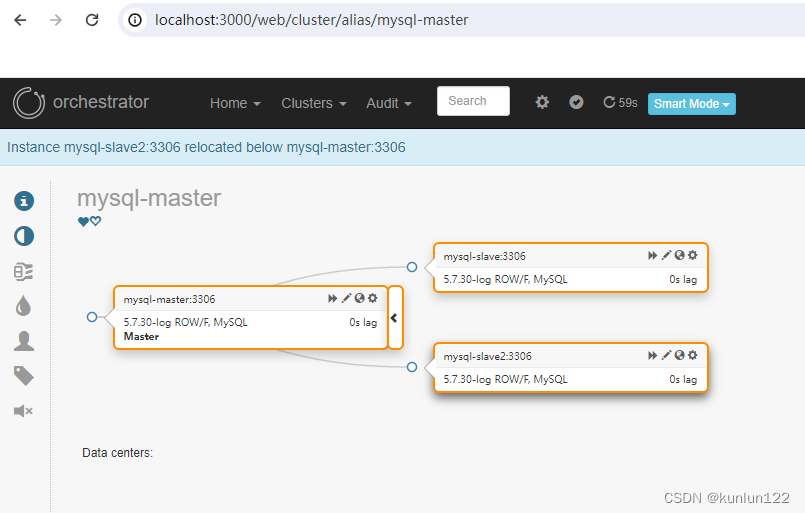

1.1 Multi-level cascading replication:

1.2 Master-replica switchover:

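After the switchover succeeds, the old master is shown in red. Open its configuration page and click "start replication" so that it reconnects to the new master.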

1.3 Master failure: a replica is automatically promoted to new master

2. Setting up MySQL master-replica replication

Reference: https://www.jb51.net/server/3105779cp.htm

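The master's my.cnf is as follows:

```ini
[mysqld]
log-bin=mysql-bin
server-id=1
report_host=172.22.0.103
log-slave-updates=1
# Enable GTID mode
gtid_mode=ON
# Enforce GTID consistency
enforce_gtid_consistency=ON
# Store master info in a table
master_info_repository=TABLE
# Store relay log info in a table
relay_log_info_repository=TABLE
# Use ROW binlog format
binlog_format=ROW
```

For the other instances, only server-id (which must be unique per instance) and report_host (the instance's own IP) need to change.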
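Only server-id and report_host change between instances, so all three files can be rendered from one template. The Python sketch below assumes the mysql1/mysql2/mysql3 directory layout and the IPs from the compose file. Server-ids 2 and 3 for the replicas are an assumption.

```python
from pathlib import Path

# Settings shared by every instance; only server-id and report_host vary.
TEMPLATE = """[mysqld]
log-bin=mysql-bin
server-id={server_id}
report_host={report_host}
log-slave-updates=1
gtid_mode=ON
enforce_gtid_consistency=ON
master_info_repository=TABLE
relay_log_info_repository=TABLE
binlog_format=ROW
"""

# (data directory, server-id, report_host). The IPs match ipv4_address in the
# compose file; server-ids 2 and 3 for the replicas are an assumption.
NODES = [
    ("mysql1", 1, "172.22.0.103"),
    ("mysql2", 2, "172.22.0.104"),
    ("mysql3", 3, "172.22.0.105"),
]


def render_my_cnf(server_id: int, report_host: str) -> str:
    """Return the my.cnf text for one instance."""
    return TEMPLATE.format(server_id=server_id, report_host=report_host)


def write_all(base: Path) -> None:
    """Write <base>/<dir>/conf/my.cnf for every node, matching the compose volumes."""
    ids = [server_id for _, server_id, _ in NODES]
    if len(set(ids)) != len(ids):
        raise ValueError("server-id must be unique per instance")
    for directory, server_id, host in NODES:
        conf_dir = base / directory / "conf"
        conf_dir.mkdir(parents=True, exist_ok=True)
        (conf_dir / "my.cnf").write_text(render_my_cnf(server_id, host))
```

Calling `write_all(Path("."))` from the directory that holds the compose file produces the three files that the volumes mount.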

The docker compose file is as follows:

```yaml
version: "3"
services:
  mysql-master:
    container_name: mysql-master
    hostname: mysql-master
    image: mysql:5.7.30
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: test123456
    ports:
      - "3307:3306"
    networks:
      mysql-network:
        ipv4_address: 172.22.0.103
    volumes:
      - ./mysql1/conf/my.cnf:/etc/my.cnf
      - ./mysql1/data:/var/lib/mysql
  mysql-slave:
    container_name: mysql-slave
    hostname: mysql-slave
    image: mysql:5.7.30
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: test123456
    ports:
      - "3308:3306"
    networks:
      mysql-network:
        ipv4_address: 172.22.0.104
    volumes:
      - ./mysql2/conf/my.cnf:/etc/my.cnf
      - ./mysql2/data:/var/lib/mysql
  mysql-slave2:
    container_name: mysql-slave2
    hostname: mysql-slave2
    image: mysql:5.7.30
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: test123456
    ports:
      - "3309:3306"
    networks:
      mysql-network:
        ipv4_address: 172.22.0.105
    volumes:
      - ./mysql3/conf/my.cnf:/etc/my.cnf
      - ./mysql3/data:/var/lib/mysql
networks:
  mysql-network:
    driver: bridge
    ipam:
      config:
        - subnet: 172.22.0.0/16
```
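Before running `docker compose up`, you can sanity-check the static addressing. Every ipv4_address must fall inside the ipam subnet, and both the IPs and the published host ports must be unique. The Python sketch below uses a SERVICES dict copied by hand from the YAML (no YAML parser is assumed). The gateway check assumes Docker's default behavior of giving the subnet's first address to the bridge gateway.

```python
import ipaddress

SUBNET = "172.22.0.0/16"

# Hand-copied from the YAML: static IP and published host port per service.
SERVICES = {
    "mysql-master": {"ip": "172.22.0.103", "host_port": 3307},
    "mysql-slave": {"ip": "172.22.0.104", "host_port": 3308},
    "mysql-slave2": {"ip": "172.22.0.105", "host_port": 3309},
}


def check_addressing(services: dict, subnet: str) -> list:
    """Return a list of problems; an empty list means the layout is consistent."""
    net = ipaddress.ip_network(subnet)
    gateway = next(net.hosts())  # assumption: Docker's default bridge gateway
    problems = []
    ip_owner, port_owner = {}, {}
    for name, svc in services.items():
        ip = ipaddress.ip_address(svc["ip"])
        if ip not in net:
            problems.append(f"{name}: {ip} is outside {net}")
        elif ip == gateway:
            problems.append(f"{name}: {ip} is normally taken by the gateway")
        if ip in ip_owner:
            problems.append(f"{name}: IP {ip} already used by {ip_owner[ip]}")
        else:
            ip_owner[ip] = name
        port = svc["host_port"]
        if port in port_owner:
            problems.append(f"{name}: host port {port} already used by {port_owner[port]}")
        else:
            port_owner[port] = name
    return problems
```

The same check applies to the full compose file in section 4 once the orchestrator and orc-mysql services are added to the dict.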

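Replication is GTID-based (master_auto_position=1).

SQL to run on the master:

```sql
create user 'repl_user'@'%' identified by 'test123456';
grant replication slave on *.* to 'repl_user'@'%';
```

SQL to run on each replica:

```sql
stop slave;
-- Point the replica at the master's address on the Docker network (its report_host)
CHANGE MASTER TO MASTER_HOST='172.22.0.103', MASTER_PORT=3306, MASTER_USER='repl_user',
  MASTER_PASSWORD='test123456', master_auto_position=1;
start slave;
show slave status;

-- Needed for switchover: create the replication user in advance.
-- Skip this if the user has already arrived from the master through replication;
-- writes made directly on a replica get local GTIDs (see 5.3, errant GTIDs).
create user 'repl_user'@'%' identified by 'test123456';
grant replication slave on *.* to 'repl_user'@'%';
```

To check that replication is set up, run the following on the master:

```sql
show slave hosts;
```

When replication is working, this returns one row per replica (Server_id, Host, Port, Master_id, Slave_UUID). Host is the replica's report_host.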
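Rather than reading the output of show slave status by eye, you can check its key columns in code. The Python sketch below assumes the row has already been fetched as a dict keyed by column name, for example with a MySQL driver's dict cursor (not shown). The max_lag=10 default is borrowed from ReasonableReplicationLagSeconds in the orchestrator config.

```python
from typing import List, Mapping


def replication_problems(row: Mapping[str, object], max_lag: int = 10) -> List[str]:
    """Return human-readable problems found in one SHOW SLAVE STATUS row."""
    problems = []
    # Both replication threads must be running.
    if row.get("Slave_IO_Running") != "Yes":
        problems.append(f"IO thread not running: {row.get('Last_IO_Error') or 'no error text'}")
    if row.get("Slave_SQL_Running") != "Yes":
        problems.append(f"SQL thread not running: {row.get('Last_SQL_Error') or 'no error text'}")
    # Seconds_Behind_Master is NULL (None) when the lag cannot be computed.
    lag = row.get("Seconds_Behind_Master")
    if lag is None:
        problems.append("lag unknown (Seconds_Behind_Master is NULL)")
    elif int(lag) > max_lag:
        problems.append(f"lag {lag}s exceeds {max_lag}s")
    # master_auto_position=1 shows up as Auto_Position = 1.
    if str(row.get("Auto_Position")) != "1":
        problems.append("GTID auto-positioning is off")
    return problems
```

An empty list means the replica looks healthy. Otherwise, each entry names the failing thread or condition.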

3. Setting up orchestrator

Reference: "Implementing MySQL Failover with Orchestrator", Motianlun (modb.pro)

The orchestrator.conf.json configuration file is as follows:

```json
{
  "Debug": true,
  "EnableSyslog": false,
  "ListenAddress": ":3000",
  "MySQLTopologyUser": "orc_client_user",
  "MySQLTopologyPassword": "test123456",
  "MySQLTopologyCredentialsConfigFile": "",
  "MySQLTopologySSLPrivateKeyFile": "",
  "MySQLTopologySSLCertFile": "",
  "MySQLTopologySSLCAFile": "",
  "MySQLTopologySSLSkipVerify": true,
  "MySQLTopologyUseMutualTLS": false,
  "MySQLOrchestratorHost": "172.22.0.102",
  "MySQLOrchestratorPort": 3306,
  "MySQLOrchestratorDatabase": "orcdb",
  "MySQLOrchestratorUser": "orc1",
  "MySQLOrchestratorPassword": "orc123456",
  "MySQLOrchestratorCredentialsConfigFile": "",
  "MySQLOrchestratorSSLPrivateKeyFile": "",
  "MySQLOrchestratorSSLCertFile": "",
  "MySQLOrchestratorSSLCAFile": "",
  "MySQLOrchestratorSSLSkipVerify": true,
  "MySQLOrchestratorUseMutualTLS": false,
  "MySQLConnectTimeoutSeconds": 1,
  "RaftEnabled": true,
  "RaftDataDir": "/var/lib/orchestrator",
  "RaftBind": "172.22.0.91",
  "DefaultRaftPort": 10008,
  "RaftNodes": [
    "172.22.0.91",
    "172.22.0.92",
    "172.22.0.93"
  ],
  "DefaultInstancePort": 3306,
  "DiscoverByShowSlaveHosts": false,
  "InstancePollSeconds": 5,
  "DiscoveryIgnoreReplicaHostnameFilters": [
    "a_host_i_want_to_ignore[.]example[.]com",
    ".*[.]ignore_all_hosts_from_this_domain[.]example[.]com",
    "a_host_with_extra_port_i_want_to_ignore[.]example[.]com:3307"
  ],
  "UnseenInstanceForgetHours": 240,
  "SnapshotTopologiesIntervalHours": 0,
  "InstanceBulkOperationsWaitTimeoutSeconds": 10,
  "HostnameResolveMethod": "default",
  "MySQLHostnameResolveMethod": "@@hostname",
  "SkipBinlogServerUnresolveCheck": true,
  "ExpiryHostnameResolvesMinutes": 60,
  "RejectHostnameResolvePattern": "",
  "ReasonableReplicationLagSeconds": 10,
  "ProblemIgnoreHostnameFilters": [],
  "VerifyReplicationFilters": false,
  "ReasonableMaintenanceReplicationLagSeconds": 20,
  "CandidateInstanceExpireMinutes": 60,
  "AuditLogFile": "",
  "AuditToSyslog": false,
  "RemoveTextFromHostnameDisplay": ".mydomain.com:3306",
  "ReadOnly": false,
  "AuthenticationMethod": "",
  "HTTPAuthUser": "",
  "HTTPAuthPassword": "",
  "AuthUserHeader": "",
  "PowerAuthUsers": [
    "*"
  ],
  "ClusterNameToAlias": {
    "127.0.0.1": "test suite"
  },
  "ReplicationLagQuery": "",
  "DetectClusterAliasQuery": "SELECT SUBSTRING_INDEX(@@hostname, '.', 1)",
  "DetectClusterDomainQuery": "",
  "DetectInstanceAliasQuery": "",
  "DetectPromotionRuleQuery": "",
  "DataCenterPattern": "[.]([^.]+)[.][^.]+[.]mydomain[.]com",
  "PhysicalEnvironmentPattern": "[.]([^.]+[.][^.]+)[.]mydomain[.]com",
  "PromotionIgnoreHostnameFilters": [],
  "DetectSemiSyncEnforcedQuery": "",
  "ServeAgentsHttp": false,
  "AgentsServerPort": ":3001",
  "AgentsUseSSL": false,
  "AgentsUseMutualTLS": false,
  "AgentSSLSkipVerify": false,
  "AgentSSLPrivateKeyFile": "",
  "AgentSSLCertFile": "",
  "AgentSSLCAFile": "",
  "AgentSSLValidOUs": [],
  "UseSSL": false,
  "UseMutualTLS": false,
  "SSLSkipVerify": false,
  "SSLPrivateKeyFile": "",
  "SSLCertFile": "",
  "SSLCAFile": "",
  "SSLValidOUs": [],
  "URLPrefix": "",
  "StatusEndpoint": "/api/status",
  "StatusSimpleHealth": true,
  "StatusOUVerify": false,
  "AgentPollMinutes": 60,
  "UnseenAgentForgetHours": 6,
  "StaleSeedFailMinutes": 60,
  "SeedAcceptableBytesDiff": 8192,
  "PseudoGTIDPattern": "",
  "PseudoGTIDPatternIsFixedSubstring": false,
  "PseudoGTIDMonotonicHint": "asc:",
  "DetectPseudoGTIDQuery": "",
  "BinlogEventsChunkSize": 10000,
  "SkipBinlogEventsContaining": [],
  "ReduceReplicationAnalysisCount": true,
  "FailureDetectionPeriodBlockMinutes": 60,
  "FailMasterPromotionOnLagMinutes": 0,
  "RecoveryPeriodBlockSeconds": 60,
  "RecoveryIgnoreHostnameFilters": [],
  "RecoverMasterClusterFilters": [
    "*"
  ],
  "RecoverIntermediateMasterClusterFilters": [
    "*"
  ],
  "OnFailureDetectionProcesses": [
    "echo 'Detected {failureType} on {failureCluster}. Affected replicas: {countSlaves}' >> /tmp/recovery.log"
  ],
  "PreGracefulTakeoverProcesses": [
    "echo 'Planned takeover about to take place on {failureCluster}. Master will switch to read_only' >> /tmp/recovery.log"
  ],
  "PreFailoverProcesses": [
    "echo 'Will recover from {failureType} on {failureCluster}' >> /tmp/recovery.log"
  ],
  "PostFailoverProcesses": [
    "echo '(for all types) Recovered from {failureType} on {failureCluster}. Failed: {failedHost}:{failedPort}; Successor: {successorHost}:{successorPort}' >> /tmp/recovery.log"
  ],
  "PostUnsuccessfulFailoverProcesses": [],
  "PostMasterFailoverProcesses": [
    "echo 'Recovered from {failureType} on {failureCluster}. Failed: {failedHost}:{failedPort}; Promoted: {successorHost}:{successorPort}' >> /tmp/recovery.log"
  ],
  "PostIntermediateMasterFailoverProcesses": [
    "echo 'Recovered from {failureType} on {failureCluster}. Failed: {failedHost}:{failedPort}; Successor: {successorHost}:{successorPort}' >> /tmp/recovery.log"
  ],
  "PostGracefulTakeoverProcesses": [
    "echo 'Planned takeover complete' >> /tmp/recovery.log"
  ],
  "CoMasterRecoveryMustPromoteOtherCoMaster": true,
  "DetachLostSlavesAfterMasterFailover": true,
  "ApplyMySQLPromotionAfterMasterFailover": true,
  "PreventCrossDataCenterMasterFailover": false,
  "PreventCrossRegionMasterFailover": false,
  "MasterFailoverDetachReplicaMasterHost": false,
  "MasterFailoverLostInstancesDowntimeMinutes": 0,
  "PostponeReplicaRecoveryOnLagMinutes": 0,
  "OSCIgnoreHostnameFilters": [],
  "GraphiteAddr": "",
  "GraphitePath": "",
  "GraphiteConvertHostnameDotsToUnderscores": true,
  "ConsulAddress": "",
  "ConsulAclToken": "",
  "ConsulKVStoreProvider": "consul"
}
```

For the multiple orchestrator.conf.json files (one per orchestrator node), the settings to modify are as follows:

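```json
"MySQLTopologyUser": "orc_client_user",
"MySQLTopologyPassword": "test123456",
......
"MySQLOrchestratorHost": "172.22.0.102",
"MySQLOrchestratorPort": 3306,
"MySQLOrchestratorDatabase": "orcdb",
"MySQLOrchestratorUser": "orc1",
"MySQLOrchestratorPassword": "orc123456",
......
"AuthenticationMethod": "basic",
"HTTPAuthUser": "admin",
"HTTPAuthPassword": "uq81sgca1da",
"RaftEnabled": true,
"RaftDataDir": "/usr/local/orchestrator/raftdata",
"RaftBind": "172.22.0.91",
"DefaultRaftPort": 10008,
"RaftNodes": [
  "172.22.0.91",
  "172.22.0.92",
  "172.22.0.93"
],
......
"RecoveryPeriodBlockSeconds": 60,
"RecoveryIgnoreHostnameFilters": [],
"RecoverMasterClusterFilters": [
  "*"
],
"RecoverIntermediateMasterClusterFilters": [
  "*"
],
......
```

RaftBind must differ per node: each file sets it to that node's own IP (172.22.0.91, 172.22.0.92, 172.22.0.93), while RaftNodes lists all three in every file.

MySQLTopologyUser is the user orchestrator uses to connect to and monitor the MySQL master and replicas.

MySQLOrchestratorUser is the user for orchestrator's own backend database (MySQLOrchestratorDatabase, orcdb here). Note that orchestrator's raft documentation describes each raft node as having its own private backend database. Sharing one orcdb among all three nodes, as done here, departs from that setup.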
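The three files differ only in RaftBind, so they can be generated from one base file. The Python sketch below assumes the base file holds the full RaftNodes list and that the output names match the volumes in the section 4 compose file. The raft_fault_tolerance helper is included only to show why three nodes are used: raft needs a surviving majority, so three nodes tolerate one failure.

```python
import copy
import json
from pathlib import Path

# File names mounted by the section 4 compose file, in the same order as RaftNodes.
CONFIG_FILES = ["orchestrator.conf.json", "orchestrator2.conf.json", "orchestrator3.conf.json"]


def node_configs(base: dict) -> dict:
    """Map each config file name to a copy of base whose RaftBind is that node's IP."""
    nodes = base["RaftNodes"]
    if len(nodes) != len(CONFIG_FILES):
        raise ValueError("expected exactly one config file per raft node")
    configs = {}
    for name, ip in zip(CONFIG_FILES, nodes):
        cfg = copy.deepcopy(base)  # keep the base dict untouched
        cfg["RaftBind"] = ip
        configs[name] = cfg
    return configs


def raft_fault_tolerance(node_count: int) -> int:
    """Raft commits need a majority, so n nodes tolerate (n - 1) // 2 failures."""
    return (node_count - 1) // 2


def write_node_configs(base_path: Path) -> None:
    """Write the per-node files next to the base file (overwrites existing ones)."""
    base = json.loads(base_path.read_text())
    for name, cfg in node_configs(base).items():
        (base_path.parent / name).write_text(json.dumps(cfg, indent=2) + "\n")
```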

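Create MySQLTopologyUser by running the following SQL on the master and the replicas. Once replication is running, statements executed on the master also reach the replicas, so check before repeating them on a replica.

```sql
create user 'orc_client_user'@'%' identified by 'test123456';
GRANT ALL PRIVILEGES ON *.* TO 'orc_client_user'@'%';
```

Create MySQLOrchestratorUser by running the following SQL on orchestrator's own database. This is a separate instance, not one of the three MySQL replication instances.

```sql
CREATE USER 'orc1'@'%' IDENTIFIED BY 'orc123456';
GRANT ALL PRIVILEGES ON *.* TO 'orc1'@'%';
```

If the orc-mysql container is started with MYSQL_USER, MYSQL_PASSWORD and MYSQL_DATABASE set, as in the compose file in section 4, the image's entrypoint has already created orc1 and orcdb. In that case the CREATE USER statement fails and only the GRANT is needed.

After a successful start, the orchestrator web UI shows the discovered replication topology.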
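To confirm that each node is up, you can request its StatusEndpoint (/api/status) through the host ports published in section 4 (3000, 3002, 3003). The Python sketch below uses only the standard library and assumes basic auth is enabled with the HTTPAuthUser/HTTPAuthPassword values above. It reports only the HTTP status code or the connection error, and makes no assumption about the response body.

```python
import base64
import urllib.error
import urllib.request

# Container name -> published host port, from the section 4 compose file.
NODES = {"orchestrator-test1": 3000, "orchestrator-test2": 3002, "orchestrator-test3": 3003}
STATUS_ENDPOINT = "/api/status"  # "StatusEndpoint" in orchestrator.conf.json


def build_status_request(port: int, user: str, password: str, host: str = "127.0.0.1") -> urllib.request.Request:
    """Build a GET request for one node's status endpoint with a basic-auth header."""
    req = urllib.request.Request(f"http://{host}:{port}{STATUS_ENDPOINT}")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req.add_header("Authorization", f"Basic {token}")
    return req


def poll_all(user: str, password: str, timeout: float = 3.0) -> dict:
    """Return {node: HTTP status code, or error text if the request failed}."""
    results = {}
    for name, port in NODES.items():
        try:
            req = build_status_request(port, user, password)
            with urllib.request.urlopen(req, timeout=timeout) as resp:
                results[name] = resp.status
        except (urllib.error.URLError, OSError) as exc:
            results[name] = str(exc)
    return results
```

For example, `poll_all("admin", "uq81sgca1da")` run on the Docker host should show 200 for every node that is serving requests.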

4. Complete docker-compose.yml file

```yaml
version: "3"
services:
  # orchestrator monitoring nodes
  orchestrator-test1:
    container_name: orchestrator-test1
    image: openarkcode/orchestrator:latest
    restart: always
    ports:
      - "3000:3000"
    networks:
      mysql-network:
        ipv4_address: 172.22.0.91
    volumes:
      - ./orchestrator.conf.json:/etc/orchestrator.conf.json
  orchestrator-test2:
    container_name: orchestrator-test2
    image: openarkcode/orchestrator:latest
    restart: always
    ports:
      - "3002:3000"
    networks:
      mysql-network:
        ipv4_address: 172.22.0.92
    volumes:
      - ./orchestrator2.conf.json:/etc/orchestrator.conf.json
  orchestrator-test3:
    container_name: orchestrator-test3
    image: openarkcode/orchestrator:latest
    restart: always
    ports:
      - "3003:3000"
    networks:
      mysql-network:
        ipv4_address: 172.22.0.93
    volumes:
      - ./orchestrator3.conf.json:/etc/orchestrator.conf.json
  # Database used by orchestrator itself
  orc-mysql:
    container_name: orc-mysql
    image: mysql:5.7.30
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: test123456
      MYSQL_PASSWORD: orc123456
      MYSQL_USER: orc1
      MYSQL_DATABASE: orcdb
    ports:
      - "3306:3306"
    networks:
      mysql-network:
        ipv4_address: 172.22.0.102
    volumes:
      - ./mysql:/var/lib/mysql
  mysql-master:
    container_name: mysql-master
    hostname: mysql-master
    image: mysql:5.7.30
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: test123456
    ports:
      - "3307:3306"
    networks:
      mysql-network:
        ipv4_address: 172.22.0.103
    volumes:
      - ./mysql1/conf/my.cnf:/etc/my.cnf
      - ./mysql1/data:/var/lib/mysql
  mysql-slave:
    container_name: mysql-slave
    hostname: mysql-slave
    image: mysql:5.7.30
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: test123456
    ports:
      - "3308:3306"
    networks:
      mysql-network:
        ipv4_address: 172.22.0.104
    volumes:
      - ./mysql2/conf/my.cnf:/etc/my.cnf
      - ./mysql2/data:/var/lib/mysql
  mysql-slave2:
    container_name: mysql-slave2
    hostname: mysql-slave2
    image: mysql:5.7.30
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: test123456
    ports:
      - "3309:3306"
    networks:
      mysql-network:
        ipv4_address: 172.22.0.105
    volumes:
      - ./mysql3/conf/my.cnf:/etc/my.cnf
      - ./mysql3/data:/var/lib/mysql
networks:
  mysql-network:
    driver: bridge
    ipam:
      config:
        - subnet: 172.22.0.0/16
```

5. Common problems

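5.1 Dragging an instance fails with the error "Relocating m03:3306 below m02:3306 turns to be too complex; please do it manually"

Solution: check that GTID mode is enabled on MySQL (gtid_mode=ON and enforce_gtid_consistency=ON).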

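5.2 A MySQL instance cannot be dragged under another MySQL instance, and the error is "ERROR m04:3306 cannot replicate from m03:3306. Reason: instance does not have log_slave_updates enabled: m03:3306"

Solution: enable log-slave-updates on the instance that will act as the intermediate master (m03 here). In MySQL 5.7 this variable is not dynamic, so set it in my.cnf and restart the instance.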
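Both 5.1 and 5.2 come down to settings missing from my.cnf. The Python sketch below checks a my.cnf file for them with configparser. It assumes a plain [mysqld] file without !include directives, and it inspects only the file. Also confirm the running values on the instance with `SELECT @@gtid_mode, @@log_slave_updates;`.

```python
import configparser


def _norm(value: str) -> str:
    """Treat 1/TRUE/ON the same way, as MySQL does for boolean options."""
    v = value.strip().upper()
    return "ON" if v in {"1", "TRUE"} else v


def check_my_cnf(text: str) -> list:
    """Return problems with the GTID and log-slave-updates settings in a my.cnf."""
    parser = configparser.ConfigParser(allow_no_value=True, strict=False, interpolation=None)
    parser.read_string(text)
    if not parser.has_section("mysqld"):
        return ["no [mysqld] section"]
    # MySQL treats '-' and '_' in option names as equivalent; normalise to '_'.
    opts = {k.replace("-", "_"): (v or "") for k, v in parser.items("mysqld")}
    problems = []
    if "log_bin" not in opts:
        problems.append("log_bin missing (binary logging is off)")
    for key in ("gtid_mode", "enforce_gtid_consistency", "log_slave_updates"):
        if key not in opts:
            problems.append(f"{key} missing (expected ON)")
        elif _norm(opts[key]) != "ON":
            problems.append(f"{key}={opts[key]} (expected ON)")
    return problems
```

Running it over mysql1/conf/my.cnf through mysql3/conf/my.cnf (for example `check_my_cnf(Path(p).read_text())`) flags any instance that would trigger 5.1 or 5.2.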

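5.3 After a master failure, a replica shows an "errant gtid found" error (the replica has executed transactions that the master has not)

Solution 1: run reset master.

Solution 2: skip the replica's extra GTIDs; see https://blog.csdn.net/weixin_48154829/article/details/124200051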
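To see which GTIDs are errant, compare the replica's @@gtid_executed with the master's. MySQL's `GTID_SUBTRACT(replica_set, master_set)` does this on the server, and the Python sketch below mirrors that subtraction on the two strings. For solution 2, it also emits the empty-transaction statements commonly used to neutralize errant GTIDs by committing them on the master. Whether this matches the procedure in the linked post is an assumption, so review the output before running anything.

```python
from typing import Dict, List, Tuple

Interval = Tuple[int, int]


def parse_gtid_set(text: str) -> Dict[str, List[Interval]]:
    """Parse 'uuid:1-5:7,uuid2:1-3' (as printed by @@gtid_executed) into intervals."""
    result: Dict[str, List[Interval]] = {}
    for part in text.replace("\n", "").split(","):
        part = part.strip()
        if not part:
            continue
        uuid, *ranges = part.split(":")
        intervals = result.setdefault(uuid.lower(), [])
        for r in ranges:
            lo, _, hi = r.partition("-")
            intervals.append((int(lo), int(hi or lo)))
    return result


def _subtract_intervals(a: List[Interval], b: List[Interval]) -> List[Interval]:
    """Remove every interval in b from every interval in a."""
    result: List[Interval] = []
    for lo, hi in a:
        pieces = [(lo, hi)]
        for b_lo, b_hi in b:
            remaining = []
            for p_lo, p_hi in pieces:
                if b_hi < p_lo or b_lo > p_hi:  # no overlap: keep the piece whole
                    remaining.append((p_lo, p_hi))
                    continue
                if p_lo < b_lo:  # part left of the removed range
                    remaining.append((p_lo, b_lo - 1))
                if b_hi < p_hi:  # part right of the removed range
                    remaining.append((b_hi + 1, p_hi))
            pieces = remaining
        result.extend(pieces)
    return sorted(result)


def gtid_subtract(a_text: str, b_text: str) -> str:
    """GTIDs in set a but not in set b, in uuid:lo-hi notation."""
    a, b = parse_gtid_set(a_text), parse_gtid_set(b_text)
    parts = []
    for uuid in sorted(a):
        left = _subtract_intervals(a[uuid], b.get(uuid, []))
        if left:
            ranges = ":".join(str(lo) if lo == hi else f"{lo}-{hi}" for lo, hi in left)
            parts.append(f"{uuid}:{ranges}")
    return ",".join(parts)


def empty_transactions(errant: str) -> List[str]:
    """SQL that commits one empty transaction per errant GTID (review before running)."""
    stmts: List[str] = []
    for uuid, intervals in parse_gtid_set(errant).items():
        for lo, hi in intervals:
            for n in range(lo, hi + 1):
                stmts += [f"SET GTID_NEXT='{uuid}:{n}';", "BEGIN;", "COMMIT;"]
    stmts.append("SET GTID_NEXT='AUTOMATIC';")
    return stmts
```

An empty result from `gtid_subtract(replica, master)` means the replica has no errant transactions.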

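5.4 orchestrator cannot discover the MySQL replication cluster, and its log reports that a hostname cannot be resolved

Solution: on the machine running orchestrator, edit the hosts file so that the MySQL hostnames (mysql-master, mysql-slave, mysql-slave2) resolve to their IPs. With MySQLHostnameResolveMethod set to @@hostname, orchestrator refers to instances by the hostname MySQL reports, so those names must be resolvable.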
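The Python sketch below prints hosts-file lines for the compose hostnames and reports which names this machine cannot yet resolve to the expected IP. The mapping is taken from the compose file (hostname and ipv4_address), so adapt it if your names differ. When orchestrator runs in a container, as in section 4, compose's `extra_hosts` option is one way to add the same entries, and the sketch also prints them in that form.

```python
import ipaddress
import socket

# hostname -> ipv4_address, from the compose file.
HOSTS = {
    "mysql-master": "172.22.0.103",
    "mysql-slave": "172.22.0.104",
    "mysql-slave2": "172.22.0.105",
}


def _by_ip(mapping: dict) -> list:
    """Sort (name, ip) pairs numerically by IP."""
    return sorted(mapping.items(), key=lambda kv: ipaddress.ip_address(kv[1]))


def hosts_lines(mapping: dict) -> list:
    """Lines to append to /etc/hosts on the orchestrator machine."""
    return [f"{ip}\t{name}" for name, ip in _by_ip(mapping)]


def extra_hosts_entries(mapping: dict) -> list:
    """The same entries as YAML list items for a compose extra_hosts: key."""
    return [f'- "{name}:{ip}"' for name, ip in _by_ip(mapping)]


def unresolved(mapping: dict) -> list:
    """Hostnames that do not resolve to the expected IP from this machine."""
    bad = []
    for name, expected_ip in mapping.items():
        try:
            if socket.gethostbyname(name) != expected_ip:
                bad.append(name)
        except socket.gaierror:
            bad.append(name)
    return bad
```

Running `unresolved(HOSTS)` on the orchestrator machine (or inside its container) should return an empty list once the entries are in place.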
