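Flink is an open-source distributed computing framework from the Apache Software Foundation that unifies stream and batch processing, offering both real-time stream computation and high-throughput batch processing. This column records and shares notes from reading the Flink source code.

The Flink source version analyzed in this article is flink-1.19.0.

1. Overview: converting a Flink DataStream pipeline into a Transformation collection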

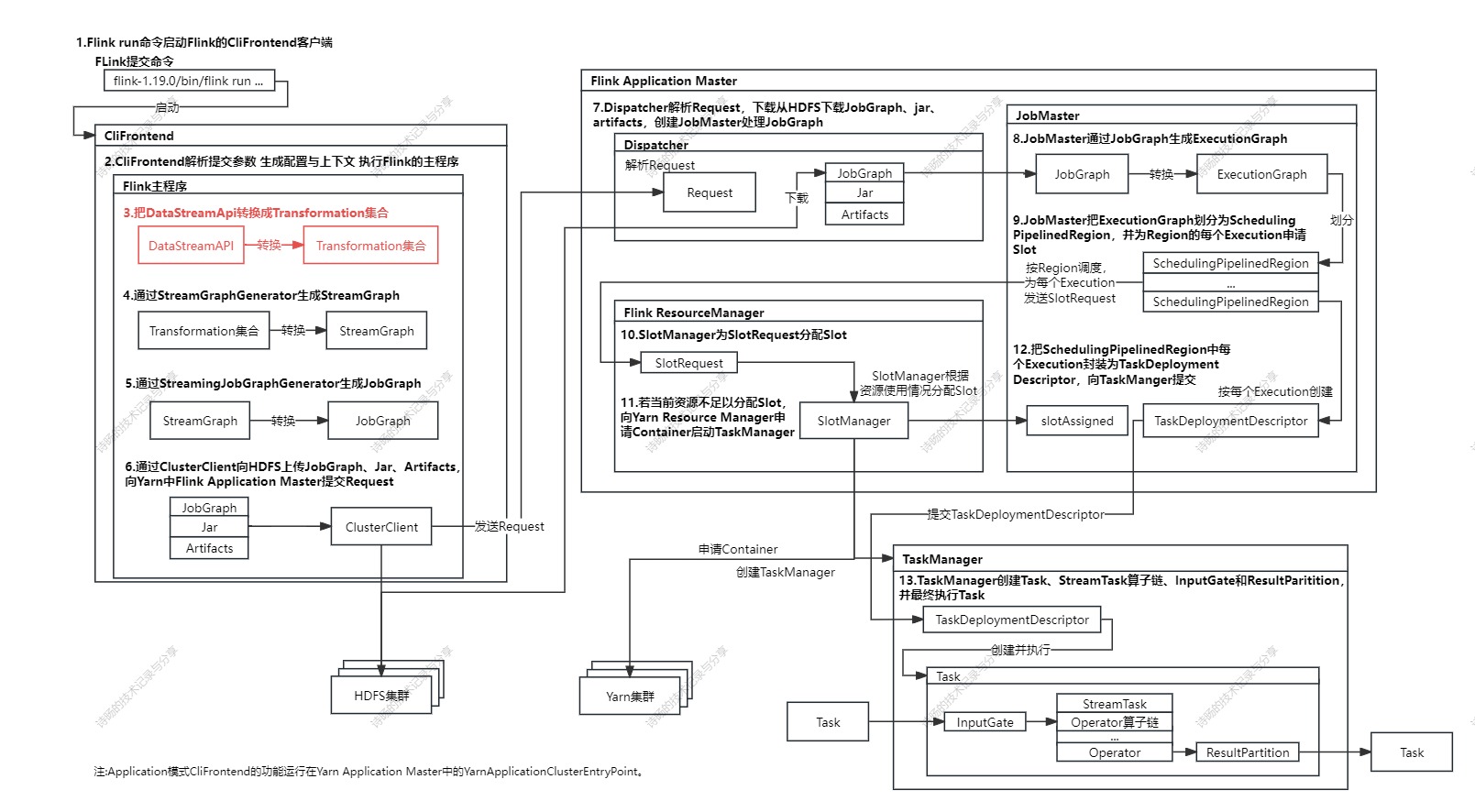

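The previous article, "Flink-1.19.0 Source Code Explained 2: CliFrontend Client Startup", described how the Flink run command starts the CliFrontend client. Once the CliFrontend client has started, it invokes the main() method of the Flink main class and enters the job's business logic. The Flink main class is the code that developers write against the Flink API: the DataStream pipeline together with the execution environment, state management, checkpointing, and other configuration.

This article starts from the main() method of the Flink main class (the part highlighted in red in the flow chart). It follows how, once main() runs, the Flink program step by step converts the developer's DataStream pipeline into a collection of Flink Transformations and adds them to the StreamExecutionEnvironment context.

The core job of running main() is to convert the DataStream pipeline written in code into a collection of Transformations:

Take map as an example. When the program runs, it calls DataStream.map(), which converts the map operator into a OneInputTransformation and keeps the map logic as a MapFunction held inside a StreamMap.

Flink first creates a StreamMap (a concrete StreamOperator) that wraps the user-defined MapFunction and its processing logic, then creates a SimpleOperatorFactory that wraps the StreamMap, and finally creates a Transformation that holds the SimpleOperatorFactory, the parallelism, the output type (outputType), and a reference to the upstream Transformation.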

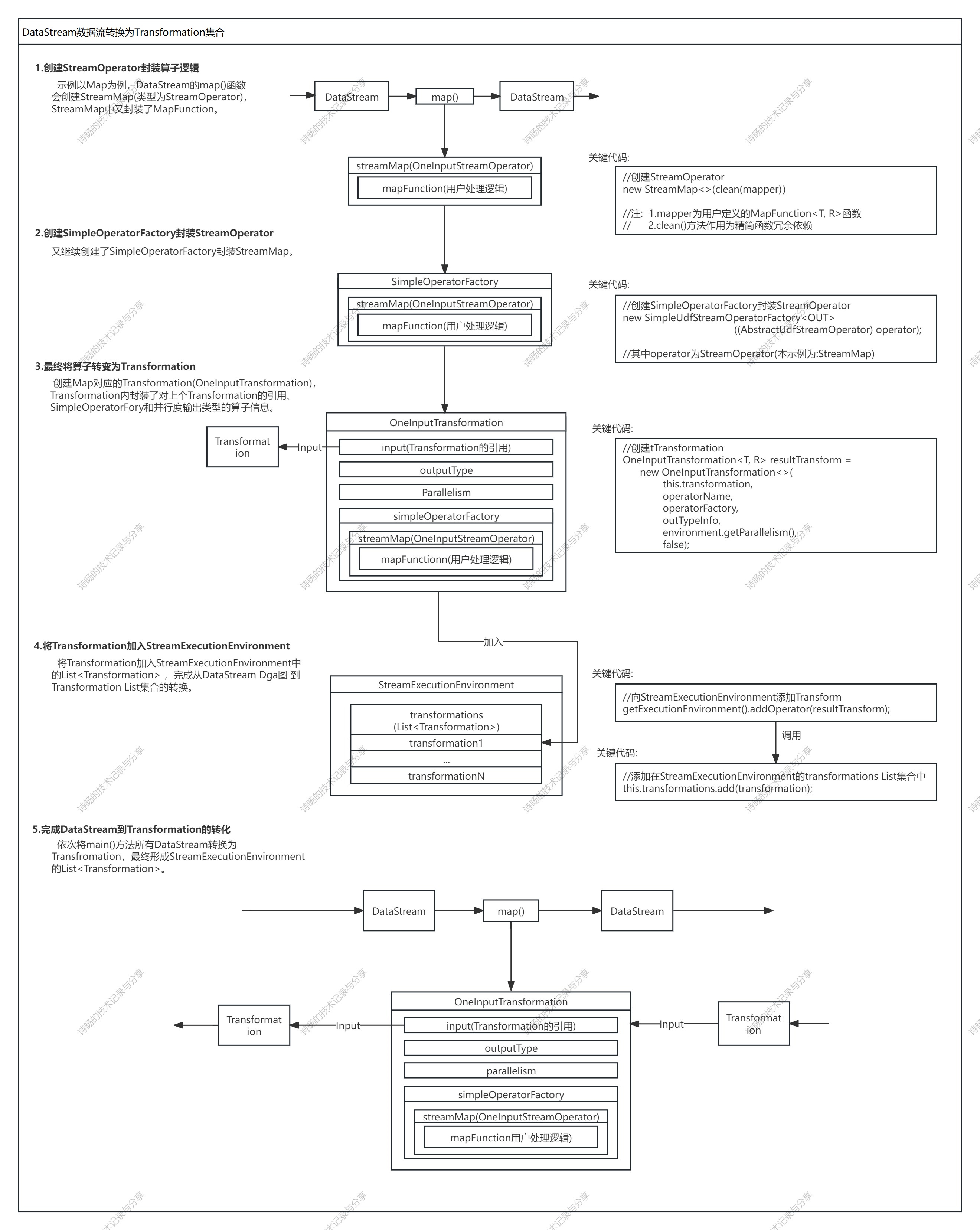

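The conversion from a DataStream pipeline to a Transformation collection proceeds in the following steps:

1. Create a StreamOperator that wraps the operator logic: for map, DataStream.map() creates a StreamMap (a concrete StreamOperator), which in turn wraps the MapFunction.

2. Create a SimpleOperatorFactory: Flink then creates a SimpleOperatorFactory that wraps the StreamMap.

3. Turn the DataStream operator into a Transformation: Flink creates the Transformation for map (a OneInputTransformation), which holds the reference to the upstream Transformation, the SimpleOperatorFactory, the parallelism, the output type, and other operator information.

4. Add the Transformation to the StreamExecutionEnvironment: the Transformation is appended to the List<Transformation> held by the StreamExecutionEnvironment, which completes the conversion of a single operator from the DataStream pipeline into the Transformation list.

5. Complete the conversion for the whole pipeline: every DataStream operator in main() is converted into a Transformation in turn, and together they form the List<Transformation> of the StreamExecutionEnvironment.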
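The five steps can be mimicked in plain Java without any Flink dependency. The following is only a sketch of the pattern: every class name in it (MiniOperator, MiniOperatorFactory, MiniTransformation, MiniEnvironment, MiniStream, PipelineSketch) is a hypothetical stand-in invented for illustration, and the real Flink classes carry far more state.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Hypothetical stand-ins for illustration only; none of these are Flink classes.

// Step 1: the operator wraps the user function.
final class MiniOperator<I, O> {
    final Function<I, O> userFunction;
    MiniOperator(Function<I, O> userFunction) { this.userFunction = userFunction; }
}

// Step 2: the factory wraps the operator.
final class MiniOperatorFactory<I, O> {
    final MiniOperator<I, O> operator;
    MiniOperatorFactory(MiniOperator<I, O> operator) { this.operator = operator; }
}

// Step 3: the transformation holds the upstream reference, factory and parallelism.
final class MiniTransformation {
    final MiniTransformation input; // null for the source in this sketch
    final String name;
    final MiniOperatorFactory<?, ?> factory;
    final int parallelism;
    MiniTransformation(MiniTransformation input, String name,
                       MiniOperatorFactory<?, ?> factory, int parallelism) {
        this.input = input;
        this.name = name;
        this.factory = factory;
        this.parallelism = parallelism;
    }
}

// Step 4: the environment collects every transformation that is created.
final class MiniEnvironment {
    final List<MiniTransformation> transformations = new ArrayList<>();
    final int parallelism = 4; // arbitrary default chosen for the sketch
    void addOperator(MiniTransformation t) { transformations.add(t); }
}

final class MiniStream<T> {
    final MiniEnvironment env;
    final MiniTransformation transformation;
    MiniStream(MiniEnvironment env, MiniTransformation transformation) {
        this.env = env;
        this.transformation = transformation;
    }

    // Calling map() runs no data through the function; it only records a new node.
    <R> MiniStream<R> map(Function<T, R> mapper) {
        MiniOperator<T, R> operator = new MiniOperator<>(mapper);
        MiniOperatorFactory<T, R> factory = new MiniOperatorFactory<>(operator);
        MiniTransformation result =
                new MiniTransformation(this.transformation, "Map", factory, env.parallelism);
        env.addOperator(result);
        return new MiniStream<>(env, result);
    }
}

final class PipelineSketch {
    // Step 5: every map() call in the "main" logic appends one transformation.
    static MiniEnvironment build() {
        MiniEnvironment env = new MiniEnvironment();
        MiniTransformation source = new MiniTransformation(null, "Source", null, 1);
        MiniStream<String> lines = new MiniStream<>(env, source);
        lines.map(String::length).map(n -> n * 2);
        return env;
    }

    public static void main(String[] args) {
        for (MiniTransformation t : build().transformations) {
            System.out.println(t.name + " <- " + t.input.name);
        }
    }
}
```

In this sketch the source node is reachable only through the input reference of the first map node, a simplification chosen to keep the example short.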

Source-level diagram of the DataStream-to-Transformation conversion:

Full code walkthrough:

2. Source analysis: converting a Flink DataStream pipeline into a Transformation collection

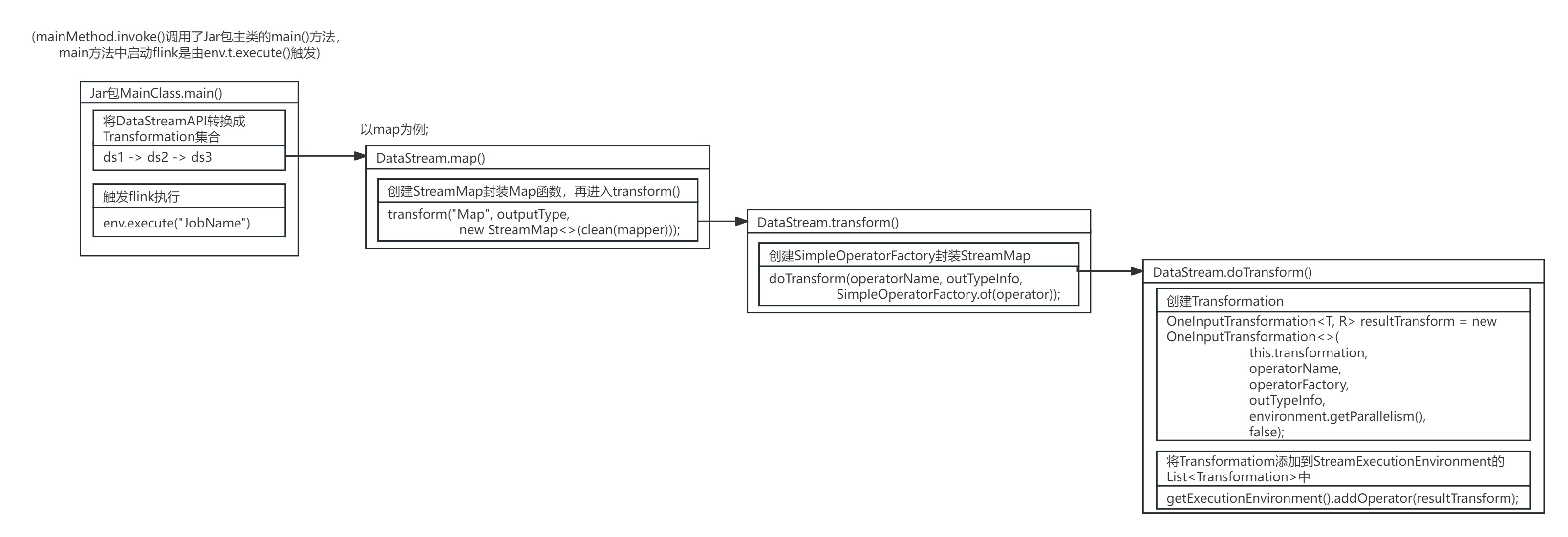

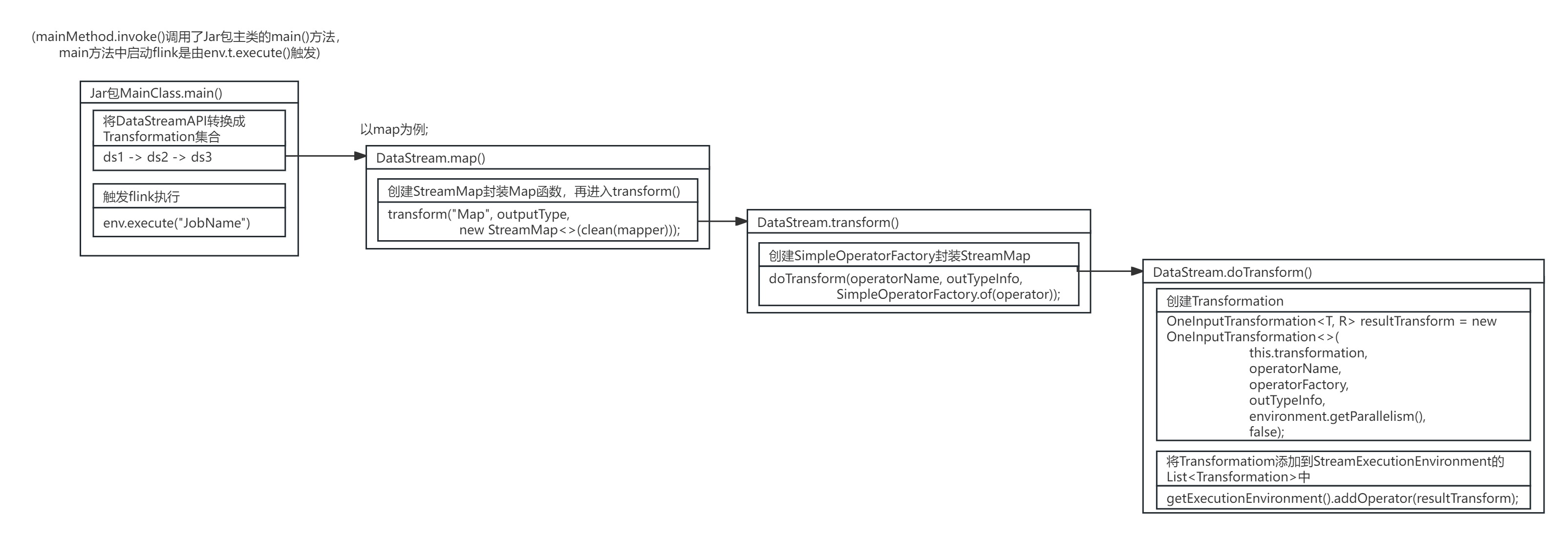

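CliFrontend starts the main() method of the Flink main class by calling PackagedProgram.callMainMethod().

    private static void callMainMethod(Class<?> entryClass, String[] args)
            throws ProgramInvocationException {
        Method mainMethod;
        //...
        // Look up the main() method of the Flink main class
        mainMethod = entryClass.getMethod("main", String[].class);
        //...
        // Invoke the main() method of the Flink program in the jar via reflection
        mainMethod.invoke(null, (Object) args);
        //...
    }

The main() method of the Flink main class contains the DataStream pipeline written by the developer. Executing each operator method, such as map(), converts the DataStream operator into a Transformation.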
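The reflective call made by callMainMethod() can be tried in isolation with the JDK alone. The sketch below is an illustration, not Flink code: DemoJob is a hypothetical stand-in for a user's main class, and ReflectiveMainSketch.callMain() is an invented helper that looks up a static main(String[]) and invokes it.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;

// Hypothetical stand-in for a user's main class.
final class DemoJob {
    static String lastArgs = "";

    public static void main(String[] args) {
        // A real job would build its pipeline here; the sketch only records the arguments.
        lastArgs = String.join(",", args);
    }
}

final class ReflectiveMainSketch {
    static void callMain(Class<?> entryClass, String[] args) {
        try {
            // Find the public main(String[]) method on the entry class.
            Method mainMethod = entryClass.getMethod("main", String[].class);
            if (!Modifier.isStatic(mainMethod.getModifiers())) {
                throw new IllegalStateException("main() must be static");
            }
            // The cast to Object passes the array as ONE argument instead of as varargs.
            mainMethod.invoke(null, (Object) args);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("could not run main() of " + entryClass.getName(), e);
        }
    }

    public static void main(String[] args) {
        callMain(DemoJob.class, new String[] {"--input", "a.txt"});
        System.out.println(DemoJob.lastArgs);
    }
}
```

The (Object) cast matters: without it the String[] would be spread across the varargs parameter of invoke(), and the call would fail with an argument-count mismatch whenever the array does not hold exactly one element.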

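The key step in converting a DataStream pipeline into a Transformation collection is to execute every DataStream operator API call in order, turning each operator into a Transformation and wrapping the user's processing logic in a StreamOperator. The source walkthrough below follows the map operator as an example.

In the single-argument DataStream.map() method, Flink derives the operator's output type and then delegates to the map() overload that takes both the MapFunction and the output type (outputType).

DataStream.map() source:

    public <R> SingleOutputStreamOperator<R> map(MapFunction<T, R> mapper) {
        // Derive the output type
        TypeInformation<R> outType =
                TypeExtractor.getMapReturnTypes(
                        clean(mapper), getType(), Utils.getCallLocationName(), true);
        // Delegate to the two-argument map()
        return map(mapper, outType);
    }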

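The two-argument DataStream.map() first creates a StreamMap, the StreamOperator implementation for map, and then calls transform(). The clean(mapper) call runs Flink's closure cleaning on the mapper (the MapFunction object): it strips references the function does not need so that the function can be serialized.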
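Why unneeded references matter can be shown with plain Java serialization. The sketch below does not use Flink's closure cleaner; SerializableMapper and SerializabilitySketch are hypothetical names, and the check simply tries to write a function object to an ObjectOutputStream. A function that captures a non-serializable object fails the check, which is the kind of problem closure cleaning is meant to avoid.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical serializable function type, standing in for a user function interface.
interface SerializableMapper<T, R> extends Serializable {
    R map(T value);
}

final class SerializabilitySketch {
    // Returns true if the object can be written with Java serialization.
    static boolean isSerializable(Object function) {
        try (ObjectOutputStream out = new ObjectOutputStream(new ByteArrayOutputStream())) {
            out.writeObject(function);
            return true;
        } catch (IOException e) {
            // NotSerializableException is a subclass of IOException.
            return false;
        }
    }

    // Captures nothing, so only the lambda itself has to be serialized.
    static SerializableMapper<String, Integer> selfContained() {
        return s -> s.length();
    }

    // Captures a Thread, which is not serializable, so writing the lambda fails.
    static SerializableMapper<String, Integer> capturingThread() {
        Thread notSerializable = new Thread(() -> { });
        return s -> s.length() + notSerializable.getPriority();
    }

    public static void main(String[] args) {
        System.out.println(isSerializable(selfContained()));
        System.out.println(isSerializable(capturingThread()));
    }
}
```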

DataStream.map() source (two-argument overload):

    public <R> SingleOutputStreamOperator<R> map(
            MapFunction<T, R> mapper, TypeInformation<R> outputType) {
        // Create the StreamMap first,
        // then call transform()
        return transform("Map", outputType, new StreamMap<>(clean(mapper)));
    }

StreamMap is the StreamOperator implementation for the map operator and holds the operator's processing logic. As the source shows, map ultimately processes each incoming element by calling userFunction.map().

StreamMap class source:

    public class StreamMap<IN, OUT> extends AbstractUdfStreamOperator<OUT, MapFunction<IN, OUT>>
            implements OneInputStreamOperator<IN, OUT> {

        private static final long serialVersionUID = 1L;

        public StreamMap(MapFunction<IN, OUT> mapper) {
            // Call the parent constructor
            super(mapper);
            chainingStrategy = ChainingStrategy.ALWAYS;
        }

        // Per-element processing method of the operator
        @Override
        public void processElement(StreamRecord<IN> element) throws Exception {
            // Apply the user's map function to the element value
            output.collect(element.replace(userFunction.map(element.getValue())));
        }
    }

When a StreamMap is constructed, it calls the constructor of its parent class AbstractUdfStreamOperator, which stores the user's business logic, userFunction (a MapFunction in the case of map).
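The relationship between StreamMap and its parent class can be reduced to a small plain-Java sketch: a base class keeps the user function, and the map operator applies it to each record while reusing the record container. The names MiniRecord, MiniUdfOperator and MiniMapOperator are hypothetical, and the output list stands in for the operator's real output collector.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Objects;
import java.util.function.Function;

// Hypothetical mutable record container; it plays the role a stream record plays in the listing.
final class MiniRecord<T> {
    private T value;

    MiniRecord(T value) { this.value = value; }

    T getValue() { return value; }

    // Swap the payload and reuse the same container instead of allocating a new one.
    @SuppressWarnings("unchecked")
    <X> MiniRecord<X> replace(X newValue) {
        this.value = (T) newValue;
        return (MiniRecord<X>) this;
    }
}

// Base class that only stores the user function, rejecting null.
abstract class MiniUdfOperator<F> {
    protected final F userFunction;

    MiniUdfOperator(F userFunction) {
        this.userFunction = Objects.requireNonNull(userFunction);
    }

    F getUserFunction() { return userFunction; }
}

// Map operator: applies the stored function to every element it receives.
final class MiniMapOperator<I, O> extends MiniUdfOperator<Function<I, O>> {
    // Stand-in for the operator's output collector.
    final List<MiniRecord<O>> output = new ArrayList<>();

    MiniMapOperator(Function<I, O> mapper) { super(mapper); }

    void processElement(MiniRecord<I> element) {
        output.add(element.replace(userFunction.apply(element.getValue())));
    }
}
```

Reusing the container means the emitted record is the same object as the incoming one, only with a new payload, so no extra wrapper is allocated per element.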

AbstractUdfStreamOperator class source:

    public abstract class AbstractUdfStreamOperator<OUT, F extends Function>
            extends AbstractStreamOperator<OUT>
            implements OutputTypeConfigurable<OUT>, UserFunctionProvider<F> {

        private static final long serialVersionUID = 1L;

        /** The user function. */
        protected final F userFunction;

        public AbstractUdfStreamOperator(F userFunction) {
            this.userFunction = requireNonNull(userFunction);
            checkUdfCheckpointingPreconditions();
        }

        /**
         * Gets the user function executed in this operator.
         *
         * @return The user function of this operator.
         */
        public F getUserFunction() {
            return userFunction;
        }

        //...
    }

Once the StreamMap is created, Flink calls DataStream.transform(), which creates a SimpleOperatorFactory to wrap the StreamOperator and then calls doTransform().

DataStream.transform() source:

    public <R> SingleOutputStreamOperator<R> transform(
            String operatorName,
            TypeInformation<R> outTypeInfo,
            OneInputStreamOperator<T, R> operator) {
        // Create a SimpleOperatorFactory that wraps the StreamOperator,
        // then call doTransform()
        return doTransform(operatorName, outTypeInfo, SimpleOperatorFactory.of(operator));
    }

SimpleOperatorFactory.of() creates the matching SimpleOperatorFactory for each kind of StreamOperator. StreamMap extends AbstractUdfStreamOperator, so a SimpleUdfStreamOperatorFactory is created to wrap the StreamMap.

SimpleOperatorFactory.of() source:

    public static <OUT> SimpleOperatorFactory<OUT> of(StreamOperator<OUT> operator) {
        //...
        // Create a SimpleUdfStreamOperatorFactory for an AbstractUdfStreamOperator
        else if (operator instanceof AbstractUdfStreamOperator) {
            return new SimpleUdfStreamOperatorFactory<OUT>((AbstractUdfStreamOperator) operator);
        }
        //...
    }

SimpleUdfStreamOperatorFactory is a wrapper around the StreamOperator. Its getUserFunction() method returns the user-defined business logic held by the wrapped StreamOperator.
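The dispatch in of() follows a common pattern: a static factory method inspects the runtime type of the operator and returns a more specific factory subclass when one applies. The plain-Java sketch below models only two branches, a UDF operator and everything else; all names in it (SketchOperator, SketchUdfOperator, SketchPlainOperator, SketchFactory, SketchUdfFactory) are hypothetical, and the branches elided in the listing are not reproduced.

```java
import java.util.Objects;
import java.util.function.Function;

// Hypothetical stand-ins for illustration only; these are not Flink classes.
interface SketchOperator { }

// An operator that carries a user function.
class SketchUdfOperator implements SketchOperator {
    private final Function<?, ?> userFunction;

    SketchUdfOperator(Function<?, ?> userFunction) {
        this.userFunction = Objects.requireNonNull(userFunction);
    }

    Function<?, ?> getUserFunction() { return userFunction; }
}

// An operator without a user function.
final class SketchPlainOperator implements SketchOperator { }

class SketchFactory {
    private final SketchOperator operator;

    SketchFactory(SketchOperator operator) { this.operator = operator; }

    SketchOperator getOperator() { return operator; }

    // Pick the most specific factory for the runtime type of the operator.
    static SketchFactory of(SketchOperator operator) {
        if (operator == null) {
            return null;
        } else if (operator instanceof SketchUdfOperator) {
            return new SketchUdfFactory((SketchUdfOperator) operator);
        } else {
            return new SketchFactory(operator);
        }
    }
}

// Factory subclass that additionally exposes the wrapped user function.
final class SketchUdfFactory extends SketchFactory {
    private final SketchUdfOperator udfOperator;

    SketchUdfFactory(SketchUdfOperator operator) {
        super(operator);
        this.udfOperator = operator;
    }

    Function<?, ?> getUserFunction() { return udfOperator.getUserFunction(); }

    String getUserFunctionClassName() {
        return udfOperator.getUserFunction().getClass().getName();
    }
}
```

Callers that only need a factory work with the base type, while code that wants to inspect the user function can test for the subclass first.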

SimpleUdfStreamOperatorFactory class source:

    public class SimpleUdfStreamOperatorFactory<OUT> extends SimpleOperatorFactory<OUT>
            implements UdfStreamOperatorFactory<OUT> {

        private final AbstractUdfStreamOperator<OUT, ?> operator;

        public SimpleUdfStreamOperatorFactory(AbstractUdfStreamOperator<OUT, ?> operator) {
            super(operator);
            this.operator = operator;
        }

        @Override
        public Function getUserFunction() {
            return operator.getUserFunction();
        }

        @Override
        public String getUserFunctionClassName() {
            return operator.getUserFunction().getClass().getName();
        }
    }

With the SimpleOperatorFactory in place, Flink calls DataStream.doTransform() to create the Transformation. The method creates the operator's Transformation (a OneInputTransformation for map) and adds it to the StreamExecutionEnvironment.

DataStream.doTransform() source:

    protected <R> SingleOutputStreamOperator<R> doTransform(
            String operatorName,
            TypeInformation<R> outTypeInfo,
            StreamOperatorFactory<R> operatorFactory) {

        // read the output type of the input Transform to coax out errors about MissingTypeInfo
        transformation.getOutputType();

        // Create the Transformation; for map this is a OneInputTransformation
        OneInputTransformation<T, R> resultTransform =
                new OneInputTransformation<>(
                        this.transformation,
                        operatorName,
                        operatorFactory,
                        outTypeInfo,
                        environment.getParallelism(),
                        false);

        @SuppressWarnings({"unchecked", "rawtypes"})
        SingleOutputStreamOperator<R> returnStream =
                new SingleOutputStreamOperator(environment, resultTransform);

        // Add the new Transformation to the StreamExecutionEnvironment
        getExecutionEnvironment().addOperator(resultTransform);

        return returnStream;
    }

A Transformation is Flink's wrapper for a single operator. It holds the SimpleOperatorFactory, the parallelism, the output type (outputType), and a reference to the upstream Transformation. The SimpleOperatorFactory gives access to each operator's processing logic, and input (the reference to the upstream Transformation) links the upstream and downstream Transformations of the program together into the complete processing chain.
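How a single input reference is enough to recover the whole chain can be sketched with a small linked structure. ChainNode and ChainSketch below are hypothetical names; the traversal puts the node itself first and then everything upstream, in the same order as the getTransitivePredecessors() method of OneInputTransformation.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical node type: each node knows only its direct upstream node.
final class ChainNode {
    final String name;
    final ChainNode input; // null marks the start of the chain in this sketch

    ChainNode(String name, ChainNode input) {
        this.name = name;
        this.input = input;
    }

    // This node first, followed by all nodes reachable through input references.
    List<ChainNode> getTransitivePredecessors() {
        List<ChainNode> result = new ArrayList<>();
        result.add(this);
        if (input != null) {
            result.addAll(input.getTransitivePredecessors());
        }
        return result;
    }
}

final class ChainSketch {
    // Builds Source -> Map -> Filter and walks the chain backwards from Filter.
    static List<String> namesFromLastNode() {
        ChainNode source = new ChainNode("Source", null);
        ChainNode map = new ChainNode("Map", source);
        ChainNode filter = new ChainNode("Filter", map);

        List<String> names = new ArrayList<>();
        for (ChainNode node : filter.getTransitivePredecessors()) {
            names.add(node.name);
        }
        return names;
    }

    public static void main(String[] args) {
        System.out.println(namesFromLastNode());
    }
}
```

Because every node points upstream, starting from the last node is sufficient to reach every operator that feeds it.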

OneInputTransformation class source:

    public class OneInputTransformation<IN, OUT> extends PhysicalTransformation<OUT> {

        private final Transformation<IN> input;

        private final StreamOperatorFactory<OUT> operatorFactory;

        private KeySelector<IN, ?> stateKeySelector;

        private TypeInformation<?> stateKeyType;

        /**
         * Creates a new {@code OneInputTransformation} from the given input and operator.
         *
         * @param input The input {@code Transformation}
         * @param name The name of the {@code Transformation}, this will be shown in Visualizations and
         *     the Log
         * @param operator The {@code TwoInputStreamOperator}
         * @param outputType The type of the elements produced by this {@code OneInputTransformation}
         * @param parallelism The parallelism of this {@code OneInputTransformation}
         */
        public OneInputTransformation(
                Transformation<IN> input,
                String name,
                OneInputStreamOperator<IN, OUT> operator,
                TypeInformation<OUT> outputType,
                int parallelism) {
            this(input, name, SimpleOperatorFactory.of(operator), outputType, parallelism);
        }

        public OneInputTransformation(
                Transformation<IN> input,
                String name,
                OneInputStreamOperator<IN, OUT> operator,
                TypeInformation<OUT> outputType,
                int parallelism,
                boolean parallelismConfigured) {
            this(
                    input,
                    name,
                    SimpleOperatorFactory.of(operator),
                    outputType,
                    parallelism,
                    parallelismConfigured);
        }

        public OneInputTransformation(
                Transformation<IN> input,
                String name,
                StreamOperatorFactory<OUT> operatorFactory,
                TypeInformation<OUT> outputType,
                int parallelism) {
            super(name, outputType, parallelism);
            this.input = input;
            this.operatorFactory = operatorFactory;
        }

        /**
         * Creates a new {@code LegacySinkTransformation} from the given input {@code Transformation}.
         *
         * @param input The input {@code Transformation}
         * @param name The name of the {@code Transformation}, this will be shown in Visualizations and
         *     the Log
         * @param operatorFactory The {@code TwoInputStreamOperator} factory
         * @param outputType The type of the elements produced by this {@code OneInputTransformation}
         * @param parallelism The parallelism of this {@code OneInputTransformation}
         * @param parallelismConfigured If true, the parallelism of the transformation is explicitly set
         *     and should be respected. Otherwise the parallelism can be changed at runtime.
         */
        public OneInputTransformation(
                Transformation<IN> input,
                String name,
                StreamOperatorFactory<OUT> operatorFactory,
                TypeInformation<OUT> outputType,
                int parallelism,
                boolean parallelismConfigured) {
            super(name, outputType, parallelism, parallelismConfigured);
            this.input = input;
            this.operatorFactory = operatorFactory;
        }

        /** Returns the {@code TypeInformation} for the elements of the input. */
        public TypeInformation<IN> getInputType() {
            return input.getOutputType();
        }

        @VisibleForTesting
        public OneInputStreamOperator<IN, OUT> getOperator() {
            return (OneInputStreamOperator<IN, OUT>)
                    ((SimpleOperatorFactory) operatorFactory).getOperator();
        }

        /** Returns the {@code StreamOperatorFactory} of this Transformation. */
        public StreamOperatorFactory<OUT> getOperatorFactory() {
            return operatorFactory;
        }

        /**
         * Sets the {@link KeySelector} that must be used for partitioning keyed state of this
         * operation.
         *
         * @param stateKeySelector The {@code KeySelector} to set
         */
        public void setStateKeySelector(KeySelector<IN, ?> stateKeySelector) {
            this.stateKeySelector = stateKeySelector;
            updateManagedMemoryStateBackendUseCase(stateKeySelector != null);
        }

        /**
         * Returns the {@code KeySelector} that must be used for partitioning keyed state in this
         * Operation.
         *
         * @see #setStateKeySelector
         */
        public KeySelector<IN, ?> getStateKeySelector() {
            return stateKeySelector;
        }

        public void setStateKeyType(TypeInformation<?> stateKeyType) {
            this.stateKeyType = stateKeyType;
        }

        public TypeInformation<?> getStateKeyType() {
            return stateKeyType;
        }

        @Override
        public List<Transformation<?>> getTransitivePredecessors() {
            List<Transformation<?>> result = Lists.newArrayList();
            result.add(this);
            result.addAll(input.getTransitivePredecessors());
            return result;
        }

        @Override
        public List<Transformation<?>> getInputs() {
            return Collections.singletonList(input);
        }

        @Override
        public final void setChainingStrategy(ChainingStrategy strategy) {
            operatorFactory.setChainingStrategy(strategy);
        }
    }

After the Transformation is created, StreamExecutionEnvironment.addOperator() is called to append it to the StreamExecutionEnvironment's List<Transformation>.

StreamExecutionEnvironment.addOperator() source:

    public class StreamExecutionEnvironment implements AutoCloseable {
        //...

        // The List<Transformation> that collects every Transformation
        protected final List<Transformation<?>> transformations = new ArrayList<>();

        //...

        // Append the new Transformation to the List<Transformation> of the StreamExecutionEnvironment
        public void addOperator(Transformation<?> transformation) {
            Preconditions.checkNotNull(transformation, "transformation must not be null.");
            this.transformations.add(transformation);
        }
    }

Running the Flink main program is, in essence, executing the DataStream operator API calls in the program one after another: each call converts part of the DataStream pipeline written in code into a Transformation, and the resulting Transformation collection is stored in the StreamExecutionEnvironment, the context of the streaming job.

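3. Conclusion

Once the DataStream-to-Transformation conversion finishes, StreamExecutionEnvironment.execute() is called and Flink moves on to StreamGraph scheduling. The StreamGraph source analysis is covered in the next post.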
