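I've implemented video playback on Android with OpenGL + MediaPlayer several times. OpenGL itself is an API specification, and the computer graphics behind it can be hard going, but playing a video needs very little of it: no elaborate coordinate transformations, just a simple 2D texture. The implementation is fairly simple, so I'm recording the code here for future reference.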

OpenGL environment setup

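On Android, GLSurfaceView provides the environment OpenGL needs, so it can be used directly. Here OpenGL ES 3.0 is requested and the Activity implements the Renderer interface. GLES calls have to be made from onSurfaceCreated, onSurfaceChanged and onDrawFrame: OpenGL runs on GLSurfaceView's own GLThread, and other threads have no current OpenGL context, so GL calls made from them report errors at runtime.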

Declare the required OpenGL ES version in AndroidManifest.xml:

<uses-feature
    android:glEsVersion="0x00030000"
    android:required="true" />

The Activity implements GLSurfaceView.Renderer:

class OpenGLVideoPlayerActivity : Activity(), GLSurfaceView.Renderer, SurfaceTexture.OnFrameAvailableListener {

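    private lateinit var mGLSurfaceView: GLSurfaceView

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_opengl_video)
        // Non-null type argument, so apply {} can call GLSurfaceView methods directly
        mGLSurfaceView = findViewById<GLSurfaceView>(R.id.surfaceView).apply {
            setEGLContextClientVersion(3)                  // request an OpenGL ES 3.0 context
            setRenderer(this@OpenGLVideoPlayerActivity)   // must come after the version call
        }
    }

    override fun onSurfaceCreated(gl: GL10?, config: EGLConfig?) { }

    override fun onSurfaceChanged(gl: GL10?, width: Int, height: Int) { }

    override fun onDrawFrame(gl: GL10?) { }

    // Required by SurfaceTexture.OnFrameAvailableListener; filled in later
    override fun onFrameAvailable(surfaceTexture: SurfaceTexture?) { }
}

Layout file activity_opengl_video.xml:

<?xml version="1.0" encoding="utf-8"?>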

<FrameLayout xmlns:android="http://schemas.android.com/apk/res/android"
    android:layout_width="match_parent"
    android:layout_height="match_parent">

    <android.opengl.GLSurfaceView
        android:id="@+id/surfaceView"
        android:layout_width="match_parent"
        android:layout_height="match_parent" />

</FrameLayout>

The vertex and fragment shaders are simple, but note that video playback requires sampling from an external texture.

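Vertex shader. vTextureCoord is the texture coordinate passed to the fragment shader; the raw texture coordinate is first transformed by u_TextureMatrix before being output. gl_Position is the vertex position handed on to the rest of the pipeline. Because the vertex coordinates used for video playback are simple and already in normalized device coordinates ([-1, 1] on each axis), they can be output unchanged (this becomes clear once you see the vertex data).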
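The line `vTextureCoord = (u_TextureMatrix * a_TextureCoordinate).xy` can be reproduced on the CPU to see what the matrix does to a coordinate. This is a plain-Kotlin sketch with no Android dependencies; it assumes the 16-float column-major layout that `SurfaceTexture#getTransformMatrix` fills in and that `glUniformMatrix4fv(..., false, ...)` uploads. `transformTexCoord` and `FLIP_VERTICAL` are hypothetical names, and the flip matrix is only an illustrative example: the real matrix depends on the device and the decoder.

```kotlin
/**
 * Multiplies a column-major 4x4 matrix by the homogeneous texture coordinate
 * (s, t, 0, 1) and returns (s', t'), mirroring
 * (u_TextureMatrix * a_TextureCoordinate).xy in the vertex shader.
 */
fun transformTexCoord(m: FloatArray, s: Float, t: Float): Pair<Float, Float> {
    require(m.size == 16) { "expected a 4x4 matrix" }
    val v = floatArrayOf(s, t, 0f, 1f)
    // Column-major: element (row, col) is stored at m[col * 4 + row].
    fun row(r: Int) = (0 until 4).fold(0f) { acc, c -> acc + m[c * 4 + r] * v[c] }
    return row(0) to row(1)
}

// Hypothetical example matrix: keeps s and maps t to 1 - t (a vertical flip).
val FLIP_VERTICAL = floatArrayOf(
    1f, 0f, 0f, 0f,   // column 0
    0f, -1f, 0f, 0f,  // column 1
    0f, 0f, 1f, 0f,   // column 2
    0f, 1f, 0f, 1f    // column 3 (translation)
)
```

For example, `transformTexCoord(FLIP_VERTICAL, 0f, 1f)` gives `(0.0, 0.0)`: the top-left texture coordinate ends up sampling the bottom-left of the buffer, which is exactly the kind of correction the matrix exists for.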

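attribute vec4 a_Position;
attribute vec4 a_TextureCoordinate;   // only s, t are supplied; z and w default to 0 and 1
uniform mat4 u_TextureMatrix;
varying vec2 vTextureCoord;

void main() {
    vTextureCoord = (u_TextureMatrix * a_TextureCoordinate).xy;
    gl_Position = a_Position;
}

Fragment shader. Compared with displaying a static image, video playback needs a special sampler declaration for the external texture. The #extension GL_OES_EGL_image_external : require directive and the samplerExternalOES type below apply to GLSL ES 1.00 shaders like this one (attribute/varying syntax, which an ES 3.0 context still accepts); a #version 300 es shader would use the GL_OES_EGL_image_external_essl3 extension instead.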

#extension GL_OES_EGL_image_external : require
precision mediump float;
uniform samplerExternalOES u_TextureSampler;
varying vec2 vTextureCoord;

void main() {
    gl_FragColor = texture2D(u_TextureSampler, vTextureCoord);
}

Initialize the OpenGL state and load the shaders to build a program. This is boilerplate, recorded here only for reference, so it does not thoroughly check compile or link status.

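private fun newProgram(): Int {
    // Compile the vertex shader loaded from res/raw
    val vShaderId = GLES30.glCreateShader(GLES30.GL_VERTEX_SHADER)
    GLES30.glShaderSource(vShaderId, read(this, R.raw.vertex_camera))
    GLES30.glCompileShader(vShaderId)
    // Compile the fragment shader
    val fShaderId = GLES30.glCreateShader(GLES30.GL_FRAGMENT_SHADER)
    GLES30.glShaderSource(fShaderId, read(this, R.raw.fragment_camera))
    GLES30.glCompileShader(fShaderId)
    // Link both shaders into one program
    val program = GLES30.glCreateProgram()
    GLES30.glAttachShader(program, vShaderId)
    GLES30.glAttachShader(program, fShaderId)
    GLES30.glLinkProgram(program)
    return program
}

Once the program is linked, the vertex coordinates, texture coordinates and other data can be bound. Here the data is copied once into a VBO (vertex buffer object) in GPU memory, and a VAO (vertex array object) records how the attributes are laid out, so the data does not have to be sent from CPU memory on every draw call.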

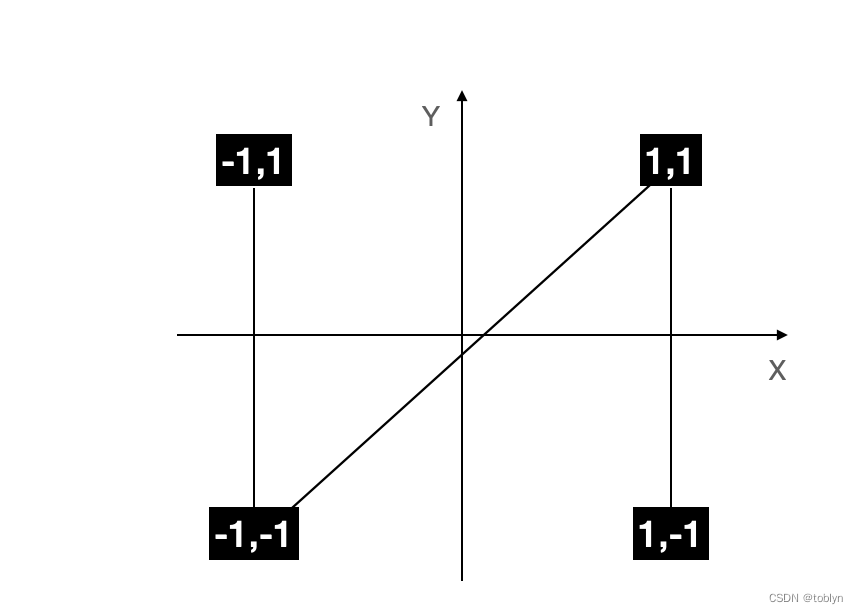

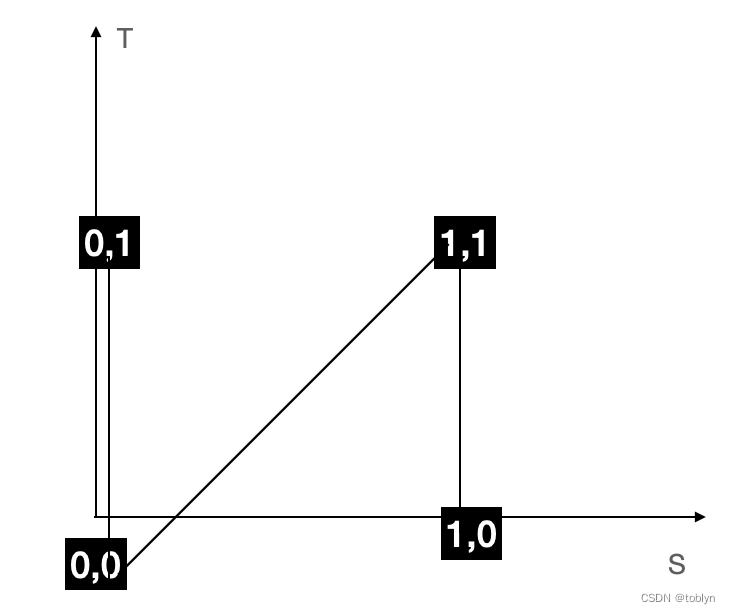

Vertex coordinates and texture coordinates:

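private val VERTEX = floatArrayOf(
    -1f, 1f, 0f,    // 0: top-left
    -1f, -1f, 0f,   // 1: bottom-left
    1f, 1f, 0f,     // 2: top-right
    1f, -1f, 0f)    // 3: bottom-right

private val TEXTURE = floatArrayOf(
    0f, 1f,   // vertex 0
    0f, 0f,   // vertex 1
    1f, 1f,   // vertex 2
    1f, 0f)   // vertex 3

private val INDICES = intArrayOf(0, 1, 2, 1, 2, 3)

The figures show the vertex layout and order. The quad is rendered as two triangles: the vertex data describes an upper-left triangle and a lower-right triangle, and each texture coordinate corresponds to the vertex at the same position. Drawing is indexed, so (0, 1, 2, 1, 2, 3) gives the vertex order of the two triangles.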
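To check which triangles the index list (0, 1, 2, 1, 2, 3) actually produces, the positions can be expanded the way GL_TRIANGLES reads them. A plain-Kotlin sketch: `expandTriangles` and `signedArea2` are hypothetical helpers, and the `VERTEX` / `INDICES` values are copied from above.

```kotlin
// Copied from the article: x, y, z per vertex, in normalized device coordinates.
val VERTEX = floatArrayOf(
    -1f, 1f, 0f,   // 0: top-left
    -1f, -1f, 0f,  // 1: bottom-left
    1f, 1f, 0f,    // 2: top-right
    1f, -1f, 0f    // 3: bottom-right
)
val INDICES = intArrayOf(0, 1, 2, 1, 2, 3)

/** Expands indexed positions into triangles of (x, y) pairs, three indices per triangle. */
fun expandTriangles(
    vertices: FloatArray, indices: IntArray, stride: Int = 3
): List<List<Pair<Float, Float>>> {
    require(indices.size % 3 == 0) { "GL_TRIANGLES needs a multiple of 3 indices" }
    return indices.toList().chunked(3).map { tri ->
        tri.map { i -> vertices[i * stride] to vertices[i * stride + 1] }
    }
}

/** Twice the signed area of a 2D triangle: positive means counter-clockwise winding. */
fun signedArea2(t: List<Pair<Float, Float>>): Float {
    val (a, b, c) = t
    return (b.first - a.first) * (c.second - a.second) -
        (b.second - a.second) * (c.first - a.first)
}
```

`expandTriangles(VERTEX, INDICES)` yields the upper-left triangle (-1, 1), (-1, -1), (1, 1) and the lower-right triangle (-1, -1), (1, 1), (1, -1), so four vertices plus six indices replace six full vertices. The two triangles have opposite winding (`signedArea2` is positive for the first and negative for the second); that is harmless because face culling is off by default, but with GL_CULL_FACE enabled an order such as (0, 1, 2, 2, 1, 3) keeps both counter-clockwise.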

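Code that fills and binds the VAO. Note that the data is uploaded with GLES30.glBufferSubData into a buffer allocated by glBufferData; once the buffer is filled, the vertex attributes must be bound to it promptly (glEnableVertexAttribArray / glVertexAttribPointer), otherwise there is no vertex data and the screen stays black.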
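The sizes, strides and offsets passed to glBufferData, glBufferSubData and glVertexAttribPointer in the VAO code follow from simple byte arithmetic: positions and texture coordinates sit back to back in one VBO (not interleaved), so each attribute has its own stride and the texture block starts right after the position block. A plain-Kotlin sketch of that arithmetic; `AttributeLayout` and `layoutOf` are hypothetical names, and 4 bytes per value matches `getMemorySize` in the article.

```kotlin
val BYTES_PER_FLOAT = 4

/** Where one attribute's data lives inside the shared VBO. */
data class AttributeLayout(val components: Int, val strideBytes: Int, val offsetBytes: Int)

/**
 * Computes glVertexAttribPointer arguments for attributes stored one block after
 * another (all positions, then all texture coordinates), plus the total buffer size.
 */
fun layoutOf(vertexCount: Int, vararg componentsPerAttribute: Int): Pair<List<AttributeLayout>, Int> {
    var offset = 0
    val layouts = componentsPerAttribute.map { comps ->
        val stride = comps * BYTES_PER_FLOAT  // tightly packed inside its own block
        AttributeLayout(comps, stride, offset).also { offset += vertexCount * stride }
    }
    return layouts to offset  // after the last block, offset equals the total size
}
```

For the article's quad, `layoutOf(4, 3, 2)` gives a stride of 12 bytes at offset 0 for `a_Position`, a stride of 8 bytes at offset 48 (`getMemorySize(VERTEX)`) for `a_TextureCoordinate`, and an 80-byte glBufferData allocation; the six Int indices add another 24 bytes in the element buffer.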

class OpenGLVideoPlayerActivity : Activity(), GLSurfaceView.Renderer,
    SurfaceTexture.OnFrameAvailableListener {

    private var mProgramId: Int = 0
    private var mVao: Int = GLES30.GL_NONE

    override fun onSurfaceCreated(gl: GL10?, config: EGLConfig?) {
        ...
        val vaoArray = IntArray(1)
        val vboArray = IntArray(2)   // [0]: positions + texture coordinates, [1]: indices
        GLES30.glGenVertexArrays(vaoArray.size, vaoArray, 0)
        GLES30.glGenBuffers(vboArray.size, vboArray, 0)
        // The attribute setup below is recorded in the bound VAO
        GLES30.glBindVertexArray(vaoArray[0])
        GLES30.glBindBuffer(GLES30.GL_ARRAY_BUFFER, vboArray[0])
        // Allocate one buffer for both blocks, then fill it: positions first, texture coordinates after
        GLES30.glBufferData(GLES30.GL_ARRAY_BUFFER, getMemorySize(VERTEX) + getMemorySize(TEXTURE), null, GLES30.GL_STATIC_DRAW)
        GLES30.glBufferSubData(GLES30.GL_ARRAY_BUFFER, 0, getMemorySize(VERTEX), VERTEX.toBuffer())
        GLES30.glBufferSubData(GLES30.GL_ARRAY_BUFFER, getMemorySize(VERTEX), getMemorySize(TEXTURE), TEXTURE.toBuffer())
        // a_Position: 3 floats per vertex, starting at byte 0
        val aPosition = GLES30.glGetAttribLocation(mProgramId, "a_Position")
        GLES30.glEnableVertexAttribArray(aPosition)
        GLES30.glVertexAttribPointer(aPosition, 3, GLES30.GL_FLOAT, false, 3 * 4, 0)
        // a_TextureCoordinate: 2 floats per vertex, starting right after the position block
        val aTextCoordinate = GLES30.glGetAttribLocation(mProgramId, "a_TextureCoordinate")
        GLES30.glEnableVertexAttribArray(aTextCoordinate)
        GLES30.glVertexAttribPointer(aTextCoordinate, 2, GLES30.GL_FLOAT, false, 2 * 4, getMemorySize(VERTEX))
        // The element buffer binding is recorded in the VAO as well
        GLES30.glBindBuffer(GLES30.GL_ELEMENT_ARRAY_BUFFER, vboArray[1])
        GLES30.glBufferData(GLES30.GL_ELEMENT_ARRAY_BUFFER, getMemorySize(INDICES), INDICES.toBuffer(), GLES30.GL_STATIC_DRAW)
        mVao = vaoArray[0]
        ...
    }

    // Size in bytes: 4 bytes per Float / Int
    private fun getMemorySize(array: FloatArray) = array.size * 4
    private fun getMemorySize(array: IntArray) = array.size * 4

    // Copy into a direct buffer in native byte order for the GL calls
    private fun FloatArray.toBuffer(): FloatBuffer {
        val buffer = ByteBuffer.allocateDirect(size * 4).order(ByteOrder.nativeOrder()).asFloatBuffer().put(this)
        buffer.position(0)
        return buffer
    }

    private fun IntArray.toBuffer(): IntBuffer {
        val buffer = ByteBuffer.allocateDirect(size * 4).order(ByteOrder.nativeOrder()).asIntBuffer().put(this)
        buffer.position(0)
        return buffer
    }
}

OpenGL external texture

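Unlike an ordinary image texture, a video texture needs a special texture type and works together with MediaPlayer: MediaPlayer is the producer of the texture images, and OpenGL is the consumer that processes the video frames.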

Generating the OpenGL texture. Note that the texture is bound to the GLES11Ext.GL_TEXTURE_EXTERNAL_OES target.
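The glTexParameteri calls in createOESTextureId select GL_CLAMP_TO_EDGE wrapping and GL_LINEAR filtering. For external textures these are also the natural choices, since the OES_EGL_image_external extension only allows CLAMP_TO_EDGE wrapping and NEAREST or LINEAR minification (no mipmaps). To show what the two settings mean, here is a CPU model of one LINEAR lookup with CLAMP_TO_EDGE on a tiny grayscale texture. It is an illustrative approximation of the GL sampling rules (texel centers at (i + 0.5) / size), not how a GPU implements them, and `sampleLinearClamped` is a hypothetical name.

```kotlin
import kotlin.math.floor

/**
 * CPU model of GL_LINEAR filtering with GL_CLAMP_TO_EDGE wrapping.
 * texels is row-major (width * height grayscale values); (u, v) are texture coordinates.
 */
fun sampleLinearClamped(texels: FloatArray, width: Int, height: Int, u: Float, v: Float): Float {
    require(texels.size == width * height) { "texel count must be width * height" }
    // Texel centers sit at (i + 0.5) / size, so shift by half a texel.
    val x = u * width - 0.5f
    val y = v * height - 0.5f
    val x0 = floor(x).toInt()
    val y0 = floor(y).toInt()
    val ax = x - x0  // horizontal blend weight toward x0 + 1
    val ay = y - y0  // vertical blend weight toward y0 + 1
    // CLAMP_TO_EDGE: out-of-range indices reuse the nearest edge texel.
    fun texel(i: Int, j: Int) =
        texels[j.coerceIn(0, height - 1) * width + i.coerceIn(0, width - 1)]
    val r0 = texel(x0, y0) * (1 - ax) + texel(x0 + 1, y0) * ax
    val r1 = texel(x0, y0 + 1) * (1 - ax) + texel(x0 + 1, y0 + 1) * ax
    return r0 * (1 - ay) + r1 * ay
}
```

With a 2x1 texture `[0, 1]`, u = 0.5 blends both texels to 0.5, while u = 0 and u = 1 return the edge values 0 and 1 instead of wrapping around to the opposite side, which is what keeps the left and right borders of the video from bleeding into each other.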

private fun createOESTextureId(): Int {
    val textureArray = IntArray(1)   // receives one texture name
    GLES30.glGenTextures(1, textureArray, 0)
    GLES30.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, textureArray[0])
    GLES30.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES30.GL_TEXTURE_WRAP_S, GLES30.GL_CLAMP_TO_EDGE)
    GLES30.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES30.GL_TEXTURE_WRAP_T, GLES30.GL_CLAMP_TO_EDGE)
    GLES30.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES30.GL_TEXTURE_MAG_FILTER, GLES30.GL_LINEAR)
    GLES30.glTexParameteri(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES30.GL_TEXTURE_MIN_FILTER, GLES30.GL_LINEAR)
    return textureArray[0]
}

OpenGL and MediaPlayer are connected through the SurfaceTexture class. When SurfaceTexture.OnFrameAvailableListener#onFrameAvailable is called, a new frame is waiting in the buffer and the picture can be refreshed (my own understanding).
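The SurfaceTexture documentation notes that onFrameAvailable may be invoked on an arbitrary thread, not the GL thread, which is why the article hands the event over through an AtomicBoolean. For the flag to mean "a new frame is waiting", the GL thread should also clear it when it latches the frame; otherwise, after the first frame, updateTexImage() runs on every draw. A plain-Kotlin sketch of that handshake; `FrameGate` is a hypothetical name.

```kotlin
import java.util.concurrent.atomic.AtomicBoolean

/** Hands "a new frame is ready" from the frame callback to the GL thread. */
class FrameGate {
    private val pending = AtomicBoolean(false)

    /** Producer side: call from onFrameAvailable on whatever thread it arrives on. */
    fun signal() = pending.set(true)

    /**
     * Consumer side: call at the start of onDrawFrame. Returns true once after one or
     * more signals, and clears the flag in the same atomic step.
     */
    fun consume(): Boolean = pending.compareAndSet(true, false)
}
```

Several signals before a single draw collapse into one `consume()`, which fits updateTexImage(), since it latches the most recent frame from the stream anyway.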

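mTextureId = createOESTextureId()
// Wrap the OES texture; MediaPlayer renders decoded frames into it through a Surface
mSurfaceTexture = SurfaceTexture(mTextureId)
mSurfaceTexture.setOnFrameAvailableListener(this)
mediaPlayer.setSurface(Surface(mSurfaceTexture))

In the Renderer's onDrawFrame, SurfaceTexture updates the texture from its buffer (updateTexImage()) and OpenGL draws the latest frame to the window. Note SurfaceTexture#getTransformMatrix: the latest transform matrix must be fetched and multiplied with the texture coordinates to get the correct sampling coordinates. With a fixed video and simple texture coordinates it may look acceptable without it, but the matrix can contain a flip, rotation or crop, so applying it is the safer choice.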

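override fun onDrawFrame(gl: GL10?) {
    ...
    // Latch a frame only if a new one has arrived, clearing the flag in the same step
    if (mAtomicBoolean.compareAndSet(true, false)) {
        mSurfaceTexture.updateTexImage()
        mSurfaceTexture.getTransformMatrix(mTextureMatrix)
    }
    ...
    // Select texture unit 0 first, then bind the external texture to it
    GLES30.glActiveTexture(GLES30.GL_TEXTURE0)
    GLES30.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, mTextureId)
}

Finally, MediaPlayer plays the media file.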

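Overall, playing 2D video with OpenGL is fairly simple, with no vertex coordinate transformations, but OpenGL fundamentals still matter: many parameters have no detailed tutorial online, so expect to dig through the documentation. That's all for now; the complete code follows. When I have time I'll come back and explain what the parameters mean in detail, which is actually quite important.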

package com.opengl

import android.app.Activity
import android.content.Context
import android.content.res.Resources
import android.graphics.SurfaceTexture
import android.media.MediaPlayer
import android.opengl.GLES11Ext
import android.opengl.GLES30
import android.opengl.GLSurfaceView
import android.os.Bundle
import android.view.Surface
import com.didi.davinci.avm.R
import java.io.BufferedReader
import java.io.IOException
import java.io.InputStreamReader
import java.nio.ByteBuffer
import java.nio.ByteOrder
import java.nio.FloatBuffer
import java.nio.IntBuffer
import java.util.concurrent.atomic.AtomicBoolean
import javax.microedition.khronos.egl.EGLConfig
import javax.microedition.khronos.opengles.GL10

// Full-screen quad in normalized device coordinates (x, y, z per vertex):
// top-left, bottom-left, top-right, bottom-right
private val VERTEX = floatArrayOf(
    -1f, 1f, 0f, -1f, -1f, 0f, 1f, 1f, 0f, 1f, -1f, 0f
)

// Texture coordinates (s, t), one pair per vertex above
private val TEXTURE = floatArrayOf(
    0f, 1f, 0f, 0f, 1f, 1f, 1f, 0f
)

// Two triangles sharing vertices 1 and 2
private val INDICES = intArrayOf(0, 1, 2, 1, 2, 3)

class OpenGLVideoPlayerActivity : Activity(), GLSurfaceView.Renderer,
    SurfaceTexture.OnFrameAvailableListener {

    private lateinit var mGLSurfaceView: GLSurfaceView
    private var mProgramId: Int = 0
    private var mVao: Int = GLES30.GL_NONE
    private val mediaPlayer: MediaPlayer = MediaPlayer().apply {
        isLooping = true
    }
    private lateinit var mSurfaceTexture: SurfaceTexture
    private var mTextureId: Int = 0
    private val mTextureMatrix = FloatArray(16)
    private var mTextureMatrixLocation: Int = 0
    // Set by onFrameAvailable, consumed on the GL thread in onDrawFrame
    private val mAtomicBoolean = AtomicBoolean(false)

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)
        setContentView(R.layout.activity_opengl_video)
        mGLSurfaceView = findViewById<GLSurfaceView>(R.id.surfaceView).apply {
            setEGLContextClientVersion(3)                  // OpenGL ES 3.0 context
            setRenderer(this@OpenGLVideoPlayerActivity)   // starts the GL thread
        }
        with(mediaPlayer) {
            setDataSource(assets.openFd("ai.mp4"))  // AssetFileDescriptor overload: API 24+
            prepare()
            start()
        }
    }

    // GLSurfaceView must be paused and resumed together with the Activity
    override fun onResume() {
        super.onResume()
        mGLSurfaceView.onResume()
    }

    override fun onPause() {
        mGLSurfaceView.onPause()
        super.onPause()
    }

    override fun onDestroy() {
        mediaPlayer.release()
        super.onDestroy()
    }

    override fun onSurfaceCreated(gl: GL10?, config: EGLConfig?) {
        mProgramId = newProgram()

        val vaoArray = IntArray(1)
        val vboArray = IntArray(2)   // [0]: positions + texture coordinates, [1]: indices
        GLES30.glGenVertexArrays(vaoArray.size, vaoArray, 0)
        GLES30.glGenBuffers(vboArray.size, vboArray, 0)
        GLES30.glBindVertexArray(vaoArray[0])

        // One buffer: position block first, texture-coordinate block right after it
        GLES30.glBindBuffer(GLES30.GL_ARRAY_BUFFER, vboArray[0])
        GLES30.glBufferData(
            GLES30.GL_ARRAY_BUFFER,
            getMemorySize(VERTEX) + getMemorySize(TEXTURE),
            null,
            GLES30.GL_STATIC_DRAW
        )
        GLES30.glBufferSubData(GLES30.GL_ARRAY_BUFFER, 0, getMemorySize(VERTEX), VERTEX.toBuffer())
        GLES30.glBufferSubData(
            GLES30.GL_ARRAY_BUFFER,
            getMemorySize(VERTEX),
            getMemorySize(TEXTURE),
            TEXTURE.toBuffer()
        )

        val aPosition = GLES30.glGetAttribLocation(mProgramId, "a_Position")
        GLES30.glEnableVertexAttribArray(aPosition)
        GLES30.glVertexAttribPointer(aPosition, 3, GLES30.GL_FLOAT, false, 3 * 4, 0)

        val aTextCoordinate = GLES30.glGetAttribLocation(mProgramId, "a_TextureCoordinate")
        GLES30.glEnableVertexAttribArray(aTextCoordinate)
        GLES30.glVertexAttribPointer(
            aTextCoordinate, 2, GLES30.GL_FLOAT, false, 2 * 4, getMemorySize(VERTEX)
        )

        // The element buffer binding is recorded in the VAO as well
        GLES30.glBindBuffer(GLES30.GL_ELEMENT_ARRAY_BUFFER, vboArray[1])
        GLES30.glBufferData(
            GLES30.GL_ELEMENT_ARRAY_BUFFER,
            getMemorySize(INDICES),
            INDICES.toBuffer(),
            GLES30.GL_STATIC_DRAW
        )
        GLES30.glBindVertexArray(0)   // done recording; onDrawFrame rebinds mVao

        mTextureMatrixLocation = GLES30.glGetUniformLocation(mProgramId, "u_TextureMatrix")
        mVao = vaoArray[0]

        // External texture that MediaPlayer renders into through a Surface
        mTextureId = createOESTextureId()
        mSurfaceTexture = SurfaceTexture(mTextureId)
        mSurfaceTexture.setOnFrameAvailableListener(this)
        mediaPlayer.setSurface(Surface(mSurfaceTexture))
    }

    override fun onSurfaceChanged(gl: GL10?, width: Int, height: Int) {
        GLES30.glViewport(0, 0, width, height)
    }

    override fun onDrawFrame(gl: GL10?) {
        // Latch a new video frame only when one has arrived, clearing the flag atomically
        if (mAtomicBoolean.compareAndSet(true, false)) {
            mSurfaceTexture.updateTexImage()
            mSurfaceTexture.getTransformMatrix(mTextureMatrix)
        }
        // Clear to green before drawing the quad
        GLES30.glClearColor(0f, 1f, 0f, 1f)
        GLES30.glClear(GLES30.GL_COLOR_BUFFER_BIT)
        GLES30.glUseProgram(mProgramId)
        GLES30.glBindVertexArray(mVao)
        // u_TextureSampler is never set, so it keeps its default value 0: texture unit 0
        GLES30.glActiveTexture(GLES30.GL_TEXTURE0)
        GLES30.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, mTextureId)
        GLES30.glUniformMatrix4fv(mTextureMatrixLocation, 1, false, mTextureMatrix, 0)
        GLES30.glDrawElements(GLES30.GL_TRIANGLES, INDICES.size, GLES30.GL_UNSIGNED_INT, 0)
    }

    private fun newProgram(): Int {
        val vShaderId = GLES30.glCreateShader(GLES30.GL_VERTEX_SHADER)
        GLES30.glShaderSource(vShaderId, read(this, R.raw.vertex_camera))
        GLES30.glCompileShader(vShaderId)

        val fShaderId = GLES30.glCreateShader(GLES30.GL_FRAGMENT_SHADER)
        GLES30.glShaderSource(fShaderId, read(this, R.raw.fragment_camera))
        GLES30.glCompileShader(fShaderId)

        val program = GLES30.glCreateProgram()
        GLES30.glAttachShader(program, vShaderId)
        GLES30.glAttachShader(program, fShaderId)
        GLES30.glLinkProgram(program)
        return program
    }

    // Reads a shader source file from res/raw into a String
    private fun read(context: Context, id: Int): String {
        val builder = StringBuilder()
        try {
            context.resources.openRawResource(id).use {
                InputStreamReader(it).use { sr ->
                    BufferedReader(sr).use { br ->
                        var textLine: String?
                        while (br.readLine().also { textLine = it } != null) {
                            builder.append(textLine)
                            builder.append("\n")
                        }
                    }
                }
            }
        } catch (e: IOException) {
            e.printStackTrace()
        } catch (e: Resources.NotFoundException) {
            e.printStackTrace()
        }
        return builder.toString()
    }

    // Size in bytes: 4 bytes per Float / Int
    private fun getMemorySize(array: FloatArray) = array.size * 4
    private fun getMemorySize(array: IntArray) = array.size * 4

    // Copy into a direct buffer in native byte order for the GL calls
    private fun FloatArray.toBuffer(): FloatBuffer {
        val buffer =
            ByteBuffer.allocateDirect(size * 4).order(ByteOrder.nativeOrder()).asFloatBuffer()
                .put(this)
        buffer.position(0)
        return buffer
    }

    private fun IntArray.toBuffer(): IntBuffer {
        val buffer =
            ByteBuffer.allocateDirect(size * 4).order(ByteOrder.nativeOrder()).asIntBuffer()
                .put(this)
        buffer.position(0)
        return buffer
    }

    private fun createOESTextureId(): Int {
        val textureArray = IntArray(1)   // receives one texture name
        GLES30.glGenTextures(1, textureArray, 0)
        GLES30.glBindTexture(GLES11Ext.GL_TEXTURE_EXTERNAL_OES, textureArray[0])
        GLES30.glTexParameteri(
            GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES30.GL_TEXTURE_WRAP_S, GLES30.GL_CLAMP_TO_EDGE
        )
        GLES30.glTexParameteri(
            GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES30.GL_TEXTURE_WRAP_T, GLES30.GL_CLAMP_TO_EDGE
        )
        GLES30.glTexParameteri(
            GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES30.GL_TEXTURE_MAG_FILTER, GLES30.GL_LINEAR
        )
        GLES30.glTexParameteri(
            GLES11Ext.GL_TEXTURE_EXTERNAL_OES, GLES30.GL_TEXTURE_MIN_FILTER, GLES30.GL_LINEAR
        )
        return textureArray[0]
    }

    override fun onFrameAvailable(surfaceTexture: SurfaceTexture?) {
        mAtomicBoolean.set(true)
    }
}

Vertex shader (R.raw.vertex_camera):

attribute vec4 a_Position;
attribute vec4 a_TextureCoordinate;
uniform mat4 u_TextureMatrix;
uniform mat4 u_MvpMatrix;   // declared but not used in this example
varying vec2 vTextureCoord;

void main() {
    vTextureCoord = (u_TextureMatrix * a_TextureCoordinate).xy;
    gl_Position = a_Position;
}

Fragment shader (R.raw.fragment_camera):

#extension GL_OES_EGL_image_external : require
precision mediump float;
uniform samplerExternalOES u_TextureSampler;
varying vec2 vTextureCoord;

void main() {
    gl_FragColor = texture2D(u_TextureSampler, vTextureCoord);
}
