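In Flink, data inevitably flows between task operators, roughly along the path ResultPartition --> Netty --> InputGate. This post focuses on the first half: what happens after an operator has finished processing a record.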

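1. Processing data in the operator

After an operator processes a record, we typically call out.collect(...) to send it downstream. This call wraps the data in a StreamRecord, which also carries metadata such as the timestamp.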

/** The actual value held by this record. */
private T value;

/** The timestamp of the record. */
private long timestamp;

/** Flag whether the timestamp is actually set. */
private boolean hasTimestamp;

2. Handing data to the RecordWriter for distribution

The RecordWriter is responsible for writing data into the ResultPartition. Handing a record to the RecordWriter is simple; the code below, from the RecordWriterOutput class, does it.

@Override
public void collect(StreamRecord<OUT> record) {
    if (this.outputTag != null) {
        // we are not responsible for emitting to the main output.
        return;
    }

    pushToRecordWriter(record);
}

@Override
public <X> void collect(OutputTag<X> outputTag, StreamRecord<X> record) {
    if (this.outputTag == null || !this.outputTag.equals(outputTag)) {
        // we are not responsible for emitting to the side-output specified by this
        // OutputTag.
        return;
    }

    pushToRecordWriter(record);
}

private <X> void pushToRecordWriter(StreamRecord<X> record) {
    serializationDelegate.setInstance(record);

    try {
        recordWriter.emit(serializationDelegate);
    }
    catch (Exception e) {
        throw new RuntimeException(e.getMessage(), e);
    }
}

3. How the RecordWriter handles data

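When a RecordWriter is initialized, it starts a daemon thread by default that periodically flushes the data in its channels. No thread is started when the timeout is -1 or 0; with a timeout of 0 the writer flushes after every record instead.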
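The three timeout modes can be illustrated with a small standalone sketch. This is not Flink code: `FlushPolicySketch`, `SketchWriter`, and `flushCount` are hypothetical names, buffering is omitted, and a "flush" is modeled as nothing more than a counter increment.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class FlushPolicySketch {

    /** Hypothetical stand-in for a record writer; only the flush policy is modeled. */
    public static final class SketchWriter {
        public final boolean flushAlways;
        public final Thread flusher; // null when no periodic flusher is needed
        public final AtomicInteger flushCount = new AtomicInteger();
        private volatile boolean running = true;

        public SketchWriter(long timeoutMillis) {
            if (timeoutMillis < -1) {
                throw new IllegalArgumentException("timeout must be >= -1");
            }
            // timeout == 0: flush after every record, no background thread
            this.flushAlways = (timeoutMillis == 0);
            if (timeoutMillis == -1 || timeoutMillis == 0) {
                this.flusher = null;
            } else {
                // timeout > 0: a daemon thread flushes all channels periodically
                Thread t = new Thread(() -> {
                    while (running) {
                        try {
                            Thread.sleep(timeoutMillis);
                        } catch (InterruptedException e) {
                            return; // close() was called
                        }
                        flushAll();
                    }
                }, "sketch-output-flusher");
                t.setDaemon(true);
                t.start();
                this.flusher = t;
            }
        }

        public void emit(String record) {
            // Serialization and buffering are omitted in this sketch.
            if (flushAlways) {
                flushAll();
            }
        }

        public void flushAll() {
            flushCount.incrementAndGet();
        }

        public void close() {
            running = false;
            if (flusher != null) {
                flusher.interrupt();
            }
        }
    }

    public static void main(String[] args) {
        SketchWriter always = new SketchWriter(0);
        always.emit("a");
        always.emit("b");
        System.out.println("flushes with timeout 0: " + always.flushCount.get());

        SketchWriter never = new SketchWriter(-1);
        never.emit("a");
        System.out.println("flushes with timeout -1: " + never.flushCount.get());

        SketchWriter periodic = new SketchWriter(50);
        System.out.println("periodic flusher is daemon: " + periodic.flusher.isDaemon());
        periodic.close();
    }
}
```

The trade-off the sketch hints at is latency versus throughput: flushing per record minimizes latency, while a periodic flusher lets buffers fill up before they are handed downstream.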

/**
 * @param writer   the ResultPartitionWriter to write to
 * @param timeout  flush interval; StreamTask sets it to 100 ms by default
 * @param taskName name of the owning task, used in the flusher thread name
 */
RecordWriter(ResultPartitionWriter writer, long timeout, String taskName) {
    this.targetPartition = writer;
    this.numberOfChannels = writer.getNumberOfSubpartitions();

    this.serializer = new SpanningRecordSerializer<T>();

    checkArgument(timeout >= -1);
    this.flushAlways = (timeout == 0);
    if (timeout == -1 || timeout == 0) {
        outputFlusher = null;
    } else {
        String threadName = taskName == null ?
            DEFAULT_OUTPUT_FLUSH_THREAD_NAME :
            DEFAULT_OUTPUT_FLUSH_THREAD_NAME + " for " + taskName;

        // Start a daemon thread that periodically calls flushAll
        outputFlusher = new OutputFlusher(threadName, timeout);
        outputFlusher.start();
    }
}

The RecordWriter receives a record, serializes it, and writes it to a channel in the emit method.

protected void emit(T record, int targetChannel) throws IOException, InterruptedException {
    checkErroneous();

    // Serialize the record into a ByteBuffer (the Java NIO buffer)
    serializer.serializeRecord(record);

    // Make sure we don't hold onto the large intermediate serialization buffer for too long
    if (copyFromSerializerToTargetChannel(targetChannel)) {
        serializer.prune();
    }
}

First, look at the serialization method, which writes the record into a java.nio.ByteBuffer. The dataBuffer below is an instance of java.nio.ByteBuffer.

public void serializeRecord(T record) throws IOException {
    if (CHECKED) {
        if (dataBuffer.hasRemaining()) {
            throw new IllegalStateException("Pending serialization of previous record.");
        }
    }

    serializationBuffer.clear();
    // the initial capacity of the serialization buffer should be no less than 4
    serializationBuffer.skipBytesToWrite(4);

    // This is where each type's own serialization logic runs. However the record
    // is serialized, the bytes end up in serializationBuffer.
    // write data and length
    record.write(serializationBuffer);

    int len = serializationBuffer.length() - 4;
    serializationBuffer.setPosition(0);
    serializationBuffer.writeInt(len);
    serializationBuffer.skipBytesToWrite(len);

    dataBuffer = serializationBuffer.wrapAsByteBuffer();
}

emit then calls copyFromSerializerToTargetChannel, which writes the data to the target channel. The channel receives data through a BufferBuilder, and the BufferBuilder wraps Flink's well-known MemorySegment.

protected boolean copyFromSerializerToTargetChannel(int targetChannel) throws IOException, InterruptedException {
    // We should reset the initial position of the intermediate serialization buffer before
    // copying, so the serialization results can be copied to multiple target buffers.
    serializer.reset();

    boolean pruneTriggered = false;
    // Get the current BufferBuilder for this channel, requesting a new one if none exists.
    // A BufferBuilder wraps a MemorySegment.
    BufferBuilder bufferBuilder = getBufferBuilder(targetChannel);
    // Copy data into the bufferBuilder
    SerializationResult result = serializer.copyToBufferBuilder(bufferBuilder);

    /*
     * copyToBufferBuilder can report three outcomes:
     * 1. The serialized record (the NIO ByteBuffer) still has bytes left and the MemorySegment is full:
     *    isFullBuffer = true, isFullRecord = false. Finish this buffer, request a new one, keep copying.
     * 2. The record was copied completely and the MemorySegment still has space:
     *    isFullBuffer = false, isFullRecord = true. The loop is not entered (or ends here).
     * 3. The record was copied completely and the MemorySegment is full as well:
     *    isFullBuffer = true, isFullRecord = true. Finish the buffer, clear the channel's
     *    current BufferBuilder, and break out of the loop.
     */
    while (result.isFullBuffer()) {
        // Mark the buffer as finished (this is also where the bytes-out metric is updated)
        finishBufferBuilder(bufferBuilder);

        // If this was a full record, we are done. Not breaking out of the loop at this point
        // will lead to another buffer request before breaking out (that would not be a
        // problem per se, but it can lead to stalls in the pipeline).
        if (result.isFullRecord()) {
            pruneTriggered = true;
            emptyCurrentBufferBuilder(targetChannel);
            break;
        }

        // Request a MemorySegment from the LocalBufferPool. If one is available it is
        // returned directly; otherwise the call waits and requests again.
        bufferBuilder = requestNewBufferBuilder(targetChannel);
        // Copy the remaining data into the new bufferBuilder
        result = serializer.copyToBufferBuilder(bufferBuilder);
    }
    checkState(!serializer.hasSerializedData(), "All data should be written at once");

    if (flushAlways) {
        flushTargetPartition(targetChannel);
    }
    return pruneTriggered;
}

This is where memory is requested, in requestNewBufferBuilder, which obtains a MemorySegment. It involves the interaction between LocalBufferPool and NetworkBufferPool; readers unfamiliar with these two can refer to the author's other article on Flink's memory management mechanism (Flink内存管理机制). The main code involved is shown below. The methods come from several classes; you can find them by tracing the calls starting from requestNewBufferBuilder.

// RecordWriter implementation
@Override
public BufferBuilder requestNewBufferBuilder(int targetChannel) throws IOException, InterruptedException {
    checkState(bufferBuilders[targetChannel] == null || bufferBuilders[targetChannel].isFinished());

    BufferBuilder bufferBuilder = targetPartition.getBufferBuilder();
    targetPartition.addBufferConsumer(bufferBuilder.createBufferConsumer(), targetChannel);
    bufferBuilders[targetChannel] = bufferBuilder;
    return bufferBuilder;
}

// ResultPartition
@Override
public BufferBuilder getBufferBuilder() throws IOException, InterruptedException {
    checkInProduceState();

    return bufferPool.requestBufferBuilderBlocking();
}

// LocalBufferPool
@Override
public BufferBuilder requestBufferBuilderBlocking() throws IOException, InterruptedException {
    return toBufferBuilder(requestMemorySegmentBlocking());
}

private BufferBuilder toBufferBuilder(MemorySegment memorySegment) {
    if (memorySegment == null) {
        return null;
    }
    return new BufferBuilder(memorySegment, this);
}

private MemorySegment requestMemorySegmentBlocking() throws InterruptedException, IOException {
    MemorySegment segment;
    while ((segment = requestMemorySegment()) == null) {
        try {
            // wait until available
            isAvailable().get();
        } catch (ExecutionException e) {
            LOG.error("The available future is completed exceptionally.", e);
            ExceptionUtils.rethrow(e);
        }
    }
    return segment;
}

OK, that covers how memory is requested. Next is how data is copied from the serialization buffer (the ByteBuffer) into the BufferBuilder. This is mainly done by the copyToBufferBuilder method, which also determines whether writing can continue. The code is as follows.

@Override

public SerializationResult copyToBufferBuilder(BufferBuilder targetBuffer) {

targetBuffer.append(dataBuffer);

targetBuffer.commit();

//判断是否有剩余空间,可以继续往下写

return getSerializationResult(targetBuffer);

}

private SerializationResult getSerializationResult(BufferBuilder targetBuffer) {

if (dataBuffer.hasRemaining()) {

//如果nio的ByteBuffer(缓冲区)还有空间,那么继续往这个dataBuffer写

return SerializationResult.PARTIAL_RECORD_MEMORY_SEGMENT_FULL;

}

//如果满了,就会判断是否是memory_segment满了

return !targetBuffer.isFull()

? SerializationResult.FULL_RECORD //只是缓冲区满了

: SerializationResult.FULL_RECORD_MEMORY_SEGMENT_FULL; //这个memory_segemnt满了

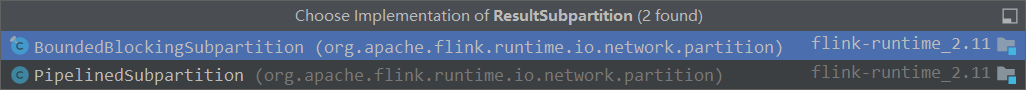

}之后就到了flush的环节了,这里会触发 ResultPartition的flush方法,然后触发ResultSubpartition的flush方法,其中ResultSubpartition方法有两个实现类,BoundedBlockingSubpartition是对应的有界数据集,而PipelinedSubpartition对应的是有界和无界数据集。
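To make the three SerializationResult outcomes from the copy step concrete, here is a minimal sketch under stated assumptions, not Flink's implementation. A plain java.nio.ByteBuffer stands in for both the serialization buffer and the MemorySegment-backed BufferBuilder, and `SpanningCopySketch`, `Channel`, `serialize`, and `copyTo` are hypothetical names. Only the enum constants mirror the names used in the code above.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

public class SpanningCopySketch {

    public enum Result {
        PARTIAL_RECORD_MEMORY_SEGMENT_FULL, FULL_RECORD, FULL_RECORD_MEMORY_SEGMENT_FULL
    }

    /** Frames a payload as [4-byte length][payload], ready to be read from position 0. */
    public static ByteBuffer serialize(byte[] payload) {
        ByteBuffer data = ByteBuffer.allocate(4 + payload.length);
        data.putInt(payload.length);
        data.put(payload);
        data.flip();
        return data;
    }

    /** Copies as many bytes as fit into target and reports which side ran out first. */
    public static Result copyTo(ByteBuffer data, ByteBuffer target) {
        int n = Math.min(data.remaining(), target.remaining());
        ByteBuffer slice = data.duplicate();
        slice.limit(slice.position() + n);
        target.put(slice);
        data.position(data.position() + n);
        if (data.hasRemaining()) {
            return Result.PARTIAL_RECORD_MEMORY_SEGMENT_FULL;
        }
        return target.hasRemaining() ? Result.FULL_RECORD : Result.FULL_RECORD_MEMORY_SEGMENT_FULL;
    }

    /** Hypothetical channel with fixed-size buffers; a new buffer is allocated on demand. */
    public static final class Channel {
        public final int bufferSize;
        public final List<ByteBuffer> finished = new ArrayList<>();
        public ByteBuffer current; // null means "request a new buffer on the next write"

        public Channel(int bufferSize) {
            this.bufferSize = bufferSize;
        }

        public void emit(byte[] payload) {
            ByteBuffer data = serialize(payload);
            if (current == null) {
                current = ByteBuffer.allocate(bufferSize);
            }
            Result result = copyTo(data, current);
            // Anything other than FULL_RECORD means the current buffer is full.
            while (result != Result.FULL_RECORD) {
                finished.add(current);
                if (result == Result.FULL_RECORD_MEMORY_SEGMENT_FULL) {
                    current = null; // record done and buffer full: start fresh next time
                    break;
                }
                current = ByteBuffer.allocate(bufferSize); // record spans into the next buffer
                result = copyTo(data, current);
            }
        }
    }

    public static void main(String[] args) {
        Channel channel = new Channel(8);
        channel.emit(new byte[10]); // 14 framed bytes: 8 in the first buffer, 6 in the second
        System.out.println("finished buffers: " + channel.finished.size());
        System.out.println("bytes in current buffer: " + channel.current.position());
    }
}
```

A record larger than one buffer simply spans several of them; the length prefix written at the front is what lets a reader on the other side reassemble it.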

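Let's look at the flush of PipelinedSubpartition. Eventually it submits a task to the Netty event loop (ctx.executor()), and that task fires a user event on the Netty pipeline so that the data gets written out.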

@Override
public void notifyDataAvailable() {
    requestQueue.notifyReaderNonEmpty(this);
}

void notifyReaderNonEmpty(final NetworkSequenceViewReader reader) {
    // The notification might come from the same thread. For the initial writes this
    // might happen before the reader has set its reference to the view, because
    // creating the queue and the initial notification happen in the same method call.
    // This can be resolved by separating the creation of the view and allowing
    // notifications.

    // TODO This could potentially have a bad performance impact as in the
    // worst case (network consumes faster than the producer) each buffer
    // will trigger a separate event loop task being scheduled.
    ctx.executor().execute(() -> ctx.pipeline().fireUserEventTriggered(reader));
}
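The hand-off in notifyReaderNonEmpty follows a common pattern: the producing thread does not write to the network itself, it only enqueues a task on a single event-loop thread. The sketch below imitates that pattern with a plain single-threaded ExecutorService instead of Netty. `EventLoopNotifySketch`, `RequestQueue`, `handledOn`, and the thread name are hypothetical, and the real handler does far more than record a string.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

public class EventLoopNotifySketch {

    /** Hypothetical request queue; a single-threaded executor plays the role of the event loop. */
    public static final class RequestQueue {
        private final ExecutorService eventLoop = Executors.newSingleThreadExecutor(r -> {
            Thread t = new Thread(r, "sketch-event-loop");
            t.setDaemon(true);
            return t;
        });

        /** Records which reader was handled on which thread. */
        public final List<String> handledOn = new CopyOnWriteArrayList<>();

        /** Called by the producing thread; the actual work runs later on the event loop. */
        public void notifyReaderNonEmpty(String readerId) {
            eventLoop.execute(() ->
                handledOn.add(readerId + "@" + Thread.currentThread().getName()));
        }

        /** Stops accepting tasks and waits for the already queued ones to finish. */
        public void shutdownAndWait() {
            eventLoop.shutdown();
            try {
                eventLoop.awaitTermination(5, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }

    public static void main(String[] args) {
        RequestQueue queue = new RequestQueue();
        queue.notifyReaderNonEmpty("reader-1");
        queue.notifyReaderNonEmpty("reader-2");
        queue.shutdownAndWait();
        System.out.println(queue.handledOn);
    }
}
```

Because every notification becomes its own task, a fast consumer can cause one scheduled task per buffer, which is the performance concern the TODO in the original code points at.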
