案例说明:

Kingbase V8R3集群,集群启动正常,备库数据库服务正常,流复制状态正常。但是备库在show pool_nodes下查看是‘down’状态,通过pcp_attach_node重新注册节点后,仍然是‘down’,通过复制(cp)主库data方式重建备库后,仍然没有解决。

此文档,详细介绍了此问题的分析和解决过程。适用版本: KingbaseES V8R3

集群节点信息:

一、问题现象

1、节点状态信息

TEST=# show pool_nodes;

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+-------+--------+-----------+---------+------------+-------------------+-------------------

0 | 192.168.1.101 | 54321 | up | 0.500000 | primary | 0 | true | 0

1 | 192.168.1.102 | 54321 | down | 0.500000 | standby | 0 | false | 0

(2 rows)

### 如上所示,备库节点状态‘down’。2、流复制状态信息

TEST=# select * from sys_stat_replication;

PID | USESYSID | USENAME | APPLICATION_NAME | CLIENT_ADDR | CLIENT_HOSTNAME | CLIENT_PORT | BACKEND_START |BACKEND_XMIN | STATE | SENT_LOCATION | WRITE_LOCATION | FLUSH_LOCATION | REPLAY_LOCATION | SYNC_PRIORITY | SYNC_STATE

-------+----------+---------+------------------+---------------+-----------------+-------------+-------------------------------+--------------+-----------+---------------+----------------+----------------+-----------------+---------------+------------

11393 | 10 | SYSTEM | node102 | 192.168.1.102 | | 28251 | 2022-09-21 16:25:46.638372+08 | | streaming | 2/41237A0 | 2/41237A0 | 2/41237A0 | 2/41237A0 | 2 | sync

(1 row)二、问题分析和解决Tips: 一般对于以上问题,可以通过pcp_attach_node方式注册节点,恢复到‘up’状态。

1、注册备库节点

[kingbase@node101 bin]$ ./pcp_attach_node -U kingbase 1

Password:

pcp_attach_node -- Command Successful

[kingbase@node101 bin]$ ./pcp_node_info -U kingbase 1

Password:

192.168.1.102 54321 1 0.500000 waiting

[kingbase@node101 bin]$ ./pcp_node_info -U kingbase 1

Password:

192.168.1.102 54321 2 0.500000 up

[kingbase@node101 bin]$ ./pcp_node_info -U kingbase 1

Password:

192.168.1.102 54321 3 0.500000 down

##如上所示,执行备库节点注册后仍然是‘down’状态。2、查看备库recovery.log

......

primary node/Im node status is changed, primary ip[192.168.1.101], recovery.conf NEED_CHANGE [0] (0 is need ), I,m status is [1] (1 is down), I will be in recovery.

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+-------+--------+-----------+---------+------------+-------------------+-------------------

0 | 192.168.1.101 | 54321 | up | 0.500000 | primary | 0 | true | 0

1 | 192.168.1.102 | 54321 | down | 0.500000 | standby | 0 | false | 0

(2 rows)

if recover node up, let it down , for rewind

waiting for server to shut down.... done

server stopped

2022-09-21 16:18:36 set /home/kingbase/cluster/R3HA/db/data down now... already down , check again

wait kb stop 5 sec .......

2022-09-21 16:18:37 sys_rewind...

sys_rewind --target-data=/home/kingbase/cluster/R3HA/db/data --source-server="host=192.168.1.101 port=54321 user=SUPERMANAGER_V8ADMIN dbname=TEST"

datadir_source = /home/kingbase/cluster/R3HA/db/data

rewinding from last common checkpoint at 2/125C2A0 on timeline 19

find last common checkpoint start time from 2022-09-21 16:18:37.147787 CST to 2022-09-21 16:18:37.178836 CST, in "0.031049" seconds.

reading source file list

reading target file list

reading WAL in target

Rewind datadir file from source

update the control file: minRecoveryPoint is '2/127FA40', minRecoveryPointTLI is '19', and database state is 'in archive recovery'

rewind start wal location 2/125C268 (file 000000130000000200000001), end wal location 2/127FA40 (file 000000130000000200000001). time from 2022-09-21 16:18:37.147787 CST to 2022-09-21 16:18:38.887227 CST, in "1.739440" seconds.

Done!

server started

ksql "port=54321 user=SUPERMANAGER_V8ADMIN dbname=TEST connect_timeout=10" -c "select 33333;"

SYS_CREATE_PHYSICAL_REPLICATION_SLOT

--------------------------------------

(slot_node101,)

(1 row)

2022-09-21 16:18:43 create the slot [slot_node101] success.

SYS_CREATE_PHYSICAL_REPLICATION_SLOT

--------------------------------------

(slot_node102,)

(1 row)

2022-09-21 16:18:43 create the slot [slot_node102] success.

2022-09-21 16:18:43 start up standby successful!

can not get the replication of myself

---日志显示,在执行pcp_attach_node后,执行sys_rewind做节点的recovery,

sys_rewind执行成功,备库仍然为‘down’状态。3、查看备库recovery配置文件1)recovery.conf 配置

[kingbase@node102 data]$ cat recovery.conf

standby_mode='on'

primary_conninfo='port=54321 host=192.168.1.101 user=SYSTEM password=MTIzNDU2 application_name=node101

primary_slot_name ='slot_node101'2)备库etc/recovery.done

[kingbase@node102 data]$ cat etc/recovery.done

standby_mode='on'

primary_conninfo='port=54321 host=192.168.1.101 user=SYSTEM password=MTIzNDU2 application_name=node101

primary_slot_name ='slot_node101'3)查看主库recovery.done

[kingbase@node101 bin]$ cat ../data/recovery.done

standby_mode='on'

primary_conninfo='port=54321 host=192.168.1.102 user=SYSTEM password=MTIzNDU2 application_name=node101'

recovery_target_timeline='latest'

primary_slot_name ='slot_node101'

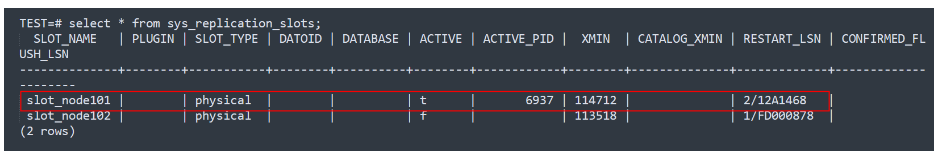

4、查看备库复制槽信息

TEST=# select * from sys_replication_slots;

SLOT_NAME | PLUGIN | SLOT_TYPE | DATOID | DATABASE | ACTIVE | ACTIVE_PID | XMIN | CATALOG_XMIN | RESTART_LSN | CONFIRMED_FL

USH_LSN

--------------+--------+-----------+--------+----------+--------+------------+--------+--------------+-------------+-------------

--------

slot_node101 | | physical | | | t | 6937 | 114712 | | 2/12A1468 |

slot_node102 | | physical | | | f | | 113518 | | 1/FD000878 |

(2 rows)

###由于备库recovery.conf配置问题,导致流复制备库应用了错误的复制槽。

四、问题解决

1、修改备库recovery.conf

[kingbase@node102 data]$ cat recovery.conf

standby_mode='on'

primary_conninfo='port=54321 host=192.168.1.101 user=SYSTEM password=MTIzNDU2 application_name=node102

primary_slot_name ='slot_node102'2、 备库etc/recovery.done

[kingbase@node102 data]$ cat etc/recovery.done

standby_mode='on'

primary_conninfo='port=54321 host=192.168.1.101 user=SYSTEM password=MTIzNDU2 application_name=node102

primary_slot_name ='slot_node102'

3、关闭备库数据库服务

[kingbase@node102 bin]$ ./sys_ctl stop -D ../data

waiting for server to shut down.... done

server stopped4、删除复制槽

TEST=# select sys_drop_replication_slot('slot_node101');

SYS_DROP_REPLICATION_SLOT

---------------------------

(1 row)5、重启集群[kingbase@node101 bin]$ ./kingbase_monitor.sh restart

6、查看节点状态

TEST=# show pool_nodes;

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+-------+--------+-----------+---------+------------+-------------------+-------------------

0 | 192.168.1.101 | 54321 | up | 0.500000 | primary | 0 | true | 0

1 | 192.168.1.102 | 54321 | up | 0.500000 | standby | 0 | false | 0

(2 rows)

[kingbase@node101 bin]$ ./pcp_node_info -U kingbase 1

Password:

192.168.1.102 54321 2 0.500000 up

[kingbase@node101 bin]$ ./pcp_node_info -U kingbase 0

Password:

192.168.1.101 54321 2 0.500000 up

###如上所示,备库节点已经正常。7、查看备库recovery.log

.......

2022-09-21 16:37:02 check if the network is ok

ping trust ip 192.168.1.1 success ping times :[3], success times:[2]

determine if i am master or standby

node_id | hostname | port | status | lb_weight | role | select_cnt | load_balance_node | replication_delay

---------+---------------+-------+--------+-----------+---------+------------+-------------------+-------------------

0 | 192.168.1.101 | 54321 | up | 0.500000 | primary | 0 | false | 0

1 | 192.168.1.102 | 54321 | up | 0.500000 | standby | 0 | true | 0

(2 rows)

i am standby in cluster,determine if recovery is needed

2022-09-21 16:37:04 now will del vip [192.168.1.200/24]

but no 192.168.1.200/24 on my DEV, nothing to do with del

cluster is sync cluster.

now,there is a synchronous standby.

2022-09-21 16:37:07 ALL NODES ARE UP STATUS!

2022-09-21 16:37:07 ALL NODES ARE UP STATUS!

###如上所示,recovery.log中备库状态信息已经正常。五、总结

1、 对于集群出现的问题,要从多个方面进行分析、判断,找到问题发生的根本原因。

2、对于备库的clone,尽量不要使用静态copy方式,此种方式备库需修改的文件较多,难免有遗漏,出现集群故障问题。

3、建议使用sys_basebackup执行备库clone,clone后注意检查备库集群配置文件。

4723

4723

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?