1. Extract the archive

tar -zxvf apache-tez-0.9.2-bin.tar.gz
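The later steps use /opt/lagou/servers/tez as the Tez home, but the archive unpacks into a versioned directory. The sketch below extracts the archive and renames the directory. It assumes the archive's top-level directory is named after the archive (apache-tez-0.9.2-bin) and that /opt/lagou/servers is the install root. install_tez is a hypothetical helper, not part of Tez.

```shell
# Hypothetical helper: unpack the Tez binary release and rename it to "tez".
# Assumes the archive's top-level directory matches the archive name minus
# ".tar.gz" (e.g. apache-tez-0.9.2-bin) and that "$parent/tez" does not exist yet.
install_tez() {
  local tgz="$1" parent="$2"   # archive path, install root
  tar -zxf "$tgz" -C "$parent" || return 1
  mv "$parent/$(basename "$tgz" .tar.gz)" "$parent/tez"
}

# Usage:
# install_tez apache-tez-0.9.2-bin.tar.gz /opt/lagou/servers
```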

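2. Upload the Tez tarball to HDFS

The tarball is in the Tez directory at /opt/lagou/servers/tez/share/tez.tar.gz. Run the following commands from that share directory: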

hdfs dfs -mkdir /user/tez

hdfs dfs -put tez.tar.gz /user/tez/
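The two commands above fail if /user/tez already exists or if an older tez.tar.gz is already there. The sketch below uses standard `hdfs dfs` options to make the upload repeatable and to confirm that the file landed. upload_tez_tarball is a hypothetical helper name.

```shell
# Hypothetical helper: upload the Tez tarball and confirm it is on HDFS.
#   -mkdir -p : no error if the directory already exists
#   -put -f   : overwrite an older copy instead of failing
#   -test -e  : exit status 0 only if the file now exists
upload_tez_tarball() {
  local local_tgz="$1" hdfs_dir="$2"
  hdfs dfs -mkdir -p "$hdfs_dir" || return 1
  hdfs dfs -put -f "$local_tgz" "$hdfs_dir/" || return 1
  hdfs dfs -test -e "$hdfs_dir/$(basename "$local_tgz")"
}

# Usage:
# upload_tez_tarball /opt/lagou/servers/tez/share/tez.tar.gz /user/tez
```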

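3. Configure Hadoop so that other components can find this tarball

Create a new configuration file, tez-site.xml, in the Hadoop configuration directory

/opt/lagou/servers/hadoop-2.10.1/etc/hadoop

with the following content: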

<?xml version="1.0" encoding="UTF-8"?>

<configuration>

<!-- Location of the Tez tarball on HDFS, so that Hadoop can distribute it automatically through the distributed cache -->

<property>

<name>tez.lib.uris</name>

<value>hdfs://Linux121:9000/user/tez/tez.tar.gz</value>

</property>

</configuration>
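The host and port in tez.lib.uris (hdfs://Linux121:9000 here) should normally point at the same NameNode as the cluster's fs.defaultFS. The sketch below compares the two with `hdfs getconf`. It assumes the hdfs command is on PATH, and check_tez_lib_uri is a hypothetical name.

```shell
# Hypothetical check: does tez.lib.uris use the same NameNode as fs.defaultFS?
check_tez_lib_uri() {
  local tez_uri="$1" default_fs
  default_fs="$(hdfs getconf -confKey fs.defaultFS)" || return 1
  case "$tez_uri" in
    "$default_fs"/*) echo "OK: $tez_uri is on $default_fs" ;;
    *) echo "MISMATCH: fs.defaultFS is $default_fs, tez.lib.uris is $tez_uri" >&2
       return 1 ;;
  esac
}

# Usage:
# check_tez_lib_uri hdfs://Linux121:9000/user/tez/tez.tar.gz
```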

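4. Configure environment variables (only the nodes that run Hive need these settings; the other nodes can do without them, although setting them on every node also works)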

#Tez

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export TEZ_CONF_DIR=$HADOOP_CONF_DIR

export TEZ_JARS=/opt/lagou/servers/tez/*:/opt/lagou/servers/tez/lib/*

export HADOOP_CLASSPATH=$TEZ_CONF_DIR:$TEZ_JARS:$HADOOP_CLASSPATH
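The original does not say which file these exports go into. Assuming they were added to a profile such as /etc/profile and then loaded, you can confirm that Hadoop actually picks up the Tez jars by looking for the Tez directory in the output of `hadoop classpath`. This is a minimal sketch, and the helper name is hypothetical.

```shell
# Hypothetical check: is the Tez install directory on Hadoop's classpath?
# `hadoop classpath` prints the classpath the hadoop launcher builds, which
# includes HADOOP_CLASSPATH; entries are separated by ':'.
classpath_has_tez() {
  local tez_home="${1:-/opt/lagou/servers/tez}"
  hadoop classpath | tr ':' '\n' | grep -q "^$tez_home/"
}

# Usage, after loading the new variables:
# source /etc/profile && classpath_has_tez && echo "Tez jars found"
```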

Check Hive's current execution engine:

hive (default)> set hive.execution.engine;

hive.execution.engine=mr

Switch the execution engine to Tez:

hive (default)> set hive.execution.engine=tez;

hive (default)> set hive.execution.engine;

hive.execution.engine=tez
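The `set` command only lasts for the current CLI session. For a one-off scripted run, the Hive CLI also accepts the property on the command line through `--hiveconf`. The sketch below assumes the hive command is on PATH, and run_on_tez is a hypothetical wrapper name.

```shell
# Hypothetical wrapper: run one HiveQL statement with Tez as the engine.
# --hiveconf sets the property for this invocation only, like `set` does
# for an interactive session; hive-site.xml is left untouched.
run_on_tez() {
  hive --hiveconf hive.execution.engine=tez -e "$1"
}

# Usage:
# run_on_tez 'select app_v, count(*) from dws.dws_member_retention_day group by app_v;'
```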

However, running a query on Tez failed with the following error:

hive (dws)> select app_v,count(*) from dws_member_retention_day group by app_v;

Query ID = root_20220102110741_de1b700e-6f2f-493f-a495-f2d5a3676063

Total jobs = 1

Launching Job 1 out of 1

FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.tez.TezTask

The Hive log shows:

[2022-01-02 11:09:15.587]Container exited with a non-zero exit code 143.

For more detailed output, check the application tracking page: http://Linux123:8088/cluster/app/application_1641092738683_0001 Then click on links to logs of each attempt.

. Failing the application.

at org.apache.tez.client.TezClient.waitTillReady(TezClient.java:1013) ~[tez-api-0.9.2.jar:0.9.2]

at org.apache.tez.client.TezClient.waitTillReady(TezClient.java:982) ~[tez-api-0.9.2.jar:0.9.2]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.startSessionAndContainers(TezSessionState.java:396) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.openInternal(TezSessionState.java:323) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionPoolManager$TezSessionPoolSession.openInternal(TezSessionPoolManager.java:703) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.exec.tez.TezSessionState.open(TezSessionState.java:196) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.exec.tez.TezTask.updateSession(TezTask.java:303) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.exec.tez.TezTask.execute(TezTask.java:168) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.exec.Task.executeTask(Task.java:199) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.exec.TaskRunner.runSequential(TaskRunner.java:100) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.Driver.launchTask(Driver.java:2183) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.Driver.execute(Driver.java:1839) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.Driver.runInternal(Driver.java:1526) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1237) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:1227) ~[hive-exec-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.cli.CliDriver.processLocalCmd(CliDriver.java:233) ~[hive-cli-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.cli.CliDriver.processCmd(CliDriver.java:184) ~[hive-cli-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:403) ~[hive-cli-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.cli.CliDriver.executeDriver(CliDriver.java:821) ~[hive-cli-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:759) ~[hive-cli-2.3.7.jar:2.3.7]

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:686) ~[hive-cli-2.3.7.jar:2.3.7]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[?:1.8.0_301]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[?:1.8.0_301]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[?:1.8.0_301]

at java.lang.reflect.Method.invoke(Method.java:498) ~[?:1.8.0_301]

at org.apache.hadoop.util.RunJar.run(RunJar.java:244) ~[hadoop-common-2.10.1.jar:?]

at org.apache.hadoop.util.RunJar.main(RunJar.java:158) ~[hadoop-common-2.10.1.jar:?]

2022-01-02T11:09:17,144 ERROR [0cbe2c1f-642f-4d8f-9305-e072f7ca3639 main] ql.Driver: FAILED: Execution Error, return code 1 from org.apache.hadoop.hive.ql.exec.tez.TezTask

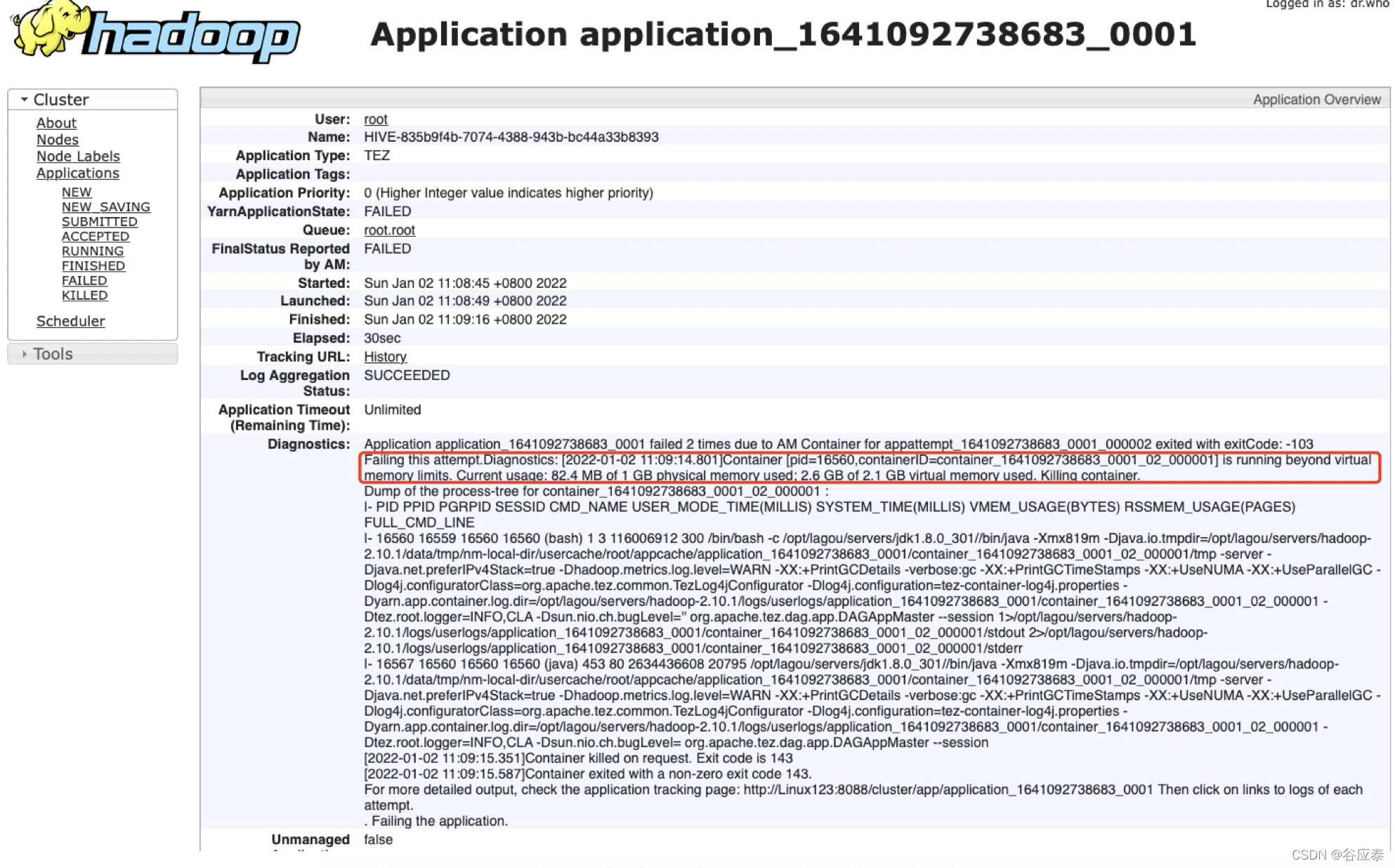

Following the hint in the error message, I opened the tracking link for details:

http://Linux123:8088/cluster/app/application_1641092738683_0001

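The root cause was that the container exceeded YARN's memory limit during the computation.

The error comes from the way YARN enforces virtual memory. In this case the program requested 1 GB of physical memory. YARN multiplies that value by a ratio (2.1 by default) to get the container's virtual memory limit, which here is 2.1 GB. When the container's actual virtual memory use (2.6 GB here) exceeds that limit, the NodeManager kills the container. This matches the exit code 143 in the log (128 + 15, i.e. terminated by SIGTERM). Raising the ratio solves the problem. The parameter is yarn.nodemanager.vmem-pmem-ratio in yarn-site.xml.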
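The check described above comes down to one multiplication and one comparison. The sketch below uses the numbers from this case (1 GB requested, 2.6 GB used) to show why the default ratio fails and a ratio of 3 passes. It only mirrors the arithmetic, not YARN's actual monitoring code, and the helper names are hypothetical.

```shell
# Virtual-memory ceiling for a container:
#   limit = requested physical memory * yarn.nodemanager.vmem-pmem-ratio
vmem_limit_gb() {
  awk -v pmem="$1" -v ratio="$2" 'BEGIN { printf "%.1f\n", pmem * ratio }'
}

# Exit status 0 when virtual memory use exceeds the ceiling,
# i.e. when the NodeManager would kill the container.
vmem_exceeded() {
  awk -v used="$1" -v pmem="$2" -v ratio="$3" 'BEGIN { exit !(used > pmem * ratio) }'
}
```

With the default ratio, `vmem_limit_gb 1 2.1` prints 2.1 and `vmem_exceeded 2.6 1 2.1` succeeds, so the container would be killed. With the ratio raised to 3, the limit becomes 3.0 GB and `vmem_exceeded 2.6 1 3` fails, so the container survives.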

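Solution:

Adjust the following values in Hadoop's yarn-site.xml (the first property is commented out and therefore has no effect; the change that matters is the ratio):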

<!--

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>2048</value>

<description>default value is 1024</description>

</property>

-->

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>3</value>

<description>default value is 2.1</description>

</property>

Make this change on all nodes, then restart YARN.
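One way to do this is to work from the ResourceManager host (Linux123, judging by the tracking URL above): copy the edited file to the other nodes, then restart YARN with the stock sbin scripts. This minimal sketch assumes passwordless SSH, the same $HADOOP_HOME path on every node, and a hypothetical helper name. Pass only the other hosts as arguments, because the local copy is already edited.

```shell
# Hypothetical helper: copy yarn-site.xml to the other nodes, then restart YARN.
# Run it on the ResourceManager host; pass the *other* hostnames as arguments.
# Assumes passwordless SSH and the same $HADOOP_HOME path on every node.
sync_and_restart_yarn() {
  local conf="$HADOOP_HOME/etc/hadoop/yarn-site.xml" host
  for host in "$@"; do
    scp "$conf" "$host:$conf" || return 1
  done
  # stop-yarn.sh / start-yarn.sh act on the local ResourceManager and on the
  # NodeManagers listed in etc/hadoop/slaves (Hadoop 2.x)
  "$HADOOP_HOME/sbin/stop-yarn.sh" && "$HADOOP_HOME/sbin/start-yarn.sh"
}

# Usage (on Linux123):
# sync_and_restart_yarn Linux121 Linux122
```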

Then run the query in Hive again:


hive (dws)> set hive.execution.engine;

hive.execution.engine=tez

hive (dws)> select app_v,count(*) from dws_member_retention_day group by app_v;

Query ID = root_20220102120136_1f4506fe-2ca2-4df3-b937-a99a0d4326a6

Total jobs = 1

Launching Job 1 out of 1

Status: Running (Executing on YARN cluster with App id application_1641096100742_0001)

----------------------------------------------------------------------------------------------

        VERTICES      MODE        STATUS  TOTAL  COMPLETED  RUNNING  PENDING  FAILED  KILLED

----------------------------------------------------------------------------------------------

Map 1 .......... container     SUCCEEDED      1          1        0        0       0       0

Reducer 2 ...... container     SUCCEEDED      1          1        0        0       0       0

----------------------------------------------------------------------------------------------

VERTICES: 02/02  [==========================>>] 100%  ELAPSED TIME: 15.34 s

----------------------------------------------------------------------------------------------

OK

app_v _c1

Time taken: 86.987 seconds

The configuration works.

To make Tez the default execution engine permanently, add the following to Hive's configuration.

File: /opt/lagou/servers/hive-2.3.7/conf/hive-site.xml

<property>

<name>hive.execution.engine</name>

<value>tez</value>

<description>

Expects one of [mr, tez, spark].

Chooses execution engine. Options are: mr (Map reduce, default), tez, spark. While MR

remains the default engine for historical reasons, it is itself a historical engine

and is deprecated in Hive 2 line. It may be removed without further warning.

</description>

</property>

After restarting the Hive CLI, Tez is now the default execution engine:

[root@Linux122 ~]# hive

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/lagou/servers/tez/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/lagou/servers/hadoop-2.10.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

Logging initialized using configuration in jar:file:/opt/lagou/servers/hive-2.3.7/lib/hive-common-2.3.7.jar!/hive-log4j2.properties Async: true

hive (default)> set hive.execution.engine;

hive.execution.engine=tez
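To check the default from a script instead of an interactive session, you can run the same `set` statement non-interactively and parse the result. This is a minimal sketch with a hypothetical helper name. It relies on `-S` (silent mode) and on the fact that SLF4J binding warnings like the ones above go to stderr.

```shell
# Hypothetical check: print the engine a fresh Hive session starts with.
# -S (silent mode) trims Hive's informational output; SLF4J binding warnings
# are written to stderr, which is discarded here.
current_engine() {
  hive -S -e 'set hive.execution.engine;' 2>/dev/null \
    | grep '^hive\.execution\.engine=' \
    | cut -d= -f2
}

# Usage:
# [ "$(current_engine)" = "tez" ] && echo "Tez is the default engine"
```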
