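Part 1: Deploying Django + Uwsgi with Docker (single container)

Deploying a simple Django project

First, deploy a simple Django project on the server. We do not use uwsgi or nginx yet, and the database is the default sqlite3: we simply put Django into a basic container!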

- The overall project structure is shown below. For now, the project sits on the host machine (the server).

mysite1

├── db.sqlite3

├── Dockerfile # Dockerfile used to build the Docker image

├── manage.py

├── mysite1

│ ├── asgi.py

│ ├── __init__.py

│ ├── settings.py

│ ├── urls.py

│ └── wsgi.py

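├── pip.conf # Optional. Points the PyPI index at a mirror in China to speed up pip installs

└── requirements.txt # The project only depends on Django, so it contains a single line: django==3.0.5

Note: Django's default is ALLOWED_HOSTS = [] (empty). Before deploying for real, edit settings.py and set it to the server's actual public IP address. Otherwise requests in the steps below will be rejected (Django answers with 400 Bad Request). This has nothing to do with Docker: you must set ALLOWED_HOSTS even if you deploy without Docker.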
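If you would rather not hard-code the IP in settings.py, you can read the list from an environment variable instead. This is a minimal sketch. The variable name DJANGO_ALLOWED_HOSTS is a hypothetical choice: you would set it yourself, for example with `docker run -e DJANGO_ALLOWED_HOSTS=...`.

```python
import os


def allowed_hosts_from_env(environ=os.environ, var_name="DJANGO_ALLOWED_HOSTS",
                           default="127.0.0.1,localhost"):
    """Turn a comma-separated environment variable into a list for ALLOWED_HOSTS."""
    raw = environ.get(var_name, default)
    # Strip spaces around each entry and drop empty entries (e.g. from a trailing comma)
    return [host.strip() for host in raw.split(",") if host.strip()]


# In settings.py. On the server, set e.g. DJANGO_ALLOWED_HOSTS="203.0.113.10"
# (203.0.113.10 is a documentation placeholder: use your server's public IP).
ALLOWED_HOSTS = allowed_hosts_from_env()
```

Passing `environ` explicitly is only there to make the function easy to try out. In settings.py, the default `os.environ` is what you want.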

- Step 1: Write the Dockerfile as follows:

# Set up a Python 3.7 environment

FROM python:3.7

# Image author (MAINTAINER is deprecated; LABEL maintainer is the current form)

LABEL maintainer="WW"

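# Set a Python environment variable: disable output buffering so logs appear immediately

ENV PYTHONUNBUFFERED 1

# Use a PyPI mirror in China

COPY pip.conf /root/.pip/pip.conf

# Create the mysite1 folder under /var/www/html/ inside the container

RUN mkdir -p /var/www/html/mysite1

# Set the working directory inside the container

WORKDIR /var/www/html/mysite1

# Add the files in the current directory to the container's working directory (. is the current directory on the host)

ADD . /var/www/html/mysite1

# Install dependencies with pip

RUN pip install -r requirements.txt

The pip index is set to the Aliyun mirror. pip.conf contains the following: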

[global]

index-url = https://mirrors.aliyun.com/pypi/simple/

[install]

trusted-host=mirrors.aliyun.com

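- Step 2: Build an image from the Dockerfile in the current directory, tagged django_docker_img:v1.

Go to the directory containing the Dockerfile and run:

# Build an image named django_docker_img with tag v1 from the Dockerfile; . is the current directory

sudo docker build -t django_docker_img:v1 .

# Check that the image was created; add -a to also list intermediate images

sudo docker images

You should now see a Docker image named django_docker_img with tag v1.

- Step 3: Create and run a container named mysite1 from the image, mapping port 80 on the host to port 8000 in the container.

# If the build succeeded, create and start the mysite1 container from the image. Host 80 : container 8000; -d runs it in the background.

sudo docker run -it -d --name mysite1 -p 80:8000 django_docker_img:v1

# Check container status; add -a to list all containers, including stopped ones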

sudo docker ps

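# Enter the container. If you copy this command, make sure it has no trailing space.

sudo docker exec -it mysite1 /bin/bash

The mysite1 container should now be running. Use sudo docker exec -it mysite1 /bin/bash to get a shell inside it.

- Step 4: Inside the container, run the following commands:

python3 manage.py makemigrations

python3 manage.py migrate

python3 manage.py runserver 0.0.0.0:8000

Now open Chrome at http://your_server_ip and you should see your Django site online. Congratulations!

What actually happens between the client and the inside of the Docker container

As mentioned earlier, if you do not specify a network, Docker assigns an IP address to every container it creates and runs. You can look it up with the following command:

sudo docker inspect mysite1 | grep "IPAddress"

Users access the host server's address, not the container's IP. So how do they reach the content inside the container? The answer is port mapping. We mapped port 80 on the host (the HTTP default) to port 8000 in the container. As a result, requests to port 80 of the server's IP are forwarded automatically to port 8000 in the container.

Note: the container's IP address is important, and you will use it often. Containers on the same host can talk to each other directly through their IP addresses. However, on the default bridge network the IP is assigned when the container starts and is not tied to the container name. It can therefore change after the container is restarted or recreated. Check it again with docker inspect if a connection suddenly stops working.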
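grep works, but it also matches empty "IPAddress" fields and entries for other networks. A more precise option is to parse the JSON that docker inspect prints: a JSON array with one object per container. The sketch below returns the IP for each network. For a one-line answer, the docker CLI also accepts a Go template: `docker inspect -f '{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' mysite1`.

```python
import json
import subprocess


def inspect_container(name: str) -> str:
    """Run `docker inspect NAME` and return its raw JSON output (needs the docker CLI)."""
    result = subprocess.run(["docker", "inspect", name],
                            capture_output=True, text=True, check=True)
    return result.stdout


def container_ips(inspect_output: str) -> dict:
    """Return {network_name: ip} for the first container in `docker inspect` output."""
    data = json.loads(inspect_output)  # docker inspect always prints a JSON array
    networks = data[0]["NetworkSettings"]["Networks"]
    return {name: cfg["IPAddress"] for name, cfg in networks.items()}


# Usage on the server: container_ips(inspect_container("mysite1"))
```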

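Preparing to add uWSGI to the Django container

In the previous example we started a test server with Django's built-in runserver command. In a real production environment, however, you should serve Django with uwsgi, which handles high concurrency. This section's title talks about adding uwsgi to the Django container, but that phrasing is actually wrong. Our Django container was created from the django_docker_img:v1 image, which contains nothing related to uwsgi. Merely copying a uwsgi.ini file into that container will not work.

So we need to write a new Dockerfile and build a new image and container. For the demo we created a project named mysite2 with the following structure: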

mysite2

├── db.sqlite3

├── Dockerfile # File used to build the Docker image

├── manage.py

├── mysite2

│ ├── asgi.py

│ ├── __init__.py

│ ├── settings.py

│ ├── urls.py

│ └── wsgi.py

├── pip.conf

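├── requirements.txt # Two dependencies: django 3.0.5 and uwsgi 2.0.18

├── start.sh # Commands to run after entering the container (used later)

└── uwsgi.ini # uwsgi configuration file

The new Dockerfile is as follows:

# Set up a Python 3.7 environment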

FROM python:3.7

# Image author (MAINTAINER is deprecated; LABEL maintainer is the current form)

LABEL maintainer="WW"

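# Set a Python environment variable: disable output buffering so logs appear immediately

ENV PYTHONUNBUFFERED 1

# Use a PyPI mirror in China

COPY pip.conf /root/.pip/pip.conf

# Create the mysite2 folder under /var/www/html/ inside the container

RUN mkdir -p /var/www/html/mysite2

# Set the working directory inside the container

WORKDIR /var/www/html/mysite2

# Copy the files in the current directory into the working directory (. is the current directory)

ADD . /var/www/html/mysite2

# Install dependencies with pip

RUN pip install -r requirements.txt

# start.sh written on Windows has an extra \r at the end of every line; remove it.

RUN sed -i 's/\r//' ./start.sh

# Make start.sh executable

RUN chmod +x ./start.sh

The content of start.sh is shown below. The most important line is the last one, which starts the Django service with the uwsgi.ini configuration file.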

#!/bin/bash

# From the first line to the last:

# 1. Generate database migration files

# 2. Apply the migration files to the database

# 3. Start the django service with uwsgi instead of python manage.py runserver

python manage.py makemigrations&&

python manage.py migrate&&

uwsgi --ini /var/www/html/mysite2/uwsgi.ini

# python manage.py runserver 0.0.0.0:8000
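The `&&` between the commands in start.sh matters: each command runs only if the previous one exited with status 0. A failed makemigrations therefore keeps uwsgi from starting against an unmigrated database. The article does not use Python for this. The sketch below only spells out the same control flow:

```python
import subprocess
import sys


def run_chain(commands):
    """Run commands one after another and stop at the first non-zero exit status,
    which is what `cmd1 && cmd2 && cmd3` does in start.sh.

    Returns the list of commands that were actually started.
    """
    started = []
    for cmd in commands:
        started.append(cmd)
        if subprocess.run(cmd).returncode != 0:
            break  # later commands are skipped, just like after a failed `&&`
    return started


# Hypothetical usage inside the mysite2 container, mirroring start.sh:
# run_chain([
#     [sys.executable, "manage.py", "makemigrations"],
#     [sys.executable, "manage.py", "migrate"],
#     ["uwsgi", "--ini", "/var/www/html/mysite2/uwsgi.ini"],
# ])
```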

The uwsgi.ini configuration file is as follows.

[uwsgi]

project=mysite2

uid=www-data

gid=www-data

base=/var/www/html

chdir=%(base)/%(project)

module=%(project).wsgi:application

master=True

processes=2

# uwsgi acts directly as the web server here, so it speaks http. Behind nginx, use socket instead.

http=0.0.0.0:8000

buffer-size=65536

pidfile=/tmp/%(project)-master.pid

vacuum=True

max-requests=5000

daemonize=/tmp/%(project)-uwsgi.log

# Request timeout in seconds: a request that runs longer is dropped and its worker recycled

harakiri=60

# Write a log entry when a request is killed by harakiri

harakiri-verbose=true
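The `%(base)` and `%(project)` references in this file are uWSGI placeholders: they are replaced with the values of other options, so `chdir` becomes /var/www/html/mysite2. The following is a simplified Python sketch of that substitution. It only handles `%(name)` references between options in this file, and it ignores uWSGI's built-in magic variables such as `%d` as well as option ordering.

```python
import re

# Matches uWSGI-style placeholders such as %(base) or %(project)
PLACEHOLDER = re.compile(r"%\(([\w-]+)\)")


def expand_placeholders(options: dict) -> dict:
    """Resolve %(name) references between uwsgi.ini options (simplified sketch)."""
    resolved = {}

    def resolve(key, seen=()):
        if key in resolved:
            return resolved[key]
        if key in seen:
            raise ValueError(f"circular reference involving {key!r}")
        # Replace every %(name) in this option's value with the resolved value of `name`
        value = PLACEHOLDER.sub(lambda m: resolve(m.group(1), seen + (key,)), options[key])
        resolved[key] = value
        return value

    for key in options:
        resolve(key)
    return resolved


uwsgi_ini = {
    "project": "mysite2",
    "base": "/var/www/html",
    "chdir": "%(base)/%(project)",
    "module": "%(project).wsgi:application",
    "pidfile": "/tmp/%(project)-master.pid",
}
print(expand_placeholders(uwsgi_ini)["chdir"])  # /var/www/html/mysite2
```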

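Single-container deployment of Django + UWSGI

- Step 1: Build an image named django_uwsgi_img:v1

sudo docker build -t django_uwsgi_img:v1 .

- Step 2: Start and run the mysite2 container

# Start and run the mysite2 container

sudo docker run -it -d --name mysite2 -p 80:8000 django_uwsgi_img:v1

- Step 3: Enter the mysite2 container and run the start.sh script

# Enter the container. If you copy this command, make sure it has no trailing space.

sudo docker exec -it mysite2 /bin/bash

# Run the script

sh start.sh

The two commands above can also be combined into one:

sudo docker exec -it mysite2 /bin/bash start.sh

Now open a browser at http://your_server_ip and you should see your Django site online. Congratulations! This time the service is started by uwsgi, since you never ran python manage.py runserver.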

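Troubleshooting: if the site does not come up at this point, there are two main possible causes:

- An error in the uwsgi configuration file. In particular, the http address must be 0.0.0.0:8000, not the server's ip:8000, because uwsgi runs inside the container rather than directly on the server.

- The browser automatically redirects http (port 80) to https (port 443). Only host port 80 is mapped to the container's port 8000, so requests arriving on host port 443 never reach the container. The fix is to clear the browser's cache and settings or to use another browser.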
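One way to tell these two causes apart from the command line is to check whether the host accepts TCP connections on ports 80 and 443 at all. This is a minimal standard-library sketch; your_server_ip is a placeholder:

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False


# Hypothetical usage from your own machine:
# port_is_open("your_server_ip", 80)   # expected True with -p 80:8000
# port_is_open("your_server_ip", 443)  # expected False: nothing is mapped to 443
```

If port 80 is open but the page still fails, look at the uwsgi configuration. If only 443 is closed, an automatic https redirect in the browser is the likely culprit.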

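Note: although the site is online, some images and page styles may not display correctly. uwsgi is a server for dynamic requests, and static file requests should be handed to a more specialized server such as Nginx. The next part covers deploying Django+Uwsgi+Nginx with two Docker containers.

Part 2: Deploying Django + Uwsgi + nginx with Docker (two containers)

We will build two containers: one holds Django + Uwsgi, the other holds Nginx.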

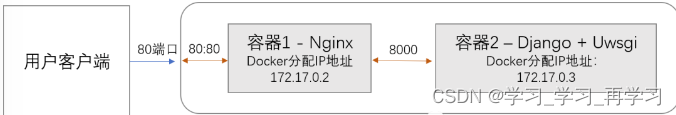

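Diagram: two-container deployment of Django+Uwsgi+Nginx

The diagram shows the overall request flow. A user's client accesses port 80 on the server (the default HTTP port). Because of the 80:80 port mapping between the host and container 1, the request is forwarded to container 1, where Nginx runs. Nginx then decides whether the request is static or dynamic. It handles static file requests itself and forwards dynamic requests to container 2, where Django+Uwsgi runs. Container 2 exposes port 8000.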
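The static-versus-dynamic decision is made by nginx's location blocks. This Python sketch assumes the three prefix locations used in this article's nginx.conf (/static, /media and /). It shows nginx's longest-prefix rule and ignores regex locations, which this config does not use.

```python
# Prefix locations from this article's nginx.conf and who ends up handling them
LOCATIONS = {
    "/static": "nginx (static file)",
    "/media": "nginx (media file)",
    "/": "uwsgi (Django)",
}


def route(uri: str) -> str:
    """Mimic nginx prefix matching: the longest location prefix that the URI starts with wins."""
    matches = [prefix for prefix in LOCATIONS if uri.startswith(prefix)]
    return LOCATIONS[max(matches, key=len)]


print(route("/admin/"))  # uwsgi (Django)
```

Because these are plain prefixes, a URI such as /staticfiles/x also matches /static. Keep this in mind when naming Django URLs.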

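In this example, container 1 is named mysite3-nginx and container 2 is named mysite3. Because both containers run on the same host, Docker assigns them very similar IP addresses, somewhat like a LAN. Use the following command to look up a container's IP address.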

sudo docker inspect container_name | grep "IPAddress"

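Code layout of the two-container Django+Uwsgi+Nginx deployment

The code layout of the whole project is shown below. We created a compose folder dedicated to the Dockerfiles and configuration files used to build the other images. In this example it only contains an nginx folder; in the next part we will add MySQL and Redis as well.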

mysite3

├── compose

│ └── nginx

│ ├── Dockerfile # Dockerfile used to build the nginx image

│ ├── log # Holds the nginx logs

│ ├── nginx.conf # nginx configuration file

│ ├── ssl # Needed if you configure https

├── db.sqlite3

├── Dockerfile # Dockerfile used to build the django+uwsgi image

├── manage.py

├── mysite3

│ ├── asgi.py

│ ├── __init__.py

│ ├── settings.py

│ ├── urls.py

│ └── wsgi.py

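├── pip.conf # Points PyPI at a mirror in China to speed up package installs

├── static # Static files folder: css, js and images

├── media # Media folder for files uploaded by users

├── requirements.txt

├── start.sh # Script to run after the container starts

└── uwsgi.ini # uwsgi configuration file

Notes:

- Django's default is ALLOWED_HOSTS = [] (empty). Before deploying for real, set it in settings.py to the server's actual public IP address. Otherwise the deployment below will fail. This has nothing to do with Docker.

- This example uses nginx to serve static files, so you must set MEDIA_ROOT and STATIC_ROOT in settings.py as shown below. Otherwise static files will not display correctly even if nginx itself is working.

# STATIC ROOT and STATIC URL

STATIC_ROOT = os.path.join(BASE_DIR, 'static')

STATIC_URL = "/static/"

# MEDIA ROOT and MEDIA URL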

MEDIA_ROOT = os.path.join(BASE_DIR, 'media')

MEDIA_URL = "/media/"

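Building the image for container 2 (Django+Uwsgi) and starting the container

The Dockerfile used to build the image for container 2 is as follows:

# Base image: Python 3.7; python:3.7-alpine can be used instead to reduce the image size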

FROM python:3.7

# Image author (MAINTAINER is deprecated; LABEL maintainer is the current form)

LABEL maintainer="XXX"

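# Set a Python environment variable: disable output buffering so logs appear immediately

ENV PYTHONUNBUFFERED 1

# Use a PyPI mirror in China

COPY pip.conf /root/.pip/pip.conf

# Create the mysite3 folder under /var/www/html/ inside the container

RUN mkdir -p /var/www/html/mysite3

# Set the working directory inside the container

WORKDIR /var/www/html/mysite3

# Copy the files in the current directory into the working directory (. is the current directory)

ADD . /var/www/html/mysite3

# Install dependencies with pip

RUN pip install -r requirements.txt

# start.sh written on Windows has an extra \r at the end of every line; remove it.

RUN sed -i 's/\r//' ./start.sh

# Make start.sh executable

RUN chmod +x ./start.sh

The content of start.sh is shown below. The most important line is the last one, which starts the Django service with the uwsgi.ini configuration file.

#!/bin/bash

# From the first line to the last:

# 1. Collect static files into STATIC_ROOT

# 2. Generate database migration files

# 3. Apply the migration files to the database

# 4. Start the django service with uwsgi instead of python manage.py runserver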

python manage.py collectstatic --noinput&&

python manage.py makemigrations&&

python manage.py migrate&&

uwsgi --ini /var/www/html/mysite3/uwsgi.ini

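The Dockerfile and pip.conf used here are essentially the same as in the single-container Django+Uwsgi deployment of Part 1; only start.sh and uwsgi.ini differ. start.sh adds the command that collects static files. In the previous uwsgi.ini, uwsgi talked to clients over http. In this example uwsgi does not talk to clients directly but to nginx, so we use a socket instead: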

[uwsgi]

project=mysite3

uid=www-data

gid=www-data

base=/var/www/html

chdir=%(base)/%(project)

module=%(project).wsgi:application

master=True

processes=2

socket=0.0.0.0:8000

chown-socket=%(uid):www-data

chmod-socket=660

buffer-size=65536

pidfile=/tmp/%(project)-master.pid

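# Run as a daemon and write the log to the /tmp folder

daemonize=/tmp/%(project)-uwsgi.log

vacuum=True

max-requests=5000

# Request timeout in seconds: a request that runs longer is dropped and its worker recycled

harakiri=60

post-buffering=8678

# Write a log entry when a request is killed by harakiri

harakiri-verbose=true

# Enable memory usage reports

memory-report=true

# Maximum time (seconds) to wait for workers to finish during a graceful reload

reload-mercy=10

# Recycle a worker once its virtual memory (address space) exceeds N MB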

reload-on-as= 1024

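Now we can build the image for container 2 and start it. The container is named mysite3.

# From the mysite3 directory, build an image named django_mysite3 with tag v1 using the Dockerfile there; . is the current directory

sudo docker build -t django_mysite3:v1 .

# Start and run container 2 (named mysite3); -d runs it in the background and -v mounts a directory.

sudo docker run -it --name mysite3 -p 8000:8000 \
-v /home/enka/mysite3:/var/www/html/mysite3 \
-d django_mysite3:v1

# Check that the container is running

sudo docker ps

# Look up the IP address of container 2 (named mysite3)

sudo docker inspect mysite3 | grep "IPAddress"

Notes:

- Always plan directory mounts when starting a container, to avoid losing data. The project's path inside the container is /var/www/html/mysite3, and user-generated data is also stored inside the container. Once the container is deleted, its data is gone, and re-creating the container will not bring it back.

- Use the -v option to mount a directory. The part before the colon is the host directory and the part after it is the path inside the container; both must be absolute paths. If you do not specify a host directory, Docker creates a directory with a random name under /var/lib/docker/volumes/.

- In this example we used -v to mount the host directory /home/enka/mysite3 onto /var/www/html/mysite3 in the container, so the two stay in sync. Deleting the container is now safe, because the data also lives on the host.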
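The -v rules above can be summarized in a small classifier. This is a simplified sketch, not Docker's actual parser. It handles Linux paths only, treats a missing mode as rw, and ignores the extra options Docker accepts.

```python
def parse_volume_spec(spec: str) -> dict:
    """Classify a `docker run -v` argument as a bind mount, named volume or anonymous volume."""
    parts = spec.split(":")
    if len(parts) == 1:
        # Only a container path: Docker creates an anonymous volume under /var/lib/docker/volumes/
        source, target, mode = None, parts[0], "rw"
    elif len(parts) in (2, 3):
        source, target = parts[0], parts[1]
        mode = parts[2] if len(parts) == 3 else "rw"
    else:
        raise ValueError(f"unexpected volume spec: {spec!r}")
    if not target.startswith("/"):
        raise ValueError("the container path must be absolute")
    if source is None:
        kind = "anonymous volume"
    elif source.startswith("/"):
        kind = "bind mount"  # an absolute host directory, as used in this article
    else:
        kind = "named volume"
    return {"kind": kind, "source": source, "target": target, "mode": mode}


print(parse_volume_spec("/home/enka/mysite3:/var/www/html/mysite3")["kind"])  # bind mount
```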

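Building the image for container 1 (Nginx) and starting the container

The Dockerfile for the Nginx image is as follows:

# nginx image

FROM nginx:latest

# Remove the default config file and create folders for static assets and ssl certificates

RUN rm /etc/nginx/conf.d/default.conf \
&& mkdir -p /usr/share/nginx/html/static \
&& mkdir -p /usr/share/nginx/html/media \
&& mkdir -p /usr/share/nginx/ssl

# Add the configuration file

ADD ./nginx.conf /etc/nginx/conf.d/

# Keep nginx in the foreground (daemon off) so the container keeps running

CMD ["nginx", "-g", "daemon off;"]

Nginx's configuration file nginx.conf is shown below. Notice how Nginx forwards dynamic requests to port 8000 of container 2, which has the IP 172.17.0.3 in this example. Replace it with the IP that docker inspect reports for your mysite3 container. Because uwsgi talks to nginx over a socket here, nginx must forward requests with uwsgi_pass rather than proxy_pass.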

# nginx configuration file

upstream django {

ip_hash;

server 172.17.0.3:8000; # IP and exposed port of the Django+uwsgi container, not the host's public IP

}

server {

listen 80; # Listen on port 80

server_name localhost; # Can be the nginx container's IP or 127.0.0.1; as the only server block on port 80, nginx also uses it as the default server

location /static {

alias /usr/share/nginx/html/static; # Path to static assets

}

location /media {

alias /usr/share/nginx/html/media; # Media files: path to user uploads

}

location / {

include /etc/nginx/uwsgi_params;

uwsgi_pass django;

uwsgi_read_timeout 600;

uwsgi_connect_timeout 600;

uwsgi_send_timeout 600;

# proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

# proxy_set_header Host $http_host;

# proxy_redirect off;

# proxy_set_header X-Real-IP $remote_addr;

# proxy_pass http://django;

}

}

access_log /var/log/nginx/access.log main;

error_log /var/log/nginx/error.log warn;
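The alias directive determines where nginx looks for files on disk. For a prefix location, alias replaces the matched location prefix with the given directory. Here is a sketch of that mapping, using the paths from the config above:

```python
def alias_path(uri: str, location: str, alias: str) -> str:
    """Map a request URI to a file path the way nginx `alias` does for a prefix location:
    the matched location prefix is replaced by the alias directory."""
    if not uri.startswith(location):
        raise ValueError(f"{uri!r} does not match location {location!r}")
    return alias + uri[len(location):]


print(alias_path("/static/css/site.css", "/static", "/usr/share/nginx/html/static"))
# /usr/share/nginx/html/static/css/site.css
```

For this reason the nginx container must see the collected static files under /usr/share/nginx/html/static: nginx reads them from there directly. Mounting the project's static folder there, and running collectstatic first, is what makes the CSS and JS show up.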

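Now we can build the image for container 1 and start it. The container is named mysite3-nginx.

# In the nginx directory, build an image named mynginx with tag v1 using the Dockerfile there; . is the current directory

sudo docker build -t mynginx:v1 .

# Start and run container 1 (named mysite3-nginx); -d runs it in the background and maps host port 80 to Nginx port 80. Nginx's static and media directories are mounted from the static and media folders of the Django project on the host.

sudo docker run -it -p 80:80 --name mysite3-nginx \
-v /home/enka/mysite3/static:/usr/share/nginx/html/static \
-v /home/enka/mysite3/media:/usr/share/nginx/html/media \
-v /home/enka/mysite3/compose/nginx/log:/var/log/nginx \
-d mynginx:v1

# Check that the containers are running

sudo docker ps

You should now see both containers (mysite3 and mysite3-nginx) running.

Entering the Django+UWSGI container to run Django commands and start the uwsgi server

Both containers are now running, but we have not yet run the Django commands or started the uwsgi server. All that is left is to run the start.sh script we wrote earlier inside container 2.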

sudo docker exec -it mysite3 /bin/bash start.sh

In practice, start.sh does the following:

python manage.py collectstatic --noinput&&

python manage.py makemigrations&&

python manage.py migrate&&

uwsgi --ini /var/www/html/mysite3/uwsgi.ini

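Now open a browser at http://your_server_ip/admin and you should see your Django site online, with static files displayed correctly. Congratulations! This time the site is served by Nginx+Uwsgi, which is a true production deployment of Django.

Common problems and solutions when deploying Django+Uwsgi+Nginx with Docker

The following errors are common when deploying Django+Uwsgi and Nginx in two Docker containers. The suggested solutions are for reference:

- Nginx returns 502 Bad Gateway while static files display fine: this points to a server configuration error. Check that nginx.conf points to the correct IP and port of the uwsgi container, and check the socket port in uwsgi.ini.

- The uwsgi log shows "probably another instance of uWSGI is running on the same address (0.0.0.0:8000). bind(): Address already in use [core/socket.c line 769]": another uWSGI instance is already using the port. Kill all uwsgi processes with sudo pkill -9 -f uwsgi, then start uwsgi again.

- The uwsgi log shows "invalid request block size: 4547 (max 4096)...skip": uWSGI's default buffer size is 4096 bytes, and requests whose variables block (essentially the HTTP headers, e.g. large cookies) exceeds it are rejected. To fix this, add buffer-size=65536 to the uwsgi configuration file.

- The browser keeps redirecting from http (port 80) to https (port 443) and fails: the Nginx container's port 80 is mapped to host port 80, but nothing is mapped to host port 443, so requests to 443 never reach the container. Clear the browser's cache and settings, try another browser, or configure nginx for https.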
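Two of the points above, uwsgi_pass instead of proxy_pass and the "request block size" error, come down to how nginx talks to uWSGI. It sends each request as a binary uwsgi packet, not as HTTP. As I understand the uwsgi protocol documentation, a packet starts with a 4-byte header: modifier1 (1 byte), a 16-bit little-endian datasize, and modifier2 (1 byte). The request variables follow, with each key and value prefixed by a 16-bit length. The sketch below only builds such a block to show how its size grows with the headers. It is not a client to use against a real server.

```python
import struct


def encode_uwsgi_vars(env: dict) -> bytes:
    """Encode request variables as a uwsgi packet (modifier1 = 0 is a WSGI request).

    Layout: header (modifier1: u8, datasize: u16 little-endian, modifier2: u8),
    then each key and value prefixed by its u16 little-endian length.
    """
    body = b""
    for key, value in env.items():
        k, v = key.encode("latin-1"), value.encode("latin-1")
        body += struct.pack("<H", len(k)) + k + struct.pack("<H", len(v)) + v
    if len(body) > 0xFFFF:
        raise ValueError("vars block too large for the 16-bit datasize field")
    return struct.pack("<BHB", 0, len(body), 0) + body


small = encode_uwsgi_vars({"REQUEST_METHOD": "GET", "PATH_INFO": "/"})
big = encode_uwsgi_vars({"HTTP_COOKIE": "x" * 5000})  # a large cookie header
print(len(small), struct.unpack("<H", big[1:3])[0])  # 39 5015
```

In this sketch the datasize of the second packet (5015) exceeds 4096. That matches the "(max 4096)" in the error message, and raising buffer-size is the fix. Because datasize is a 16-bit field, the block can never exceed 65535 bytes.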

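Summary

In this part we deployed Django + Uwsgi + Nginx on a server with two Docker containers. Since there were only two containers, we could create and run them by hand. In a real production environment, however, you often need to define a large number of Docker containers with intricate dependencies between them. Tracking and configuring those relationships by hand is inefficient and error-prone. A tool for defining and orchestrating groups of containers is therefore badly needed, and that tool is Docker Compose.

Part 3: Deploying Django + Uwsgi + Nginx + MySQL + Redis with docker-compose (multiple containers)

This part deploys Django + Uwsgi + Nginx + MySQL + Redis in eight steps with docker-compose, and shares a reusable project layout and configuration files for each service.

What docker-compose is and how to install it

Docker-compose is a Docker tool for defining and running complex applications. With docker-compose you no longer need shell scripts to create and start containers one by one. Instead, you build and manage complex multi-container setups from a docker-compose.yml file.

Downloading and installing docker-compose is simple. Here we only record the installation steps on Ubuntu.

# Step 1: Download docker-compose (using Ubuntu as an example)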

$ sudo curl -L https://github.com/docker/compose/releases/download/1.17.0/docker-compose-`uname -s`-`uname -m` -o /usr/local/bin/docker-compose

# Step 2: Make docker-compose executable

$ sudo chmod +x /usr/local/bin/docker-compose

# Step 3: Check the docker-compose version

$ docker-compose --version

Note: Docker must be installed before docker-compose.
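The backticks in the curl command are shell command substitution. `uname -s` and `uname -m` insert the operating system name and the machine architecture into the file name, for example docker-compose-Linux-x86_64. Here is a small Python sketch of the same URL construction. On Linux, platform.system() and platform.machine() return the same values as `uname -s` and `uname -m`.

```python
import platform
from typing import Optional


def compose_download_url(version: str, system: Optional[str] = None,
                         machine: Optional[str] = None) -> str:
    """Build the docker-compose release URL used by the curl command above."""
    system = system or platform.system()     # like `uname -s`, e.g. "Linux"
    machine = machine or platform.machine()  # like `uname -m`, e.g. "x86_64"
    return ("https://github.com/docker/compose/releases/download/"
            f"{version}/docker-compose-{system}-{machine}")


print(compose_download_url("1.17.0", "Linux", "x86_64"))
# https://github.com/docker/compose/releases/download/1.17.0/docker-compose-Linux-x86_64
```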

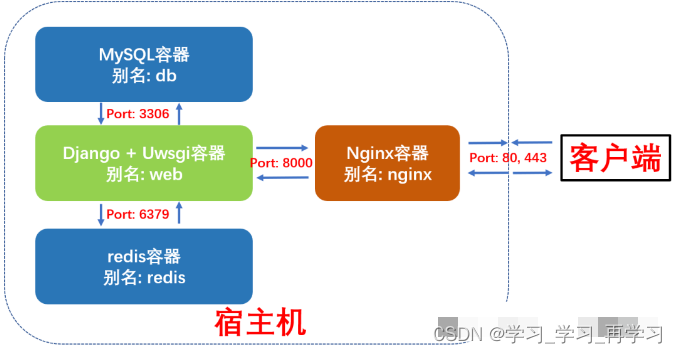

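Diagram: combined Django + Uwsgi + Nginx + MySQL + Redis containers

In this example we use docker-compose to orchestrate and start 4 containers, which is closer to a real production deployment.

- Django + Uwsgi container: the core application, handles dynamic requests

- MySQL container: database service

- Redis container: cache service

- Nginx container: reverse proxy that also serves static resource requests

The dependencies among these four containers are as follows. The Django+Uwsgi container depends on the Redis and MySQL containers, and the Nginx container depends on the Django+Uwsgi container. To make it easy for containers to reach each other, docker-compose lets us give each container an alias (its service name). Containers can then be addressed by that alias instead of the temporary IP that Docker assigns.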
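In docker-compose.yml these relationships are written as depends_on, and docker-compose uses them to decide the order in which services start. As a sketch, the dependencies described above form a small graph, and any topological order of that graph is a valid start order. graphlib requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter  # Python 3.9+

# service -> services it depends on (the depends_on relationships described above)
depends_on = {
    "redis": set(),
    "db": set(),
    "web": {"db", "redis"},
    "nginx": {"web"},
}


def start_order(graph: dict) -> list:
    """Return one start order in which every service comes after its dependencies."""
    return list(TopologicalSorter(graph).static_order())


print(start_order(depends_on))  # e.g. redis and db first, then web, then nginx
```

Keep in mind that depends_on only waits for the dependency containers to start, not for MySQL to be ready to accept connections. The web service may therefore still need to retry its first database connection.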

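The aliases and communication ports of the four containers are: redis (6379), db (3306), web (8000) and nginx (80, plus 443 for https).

Directory tree of the docker-compose Django deployment

We created a compose folder dedicated to the Dockerfiles and configuration files used to build the other containers' images. The compose folder sits at the same level as myproject, the root directory of the Django project. The benefit is that different Django projects can share the compose folder.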

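myproject_docker # Project root directory

├── compose # Dockerfiles and configuration files for each container service

│ ├── mysql

│ │ ├── conf

│ │ │ └── my.cnf # MySQL configuration file

│ │ └── init

│ │   └── init.sql # MySQL initialization script

│ ├── nginx

│ │ ├── Dockerfile # Dockerfile for building the Nginx image

│ │ ├── log # Mounted to keep the nginx logs from the nginx container | directory

│ │ ├── nginx.conf # Nginx configuration file

│ │ └── ssl # Needed if you configure https | directory

│ ├── redis

│ │ └── redis.conf # redis configuration file

│ └── uwsgi # Mounted to keep the uwsgi logs from the django+uwsgi container

├── docker-compose.yml # Core orchestration file

├── myproject # Regular Django project directory

│ ├── Dockerfile # Dockerfile for building the Django+Uwsgi image

│ ├── apps # Holds the Django project's apps

│ ├── manage.py

│ ├── myproject # Django project configuration package

│ │ ├── asgi.py

│ │ ├── __init__.py

│ │ ├── settings.py

│ │ ├── urls.py

│ │ └── wsgi.py

│ ├── pip.conf # Optional. Points PyPI at a mirror in China to speed up pip installs

│ ├── requirements.txt # Django project dependencies

│ ├── start.sh # Script to run after the Django+Uwsgi container starts

│ ├── media # Media files uploaded by users

│ ├── static # Static files used by the project

│ └── uwsgi.ini # uwsgi configuration file

└── .env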

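Now let's start the actual deployment.

Step 1: Write the docker-compose.yml file

The core content of docker-compose.yml is shown below. We define 3 data volumes to hold data generated inside the containers: MySQL's data, Redis snapshots, and media files uploaded by users in the Django container. This way the data survives even if a container is deleted. We also define 4 container services, with the aliases redis, db, nginx and web. Next we look at each container's Dockerfile and configuration files in turn.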

version: "3"

volumes: # Custom data volumes, stored on the host under /var/lib/docker/volumes

  myproject_db_vol: # Volume holding the MySQL data from the container

  myproject_redis_vol: # Volume holding the redis container's data

  myproject_media_vol: # Volume holding the media folder's data

services:

  redis:

    image: redis:5

    command: redis-server /etc/redis/redis.conf # Start the redis server with our config when the container starts

    volumes:

      - myproject_redis_vol:/data # Persist redis data in the volume

      - ./compose/redis/redis.conf:/etc/redis/redis.conf # Mount the redis config file

    ports:

      - "6379:6379"

    restart: always # always: restart the container whenever it stops

  db:

    image: mysql:5.7

    environment:

      - MYSQL_ROOT_PASSWORD=123456 # root password

      - MYSQL_DATABASE=myproject # database name

      - MYSQL_USER=dbuser # database user

      - MYSQL_PASSWORD=password # user password

    volumes:

      - myproject_db_vol:/var/lib/mysql:rw # Mount the database data, read-write

      - ./compose/mysql/conf/my.cnf:/etc/mysql/my.cnf # Mount the config file

      - ./compose/mysql/init:/docker-entrypoint-initdb.d/ # Mount the SQL initialization scripts

    ports:

      - "3306:3306" # Keep consistent with the config file

    restart: always

  web:

    build: ./myproject # Use the Dockerfile in the myproject directory

    expose:

      - "8000"

    volumes:

      - ./myproject:/var/www/html/myproject # Mount the project code

      - myproject_media_vol:/var/www/html/myproject/media # Mount user-uploaded media via the volume

      - ./compose/uwsgi:/tmp # Mount the uwsgi logs

    links:

      - db

      - redis

    depends_on: # Dependencies

      - db

      - redis

    environment:

      - DEBUG=False

    restart: always

    tty: true

    stdin_open: true

  nginx:

    build: ./compose/nginx

    ports:

      - "80:80"

      - "443:443"

    expose:

      - "80"

    volumes:

      - ./myproject/static:/usr/share/nginx/html/static # Mount static files

      - ./compose/nginx/ssl:/usr/share/nginx/ssl # Mount the ssl certificate directory

      - ./compose/nginx/log:/var/log/nginx # Mount the logs

      - myproject_media_vol:/usr/share/nginx/html/media # Mount user-uploaded media

    links:

      - web

    depends_on:

      - web

    restart: always

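Step 2: Write the files for the Web (Django+Uwsgi) image and container

The Dockerfile used to build the Web (Django+Uwsgi) image is as follows:

# myproject/Dockerfile

# Set up a Python 3.7 environment

FROM python:3.7

# Image author: 大江狗 (MAINTAINER is deprecated; LABEL maintainer is the current form)

LABEL maintainer="DJG"

# Set a Python environment variable: disable output buffering so logs appear immediately

ENV PYTHONUNBUFFERED 1

COPY pip.conf /root/.pip/pip.conf

# Create the myproject folder

RUN mkdir -p /var/www/html/myproject

# Make the myproject folder the working directory

WORKDIR /var/www/html/myproject

# Add the current directory to the working directory (. is the current directory)

ADD . /var/www/html/myproject

# Upgrade pip

RUN /usr/local/bin/python -m pip install --upgrade pip

# Install dependencies with pip

RUN pip install -r requirements.txt

# Strip the extra \r characters left by editing files on Windows

RUN sed -i 's/\r//' ./start.sh

# Make start.sh executable

RUN chmod +x ./start.sh

The requirements.txt for this Django project is as follows: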

# django

django==3.0.6

# uwsgi

uwsgi==2.0.18

# mysql

mysqlclient==1.4.6

# redis

django-redis==4.11.0

redis==3.5.0

# for images

Pillow==7.1.2

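The content of start.sh is shown below. The most important line is the last one, which starts the Django service with the uwsgi.ini configuration file.

#!/bin/bash

# From the first line to the last:

# 1. Collect static files into STATIC_ROOT,

# 2. Generate database migration files,

# 3. Apply the migration files to the database

# 4. Start the django service with uwsgi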

python manage.py collectstatic --noinput&&

python manage.py makemigrations&&

python manage.py migrate&&

uwsgi --ini /var/www/html/myproject/uwsgi.ini

The uwsgi.ini configuration file is as follows:

[uwsgi]

project=myproject

uid=www-data

gid=www-data

base=/var/www/html

chdir=%(base)/%(project)

module=%(project).wsgi:application

master=True

processes=2

socket=0.0.0.0:8000

chown-socket=%(uid):www-data

chmod-socket=664

vacuum=True

max-requests=5000

pidfile=/tmp/%(project)-master.pid

daemonize=/tmp/%(project)-uwsgi.log

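# Request timeout in seconds: a request that runs longer is dropped and its worker recycled

harakiri = 60

post-buffering = 8192

buffer-size= 65535

# Write a log entry when a request is killed by harakiri

harakiri-verbose = true

# Enable memory usage reports

memory-report = true

# Maximum time (seconds) to wait for workers to finish during a graceful reload

reload-mercy = 10

# Recycle a worker once its virtual memory (address space) exceeds N MB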

reload-on-as= 1024

python-autoreload=1

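Step 3: Write the files for the Nginx image and container

The Dockerfile used to build the Nginx image is as follows:

# nginx image: compose/nginx/Dockerfile

FROM nginx:latest

# Remove the default config file and create folders for static assets and ssl certificates

RUN rm /etc/nginx/conf.d/default.conf \
&& mkdir -p /usr/share/nginx/html/static \
&& mkdir -p /usr/share/nginx/html/media \
&& mkdir -p /usr/share/nginx/ssl

# Set the owner and group of the media folder to www-data (the Linux default) and grant read and execute permissions;

# otherwise images uploaded by users will not display correctly.

RUN chown -R www-data:www-data /usr/share/nginx/html/media \
&& chmod -R 775 /usr/share/nginx/html/media

# Add the configuration file

ADD ./nginx.conf /etc/nginx/conf.d/

# Keep nginx in the foreground (daemon off) so the container keeps running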

CMD ["nginx", "-g", "daemon off;"]
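To check what chmod 775 in this Dockerfile actually grants, Python's stat module can render a permission mode the way ls -l does:

```python
import stat

# 775 on a directory: owner rwx, group rwx, others r-x (read and enter, but not write)
print(stat.filemode(stat.S_IFDIR | 0o775))  # drwxrwxr-x
```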

The Nginx configuration file is as follows:

# nginx configuration file

# compose/nginx/nginx.conf

upstream django {

ip_hash;

server web:8000; # The docker-compose web service and its port

}

server {

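listen 80; # Listen on port 80

server_name localhost; # Can be the nginx container's IP or 127.0.0.1; as the only server block on port 80, nginx also uses it as the default server

charset utf-8;

client_max_body_size 10M; # Limit the size of user uploads

location /static {

alias /usr/share/nginx/html/static; # Path to static assets

}

location /media {

alias /usr/share/nginx/html/media; # Media files: path to user uploads

}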

location / {

include /etc/nginx/uwsgi_params;

uwsgi_pass django;

uwsgi_read_timeout 600;

uwsgi_connect_timeout 600;

uwsgi_send_timeout 600;

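# The proxy_* directives below only affect proxy_pass, not uwsgi_pass, so they are kept commented out.

# With uwsgi_pass, request headers are passed automatically and uwsgi_params supplies variables such as REMOTE_ADDR.

# proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

# proxy_set_header Host $http_host;

# proxy_redirect off;

# proxy_set_header X-Real-IP $remote_addr;

# proxy_pass http://django; # We talk to uwsgi over the uwsgi protocol, not http, so proxy_pass is not used.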

}

}

access_log /var/log/nginx/access.log main;

error_log /var/log/nginx/error.log warn;

server_tokens off;

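Step 4: Write the configuration file for the Db (MySQL) container

We can use the official image for the MySQL container as-is, but we need to add a configuration file for MySQL.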

# compose/mysql/conf/my.cnf

[mysqld]

user=mysql

default-storage-engine=INNODB

character-set-server=utf8

port = 3306 # Keep the port consistent with the port mapping in docker-compose

#bind-address= localhost # Must stay commented out: the mysql and django containers have different IPs

basedir = /usr

datadir = /var/lib/mysql

tmpdir = /tmp

pid-file = /var/run/mysqld/mysqld.pid

socket = /var/run/mysqld/mysqld.sock

skip-name-resolve # Disables DNS lookups of client hosts; recommended when allowing remote access.

[client]

port = 3306

default-character-set=utf8

[mysql]

no-auto-rehash

default-character-set=utf8

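We also need a script that MySQL runs at startup. The official image runs the scripts in /docker-entrypoint-initdb.d only when it initializes an empty data directory, that is, on the first start. Note that the user name and password here must match the MySQL-related environment variables in docker-compose.yml.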

# compose/mysql/init/init.sql

GRANT ALL PRIVILEGES ON myproject.* TO dbuser@"%" IDENTIFIED BY "password";

FLUSH PRIVILEGES;
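On the Django side, settings.py must point at this database. The article does not show that part. A sketch, assuming Django's MySQL backend (mysqlclient is in requirements.txt) and the values from docker-compose.yml, could look like this. The host is the compose service name db, not an IP address.

```python
# settings.py (sketch): connect Django to the MySQL container from docker-compose.yml.
# NAME/USER/PASSWORD mirror MYSQL_DATABASE, MYSQL_USER and MYSQL_PASSWORD.
DATABASES = {
    "default": {
        "ENGINE": "django.db.backends.mysql",
        "NAME": "myproject",
        "USER": "dbuser",
        "PASSWORD": "password",
        "HOST": "db",    # compose service name, resolved on the compose network
        "PORT": "3306",
    }
}
```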

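Step 5: Write the configuration file for the Redis container

We can use the official image for the redis container as-is, but we need to add a configuration file for redis. The default configuration is fine in most cases; we only made the following core changes:

# compose/redis/redis.conf

# Download URL of the Redis 5 configuration file

# https://raw.githubusercontent.com/antirez/redis/5.0/redis.conf

# Comment out the bind line (as below) so that other machines or containers can also connect

# bind 127.0.0.1

# Uncomment the requirepass line to set a login password for redis. Django's settings.py will use this password.

requirepass yourpassword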
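The password above is the one settings.py needs. The article does not show that part of settings.py. Here is a sketch that assumes the django-redis package from requirements.txt. Redis database 1 is an arbitrary choice, and the password is embedded in the redis:// URL with the host set to the compose service name redis.

```python
# settings.py (sketch): use the redis service from docker-compose.yml as Django's cache.
# "yourpassword" must match requirepass in compose/redis/redis.conf; DB index 1 is arbitrary.
CACHES = {
    "default": {
        "BACKEND": "django_redis.cache.RedisCache",
        "LOCATION": "redis://:yourpassword@redis:6379/1",
        "OPTIONS": {
            "CLIENT_CLASS": "django_redis.client.DefaultClient",
        },
    }
}
```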

# compose/redis/redis.conf

# Redis configuration file example.

#

# Note that in order to read the configuration file, Redis must be

# started with the file path as first argument:

#

# ./redis-server /path/to/redis.conf

# Note on units: when memory size is needed, it is possible to specify

# it in the usual form of 1k 5GB 4M and so forth:

#

# 1k => 1000 bytes

# 1kb => 1024 bytes

# 1m => 1000000 bytes

# 1mb => 1024*1024 bytes

# 1g => 1000000000 bytes

# 1gb => 1024*1024*1024 bytes

#

# units are case insensitive so 1GB 1Gb 1gB are all the same.

################################## INCLUDES ###################################

# Include one or more other config files here. This is useful if you

# have a standard template that goes to all Redis servers but also need

# to customize a few per-server settings. Include files can include

# other files, so use this wisely.

#

# Notice option "include" won't be rewritten by command "CONFIG REWRITE"

# from admin or Redis Sentinel. Since Redis always uses the last processed

# line as value of a configuration directive, you'd better put includes

# at the beginning of this file to avoid overwriting config change at runtime.

#

# If instead you are interested in using includes to override configuration

# options, it is better to use include as the last line.

#

# include /path/to/local.conf

# include /path/to/other.conf

################################## MODULES #####################################

# Load modules at startup. If the server is not able to load modules

# it will abort. It is possible to use multiple loadmodule directives.

#

# loadmodule /path/to/my_module.so

# loadmodule /path/to/other_module.so

################################## NETWORK #####################################

# By default, if no "bind" configuration directive is specified, Redis listens

# for connections from all the network interfaces available on the server.

# It is possible to listen to just one or multiple selected interfaces using

# the "bind" configuration directive, followed by one or more IP addresses.

#

# Examples:

#

# bind 192.168.1.100 10.0.0.1

# bind 127.0.0.1 ::1

#

# ~~~ WARNING ~~~ If the computer running Redis is directly exposed to the

# internet, binding to all the interfaces is dangerous and will expose the

# instance to everybody on the internet. So by default we uncomment the

# following bind directive, that will force Redis to listen only into

# the IPv4 loopback interface address (this means Redis will be able to

# accept connections only from clients running into the same computer it

# is running).

#

# IF YOU ARE SURE YOU WANT YOUR INSTANCE TO LISTEN TO ALL THE INTERFACES

# JUST COMMENT THE FOLLOWING LINE.

# ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

# bind 127.0.0.1

# Protected mode is a layer of security protection, in order to avoid that

# Redis instances left open on the internet are accessed and exploited.

#

# When protected mode is on and if:

#

# 1) The server is not binding explicitly to a set of addresses using the

# "bind" directive.

# 2) No password is configured.

#

# The server only accepts connections from clients connecting from the

# IPv4 and IPv6 loopback addresses 127.0.0.1 and ::1, and from Unix domain

# sockets.

#

# By default protected mode is enabled. You should disable it only if

# you are sure you want clients from other hosts to connect to Redis

# even if no authentication is configured, nor a specific set of interfaces

# are explicitly listed using the "bind" directive.

protected-mode yes

# Accept connections on the specified port, default is 6379 (IANA #815344).

# If port 0 is specified Redis will not listen on a TCP socket.

port 6379

# TCP listen() backlog.

#

# In high requests-per-second environments you need an high backlog in order

# to avoid slow clients connections issues. Note that the Linux kernel

# will silently truncate it to the value of /proc/sys/net/core/somaxconn so

# make sure to raise both the value of somaxconn and tcp_max_syn_backlog

# in order to get the desired effect.

tcp-backlog 511

# Unix socket.

#

# Specify the path for the Unix socket that will be used to listen for

# incoming connections. There is no default, so Redis will not listen

# on a unix socket when not specified.

#

# unixsocket /tmp/redis.sock

# unixsocketperm 700

# Close the connection after a client is idle for N seconds (0 to disable)

timeout 0

# TCP keepalive.

#

# If non-zero, use SO_KEEPALIVE to send TCP ACKs to clients in absence

# of communication. This is useful for two reasons:

#

# 1) Detect dead peers.

# 2) Take the connection alive from the point of view of network

# equipment in the middle.

#

# On Linux, the specified value (in seconds) is the period used to send ACKs.

# Note that to close the connection the double of the time is needed.

# On other kernels the period depends on the kernel configuration.

#

# A reasonable value for this option is 300 seconds, which is the new

# Redis default starting with Redis 3.2.1.

tcp-keepalive 300

################################# GENERAL #####################################

# By default Redis does not run as a daemon. Use 'yes' if you need it.

# Note that Redis will write a pid file in /var/run/redis.pid when daemonized.

daemonize no

# If you run Redis from upstart or systemd, Redis can interact with your

# supervision tree. Options:

# supervised no - no supervision interaction

# supervised upstart - signal upstart by putting Redis into SIGSTOP mode

# supervised systemd - signal systemd by writing READY=1 to $NOTIFY_SOCKET

# supervised auto - detect upstart or systemd method based on

# UPSTART_JOB or NOTIFY_SOCKET environment variables

# Note: these supervision methods only signal "process is ready."

# They do not enable continuous liveness pings back to your supervisor.

supervised no

# If a pid file is specified, Redis writes it where specified at startup

# and removes it at exit.

#

# When the server runs non daemonized, no pid file is created if none is

# specified in the configuration. When the server is daemonized, the pid file

# is used even if not specified, defaulting to "/var/run/redis.pid".

#

# Creating a pid file is best effort: if Redis is not able to create it

# nothing bad happens, the server will start and run normally.

pidfile /var/run/redis_6379.pid

# Specify the server verbosity level.

# This can be one of:

# debug (a lot of information, useful for development/testing)

# verbose (many rarely useful info, but not a mess like the debug level)

# notice (moderately verbose, what you want in production probably)

# warning (only very important / critical messages are logged)

loglevel notice

# Specify the log file name. Also the empty string can be used to force

# Redis to log on the standard output. Note that if you use standard

# output for logging but daemonize, logs will be sent to /dev/null

logfile ""

# To enable logging to the system logger, just set 'syslog-enabled' to yes,

# and optionally update the other syslog parameters to suit your needs.

# syslog-enabled no

# Specify the syslog identity.

# syslog-ident redis

# Specify the syslog facility. Must be USER or between LOCAL0-LOCAL7.

# syslog-facility local0

# Set the number of databases. The default database is DB 0, you can select

# a different one on a per-connection basis using SELECT <dbid> where

# dbid is a number between 0 and 'databases'-1

databases 16

# By default Redis shows an ASCII art logo only when started to log to the

# standard output and if the standard output is a TTY. Basically this means

# that normally a logo is displayed only in interactive sessions.

#

# However it is possible to force the pre-4.0 behavior and always show a

# ASCII art logo in startup logs by setting the following option to yes.

always-show-logo yes

################################ SNAPSHOTTING ################################

#

# Save the DB on disk:

#

# save <seconds> <changes>

#

# Will save the DB if both the given number of seconds and the given

# number of write operations against the DB occurred.

#

# In the example below the behaviour will be to save:

# after 900 sec (15 min) if at least 1 key changed

# after 300 sec (5 min) if at least 10 keys changed

# after 60 sec if at least 10000 keys changed

#

# Note: you can disable saving completely by commenting out all "save" lines.

#

# It is also possible to remove all the previously configured save

# points by adding a save directive with a single empty string argument

# like in the following example:

#

# save ""

save 900 1

save 300 10

save 60 10000

# By default Redis will stop accepting writes if RDB snapshots are enabled

# (at least one save point) and the latest background save failed.

# This will make the user aware (in a hard way) that data is not persisting

# on disk properly, otherwise chances are that no one will notice and some

# disaster will happen.

#

# If the background saving process will start working again Redis will

# automatically allow writes again.

#

# However if you have setup your proper monitoring of the Redis server

# and persistence, you may want to disable this feature so that Redis will

# continue to work as usual even if there are problems with disk,

# permissions, and so forth.

stop-writes-on-bgsave-error yes

# Compress string objects using LZF when dump .rdb databases?

# For default that's set to 'yes' as it's almost always a win.

# If you want to save some CPU in the saving child set it to 'no' but

# the dataset will likely be bigger if you have compressible values or keys.

rdbcompression yes

# Since version 5 of RDB a CRC64 checksum is placed at the end of the file.

# This makes the format more resistant to corruption but there is a performance

# hit to pay (around 10%) when saving and loading RDB files, so you can disable it

# for maximum performances.

#

# RDB files created with checksum disabled have a checksum of zero that will

# tell the loading code to skip the check.

rdbchecksum yes

# The filename where to dump the DB

dbfilename dump.rdb

# The working directory.

#

# The DB will be written inside this directory, with the filename specified

# above using the 'dbfilename' configuration directive.

#

# The Append Only File will also be created inside this directory.

#

# Note that you must specify a directory here, not a file name.

dir ./

################################# REPLICATION #################################

# Master-Replica replication. Use replicaof to make a Redis instance a copy of

# another Redis server. A few things to understand ASAP about Redis replication.

#

# +------------------+ +---------------+

# | Master | ---> | Replica |

# | (receive writes) | | (exact copy) |

# +------------------+ +---------------+

#

# 1) Redis replication is asynchronous, but you can configure a master to

# stop accepting writes if it appears to be not connected with at least

# a given number of replicas.

# 2) Redis replicas are able to perform a partial resynchronization with the

# master if the replication link is lost for a relatively small amount of

# time. You may want to configure the replication backlog size (see the next

# sections of this file) with a sensible value depending on your needs.

# 3) Replication is automatic and does not need user intervention. After a

# network partition replicas automatically try to reconnect to masters

# and resynchronize with them.

#

# replicaof <masterip> <masterport>

# If the master is password protected (using the "requirepass" configuration

# directive below) it is possible to tell the replica to authenticate before

# starting the replication synchronization process, otherwise the master will

# refuse the replica request.

#

# masterauth <master-password>

# When a replica loses its connection with the master, or when the replication

# is still in progress, the replica can act in two different ways:

#

# 1) if replica-serve-stale-data is set to 'yes' (the default) the replica will

# still reply to client requests, possibly with out of date data, or the

# data set may just be empty if this is the first synchronization.

#

# 2) if replica-serve-stale-data is set to 'no' the replica will reply with

# an error "SYNC with master in progress" to all the kind of commands

# but to INFO, REPLICAOF, AUTH, PING, SHUTDOWN, REPLCONF, ROLE, CONFIG,

# SUBSCRIBE, UNSUBSCRIBE, PSUBSCRIBE, PUNSUBSCRIBE, PUBLISH, PUBSUB,

# COMMAND, POST, HOST: and LATENCY.

#

replica-serve-stale-data yes

# You can configure a replica instance to accept writes or not. Writing against

# a replica instance may be useful to store some ephemeral data (because data

# written on a replica will be easily deleted after resync with the master) but

# may also cause problems if clients are writing to it because of a

# misconfiguration.

#

# Since Redis 2.6 by default replicas are read-only.

#

# Note: read only replicas are not designed to be exposed to untrusted clients

# on the internet. It's just a protection layer against misuse of the instance.

# Still a read only replica exports by default all the administrative commands

# such as CONFIG, DEBUG, and so forth. To a limited extent you can improve

# security of read only replicas using 'rename-command' to shadow all the

# administrative / dangerous commands.

replica-read-only yes

# Replication SYNC strategy: disk or socket.

#

# -------------------------------------------------------

# WARNING: DISKLESS REPLICATION IS EXPERIMENTAL CURRENTLY

# -------------------------------------------------------

#

# New replicas and reconnecting replicas that are not able to continue the replication

# process just receiving differences, need to do what is called a "full

# synchronization". An RDB file is transmitted from the master to the replicas.

# The transmission can happen in two different ways:

#

# 1) Disk-backed: The Redis master creates a new process that writes the RDB

# file on disk. Later the file is transferred by the parent

# process to the replicas incrementally.

# 2) Diskless: The Redis master creates a new process that directly writes the

# RDB file to replica sockets, without touching the disk at all.

#

# With disk-backed replication, while the RDB file is generated, more replicas

# can be queued and served with the RDB file as soon as the current child producing

# the RDB file finishes its work. With diskless replication instead once

# the transfer starts, new replicas arriving will be queued and a new transfer

# will start when the current one terminates.

#

# When diskless replication is used, the master waits a configurable amount of

# time (in seconds) before starting the transfer in the hope that multiple replicas

# will arrive and the transfer can be parallelized.

#

# With slow disks and fast (large bandwidth) networks, diskless replication

# works better.

repl-diskless-sync no

# When diskless replication is enabled, it is possible to configure the delay

# the server waits in order to spawn the child that transfers the RDB via socket

# to the replicas.

#

# This is important since once the transfer starts, it is not possible to serve

# new replicas arriving, that will be queued for the next RDB transfer, so the server

# waits a delay in order to let more replicas arrive.

#

# The delay is specified in seconds, and by default is 5 seconds. To disable

# it entirely just set it to 0 seconds and the transfer will start ASAP.

repl-diskless-sync-delay 5

# Replicas send PINGs to server in a predefined interval. It's possible to change

# this interval with the repl-ping-replica-period option. The default value is 10

# seconds.

#

# repl-ping-replica-period 10

# The following option sets the replication timeout for:

#

# 1) Bulk transfer I/O during SYNC, from the point of view of replica.

# 2) Master timeout from the point of view of replicas (data, pings).

# 3) Replica timeout from the point of view of masters (REPLCONF ACK pings).

#

# It is important to make sure that this value is greater than the value

# specified for repl-ping-replica-period otherwise a timeout will be detected

# every time there is low traffic between the master and the replica.

#

# repl-timeout 60

# Disable TCP_NODELAY on the replica socket after SYNC?

#

# If you select "yes" Redis will use a smaller number of TCP packets and

# less bandwidth to send data to replicas. But this can add a delay for

# the data to appear on the replica side, up to 40 milliseconds with

# Linux kernels using a default configuration.

#

# If you select "no" the delay for data to appear on the replica side will

# be reduced but more bandwidth will be used for replication.

#

# By default we optimize for low latency, but in very high traffic conditions

# or when the master and replicas are many hops away, turning this to "yes" may

# be a good idea.

repl-disable-tcp-nodelay no

# Set the replication backlog size. The backlog is a buffer that accumulates

# replica data when replicas are disconnected for some time, so that when a replica

# wants to reconnect again, often a full resync is not needed, but a partial

# resync is enough, just passing the portion of data the replica missed while

# disconnected.

#

# The bigger the replication backlog, the longer the time the replica can be

# disconnected and later be able to perform a partial resynchronization.

#

# The backlog is only allocated once there is at least a replica connected.

#

# repl-backlog-size 1mb

# After a master has no longer connected replicas for some time, the backlog

# will be freed. The following option configures the amount of seconds that

# need to elapse, starting from the time the last replica disconnected, for

# the backlog buffer to be freed.

#

# Note that replicas never free the backlog for timeout, since they may be

# promoted to masters later, and should be able to correctly "partially

# resynchronize" with the replicas: hence they should always accumulate backlog.

#

# A value of 0 means to never release the backlog.

#

# repl-backlog-ttl 3600

# The replica priority is an integer number published by Redis in the INFO output.

# It is used by Redis Sentinel in order to select a replica to promote into a

# master if the master is no longer working correctly.

#

# A replica with a low priority number is considered better for promotion, so

# for instance if there are three replicas with priority 10, 100, 25 Sentinel will

# pick the one with priority 10, that is the lowest.

#

# However a special priority of 0 marks the replica as not able to perform the

# role of master, so a replica with priority of 0 will never be selected by

# Redis Sentinel for promotion.

#

# By default the priority is 100.

replica-priority 100

# It is possible for a master to stop accepting writes if there are less than

# N replicas connected, having a lag less or equal than M seconds.

#

# The N replicas need to be in "online" state.

#

# The lag in seconds, that must be <= the specified value, is calculated from

# the last ping received from the replica, that is usually sent every second.

#

# This option does not GUARANTEE that N replicas will accept the write, but

# will limit the window of exposure for lost writes in case not enough replicas

# are available, to the specified number of seconds.

#

# For example to require at least 3 replicas with a lag <= 10 seconds use:

#

# min-replicas-to-write 3

# min-replicas-max-lag 10

#

# Setting one or the other to 0 disables the feature.

#

# By default min-replicas-to-write is set to 0 (feature disabled) and

# min-replicas-max-lag is set to 10.

# A Redis master is able to list the address and port of the attached

# replicas in different ways. For example the "INFO replication" section

# offers this information, which is used, among other tools, by

# Redis Sentinel in order to discover replica instances.

# Another place where this info is available is in the output of the

# "ROLE" command of a master.

#

# The listed IP and address normally reported by a replica is obtained

# in the following way:

#

# IP: The address is auto detected by checking the peer address

# of the socket used by the replica to connect with the master.

#

# Port: The port is communicated by the replica during the replication

# handshake, and is normally the port that the replica is using to

# listen for connections.

#

# However when port forwarding or Network Address Translation (NAT) is

# used, the replica may be actually reachable via different IP and port

# pairs. The following two options can be used by a replica in order to

# report to its master a specific set of IP and port, so that both INFO

# and ROLE will report those values.

#

# There is no need to use both the options if you need to override just

# the port or the IP address.

#

# replica-announce-ip 5.5.5.5

# replica-announce-port 1234

################################## SECURITY ###################################

# Require clients to issue AUTH <PASSWORD> before processing any other

# commands. This might be useful in environments in which you do not trust

# others with access to the host running redis-server.

#

# This should stay commented out for backward compatibility and because most

# people do not need auth (e.g. they run their own servers).

#

# Warning: since Redis is pretty fast an outside user can try up to

# 150k passwords per second against a good box. This means that you should

# use a very strong password otherwise it will be very easy to break.

#

# requirepass foobared

# Command renaming.

#

# It is possible to change the name of dangerous commands in a shared

# environment. For instance the CONFIG command may be renamed into something

# hard to guess so that it will still be available for internal-use tools

# but not available for general clients.

#

# Example:

#

# rename-command CONFIG b840fc02d524045429941cc15f59e41cb7be6c52

#

# It is also possible to completely kill a command by renaming it into

# an empty string:

#

# rename-command CONFIG ""

#

# Please note that changing the name of commands that are logged into the

# AOF file or transmitted to replicas may cause problems.

################################### CLIENTS ####################################

# Set the max number of connected clients at the same time. By default

# this limit is set to 10000 clients, however if the Redis server is not

# able to configure the process file limit to allow for the specified limit

# the max number of allowed clients is set to the current file limit

# minus 32 (as Redis reserves a few file descriptors for internal uses).

#

# Once the limit is reached Redis will close all the new connections sending

# an error 'max number of clients reached'.

#

# maxclients 10000

############################## MEMORY MANAGEMENT ################################

# Set a memory usage limit to the specified amount of bytes.

# When the memory limit is reached Redis will try to remove keys

# according to the eviction policy selected (see maxmemory-policy).

#

# If Redis can't remove keys according to the policy, or if the policy is

# set to 'noeviction', Redis will start to reply with errors to commands

# that would use more memory, like SET, LPUSH, and so on, and will continue

# to reply to read-only commands like GET.

#

# This option is usually useful when using Redis as an LRU or LFU cache, or to

# set a hard memory limit for an instance (using the 'noeviction' policy).

#

# WARNING: If you have replicas attached to an instance with maxmemory on,

# the size of the output buffers needed to feed the replicas are subtracted

# from the used memory count, so that network problems / resyncs will

# not trigger a loop where keys are evicted, and in turn the output

# buffer of replicas is full with DELs of keys evicted triggering the deletion

# of more keys, and so forth until the database is completely emptied.

#

# In short... if you have replicas attached it is suggested that you set a lower

# limit for maxmemory so that there is some free RAM on the system for replica

# output buffers (but this is not needed if the policy is 'noeviction').

#

# maxmemory <bytes>

# MAXMEMORY POLICY: how Redis will select what to remove when maxmemory

# is reached. You can select among five behaviors:

#

# volatile-lru -> Evict using approximated LRU among the keys with an expire set.

# allkeys-lru -> Evict any key using approximated LRU.

# volatile-lfu -> Evict using approximated LFU among the keys with an expire set.

# allkeys-lfu -> Evict any key using approximated LFU.

# volatile-random -> Remove a random key among the ones with an expire set.

# allkeys-random -> Remove a random key, any key.

# volatile-ttl -> Remove the key with the nearest expire time (minor TTL)

# noeviction -> Don't evict anything, just return an error on write operations.

#

# LRU means Least Recently Used

# LFU means Least Frequently Used

#

# Both LRU, LFU and volatile-ttl are implemented using approximated

# randomized algorithms.

#

# Note: with any of the above policies, Redis will return an error on write

# operations, when there are no suitable keys for eviction.

#

# At the date of writing these commands are: set setnx setex append

# incr decr rpush lpush rpushx lpushx linsert lset rpoplpush sadd

# sinter sinterstore sunion sunionstore sdiff sdiffstore zadd zincrby

# zunionstore zinterstore hset hsetnx hmset hincrby incrby decrby

# getset mset msetnx exec sort

#

# The default is:

#

# maxmemory-policy noeviction

# LRU, LFU and minimal TTL algorithms are not precise algorithms but approximated

# algorithms (in order to save memory), so you can tune it for speed or

# accuracy. For default Redis will check five keys and pick the one that was

# used less recently, you can change the sample size using the following

# configuration directive.

#

# The default of 5 produces good enough results. 10 Approximates very closely

# true LRU but costs more CPU. 3 is faster but not very accurate.

#

# maxmemory-samples 5

# Starting from Redis 5, by default a replica will ignore its maxmemory setting

# (unless it is promoted to master after a failover or manually). It means

# that the eviction of keys will be just handled by the master, sending the

# DEL commands to the replica as keys evict in the master side.

#

# This behavior ensures that masters and replicas stay consistent, and is usually

# what you want, however if your replica is writable, or you want the replica to have

# a different memory setting, and you are sure all the writes performed to the

# replica are idempotent, then you may change this default (but be sure to understand

# what you are doing).

#

# Note that since the replica by default does not evict, it may end using more

# memory than the one set via maxmemory (there are certain buffers that may

# be larger on the replica, or data structures may sometimes take more memory and so

# forth). So make sure you monitor your replicas and make sure they have enough

# memory to never hit a real out-of-memory condition before the master hits

# the configured maxmemory setting.

#

# replica-ignore-maxmemory yes

############################# LAZY FREEING ####################################

# Redis has two primitives to delete keys. One is called DEL and is a blocking

# deletion of the object. It means that the server stops processing new commands

# in order to reclaim all the memory associated with an object in a synchronous

# way. If the key deleted is associated with a small object, the time needed

# in order to execute the DEL command is very small and comparable to most other

# O(1) or O(log_N) commands in Redis. However if the key is associated with an

# aggregated value containing millions of elements, the server can block for

# a long time (even seconds) in order to complete the operation.

#

# For the above reasons Redis also offers non blocking deletion primitives

# such as UNLINK (non blocking DEL) and the ASYNC option of FLUSHALL and

# FLUSHDB commands, in order to reclaim memory in background. Those commands

# are executed in constant time. Another thread will incrementally free the

# object in the background as fast as possible.

#

# DEL, UNLINK and ASYNC option of FLUSHALL and FLUSHDB are user-controlled.

# It's up to the design of the application to understand when it is a good

# idea to use one or the other. However the Redis server sometimes has to

# delete keys or flush the whole database as a side effect of other operations.

# Specifically Redis deletes objects independently of a user call in the

# following scenarios:

#

# 1) On eviction, because of the maxmemory and maxmemory policy configurations,

# in order to make room for new data, without going over the specified

# memory limit.

# 2) Because of expire: when a key with an associated time to live (see the

# EXPIRE command) must be deleted from memory.

# 3) Because of a side effect of a command that stores data on a key that may

# already exist. For example the RENAME command may delete the old key

# content when it is replaced with another one. Similarly SUNIONSTORE

# or SORT with STORE option may delete existing keys. The SET command

# itself removes any old content of the specified key in order to replace

# it with the specified string.

# 4) During replication, when a replica performs a full resynchronization with

# its master, the content of the whole database is removed in order to

# load the RDB file just transferred.

#

# In all the above cases the default is to delete objects in a blocking way,

# like if DEL was called. However you can configure each case specifically

# in order to instead release memory in a non-blocking way like if UNLINK

# was called, using the following configuration directives:

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

replica-lazy-flush no

############################## APPEND ONLY MODE ###############################

# By default Redis asynchronously dumps the dataset on disk. This mode is

# good enough in many applications, but an issue with the Redis process or

# a power outage may result into a few minutes of writes lost (depending on

# the configured save points).

#

# The Append Only File is an alternative persistence mode that provides

# much better durability. For instance using the default data fsync policy

# (see later in the config file) Redis can lose just one second of writes in a

# dramatic event like a server power outage, or a single write if something

# wrong with the Redis process itself happens, but the operating system is

# still running correctly.

#

# AOF and RDB persistence can be enabled at the same time without problems.

# If the AOF is enabled on startup Redis will load the AOF, that is the file

# with the better durability guarantees.

#

# Please check http://redis.io/topics/persistence for more information.

appendonly no

# The name of the append only file (default: "appendonly.aof")

appendfilename "appendonly.aof"

# The fsync() call tells the Operating System to actually write data on disk

# instead of waiting for more data in the output buffer. Some OS will really flush

# data on disk, some other OS will just try to do it ASAP.

#

# Redis supports three different modes:

#

# no: don't fsync, just let the OS flush the data when it wants. Faster.

# always: fsync after every write to the append only log. Slow, Safest.

# everysec: fsync only one time every second. Compromise.

#

# The default is "everysec", as that's usually the right compromise between

# speed and data safety. It's up to you to understand if you can relax this to

# "no" that will let the operating system flush the output buffer when

# it wants, for better performances (but if you can live with the idea of

# some data loss consider the default persistence mode that's snapshotting),

# or on the contrary, use "always" that's very slow but a bit safer than

# everysec.

#

# More details please check the following article:

# http://antirez.com/post/redis-persistence-demystified.html

#

# If unsure, use "everysec".

# appendfsync always

appendfsync everysec

# appendfsync no

# When the AOF fsync policy is set to always or everysec, and a background

# saving process (a background save or AOF log background rewriting) is

# performing a lot of I/O against the disk, in some Linux configurations

# Redis may block too long on the fsync() call. Note that there is no fix for

# this currently, as even performing fsync in a different thread will block

# our synchronous write(2) call.

#

# In order to mitigate this problem it's possible to use the following option

# that will prevent fsync() from being called in the main process while a

# BGSAVE or BGREWRITEAOF is in progress.

#

# This means that while another child is saving, the durability of Redis is

# the same as "appendfsync no". In practical terms, this means that it is

# possible to lose up to 30 seconds of log in the worst scenario (with the

# default Linux settings).

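Step 6: Modify the Django project's settings.py

Once docker-compose.yml and each container's Dockerfile and configuration files are ready, don't rush to build the images and start the containers with docker-compose. One important task remains: edit Django's settings.py so that it contains the connection settings for the MySQL and Redis services. The most important settings are shown below: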

# In production set DEBUG = False (the setting name must be upper case)
DEBUG = False

# Set ALLOWED_HOSTS
ALLOWED_HOSTS = ['your_server_IP', 'your_domain_name']

# Set STATIC_ROOT and STATIC_URL
# os.path.join needs `import os` at the top of settings.py (projects created with Django 3.1+ use pathlib and do not import os by default)
STATIC_ROOT = os.path.join(BASE_DIR, 'static')
STATIC_URL = "/static/"

# Set MEDIA_ROOT and MEDIA_URL
MEDIA_ROOT = os.path.join(BASE_DIR, 'media')
MEDIA_URL = "/media/"

# Database settings. The user name and password must match the MySQL environment variables in docker-compose.yml
DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.mysql',
        'NAME': 'myproject',  # database name
        'USER': 'dbuser',  # the user you created (not root)
        'PASSWORD': 'password',  # replace with your own password
        'HOST': 'db',  # the compose service name "db"; Docker resolves it to the container's IP
        'PORT': '3306',  # port
    }
}

# Redis cache. The password is the one set in redis.conf
CACHES = {
    "default": {
        "BACKEND": "django_redis.cache.RedisCache",
        "LOCATION": "redis://redis:6379/1",  # the service name "redis" is used directly as the host
        "OPTIONS": {
            "CLIENT_CLASS": "django_redis.client.DefaultClient",
            "PASSWORD": "yourpassword",  # replace with your own password
        },
    }
}
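The settings above hard-code the credentials. As an alternative that is not part of the original setup, settings.py could read them from environment variables, which docker-compose can inject with `env_file`. This is a sketch under stated assumptions. `MYSQL_DATABASE`, `MYSQL_USER` and `MYSQL_PASSWORD` reuse the MySQL image's variable names. `DB_HOST`, `DB_PORT` and `REDIS_PASSWORD` are hypothetical names chosen for illustration, as are the helpers `database_settings()` and `cache_settings()`.

```python
import os


def database_settings(env=os.environ):
    """Build Django's DATABASES dict from environment variables.

    MYSQL_* mirror the MySQL image's variables; DB_HOST and DB_PORT are
    hypothetical names. Defaults match the hard-coded values above.
    """
    return {
        "default": {
            "ENGINE": "django.db.backends.mysql",
            "NAME": env.get("MYSQL_DATABASE", "myproject"),
            "USER": env.get("MYSQL_USER", "dbuser"),
            "PASSWORD": env["MYSQL_PASSWORD"],  # KeyError if missing: fail at startup
            "HOST": env.get("DB_HOST", "db"),  # compose service name
            "PORT": env.get("DB_PORT", "3306"),
        }
    }


def cache_settings(env=os.environ):
    """Build CACHES for django-redis; REDIS_PASSWORD is a hypothetical variable name."""
    return {
        "default": {
            "BACKEND": "django_redis.cache.RedisCache",
            "LOCATION": "redis://redis:6379/1",
            "OPTIONS": {
                "CLIENT_CLASS": "django_redis.client.DefaultClient",
                "PASSWORD": env["REDIS_PASSWORD"],
            },
        }
    }

# In settings.py one would then write:
# DATABASES = database_settings()
# CACHES = cache_settings()
```

The passwords are read with `env[...]` rather than `env.get(...)`. A missing variable then stops Django at startup instead of letting it connect with an empty password. The web service would also need its own `env_file` entry in the compose file for these variables to be visible inside the web container.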

Step 7: Build the images and start the services with docker-compose

Now we can use docker-compose commands to build the images and start the group of containers.

# 进入docker-compose.yml所在文件夹,输入以下命令构建镜像

sudo docker-compose build

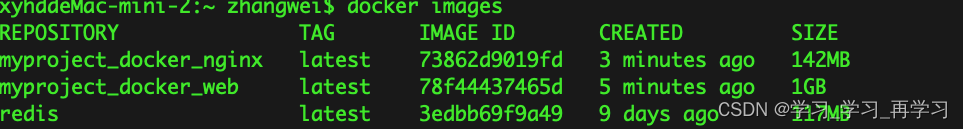

# 查看已生成的镜像

sudo docker images

# 启动容器组服务

sudo docker-compose up

# 查看运行中的容器

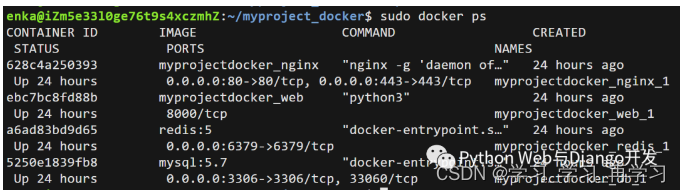

sudo docker ps

If everything went well, you should now see all four containers running.
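You can also check the result from a script instead of by eye. A small helper can compare the output of `sudo docker ps --format '{{.Names}}'` with the expected services. This sketch makes two assumptions. First, the four services are named web, db, redis and nginx, as in the upgraded compose file. Second, container names follow Compose's `<project>_<service>_<index>` pattern (Compose v1) or `<project>-<service>-<index>` (Compose v2). `missing_services` is a hypothetical helper.

```python
import re

# Assumed service names (web, db, redis, nginx).
EXPECTED = {"web", "db", "redis", "nginx"}

# Matches the "_<service>_<n>" or "-<service>-<n>" suffix of a container name.
_NAME = re.compile(r"[_-]([A-Za-z0-9]+)[_-]\d+$")


def missing_services(ps_names: str, expected=EXPECTED) -> set:
    """Return the expected services that have no running container.

    ps_names is the text printed by: docker ps --format '{{.Names}}'
    """
    running = set()
    for name in ps_names.split():
        match = _NAME.search(name)
        if match:
            running.add(match.group(1))
    return set(expected) - running
```

For example, if only `myprojectdocker_nginx_1`, `myprojectdocker_web_1` and `myprojectdocker_db_1` are listed, the helper returns `{"redis"}`. That points you to the redis container's logs (`sudo docker logs <container name>`).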

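Step 8: Run the Django commands and start the uWSGI server inside the web container

All four containers are running, but we have not yet run the Django management commands or started the uWSGI server. We only need to run the start.sh script written earlier inside the web container. The container name depends on the Compose project name; check it in the output of sudo docker ps.

sudo docker exec -it myprojectdocker_web_1 /bin/bash start.sh

Now open your browser and enter your server's IP address, or the domain name that points to it. The site should be live.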

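Summary

This article walked through deploying Django + uWSGI + Nginx + MySQL + Redis in a production environment with docker-compose in eight steps. The process looks complicated, but much of it can be reused: the Dockerfiles, the project layout and docker-compose.yml. The material is well worth the time to study and practise. Once you have learned it, you can typically deploy a real Django project in about ten minutes, and the same setup should run on any Linux machine with Docker installed.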

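Docker-compose: deploying Django + uWSGI + Nginx + MySQL + Redis in eight steps (upgraded edition)

The previous tutorial deployed Django + uWSGI + Nginx + MySQL + Redis (multiple containers) with docker-compose in eight steps. It left a lot of room for improvement, for example:

- The MySQL database name, user name and password were written in plain text in docker-compose.yml. They can instead be supplied through a .env file.

- The MySQL version used was fairly old (5.7). With MySQL 8, the related configuration files need substantial changes.

- The Nginx configuration file was not mounted, so after every change it had to be copied by hand from the host into the container.

- Containers communicated through the `links` option, which Docker no longer recommends. This edition uses networks for communication between containers.

- In the original, you had to enter the web container by hand after it started to run the migrations and start uWSGI.

This edition fixes these issues and also explains in detail how to troubleshoot a Docker deployment.

Note: this article focuses on the practical use of Docker for deploying Django; it is not a Docker tutorial. Readers who are unfamiliar with Docker should first learn the basic Docker and Docker-compose commands.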

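Step 1: Write the docker-compose.yml file

The core of the revised docker-compose.yml is shown below. It defines four volumes for data generated inside the containers:

- MySQL's data files
- Redis snapshots
- the static files collected in the Django + uWSGI container
- media uploaded by users

This way the data survives even if the containers are deleted.

It also defines three networks: nginx_network (between the nginx and web containers), db_network (between db and web) and redis_network (between redis and web).

The composition contains four services, named redis, db, nginx and web. Next we look at each container's Dockerfile and configuration files in turn.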

version: "3"

volumes:  # custom volumes
  db_vol:  # MySQL data from the container
  redis_vol:  # Redis data
  media_vol:  # files users upload to the web project's media folder
  static_vol:  # the web project's static folder

networks:  # custom networks (bridge driver), used instead of links
  nginx_network:
    driver: bridge
  db_network:
    driver: bridge
  redis_network:
    driver: bridge

services:
  redis:
    image: redis:latest
    command: redis-server /etc/redis/redis.conf  # start the Redis server with the mounted config
    networks:
      - redis_network
    volumes:
      - redis_vol:/data  # persist Redis data through the volume
      - ./compose/redis/redis.conf:/etc/redis/redis.conf  # mount the Redis config file
    ports:
      - "6379:6379"
    restart: always  # always restart the container whenever it stops

  db:
    image: mysql:8.0  # pinned: my.cnf and init.sql use mysql_native_password, disabled by default in MySQL 8.4 and removed in 9.0
    env_file:
      - ./myproject/.env  # environment variable file
    networks:
      - db_network
    volumes:
      - db_vol:/var/lib/mysql:rw  # mount the database data, read-write
      - ./compose/mysql/conf/my.cnf:/etc/mysql/my.cnf  # mount the config file
      - ./compose/mysql/init:/docker-entrypoint-initdb.d/  # mount the SQL init scripts
    ports:
      - "3306:3306"  # keep consistent with the config file
    restart: always

  web:
    build: ./myproject
    expose:
      - "8000"
    volumes:
      - ./myproject:/var/www/html/myproject  # mount the project code
      - static_vol:/var/www/html/myproject/static  # static files in the container, as a volume
      - media_vol:/var/www/html/myproject/media  # user-uploaded media in the container, as a volume
      - ./compose/uwsgi:/tmp  # mount the uWSGI log directory
    networks:
      - nginx_network
      - db_network
      - redis_network
    depends_on:  # controls start order only; start.sh waits until MySQL accepts connections
      - db
      - redis
    restart: always
    tty: true
    stdin_open: true

  nginx:
    build: ./compose/nginx
    ports:
      - "80:80"
      - "443:443"
    expose:
      - "80"
    volumes:
      - ./compose/nginx/nginx.conf:/etc/nginx/conf.d/nginx.conf  # mount the Nginx config file
      - ./compose/nginx/ssl:/usr/share/nginx/ssl  # mount the SSL certificate directory
      - ./compose/nginx/log:/var/log/nginx  # mount the logs
      - static_vol:/usr/share/nginx/html/static  # mount the static files
      - media_vol:/usr/share/nginx/html/media  # mount the user-uploaded media
    networks:
      - nginx_network
    depends_on:
      - web
    restart: always
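You can check the network layout on paper. Two containers can reach each other by service name only if they share at least one network. The sketch below copies the service-to-network mapping from the compose file into a dict; it does not parse YAML. It shows, for example, that nginx can reach web but not db. `can_reach` is a hypothetical helper.

```python
# Service -> networks, copied by hand from the docker-compose.yml above.
SERVICE_NETWORKS = {
    "redis": {"redis_network"},
    "db": {"db_network"},
    "web": {"nginx_network", "db_network", "redis_network"},
    "nginx": {"nginx_network"},
}


def can_reach(a: str, b: str, services=SERVICE_NETWORKS) -> bool:
    """True if services a and b share at least one user-defined network."""
    return bool(services[a] & services[b])
```

This is the point of splitting the networks. Only web sits on all three, so a compromised or misconfigured nginx container has no direct route to MySQL or Redis.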

Step 2: Write the files needed for the web (Django + uWSGI) image and container

The Dockerfile used to build the web (Django + uWSGI) image is shown below:

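# Python 3.9 base image
FROM python:3.9

# Install netcat (start.sh uses nc to wait for MySQL).
# On newer Debian-based images "netcat" is only a virtual package, so install a concrete one.
RUN apt-get update && apt-get install -y netcat-openbsd

# Image author (the MAINTAINER instruction is deprecated; use a label)
LABEL maintainer="WW"

# Python environment variables
ENV PYTHONDONTWRITEBYTECODE=1
ENV PYTHONUNBUFFERED=1

# Optional: point pip at a mirror in China
COPY pip.conf /root/.pip/pip.conf

# Create the myproject folder in the container
ENV APP_HOME=/var/www/html/myproject
RUN mkdir -p $APP_HOME
WORKDIR $APP_HOME

# Copy the current directory (the build context) into the working directory
ADD . $APP_HOME

# Upgrade pip
RUN /usr/local/bin/python -m pip install --upgrade pip

# Install the project dependencies
RUN pip install -r requirements.txt

# Remove Windows carriage returns (\r) from start.sh
RUN sed -i 's/\r//' ./start.sh

# Make start.sh executable
RUN chmod +x ./start.sh

# Run the migrations and start the service with uWSGI
ENTRYPOINT /bin/bash ./start.sh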
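The `sed` line exists because a start.sh saved on Windows ends each line with CRLF, and bash then treats the trailing `\r` as part of each command. You may prefer to fix the file on the host before building. A Python equivalent that converts CRLF to LF could look like this; `to_unix_newlines` is a hypothetical helper, not part of the original setup.

```python
from pathlib import Path


def to_unix_newlines(path: Path) -> bool:
    """Convert CRLF line endings to LF in place; return True if the file changed.

    For CRLF files this has the same effect as the Dockerfile's sed command,
    which removes the first carriage return on each line.
    """
    data = path.read_bytes()
    fixed = data.replace(b"\r\n", b"\n")
    if fixed != data:
        path.write_bytes(fixed)
        return True
    return False
```

Setting `core.autocrlf` or a `.gitattributes` rule such as `*.sh text eol=lf` in Git avoids the problem at the source.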

The requirements.txt for this Django project:

# django

django==3.2

# uwsgi

uwsgi==2.0.18

# mysql

mysqlclient==1.4.6

# redis

django-redis==4.12.1

redis==3.5.3

# for images

Pillow==8.2.0

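The start.sh script is shown below. Its key line is the uwsgi command, which starts the Django service using the uwsgi.ini configuration file.

#!/bin/bash
# From top to bottom, the script:
# 1. waits until the MySQL server accepts connections before migrating (nc is short for netcat)
# 2. collects static files into the project's static folder (STATIC_ROOT)
# 3. generates migration files (makemigrations)
# 4. applies the migrations to the database (migrate)
# 5. starts Django with uWSGI (uwsgi.ini sets daemonize, so this command returns immediately)
# 6. runs tail on /dev/null so the web container does not exit after the script finishes

while ! nc -z db 3306 ; do
    echo "Waiting for the MySQL Server"
    sleep 3
done

python manage.py collectstatic --noinput &&
python manage.py makemigrations &&
python manage.py migrate &&
uwsgi --ini /var/www/html/myproject/uwsgi.ini &&
tail -f /dev/null

exec "$@"  # not reached while tail is running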
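The `while ! nc -z db 3306` loop only checks that port 3306 accepts TCP connections. You might prefer to keep this logic in Python, for example in a hypothetical `wait_for_db.py` called from start.sh. A minimal standard-library equivalent could look like this. Like `nc -z`, it does not prove that MySQL has finished initialising.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 3.0) -> bool:
    """Poll until a TCP connection to host:port succeeds.

    Returns True when the port accepts a connection, False once `timeout`
    seconds have passed without success.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unreachable, DNS failure or timeout
            if time.monotonic() >= deadline:
                return False
            print(f"Waiting for {host}:{port}")
            time.sleep(interval)
```

Inside the web container, `wait_for_port("db", 3306)` returns True once MySQL is listening, or False after 60 seconds. Unlike the shell loop, it gives up eventually. start.sh can then exit with an error instead of hanging forever.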

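The uwsgi.ini configuration file:

[uwsgi]
project=myproject
uid=www-data
gid=www-data
base=/var/www/html
chdir=%(base)/%(project)
module=%(project).wsgi:application
master=True
processes=2
socket=0.0.0.0:8000
# chown-socket and chmod-socket only affect unix socket files, not the TCP socket above
chown-socket=%(uid):www-data
chmod-socket=664
vacuum=True
max-requests=5000
pidfile=/tmp/%(project)-master.pid
daemonize=/tmp/%(project)-uwsgi.log
# Request timeout in seconds: a worker stuck on a request longer than this is killed and respawned
harakiri = 60
post-buffering = 8192
buffer-size= 65535
# Log a message when a request is killed by harakiri
harakiri-verbose = true
# Enable memory usage reports
memory-report = true
# Maximum time (seconds) to wait for workers to finish their current requests during a graceful reload
reload-mercy = 10
# Recycle a worker once its address space (virtual memory) exceeds N MB
reload-on-as= 1024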
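The `%(name)` entries in uwsgi.ini are uWSGI placeholders that refer to other options in the file. So `chdir` resolves to /var/www/html/myproject and `module` to myproject.wsgi:application. The sketch below models only this substitution, to make the resolution explicit. It simplifies in three ways: it ignores uWSGI's magic variables (such as `%d` and `%n`), other config syntax, and the order in which options are defined. `expand` is a hypothetical helper.

```python
import re

_PLACEHOLDER = re.compile(r"%\((\w[\w-]*)\)")


def expand(options: dict) -> dict:
    """Resolve %(name) placeholders in a flat dict of uwsgi.ini options."""

    def resolve(value: str, seen: tuple) -> str:
        def substitute(match):
            key = match.group(1)
            if key in seen:
                raise ValueError(f"circular placeholder: {key}")
            return resolve(options[key], seen + (key,))  # KeyError if undefined

        return _PLACEHOLDER.sub(substitute, value)

    return {key: resolve(value, (key,)) for key, value in options.items()}
```

For example, expanding `{"project": "myproject", "base": "/var/www/html", "chdir": "%(base)/%(project)"}` gives `chdir` = /var/www/html/myproject. This is the directory the Dockerfile's `APP_HOME` must point to.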

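Step 3: Write the files needed for the Nginx image and container

The Dockerfile used to build the Nginx image:

# Nginx image: compose/nginx/Dockerfile
FROM nginx:latest

# Remove the default config and create folders for static files, media and SSL certificates
RUN rm /etc/nginx/conf.d/default.conf \
    && mkdir -p /usr/share/nginx/html/static \
    && mkdir -p /usr/share/nginx/html/media \
    && mkdir -p /usr/share/nginx/ssl \
    && mkdir -p /var/log/nginx

# Set the owner and group of the media folder to the Linux default www-data and make it
# readable and executable; otherwise user-uploaded images are not displayed correctly.
RUN chown -R www-data:www-data /usr/share/nginx/html/media \
    && chmod -R 775 /usr/share/nginx/html/media

# Add the config file
ADD ./nginx.conf /etc/nginx/conf.d/

# Run Nginx in the foreground (daemon off) so the container keeps running
CMD ["nginx", "-g", "daemon off;"]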

The Nginx configuration file, nginx.conf:

# Nginx configuration file
# compose/nginx/nginx.conf

upstream django {
    ip_hash;
    server web:8000;  # the docker-compose web service and its uWSGI port
}

# HTTP on port 80
server {
    listen 80;  # listen on port 80
    # As the only server block on port 80 this is the default server, so requests using
    # the server's IP or domain are also handled here; you may list your domain instead.
    server_name 127.0.0.1;
    charset utf-8;
    client_max_body_size 10M;  # limit the size of user uploads

    access_log /var/log/nginx/access.log main;
    error_log /var/log/nginx/error.log warn;

    location /static {
        alias /usr/share/nginx/html/static;  # static files
    }

    location /media {
        alias /usr/share/nginx/html/media;  # media: files uploaded by users
    }

    location / {
        include /etc/nginx/uwsgi_params;
        uwsgi_pass django;
        uwsgi_read_timeout 600;
        uwsgi_connect_timeout 600;
        uwsgi_send_timeout 600;
        # The proxy_* directives below only take effect with proxy_pass; with uwsgi_pass they do nothing
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_redirect off;
        proxy_set_header X-Real-IP $remote_addr;
        # proxy_pass http://django;  # we talk to uWSGI with the uwsgi protocol, not HTTP, so proxy_pass is not used
    }
}

Step 4: Write the configuration files for the db (MySQL) container

For the MySQL container we use the official image as-is, but we need to add a configuration file, my.cnf:

# compose/mysql/conf/my.cnf
[mysqld]
user=mysql
default-storage-engine=INNODB
character-set-server=utf8
secure-file-priv=NULL # new line for MySQL 8
default-authentication-plugin=mysql_native_password # new line for MySQL 8 (the option was removed in MySQL 8.4, so keep the image on 8.0)
port = 3306 # keep consistent with the port mapping in docker-compose
#bind-address= localhost # must stay commented out: MySQL and Django run in different containers with different IPs
basedir = /usr
datadir = /var/lib/mysql
tmpdir = /tmp
pid-file = /var/run/mysqld/mysqld.pid
socket = /var/run/mysqld/mysqld.sock
skip-name-resolve # disables DNS lookups of client host names; recommended when clients connect remotely

[client]
port = 3306
default-character-set=utf8

[mysql]
no-auto-rehash
default-character-set=utf8

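We also need a script for MySQL to run when it initialises. The user name and password here must match the MySQL environment variables (.env) used by docker-compose.yml. The official image runs the scripts in /docker-entrypoint-initdb.d only on the first start, while the data volume is still empty.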

# compose/mysql/init/init.sql

Alter user 'dbuser'@'%' IDENTIFIED WITH mysql_native_password BY 'password';

GRANT ALL PRIVILEGES ON myproject.* TO 'dbuser'@'%';

FLUSH PRIVILEGES;

The contents of the .env file:

MYSQL_ROOT_PASSWORD=123456

MYSQL_USER=dbuser

MYSQL_DATABASE=myproject

MYSQL_PASSWORD=password
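The values in .env, init.sql and settings.py must agree, so a quick consistency check can catch typos before the first `docker-compose up`. This sketch parses simple KEY=VALUE lines, without quoting or `export` support, and compares them with Django's `DATABASES['default']`. `parse_env` and `mismatches` are hypothetical helpers.

```python
def parse_env(text: str) -> dict:
    """Minimal KEY=VALUE parser for a .env file like the one above."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        env[key.strip()] = value.strip()
    return env


def mismatches(env: dict, db: dict) -> list:
    """Compare the MySQL image variables with a DATABASES['default'] dict."""
    pairs = {"MYSQL_DATABASE": "NAME", "MYSQL_USER": "USER", "MYSQL_PASSWORD": "PASSWORD"}
    return [
        f"{var}={env.get(var)!r} but DATABASES['default']['{key}']={db.get(key)!r}"
        for var, key in pairs.items()
        if env.get(var) != db.get(key)
    ]
```

An empty list means the .env file and settings.py agree. Each entry otherwise names the variable that differs.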

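Step 5: Write the configuration file for the redis container

For the redis container we use the official image as-is, but we need to add a redis.conf file. The defaults are fine in most cases; we made only the following core changes:

# compose/redis/redis.conf
# Download URL for the Redis 5 configuration file:
# https://raw.githubusercontent.com/antirez/redis/5.0/redis.conf

# The default "bind 127.0.0.1" line must be commented out, as below, so that other machines and containers can connect
# bind 127.0.0.1

# requirepass is uncommented to set a Redis login password; Django's settings.py uses this password
requirepass yourpassword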
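The helper below checks a redis.conf text for the two changes above. It reports an active `bind` line that lists only loopback addresses, and a missing `requirepass`. It ignores every other directive. Newer redis.conf files put a leading `-` before `::1` to mark that address as optional; the helper strips it before comparing. `check_redis_conf` is a hypothetical helper.

```python
LOOPBACK = {"127.0.0.1", "::1"}


def check_redis_conf(text: str) -> list:
    """Return warnings about bind/requirepass in a redis.conf text."""
    problems = []
    has_password = False
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # commented-out directives are not active
        name, _, value = line.partition(" ")
        addresses = value.split()
        # "-::1" marks an optional address in newer configs; strip the "-".
        if name.lower() == "bind" and addresses and all(
            addr.lstrip("-") in LOOPBACK for addr in addresses
        ):
            problems.append(f"'{line}' listens on loopback only; other containers cannot connect")
        if name.lower() == "requirepass" and value.strip():
            has_password = True
    if not has_password:
        problems.append("requirepass is not set")
    return problems
```

Running it on the mounted file before `docker-compose up` catches the most common cause of a "Connection refused" error from django-redis.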

第六步:修改Django项目settings.py

在你准备好docker-compose.yml并编排好各容器的Dockerfile及配置文件后,请先不要急于使用Docker-compose命令构建镜像和启动容器。这时还有一件非常重要的事情要做,那就是修改Django的settings.py, 提供mysql和redis服务的配置信息。最重要的几项配置如下所示:

- settings.conf

# 生产环境设置 Debug = False

Debug = False

# 设置ALLOWED HOSTS

ALLOWED_HOSTS = ['your_server_IP', 'your_domain_name']

# Set STATIC_ROOT and STATIC_URL

STATIC_ROOT = os.path.join(BASE_DIR, 'static')

STATIC_URL = "/static/"

# Set MEDIA_ROOT and MEDIA_URL

MEDIA_ROOT = os.path.join(BASE_DIR, 'media')

MEDIA_URL = "/media/"

# Database settings. The username and password must match the MySQL environment variables in docker-compose.yml

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.mysql',

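'NAME': 'myproject', # database name

'USER':'dbuser', # the username you configured (a non-root user)

'PASSWORD':'password', # replace with your own password

'HOST': 'db', # note: this is the db service name, which Docker resolves to the container's IP

'PORT':'3306', # port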

}

}

# Redis cache settings. The password is the one set in redis.conf

CACHES = {

"default": {

"BACKEND": "django_redis.cache.RedisCache",

"LOCATION": "redis://redis:6379/1", # the redis service name is used directly as the host

"OPTIONS": {

"CLIENT_CLASS": "django_redis.client.DefaultClient",

"PASSWORD": "yourpassword", # replace with your own password

},

}

}
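Hard-coding the credentials in settings.py means changing them in both settings.py and .env. A common alternative is to read them from environment variables. This sketch is not the article's own setup. It assumes docker-compose passes the .env values into the web container (for example through `env_file`). `DB_HOST`, `REDIS_HOST` and `REDIS_PASSWORD` are hypothetical variable names, and the defaults mirror the values used above.

```python
import os


def build_settings(env):
    """Build Django's DATABASES and CACHES dicts from an environment mapping."""
    databases = {
        "default": {
            "ENGINE": "django.db.backends.mysql",
            "NAME": env.get("MYSQL_DATABASE", "myproject"),
            "USER": env.get("MYSQL_USER", "dbuser"),
            "PASSWORD": env.get("MYSQL_PASSWORD", ""),
            # "db" is the docker-compose service name, resolved by Docker's DNS
            "HOST": env.get("DB_HOST", "db"),
            "PORT": "3306",
        }
    }
    caches = {
        "default": {
            "BACKEND": "django_redis.cache.RedisCache",
            "LOCATION": "redis://{}:6379/1".format(env.get("REDIS_HOST", "redis")),
            "OPTIONS": {
                "CLIENT_CLASS": "django_redis.client.DefaultClient",
                "PASSWORD": env.get("REDIS_PASSWORD", ""),
            },
        }
    }
    return databases, caches


# In settings.py this would replace the hard-coded dictionaries:
DATABASES, CACHES = build_settings(os.environ)
```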

- pip.conf

[global]

index-url = https://pypi.tuna.tsinghua.edu.cn/simple

[install]

trusted-host=pypi.tuna.tsinghua.edu.cn
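In pip.conf, `trusted-host` should name the same host as `index-url`. For an HTTPS mirror with a valid certificate pip does not strictly need `trusted-host`; it matters mainly for plain-HTTP mirrors or certificate problems. The check below is a minimal sketch using only the standard library. `check_pip_conf` is a hypothetical helper.

```python
import configparser
from urllib.parse import urlparse


def check_pip_conf(text):
    """Return True if [install] trusted-host lists the host used by [global] index-url."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    index_host = urlparse(parser.get("global", "index-url")).hostname
    # trusted-host may list several hosts separated by whitespace
    trusted = parser.get("install", "trusted-host", fallback="").split()
    return index_host in trusted
```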

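Step 7: Build the images and start the services with docker-compose

Now we can use docker-compose to build the images and start the group of containers.

# In the folder containing docker-compose.yml, build the images with:

sudo docker-compose build

# Start the services

sudo docker-compose up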

sudo docker-compose up

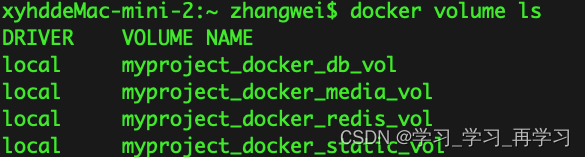

- docker images

- docker volume ls

Step 8: Troubleshooting

Beginners deploying with Docker or docker-compose run into all kinds of errors. This section shows how to track them down.

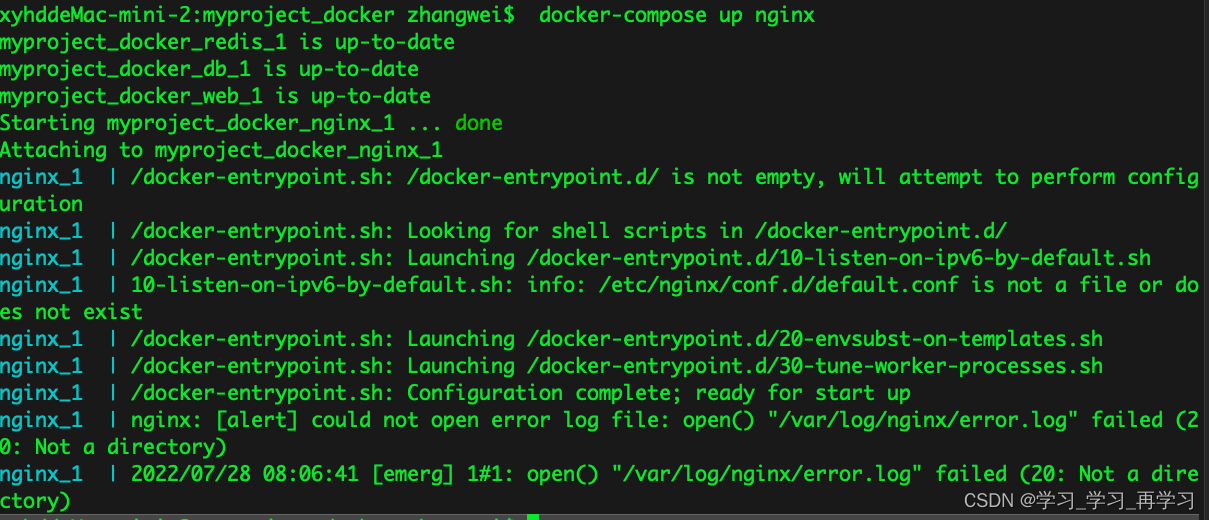

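Troubleshooting the Nginx container

If the containers are running but the site does not open, the most useful thing to check is Nginx's error log, error.log. Because we mounted the Nginx log directory from the container, you can read these logs on the host in compose/nginx/log.

# Go to the nginx log directory (it holds access.log and error.log)

cd compose/nginx/log

# View the log file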

sudo cat error.log

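In the vast majority of cases where the site does not open and the Nginx log shows nginx: connect() failed (111: Connection refused) while connecting to upstream, or Nginx returns 502 Bad Gateway, Nginx itself is not the cause. The problem is in the Django application inside the web container, or in the uwsgi configuration file.

Before digging into the web container, first check that the way Nginx forwards requests (proxy_pass or uwsgi_pass) and the port it forwards to match how uwsgi listens and the port it listens on.

uWSGI and Nginx can communicate in three ways: unix socket, TCP socket and HTTP. If Nginx forwards requests with proxy_pass, uwsgi must speak HTTP. If Nginx forwards requests with uwsgi_pass, uwsgi should be configured with socket.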
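The pairing rules above can be written down as a small check. This is a hypothetical helper, not part of Nginx or uWSGI:

- `directive` is the Nginx directive.
- `target` is the address it forwards to. The `web:8000` address used below is an assumption.
- `uwsgi_options` holds the relevant uwsgi.ini keys.

It compares only the part after the last colon (the port, or the path of a unix socket). Treat it as a sanity check, not a validator.

```python
def check_transport(directive, target, uwsgi_options):
    """Return a list of mismatches between Nginx forwarding and uWSGI listening."""
    problems = []
    if directive == "proxy_pass":
        # Nginx sends plain HTTP, so uWSGI needs an HTTP listener
        key = next((k for k in ("http", "http-socket") if k in uwsgi_options), None)
        if key is None:
            problems.append("proxy_pass sends HTTP, but uwsgi.ini has no http/http-socket option")
    elif directive == "uwsgi_pass":
        # Nginx sends the binary uwsgi protocol, so uWSGI should use socket
        key = "socket" if "socket" in uwsgi_options else None
        if key is None:
            problems.append("uwsgi_pass sends the uwsgi protocol, but uwsgi.ini has no socket option")
    else:
        raise ValueError(f"unknown Nginx directive: {directive}")
    if key is not None:
        nginx_port = target.rsplit(":", 1)[-1]
        uwsgi_port = uwsgi_options[key].rsplit(":", 1)[-1]
        if nginx_port != uwsgi_port:
            problems.append(f"port mismatch: Nginx -> {nginx_port}, uWSGI listens on {uwsgi_port}")
    return problems
```

For example, `check_transport("uwsgi_pass", "web:8000", {"socket": ":8000"})` returns an empty list, while the same call with `"socket": ":8001"` reports a port mismatch.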

For more on configuring Nginx and uWSGI, see the author's blog:

https://pythondjango.cn/python/tools/6-uwsgi-configuration/

https://pythondjango.cn/python/tools/5-nginx-configuration/

- Errors

Note: add RUN mkdir -p /var/log/nginx to the nginx Dockerfile.

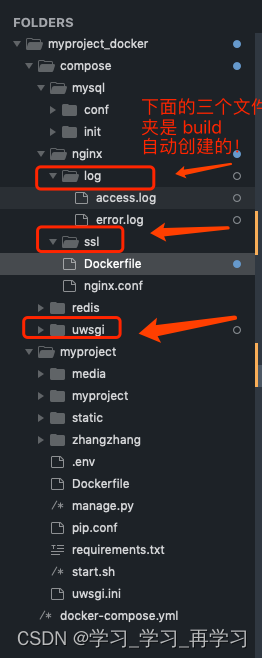

Note: the following folders do not exist by default; they are created when you run docker compose build:

./compose/nginx/{log,ssl}

./compose/uwsgi

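Troubleshooting the Web container

The web container runs Django + uWSGI and is the container most likely to fail. If the Nginx configuration is fine, go into the web container and check whether the startup script reports errors, then inspect the uwsgi log. The uwsgi log is very useful because it records any errors or exceptions raised while Django runs. When such an error occurs, the uwsgi process does not exit, but it can no longer work properly. It then cannot handle the dynamic requests Nginx forwards to it, so Nginx reports an error.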

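# View the web container's logs

$ docker-compose logs web

# Run the startup script inside the web container and check for errors

$ docker-compose exec web /bin/bash start.sh

# Or open a shell in the web container and run the python manage.py commands one at a time

$ docker-compose exec web /bin/bash

# Inside the web container, check whether uwsgi started correctly

$ ps aux | grep uwsgi

# Go to the directory holding the uwsgi log and check whether the Django project reported errors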

cd /tmp

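Another common problem is that the web container created by docker-compose exits immediately after running its script (exited with code 0), because a container stops as soon as its main process finishes. The fix is to add the following two lines to docker-compose.yml and to end the script with tail -f /dev/null so the container keeps running.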

stdin_open: true

tty: true

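Sometimes the web container reports that it cannot connect to the database. In that case, check that the database settings in settings.py are correct (for example, that HOST is db). Also check that the web and db containers can communicate over db_network (for example, go into the db container and check whether the tables have been created). During database migrations the web container may also fail with errors such as "if table exists or failed to open the referenced table 'users_user'" or "inconsistent migration history". To fix this, delete the migration files in the migrations directory (keep __init__.py), then go into the MySQL container and drop the django_migrations table.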
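Another possible cause of connection errors, not covered above, is that the web container runs its commands before MySQL accepts connections. A minimal sketch is to poll the db service's TCP port until it accepts a connection. It assumes the check runs inside the web container before `migrate`, for example from start.sh. `wait_for_port` is a hypothetical helper built on the standard `socket` module. An open port does not guarantee that MySQL has finished creating its users and databases, so treat this as a first check only.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=1.0):
    """Return True once a TCP connection to host:port succeeds, False after timeout seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # create_connection resolves names such as "db" through Docker's DNS
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:  # refused, unresolvable host, or connect timeout
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

Inside the web container, `wait_for_port("db", 3306, timeout=60)` would block until MySQL's port is reachable or a minute has passed.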

- Startup errors

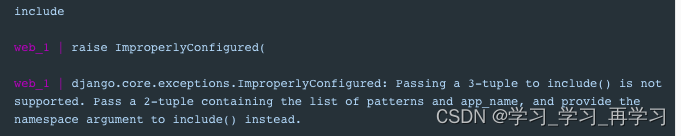

Problem 1 during deployment:

django.core.exceptions.ImproperlyConfigured: Passing a 3-tuple to include() is not supported. Pass a 2-tuple containing the list of patterns and app_name, and provide the namespace argument to include() instead.

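Solution:

Note: uwsgi.ini must not contain inline comments (comments after a setting on the same line)! The url patterns in urls.py also differ between Django versions. Since Django 2.0, include() no longer accepts a 3-tuple such as admin.site.urls, so pass admin.site.urls directly:

from django.conf.urls import include, url

from django.contrib import admin

urlpatterns = [

# url(r'^admin/', include(admin.site.urls)), # commented out

url(r'^admin/', admin.site.urls),

]

Changes to settings.py (Django 2.0 removed MIDDLEWARE_CLASSES and SessionAuthenticationMiddleware; use MIDDLEWARE instead):

# Commented out: MIDDLEWARE_CLASSES = (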

# 'django.contrib.sessions.middleware.SessionMiddleware',

# 'django.middleware.common.CommonMiddleware',

# 'django.middleware.csrf.CsrfViewMiddleware',

# 'django.contrib.auth.middleware.AuthenticationMiddleware',

# 'django.contrib.auth.middleware.SessionAuthenticationMiddleware',

# 'django.contrib.messages.middleware.MessageMiddleware',

# 'django.middleware.clickjacking.XFrameOptionsMiddleware',

# 'django.middleware.security.SecurityMiddleware',

# )

MIDDLEWARE = (

'django.contrib.sessions.middleware.SessionMiddleware',

'django.middleware.common.CommonMiddleware',

'django.middleware.csrf.CsrfViewMiddleware',

'django.contrib.auth.middleware.AuthenticationMiddleware',

'django.contrib.messages.middleware.MessageMiddleware',

'django.middleware.clickjacking.XFrameOptionsMiddleware',

'django.middleware.security.SecurityMiddleware',

)

- 400 error on /

--- nginx/log/access.log error

192.168.128.1 - - [29/Jul/2022:07:47:30 +0000] "GET /favicon.ico HTTP/1.1" 404 1998 "http://127.0.0.1:8088/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_14_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.0.0 Safari/537.36" "-"

--- uwsgi/myproject-uwsgi.log

{address space usage: 81342464 bytes/77MB} {rss usage: 36319232 bytes/34MB} [pid: 17|app: 0|req: 1/2] 192.168.128.1 () {52 vars in 918 bytes} [Fri Jul 29 15:47:30 2022] GET /favicon.ico => generated 1998 bytes in 223 msecs (HTTP/1.1 404) 5 headers in 159 bytes (1 switches on core 0)
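Both excerpts above describe the same request. The nginx access log and the uWSGI request log each record GET /favicon.ico with status 404 and 1998 bytes, which shows the request did reach Django through uWSGI. To pull those fields out of longer logs, a regex sketch like the one below can help. The patterns are assumptions matched to the lines shown here: the leading fields of the nginx access-log line, and uWSGI's default request-log line. A different nginx `log_format` or uWSGI `logformat` would need different patterns.

```python
import re

# Leading fields of the nginx access-log line shown above:
# client, two ident fields, [time], "METHOD path protocol", status, bytes
NGINX_ACCESS = re.compile(
    r'(?P<client>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-)'
)

# uWSGI request line: "GET /path => generated N bytes in M msecs (HTTP/1.1 404)"
UWSGI_REQUEST = re.compile(
    r'(?P<method>[A-Z]+) (?P<path>\S+) => generated (?P<bytes>\d+) bytes '
    r'in (?P<msecs>\d+) msecs \((?P<protocol>[^ ]+) (?P<status>\d{3})\)'
)


def parse(line, pattern):
    """Return the named fields of the first match in line, or None."""
    match = pattern.search(line)
    return match.groupdict() if match else None


def failed_requests(lines, pattern):
    """Yield parsed entries whose HTTP status is 400 or higher."""
    for line in lines:
        entry = parse(line, pattern)
        if entry and int(entry["status"]) >= 400:
            yield entry
```

For example, iterating `failed_requests(open("compose/nginx/log/access.log"), NGINX_ACCESS)` lists every 4xx/5xx request Nginx logged.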

In uwsgi.ini, comment out the settings related to request header size:

#harakiri=60

#post-buffering=8192

#buffer-size=65535

#harakiri-verbose=true

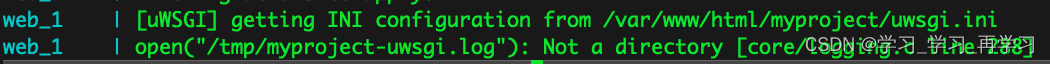

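web -> uwsgi service fails to start

uWSGI project did not start: /tmp/logs/uwsgi.log permission denied [core/logging.c line 28]

It is caused by the configuration below; the root cause is still under investigation.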

pidfile=/tmp/%(project)-master.pid

daemonize=/tmp/%(project)-uwsgi.log
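The cause of this permission error is not confirmed. One plausible explanation, offered only as an assumption: the log file's directory does not exist or is not writable by the user uWSGI runs as. Note that the error mentions /tmp/logs, while the daemonize line writes directly to /tmp. The hypothetical `check_log_target` helper below tests exactly those conditions with the standard library. Run it inside the web container as the same user uWSGI runs as.

```python
import os


def check_log_target(path):
    """Explain why a process running as the current user might fail to open path for writing."""
    directory = os.path.dirname(path) or "."
    if not os.path.isdir(directory):
        return f"directory {directory} does not exist"
    if os.path.exists(path):
        if not os.access(path, os.W_OK):
            return f"{path} exists but is not writable by uid {os.getuid()}"
    elif not os.access(directory, os.W_OK | os.X_OK):
        # Creating a new file needs write and search permission on the directory
        return f"cannot create files in {directory} as uid {os.getuid()}"
    return "ok"
```

For example, `check_log_target("/tmp/logs/uwsgi.log")` would report a missing /tmp/logs directory if that is the problem.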

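Troubleshooting the database (db) container

We also often need to go into the database container to check whether the tables have been created and to delete some data. Use the following commands: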

$ docker-compose exec db /bin/bash

# Log in

mysql -u username -p

# Select the database

USE dbname;

# List the tables

SHOW tables;

# Empty a table

DELETE FROM tablename;

# Drop a table, especially Django's django_migrations table

DROP TABLE tablename;
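The difference between the last two commands matters when resetting migrations. DELETE FROM empties a table but keeps it, while DROP TABLE removes the table itself. The sketch below demonstrates this without a running MySQL container, using Python's built-in `sqlite3` module as a stand-in. SQLite lists tables through `sqlite_master` rather than SHOW TABLES, and the `django_migrations` row inserted here is made-up sample data.

```python
import sqlite3

# In-memory SQLite database standing in for MySQL
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE django_migrations (id INTEGER PRIMARY KEY, app TEXT, name TEXT)")
conn.execute("INSERT INTO django_migrations (app, name) VALUES ('users', '0001_initial')")


def table_names(connection):
    """SQLite's equivalent of SHOW TABLES."""
    rows = connection.execute("SELECT name FROM sqlite_master WHERE type = 'table'")
    return sorted(name for (name,) in rows)


conn.execute("DELETE FROM django_migrations")  # removes the rows, keeps the table
rows_left = conn.execute("SELECT COUNT(*) FROM django_migrations").fetchone()[0]
tables_after_delete = table_names(conn)

conn.execute("DROP TABLE django_migrations")  # removes the table definition too
tables_after_drop = table_names(conn)
```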

Summary

Open in a browser: http://127.0.0.1:80

docker-compose logs web
