1. Deploy the node components

Run the following on each node:

cd /opt/src

tar xf kubernetes-v1.20.4-server-linux-amd64.tar.gz -C /opt/

mv /opt/kubernetes /opt/kubernetes-v1.20.4

ln -s /opt/kubernetes-v1.20.4/ /opt/kubernetes

mkdir /opt/kubernetes/server/bin/{cert,conf}

mkdir -p /opt/kubernetes/server/conf/

Remove the source tarball and the bundled Docker image archives

cd /opt/kubernetes

rm -rf kubernetes-src.tar.gz

cd server/bin

rm -f *.tar

rm -f *_tag

Symlink kubectl into a directory on the system PATH

ln -s /opt/kubernetes/server/bin/kubectl /usr/bin/kubectl

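Create the kubelet startup script

The --hostname-override value is the node's own hostname and differs on every node, so remember to change it.

Use absolute paths in this script; otherwise kubelet may look for kubelet-client-current.pem in the wrong location.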

cat >/opt/kubernetes/server/bin/kubelet.sh <<'EOF'

#!/bin/sh

./kubelet \

--v=2 \

--log-dir=/data/logs/kubernetes/kube-kubelet \

--hostname-override=lpc1-203.host.com \

--network-plugin=cni \

--kubeconfig=/opt/kubernetes/server/conf/kubelet.kubeconfig \

--bootstrap-kubeconfig=/opt/kubernetes/server/conf/bootstrap.kubeconfig \

--config=/opt/kubernetes/server/conf/kubelet-config.yml \

--cert-dir=/opt/kubernetes/server/bin/cert \

--pod-infra-container-image=harbor.zykj.com/public/pause:latest \

--root-dir=/data/kubelet

EOF

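Parameter notes:

--hostname-override: the node's display name; must be unique within the cluster

--network-plugin: enables CNI

--kubeconfig: a path that does not exist yet; the file is generated automatically and later used to connect to the apiserver

--bootstrap-kubeconfig: used on first start to request a certificate from the apiserver

--config: the configuration parameter file

--cert-dir: directory where the kubelet certificates are generated

--pod-infra-container-image: image of the container that manages the Pod network

WARN: the cluster DNS address (--cluster-dns, set as clusterDNS in the configuration file) must fall within the service cluster IP range configured on the apiserver, 10.5.0.0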
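Because --hostname-override differs on every node, it is easy to copy the script and forget to change it. The helper below is a minimal sketch of one way to rewrite that single value in place; the function name `set_hostname_override` is made up for illustration and is not part of the original procedure.

```shell
#!/bin/sh
# set_hostname_override SCRIPT NODE_NAME
# Rewrites the value of --hostname-override in SCRIPT to NODE_NAME.
# (Hypothetical helper, not part of Kubernetes.)
set_hostname_override() {
    script="$1"
    node="$2"
    tmp=$(mktemp) || return 1
    # Replace only the value after "=", leaving the trailing " \" intact.
    sed "s|\(--hostname-override=\)[^ ]*|\1${node}|" "$script" > "$tmp" \
        && cat "$tmp" > "$script"
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Possible use on a node, assuming `hostname -f` returns the name that
# should be registered in the cluster:
#   set_hostname_override /opt/kubernetes/server/bin/kubelet.sh "$(hostname -f)"
```

Writing the result back with `cat` rather than `mv` keeps the script's existing permissions and ownership.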

Create the directories and make the script executable

chmod +x /opt/kubernetes/server/bin/kubelet.sh && \

mkdir -p /data/logs/kubernetes/kube-kubelet && \

mkdir -p /data/kubelet && \

mkdir -p /opt/kubernetes/server/conf/

Copy the certificate files

cd /opt/kubernetes/server/bin/cert && \

scp lpc1-201:/opt/certs/k8s/ca*.pem .

Create the configuration parameter file

Remember to change the clusterDNS IP; it is the second IP in the service IP range.
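As a small illustration of the "second IP in the service range" rule, the sketch below derives that address from a service CIDR. It is a simplification: it assumes an IPv4 CIDR whose last octet can simply be increased by 2, which holds for a range such as 10.5.0.0/16. The function name is hypothetical.

```shell
#!/bin/sh
# cluster_dns_ip CIDR
# Prints the network address of CIDR with its last octet increased by 2.
# Assumption: the last octet is small enough that adding 2 does not overflow.
cluster_dns_ip() {
    cidr="$1"
    network="${cidr%/*}"      # drop the prefix length, e.g. 10.5.0.0
    prefix="${network%.*}"    # first three octets, e.g. 10.5.0
    last="${network##*.}"     # last octet, e.g. 0
    echo "${prefix}.$((last + 2))"
}

cluster_dns_ip 10.5.0.0/16
```

For the range used in this article the result is 10.5.0.2, the value written into clusterDNS.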

cat > /opt/kubernetes/server/conf/kubelet-config.yml << EOF

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

cgroupDriver: systemd

clusterDNS:

- 10.5.0.2

clusterDomain: cluster.local

failSwapOn: false

authentication:

anonymous:

enabled: false

webhook:

cacheTTL: 2m0s

enabled: true

x509:

clientCAFile: /opt/kubernetes/server/bin/cert/ca.pem

authorization:

mode: Webhook

webhook:

cacheAuthorizedTTL: 5m0s

cacheUnauthorizedTTL: 30s

evictionHard:

imagefs.available: 15%

memory.available: 100Mi

nodefs.available: 10%

nodefs.inodesFree: 5%

maxOpenFiles: 1000000

maxPods: 110

EOF

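Generate the bootstrap kubeconfig that kubelet uses when it first joins the cluster

The --server value is the apiserver address.

The cluster entry (named kubernetes in the commands below) is created with the CA certificate, and the apiserver address is the VIP 192.168.1.254.

The token must be identical to the one generated earlier in /opt/kubernetes/cfg/token.csv.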

token=19fe9fedce6d052ccb6b2406cd421746

cd /opt/kubernetes/server/conf/ && \

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \

--embed-certs=true \

--server=https://192.168.1.254:7443 \

--kubeconfig=bootstrap.kubeconfig && \

kubectl config set-credentials "kubelet-bootstrap" \

--token="19fe9fedce6d052ccb6b2406cd421746" \

--kubeconfig=bootstrap.kubeconfig && \

kubectl config set-context default \

--cluster=kubernetes \

--user="kubelet-bootstrap" \

--kubeconfig=bootstrap.kubeconfig && \

kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

scp root@lpc1-203:/opt/kubernetes/server/conf/bootstrap.kubeconfig /opt/kubernetes/server/conf/
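Since the token in bootstrap.kubeconfig has to equal the one in token.csv, a quick comparison can catch a mismatch before kubelet is started. This is a minimal sketch, assuming token.csv has the usual layout with the token as the first comma-separated field and that the kubeconfig contains a single `token:` line; `token_matches` is a made-up name.

```shell
#!/bin/sh
# token_matches TOKEN_CSV KUBECONFIG
# Succeeds when the first field of the first line of TOKEN_CSV equals the
# value on the first "token:" line of KUBECONFIG. (Hypothetical helper.)
token_matches() {
    csv_token=$(cut -d, -f1 "$1" | head -n 1)
    cfg_token=$(awk '$1 == "token:" { print $2; exit }' "$2")
    [ -n "$csv_token" ] && [ "$csv_token" = "$cfg_token" ]
}

# Possible use, with the paths from this article:
#   token_matches /opt/kubernetes/cfg/token.csv \
#       /opt/kubernetes/server/conf/bootstrap.kubeconfig \
#       && echo "token OK" || echo "token MISMATCH"
```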

Install supervisor

yum install supervisor -y && \

systemctl start supervisord && \

systemctl enable supervisord

Create the supervisor configuration

cat >/etc/supervisord.d/kube-kubelet.ini<<EOF

[program:kube-kubelet-1-203]

command=sh /opt/kubernetes/server/bin/kubelet.sh

numprocs=1 ; number of processes to start (def 1)

directory=/opt/kubernetes/server/bin

autostart=true ; start automatically (default: true)

autorestart=true ; restart automatically (default: true)

startsecs=30 ; seconds the process must stay up to count as started (def. 1)

startretries=3 ; number of start retries (default 3)

exitcodes=0,2 ; expected exit codes (default 0,2)

stopsignal=QUIT ; stop signal (default TERM)

stopwaitsecs=10 ; seconds to wait after the stop signal (default 10)

user=root ; user to run as

redirect_stderr=true ; redirect stderr to stdout (def false)

stdout_logfile=/data/logs/kubernetes/kube-kubelet/kubelet.stdout.log

stdout_logfile_maxbytes=64MB ; log file size (default 50MB)

stdout_logfile_backups=4 ; number of rotated log files (default 10)

stdout_capture_maxbytes=1MB ; size of the capture pipe (default 0)

;the child process spawns its own children; the two settings below avoid leaving orphan processes

killasgroup=true

stopasgroup=true

EOF

supervisorctl update && \

supervisorctl status

Approve the kubelet certificate requests and join the nodes to the cluster

On the master node:

[root@lpc1-201 cert]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-KFNDhyGfgioMbKB6nawLn_khEGkpxoEDpo3WMupvQZU 87s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-QQsNSGSXwSGolcMAjXASwh68JjuVQ5of9iYa4twmnG8 87s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-YktXGnnv8AUmHOulzF642BRAM62zouzKE10rmna0VR8 87s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-cmtUkLd1VkDne8beHejVG-cXPThjdGu1WwrtqDTqAJ8 87s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-o7epcKzW3B_veq-fYNIEedS-AN7X-hD-YNoGevemjdo 3m33s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-qgS20nmrDPbm_0yXGAS2yFD9mZbxcKmjIk26jL9Ca2I 87s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-uz49Z1iaw1Te77GJLOE_tY_uxFQiHcJoe7mQJvfzQ7U 87s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

[root@lpc1-201 cert]# kubectl certificate approve node-csr-KFNDhyGfgioMbKB6nawLn_khEGkpxoEDpo3WMupvQZU

certificatesigningrequest.certificates.k8s.io/node-csr-KFNDhyGfgioMbKB6nawLn_khEGkpxoEDpo3WMupvQZU approved
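The listing above has seven pending requests, and approving them one by one is tedious. The sketch below filters the names of pending requests out of `kubectl get csr` output so they can be handed to `kubectl certificate approve`. It assumes the CONDITION column is the last column, as in the listing above; `pending_csrs` is a hypothetical helper name. Only approve in bulk when you recognise every requestor.

```shell
#!/bin/sh
# pending_csrs
# Reads `kubectl get csr` output on stdin and prints the NAME of every
# request whose last column is exactly "Pending". (Hypothetical helper.)
pending_csrs() {
    awk '$NF == "Pending" { print $1 }'
}

# Possible use on the master node (xargs -r skips the call when nothing
# is pending; -r is a GNU xargs option):
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```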

# List the nodes (they show NotReady because the network plugin is not deployed yet; ignore this for now)

kubectl get node

Restart all components:

supervisorctl restart all

2. Deploy kube-proxy

Create the script that loads the IPVS modules at boot

cat >/etc/ipvs.sh <<'EOF'

#!/bin/bash

ipvs_mods_dir="/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"

for i in $(ls $ipvs_mods_dir|grep -o "^[^.]*")

do

/sbin/modinfo -F filename $i &>/dev/null

if [ $? -eq 0 ];then

/sbin/modprobe $i

fi

done

EOF

Run the script to load the IPVS modules

sh /etc/ipvs.sh

Verify the result

lsmod |grep ip_vs

ip_vs_wrr 12697 0

ip_vs_wlc 12519 0

...... (remaining output omitted)
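Scanning the `lsmod` output by eye is error-prone, so a small check can report which expected modules are absent. This is a sketch under the assumption that the module name is the first column of each `lsmod` line; the helper name and the module list in the usage line are illustrative, not prescribed by the original article.

```shell
#!/bin/sh
# missing_modules MODULE...
# Reads `lsmod` output on stdin and prints each MODULE that is not loaded.
# (Hypothetical helper.)
missing_modules() {
    loaded=$(awk '{ print $1 }')
    for mod in "$@"; do
        printf '%s\n' "$loaded" | grep -qxF -- "$mod" || printf '%s\n' "$mod"
    done
}

# Possible use: ip_vs_nq matches the nq scheduler chosen for kube-proxy below.
#   lsmod | missing_modules ip_vs ip_vs_nq
```

An empty result means every listed module is present.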

Create the kube-proxy startup script

cat >/opt/kubernetes/server/bin/kube-proxy.sh <<'EOF'

#!/bin/sh

./kube-proxy \

--v=2 \

--proxy-mode=ipvs \

--ipvs-scheduler=nq \

--cluster-cidr=172.7.0.0/16 \

--config=/opt/kubernetes/server/conf/kube-proxy-config.yml

EOF

Make it executable

chmod +x /opt/kubernetes/server/bin/kube-proxy.sh

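Create the configuration parameter file

clusterCIDR is the Pod network range.

hostnameOverride must be the hostname of the node this file is deployed on (the same value as kubelet's --hostname-override); double-check it.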

cat > /opt/kubernetes/server/conf/kube-proxy-config.yml << EOF

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

metricsBindAddress: 0.0.0.0:10249

clientConnection:

kubeconfig: /opt/kubernetes/server/conf/kube-proxy.kubeconfig

hostnameOverride: lpc1-203.host.com

clusterCIDR: 172.7.0.0/16

EOF

Generate the kube-proxy.kubeconfig file

On the master node:

Generate the kube-proxy certificate.

Create the certificate signing request file:

cd /opt/certs/k8s

cat >/opt/certs/k8s/kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [

"127.0.0.1",

"192.168.1.254",

"192.168.1.201",

"192.168.1.202",

"192.168.1.203",

"192.168.1.204",

"192.168.1.205",

"192.168.1.206",

"192.168.1.207",

"192.168.1.208",

"192.168.1.209",

"192.168.1.210",

"192.168.1.211",

"192.168.1.212",

"192.168.1.213",

"192.168.1.214",

"192.168.1.215",

"192.168.1.216"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "hunan",

"L": "changsha",

"O": "k8s",

"OU": "System"

}

]

}

EOF

Generate the certificate:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssl-json -bare kube-proxy

ll | grep kube-proxy

-rw-r--r-- 1 root root 1001 Aug 14 09:40 kube-proxy.csr

-rw-r--r-- 1 root root 223 Aug 14 09:40 kube-proxy-csr.json

-rw------- 1 root root 1675 Aug 14 09:40 kube-proxy-key.pem

-rw-r--r-- 1 root root 1367 Aug 14 09:40 kube-proxy.pem

Copy the certificate files

cd /opt/kubernetes/server/bin/cert && \

scp lpc1-201:/opt/certs/k8s/kube-proxy*.pem .

Generate the kubeconfig file:

Remember to set --server to the apiserver address.

The cluster entry is created with the CA certificate, and the apiserver address is the VIP 192.168.1.254.

cd /opt/kubernetes/server/conf/ && \

kubectl config set-cluster kubernetes \

--certificate-authority=/opt/kubernetes/server/bin/cert/ca.pem \

--embed-certs=true \

--server=https://192.168.1.254:7443 \

--kubeconfig=kube-proxy.kubeconfig && \

kubectl config set-credentials kube-proxy \

--client-certificate=/opt/kubernetes/server/bin/cert/kube-proxy.pem \

--client-key=/opt/kubernetes/server/bin/cert/kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig && \

kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig && \

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Inspect the generated kube-proxy.kubeconfig

cat kube-proxy.kubeconfig

apiVersion: v1

clusters:

- cluster:XXXXXXXXXXXXX

server: https://192.168.1.254:7443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kube-proxy

name: default

current-context: default

kind: Config

preferences: {}

users:

- name: kube-proxy

user:

client-certificate-data:XXXXXXXXXXXXX

client-key-data:XXXXXXXXXXXXX

scp root@lpc1-203:/opt/kubernetes/server/conf/kube-proxy.kubeconfig /opt/kubernetes/server/conf/

Create the supervisor configuration for kube-proxy

cat >/etc/supervisord.d/kube-proxy.ini <<'EOF'

[program:kube-proxy-1-203]

command=sh /opt/kubernetes/server/bin/kube-proxy.sh

numprocs=1 ; number of processes to start (def 1)

directory=/opt/kubernetes/server/bin

autostart=true ; start automatically (default: true)

autorestart=true ; restart automatically (default: true)

startsecs=30 ; seconds the process must stay up to count as started (def. 1)

startretries=3 ; number of start retries (default 3)

exitcodes=0,2 ; expected exit codes (default 0,2)

stopsignal=QUIT ; stop signal (default TERM)

stopwaitsecs=10 ; seconds to wait after the stop signal (default 10)

user=root ; user to run as

redirect_stderr=true ; redirect stderr to stdout (def false)

stdout_logfile=/data/logs/kubernetes/kube-proxy/proxy.stdout.log

stdout_logfile_maxbytes=64MB ; log file size (default 50MB)

stdout_logfile_backups=4 ; number of rotated log files (default 10)

stdout_capture_maxbytes=1MB ; size of the capture pipe (default 0)

;the child process spawns its own children; the two settings below avoid leaving orphan processes

killasgroup=true

stopasgroup=true

EOF

Start the service and check it

mkdir -p /data/logs/kubernetes/kube-proxy && \

supervisorctl update && \

supervisorctl status

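3 Deploy the network component Calico

Calico is a pure layer-3 data center networking solution and is currently one of the mainstream network plugins for Kubernetes.

Official site: https://projectcalico.docs.tigera.io/about/about-calico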

docker pull calico/cni:v3.14.2 && \

docker pull calico/pod2daemon-flexvol:v3.14.2 && \

docker pull calico/node:v3.14.2 && \

docker pull calico/kube-controllers:v3.14.2 && \

docker tag calico/cni:v3.14.2 harbor.zykj.com/public/calico-cni:v3.14.2 && \

docker tag calico/pod2daemon-flexvol:v3.14.2 harbor.zykj.com/public/calico-pod2daemon-flexvol:v3.14.2 && \

docker tag calico/node:v3.14.2 harbor.zykj.com/public/calico-node:v3.14.2 && \

docker tag calico/kube-controllers:v3.14.2 harbor.zykj.com/public/calico-kube-controllers:v3.14.2 && \

docker push harbor.zykj.com/public/calico-cni:v3.14.2 && \

docker push harbor.zykj.com/public/calico-pod2daemon-flexvol:v3.14.2 && \

docker push harbor.zykj.com/public/calico-node:v3.14.2 && \

docker push harbor.zykj.com/public/calico-kube-controllers:v3.14.2

Set up a unified HTTP file service for the Kubernetes manifests; run this on both the primary and the backup nginx node

mkdir -p /data/k8s-yaml/calico/

cat > /etc/nginx/conf.d/k8s-yaml.zykj.com.conf <<'EOF'

server {

listen 80;

server_name k8s-yaml.zykj.com;

location / {

autoindex on;

default_type text/plain;

root /data/k8s-yaml;

}

}

EOF

Reload nginx

nginx -t

nginx -s reload

Add a k8s-yaml record to the zone file and roll the serial number forward by one:

# vi /var/named/zykj.com.zone

k8s-yaml A 192.168.1.218

systemctl restart named

Verify that the name resolves

dig -t A k8s-yaml.zykj.com +short
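Forgetting to roll the serial forward is a common reason a new record does not show up. The sketch below increments the serial in a zone file, assuming the serial is a plain integer on the first line carrying a `; serial` comment (the layout this article refers to). `bump_serial` is a hypothetical helper, and the zone should still be checked before named is restarted.

```shell
#!/bin/sh
# bump_serial ZONE_FILE
# Adds 1 to the first all-digit field on the first line that carries a
# "; serial" comment, leaving every other line untouched.
# (Hypothetical helper.)
bump_serial() {
    zone="$1"
    tmp=$(mktemp) || return 1
    awk '
        !bumped && /;[ \t]*[Ss]erial/ {
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^[0-9]+$/) {
                    sub($i, sprintf("%d", $i + 1))
                    bumped = 1
                    break
                }
            }
        }
        { print }
    ' "$zone" > "$tmp" && cat "$tmp" > "$zone"
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Possible use:
#   bump_serial /var/named/zykj.com.zone && systemctl restart named
```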

Deploy Calico:

kubectl create -f http://k8s-yaml.zykj.com/calico/calico.yaml && \

kubectl create -f http://k8s-yaml.zykj.com/calico/apiserver-to-kubelet-rbac.yaml

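Notes:

1. Watch the indentation when editing calico.yaml; whitespace mistakes are very painful to track down.

2. When preparing the environment, never delete the default localhost entries in /etc/hosts on the nodes. If they are missing, the Pods run but their health checks never pass.

Check Calico status: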

[root@lpc1-201 src]# kubectl get po,svc,deploy -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system pod/calico-kube-controllers-7584bff477-ltwwk 1/1 Running 0 30s 172.7.169.192 lpc1-206.host.com <none> <none>

kube-system pod/calico-node-84w58 0/1 Running 0 29s 192.168.1.205 lpc1-205.host.com <none> <none>

kube-system pod/calico-node-j8d9k 0/1 Running 0 29s 192.168.1.204 lpc1-204.host.com <none> <none>

kube-system pod/calico-node-j8dv2 0/1 Running 0 29s 192.168.1.203 lpc1-203.host.com <none> <none>

kube-system pod/calico-node-l5v4v 0/1 Running 0 29s 192.168.1.207 lpc1-207.host.com <none> <none>

kube-system pod/calico-node-p9ljc 0/1 Running 0 29s 192.168.1.209 lpc1-209.host.com <none> <none>

kube-system pod/calico-node-w556n 0/1 Running 0 29s 192.168.1.208 lpc1-208.host.com <none> <none>

kube-system pod/calico-node-w8v79 0/1 Running 0 29s 192.168.1.206 lpc1-206.host.com <none> <none>

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

default service/kubernetes ClusterIP 10.5.0.1 <none> 443/TCP 7h8m <none>

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

kube-system deployment.apps/calico-kube-controllers 1/1 1 1 30s calico-kube-controllers harbor.zykj.com/public/calico-kube-controllers:v3.14.2 k8s-app=calico-kube-controllers

Check node status:

[root@lpc1-201 src]# kubectl get node

NAME STATUS ROLES AGE VERSION

lpc1-203.host.com Ready <none> 6h25m v1.20.4

lpc1-204.host.com Ready <none> 6h25m v1.20.4

lpc1-205.host.com Ready <none> 6h25m v1.20.4

lpc1-206.host.com Ready <none> 6h25m v1.20.4

lpc1-207.host.com Ready <none> 6h25m v1.20.4

lpc1-208.host.com Ready <none> 6h26m v1.20.4

lpc1-209.host.com Ready <none> 6h29m v1.20.4

Authorize the apiserver to access kubelet

cat > /opt/src/apiserver-to-kubelet-rbac.yaml << 'EOF'

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:kube-apiserver-to-kubelet

rules:

- apiGroups:

- ""

resources:

- nodes/proxy

- nodes/stats

- nodes/log

- nodes/spec

- nodes/metrics

- pods/log

verbs:

- "*"

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: system:kube-apiserver

namespace: ""

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:kube-apiserver-to-kubelet

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: User

name: kubernetes

EOF

Apply it:

kubectl apply -f apiserver-to-kubelet-rbac.yaml

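4 Deploy CoreDNS

Starting with CoreDNS, services are delivered to Kubernetes declaratively, as manifests.

Get the CoreDNS Docker image

The following can be done on any node, but 1.218 is recommended, because the CoreDNS manifests are also created on the ops host 1.218 and then applied from a node.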

docker pull docker.io/coredns/coredns:1.6.5 && \

docker tag coredns/coredns:1.6.5 harbor.zykj.com/public/coredns:v1.6.5 && \

docker push harbor.zykj.com/public/coredns:v1.6.5

Create the CoreDNS manifests

The manifests below are adapted from the official ones.

mkdir -p /data/k8s-yaml/coredns

RBAC manifest

cat >/data/k8s-yaml/coredns/rbac.yaml <<EOF

apiVersion: v1

kind: ServiceAccount

metadata:

name: coredns

namespace: kube-system

labels:

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: Reconcile

name: system:coredns

rules:

- apiGroups:

- ""

resources:

- endpoints

- services

- pods

- namespaces

verbs:

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

addonmanager.kubernetes.io/mode: EnsureExists

name: system:coredns

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:coredns

subjects:

- kind: ServiceAccount

name: coredns

namespace: kube-system

EOF

ConfigMap manifest

cat >/data/k8s-yaml/coredns/cm.yaml <<EOF

apiVersion: v1

kind: ConfigMap

metadata:

name: coredns

namespace: kube-system

data:

Corefile: |

.:53 {

errors

log

health

ready

kubernetes cluster.local 10.5.0.0/16 # service cluster IP range

forward . 192.168.1.201 # upstream DNS server

cache 30

loop

reload

loadbalance

}

EOF

Deployment manifest

cat >/data/k8s-yaml/coredns/dp.yaml <<EOF

apiVersion: apps/v1

kind: Deployment

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: coredns

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "CoreDNS"

spec:

strategy:

type: RollingUpdate

rollingUpdate:

maxUnavailable: 1

selector:

matchLabels:

k8s-app: coredns

template:

metadata:

labels:

k8s-app: coredns

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'docker/default'

spec:

priorityClassName: system-cluster-critical

serviceAccountName: coredns

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

- key: "CriticalAddonsOnly"

operator: "Exists"

containers:

- name: coredns

image: harbor.zykj.com/public/coredns:v1.6.5

imagePullPolicy: IfNotPresent

resources:

limits:

memory: 170Mi

requests:

cpu: 100m

memory: 70Mi

args:

- -conf

- /etc/coredns/Corefile

volumeMounts:

- name: config-volume

mountPath: /etc/coredns

readOnly: true

ports:

- containerPort: 53

name: dns

protocol: UDP

- containerPort: 53

name: dns-tcp

protocol: TCP

- containerPort: 9153

name: metrics

protocol: TCP

livenessProbe:

httpGet:

path: /health

port: 8080

scheme: HTTP

initialDelaySeconds: 60

timeoutSeconds: 5

successThreshold: 1

failureThreshold: 5

securityContext:

allowPrivilegeEscalation: false

capabilities:

add:

- NET_BIND_SERVICE

drop:

- all

readOnlyRootFilesystem: true

dnsPolicy: Default

volumes:

- name: config-volume

configMap:

name: coredns

items:

- key: Corefile

path: Corefile

EOF

Service manifest

cat >/data/k8s-yaml/coredns/svc.yaml <<EOF

apiVersion: v1

kind: Service

metadata:

name: coredns

namespace: kube-system

labels:

k8s-app: coredns

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "CoreDNS"

spec:

selector:

k8s-app: coredns

clusterIP: 10.5.0.2

ports:

- name: dns

port: 53

protocol: UDP

- name: dns-tcp

port: 53

- name: metrics

port: 9153

protocol: TCP

EOF

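Note: 10.5.0.2 above must match the clusterDNS value in /opt/kubernetes/server/conf/kubelet-config.yml (kubelet's --cluster-dns setting); otherwise the setup will not work.

Create the resources and verify

This can be run from any node.

Create the resources: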

kubectl create -f http://k8s-yaml.zykj.com/coredns/rbac.yaml && \

kubectl create -f http://k8s-yaml.zykj.com/coredns/cm.yaml && \

kubectl create -f http://k8s-yaml.zykj.com/coredns/dp.yaml && \

kubectl create -f http://k8s-yaml.zykj.com/coredns/svc.yaml

Check what was created:

[root@lpc1-201 conf.d]# kubectl get all -n kube-system

NAME READY STATUS RESTARTS AGE

pod/calico-kube-controllers-7584bff477-ltwwk 1/1 Running 0 30m

pod/calico-node-84w58 1/1 Running 0 30m

pod/calico-node-j8d9k 1/1 Running 0 30m

pod/calico-node-j8dv2 1/1 Running 0 30m

pod/calico-node-l5v4v 1/1 Running 0 30m

pod/calico-node-p9ljc 1/1 Running 0 30m

pod/calico-node-w556n 1/1 Running 0 30m

pod/calico-node-w8v79 1/1 Running 0 30m

pod/coredns-f68d5648d-6bw8b 1/1 Running 0 10s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/coredns ClusterIP 10.5.0.2 <none> 53/UDP,53/TCP,9153/TCP 6s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/calico-node 7 7 7 7 7 kubernetes.io/os=linux 30m

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/calico-kube-controllers 1/1 1 1 30m

deployment.apps/coredns 1/1 1 1 10s

NAME DESIRED CURRENT READY AGE

replicaset.apps/calico-kube-controllers-7584bff477 1 1 1 30m

replicaset.apps/coredns-f68d5648d 1 1 1 10s

[root@lpc1-201 conf.d]# kubectl get svc -o wide -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

coredns ClusterIP 10.5.0.2 <none> 53/UDP,53/TCP,9153/TCP 2m7s k8s-app=coredns

dig -t A www.baidu.com @10.5.0.2 +short

Test resolution with dig

Note: the command below only works when run on a node; elsewhere the DNS server cannot be reached.

dig -t A harbor.zykj.com @10.5.0.2 +short

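CoreDNS can already resolve external domain names, because its configuration sets 192.168.1.201 as the upstream DNS; names it cannot resolve itself are forwarded upstream and looked up level by level.

However, CoreDNS is not here for external resolution; its job is to resolve service names to service IPs.

Create a Service to verify this

First check whether the kube-public namespace has any Pods

[root@lpc1-218 ~]# kubectl get pod -n kube-public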

No resources found.

All Pods were cleaned up earlier while debugging, so there are none.

If there are none, create one first:

kubectl create deployment nginx-dp --image=harbor.zykj.com/public/nginx:v1.17.9 -n kube-public

# kubectl get deployments -n kube-public

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-dp 1/1 1 1 35s

# kubectl get pod -n kube-public

NAME READY STATUS RESTARTS AGE

nginx-dp-568f8dc55-rxvx2 1/1 Running 0 56s

Create a Service for the Pod

kubectl expose deployment nginx-dp --port=80 -n kube-public

kubectl -n kube-public get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

nginx-dp ClusterIP 10.5.235.27 <none> 80/TCP 5s

Verify that the name resolves

dig -t A nginx-dp @10.5.0.2 +short

; <<>> DiG 9.11.4-P2-RedHat-9.11.4-26.P2.el7_9.13 <<>> -t A nginx-dp @10.5.0.2 +short

;; global options: +cmd

;; connection timed out; no servers could be reached

Nothing is returned. Can the name not be resolved?

The fully qualified name is required: <service>.<namespace>.svc.cluster.local.

dig -t A nginx-dp.kube-public.svc.cluster.local @10.5.0.2 +short

As you can see, without adding any record by hand, the IP of the nginx-dp Service is already resolved.

Verify again from inside the Pod

kubectl -n kube-public exec -it nginx-dp-568f8dc55-rxvx2 -- /bin/bash

apt-get update

apt-get install -y iputils-ping

# ping nginx-dp

PING nginx-dp.kube-public.svc.cluster.local (192.168.191.232): 56 data bytes

64 bytes from 192.168.191.232: icmp_seq=0 ttl=64 time=0.184 ms

64 bytes from 192.168.191.232: icmp_seq=1 ttl=64 time=0.225 ms

Why is the full domain name not needed inside the container?

cat /etc/resolv.conf

nameserver 10.5.0.2

search kube-public.svc.cluster.local svc.cluster.local cluster.local host.com

options ndots:5

Inside the Pod, the DNS server is the CoreDNS address, and the search list already contains the domain of the Pod's namespace:

kube-public.svc.cluster.local
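To make the effect of the search list concrete, the sketch below prints the names a resolver would try, in order, for a given short name. It is a simplified model of the usual resolver behaviour (names with fewer dots than ndots go through the search list first; a trailing dot disables searching), not an exact reimplementation; `candidate_names` is a made-up helper. It also hints at why the earlier `dig nginx-dp` query returned nothing: dig does not apply the search list unless `+search` is given.

```shell
#!/bin/sh
# candidate_names NAME NDOTS SEARCH_DOMAIN...
# Prints, in order, the fully qualified names that would be tried for NAME.
# (Hypothetical helper; simplified model of search-list expansion.)
candidate_names() {
    name="$1"
    ndots="$2"
    shift 2
    case "$name" in
        *.) printf '%s\n' "$name"; return 0 ;;   # already absolute
    esac
    # Count the dots in the name.
    dots=$(( $(printf '%s' "$name" | tr -cd '.' | wc -c) ))
    # Enough dots: try the name as given before the search list.
    if [ "$dots" -ge "$ndots" ]; then printf '%s\n' "$name"; fi
    for domain in "$@"; do printf '%s.%s\n' "$name" "$domain"; done
    # Too few dots: the bare name is tried last.
    if [ "$dots" -lt "$ndots" ]; then printf '%s\n' "$name"; fi
    return 0
}

# Values taken from the resolv.conf shown above.
candidate_names nginx-dp 5 kube-public.svc.cluster.local svc.cluster.local cluster.local host.com
```

The first candidate is nginx-dp.kube-public.svc.cluster.local, which is why the short name works from a Pod in the kube-public namespace.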

Name resolution inside the cluster is now solved. To reach the services from outside the cluster, ingress-based service exposure is still needed.

kubectl delete deployments nginx-dp -n kube-public

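Kubernetes core add-on: the Traefik ingress controller (service exposure)

Two ways to expose services in Kubernetes

Earlier, CoreDNS gave us automatic mapping between service names and service IPs inside the cluster, so a Pod can be reached by service name without remembering the service IP.

Outside the cluster, however, services obviously cannot be resolved by service name or reached by service IP.

To access in-cluster resources from outside the cluster, the services have to be exposed.

Two commonly used ways to expose services:

- Use a NodePort Service. A NodePort Service works like port mapping: it maps a port in the container to a port on the host. With this method the cluster cannot use ipvs scheduling; it must use iptables, and only the rr mode is supported.

- Use an Ingress resource. Ingress is one of the standard Kubernetes API resources and a core resource. It is a set of rules based on domain names and URL paths that forward user requests to the specified Service, so traffic from outside the cluster can be forwarded into the cluster, which is what 'exposing a service' means.

What is an Ingress controller

It can be thought of as a simplified nginx.

An Ingress controller is a component that listens on a socket on behalf of Ingress resources and routes traffic according to the Ingress rule matching mechanism.

It only works at layer 7, so exposing HTTP is recommended; for HTTPS, the front-end nginx can handle certificate offloading.

The Ingress controller used here is Traefik.

traefik: https://github.com/containous/traefik

Deploy Traefik

As before, first pull the Docker image and create the manifests on 1.218 (the ops host), then apply the manifests from any master node.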

Prepare the Docker image

mkdir -p /data/traefik/config && \

mkdir -p /data/logs/traefik/logs && \

docker pull traefik:v2.9.10 && \

docker tag traefik:v2.9.10 harbor.zykj.com/public/traefik:v2.9.10 && \

docker push harbor.zykj.com/public/traefik:v2.9.10

Create the manifests

mkdir -p /data/k8s-yaml/traefik

RBAC manifest

cat >/data/k8s-yaml/traefik/rbac.yaml <<EOF

apiVersion: v1

kind: ServiceAccount

metadata:

name: traefik-ingress-controller

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: traefik-ingress-controller

rules:

- apiGroups:

- ""

resources:

- services

- endpoints

- secrets

verbs:

- get

- list

- watch

- apiGroups:

- extensions

- networking.k8s.io

resources:

- ingresses

- ingressclasses

verbs:

- get

- list

- watch

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: traefik-ingress-controller

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: traefik-ingress-controller

subjects:

- kind: ServiceAccount

name: traefik-ingress-controller

namespace: kube-system

EOF

cat >/data/k8s-yaml/traefik/ds.yaml <<EOF

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: traefik-ingress

namespace: kube-system

labels:

k8s-app: traefik-ingress

spec:

selector:

matchLabels:

name: traefik-ingress

template:

metadata:

labels:

k8s-app: traefik-ingress

name: traefik-ingress

annotations:

prometheus_io_scheme: "traefik"

prometheus_io_path: "/metrics"

prometheus_io_port: "8080"

spec:

serviceAccountName: traefik-ingress-controller

terminationGracePeriodSeconds: 60

containers:

- image: harbor.zykj.com/public/traefik:v2.9.10

name: traefik-ingress

env:

- name: KUBERNETES_SERVICE_HOST # set the Kubernetes API address explicitly, so an unstable network plugin does not matter

value: "192.168.1.254"

- name: KUBERNETES_SERVICE_PORT_HTTPS # API server port

value: "7443"

- name: KUBERNETES_SERVICE_PORT # API server port

value: "7443"

- name: TZ # time zone

value: "Asia/Shanghai"

ports:

- name: controller

containerPort: 80

hostPort: 182

- name: admin-web

containerPort: 8080

securityContext:

capabilities:

drop:

- ALL

add:

- NET_BIND_SERVICE

args:

- --api.insecure

- --providers.kubernetesingress

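tolerations: # tolerate all taints, so tainted nodes still run the Pod

- operator: "Exists"

hostNetwork: true # use the host network to improve ingress network performance

dnsConfig: # with host networking the host's DNS settings are used by default, so add the CoreDNS settings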

nameservers:

- 10.5.0.2

searches:

- traefik.svc.cluster.local

- svc.cluster.local

- cluster.local

options:

- name: ndots

value: "5"

EOF

Service manifest

cat >/data/k8s-yaml/traefik/svc.yaml <<EOF

kind: Service

apiVersion: v1

metadata:

name: traefik-ingress-service

namespace: kube-system

spec:

selector:

k8s-app: traefik-ingress

ports:

- protocol: TCP

port: 80

name: controller

- protocol: TCP

port: 8080

name: admin-web

EOF

Ingress manifest

cat >/data/k8s-yaml/traefik/ingress.yaml <<EOF

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: traefik-web-ui

namespace: kube-system

annotations:

kubernetes.io/ingress.class: traefik

spec:

rules:

- host: traefik.zykj.com

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: traefik-ingress-service

port:

number: 8080

EOF

Create the resources

Create them from a master node:

kubectl create -f http://k8s-yaml.zykj.com/traefik/rbac.yaml && \

kubectl create -f http://k8s-yaml.zykj.com/traefik/ds.yaml && \

kubectl create -f http://k8s-yaml.zykj.com/traefik/svc.yaml && \

kubectl create -f http://k8s-yaml.zykj.com/traefik/ingress.yaml

Set up the reverse proxy on the front-end nginx

On every node that runs the front-end nginx, proxy all requests for the wildcard domain to traefik.

cat >/etc/nginx/conf.d/zykj.com.conf <<'EOF'

upstream default_backend_traefik {

server 192.168.1.203:182 max_fails=3 fail_timeout=10s;

server 192.168.1.204:182 max_fails=3 fail_timeout=10s;

server 192.168.1.205:182 max_fails=3 fail_timeout=10s;

server 192.168.1.206:182 max_fails=3 fail_timeout=10s;

server 192.168.1.207:182 max_fails=3 fail_timeout=10s;

server 192.168.1.208:182 max_fails=3 fail_timeout=10s;

server 192.168.1.209:182 max_fails=3 fail_timeout=10s;

}

server {

server_name *.zykj.com;

location / {

proxy_pass http://default_backend_traefik;

proxy_set_header Host $http_host;

proxy_set_header x-forwarded-for $proxy_add_x_forwarded_for;

}

}

EOF

# Reload nginx

nginx -t

nginx -s reload

Add the DNS record in bind9

Add a record for the traefik service to DNS; note that it points to the VIP.

vim /var/named/zykj.com.zone

........

traefik A 192.168.1.217

Remember to roll the serial number forward.

Restart named

systemctl restart named

# Verify the record with dig

# dig -t A traefik.zykj.com +short

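Verify access from outside the cluster

From outside the cluster, open http://traefik.zykj.com; if the web page is displayed normally, the service has been exposed successfully.

Install Rancher 2.x and manage the Kubernetes cluster

Official site:

https://rancher.com/

Install Rancher:

Pull the Rancher image on the ops host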

docker pull rancher/rancher:v2.4.5 && \

docker tag rancher/rancher:v2.4.5 harbor.zykj.com/public/rancher:v2.4.5 && \

docker push harbor.zykj.com/public/rancher:v2.4.5

mkdir -p /opt/certs/rancher_ssl && \

cd /opt/certs/rancher_ssl

Generate a self-signed certificate

Reference: https://www.cnblogs.com/hzw97/p/11608098.html

Create the root CA private key and self-signed certificate

[root@lpc1-201 rancher_ssl]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout ca.key -x509 -days 3650 -out ca.crt

Generating a 4096 bit RSA private key

..........................................................................................................................................................................................................................................................................................................................................................................................................................++

..................................................................++

writing new private key to 'ca.key'

-----

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [XX]:CN

State or Province Name (full name) []:HUNAN

Locality Name (eg, city) [Default City]:CHANGSHA

Organization Name (eg, company) [Default Company Ltd]:zykj

Organizational Unit Name (eg, section) []:OPS

Common Name (eg, your name or your server's hostname) []:rancher.zykj.com

Email Address []:test@test.com

Generate a certificate signing request for the server (web)

[root@lpc1-201 rancher_ssl]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout rancher.zykj.com.key -out rancher.zykj.com.csr

Generating a 4096 bit RSA private key

.................++

............................................++

writing new private key to 'rancher.zykj.com.key'

-----

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [XX]:CN

State or Province Name (full name) []:HUNAN

Locality Name (eg, city) [Default City]:CHANGSHA

Organization Name (eg, company) [Default Company Ltd]:zykj

Organizational Unit Name (eg, section) []:OPS

Common Name (eg, your name or your server's hostname) []:rancher.zykj.com

Email Address []:test@test.com

Please enter the following 'extra' attributes

to be sent with your certificate request

A challenge password []:

An optional company name []:

Sign the request with the CA certificate created above

[root@lpc1-201 rancher_ssl]# openssl x509 -req -days 3650 -in rancher.zykj.com.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out rancher.zykj.com.crt

Signature ok

subject=/C=CN/ST=HUNAN/L=CHANGSHA/O=zykj/OU=OPS/CN=rancher.zykj.com/emailAddress=test@test.com

Getting CA Private Key

Run the following in the directory where the certificates were generated

cp rancher.zykj.com.crt rancher.zykj.com.pem && \

cp ca.crt ca.pem && \

cp rancher.zykj.com.key rancher.zykj.com_key.pem

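Add a DNS record for rancher.zykj.com on the DNS server

vim /var/named/zykj.com.zone

Add one record:

rancher A 192.168.1.201

Then roll the serial number (the line marked ; serial) forward by one.

Run the following on server 1.202: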

mkdir -p /opt/certs/rancher_ssl && \

cd /opt/certs/rancher_ssl && \

scp lpc1-201:/opt/certs/rancher_ssl/rancher.zykj.com*.pem .

scp lpc1-201:/opt/certs/rancher_ssl/ca.pem .

Forward with nginx on the proxy node

cat > /etc/nginx/conf.d/rancher.zykj.com.conf << 'EOF'

server {

listen 443 ssl;

server_name rancher.zykj.com;

ssl_certificate /opt/certs/rancher_ssl/rancher.zykj.com.pem;

ssl_certificate_key /opt/certs/rancher_ssl/rancher.zykj.com_key.pem;

ssl_session_timeout 5m;

location / {

proxy_pass https://192.168.1.201:442;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Accept-Encoding 'gzip';

# proxy_connect_timeout 90;

# proxy_send_timeout 90;

# proxy_read_timeout 90;

# proxy_buffer_size 4k;

# proxy_buffers 4 32k;

# proxy_busy_buffers_size 64k;

# proxy_temp_file_write_size 64k;

# needed for large uploads, e.g. pushing Docker images to a private registry

client_max_body_size 2G;

# support forwarding of wss (WebSocket) connections

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection 'upgrade';

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

EOF

Test the configuration and reload nginx

nginx -t

nginx -s reload

Create the log directory

mkdir -p /data/rancher/logs

Run the Rancher image on the Rancher host:

docker run -d --restart=unless-stopped \

--privileged \

-p 79:80 -p 442:443 \

-e AUDIT_LEVEL=1 \

-v /data/rancher/logs:/var/log/auditlog \

-e AUDIT_LOG_PATH=/var/log/auditlog/rancher-api-audit.log \

-e AUDIT_LOG_MAXAGE=20 \

-e AUDIT_LOG_MAXBACKUP=20 \

-e AUDIT_LOG_MAXSIZE=100 \

-v /opt/certs/rancher_ssl/rancher.zykj.com.pem:/etc/rancher/ssl/cert.pem \

-v /opt/certs/rancher_ssl/rancher.zykj.com_key.pem:/etc/rancher/ssl/key.pem \

-v /opt/certs/rancher_ssl/ca.pem:/etc/rancher/ssl/cacerts.pem \

harbor.zykj.com/public/rancher:v2.4.5

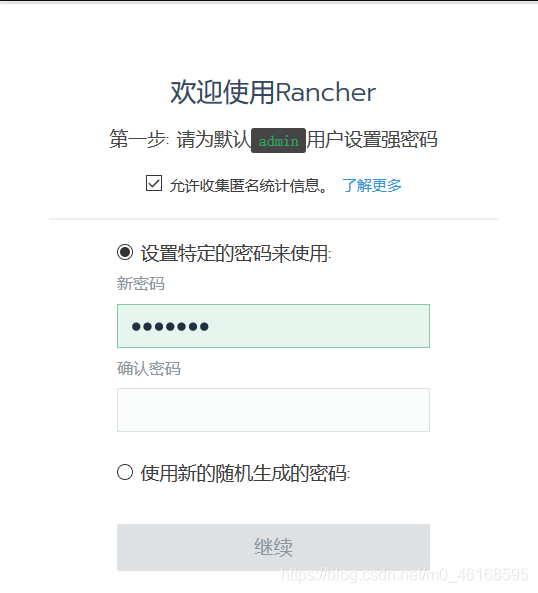

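The Rancher web UI

Open rancher.zykj.com in a browser; the first visit asks you to set a password.

Set the URL through which Rancher will be accessed.

Set the display language to Chinese.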

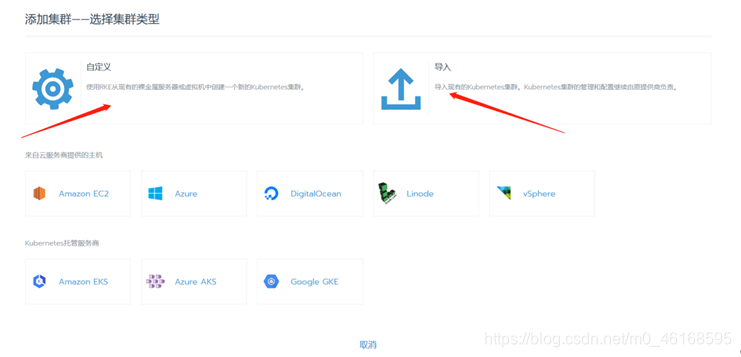

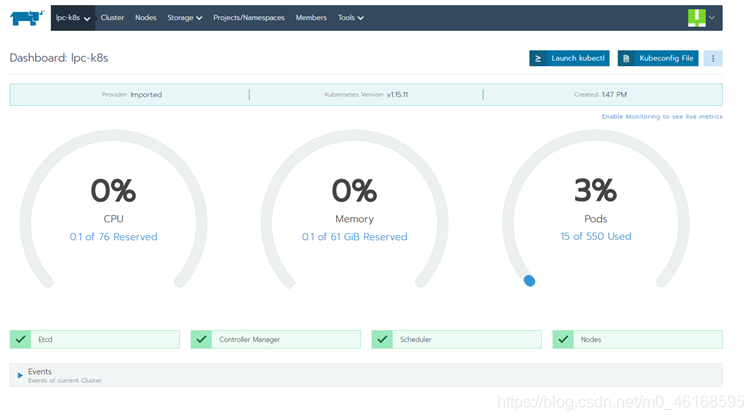

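Add a cluster

Create your own cluster

You can define a custom RKE cluster or import a cluster you have already built.

Since the Kubernetes cluster was built earlier, simply import it.

Import the existing cluster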

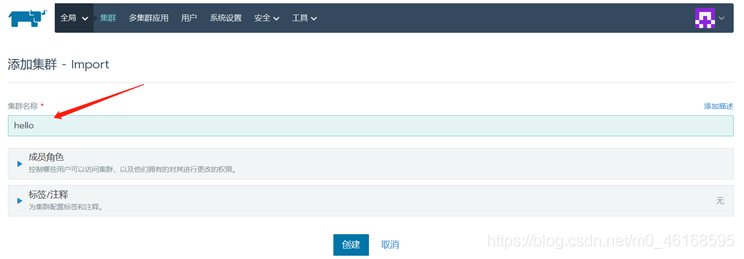

Pick any cluster name

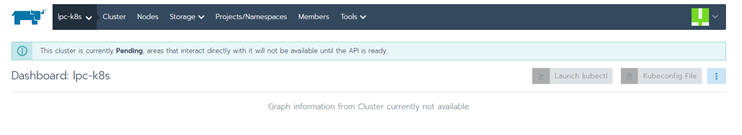

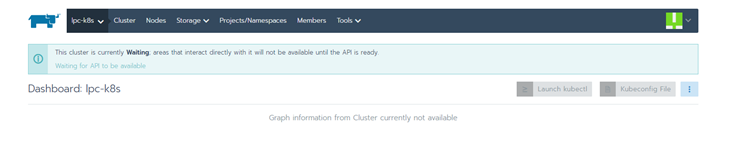

Click Create to go to the Add Cluster – Import page

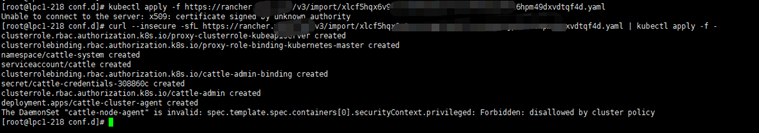

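Copy the two commands above and run them on the master node. Because the certificate is self-signed, the first command fails with an x509 error; running the second command works around it.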

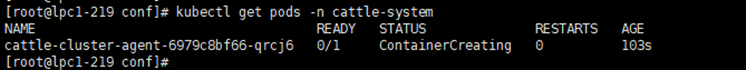

Then check the Pods on the master node:

kubectl get pods -n cattle-system
