ZooKeeper 3.7 Source Code Analysis

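1 Importing the ZooKeeper Source Code

ZooKeeper is a highly available framework for distributed data management and coordination, and it does a good job of keeping data consistent in a distributed environment. More and more distributed systems (Hadoop, HBase, Kafka) use ZooKeeper as a core component.

This course studies the ZooKeeper source code, so the ZooKeeper project has to be imported into IDEA. Older versions of ZooKeeper were built with Ant, but the latest source (3.7) no longer has a build.xml and ships a pom.xml instead; in other words, the build has moved from Ant to Maven. A freshly downloaded source tree will not compile and run as-is, and a few settings need to be adjusted.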

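1.1 Importing the Project

The source for every ZooKeeper version can be downloaded from the project repository, where different versions can be selected. We choose the latest version, currently 3.7, as shown below:

Find the project's clone address, choose the https address and copy it; we use this address to import the project into IDEA.

In IDEA, click VCS > Checkout from Version Control > GitHub, as shown below:

Clone the project to the local machine:

After the project has been imported, it looks like this:

At run time the project is missing a version class, so create org.apache.zookeeper.version.Info with the following code: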

public interface Info {

public static final int MAJOR=3;

public static final int MINOR=4;

public static final int MICRO=6;

public static final String QUALIFIER=null;

public static final int REVISION=-1;

public static final String REVISION_HASH = "1";

public static final String BUILD_DATE="2020-12-03 09:29:06";

}

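1.2 Fixing ZooKeeper Source Errors

In zookeeper-server, find org.apache.zookeeper.server.quorum.QuorumPeerMain and run it. Before starting it, apply the following configuration:

Startup reports many errors, such as missing packages and missing classes, as in the following screenshots:

To resolve these errors, some dependencies have to be added by hand. Add the following to pom.xml:

<!-- Additional dependencies -->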

<dependency>

<groupId>io.dropwizard.metrics</groupId>

<artifactId>metrics-core</artifactId>

<version>3.1.0</version>

</dependency>

<dependency>

<groupId>org.xerial.snappy</groupId>

<artifactId>snappy-java</artifactId>

<version>1.1.7.3</version>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-server</artifactId>

</dependency>

<dependency>

<groupId>org.eclipse.jetty</groupId>

<artifactId>jetty-servlet</artifactId>

</dependency>

<dependency>

<groupId>commons-cli</groupId>

<artifactId>commons-cli</artifactId>

</dependency>

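1.3 ZooKeeper Commands

To study ZooKeeper we first need to know how to use it. It offers a rich set of commands, and working with them helps build a deeper understanding. Starting the client from the source tree gives us access to these commands.

Start the client org.apache.zookeeper.ZooKeeperMain, as shown below: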

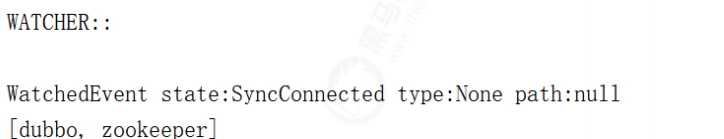

After startup, the log looks like this:

1) List nodes: ls /

ls /

[dubbo, zookeeper]

ls /dubbo

[com.service.CarService]

2) View node status: stat /dubbo

stat /dubbo

cZxid = 0x3

ctime = Thu Dec 03 09:19:29 CST 2020

mZxid = 0x3

mtime = Thu Dec 03 09:19:29 CST 2020

pZxid = 0x4

cversion = 1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 13

numChildren = 1

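The fields of the node status are explained below:

| Field | Description |
|---|---|
| cZxid = 0x3 | ID of the transaction that created the node |
| ctime = Thu Dec 03 09:19:29 CST 2020 | Creation time |
| mZxid = 0x3 | ID of the transaction that last modified the node |
| mtime = Thu Dec 03 09:19:29 CST 2020 | Time of the last modification |
| pZxid = 0x4 | ID of the transaction that last modified the list of children |
| cversion = 1 | Version number of the children |
| dataVersion = 0 | Version number of the node's data |
| aclVersion = 0 | Version number of the ACL |
| ephemeralOwner = 0x0 | Session ID of the owner if the node is ephemeral; 0 for a persistent node |
| dataLength = 13 | Length of the data; every node can store data |
| numChildren = 1 | Number of children |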

3) Create a node: create /dubbo/code java

create /dubbo/code java

Created /dubbo/code

Here /dubbo/code is the node path and java is the data stored in the node.

4) Read node data: get /dubbo/code

get /dubbo/code

java

5) Delete a node: delete /dubbo/code or deleteall /dubbo/code

Delete a node that has no children: delete /dubbo/code

Delete a node together with all of its children: deleteall /dubbo/code
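The difference between the two commands can be modelled in a few lines. The sketch below is a hypothetical in-memory model (the class `DeleteSemanticsSketch` and its methods are made up for illustration and are not part of ZooKeeper); it only assumes that `delete` refuses a node that still has children while `deleteall` removes the whole subtree.

```java
import java.util.TreeSet;

// Hypothetical in-memory model, not ZooKeeper code: node paths kept in a sorted set.
final class DeleteSemanticsSketch {
    private final TreeSet<String> paths = new TreeSet<>();

    void create(String path) {
        paths.add(path);
    }

    boolean exists(String path) {
        return paths.contains(path);
    }

    // True if any stored path lies underneath the given path.
    private boolean hasChildren(String path) {
        String prefix = path + "/";
        return paths.stream().anyMatch(p -> p.startsWith(prefix));
    }

    // Models `delete`: succeeds only for an existing node without children.
    boolean delete(String path) {
        if (!paths.contains(path) || hasChildren(path)) {
            return false;
        }
        return paths.remove(path);
    }

    // Models `deleteall`: removes the node and every descendant.
    void deleteAll(String path) {
        String prefix = path + "/";
        paths.removeIf(p -> p.equals(path) || p.startsWith(prefix));
    }

    public static void main(String[] args) {
        DeleteSemanticsSketch tree = new DeleteSemanticsSketch();
        tree.create("/dubbo");
        tree.create("/dubbo/code");
        System.out.println("delete /dubbo succeeded: " + tree.delete("/dubbo"));
        tree.deleteAll("/dubbo");
        System.out.println("/dubbo/code exists: " + tree.exists("/dubbo/code"));
    }
}
```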

6) Command history: history

history

1 - ls /dubbo

2 - ls /dubbo/code

3 - get /dubbo/code

4 - get /dubbo/code

5 - create /dubbo/code java

6 - get /dubbo/code

7 - get /dubbo/code

8 - delete /dubbo/code

9 - get /dubbo/code

10 - listquota path

11 - history

1.4 ZooKeeper Analysis Tools

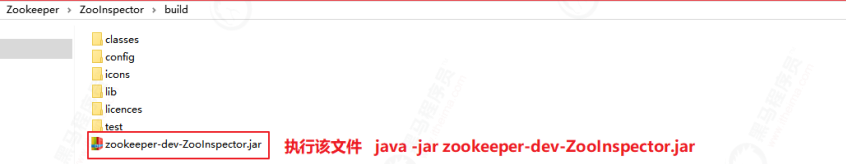

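ZooKeeper is easy to install, but once a cluster is running, inspecting its data is awkward. This section introduces ZooInspector, a tool for viewing ZooKeeper data.

Download address

After downloading and unpacking the archive, run zookeeper-dev-ZooInspector.jar:

Enter the account and password to connect to ZooKeeper, as shown below:

Once connected, the ZooKeeper data is displayed as follows:

Node operations: add, modify and delete nodes.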

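2 ZK Server Startup Source Code Analysis

ZooKeeper can be deployed in standalone or distributed mode. In standalone mode a single machine acts as the server and ZooKeeper loses its high availability. In distributed mode several machines each run one ZooKeeper server; even if some servers go down, the cluster keeps serving requests as long as fewer than half of them have failed, so a clustered ZooKeeper is highly available.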
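The "fewer than half" rule is easy to check with a little arithmetic. The following is a minimal sketch that assumes the default majority quorum; `QuorumMath` and its methods are hypothetical names chosen for illustration, not ZooKeeper classes.

```java
// Hypothetical helper, not part of ZooKeeper: majority-quorum arithmetic.
final class QuorumMath {
    // Smallest number of servers that forms a strict majority of n.
    static int quorumSize(int n) {
        return n / 2 + 1;
    }

    // Number of servers that may fail while a majority is still alive.
    static int toleratedFailures(int n) {
        return n - quorumSize(n);
    }

    public static void main(String[] args) {
        for (int n : new int[] {1, 3, 4, 5}) {
            System.out.printf("servers=%d quorum=%d toleratedFailures=%d%n",
                    n, quorumSize(n), toleratedFailures(n));
        }
    }
}
```

Under this rule a 4-server ensemble tolerates no more failures than a 3-server one, which is one reason odd ensemble sizes are common.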

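Next we analyze the source code for starting ZooKeeper in standalone mode and in cluster mode.

2.1 ZK Standalone/Cluster Startup Flow

The figure above shows the standalone/cluster startup flow of ZooKeeper, with a note on what each step does. We now follow the flowchart through the source code.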

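2.2 ZK Startup Entry Point

Startup entry class: QuorumPeerMain

This class is the entry point for both standalone and cluster startup. It loads the configuration, starts the QuorumPeer thread (used for leader election), creates the ServerCnxnFactory, and so on. We start the analysis from its main method, which is annotated below:

Although main itself only performs initial configuration, it calls initializeAndRun(), which decides from the configuration whether to start a standalone or a clustered ZooKeeper. The source is as follows:

For standalone mode it calls ZooKeeperServerMain.main(args); for cluster mode it calls QuorumPeerMain.runFromConfig(config). We analyze the standalone startup in detail next; the cluster startup is covered in a later chapter together with the election mechanism.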
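The decision between the two startup paths can be pictured with a small sketch. This is a deliberate simplification, not the real logic of initializeAndRun(): `StartupModeSketch`, `Mode` and `chooseMode` are hypothetical names, and the sketch simply assumes that more than one `server.N` entry in the configuration means a multi-server ensemble.

```java
import java.util.Properties;

// Simplified illustration; the real decision is made inside QuorumPeerMain.initializeAndRun().
final class StartupModeSketch {
    enum Mode { STANDALONE, QUORUM }

    // Assumption: more than one "server.N" entry means a multi-server ensemble.
    static Mode chooseMode(Properties cfg) {
        long servers = cfg.stringPropertyNames().stream()
                .filter(key -> key.startsWith("server."))
                .count();
        return servers > 1 ? Mode.QUORUM : Mode.STANDALONE;
    }

    public static void main(String[] args) {
        Properties single = new Properties();
        single.setProperty("clientPort", "2181");
        System.out.println("single-node config -> " + chooseMode(single));

        Properties ensemble = new Properties();
        ensemble.setProperty("server.1", "host1:2888:3888");
        ensemble.setProperty("server.2", "host2:2888:3888");
        ensemble.setProperty("server.3", "host3:2888:3888");
        System.out.println("three-node config -> " + chooseMode(ensemble));
    }
}
```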

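2.3 ZK Standalone Startup Source Code Analysis

We have prepared a call graph of the methods involved in standalone startup. The walkthrough below follows that graph step by step, as shown below:

1) Standalone entry point

Following the analysis above, we arrive at ZooKeeperServerMain.main(args). It calls ZooKeeperServerMain.initializeAndRun, which performs initialization and runs the ZooKeeper service. The main method is as follows:

2) Parsing the configuration file

initializeAndRun() registers JMX, parses the zoo.cfg configuration file, and calls runFromConfig() to start the ZooKeeper service. The source is as follows: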

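3) Main standalone startup flow

runFromConfig is the main method of standalone startup. It does the following:

1: Initializes the runtime metrics, such as the maximum time spent on a single commit and the batch size for data synchronization.

2: Initializes authentication, for example IP and Digest authentication.

3: Creates the transaction log object. Every node creation, data modification and deletion in ZooKeeper is a transaction and is written to the log.

4: Defines the JVM monitoring variables and constants, such as the warning time, the warning threshold and the info threshold.

5: Creates the ZooKeeperServer. It is only created here; ZooKeeperServerMain does not start it directly.

6: Starts the AdminServer, ZooKeeper's administration console, which runs on Jetty.

7: Creates the ServerCnxnFactory, ZooKeeper's network communication object. NIOServerCnxnFactory is used by default.

8: Starts the ZooKeeperServer from within the ServerCnxnFactory.

9: Creates and starts the ContainerManager, which runs on a Timer and cleans up expired container nodes and TTL nodes. Its check interval is measured in minutes (one minute by default).

10: Blocks the main thread so that it does not exit.

The method source is as follows: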

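4) Creating the network communication object

When creating the network communication object, the method above calls ServerCnxnFactory.createFactory(), which creates ZooKeeper's communication component according to the system configuration. The options are NIOServerCnxnFactory (the default) and NettyServerCnxnFactory. The source is as follows: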
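Setting the actual ZooKeeper source aside, the general idea of choosing an implementation class through a system property and instantiating it by reflection can be sketched as follows. Everything in this block is a hypothetical stand-in: the `CnxnFactory` interface, the two nested classes and the property name `demo.cnxnFactory` are invented for illustration.

```java
// Illustrative only: the interface, classes and property name are hypothetical stand-ins.
final class FactorySelectionSketch {
    interface CnxnFactory {
        String name();
    }

    static final class NioFactory implements CnxnFactory {
        public String name() {
            return "nio";
        }
    }

    static final class NettyFactory implements CnxnFactory {
        public String name() {
            return "netty";
        }
    }

    // Hypothetical property name used only by this sketch.
    static final String PROPERTY = "demo.cnxnFactory";

    // Reads the class name from the system property, falling back to the NIO variant.
    static CnxnFactory createFactory() {
        String className = System.getProperty(PROPERTY, NioFactory.class.getName());
        try {
            return (CnxnFactory) Class.forName(className)
                    .getDeclaredConstructor()
                    .newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot instantiate " + className, e);
        }
    }

    public static void main(String[] args) {
        System.out.println("default: " + createFactory().name());
        System.setProperty(PROPERTY, NettyFactory.class.getName());
        System.out.println("overridden: " + createFactory().name());
    }
}
```

Selecting the class by name keeps the calling code unaware of the concrete transport, which is what makes a second implementation pluggable.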

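5) Standalone start

cnxnFactory.startup(zkServer); is what actually starts the ZooKeeperServer. It calls NIOServerCnxnFactory.startup, which in turn calls ZooKeeperServer.startup to start the service. When ZooKeeperServerMain reaches shutdownLatch.await(); the main thread blocks. The source is as follows: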
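Independently of the real source, the blocking behaviour of a latch-based main thread can be sketched with `java.util.concurrent.CountDownLatch`. The class `ShutdownLatchSketch` and its methods are hypothetical; the sketch assumes only that the main thread waits on a latch that a shutdown call releases.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Hypothetical miniature of "start the server, then park the main thread on a latch".
final class ShutdownLatchSketch {
    private final CountDownLatch shutdownLatch = new CountDownLatch(1);
    private volatile boolean running;

    void start() {
        running = true;
    }

    // Marks the server as stopped and releases any thread waiting on the latch.
    void shutdown() {
        running = false;
        shutdownLatch.countDown();
    }

    boolean isRunning() {
        return running;
    }

    // Waits up to timeoutMs for shutdown(); returns true if shutdown happened in time.
    boolean awaitShutdown(long timeoutMs) {
        try {
            return shutdownLatch.await(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        ShutdownLatchSketch server = new ShutdownLatchSketch();
        server.start();
        new Thread(server::shutdown, "stopper").start();
        System.out.println("stopped=" + server.awaitShutdown(5000));
    }
}
```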

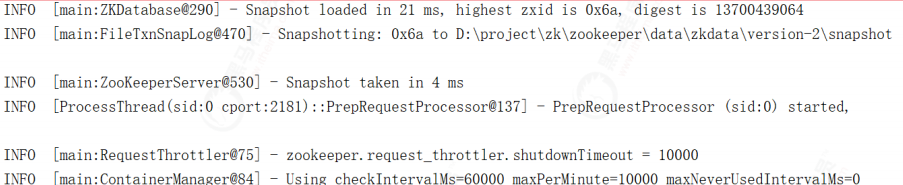

After startup, the log looks like this:

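3 ZK Network Communication Source Code Analysis

As a server, ZooKeeper naturally has to communicate with clients over the network. Doing so efficiently, so that network IO does not become the bottleneck, is a problem ZooKeeper has to solve. ZooKeeper uses ServerCnxnFactory to manage client connections. It has two implementations: NIOServerCnxnFactory, built on native Java NIO, and NettyServerCnxnFactory, built on Netty. A ServerCnxn represents one connection between a client and the server.

The standalone startup shows that the default communication component is NIOServerCnxnFactory. The relationship between these classes and ServerCnxnFactory is shown below: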

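3.1 NIOServerCnxnFactory Workflow

The usual approach with Java NIO is to have one thread group listen for OP_ACCEPT events and handle client connections, and another thread group listen for OP_READ and OP_WRITE events on those connections and handle the IO (Netty works this way too). ZooKeeper divides the work differently: when NIOServerCnxnFactory starts, it launches four kinds of threads:

1: accept thread: accepts connections from clients and assigns them to a selector thread (one accept thread is started).

2: selector thread: executes select(). Because select() becomes a bottleneck when handling a large number of connections, several selector threads are started. The count is set with the system property zookeeper.nio.numSelectorThreads and defaults to sqrt(number of cores / 2), with a minimum of 1.

3: worker thread: performs the basic socket reads and writes. The count is set with the system property zookeeper.nio.numWorkerThreads and defaults to 2 × the number of cores. If it is set to 0, the selector threads perform the socket IO themselves; see the worker thread section below.

4: connection expiration thread: closes connections whose session has expired.

These four kinds of threads are documented on the NIOServerCnxnFactory class, as shown below:

ZooKeeper splits the work of these threads more finely. This addresses the case where, with a large number of client connections, selector.select() becomes a bottleneck: selector.select() is split out and handed to the selector threads.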
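To make the default thread counts concrete, here is a small sketch of how they could be derived from the number of cores. It reflects my reading of the 3.7 defaults (selector threads: sqrt(cores / 2) with a minimum of 1; worker threads: 2 × cores) and should be checked against the actual source; the class `NioThreadDefaultsSketch` and its method names are hypothetical.

```java
// Hypothetical helper: derives thread counts the way the text above describes the defaults.
final class NioThreadDefaultsSketch {
    // Default selector thread count: sqrt(cores / 2), but never less than 1.
    static int defaultSelectorThreads(int cores) {
        return Math.max((int) Math.sqrt((float) cores / 2), 1);
    }

    // Default worker thread count: twice the number of cores.
    static int defaultWorkerThreads(int cores) {
        return 2 * cores;
    }

    // A system property, when set, overrides the computed default.
    static int selectorThreads(int cores) {
        return Integer.getInteger("zookeeper.nio.numSelectorThreads",
                defaultSelectorThreads(cores));
    }

    static int workerThreads(int cores) {
        return Integer.getInteger("zookeeper.nio.numWorkerThreads",
                defaultWorkerThreads(cores));
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        System.out.println("cores=" + cores
                + " selectorThreads=" + selectorThreads(cores)
                + " workerThreads=" + workerThreads(cores));
    }
}
```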

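3.2 NIOServerCnxnFactory Source Code

We analyze the NIOServerCnxnFactory source along the four kinds of threads introduced above, and also add some code to read the data of a request inside the program.

3.2.1 AcceptThread Analysis

To make AcceptThread easier to understand, we have drawn a detailed flowchart of its structure and method calls, as shown below:

NIOServerCnxnFactory contains an AcceptThread. Why call it a thread? Look at its inheritance chain: AcceptThread > AbstractSelectThread > ZooKeeperThread > Thread. It accepts connections from clients and assigns them to a selector thread (one accept thread is started).

Its execution flow: run executes selector.select() and calls doAccept() to accept client connections, so doAccept() is the method to focus on. The source of the class is as follows:

doAccept() handles client connections. It is the first method invoked when a client connects to ZooKeeper, and it proceeds as follows: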

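1: Establishes the connection with the current server.

2: Obtains the address of the remote client.

3: Checks whether the connection exceeds the maximum allowed.

4: Switches the channel to non-blocking mode.

5: Picks a SelectorThread in round-robin fashion and assigns the connection to it.

6: Adds the connection to that SelectorThread's acceptedQueue and wakes the SelectorThread up.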
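Steps 5 and 6 can be illustrated with a simplified round-robin dispatcher. This is a sketch under stated assumptions, not the ZooKeeper implementation: connections are modelled as plain strings, `SelectorStub` stands in for a selector thread and only models its accepted-connection queue, and the wake-up call is omitted.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical miniature of "assign each accepted connection to the next selector in turn".
final class RoundRobinAcceptSketch {
    // Stand-in for a selector thread: only the accepted-connection queue is modelled.
    static final class SelectorStub {
        final Queue<String> acceptedQueue = new LinkedBlockingQueue<>();

        boolean addAcceptedConnection(String connection) {
            return acceptedQueue.offer(connection);
        }
    }

    private final List<SelectorStub> selectors = new ArrayList<>();
    private int next = 0;

    RoundRobinAcceptSketch(int selectorCount) {
        for (int i = 0; i < selectorCount; i++) {
            selectors.add(new SelectorStub());
        }
    }

    // Queues the connection on the next selector and returns that selector's index.
    int accept(String connection) {
        int chosen = next;
        next = (next + 1) % selectors.size();
        selectors.get(chosen).addAcceptedConnection(connection);
        return chosen;
    }

    int queued(int selectorIndex) {
        return selectors.get(selectorIndex).acceptedQueue.size();
    }

    public static void main(String[] args) {
        RoundRobinAcceptSketch acceptor = new RoundRobinAcceptSketch(3);
        for (String client : new String[] {"c1", "c2", "c3", "c4"}) {
            System.out.println(client + " -> selector " + acceptor.accept(client));
        }
    }
}
```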

The addAcceptedConnection method used in the code above is as follows:

Starting the distributed sample service in the project prints the following log:

AcceptThread----------链接服务的IP:127.0.0.1

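3.2.2 SelectorThread Analysis

The main job of this thread is to pick up IO events on the sockets, wrap each one in a workRequest and hand the workRequest to the workerPool thread pool. It also takes the not-yet-processed connections off acceptedQueue, registers the OP_READ event for each of them and creates the corresponding context object, NIOServerCnxn. The run method of SelectorThread is as follows:

run() calls select(), and the core call inside select() is handleIO(). The name already suggests that this is where data from client requests is handled, but the request data is not actually processed on the SelectorThread. Let us look at handleIO().

handleIO() wraps the current SelectorThread in an IOWorkRequest and hands it to workerPool for scheduling; reading the data only begins once workerPool runs it. The source is as follows: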

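3.2.3 WorkerThread Analysis

Compared with the threads above, the call graph of WorkerThread is considerably more complex and involves method calls across several objects. It mainly performs IO and does not itself process the data; data processing is done by the RequestProcessor chain. The call graph is as follows:

ZooKeeper uses WorkerService to manage a group of worker threads. When looking at SelectorThread earlier we saw that the schedule method of workerPool is called, as shown below:

Tracing workerPool.schedule(workRequest); shows that it calls WorkerService.schedule(workRequest) > WorkerService.schedule(WorkRequest, long). This method wraps the request in a ScheduledWorkRequest task and submits it to a worker thread for execution. The source is as follows:

ScheduledWorkRequest implements the Runnable interface, and its run() method calls doWork on the IOWorkRequest, which in turn calls doIO to perform the IO. The source is as follows: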
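The hand-off from a selector to a worker pool can be pictured with a plain `ExecutorService`. The sketch below is a simplified stand-in rather than the WorkerService source: `WorkerPoolSketch` and its `WorkRequest` interface are hypothetical, and the "no worker threads means run on the caller" branch is an assumption made for this illustration.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical miniature of a worker service that runs work requests on a thread pool.
final class WorkerPoolSketch {
    interface WorkRequest {
        void doWork();
    }

    // null means "no worker threads": requests then run on the calling thread.
    private final ExecutorService workers;

    WorkerPoolSketch(int numThreads) {
        workers = numThreads > 0 ? Executors.newFixedThreadPool(numThreads) : null;
    }

    // Wraps the request in a Runnable and either submits it or runs it inline.
    void schedule(WorkRequest request) {
        Runnable task = request::doWork;
        if (workers != null) {
            workers.execute(task);
        } else {
            task.run();
        }
    }

    // Stops accepting work and waits for queued tasks; returns true if all finished in time.
    boolean shutdownAndWait(long timeoutMs) {
        if (workers == null) {
            return true;
        }
        workers.shutdown();
        try {
            return workers.awaitTermination(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        WorkerPoolSketch pool = new WorkerPoolSketch(2);
        pool.schedule(() ->
                System.out.println("IO handled on " + Thread.currentThread().getName()));
        pool.shutdownAndWait(1000);
    }
}
```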

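The doWork source of IOWorkRequest is as follows:

The rest of the call chain is fairly involved, so we list only the key steps and look at the source in detail at the point where the data can be read directly. The call chain from the method above is NIOServerCnxn.doIO() > readPayload() > readRequest() > ZooKeeperServer.processPacket(). The last method is where the core data is obtained, and we can modify it slightly to read the data.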

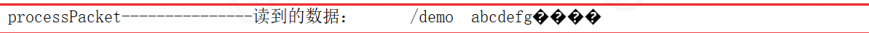

Reading the data:

Add the following test code:

//========== Test start ===========

// Output stream that collects the bytes read from the input stream

ByteArrayOutputStream os = new ByteArrayOutputStream();

// Copy the network input stream into the output stream

byte[] buffer = new byte[1024];

int len = 0;

while ((len = bais.read(buffer)) != -1) {

os.write(buffer, 0, len);

}

String result = new String(os.toByteArray(), "UTF-8");

System.out.println("processPacket--------------- data read: " + result);

//========== Test end ===========

Start the client and create a demo node with the data abcdefg:

create /demo abcdefg

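The console output is as follows:

When the test is finished, remember to comment the test code out again. We can run other create, delete, update and query operations and print RequestHeader.type to see the operation type. The operation type codes are defined in ZooDefs; the common ones are: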

int create = 1;

int delete = 2;

int exists = 3;

int getData = 4;

int setData = 5;

int getACL = 6;

int setACL = 7;

int getChildren = 8;

int sync = 9;

int ping = 11;
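When printing RequestHeader.type during debugging, a small lookup makes the numbers readable. The helper below is hypothetical (`OpCodeNameSketch` is not a ZooKeeper class) and covers only the values listed above; anything else is reported as unknown.

```java
// Hypothetical debugging helper: maps the operation codes listed above to their names.
final class OpCodeNameSketch {
    static String nameOf(int type) {
        switch (type) {
            case 1: return "create";
            case 2: return "delete";
            case 3: return "exists";
            case 4: return "getData";
            case 5: return "setData";
            case 6: return "getACL";
            case 7: return "setACL";
            case 8: return "getChildren";
            case 9: return "sync";
            case 11: return "ping";
            default: return "unknown(" + type + ")";
        }
    }

    public static void main(String[] args) {
        for (int type : new int[] {1, 4, 11, 99}) {
            System.out.println(type + " -> " + nameOf(type));
        }
    }
}
```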

3.2.4 ConnectionExpirerThread Analysis

A ConnectionExpirerThread is started in the background to clean up expired sessions. It runs an infinite loop that does the following:

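3.3 Summary of the Strengths and Weaknesses of ZK Communication

ZooKeeper uses NIO for communication by default and also supports Netty as an alternative transport. Compared with traditional IO, NIO has several clear advantages for network data transfer:

1: Traditional IO handles data transfer by creating one thread per request. When the IO stalls the thread blocks, and it is woken up once the IO resumes. Handling many connections at once therefore means instantiating many thread objects. Creating and reclaiming each thread costs resources: the JVM has to allocate a TLAB (thread-local allocation buffer) for it, initialize the TLAB and bind it to the thread, and then reclaim the TLAB when the thread ends. All of this consumes CPU.

2: NIO uses a selector to poll the IO channels, built on poll or epoll internally, and responds to IO events in an event-driven way. Only a few thread objects need to exist at any time, and reusing them makes better use of the CPU.

3: The CPU gives each thread a time slice in turn, which is how a single physical core runs multiple threads. The more threads there are, the shorter each slice or the longer the scheduling cycle, and the CPU spends much of its time on thread switching. A switch involves synchronizing the outgoing thread's data (TLAB synchronization), flushing synchronized variables to main memory, loading the incoming thread's data, and so on, all of which are expensive.

4: When there are many connections but few of them are active, NIO's event-driven model performs far better than traditional IO. NIO also provides FileChannel, which transfers data in a zero-copy fashion: unlike traditional IO, the data does not have to be copied into user space and can go from the physical device (a disk, for example) through the kernel buffer straight to the network card, which greatly improves performance.

5: NIO provides MappedByteBuffer, which maps a file directly into memory (virtual memory here, not physical memory) and can greatly increase IO throughput.

Although NIO greatly improves ZK's data transfer capability, the code has some drawbacks:

1: The communication code has a steep learning curve and requires a good grasp of NIO and multithreading.

2: Threads are used heavily, which costs more resources in exchange for better performance.

3: The data-processing call chain is complex and inner classes are used in many places, so the code structure is hard to follow, even though the style is classic.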

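4 RequestProcessor Request Handling Source Code Analysis

ZooKeeper's request handling works like a workflow; it is essentially a singly linked list. When ZooKeeper starts, each node's role is determined (leader, follower or observer), and once a role is fixed, its chain of responsibility is initialized.

4.1 RequestProcessor Structure

When a client request arrives, ZooKeeper runs it through a processing pipeline, RequestProcessor, whatever transactional operation it carries. The RequestProcessor flow is shown below:

Let us look at how the RequestProcessor chain is initialized. The source of ZooKeeperServer.setupRequestProcessors() is as follows:

It is created in these steps:

1: Create finalProcessor.

2: Create syncProcessor with finalProcessor as its next processor.

3: Start syncProcessor.

4: Create firstProcessor (a PrepRequestProcessor) with syncProcessor as its next processor.

5: Start firstProcessor.

When syncProcessor is created, finalProcessor is passed in as an argument. The source is as follows:

When firstProcessor is created, syncProcessor is passed in as an argument. The source is as follows:

Relationship between PrepRequestProcessor and SyncRequestProcessor:

PrepRequestProcessor and SyncRequestProcessor have the same structure: both are threads that extend Thread, which is why both are started during initialization.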
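The back-to-front construction of the chain can be sketched with three linked stages. This is a synchronous simplification for illustration only: the real processors run on their own threads, requests here are plain strings, and `ProcessorChainSketch`, `Stage` and `buildChain` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical miniature of a processor chain: prep -> sync -> final.
final class ProcessorChainSketch {
    interface RequestProcessor {
        void processRequest(String request);
    }

    // A stage records that it saw the request, then forwards it to the next stage, if any.
    static final class Stage implements RequestProcessor {
        private final String name;
        private final RequestProcessor next;
        private final List<String> trace;

        Stage(String name, RequestProcessor next, List<String> trace) {
            this.name = name;
            this.next = next;
            this.trace = trace;
        }

        @Override
        public void processRequest(String request) {
            trace.add(name + ":" + request);
            if (next != null) {
                next.processRequest(request);
            }
        }
    }

    // Built from the tail: the final stage first, then sync, then prep as the entry point.
    static RequestProcessor buildChain(List<String> trace) {
        RequestProcessor finalProcessor = new Stage("final", null, trace);
        RequestProcessor syncProcessor = new Stage("sync", finalProcessor, trace);
        return new Stage("prep", syncProcessor, trace);
    }

    public static void main(String[] args) {
        List<String> trace = new ArrayList<>();
        buildChain(trace).processRequest("create /demo");
        trace.forEach(System.out::println);
    }
}
```

Each stage only knows its successor, so the chain has to be built from the last processor to the first.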

4.2 PrepRequestProcessor Analysis

PrepRequestProcessor is the first processor in the chain. We now connect it to the request handling covered earlier and analyze it step by step.

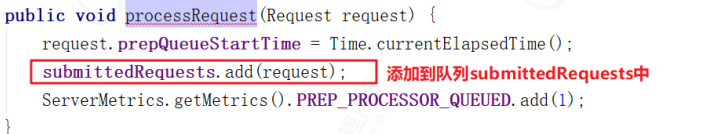

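The call chain is ZooKeeperServer.processPacket() > submitRequest() > enqueueRequest() > RequestThrottler.submitRequest(). Looking at the source of RequestThrottler.submitRequest(), we see that it adds the current request to the submittedRequests queue. The source is as follows:

RequestThrottler extends ZooKeeperCriticalThread > ZooKeeperThread > Thread, which means RequestThrottler is itself a thread. Let us see what its run method does. The source is as follows:

RequestThrottler calls ZooKeeperServer.submitRequestNow(), which in turn calls a method of firstProcessor. The source is as follows:

ZooKeeperServer.submitRequestNow() calls firstProcessor.processRequest(). The firstProcessor here is the PrepRequestProcessor set up when the processing chain was initialized, so of the three RequestProcessors, PrepRequestProcessor is invoked first.

PrepRequestProcessor.processRequest() adds the current request to the submittedRequests queue. The source is as follows:

That method does not take anything from the submittedRequests queue, so how are requests executed? PrepRequestProcessor is a thread, so execution happens in run. The source of run shows that it calls pRequest(), whose source is as follows:

It first executes pRequestHelper(), which contains the core logic of PrepRequestProcessor and mainly performs validation and filtering. When that is done, the request is handed to the next processor in the chain, that is, SyncRequestProcessor.processRequest().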
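The pattern described here, where processRequest() only enqueues and a dedicated thread drains the queue in run(), can be sketched as follows. The class `QueueingProcessorSketch` is hypothetical, requests are plain strings, and the stop sentinel is an assumption of this sketch rather than a copy of ZooKeeper's shutdown mechanism.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical miniature of a processor that queues requests and handles them on its own thread.
final class QueueingProcessorSketch extends Thread {
    private static final String STOP = "__stop__"; // sentinel used only by this sketch

    private final LinkedBlockingQueue<String> submittedRequests = new LinkedBlockingQueue<>();
    final List<String> handled = new CopyOnWriteArrayList<>();

    // Called by the producer: only enqueues, never processes.
    void processRequest(String request) {
        submittedRequests.add(request);
    }

    void shutdown() {
        submittedRequests.add(STOP);
    }

    // Consumer loop: blocks on take() until a request or the stop sentinel arrives.
    @Override
    public void run() {
        try {
            while (true) {
                String request = submittedRequests.take();
                if (STOP.equals(request)) {
                    break;
                }
                handled.add("prepared:" + request);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Waits for the consumer thread to finish; returns true if it stopped in time.
    boolean awaitStopped(long timeoutMs) {
        try {
            join(timeoutMs);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !isAlive();
    }

    public static void main(String[] args) {
        QueueingProcessorSketch processor = new QueueingProcessorSketch();
        processor.start();
        processor.processRequest("create /zkdemo");
        processor.shutdown();
        processor.awaitStopped(2000);
        System.out.println(processor.handled);
    }
}
```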

Let us see what PrepRequestProcessor.pRequestHelper() does. The source is as follows:

The source shows that PrepRequestProcessor.pRequestHelper() checks the client operation type, and that almost every type ends up calling pRequest2Txn(). Its source is:

/**

* This method will be called inside the ProcessRequestThread, which is a

* singleton, so there will be a single thread calling this code.

*

* @param type

* @param zxid

* @param request

* @param record

*/

protected void pRequest2Txn(int type, long zxid, Request request, Record record, boolean deserialize) throws KeeperException, IOException, RequestProcessorException {

if (request.getHdr() == null) {

request.setHdr(new TxnHeader(request.sessionId, request.cxid, zxid,

Time.currentWallTime(), type));

}

PrecalculatedDigest precalculatedDigest;

switch (type) {

case OpCode.create:

case OpCode.create2:

case OpCode.createTTL:

case OpCode.createContainer: {

pRequest2TxnCreate(type, request, record, deserialize);

break;

}

case OpCode.deleteContainer: {

String path = new String(request.request.array(), UTF_8);

String parentPath = getParentPathAndValidate(path);

ChangeRecord nodeRecord = getRecordForPath(path);

if (nodeRecord.childCount > 0) {

throw new KeeperException.NotEmptyException(path);

}

if (EphemeralType.get(nodeRecord.stat.getEphemeralOwner()) == EphemeralType.NORMAL) {

throw new KeeperException.BadVersionException(path);

}

// change record for the snapshot data (parent node)

ChangeRecord parentRecord = getRecordForPath(parentPath);

// record the transaction information

request.setTxn(new DeleteTxn(path));

parentRecord = parentRecord.duplicate(request.getHdr().getZxid());

parentRecord.childCount--;

// record the stat data

parentRecord.stat.setPzxid(request.getHdr().getZxid());

parentRecord.precalculatedDigest = precalculateDigest(

DigestOpCode.UPDATE, parentPath, parentRecord.data, parentRecord.stat);

addChangeRecord(parentRecord);

nodeRecord = new ChangeRecord(request.getHdr().getZxid(), path, null, -1, null);

nodeRecord.precalculatedDigest = precalculateDigest(DigestOpCode.REMOVE, path);

setTxnDigest(request, nodeRecord.precalculatedDigest);

addChangeRecord(nodeRecord);

break;

}

case OpCode.delete:

zks.sessionTracker.checkSession(request.sessionId, request.getOwner());

DeleteRequest deleteRequest = (DeleteRequest) record;

if (deserialize) {

ByteBufferInputStream.byteBuffer2Record(request.request, deleteRequest);

}

String path = deleteRequest.getPath();

String parentPath = getParentPathAndValidate(path);

ChangeRecord parentRecord = getRecordForPath(parentPath);

// permission check

zks.checkACL(request.cnxn, parentRecord.acl, ZooDefs.Perms.DELETE, request.authInfo, path, null);

ChangeRecord nodeRecord = getRecordForPath(path);

checkAndIncVersion(nodeRecord.stat.getVersion(), deleteRequest.getVersion(), path);

if (nodeRecord.childCount > 0) {

throw new KeeperException.NotEmptyException(path);

}

request.setTxn(new DeleteTxn(path));

parentRecord = parentRecord.duplicate(request.getHdr().getZxid());

parentRecord.childCount--;

parentRecord.stat.setPzxid(request.getHdr().getZxid());

parentRecord.precalculatedDigest = precalculateDigest(

DigestOpCode.UPDATE, parentPath, parentRecord.data, parentRecord.stat);

addChangeRecord(parentRecord);

nodeRecord = new ChangeRecord(request.getHdr().getZxid(), path, null, -1, null);

nodeRecord.precalculatedDigest = precalculateDigest(DigestOpCode.REMOVE, path);

setTxnDigest(request, nodeRecord.precalculatedDigest);

addChangeRecord(nodeRecord);

break;

case OpCode.setData:

zks.sessionTracker.checkSession(request.sessionId, request.getOwner());

SetDataRequest setDataRequest = (SetDataRequest) record;

if (deserialize) {

ByteBufferInputStream.byteBuffer2Record(request.request, setDataRequest);

}

path = setDataRequest.getPath();

validatePath(path, request.sessionId);

nodeRecord = getRecordForPath(path);

// permission check

zks.checkACL(request.cnxn, nodeRecord.acl, ZooDefs.Perms.WRITE, request.authInfo, path, null);

int newVersion = checkAndIncVersion(nodeRecord.stat.getVersion(), setDataRequest.getVersion(), path);

request.setTxn(new SetDataTxn(path, setDataRequest.getData(), newVersion));

nodeRecord = nodeRecord.duplicate(request.getHdr().getZxid());

nodeRecord.stat.setVersion(newVersion);

nodeRecord.stat.setMtime(request.getHdr().getTime());

nodeRecord.stat.setMzxid(zxid);

nodeRecord.data = setDataRequest.getData();

nodeRecord.precalculatedDigest = precalculateDigest(

DigestOpCode.UPDATE, path, nodeRecord.data, nodeRecord.stat);

setTxnDigest(request, nodeRecord.precalculatedDigest);

addChangeRecord(nodeRecord);

break;

case OpCode.reconfig:

if (!zks.isReconfigEnabled()) {

LOG.error("Reconfig operation requested but reconfig feature is disabled.");

throw new KeeperException.ReconfigDisabledException();

}

// permission check

if (ZooKeeperServer.skipACL) {

LOG.warn("skipACL is set, reconfig operation will skip ACL checks!");

}

zks.sessionTracker.checkSession(request.sessionId, request.getOwner());

LeaderZooKeeperServer lzks;

try {

lzks = (LeaderZooKeeperServer) zks;

} catch (ClassCastException e) {

// standalone mode - reconfiguration currently not supported

throw new KeeperException.UnimplementedException();

}

QuorumVerifier lastSeenQV = lzks.self.getLastSeenQuorumVerifier();

// check that there's no reconfig in progress

if (lastSeenQV.getVersion() != lzks.self.getQuorumVerifier().getVersion()) {

throw new KeeperException.ReconfigInProgress();

}

ReconfigRequest reconfigRequest = (ReconfigRequest) record;

long configId = reconfigRequest.getCurConfigId();

if (configId != -1 && configId != lzks.self.getLastSeenQuorumVerifier().getVersion()) {

String msg = "Reconfiguration from version "

+ configId

+ " failed -- last seen version is "

+ lzks.self.getLastSeenQuorumVerifier().getVersion();

throw new KeeperException.BadVersionException(msg);

}

String newMembers = reconfigRequest.getNewMembers();

if (newMembers != null) { //non-incremental membership change

LOG.info("Non-incremental reconfig");

// Input may be delimited by either commas or newlines so convert to common newline separated format

newMembers = newMembers.replaceAll(",", "\n");

try {

Properties props = new Properties();

props.load(new StringReader(newMembers));

request.qv = QuorumPeerConfig.parseDynamicConfig(props, lzks.self.getElectionType(), true, false);

request.qv.setVersion(request.getHdr().getZxid());

} catch (IOException | ConfigException e) {

throw new KeeperException.BadArgumentsException(e.getMessage());

}

} else { //incremental change - must be a majority quorum system

LOG.info("Incremental reconfig");

List<String> joiningServers = null;

String joiningServersString = reconfigRequest.getJoiningServers();

if (joiningServersString != null) {

joiningServers = StringUtils.split(joiningServersString, ",");

}

List<String> leavingServers = null;

String leavingServersString = reconfigRequest.getLeavingServers();

if (leavingServersString != null) {

leavingServers = StringUtils.split(leavingServersString, ",");

}

if (!(lastSeenQV instanceof QuorumMaj)) {

String msg = "Incremental reconfiguration requested but last configuration seen has a non-majority quorum system";

LOG.warn(msg);

throw new KeeperException.BadArgumentsException(msg);

}

Map<Long, QuorumServer> nextServers = new HashMap<Long, QuorumServer>(lastSeenQV.getAllMembers());

try {

if (leavingServers != null) {

for (String leaving : leavingServers) {

long sid = Long.parseLong(leaving);

nextServers.remove(sid);

}

}

if (joiningServers != null) {

for (String joiner : joiningServers) {

// joiner should have the following format: server.x = server_spec;client_spec

String[] parts = StringUtils.split(joiner, "=").toArray(new String[0]);

if (parts.length != 2) {

throw new KeeperException.BadArgumentsException("Wrong format of server string");

}

// extract server id x from first part of joiner: server.x

Long sid = Long.parseLong(parts[0].substring(parts[0].lastIndexOf('.') + 1));

QuorumServer qs = new QuorumServer(sid, parts[1]);

if (qs.clientAddr == null || qs.electionAddr == null || qs.addr == null) {

throw new KeeperException.BadArgumentsException("Wrong format of server string - each server should have 3 ports specified");

}

// check duplication of addresses and ports

for (QuorumServer nqs : nextServers.values()) {

if (qs.id == nqs.id) {

continue;

}

qs.checkAddressDuplicate(nqs);

}

nextServers.remove(qs.id);

nextServers.put(qs.id, qs);

}

}

} catch (ConfigException e) {

throw new KeeperException.BadArgumentsException("Reconfiguration failed");

}

request.qv = new QuorumMaj(nextServers);

request.qv.setVersion(request.getHdr().getZxid());

}

if (QuorumPeerConfig.isStandaloneEnabled() && request.qv.getVotingMembers().size() < 2) {

String msg = "Reconfig failed - new configuration must include at least 2 followers";

LOG.warn(msg);

throw new KeeperException.BadArgumentsException(msg);

} else if (request.qv.getVotingMembers().size() < 1) {

String msg = "Reconfig failed - new configuration must include at least 1 follower";

LOG.warn(msg);

throw new KeeperException.BadArgumentsException(msg);

}

if (!lzks.getLeader().isQuorumSynced(request.qv)) {

String msg2 = "Reconfig failed - there must be a connected and synced quorum in new configuration";

LOG.warn(msg2);

throw new KeeperException.NewConfigNoQuorum();

}

// update the snapshot data record

nodeRecord = getRecordForPath(ZooDefs.CONFIG_NODE);

// permission check

zks.checkACL(request.cnxn, nodeRecord.acl, ZooDefs.Perms.WRITE, request.authInfo, null, null);

SetDataTxn setDataTxn = new SetDataTxn(ZooDefs.CONFIG_NODE, request.qv.toString().getBytes(), -1);

// record the transaction data

request.setTxn(setDataTxn);

nodeRecord = nodeRecord.duplicate(request.getHdr().getZxid());

// record the version, the modification time and the modifying transaction id

nodeRecord.stat.setVersion(-1);

nodeRecord.stat.setMtime(request.getHdr().getTime());

nodeRecord.stat.setMzxid(zxid);

nodeRecord.data = setDataTxn.getData();

// Reconfig is currently a noop from digest computation

// perspective since config node is not covered by the digests.

nodeRecord.precalculatedDigest = precalculateDigest(

DigestOpCode.NOOP, ZooDefs.CONFIG_NODE, nodeRecord.data, nodeRecord.stat);

setTxnDigest(request, nodeRecord.precalculatedDigest);

addChangeRecord(nodeRecord);

break;

case OpCode.setACL:

zks.sessionTracker.checkSession(request.sessionId, request.getOwner());

SetACLRequest setAclRequest = (SetACLRequest) record;

if (deserialize) {

ByteBufferInputStream.byteBuffer2Record(request.request, setAclRequest);

}

path = setAclRequest.getPath();

validatePath(path, request.sessionId);

List<ACL> listACL = fixupACL(path, request.authInfo, setAclRequest.getAcl());

nodeRecord = getRecordForPath(path);

zks.checkACL(request.cnxn, nodeRecord.acl, ZooDefs.Perms.ADMIN, request.authInfo, path, listACL);

newVersion = checkAndIncVersion(nodeRecord.stat.getAversion(), setAclRequest.getVersion(), path);

request.setTxn(new SetACLTxn(path, listACL, newVersion));

nodeRecord = nodeRecord.duplicate(request.getHdr().getZxid());

nodeRecord.stat.setAversion(newVersion);

nodeRecord.precalculatedDigest = precalculateDigest(

DigestOpCode.UPDATE, path, nodeRecord.data, nodeRecord.stat);

setTxnDigest(request, nodeRecord.precalculatedDigest);

addChangeRecord(nodeRecord);

break;

case OpCode.createSession:

request.request.rewind();

int to = request.request.getInt();

request.setTxn(new CreateSessionTxn(to));

request.request.rewind();

// only add the global session tracker but not to ZKDb

zks.sessionTracker.trackSession(request.sessionId, to);

zks.setOwner(request.sessionId, request.getOwner());

break;

case OpCode.closeSession:

// We don't want to do this check since the session expiration thread

// queues up this operation without being the session owner.

// this request is the last of the session so it should be ok

//zks.sessionTracker.checkSession(request.sessionId, request.getOwner());

long startTime = Time.currentElapsedTime();

synchronized (zks.outstandingChanges) {

// need to move getEphemerals into zks.outstandingChanges

// synchronized block, otherwise there will be a race

// condition with the on flying deleteNode txn, and we'll

// delete the node again here, which is not correct

Set<String> es = zks.getZKDatabase().getEphemerals(request.sessionId);

for (ChangeRecord c : zks.outstandingChanges) {

if (c.stat == null) {

// Doing a delete

es.remove(c.path);

} else if (c.stat.getEphemeralOwner() == request.sessionId) {

es.add(c.path);

}

}

for (String path2Delete : es) {

if (digestEnabled) {

parentPath = getParentPathAndValidate(path2Delete);

parentRecord = getRecordForPath(parentPath);

parentRecord = parentRecord.duplicate(request.getHdr().getZxid());

parentRecord.stat.setPzxid(request.getHdr().getZxid());

parentRecord.precalculatedDigest = precalculateDigest(

DigestOpCode.UPDATE, parentPath, parentRecord.data, parentRecord.stat);

addChangeRecord(parentRecord);

}

nodeRecord = new ChangeRecord(

request.getHdr().getZxid(), path2Delete, null, 0, null);

nodeRecord.precalculatedDigest = precalculateDigest(

DigestOpCode.REMOVE, path2Delete);

addChangeRecord(nodeRecord);

}

if (ZooKeeperServer.isCloseSessionTxnEnabled()) {

request.setTxn(new CloseSessionTxn(new ArrayList<String>(es)));

}

zks.sessionTracker.setSessionClosing(request.sessionId);

}

ServerMetrics.getMetrics().CLOSE_SESSION_PREP_TIME.add(Time.currentElapsedTime() - startTime);

break;

case OpCode.check:

zks.sessionTracker.checkSession(request.sessionId, request.getOwner());

CheckVersionRequest checkVersionRequest = (CheckVersionRequest) record;

if (deserialize) {

ByteBufferInputStream.byteBuffer2Record(request.request, checkVersionRequest);

}

path = checkVersionRequest.getPath();

validatePath(path, request.sessionId);

nodeRecord = getRecordForPath(path);

zks.checkACL(request.cnxn, nodeRecord.acl, ZooDefs.Perms.READ, request.authInfo, path, null);

request.setTxn(new CheckVersionTxn(

path,

checkAndIncVersion(nodeRecord.stat.getVersion(), checkVersionRequest.getVersion(), path)));

break;

default:

LOG.warn("unknown type {}", type);

break;

}

// If the txn is not going to mutate anything, like createSession,

// we just set the current tree digest in it

if (request.getTxnDigest() == null && digestEnabled) {

setTxnDigest(request);

}

}

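The code above shows that pRequest2Txn() mainly performs permission checks, snapshot records and transaction records, and does not yet touch the data itself. In other words, PrepRequestProcessor handles the pre-operation permission checks and the snapshot and transaction bookkeeping.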
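One of the checks that appears repeatedly in the listing is checkAndIncVersion(...). Its body is not shown in this document, so the sketch below is an assumption about its behaviour based on how ZooKeeper versions are commonly described: an expected version of -1 skips the check, a mismatch is rejected, and the new version is the current one plus one. `VersionCheckSketch` is a hypothetical class, and IllegalStateException stands in for the KeeperException used by the real code.

```java
// Hypothetical re-creation of an optimistic version check; not copied from ZooKeeper.
final class VersionCheckSketch {
    // Returns the next version, or throws if the caller's expected version is stale.
    static int checkAndIncVersion(int currentVersion, int expectedVersion, String path) {
        // Assumption: -1 means "any version", so the comparison is skipped.
        if (expectedVersion != -1 && expectedVersion != currentVersion) {
            throw new IllegalStateException("Bad version for " + path
                    + ": expected " + expectedVersion + " but was " + currentVersion);
        }
        return currentVersion + 1;
    }

    public static void main(String[] args) {
        System.out.println("next version: " + checkAndIncVersion(3, 3, "/zkdemo"));
        System.out.println("next version: " + checkAndIncVersion(3, -1, "/zkdemo"));
        try {
            checkAndIncVersion(3, 2, "/zkdemo");
        } catch (IllegalStateException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```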

Let us debug one request to see whether the processing flow matches the analysis above.

Add a node:

create /zkdemo itheima

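The debug session looks like this:

The client request first passes through ZooKeeperServer.submitRequestNow(), which calls firstProcessor.processRequest(), where firstProcessor = PrepRequestProcessor, as shown below:

Execution then enters PrepRequestProcessor.pRequest(). After pRequestHelper() has run, the method of the next processor is invoked, where nextProcessor = SyncRequestProcessor, as the following screenshot shows: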

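4.3 SyncRequestProcessor Analysis

Having covered PrepRequestProcessor, we move on to SyncRequestProcessor. This processor persists requests to disk efficiently, and a request is not forwarded to the next processor until it has been written to disk.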

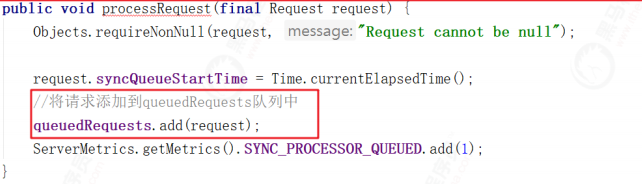

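We first look at the method that adds a request to the queue:

Like the others, SyncRequestProcessor is a thread, and the queued requests are executed from that thread. Its run method is as follows: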

@Override

public void run() {

try {

// we do this in an attempt to ensure that not all of the servers

// in the ensemble take a snapshot at the same time

// avoid all servers taking a snapshot at the same time; reset the statistics used to decide whether a snapshot is needed

resetSnapshotStats();

lastFlushTime = Time.currentElapsedTime();

while (true) {

ServerMetrics.getMetrics().SYNC_PROCESSOR_QUEUE_SIZE.add(queuedRequests.size());

long pollTime = Math.min(zks.getMaxWriteQueuePollTime(), getRemainingDelay());

// fetch a request to process

Request si = queuedRequests.poll(pollTime, TimeUnit.MILLISECONDS);

if (si == null) {

/* We timed out looking for more writes to batch, go ahead and flush immediately */

flush();

// block until a request is available

si = queuedRequests.take();

}

if (si == REQUEST_OF_DEATH) {

break;

}

long startProcessTime = Time.currentElapsedTime();

ServerMetrics.getMetrics().SYNC_PROCESSOR_QUEUE_TIME.add(startProcessTime - si.syncQueueStartTime);

// track the number of records written to the log

if (!si.isThrottled() && zks.getZKDatabase().append(si)) {

// shouldSnapshot(): snapshot condition, comparing the log count (logCount) and log size (logSize) against snapCount, with a random offset

if (shouldSnapshot()) {

// reset the statistics used to decide whether a snapshot is needed

resetSnapshotStats();

// roll the log, resetting the txn count since the last rollLog

zks.getZKDatabase().rollLog();

// take a snapshot

if (!snapThreadMutex.tryAcquire()) {

LOG.warn("Too busy to snap, skipping");

} else {

new ZooKeeperThread("Snapshot Thread") {

// the snapshot is saved on a separate thread

public void run() {

try {

// save the snapshot data

zks.takeSnapshot();

} catch (Exception e) {

LOG.warn("Unexpected exception", e);

} finally {

snapThreadMutex.release();

}

}

}.start();

}

}

} else if (toFlush.isEmpty()) {

// optimization for read heavy workloads

// iff this is a read or a throttled request(which doesn't need to be written to the disk),

// and there are no pending flushes (writes), then just pass this to the next processor

if (nextProcessor != null) {

nextProcessor.processRequest(si);

if (nextProcessor instanceof Flushable) {

((Flushable) nextProcessor).flush();

}

}

continue;

}

// add the request to toFlush, the queue of transactions that have been written and are waiting to be flushed to disk

toFlush.add(si);

if (shouldFlush()) {

// commit the data

flush();

}

ServerMetrics.getMetrics().SYNC_PROCESS_TIME.add(Time.currentElapsedTime() - startProcessTime);

}

} catch (Throwable t) {

handleException(this.getName(), t);

}

LOG.info("SyncRequestProcessor exited!");

}

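run takes a request from the queuedRequests queue; if none is available it blocks until one arrives, and only then continues. The request is appended to the transaction log, and when shouldSnapshot() says so, a Snapshot Thread is started to write a snapshot of the data to disk. Finally flush() is called to commit the data. The source of flush() is as follows:

flush() commits the data and then hands the requests to the next processor in the chain, which is FinalRequestProcessor.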
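The batching idea behind toFlush and flush() can be shown in miniature: requests are appended, held back, and only forwarded after a commit that covers the whole batch. This is a simplified sketch, not the SyncRequestProcessor source; `BatchFlushSketch` is hypothetical, the in-memory `log` list stands in for the transaction log on disk, and the flush trigger is reduced to a fixed batch size.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

// Hypothetical miniature of "append, batch, commit once, then forward".
final class BatchFlushSketch {
    private final Queue<String> toFlush = new ArrayDeque<>();
    private final int maxBatchSize;

    final List<String> log = new ArrayList<>();       // stands in for the transaction log
    final List<String> forwarded = new ArrayList<>(); // what the next processor received
    int commits = 0;                                  // number of simulated disk commits

    BatchFlushSketch(int maxBatchSize) {
        this.maxBatchSize = maxBatchSize;
    }

    // Appends the request to the log and holds it back until the batch is flushed.
    void append(String request) {
        log.add(request);
        toFlush.add(request);
        if (toFlush.size() >= maxBatchSize) {
            flush();
        }
    }

    // One commit covers the whole batch; only then are the requests passed on.
    void flush() {
        if (toFlush.isEmpty()) {
            return;
        }
        commits++;
        while (!toFlush.isEmpty()) {
            forwarded.add(toFlush.remove());
        }
    }

    public static void main(String[] args) {
        BatchFlushSketch sync = new BatchFlushSketch(3);
        for (String request : new String[] {"a", "b", "c", "d"}) {
            sync.append(request);
        }
        sync.flush();
        System.out.println("commits=" + sync.commits + " forwarded=" + sync.forwarded);
    }
}
```

Committing once per batch instead of once per request is what keeps the disk from becoming the bottleneck under write-heavy load.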

4.4 FinalRequestProcessor Analysis

After SyncRequestProcessor, we turn to the last processor in the chain, FinalRequestProcessor. This processor is mainly responsible for returning the Response:

public void processRequest(Request request) {

LOG.debug("Processing request:: {}", request);

if (LOG.isTraceEnabled()) {

long traceMask = ZooTrace.CLIENT_REQUEST_TRACE_MASK;

if (request.type == OpCode.ping) {

traceMask = ZooTrace.SERVER_PING_TRACE_MASK;

}

ZooTrace.logRequest(LOG, traceMask, 'E', request, "");

}

ProcessTxnResult rc = null;

if (!request.isThrottled()) {

rc = applyRequest(request);

}

if (request.cnxn == null) {

return;

}

ServerCnxn cnxn = request.cnxn;

long lastZxid = zks.getZKDatabase().getDataTreeLastProcessedZxid();

String lastOp = "NA";

// Notify ZooKeeperServer that the request has finished so that it can

// update any request accounting/throttling limits

zks.decInProcess();

zks.requestFinished(request);

Code err = Code.OK;

Record rsp = null;

String path = null;

int responseSize = 0;

try {

if (request.getHdr() != null && request.getHdr().getType() == OpCode.error) {

AuditHelper.addAuditLog(request, rc, true);

/*

* When local session upgrading is disabled, leader will

* reject the ephemeral node creation due to session expire.

* However, if this is the follower that issue the request,

* it will have the correct error code, so we should use that

* and report to user

*/

if (request.getException() != null) {

throw request.getException();

} else {

throw KeeperException.create(KeeperException.Code.get(((ErrorTxn) request.getTxn()).getErr()));

}

}

KeeperException ke = request.getException();

if (ke instanceof SessionMovedException) {

throw ke;

}

if (ke != null && request.type != OpCode.multi) {

throw ke;

}

LOG.debug("{}", request);

if (request.isStale()) {

ServerMetrics.getMetrics().STALE_REPLIES.add(1);

}

if (request.isThrottled()) {

throw KeeperException.create(Code.THROTTLEDOP);

}

AuditHelper.addAuditLog(request, rc);

switch (request.type) {

case OpCode.ping: {

lastOp = "PING";

// update the response statistics

updateStats(request, lastOp, lastZxid);

// send the response

responseSize = cnxn.sendResponse(new ReplyHeader(ClientCnxn.PING_XID, lastZxid, 0), null, "response");

return;

}

case OpCode.createSession: {

lastOp = "SESS";

// update the response statistics

updateStats(request, lastOp, lastZxid);

// send the response and finish session initialization

zks.finishSessionInit(request.cnxn, true);

return;

}

case OpCode.multi: {

// Multi-operation (a batch of sub-operations)

lastOp = "MULT";

rsp = new MultiResponse();

// Iterate over the result of each sub-operation

for (ProcessTxnResult subTxnResult : rc.multiResult) {

OpResult subResult;

switch (subTxnResult.type) {

case OpCode.check:

// Check

subResult = new CheckResult();

break;

case OpCode.create:

// Create

subResult = new CreateResult(subTxnResult.path);

break;

case OpCode.create2:

case OpCode.createTTL:

case OpCode.createContainer:

subResult = new CreateResult(subTxnResult.path, subTxnResult.stat);

break;

case OpCode.delete:

case OpCode.deleteContainer:

// Delete

subResult = new DeleteResult();

break;

case OpCode.setData:

// Set data

subResult = new SetDataResult(subTxnResult.stat);

break;

case OpCode.error:

// Error

subResult = new ErrorResult(subTxnResult.err);

if (subTxnResult.err == Code.SESSIONMOVED.intValue()) {

throw new SessionMovedException();

}

break;

default:

throw new IOException("Invalid type of op");

}

// Add the sub-result to the multi response

((MultiResponse) rsp).add(subResult);

}

break;

}

case OpCode.multiRead: {

// Multi-read operation; sub-results are collected the same way as for multi above

lastOp = "MLTR";

MultiOperationRecord multiReadRecord = new MultiOperationRecord();

ByteBufferInputStream.byteBuffer2Record(request.request, multiReadRecord);

rsp = new MultiResponse();

OpResult subResult;

for (Op readOp : multiReadRecord) {

try {

Record rec;

switch (readOp.getType()) {

case OpCode.getChildren:

rec = handleGetChildrenRequest(readOp.toRequestRecord(), cnxn, request.authInfo);

subResult = new GetChildrenResult(((GetChildrenResponse) rec).getChildren());

break;

case OpCode.getData:

rec = handleGetDataRequest(readOp.toRequestRecord(), cnxn, request.authInfo);

GetDataResponse gdr = (GetDataResponse) rec;

subResult = new GetDataResult(gdr.getData(), gdr.getStat());

break;

default:

throw new IOException("Invalid type of readOp");

}

} catch (KeeperException e) {

subResult = new ErrorResult(e.code().intValue());

}

// Add the sub-result to the multi response

((MultiResponse) rsp).add(subResult);

}

break;

}

case OpCode.create: {

lastOp = "CREA";

rsp = new CreateResponse(rc.path);

err = Code.get(rc.err);

requestPathMetricsCollector.registerRequest(request.type, rc.path);

break;

}

case OpCode.create2:

case OpCode.createTTL:

case OpCode.createContainer: {

lastOp = "CREA";

rsp = new Create2Response(rc.path, rc.stat);

err = Code.get(rc.err);

requestPathMetricsCollector.registerRequest(request.type, rc.path);

break;

}

case OpCode.delete:

case OpCode.deleteContainer: {

lastOp = "DELE";

err = Code.get(rc.err);

requestPathMetricsCollector.registerRequest(request.type, rc.path);

break;

}

case OpCode.setData: {

lastOp = "SETD";

rsp = new SetDataResponse(rc.stat);

err = Code.get(rc.err);

requestPathMetricsCollector.registerRequest(request.type, rc.path);

break;

}

case OpCode.reconfig: {

lastOp = "RECO";

rsp = new GetDataResponse(

((QuorumZooKeeperServer) zks).self.getQuorumVerifier().toString().getBytes(UTF_8),

rc.stat);

err = Code.get(rc.err);

break;

}

case OpCode.setACL: {

lastOp = "SETA";

rsp = new SetACLResponse(rc.stat);

err = Code.get(rc.err);

requestPathMetricsCollector.registerRequest(request.type, rc.path);

break;

}

case OpCode.closeSession: {

lastOp = "CLOS";

err = Code.get(rc.err);

break;

}

case OpCode.sync: {

lastOp = "SYNC";

SyncRequest syncRequest = new SyncRequest();

ByteBufferInputStream.byteBuffer2Record(request.request, syncRequest);

rsp = new SyncResponse(syncRequest.getPath());

requestPathMetricsCollector.registerRequest(request.type, syncRequest.getPath());

break;

}

case OpCode.check: {

lastOp = "CHEC";

rsp = new SetDataResponse(rc.stat);

err = Code.get(rc.err);

break;

}

case OpCode.exists: {

lastOp = "EXIS";

// TODO we need to figure out the security requirement for this!

ExistsRequest existsRequest = new ExistsRequest();

ByteBufferInputStream.byteBuffer2Record(request.request, existsRequest);

path = existsRequest.getPath();

if (path.indexOf('\0') != -1) {

throw new KeeperException.BadArgumentsException();

}

Stat stat = zks.getZKDatabase().statNode(path, existsRequest.getWatch() ? cnxn : null);

rsp = new ExistsResponse(stat);

requestPathMetricsCollector.registerRequest(request.type, path);

break;

}

case OpCode.getData: {

lastOp = "GETD";

GetDataRequest getDataRequest = new GetDataRequest();

ByteBufferInputStream.byteBuffer2Record(request.request, getDataRequest);

path = getDataRequest.getPath();

rsp = handleGetDataRequest(getDataRequest, cnxn, request.authInfo);

requestPathMetricsCollector.registerRequest(request.type, path);

break;

}

case OpCode.setWatches: {

lastOp = "SETW";

SetWatches setWatches = new SetWatches();

// TODO we really should not need this

request.request.rewind();

ByteBufferInputStream.byteBuffer2Record(request.request, setWatches);

long relativeZxid = setWatches.getRelativeZxid();

zks.getZKDatabase()

.setWatches(

relativeZxid,

setWatches.getDataWatches(),

setWatches.getExistWatches(),

setWatches.getChildWatches(),

Collections.emptyList(),

Collections.emptyList(),

cnxn);

break;

}

case OpCode.setWatches2: {

lastOp = "STW2";

SetWatches2 setWatches = new SetWatches2();

// TODO we really should not need this

request.request.rewind();

ByteBufferInputStream.byteBuffer2Record(request.request, setWatches);

long relativeZxid = setWatches.getRelativeZxid();

zks.getZKDatabase().setWatches(relativeZxid,

setWatches.getDataWatches(),

setWatches.getExistWatches(),

setWatches.getChildWatches(),

setWatches.getPersistentWatches(),

setWatches.getPersistentRecursiveWatches(),

cnxn);

break;

}

case OpCode.addWatch: {

lastOp = "ADDW";

AddWatchRequest addWatcherRequest = new AddWatchRequest();

ByteBufferInputStream.byteBuffer2Record(request.request,

addWatcherRequest);

zks.getZKDatabase().addWatch(addWatcherRequest.getPath(), cnxn, addWatcherRequest.getMode());

rsp = new ErrorResponse(0);

break;

}

case OpCode.getACL: {

lastOp = "GETA";

GetACLRequest getACLRequest = new GetACLRequest();

ByteBufferInputStream.byteBuffer2Record(request.request, getACLRequest);

path = getACLRequest.getPath();

DataNode n = zks.getZKDatabase().getNode(path);

if (n == null) {

throw new KeeperException.NoNodeException();

}

zks.checkACL(

request.cnxn,

zks.getZKDatabase().aclForNode(n),

ZooDefs.Perms.READ | ZooDefs.Perms.ADMIN, request.authInfo, path,

null);

Stat stat = new Stat();

List<ACL> acl = zks.getZKDatabase().getACL(path, stat);

requestPathMetricsCollector.registerRequest(request.type, getACLRequest.getPath());

try {

zks.checkACL(

request.cnxn,

zks.getZKDatabase().aclForNode(n),

ZooDefs.Perms.ADMIN,

request.authInfo,

path,

null);

rsp = new GetACLResponse(acl, stat);

} catch (KeeperException.NoAuthException e) {

List<ACL> acl1 = new ArrayList<ACL>(acl.size());

for (ACL a : acl) {

if ("digest".equals(a.getId().getScheme())) {

Id id = a.getId();

Id id1 = new Id(id.getScheme(), id.getId().replaceAll(":.*", ":x"));

acl1.add(new ACL(a.getPerms(), id1));

} else {

acl1.add(a);

}

}

rsp = new GetACLResponse(acl1, stat);

}

break;

}

case OpCode.getChildren: {

lastOp = "GETC";

GetChildrenRequest getChildrenRequest = new GetChildrenRequest();

ByteBufferInputStream.byteBuffer2Record(request.request, getChildrenRequest);

path = getChildrenRequest.getPath();

rsp = handleGetChildrenRequest(getChildrenRequest, cnxn, request.authInfo);

requestPathMetricsCollector.registerRequest(request.type, path);

break;

}

case OpCode.getAllChildrenNumber: {

lastOp = "GETACN";

GetAllChildrenNumberRequest getAllChildrenNumberRequest = new GetAllChildrenNumberRequest();

ByteBufferInputStream.byteBuffer2Record(request.request, getAllChildrenNumberRequest);

path = getAllChildrenNumberRequest.getPath();

DataNode n = zks.getZKDatabase().getNode(path);

if (n == null) {

throw new KeeperException.NoNodeException();

}

zks.checkACL(

request.cnxn,

zks.getZKDatabase().aclForNode(n),

ZooDefs.Perms.READ,

request.authInfo,

path,

null);

int number = zks.getZKDatabase().getAllChildrenNumber(path);

rsp = new GetAllChildrenNumberResponse(number);

break;

}

case OpCode.getChildren2: {

lastOp = "GETC";

GetChildren2Request getChildren2Request = new GetChildren2Request();

ByteBufferInputStream.byteBuffer2Record(request.request, getChildren2Request);

Stat stat = new Stat();

path = getChildren2Request.getPath();

DataNode n = zks.getZKDatabase().getNode(path);

if (n == null) {

throw new KeeperException.NoNodeException();

}

zks.checkACL(

request.cnxn,

zks.getZKDatabase().aclForNode(n),

ZooDefs.Perms.READ,

request.authInfo, path,

null);

List<String> children = zks.getZKDatabase()

.getChildren(path, stat, getChildren2Request.getWatch() ? cnxn : null);

rsp = new GetChildren2Response(children, stat);

requestPathMetricsCollector.registerRequest(request.type, path);

break;

}

case OpCode.checkWatches: {

lastOp = "CHKW";

CheckWatchesRequest checkWatches = new CheckWatchesRequest();

ByteBufferInputStream.byteBuffer2Record(request.request, checkWatches);

WatcherType type = WatcherType.fromInt(checkWatches.getType());

path = checkWatches.getPath();

boolean containsWatcher = zks.getZKDatabase().containsWatcher(path, type, cnxn);

if (!containsWatcher) {

String msg = String.format(Locale.ENGLISH, "%s (type: %s)", path, type);

throw new KeeperException.NoWatcherException(msg);

}

requestPathMetricsCollector.registerRequest(request.type, checkWatches.getPath());

break;

}

case OpCode.removeWatches: {

lastOp = "REMW";

RemoveWatchesRequest removeWatches = new RemoveWatchesRequest();

ByteBufferInputStream.byteBuffer2Record(request.request, removeWatches);

WatcherType type = WatcherType.fromInt(removeWatches.getType());

path = removeWatches.getPath();

boolean removed = zks.getZKDatabase().removeWatch(path, type, cnxn);

if (!removed) {

String msg = String.format(Locale.ENGLISH, "%s (type: %s)", path, type);

throw new KeeperException.NoWatcherException(msg);

}

requestPathMetricsCollector.registerRequest(request.type, removeWatches.getPath());

break;

}

case OpCode.whoAmI: {

lastOp = "HOMI";

rsp = new WhoAmIResponse(AuthUtil.getClientInfos(request.authInfo));

break;

}

case OpCode.getEphemerals: {

lastOp = "GETE";

GetEphemeralsRequest getEphemerals = new GetEphemeralsRequest();

ByteBufferInputStream.byteBuffer2Record(request.request, getEphemerals);

String prefixPath = getEphemerals.getPrefixPath();

Set<String> allEphems = zks.getZKDatabase().getDataTree().getEphemerals(request.sessionId);

List<String> ephemerals = new ArrayList<>();

if (prefixPath == null || prefixPath.trim().isEmpty() || "/".equals(prefixPath.trim())) {

ephemerals.addAll(allEphems);

} else {

for (String p : allEphems) {

if (p.startsWith(prefixPath)) {

ephemerals.add(p);

}

}

}

rsp = new GetEphemeralsResponse(ephemerals);

break;

}

}

} catch (SessionMovedException e) {

// session moved is a connection level error, we need to tear

// down the connection otw ZOOKEEPER-710 might happen

// ie client on slow follower starts to renew session, fails

// before this completes, then tries the fast follower (leader)

// and is successful, however the initial renew is then

// successfully fwd/processed by the leader and as a result

// the client and leader disagree on where the client is most

// recently attached (and therefore invalid SESSION MOVED generated)

cnxn.sendCloseSession();

return;

} catch (KeeperException e) {

err = e.code();

} catch (Exception e) {

// log at error level as we are returning a marshalling

// error to the user

LOG.error("Failed to process {}", request, e);

StringBuilder sb = new StringBuilder();

ByteBuffer bb = request.request;

bb.rewind();

while (bb.hasRemaining()) {

sb.append(Integer.toHexString(bb.get() & 0xff));

}

LOG.error("Dumping request buffer: 0x{}", sb.toString());

err = Code.MARSHALLINGERROR;

}

ReplyHeader hdr = new ReplyHeader(request.cxid, lastZxid, err.intValue());

updateStats(request, lastOp, lastZxid);

try {

if (path == null || rsp == null) {

responseSize = cnxn.sendResponse(hdr, rsp, "response");

} else {

int opCode = request.type;

Stat stat = null;

// Serialized read and get children responses could be cached by the connection

// object. Cache entries are identified by their path and last modified zxid,

// so these values are passed along with the response.

switch (opCode) {

case OpCode.getData : {

GetDataResponse getDataResponse = (GetDataResponse) rsp;

stat = getDataResponse.getStat();

responseSize = cnxn.sendResponse(hdr, rsp, "response", path, stat, opCode);

break;

}

case OpCode.getChildren2 : {

GetChildren2Response getChildren2Response = (GetChildren2Response) rsp;

stat = getChildren2Response.getStat();

responseSize = cnxn.sendResponse(hdr, rsp, "response", path, stat, opCode);

break;

}

default:

responseSize = cnxn.sendResponse(hdr, rsp, "response");

}

}

if (request.type == OpCode.closeSession) {

cnxn.sendCloseSession();

}

} catch (IOException e) {

LOG.error("FIXMSG", e);

} finally {

ServerMetrics.getMetrics().RESPONSE_BYTES.add(responseSize);

}

}
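One detail in the getACL branch above is easy to miss: when the caller has READ but not ADMIN permission, the server still returns the ACL list, but for every entry with the `digest` scheme it replaces everything after the first colon with `x`, so the password digest is not exposed. The following is a minimal standalone sketch of that masking step. The class name `DigestMaskSketch`, the method `maskDigest`, and the sample ids are hypothetical; only the `replaceAll(":.*", ":x")` call is taken from the code above.

```java
public class DigestMaskSketch {

    // Hypothetical helper: mirrors the masking done in the getACL branch.
    // For the "digest" scheme the id has the form "user:digest";
    // everything after the first colon is replaced with "x".
    static String maskDigest(String scheme, String id) {
        if ("digest".equals(scheme)) {
            return id.replaceAll(":.*", ":x");
        }
        // Other schemes (ip, world, ...) are returned unchanged.
        return id;
    }

    public static void main(String[] args) {
        System.out.println(maskDigest("digest", "alice:abc123"));
        System.out.println(maskDigest("ip", "127.0.0.1"));
    }
}
```

With the sample ids used here, the digest entry keeps only the user name plus `:x`, while the `ip` entry passes through untouched.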

4.5 Pros and Cons of the ZK Request-Processing Chain

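ZooKeeper's request-processing chain follows the idea behind AOP, in that each request passes through a series of processing steps in order, but it does not use any AOP technology to do so. To improve throughput, the chain still relies heavily on multithreading. Because the chain fixes the order in which the steps run, the request-handling flow is clearer and easier to understand; the heavy mixing-in of multithreading, however, raises the learning cost.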
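The trade-off described above can be sketched in a few lines of Java. This is only an illustration, not ZooKeeper's actual code: the `Processor` interface, the `QueuedStage` class, and the stage tags `prep` and `sync` are hypothetical stand-ins. Each stage holds a reference to the next one (the fixed order), and each queued stage runs on its own thread and hands requests over through a queue (the multithreading).

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

public class ProcessorChainSketch {

    /** Hypothetical stand-in for a request processor in the chain. */
    interface Processor {
        void process(String request) throws InterruptedException;
    }

    /** A stage that queues requests and forwards them from its own thread. */
    static final class QueuedStage extends Thread implements Processor {
        private final BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        private final String tag;
        private final Processor next;

        QueuedStage(String tag, Processor next) {
            super("QueuedStage-" + tag);
            this.tag = tag;
            this.next = next;
            setDaemon(true);
        }

        @Override
        public void process(String request) {
            // The caller returns immediately; the work happens in run().
            queue.add(request);
        }

        @Override
        public void run() {
            try {
                while (true) {
                    // Take requests in FIFO order, tag them, pass them on.
                    next.process(queue.take() + ">" + tag);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }

    /** Builds prep -> sync -> final, pushes the requests through, returns what the last stage saw. */
    static List<String> runChain(List<String> requests) throws InterruptedException {
        CountDownLatch done = new CountDownLatch(requests.size());
        List<String> handled = Collections.synchronizedList(new ArrayList<>());
        // Terminal stage: runs on the thread of the stage before it.
        Processor last = r -> {
            handled.add(r + ">final");
            done.countDown();
        };
        QueuedStage sync = new QueuedStage("sync", last);
        QueuedStage prep = new QueuedStage("prep", sync);
        sync.start();
        prep.start();
        for (String r : requests) {
            prep.process(r);
        }
        done.await(5, TimeUnit.SECONDS);
        prep.interrupt();
        sync.interrupt();
        return new ArrayList<>(handled);
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(runChain(java.util.Arrays.asList("create", "getData")));
    }
}
```

The chain itself is easy to read, since the order is fixed when the stages are constructed. The cost is that a single request crosses several threads before a response is produced, which is exactly what makes the real code harder to trace in a debugger.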
