Switching to ClickHouse Keeper

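1. Standalone deployment (clickhouse-server and ClickHouse Keeper run as two separate processes)

1. Check whether the ports are already in use

netstat -anp | grep 9181   # Keeper client TCP port (9181 is the default; check whatever you set in <tcp_port>, e.g. 2181 in the config below)

netstat -anp | grep 9444   # Raft protocol port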
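As an alternative to netstat, which may not be installed on minimal systems, the check can be scripted. The following is a minimal Python sketch, assuming the ports used in this article (9181 or 2181 for clients, 9444 for Raft). It tries to bind each port on all interfaces and treats a failed bind as "in use". The function name port_in_use is illustrative.

```python
import socket

def port_in_use(port: int, host: str = "0.0.0.0") -> bool:
    """Return True if a TCP socket cannot be bound to (host, port).

    A failed bind() means some other socket already holds the port
    (or it is otherwise unavailable, e.g. still lingering in TIME_WAIT).
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            return True
        return False

if __name__ == "__main__":
    # Ports from this article: Keeper client ports and the Raft port.
    for port in (9181, 2181, 9444):
        state = "in use" if port_in_use(port) else "free"
        print(f"{port}: {state}")
```

Binding is a stricter test than grepping netstat output, because a pattern like "grep 9181" also matches unrelated lines that merely contain those digits.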

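2. Prepare the configuration files

Keeper configuration file (this is zk1's configuration; in zk2's configuration <server_id> must be changed to 2, and in zk3's to 3):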

<clickhouse>
    <listen_host>0.0.0.0</listen_host>
    <keeper_server>
        <tcp_port>2181</tcp_port>
        <server_id>1</server_id>
        <log_storage_path>/var/lib/clickhouse/coordination/log</log_storage_path>
        <snapshot_storage_path>/var/lib/clickhouse/coordination/snapshots</snapshot_storage_path>
        <coordination_settings>
            <operation_timeout_ms>10000</operation_timeout_ms>
            <session_timeout_ms>30000</session_timeout_ms>
            <raft_logs_level>trace</raft_logs_level>
        </coordination_settings>
        <raft_configuration>
            <server>
                <id>1</id>
                <hostname>zk1</hostname>
                <port>9444</port>
            </server>
            <server>
                <id>2</id>
                <hostname>zk2</hostname>
                <port>9444</port>
            </server>
            <server>
                <id>3</id>
                <hostname>zk3</hostname>
                <port>9444</port>
            </server>
        </raft_configuration>
    </keeper_server>
</clickhouse>
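Because the three Keeper configs differ only in <server_id>, they can be generated from one template rather than edited by hand. This is a minimal sketch using Python's standard xml.etree.ElementTree. It assumes the zk1 file above is used as the template. The function name is hypothetical. Note that ElementTree's default parser drops XML comments from the template.

```python
import xml.etree.ElementTree as ET

def render_keeper_config(template_xml: str, server_id: int) -> str:
    """Return the Keeper config with <keeper_server>/<server_id> set to server_id.

    Everything else (ports, storage paths, <raft_configuration>) is copied
    unchanged, so every node shares the same raft member list.
    """
    root = ET.fromstring(template_xml)
    node = root.find("./keeper_server/server_id")
    if node is None:
        raise ValueError("template has no <keeper_server>/<server_id> element")
    node.text = str(server_id)
    return ET.tostring(root, encoding="unicode")
```

For example, render_keeper_config(Path("keeper01/config.xml").read_text(), 2) would produce zk2's file. The keeper0N directory names follow the docker-compose appendix and are otherwise arbitrary.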

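Create the log and snapshot directories (they are not created automatically):

mkdir -p /var/lib/clickhouse/coordination/log /var/lib/clickhouse/coordination/snapshots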

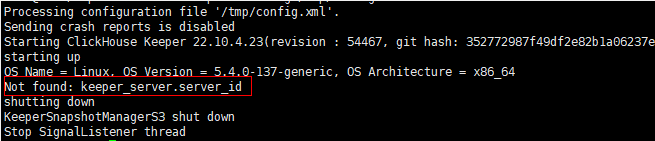

Even with only one node, <raft_configuration> must still be configured; otherwise Keeper reports an error.
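Since a missing <raft_configuration> causes an error even on a single node, the raft section can be checked before the process is started. The following is a minimal sketch using xml.etree.ElementTree. The function name and the specific checks (unique ids, unique hostname/port pairs, and server_id appearing among the ids) are assumptions about what is worth catching. They are not Keeper's own validation logic.

```python
import xml.etree.ElementTree as ET

def raft_config_problems(config_xml: str) -> list[str]:
    """Return problems found in the Keeper raft setup; [] means none found."""
    root = ET.fromstring(config_xml)
    keeper = root.find("keeper_server")
    if keeper is None:
        return ["missing <keeper_server>"]
    raft = keeper.find("raft_configuration")
    if raft is None:
        # Required even when the cluster has a single Keeper node.
        return ["missing <raft_configuration>"]
    servers = raft.findall("server")
    if not servers:
        return ["<raft_configuration> has no <server> entries"]

    problems = []
    ids = [s.findtext("id", "").strip() for s in servers]
    dup_ids = sorted({i for i in ids if ids.count(i) > 1})
    if dup_ids:
        problems.append(f"duplicate raft server ids: {dup_ids}")
    endpoints = [(s.findtext("hostname", "").strip(), s.findtext("port", "").strip())
                 for s in servers]
    dup_endpoints = sorted({e for e in endpoints if endpoints.count(e) > 1})
    if dup_endpoints:
        problems.append(f"duplicate raft endpoints: {dup_endpoints}")
    # This node's own id must be one of the raft members.
    server_id = keeper.findtext("server_id", "").strip()
    if server_id not in ids:
        problems.append(f"server_id {server_id!r} is not among raft ids {ids}")
    return problems
```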

ClickHouse server configuration file:

<zookeeper>
    <node>
        <host>zk1</host>
        <port>2181</port>
    </node>
    <node>
        <host>zk2</host>
        <port>2181</port>
    </node>
    <node>
        <host>zk3</host>
        <port>2181</port>
    </node>
</zookeeper>
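The <zookeeper> section is just a list of (host, port) pairs, so generating it from one list helps avoid copy-paste slips in the port numbers. Here is a minimal sketch with xml.etree.ElementTree. The function name is hypothetical, and ET.indent requires Python 3.9 or later.

```python
import xml.etree.ElementTree as ET

def zookeeper_section(nodes: list[tuple[str, int]]) -> str:
    """Build the <zookeeper> block of the ClickHouse server config."""
    zk = ET.Element("zookeeper")
    for host, port in nodes:
        node = ET.SubElement(zk, "node")
        ET.SubElement(node, "host").text = host
        ET.SubElement(node, "port").text = str(port)
    ET.indent(zk)  # pretty-print with two-space indentation
    return ET.tostring(zk, encoding="unicode")

if __name__ == "__main__":
    # Every Keeper node uses <tcp_port>2181</tcp_port> per the Keeper config above.
    print(zookeeper_section([("zk1", 2181), ("zk2", 2181), ("zk3", 2181)]))
```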

3. Start Keeper

clickhouse-keeper --config /etc/your_path_to_config/config.xml

If you don't have the clickhouse-keeper symlink, you can create it, or pass keeper as an argument to clickhouse:

clickhouse keeper --config /etc/your_path_to_config/config.xml
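After the process starts, a quick liveness check is the ZooKeeper-style four-letter command ruok. Keeper answers it with imok on its client port, provided ruok is in four_letter_word_white_list (it is in the default list). From a shell, this is echo ruok | nc localhost 2181, assuming the client port 2181 from the config above. Below is a minimal Python equivalent. The function name is illustrative.

```python
import socket

def keeper_is_ok(host: str, port: int, timeout: float = 3.0) -> bool:
    """Send the 'ruok' four-letter command; a healthy Keeper replies 'imok'."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(b"ruok")
            reply = b""
            # Read until 4 bytes arrive or the server closes the connection.
            while len(reply) < 4:
                chunk = sock.recv(4 - len(reply))
                if not chunk:
                    break
                reply += chunk
    except OSError:  # refused, unreachable, or timed out (socket.timeout is an OSError)
        return False
    return reply == b"imok"
```

Usage: keeper_is_ok("zk1", 2181) for each node right after starting it.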

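2. How to migrate from ZooKeeper to ClickHouse Keeper

Seamless migration from ZooKeeper to ClickHouse Keeper is not possible: you have to stop your ZooKeeper cluster, convert the data, and then start ClickHouse Keeper. The clickhouse-keeper-converter tool converts ZooKeeper logs and snapshots into a ClickHouse Keeper snapshot. It only works with ZooKeeper > 3.4. Migration steps: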

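- Stop all ZooKeeper nodes.

- Optional but recommended: find the ZooKeeper leader node, then start it and stop it again. This forces ZooKeeper to create a consistent snapshot.

To find the leader, run the following on each ZooKeeper node before stopping the cluster; the leader reports Mode: leader:

./zkServer.sh status

- Run clickhouse-keeper-converter on the ZooKeeper leader node, for example:

clickhouse-keeper-converter --zookeeper-logs-dir /tmp/zookeeper/datalog/version-2 --zookeeper-snapshots-dir /tmp/zookeeper/data/version-2 --output-dir /tmp/keeper/snapshots

Keeper snapshot location (snapshot_storage_path in the Keeper config above):

/var/lib/clickhouse/coordination/snapshots

- Copy the snapshot into the snapshot directory of every ClickHouse server node that has Keeper configured (or of every standalone ClickHouse Keeper node), then start ClickHouse Keeper instead of ZooKeeper. The snapshot must be present on all nodes. Otherwise, an empty node may start faster and become the leader.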
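The steps above can be scripted: finding the leader, running the conversion, and checking that every node has the snapshot. This is a minimal sketch under several assumptions. First, zkServer.sh status prints a line such as "Mode: leader". Second, the converter flags are exactly those in the example command above. Third, the Keeper snapshot directories of all nodes are reachable as local paths, for example after copying them with scp or through mounts. All function names are illustrative.

```python
import hashlib
import subprocess
from pathlib import Path
from typing import Optional

def zk_mode(status_output: str) -> Optional[str]:
    """Return the role reported by `zkServer.sh status` (e.g. 'leader'), or None."""
    for line in status_output.splitlines():
        line = line.strip()
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None

def converter_argv(logs_dir: str, snapshots_dir: str, output_dir: str) -> list[str]:
    """Argument list for the clickhouse-keeper-converter call shown above."""
    return [
        "clickhouse-keeper-converter",
        "--zookeeper-logs-dir", logs_dir,
        "--zookeeper-snapshots-dir", snapshots_dir,
        "--output-dir", output_dir,
    ]

def run_converter(logs_dir: str, snapshots_dir: str, output_dir: str) -> None:
    """Run the converter on the ZooKeeper leader; raises CalledProcessError on failure."""
    Path(output_dir).mkdir(parents=True, exist_ok=True)  # in case it does not exist yet
    subprocess.run(converter_argv(logs_dir, snapshots_dir, output_dir), check=True)

def dir_digest(path: Path) -> dict[str, str]:
    """Map each regular file in a snapshot directory to its SHA-256 digest."""
    return {f.name: hashlib.sha256(f.read_bytes()).hexdigest()
            for f in sorted(path.iterdir()) if f.is_file()}

def snapshots_consistent(dirs: list[Path]) -> bool:
    """True if every node's snapshot directory holds the same, non-empty set of files.

    Meant to run after copying and before starting Keeper, since running
    nodes may write their own new snapshots.
    """
    digests = [dir_digest(d) for d in dirs]
    return bool(digests) and bool(digests[0]) and all(d == digests[0] for d in digests)
```

An empty snapshot directory on any node makes snapshots_consistent return False. That node is the one that could win the leader election without the migrated data.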

Appendix:

3. Since most of our development runs on Docker, I also tried bringing up the cluster with Docker.

docker-compose file for the cluster:

version: '3.9'
networks:
  zk-net:
    driver: bridge
services:
  # ch-manager:
  #   image: dev.docker.di.ibr.net.cn/di/zeus/ch-manager:0.0.9-eabad757-SNAPSHOT
  #   environment:
  #     TZ: Asia/Shanghai
  #   ports:
  #     - 18171:18171
  #   restart: always
  #   volumes:
  #     - ./manager-vol/chmanagerlog:/data/br/jar/ch-manager/log
  #     - ./manager-vol/ch-manager.env:/data/br/jar/ch-manager/ch-manager.env
  #     #- ./ch-manager.jar:/data/br/jar/ch-manager/ch-manager.jar
  #
  zk1:
    image: clickhouse/clickhouse-keeper:22.10-alpine
    restart: always
    container_name: zk1
    hostname: zk1
    ports:
      - "2181:9181"
    volumes:
      - ./keeper01/config.xml:/etc/clickhouse-keeper/keeper_config.xml
      - ./keeper01/log:/var/log/clickhouse-keeper/
    networks:
      - zk-net
  zk2:
    image: clickhouse/clickhouse-keeper:22.10-alpine
    restart: always
    container_name: zk2
    hostname: zk2
    ports:
      - "2182:9181"
    volumes:
      - ./keeper02/config.xml:/etc/clickhouse-keeper/keeper_config.xml
      - ./keeper02/log:/var/log/clickhouse-keeper/
    networks:
      - zk-net
  zk3:
    image: clickhouse/clickhouse-keeper:22.10-alpine
    restart: always
    container_name: zk3
    hostname: zk3
    ports:
      - "2183:9181"
    volumes:
      - ./keeper03/config.xml:/etc/clickhouse-keeper/keeper_config.xml
      - ./keeper03/log:/var/log/clickhouse-keeper/
    networks:
      - zk-net
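When service blocks are copy-pasted, it is easy to leave behind a duplicated container_name, hostname, published host port, or host log directory. Below is a minimal sketch that flags such collisions. PyYAML is not in the standard library, so the sketch assumes the services have already been loaded into plain dicts (for example with yaml.safe_load, if PyYAML is installed). The function name is illustrative. Some loaders, such as PyYAML's, keep only the last of two identical service keys, so a duplicated key like two zk2 entries cannot be seen directly. It only surfaces through the other shared values.

```python
from collections import defaultdict

def compose_collisions(services: dict[str, dict]) -> list[str]:
    """Report values shared by several services that should be unique per node."""
    seen = defaultdict(list)  # (kind, value) -> names of the services using it
    for name, svc in services.items():
        for key in ("container_name", "hostname"):
            if key in svc:
                seen[(key, svc[key])].append(name)
        for mapping in svc.get("ports", []):
            parts = str(mapping).split(":")
            if len(parts) >= 2:  # "HOST:CONTAINER" or "IP:HOST:CONTAINER"
                seen[("host port", ":".join(parts[:-1]))].append(name)
        for volume in svc.get("volumes", []):
            if isinstance(volume, str) and ":" in volume:
                seen[("host path", volume.split(":", 1)[0])].append(name)
    return [f"{kind} {value!r} used by {owners}"
            for (kind, value), owners in seen.items() if len(owners) > 1]
```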

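Configuration in ClickHouse's config.xml. Note that the names zk1 to zk3 resolve only inside the zk-net network, where each Keeper listens on its container port (9181, as the "2181:9181"-style mappings imply, assuming the mounted Keeper config sets <tcp_port>9181</tcp_port>). By contrast, 2181 to 2183 are the ports published on the Docker host. A ClickHouse container attached to zk-net should therefore use port 9181 for all three nodes, while a ClickHouse server running on the host should use localhost:2181, localhost:2182 and localhost:2183: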

<zookeeper>
    <node>
        <host>zk1</host>
        <port>2181</port>
    </node>
    <node>
        <host>zk2</host>
        <port>2182</port>
    </node>
    <node>
        <host>zk3</host>
        <port>2183</port>
    </node>
</zookeeper>
