1. Install the virtual machines

1.1 Hardware allocation

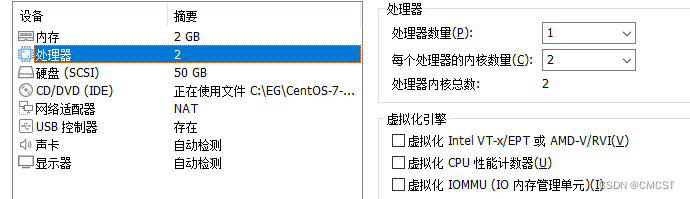

- CPU: 1 socket, 2 cores

- Memory: 2048 MB

- Disk: 50 GB

- Other settings: defaults

- During OS installation, select the basic server environment

1.2 Cluster plan

| | Master | node01 | node02 |
|---|---|---|---|
| IP | 192.168.100.100 | 192.168.100.101 | 192.168.100.102 |
| OS | CentOS 7.9 | CentOS 7.9 | CentOS 7.9 |

[root@Master ~]# cat /etc/redhat-release

CentOS Linux release 7.9.2009 (Core)

1.3 Configure the network interface

1.3.1 Master

| Operation | Key | Value | Line |
|---|---|---|---|
| Modify | BOOTPROTO | none | 4 |
| Modify | ONBOOT | yes | 15 |
| Add | IPADDR | 192.168.100.100 | |
| Add | PREFIX | 24 | |
| Add | GATEWAY | 192.168.100.2 | |
| Add | DNS1 | 223.5.5.5 | |
| Add | IPV6_PRIVACY | no | |

[root@Master ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=eb4335b9-98b7-4c63-ba0d-a25fa63aa5f5

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.100.100

PREFIX=24

GATEWAY=192.168.100.2

DNS1=223.5.5.5

IPV6_PRIVACY=no

1.3.2 node 01

| Operation | Key | Value | Line |
|---|---|---|---|
| Modify | BOOTPROTO | none | 4 |
| Modify | ONBOOT | yes | 15 |
| Add | IPADDR | 192.168.100.101 | |
| Add | PREFIX | 24 | |
| Add | GATEWAY | 192.168.100.2 | |
| Add | DNS1 | 223.5.5.5 | |
| Add | IPV6_PRIVACY | no | |

[root@node01 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=eb4335b9-98b7-4c63-ba0d-a25fa63aa5f5

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.100.101

PREFIX=24

GATEWAY=192.168.100.2

DNS1=223.5.5.5

IPV6_PRIVACY=no

1.3.3 node 02

| Operation | Key | Value | Line |
|---|---|---|---|
| Modify | BOOTPROTO | none | 4 |
| Modify | ONBOOT | yes | 15 |
| Add | IPADDR | 192.168.100.102 | |
| Add | PREFIX | 24 | |
| Add | GATEWAY | 192.168.100.2 | |
| Add | DNS1 | 223.5.5.5 | |
| Add | IPV6_PRIVACY | no | |

[root@node02 ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=eb4335b9-98b7-4c63-ba0d-a25fa63aa5f5

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.100.102

PREFIX=24

GATEWAY=192.168.100.2

DNS1=223.5.5.5

IPV6_PRIVACY=no
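
The three ifcfg-ens33 files above differ only in the IPADDR value. As a rough sketch, the added lines can be generated per node; `static_ifcfg_lines` is a hypothetical helper name, and the prefix, gateway, and DNS values are copied from the tables in this section.

```shell
#!/bin/bash
# Print the static-address settings to append to ifcfg-ens33 for one node.
# static_ifcfg_lines is a hypothetical helper; the values come from section 1.3.
static_ifcfg_lines() {
  local ip="$1"
  cat <<EOF
IPADDR=${ip}
PREFIX=24
GATEWAY=192.168.100.2
DNS1=223.5.5.5
IPV6_PRIVACY=no
EOF
}

# Example: show the lines for node01.
static_ifcfg_lines 192.168.100.101
```

After the file is edited, `systemctl restart network` applies the change on CentOS 7, and `ip addr show ens33` can be used to confirm the address.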

1.4 Edit the hosts file

1.4.1 Master

[root@Master ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.100.100 Master

192.168.100.101 node01

192.168.100.102 node02

1.4.2 node 01

[root@node01 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.100.100 Master

192.168.100.101 node01

192.168.100.102 node02

1.4.3 node 02

[root@node02 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.100.100 Master

192.168.100.101 node01

192.168.100.102 node02
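
Since the same three entries go into /etc/hosts on every node, they can also be appended by a small script instead of edited by hand. The sketch below is one possible approach, not part of the original procedure; `add_cluster_hosts` is a hypothetical helper that skips entries already present, so it can be run more than once.

```shell
#!/bin/bash
# Append the cluster host entries to a hosts file, skipping lines already present.
# add_cluster_hosts is a hypothetical helper; pass /etc/hosts on a real node.
add_cluster_hosts() {
  local hosts_file="$1"
  local entry
  for entry in \
    "192.168.100.100 Master" \
    "192.168.100.101 node01" \
    "192.168.100.102 node02"; do
    # -x matches the whole line, -F treats the entry as a literal string.
    grep -qxF "$entry" "$hosts_file" || echo "$entry" >> "$hosts_file"
  done
}

# Demonstrate on a scratch file rather than the live /etc/hosts.
demo_hosts="$(mktemp)"
add_cluster_hosts "$demo_hosts"
add_cluster_hosts "$demo_hosts"   # the second run adds nothing
cat "$demo_hosts"
```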

2. Modify kernel settings

The commands in 2.1 to 2.7 must be run on Master, node01, and node02

- In Xshell, right-click -> Send Key Input To -> All Sessions
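
For readers without Xshell, a plain SSH loop is one alternative way to send the same command to every node. This is only a sketch: `run_on_all` and `DRY_RUN` are hypothetical names, and it assumes root SSH access and that the host names from section 1.4 resolve on the machine running it.

```shell
#!/bin/bash
# Run one command on every node over SSH instead of broadcasting keystrokes.
# run_on_all and DRY_RUN are hypothetical names; root SSH access is assumed.
NODES=(Master node01 node02)

run_on_all() {
  local node
  for node in "${NODES[@]}"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      # Only print what would be executed.
      echo "ssh root@${node} $*"
    else
      ssh "root@${node}" "$@"
    fi
  done
}

# Preview the commands without touching any node.
DRY_RUN=1 run_on_all systemctl start chronyd
```

Dropping `DRY_RUN=1` makes the same call execute the command on each node in turn.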

2.1 Time synchronization

# Start the chronyd service

[root@Master ~]# systemctl start chronyd

# Enable the chronyd service at boot

[root@Master ~]# systemctl enable chronyd

# Verify the time

[root@Master ~]# date

2.2 Disable iptables and firewalld

[root@Master ~]# systemctl stop firewalld

[root@Master ~]# systemctl disable firewalld

[root@Master ~]# systemctl stop iptables

[root@Master ~]# systemctl disable iptables

2.3 Disable SELinux

- Run getenforce to check whether SELinux is enabled

# Change only the SELINUX value to disabled

[root@Master ~]# vim /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

# SELINUX=enforcing

SELINUX=disabled

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted
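
The same one-line change can be made non-interactively, which is convenient when repeating it on three nodes. The sketch below assumes GNU sed (as shipped with CentOS); `disable_selinux_in` is a hypothetical helper name.

```shell
#!/bin/bash
# Set SELINUX=disabled in an SELinux config file without opening an editor.
# disable_selinux_in is a hypothetical helper; pass /etc/selinux/config on a node.
disable_selinux_in() {
  local cfg="$1"
  # Only the uncommented SELINUX= line is rewritten; SELINUXTYPE= is untouched.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}

# Demonstrate on a scratch file.
demo_cfg="$(mktemp)"
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo_cfg"
disable_selinux_in "$demo_cfg"
cat "$demo_cfg"
```

The file change takes effect at the next boot; `setenforce 0` switches the running system to permissive mode in the meantime.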

2.4 Disable swap

# Comment out the last line (the swap entry)

[root@Master ~]# vim /etc/fstab

#

# /etc/fstab

# Created by anaconda on Sun Jun 23 11:17:14 2024

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/centos-root / xfs defaults 0 0

UUID=83ed761a-3ce3-46d3-889c-e4078b53b0c1 /boot xfs defaults 0 0

# /dev/mapper/centos-swap swap swap defaults 0 0

# Check whether swap is off. A fresh edit takes effect only after a reboot; do not reboot yet, section 2.7 reboots the node

[root@Master ~]# free -m
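
As a possible shortcut for the manual edit, the swap entry can be commented out with sed. This is a sketch assuming GNU sed; `comment_out_swap` is a hypothetical helper name, and the sample lines mirror the fstab shown above.

```shell
#!/bin/bash
# Comment out every active swap entry in an fstab-style file.
# comment_out_swap is a hypothetical helper; pass /etc/fstab on a real node.
comment_out_swap() {
  local fstab="$1"
  # Match uncommented lines that contain a whitespace-delimited "swap" field.
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/# /' "$fstab"
}

# Demonstrate on a scratch file.
demo_fstab="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo_fstab"
comment_out_swap "$demo_fstab"
cat "$demo_fstab"
```

`swapoff -a` turns swap off immediately, so `free -m` can show zero swap even before the reboot in 2.7.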

2.5 Modify Linux kernel parameters

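[root@Master ~]# vim /etc/sysctl.d/kubernetes.conf

# Enable bridge filtering and IP forwarding

# Add the following lines to /etc/sysctl.d/kubernetes.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

# Load the bridge filtering module first; the net.bridge.* keys do not exist until it is loaded

[root@Master ~]# modprobe br_netfilter

# Check that the bridge filtering module is loaded

[root@Master ~]# lsmod | grep br_netfilter

# Reload the configuration (plain "sysctl -p" reads only /etc/sysctl.conf, so name the file)

[root@Master ~]# sysctl -p /etc/sysctl.d/kubernetes.conf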
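
The settings in this section can also be written by a script. The sketch below additionally drops a file into /etc/modules-load.d so that br_netfilter is loaded again after a reboot; that extra file is an addition not found in the original steps, and `write_k8s_kernel_conf` with its root-directory argument is a hypothetical helper.

```shell
#!/bin/bash
# Write the kernel settings from this section, plus a modules-load.d entry so
# br_netfilter is loaded again after a reboot (an addition to the original steps).
# write_k8s_kernel_conf is a hypothetical helper; pass / on a real node.
write_k8s_kernel_conf() {
  local root="${1%/}"
  mkdir -p "$root/etc/sysctl.d" "$root/etc/modules-load.d"
  cat > "$root/etc/sysctl.d/kubernetes.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
  echo br_netfilter > "$root/etc/modules-load.d/br_netfilter.conf"
}

# Demonstrate against a scratch directory.
demo_root="$(mktemp -d)"
write_k8s_kernel_conf "$demo_root"
cat "$demo_root/etc/sysctl.d/kubernetes.conf"
```

On a node, running `modprobe br_netfilter` followed by `sysctl --system` loads every file under /etc/sysctl.d, including this one.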

2.6 Configure ipvs

A yum repository may need to be configured first

[root@Master ~]# mkdir -p /etc/yum.repos.d/backup/

# Back up the existing yum repo files

[root@Master ~]# mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/backup/

[root@Master ~]# wget -O /etc/yum.repos.d/CentOs-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

[root@Master ~]# yum clean all

[root@Master ~]# yum makecache

In Kubernetes, a Service can use one of two proxy modes:

- iptables-based

- ipvs-based

I use ipvs, so the ipvs modules are loaded manually below

[root@Master ~]# yum install ipset ipvsadm -y

[root@Master ~]# vim /etc/sysconfig/modules/ipvs.modulesmodprobe

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

[root@Master ~]# chmod +x /etc/sysconfig/modules/ipvs.modulesmodprobe

[root@Master ~]# /bin/bash /etc/sysconfig/modules/ipvs.modulesmodprobe

[root@Master ~]# lsmod | grep -e ip_vs -e nf_conntrack_ipv4

2.7 Reboot

[root@Master ~]# reboot

Note: all commands in 2.1 to 2.7 must be run on Master, node01, and node02

3. Install Docker

The commands in 3.1 to 3.5 must be run on Master, node01, and node02

3.1 Switch the package mirror

[root@Master ~]# wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

3.2 List the Docker versions available in the mirror

[root@Master ~]# yum list docker-ce --showduplicates

3.3 Install docker-ce

- Without the --setopt=obsoletes=0 option, yum automatically installs a newer version

[root@Master ~]# yum install --setopt=obsoletes=0 docker-ce-18.06.3.ce-3.el7 -y

3.4 Add the configuration file

- Docker uses cgroupfs as its default Cgroup Driver

- Kubernetes recommends systemd instead of cgroupfs

[root@Master ~]# mkdir -p /etc/docker

[root@Master ~]# vim /etc/docker/daemon.json

{

"exec-opts":["native.cgroupdriver=systemd"],

"registry-mirrors":["https://kn0t2bca.mirror.aliyuncs.com"]

}

3.5 Start Docker

[root@Master ~]# systemctl restart docker

[root@Master ~]# systemctl enable docker

[root@Master ~]# docker version

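Note: all commands in 3.1 to 3.5 must be run on Master, node01, and node02

4. Install the Kubernetes components

The commands in 4.1 to 4.4 must be run on Master, node01, and node02

4.1 Switch the Kubernetes package source to the Aliyun mirror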

[root@Master ~]# vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

4.2 Install kubeadm, kubelet, and kubectl

[root@Master ~]# yum install --setopt=obsoletes=0 kubeadm-1.17.4-0 kubelet-1.17.4-0 kubectl-1.17.4-0 -y

4.3 Configure the kubelet cgroup driver

# Configure the kubelet cgroup driver

[root@Master ~]# vim /etc/sysconfig/kubelet

KUBELET_CGROUP_ARGS="--cgroup-driver=systemd"

KUBE_PROXY_MODE="ipvs"

4.4 Enable kubelet at boot

[root@Master ~]# systemctl enable kubelet

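Note: all commands in 4.1 to 4.4 must be run on Master, node01, and node02

5. Prepare the cluster images

The commands in 5.1 to 5.2 must be run on Master, node01, and node02

5.1 List the images the cluster requires

[root@Master ~]# kubeadm config images list

5.2 Write a script to pull the images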

[root@Master ~]# vim k8s.repo

#!/bin/bash

images=(

kube-apiserver:v1.17.4

kube-controller-manager:v1.17.4

kube-scheduler:v1.17.4

kube-proxy:v1.17.4

pause:3.1

etcd:3.4.3-0

coredns:1.6.5

)

for imageName in "${images[@]}" ; do

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

done

[root@Master ~]# chmod +x k8s.repo

[root@Master ~]# ./k8s.repo

[root@Master ~]# docker images
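
The script pulls each image from the Aliyun mirror and retags it under k8s.gcr.io, the name kubeadm expects. To make that renaming easier to see, the sketch below prints the three commands for a single image instead of running them; `mirror_commands` is a hypothetical helper, and the registry names are the ones used in the script above.

```shell
#!/bin/bash
# Print the pull/tag/remove commands for one image instead of running them.
# mirror_commands is a hypothetical helper; registry names come from the script above.
MIRROR=registry.cn-hangzhou.aliyuncs.com/google_containers

mirror_commands() {
  local image="$1"
  echo "docker pull ${MIRROR}/${image}"
  echo "docker tag ${MIRROR}/${image} k8s.gcr.io/${image}"
  echo "docker rmi ${MIRROR}/${image}"
}

mirror_commands pause:3.1
```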

Note: all commands in 5.1 to 5.2 must be run on Master, node01, and node02

6. Initialize the cluster

Run on the Master node only

[root@Master ~]# kubeadm init --kubernetes-version=v1.17.4 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --apiserver-advertise-address=192.168.100.100

[root@Master ~]# mkdir -p $HOME/.kube

[root@Master ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@Master ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

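- On node01 and node02, run the kubeadm join command printed at the bottom of the kubeadm init output (the screenshot is not reproduced here)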

kubeadm join 192.168.100.100:[port] --token [...] --discovery-token-ca-cert-hash sha256:[...]
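
If the kubeadm init output is no longer on screen, or the token has expired (bootstrap tokens last 24 hours by default), the Master can print a fresh join command. A minimal sketch, where `print_join_command` is a hypothetical wrapper around the real `kubeadm token create --print-join-command` call:

```shell
#!/bin/bash
# Ask the control plane for a fresh, complete join command.
# print_join_command is a hypothetical wrapper; run it on Master after kubeadm init.
print_join_command() {
  kubeadm token create --print-join-command
}

# Only call kubeadm when it is installed, so the snippet is safe to paste anywhere.
if command -v kubeadm >/dev/null 2>&1; then
  print_join_command
else
  echo "kubeadm not found; run this on the Master node" >&2
fi
```

The printed line is then pasted on node01 and node02 as-is.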

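- At this point, checking the nodes on Master shows all of them as NotReady, because no network plugin is installed yet

7. Install the network plugin

Run on the Master node only

7.1 Get the flannel configuration file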

# Download the file; if the URL is not reachable from your network, the full source is reproduced below

[root@Master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Official source file

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
  - configMap
  - secret
  - emptyDir
  - hostPath
  allowedHostPaths:
  - pathPrefix: "/etc/cni/net.d"
  - pathPrefix: "/etc/kube-flannel"
  - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN', 'NET_RAW']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups: ['extensions']
  resources: ['podsecuritypolicies']
  verbs: ['use']
  resourceNames: ['psp.flannel.unprivileged']
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: rancher/mirrored-flannelcni-flannel-cni-plugin:v1.0.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: quay.io/coreos/flannel:v0.15.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.15.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg

7.2 Start flannel

[root@Master ~]# kubectl apply -f kube-flannel.yml

7.3 Wait a moment, then check the cluster node status

[root@Master ~]# kubectl get nodes
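
Rather than re-reading the table by eye, the STATUS column can be counted. The sketch below is one way to do that; `count_not_ready` is a hypothetical helper that reads `kubectl get nodes --no-headers` output on stdin, and the sample rows are illustrative, not captured from a real cluster.

```shell
#!/bin/bash
# Count nodes whose STATUS column is not exactly "Ready".
# Reads `kubectl get nodes --no-headers` output on stdin; the name is hypothetical.
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Sample input shaped like kubectl output (illustrative values only).
printf '%s\n' \
  'master   Ready      master   10m   v1.17.4' \
  'node01   NotReady   <none>   5m    v1.17.4' | count_not_ready
```

On the Master, `kubectl get nodes --no-headers | count_not_ready` should eventually print 0 once flannel is running on every node.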
