
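Deploying a Backend Service on k8s

1. Write the Spring Boot backend API with create, read, update, and delete (CRUD) operations plus conditional queries. Once the tests pass, package the project.

Develop the code in IDEA, then test each endpoint by entering its URL directly in the browser address bar. Once every module works, push the backend code to Gitee.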

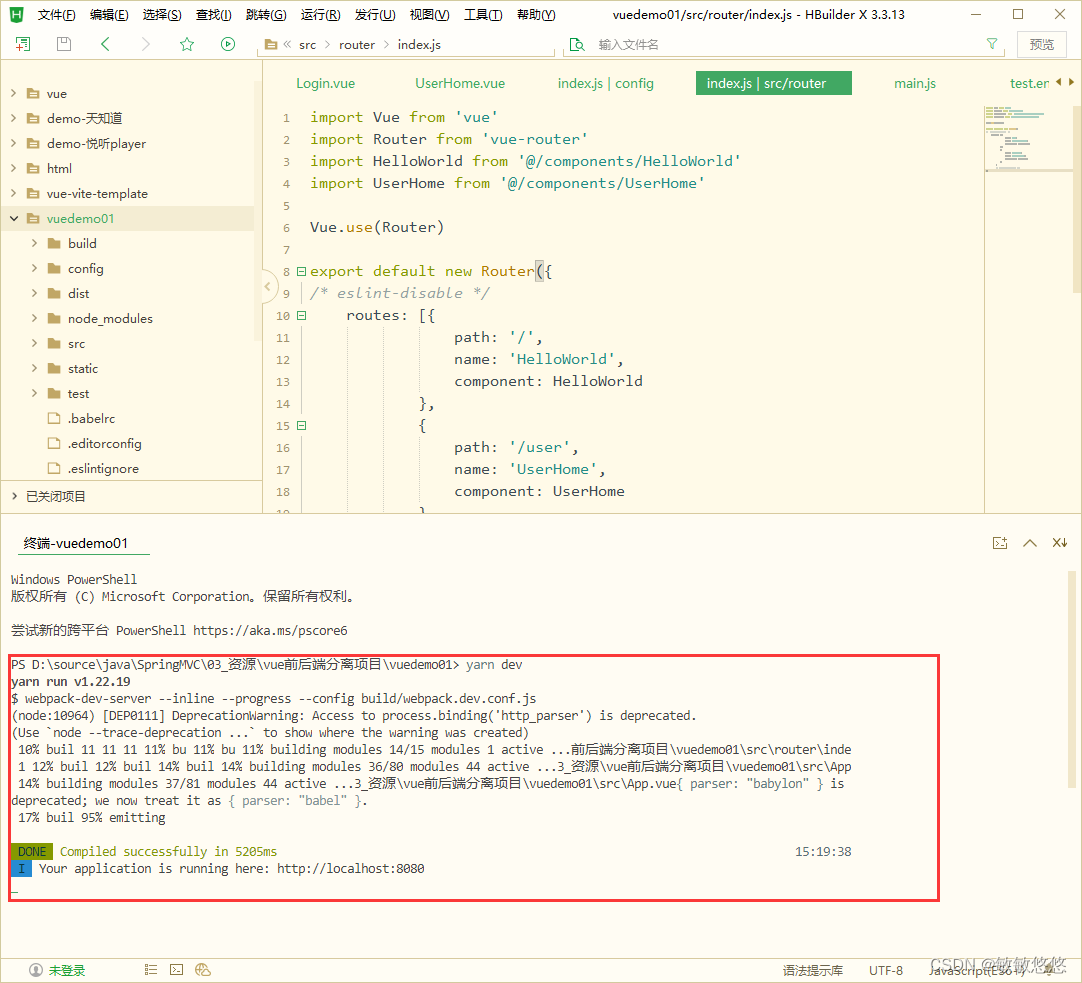

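2. Adapt an element-ui based front end. It displays the modules, supports conditional queries, allows editing and deleting a single user's information, and is configured with the backend path.

Write the front-end code in HBuilder and test its interaction with the backend in a browser. Once it works, push the code to Gitee.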

3. Environment preparation

3.1 Prepare three virtual machines

Configure a static IP on each:

master: 1 core, 2 GB (192.168.58.54)

node-1: 2 cores, 4 GB (192.168.58.55)

node-2: 2 cores, 4 GB (192.168.58.56)

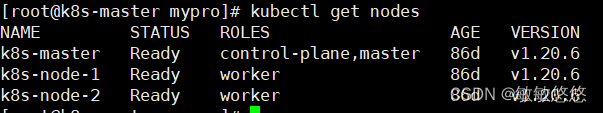

3.2 Configure the virtual machines

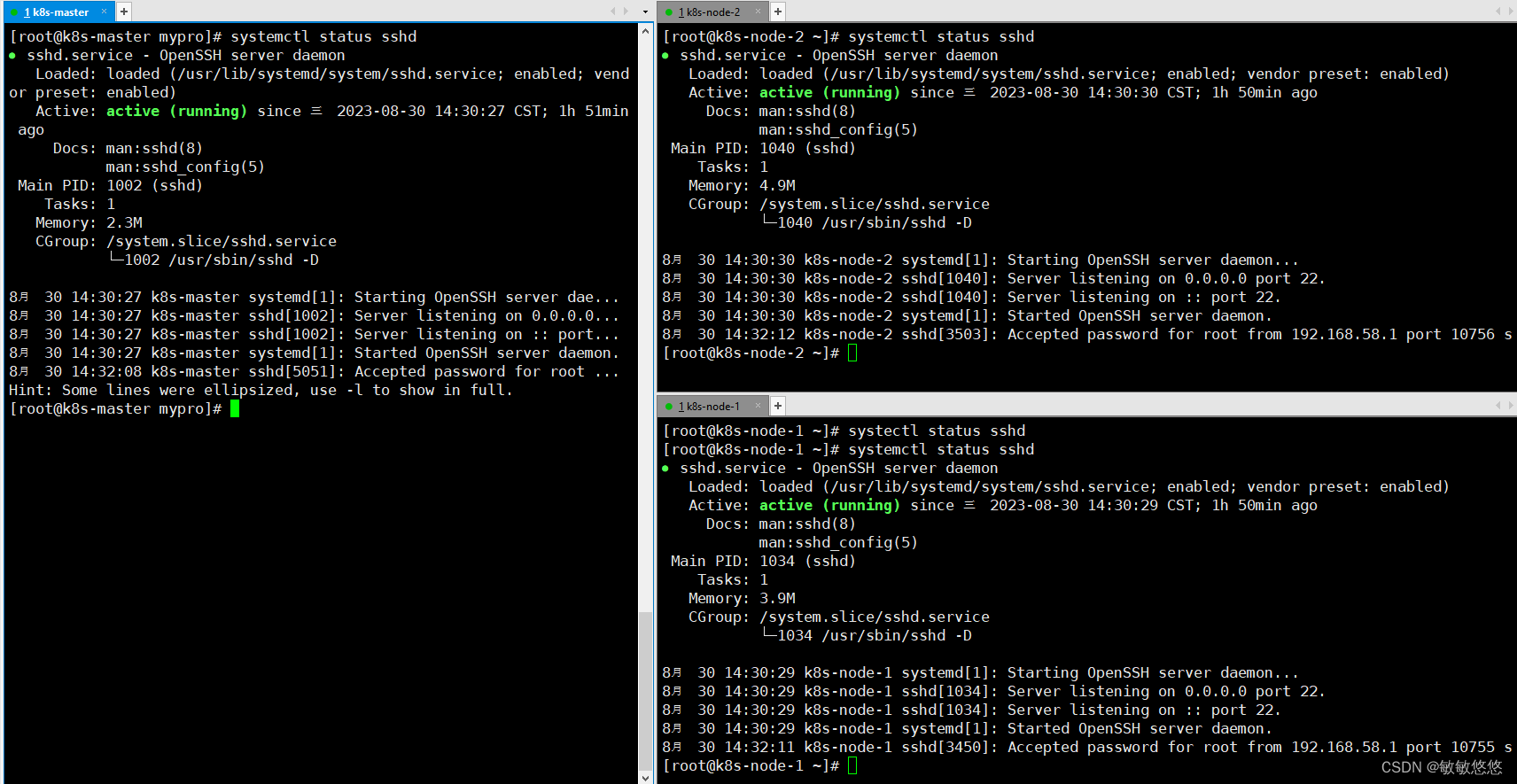

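Install Docker and Kubernetes on every virtual machine and build a cluster with a single master and two worker nodes. Configure passwordless SSH between the machines so that the files needed for the cluster setup can be copied easily later.

On all three machines:

Configure SSH: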

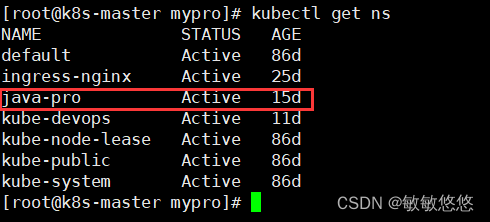

Create a namespace (java-pro); the whole project lives in this namespace.
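The namespace can also be declared as a manifest, which keeps it under version control with the rest of the project. This is a minimal sketch; only the name java-pro comes from the text, and the file name used below is an assumption.

```yaml
# namespace.yaml (hypothetical file name)
apiVersion: v1
kind: Namespace
metadata:
  name: java-pro   # every later manifest in this article sets namespace: java-pro
```

Applying it with kubectl apply -f namespace.yaml before the other manifests avoids "namespace not found" errors.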

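3.3 Build the Spring Boot image

Package the source code with Maven, upload the jar to the server, and then write the Dockerfile:

FROM openjdk:17.0.1
COPY *.jar /app.jar
# CMD supplies default arguments that are appended to ENTRYPOINT
CMD ["--server.port=8081"]
EXPOSE 8081
ENTRYPOINT ["java","-jar","/app.jar"]

Build the image:

docker build -t spring-boot-curd .
# Start a container from the image to test it
docker run -d --name test -p 8081:8081 spring-boot-curd
# Follow the logs
docker logs -f test
# In a browser, open <server IP>:<port>/<test path>

Push the image:

# Log in from the server first
docker login # requires an account on hub.docker.com
# Commit the test container as a new image
docker commit -m="springboot" b432d550117e minminyouyou/spring-boot
# Push to the registry
docker push minminyouyou/spring-boot:latest

Check the result on hub.docker.com.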

4. Deploy the Spring Boot project and publish the service

apiVersion: apps/v1
kind: Deployment
metadata:
  name: java-deployment
  namespace: java-pro
  labels:
    app: spring-boot
spec:
  replicas: 3
  selector:
    matchLabels:
      app: spring-boot
  template:
    metadata:
      labels:
        app: spring-boot
    spec:
      containers:
      - name: spring-boot
        image: minminyouyou/spring-boot
        imagePullPolicy: IfNotPresent
---
apiVersion: v1
kind: Service
metadata:
  name: spring-boot-svc
  namespace: java-pro
spec:
  ports:
  - port: 8181
    nodePort: 32201
    targetPort: 8081
  selector:
    app: spring-boot
  type: NodePort

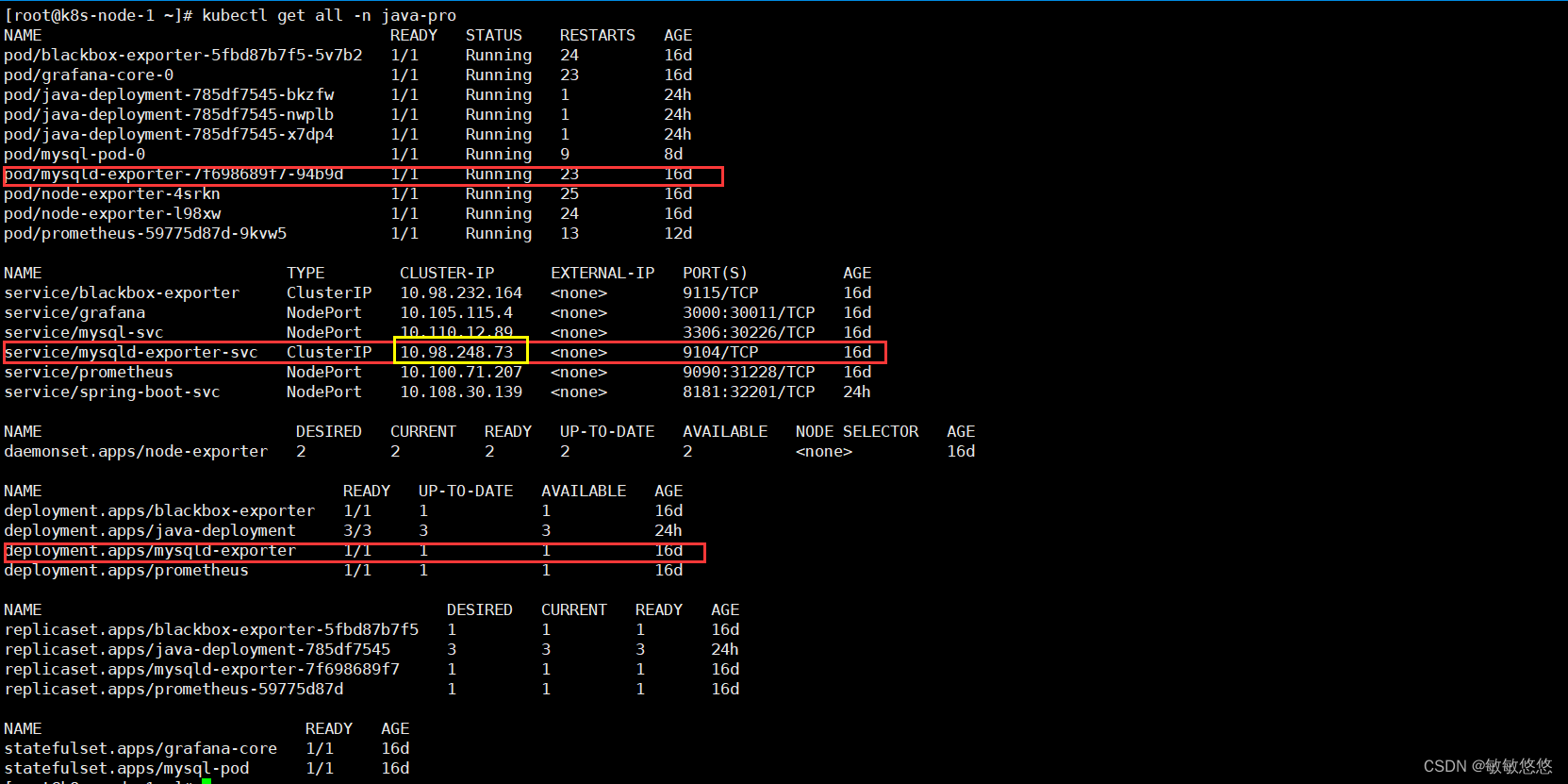

View the pods and the service:

kubectl get all -n java-pro
NAME                                  READY   STATUS    RESTARTS   AGE
pod/java-deployment-785df7545-bkzfw   1/1     Running   0          118m
pod/java-deployment-785df7545-nwplb   1/1     Running   0          118m
pod/java-deployment-785df7545-x7dp4   1/1     Running   0          118m

NAME                      TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
service/spring-boot-svc   NodePort   10.108.30.139   <none>        8181:32201/TCP   118m

Test access from a browser.

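5. Create a StorageClass for dynamic NFS provisioning

k8s provides a mechanism for creating PVs automatically, built on StorageClass: you only declare a PVC, and the provisioner behind the StorageClass creates a matching PV on demand.

5.1 Set up the NFS service

Resources are limited here, so node-2 doubles as the NFS server. The NFS installation steps are omitted.

# Configure the NFS exports
echo "/mypro/mysql/data *(rw,sync,no_subtree_check,no_root_squash)" > /etc/exports
echo "/mypro/prometheus/grafana *(rw,no_root_squash)" >> /etc/exports
# Apply the export configuration
exportfs -r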

5.2 RBAC configuration

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # namespace: java-pro
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
  # namespace: java-pro
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
  # namespace: java-pro
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # namespace: java-pro
rules:
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # namespace: java-pro
subjects:
- kind: ServiceAccount
  name: nfs-client-provisioner
  # ServiceAccount subjects need an explicit namespace; default matches the ServiceAccount above
  namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

5.3 Provisioner configuration

The provisioner below serves the NFS path used for Grafana:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  # namespace: java-pro
  labels:
    app: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
      - name: nfs-client-provisioner
        image: registry.cn-beijing.aliyuncs.com/mydlq/nfs-subdir-external-provisioner:v4.0.0
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: nfs-client-root
          mountPath: /persistentvolumes
        env:
        - name: PROVISIONER_NAME
          value: fuseim.pri/ifs
        - name: NFS_SERVER
          value: 192.168.58.56
        - name: NFS_PATH
          value: /mypro/prometheus/grafana
      volumes:
      - name: nfs-client-root
        nfs:
          server: 192.168.58.56
          path: /mypro/prometheus/grafana

5.4 StorageClass configuration

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  # namespace: java-pro
provisioner: fuseim.pri/ifs # name of the external provisioner; must match PROVISIONER_NAME
parameters:
  archiveOnDelete: "false" # whether to archive: "false" deletes the data under the old path, "true" renames the path to keep it
reclaimPolicy: Delete # reclaim policy; the default is Delete, and Retain is also possible
volumeBindingMode: Immediate # Immediate (the default) binds as soon as the PVC is created; the alternative is WaitForFirstConsumer

View the pod and the StorageClass:

kubectl get pod
NAME                                      READY   STATUS    RESTARTS   AGE
nfs-client-provisioner-5d854b988f-kl6gz   1/1     Running   76         19d

kubectl get sc
NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
managed-nfs-storage   fuseim.pri/ifs   Retain          Immediate           false                  19d
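To see dynamic provisioning at work, a standalone PVC that names the StorageClass is enough. The sketch below is illustrative only: the claim name demo-claim and the 100Mi size are hypothetical, while managed-nfs-storage and java-pro come from the manifests in this article.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim              # hypothetical name, used only for this test
  namespace: java-pro
spec:
  storageClassName: managed-nfs-storage   # the StorageClass defined in 5.4
  accessModes:
    - ReadWriteMany             # NFS-backed volumes can be shared by several pods
  resources:
    requests:
      storage: 100Mi            # arbitrary small size for the test
```

If the provisioner is healthy, kubectl get pvc -n java-pro should show the claim as Bound shortly after it is applied, and a new subdirectory should appear under the exported NFS path.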

6. Set up the MySQL service

6.1 Deploy the MySQL service

apiVersion: v1
kind: Secret
metadata:
  name: mysql-password
  namespace: java-pro
type: Opaque
data:
  # base64-encoded password (an encoding, not encryption)
  PASSWORD: Mjg4Mjg1NjQwdGFuZw==
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql-pod
  namespace: java-pro
spec:
  selector:
    matchLabels:
      app: mysql-pod
  serviceName: "mysql-svc"
  replicas: 1 # the default is 1
  template:
    metadata:
      labels:
        app: mysql-pod
    spec:
      containers:
      - name: mysql
        image: mysql:5.7
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "288285640tang"
        ports:
        - containerPort: 3306
          name: web
        volumeMounts:
        - name: data-volume
          mountPath: /var/lib/mysql
  volumeClaimTemplates:
  - metadata:
      name: data-volume
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: "local-storage"
      resources:
        requests:
          storage: 1Gi
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc
  namespace: java-pro
spec:
  ports:
  - port: 3306
    targetPort: 3306
    nodePort: 30226
  selector:
    app: mysql-pod
  type: NodePort
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-config
  namespace: java-pro
data:
  mysql.cnf: |
    [mysqld]
    character-set-server=utf8mb4
    collation-server=utf8mb4_general_ci
    init-connect='SET NAMES utf8mb4'
    [client]
    default-character-set=utf8mb4
    [mysql]
    default-character-set=utf8mb4
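The manifest above defines the mysql-password Secret and the mysql-config ConfigMap, but the StatefulSet sets the root password as a literal value and never mounts the ConfigMap. The fragment below is a sketch of how the pod template could consume both. It assumes the official mysql:5.7 image reads extra .cnf files from /etc/mysql/conf.d; note that mounting a volume there hides any files the image already ships in that directory.

```yaml
# Fragment of spec.template.spec in the mysql-pod StatefulSet (not a complete manifest)
containers:
  - name: mysql
    image: mysql:5.7
    env:
      - name: MYSQL_ROOT_PASSWORD
        valueFrom:
          secretKeyRef:
            name: mysql-password   # the Secret defined above
            key: PASSWORD          # Kubernetes base64-decodes the value before injecting it
    volumeMounts:
      - name: data-volume
        mountPath: /var/lib/mysql
      - name: mysql-config         # exposes mysql.cnf as /etc/mysql/conf.d/mysql.cnf
        mountPath: /etc/mysql/conf.d
volumes:
  - name: mysql-config
    configMap:
      name: mysql-config           # the ConfigMap defined above
```

With this change the plaintext password no longer appears in the StatefulSet, and the utf8mb4 settings from the ConfigMap take effect when the container starts.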

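View the pod and the service:

kubectl get pod -n java-pro
mysql-pod-0   1/1   Running   8   8d

kubectl get svc -n java-pro
mysql-svc   NodePort   10.110.12.89   <none>   3306:30226/TCP   15d

6.2 Test an external connection

Connect with Navicat to test.

Import the prepared database, then inspect the mounted directory.

The newly added database appears there, which confirms that the mount works.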

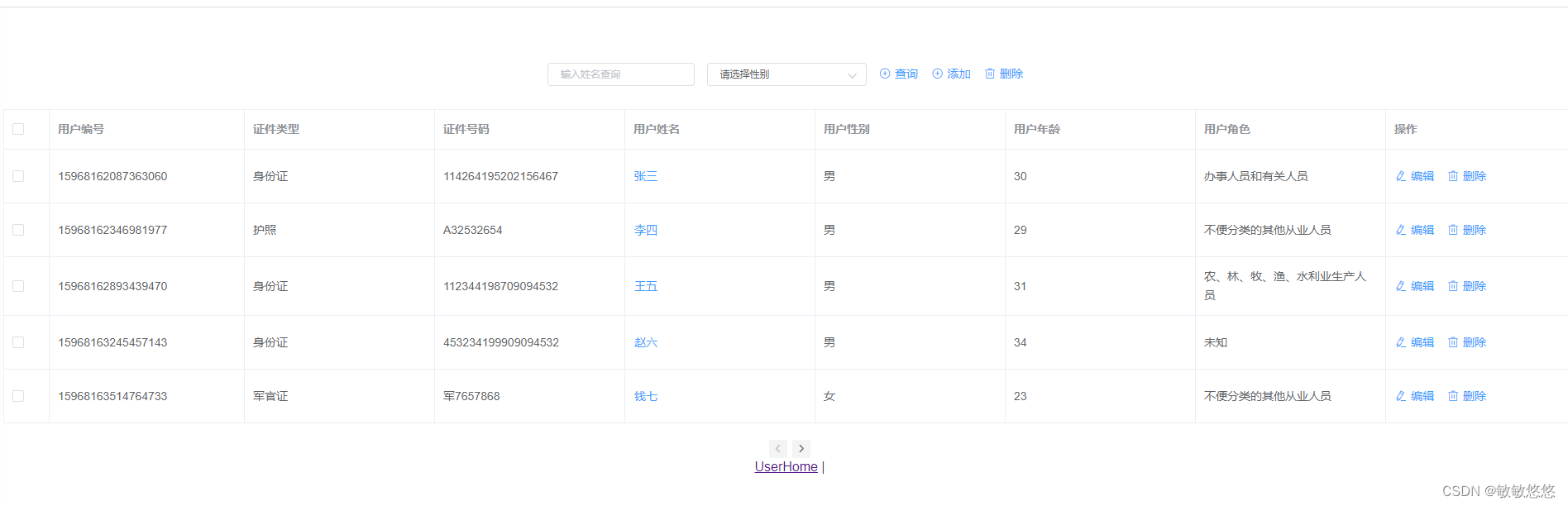

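Test the front end and backend together

Open HBuilder and start the service.

Access it from a browser.

Everything works, so the next steps are monitoring the whole cluster and collecting logs.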

7. Set up the Prometheus service

7.1 Basic Prometheus configuration

Create the ConfigMap:

apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: java-pro
data:
  prometheus.yaml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']

Deploy Prometheus:

apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: java-pro
  labels:
    name: prometheus
spec:
  ports:
  - name: prometheus
    protocol: TCP
    port: 9090
    nodePort: 31228
    targetPort: 9090
  selector:
    app: prometheus
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    name: prometheus
  name: prometheus
  namespace: java-pro
spec:
  replicas: 1
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      serviceAccountName: prometheus
      serviceAccount: prometheus
      containers:
      - name: prometheus
        image: prom/prometheus:v2.2.1
        imagePullPolicy: IfNotPresent
        command:
        - "/bin/prometheus"
        args:
        - "--config.file=/mypro/prometheus/prometheus.yaml"
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: "/mypro/prometheus"
          name: prometheus-config
      volumes:
      - name: prometheus-config
        configMap:
          name: prometheus-config

Configure permissions:

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
  namespace: java-pro # has no effect: a ClusterRole is cluster-scoped
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
- apiGroups:
  - extensions
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
- nonResourceURLs: ["/metrics"]
  verbs: ["get"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: java-pro
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
  namespace: java-pro # has no effect: a ClusterRoleBinding is cluster-scoped
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: java-pro

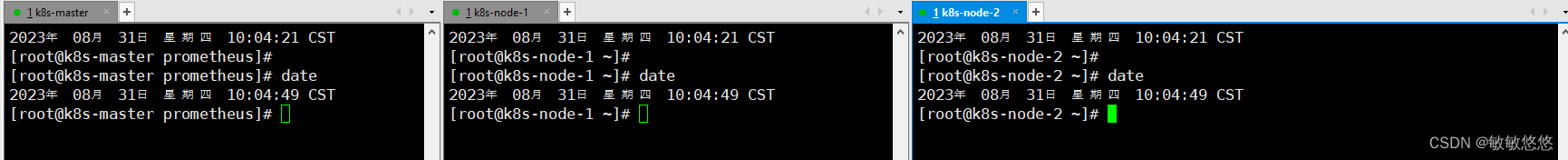

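System time synchronization: the clocks were already synchronized when the k8s cluster was installed, so the times are consistent here.

Check the deployment status.

The pod and the service are both up, so open a browser to check.

If the page in the screenshot above appears, the service is working.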

7.2 Monitor the k8s cluster

7.2.1 Collect per-node container resource usage from the kubelet by adding the following under scrape_configs in prometheus-config.yaml

- job_name: 'kubernetes-cadvisor'
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - target_label: __address__
    replacement: kubernetes.default.svc:443
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __metrics_path__
    replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

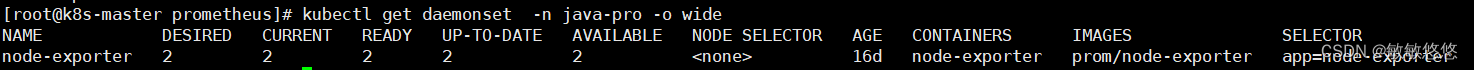

7.2.2 Monitor resource usage with an exporter

Create a DaemonSet that collects the resource usage of each node from that node:

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: java-pro
spec:
  selector:
    matchLabels:
      app: node-exporter
  template:
    metadata:
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/port: '9100'
        prometheus.io/path: '/metrics'
      labels:
        app: node-exporter
      name: node-exporter
    spec:
      containers:
      - image: prom/node-exporter
        imagePullPolicy: IfNotPresent
        name: node-exporter
        ports:
        - containerPort: 9100
          hostPort: 9100
          name: scrape
      hostNetwork: true
      hostPID: true

Check that the DaemonSet is running.

Edit the configuration file and add a scrape job for annotated pods:

- job_name: 'kubernetes-pods'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name

Monitoring the apiserver covers every request that enters the cluster through it. To add apiserver monitoring, append the following to the configuration file:

- job_name: 'kubernetes-apiservers'
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: default;kubernetes;https
  - target_label: __address__
    replacement: kubernetes.default.svc:443

7.2.3 Probe Ingresses and Services over the network

Create a blackbox-exporter to do the network probing:

apiVersion: v1
kind: Service
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: java-pro
spec:
  ports:
  - name: blackbox
    port: 9115
    protocol: TCP
  selector:
    app: blackbox-exporter
  type: ClusterIP
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: java-pro
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      containers:
      - image: prom/blackbox-exporter
        imagePullPolicy: IfNotPresent
        name: blackbox-exporter

Check that it is running.

To probe every annotated Service and Ingress, add the following to the configuration file. The blackbox-exporter address uses the java-pro namespace, because that is where the exporter was deployed above.

- job_name: 'kubernetes-services'
  metrics_path: /probe
  params:
    module: [http_2xx]
  kubernetes_sd_configs:
  - role: service
  relabel_configs:
  - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
    action: keep
    regex: true
  - source_labels: [__address__]
    target_label: __param_target
  - target_label: __address__
    replacement: blackbox-exporter.java-pro.svc.cluster.local:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_service_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_service_name]
    target_label: kubernetes_name
- job_name: 'kubernetes-ingresses'
  metrics_path: /probe
  params:
    module: [http_2xx]
  kubernetes_sd_configs:
  - role: ingress
  relabel_configs:
  - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
    regex: (.+);(.+);(.+)
    replacement: ${1}://${2}${3}
    target_label: __param_target
  - target_label: __address__
    replacement: blackbox-exporter.java-pro.svc.cluster.local:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_ingress_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_ingress_name]
    target_label: kubernetes_name
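The kubernetes-services job keeps only Services that carry the prometheus.io/probe annotation, so nothing is probed until a Service opts in. As a sketch, the spring-boot-svc Service from section 4 could be annotated as follows. Whether the http_2xx probe succeeds depends on the application answering its root path with a 2xx status, which the article does not state, so treat that as an assumption.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: spring-boot-svc
  namespace: java-pro
  annotations:
    prometheus.io/probe: "true"   # matched by the keep rule in the kubernetes-services job
spec:
  type: NodePort
  selector:
    app: spring-boot
  ports:
    - port: 8181
      targetPort: 8081
      nodePort: 32201
```

After re-applying the Service and reloading Prometheus, the Service should appear as a target of the kubernetes-services job.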

7.2.4 Monitor the database

Write the mysqld-exporter manifests:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysqld-exporter
  namespace: java-pro
  labels:
    app: mysqld-exporter
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysqld-exporter
  template:
    metadata:
      labels:
        app: mysqld-exporter
    spec:
      containers:
      - name: mysqld-exporter
        image: prom/mysqld-exporter
        imagePullPolicy: IfNotPresent
        env:
        # Address, user name, and password of the database that mysqld-exporter monitors
        - name: DATA_SOURCE_NAME
          value: root:288285640tang@(192.168.58.55:30226)/mydb
        ports:
        - containerPort: 9104
---
apiVersion: v1
kind: Service
metadata:
  name: mysqld-exporter-svc
  namespace: java-pro
  labels:
    app: mysqld-exporter
spec:
  type: ClusterIP
  selector:
    app: mysqld-exporter
  ports:
  - name: mysqld-exporter-api
    port: 9104
    protocol: TCP

Check that it is running. The CLUSTER-IP and port shown for the Service are needed in the configuration file in the next step.

Edit the configuration file:

- job_name: 'mysql-performance'
  scrape_interval: 1m
  static_configs:
  - targets: ['10.98.248.73:9104'] # IP and port of the mysqld-exporter Service
  params:
    collect[]:
    - global_status
    - perf_schema.tableiowaits
    - perf_schema.indexiowaits
    - perf_schema.tablelocks
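A ClusterIP changes whenever the Service is deleted and recreated, so the target can instead be written with the Service's in-cluster DNS name. This is a sketch that assumes the default cluster DNS is available to the Prometheus pod; the Service name and namespace are the ones from the manifest in this section.

```yaml
- job_name: 'mysql-performance'
  scrape_interval: 1m
  static_configs:
    - targets:
        # <service>.<namespace>.svc resolves to the Service's current ClusterIP
        - 'mysqld-exporter-svc.java-pro.svc:9104'
  params:
    collect[]:
      - global_status
      - perf_schema.tableiowaits
      - perf_schema.indexiowaits
      - perf_schema.tablelocks
```

With the DNS name in place, the scrape job keeps working even if the exporter Service is recreated with a different IP.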

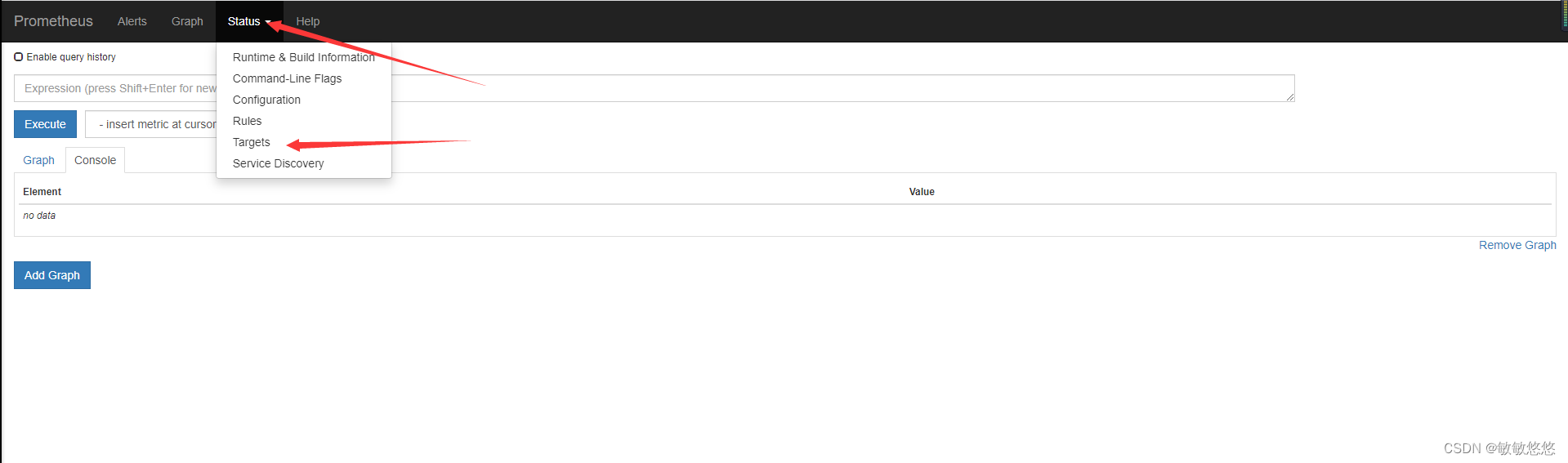

7.2.5 Test access from a browser

The Targets page lists everything that is being monitored.

7.3 Visualization with Grafana

7.3.1 Deploy Grafana

Write the StatefulSet:

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: grafana-core
  namespace: java-pro
  labels:
    app: grafana
    component: core
spec:
  serviceName: "grafana"
  selector:
    matchLabels:
      app: grafana
  replicas: 1
  template:
    metadata:
      labels:
        app: grafana
        component: core
    spec:
      containers:
      - image: grafana/grafana:6.5.3
        name: grafana-core
        imagePullPolicy: IfNotPresent
        env:
        # The following env variables set up basic auth with the default admin user and admin password.
        - name: GF_AUTH_BASIC_ENABLED
          value: "true"
        - name: GF_AUTH_ANONYMOUS_ENABLED
          value: "false"
        readinessProbe:
          httpGet:
            path: /login
            port: 3000
        volumeMounts:
        - name: grafana-persistent-storage
          # The grafana/grafana image keeps its data under /var/lib/grafana by default;
          # this manifest mounts the volume at a different path.
          mountPath: /mypro/prometheus/grafana
          subPath: grafana
  volumeClaimTemplates:
  - metadata:
      name: grafana-persistent-storage
    spec:
      storageClassName: managed-nfs-storage
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: "1G"

Write the Service:

apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: java-pro
  labels:
    app: grafana
    component: core
spec:
  type: NodePort
  ports:
  - port: 3000
    nodePort: 30011
  selector:
    app: grafana
    component: core

Check that it is running.

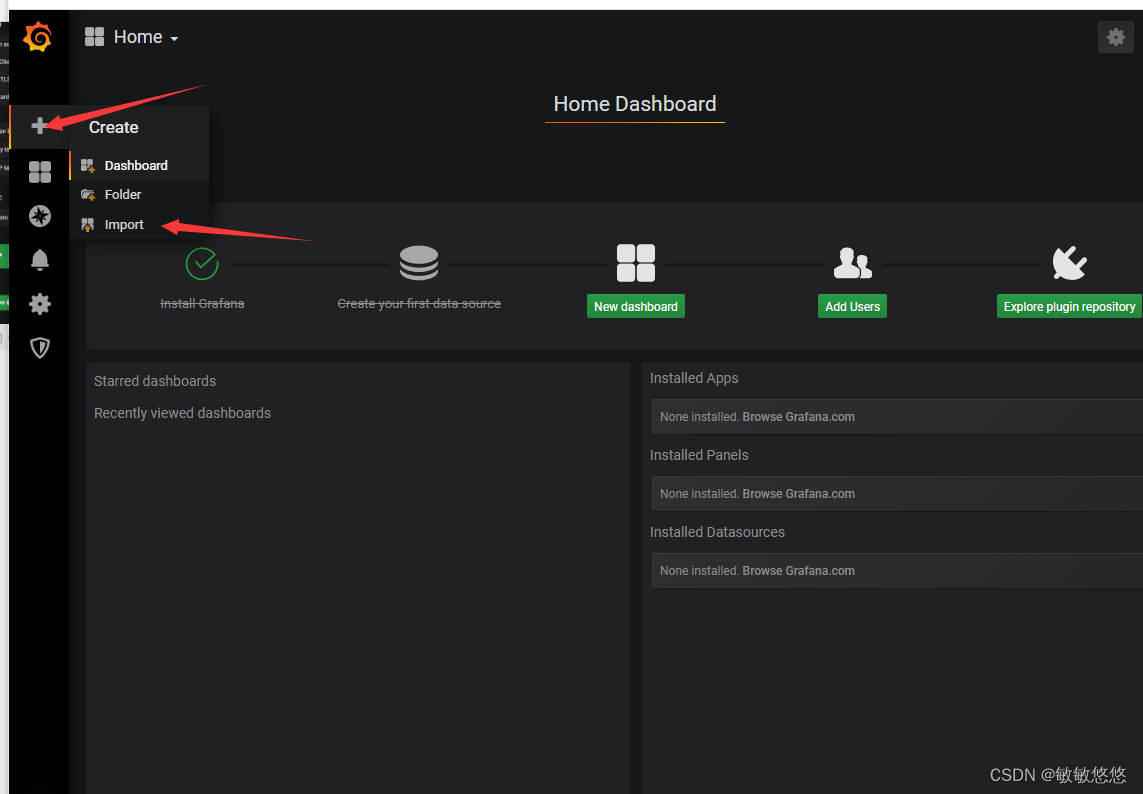

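7.3.2 Configure the Grafana dashboards

Open the Grafana address in a browser to reach the login page, then enter the user name and password.

Once logged in, choose Prometheus under Add data source.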

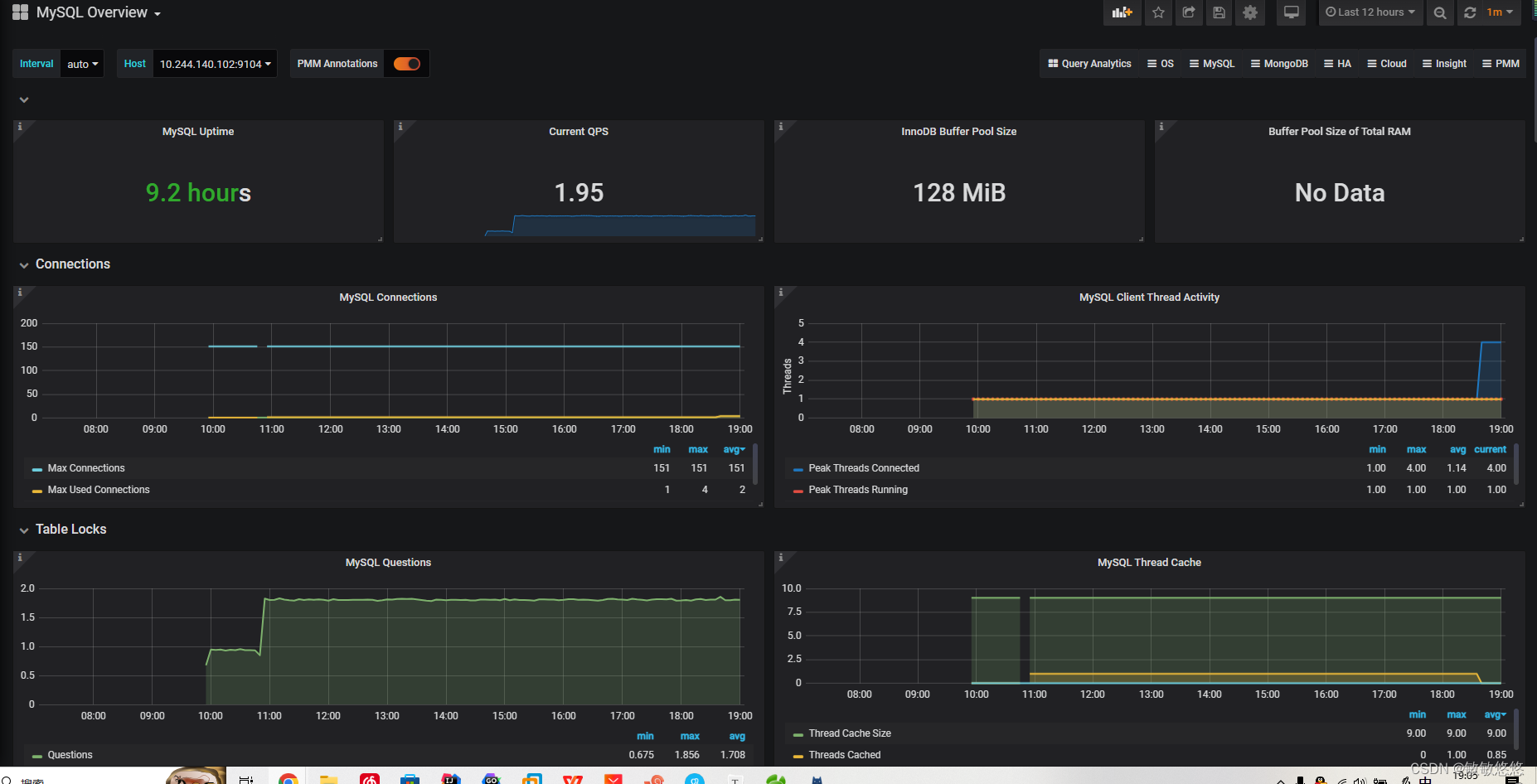
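As an alternative to adding the data source by hand, Grafana can load it from a provisioning file at startup. The sketch below assumes the file is mounted into the container under /etc/grafana/provisioning/datasources/ (for example from a ConfigMap, which this article does not define) and that Prometheus is reachable through the prometheus Service in java-pro on port 9090, as deployed in section 7.1.

```yaml
# datasource.yaml (hypothetical file name)
apiVersion: 1
datasources:
  - name: Prometheus            # display name in Grafana; any name works
    type: prometheus
    access: proxy               # Grafana's backend issues the queries, not the browser
    url: http://prometheus.java-pro.svc:9090
    isDefault: true
```

The data source then exists as soon as Grafana starts, which helps when the pod is recreated without persistent data.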

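After the data source is created, import two dashboard templates: one for monitoring the whole k8s cluster (8588) and one for monitoring the database (7362).

Database:

k8s cluster: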

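8. ELK log management

8.1 Deploy the Elasticsearch, Logstash, Filebeat, and Kibana services

These four manifests are long, so a download link for the configuration files is given instead.

YAML file download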

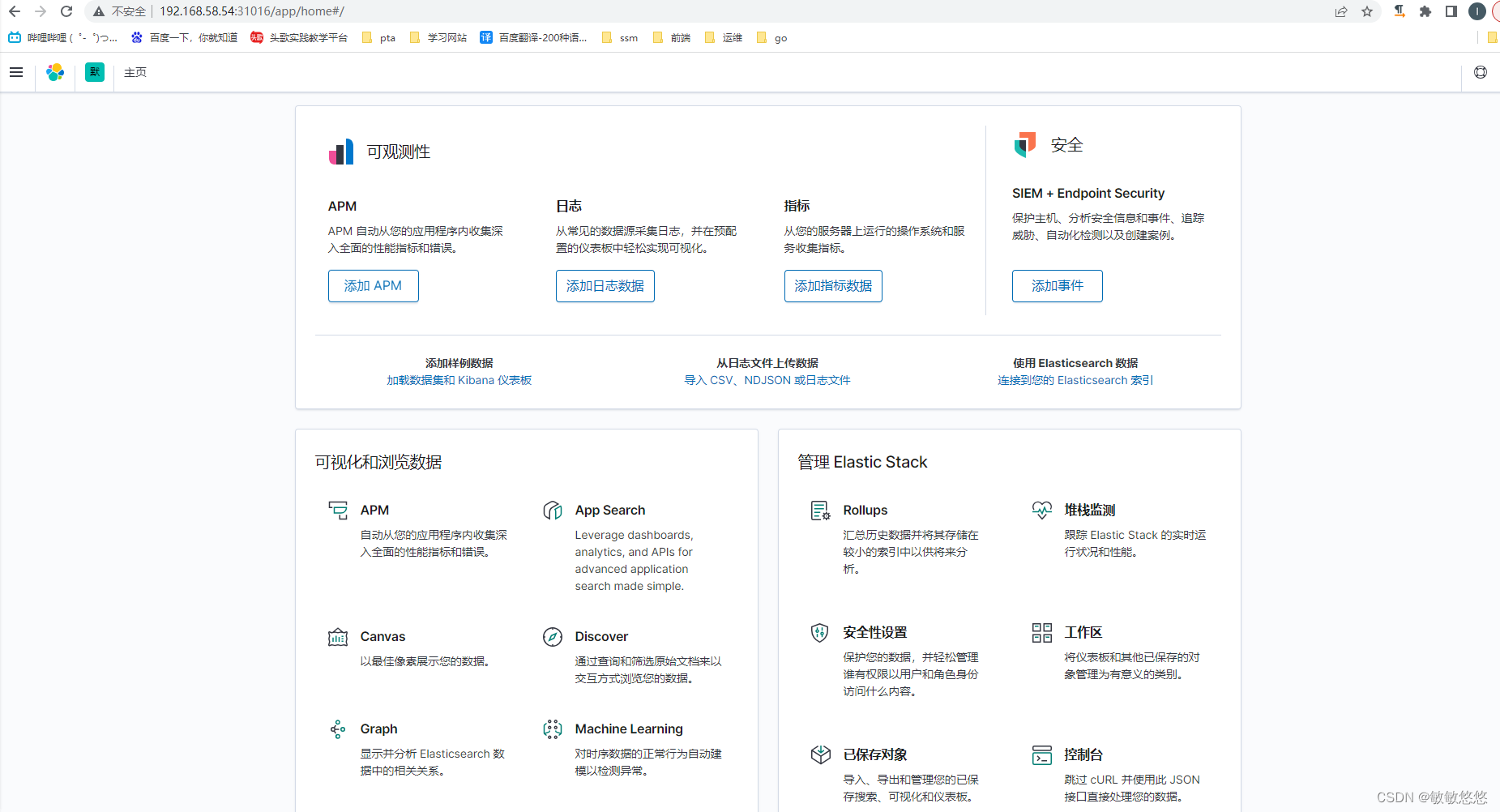

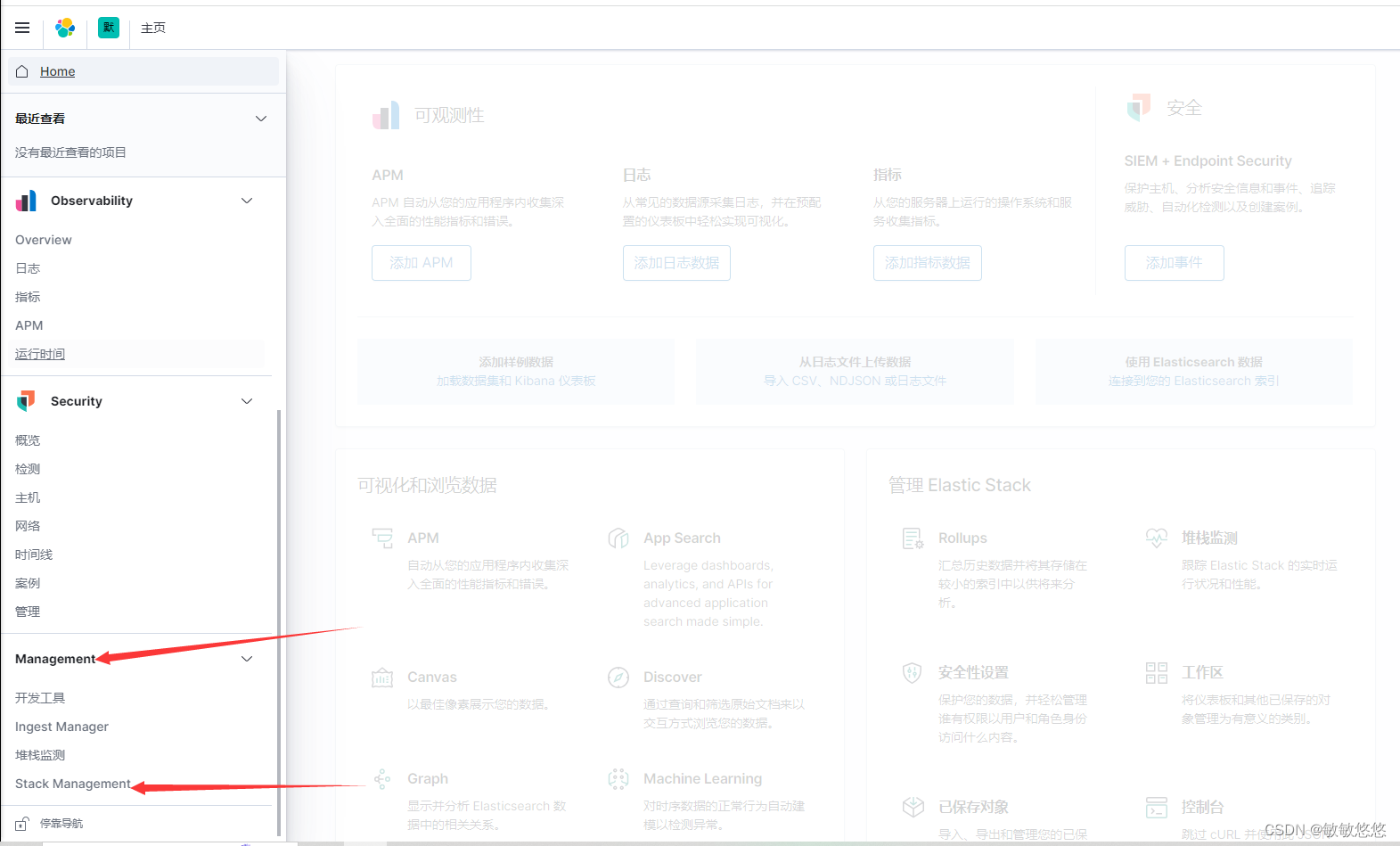

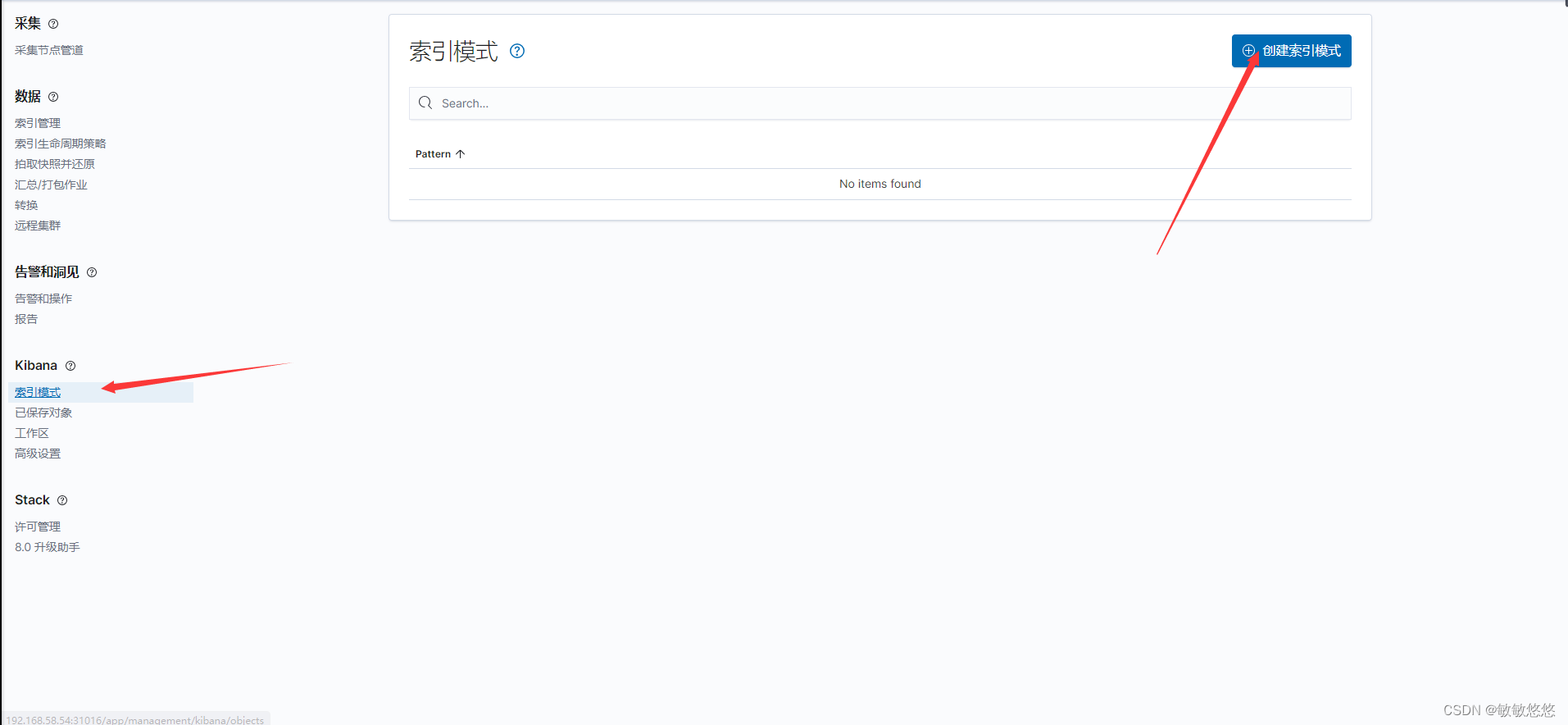

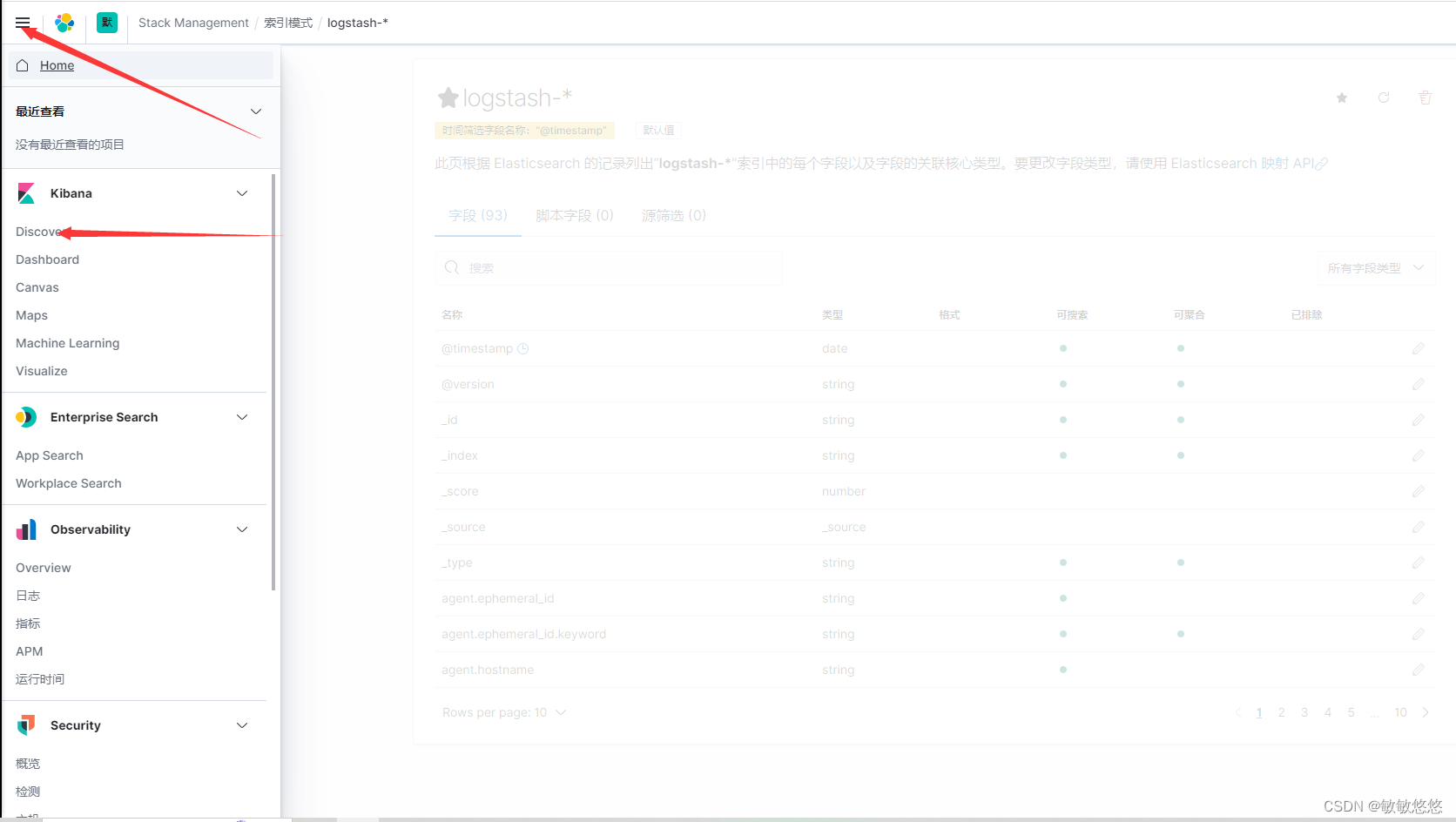

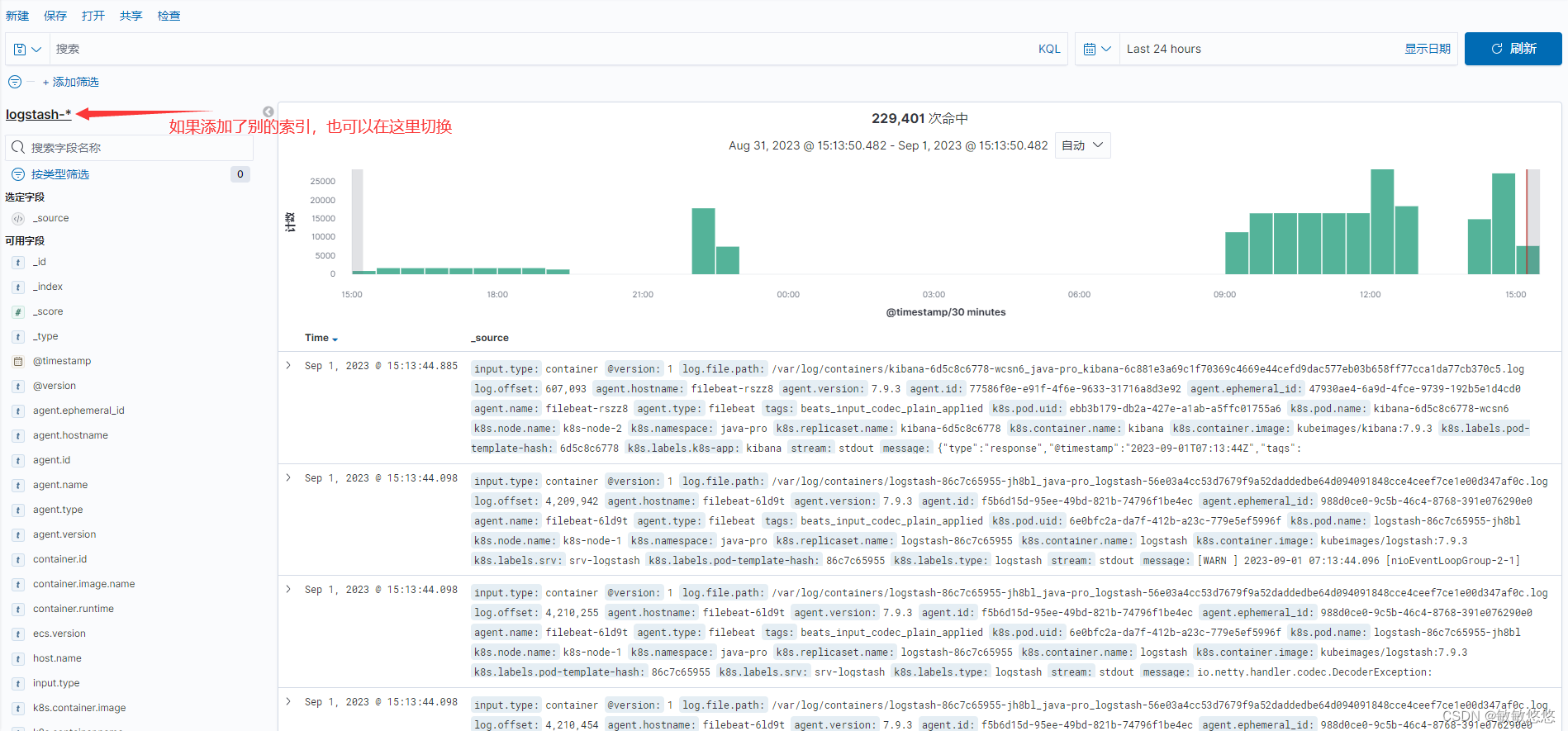

8.2 Configure Kibana
