
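Non-high-availability version

Cluster information

Node planning

The nodes of a k8s cluster fall into two roles by purpose:

* master: the cluster's master node, where the cluster is initialized; at least 2 CPU cores and 4 GB of memory (2C4G)

* slave: a slave (worker) node of the cluster; there can be several, each with at least 2C4G

To demonstrate how slave nodes are added, this example deploys one master and two slaves, planned as follows: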

| Hostname | Node IP | Role | Components |
|---|---|---|---|
| gjx-master | 192.168.1.11 | master | etcd, kube-apiserver, kube-controller-manager, kubectl, kubeadm, kubelet, kube-proxy, flannel |
| gjx-node1 | 192.168.1.12 | slave | kubectl, kubelet, kube-proxy, flannel |
| gjx-node2 | 192.168.1.13 | slave | kubectl, kubelet, kube-proxy, flannel |
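
The 2C4G minimum from the node plan can be checked on each machine before going further. The following is only a sketch: the helper name `meets_minimum` is made up for illustration, and the 3500 MiB default is an assumption that allows for the memory a "4G" machine reserves for the kernel and firmware.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds when the given CPU count and memory (MiB)
# reach the minimum. Defaults (2 CPUs, 3500 MiB) are assumptions that
# approximate the 2C4G guideline.
meets_minimum() {
  local cpus="$1" mem_mib="$2" min_cpus="${3:-2}" min_mem_mib="${4:-3500}"
  [ "$cpus" -ge "$min_cpus" ] && [ "$mem_mib" -ge "$min_mem_mib" ]
}

# Read the local values (Linux only: nproc and /proc/meminfo).
if [ -r /proc/meminfo ]; then
  cpus=$(nproc)
  mem_mib=$(awk '/^MemTotal:/ {print int($2 / 1024)}' /proc/meminfo)
  if meets_minimum "$cpus" "$mem_mib"; then
    echo "OK: ${cpus} CPUs, ${mem_mib} MiB"
  else
    echo "Below 2C4G: ${cpus} CPUs, ${mem_mib} MiB"
  fi
fi
```

Run it on gjx-master, gjx-node1 and gjx-node2; any node reported as below 2C4G is worth resizing before installation.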

Pre-installation preparation

Configure hosts resolution

Nodes: all nodes (gjx-master, gjx-node1, gjx-node2)

* Set the hostname

# On the master node
$ hostnamectl set-hostname gjx-master # set the master node's hostname

# On slave node 1
$ hostnamectl set-hostname gjx-node1 # set node1's hostname

# On slave node 2
$ hostnamectl set-hostname gjx-node2 # set node2's hostname

* Add hosts entries (the IPs must match the node plan above)

$ cat >>/etc/hosts<<EOF
192.168.1.11 gjx-master
192.168.1.12 gjx-node1
192.168.1.13 gjx-node2
EOF

Adjust system configuration

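Nodes: all nodes (gjx-master, gjx-node1, gjx-node2)

Open ports in the security group

If there are no security-group restrictions between the nodes (machines on the internal network can reach each other freely), skip this step. Otherwise, make sure at least the following ports are reachable:

* master node: TCP 6443, 2379, 2380, 60080, 60081; all UDP ports open

* slave nodes: all UDP ports open

Configure iptables

iptables -P FORWARD ACCEPT

Disable the swap partition

swapoff -a
# Prevent the swap partition from being mounted at boot
sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

Disable SELinux and the firewall

sed -ri 's#(SELINUX=).*#\1disabled#' /etc/selinux/config
setenforce 0
systemctl disable firewalld && systemctl stop firewalld

Set kernel parameters

cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward=1
vm.max_map_count=262144
EOF
modprobe br_netfilter
sysctl -p /etc/sysctl.d/k8s.conf

Configure yum repositories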

$ curl -o /etc/yum.repos.d/Centos-7.repo http://mirrors.aliyun.com/repo/Centos-7.repo

$ curl -o /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

$ cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
       http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

$ yum clean all && yum makecache

Install docker

Nodes: all nodes

## List all available versions
$ yum list docker-ce --showduplicates | sort -r

## To install an older version instead: yum install docker-ce-cli-18.09.9-3.el7 docker-ce-18.09.9-3.el7

## Install the version used in this guide
$ yum install docker-ce-20.10.18 -y

## Configure a docker registry mirror and an insecure registry; adjust both to your own environment
$ mkdir -p /etc/docker
$ vi /etc/docker/daemon.json
{
  "insecure-registries": [
    "192.168.1.11:5000"
  ],
  "registry-mirrors": [
    "https://8xpk5wnt.mirror.aliyuncs.com"
  ]
}

## Start docker
$ systemctl enable docker && systemctl start docker

Initialize the cluster

Install kubeadm, kubelet and kubectl

Nodes: run on all nodes

$ yum install -y kubelet-1.24.4 kubeadm-1.24.4 kubectl-1.24.4 --disableexcludes=kubernetes

## Check the kubeadm version
$ kubeadm version

## Enable kubelet at boot
$ systemctl enable kubelet

Configure containerd

Nodes: all nodes

* Point sandbox_image at the Aliyun google_containers mirror:

# Export the default configuration; config.toml does not exist by default
containerd config default > /etc/containerd/config.toml
grep sandbox_image /etc/containerd/config.toml
sed -i "s#k8s.gcr.io/pause#registry.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml
sed -i "s#registry.k8s.io/pause#registry.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml

* Set the containerd cgroup driver to systemd:

sed -i 's#SystemdCgroup = false#SystemdCgroup = true#g' /etc/containerd/config.toml

* Configure a Docker Hub registry mirror:

# Edit /etc/containerd/config.toml and add config_path at line 145

...

144 [plugins."io.containerd.grpc.v1.cri".registry]

145 config_path = "/etc/containerd/certs.d"

146

147 [plugins."io.containerd.grpc.v1.cri".registry.auths]

148

149 [plugins."io.containerd.grpc.v1.cri".registry.configs]

150

151 [plugins."io.containerd.grpc.v1.cri".registry.headers]

152

153 [plugins."io.containerd.grpc.v1.cri".registry.mirrors]

...

# Create the corresponding directory

mkdir -p /etc/containerd/certs.d/docker.io

# Configure the mirrors

cat >/etc/containerd/certs.d/docker.io/hosts.toml <<EOF

server = "https://docker.io"

[host."https://8xpk5wnt.mirror.aliyuncs.com"]

capabilities = ["pull","resolve"]

[host."https://docker.mirrors.ustc.edu.cn"]

capabilities = ["pull","resolve"]

[host."https://registry-1.docker.io"]

capabilities = ["pull","resolve","push"]

EOF

* Configure an insecure private registry:

# The directory name must match the actual registry address in your environment

mkdir -p /etc/containerd/certs.d/192.168.1.11:5000

cat >/etc/containerd/certs.d/192.168.1.11:5000/hosts.toml <<EOF

server = "http://192.168.1.11:5000"

[host."http://192.168.1.11:5000"]

capabilities = ["pull", "resolve", "push"]

skip_verify = true

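EOF

# Restart containerd so that the changes to config.toml take effect
systemctl restart containerd

Initialize the configuration file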

Nodes: master node only

$ kubeadm config print init-defaults > kubeadm.yaml

$ cat kubeadm.yaml
apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.11 # replace with the master node's IP address
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: gjx-master # replace with the master node's hostname
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # replace with a mirror reachable from mainland China
kind: ClusterConfiguration
kubernetesVersion: 1.24.4 # set to 1.24.4
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16 # add this line; it is the range from which pod IPs are allocated
  serviceSubnet: 10.96.0.0/12
scheduler: {}

Pull the images in advance

Nodes: master node only

# List the images that will be used; if everything is correct, you get the following list

$ kubeadm config images list --config kubeadm.yaml

registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.4

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.4

registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.4

registry.aliyuncs.com/google_containers/kube-proxy:v1.24.4

registry.aliyuncs.com/google_containers/pause:3.7

registry.aliyuncs.com/google_containers/etcd:3.5.3-0

registry.aliyuncs.com/google_containers/coredns:v1.8.6

# Pull the images to the local node in advance

$ kubeadm config images pull --config kubeadm.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.24.4

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.24.4

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.24.4

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.24.4

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.7

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.5.3-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:v1.8.6

Initialize the master node

Nodes: master only

$ kubeadm init --config kubeadm.yaml

If initialization succeeds, the following message is printed at the end:

...

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.11:6443 --token abcdef.0123456789abcdef \

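    --discovery-token-ca-cert-hash sha256:1c4305f032f4bf534f628c32f5039084f4b103c922ff71b12a5f0f98d1ca9a4f

Next, follow the message above to configure authentication for the kubectl client:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

⚠️ Note: at this point kubectl get nodes should show the node as NotReady, because no network plugin has been configured yet.

If initialization fails, fix the problem reported in the error message, run kubeadm reset, and then run the init step again.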
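
Since nodes are expected to stay NotReady until the network plugin is installed, it can help to list exactly which nodes are not ready. The sketch below assumes a made-up helper name, `not_ready_nodes`, and filters the text output of `kubectl get nodes --no-headers`; the sample lines are invented so the snippet runs without a cluster.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `kubectl get nodes --no-headers` output on stdin
# and prints the names of nodes whose STATUS column is not exactly "Ready".
# A status such as "Ready,SchedulingDisabled" is therefore also reported.
not_ready_nodes() {
  awk '$2 != "Ready" {print $1}'
}

# On the master, with kubectl configured as above:
#   kubectl get nodes --no-headers | not_ready_nodes
# Demo with invented sample lines instead of a live cluster:
printf '%s\n' \
  'gjx-master   NotReady   control-plane   2m   v1.24.4' \
  'gjx-node1    Ready      <none>          1m   v1.24.4' | not_ready_nodes
```

With the sample input the demo prints gjx-master. An empty result on a real cluster means every node reports Ready.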

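Add the slave nodes to the cluster

Nodes: all slave nodes (gjx-node1, gjx-node2)

On each slave node, run the following command. It is printed at the end of a successful kubeadm init; replace it with the command your own init actually printed.

kubeadm join 192.168.1.11:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:1c4305f032f4bf534f628c32f5039084f4b103c922ff71b12a5f0f98d1ca9a4f

If you no longer have the join command, generate a new one with:

kubeadm token create --print-join-command

Network plugin

Nodes: master node only

* Download the flannel manifest:

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

* If the download fails, the full manifest is reproduced below: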

---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        #image: flannelcni/flannel-cni-plugin:v1.1.0 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel-cni-plugin:v1.1.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        #image: flannelcni/flannel:v0.20.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.1
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        #image: flannelcni/flannel:v0.20.1 for ppc64le and mips64le (dockerhub limitations may apply)
        image: docker.io/rancher/mirrored-flannelcni-flannel:v0.20.1
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate

* Edit the manifest to specify the NIC name. Around line 159 of the file, add one line:

vi kube-flannel.yml
...
150       containers:
151       - name: kube-flannel
152         #image: flannelcni/flannel:v0.19.2 for ppc64le and mips64le (dockerhub limitations may apply)
153         image: docker.io/rancher/mirrored-flannelcni-flannel:v0.19.2
154         command:
155         - /opt/bin/flanneld
156         args:
157         - --ip-masq
158         - --kube-subnet-mgr
159         - --iface=eth0 # on multi-NIC machines, name the internal NIC here; if omitted, flannel uses the interface of the default route
160         resources:
161           requests:
162             cpu: "100m"
163             memory: "50Mi"
...

* Confirm the pod subnet

vi kube-flannel.yml
82   net-conf.json: |
83     {
84       "Network": "10.244.0.0/16",
85       "Backend": {
86         "Type": "vxlan"
87       }
88     }
# Make sure the subnet on line 84 matches the podSubnet in the kubeadm.yaml used for initialization!

* Install the flannel network plugin:

# Apply the flannel manifest
kubectl apply -f kube-flannel.yml
kubectl -n kube-flannel get po -owide

Cluster settings

Make the master node schedulable (optional)

Nodes: gjx-master

After a default deployment, the master node does not schedule business pods. To let the master take part in pod scheduling as well, run:

kubectl taint node gjx-master node-role.kubernetes.io/master:NoSchedule-
kubectl taint node gjx-master node-role.kubernetes.io/control-plane:NoSchedule-

Set up kubectl auto-completion

Nodes: gjx-master

yum install bash-completion -y
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc

Adjust certificate expiry

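In a cluster installed with kubeadm, certificates are valid for 1 year by default. The validity can be extended to 10 years as follows.

cd /etc/kubernetes/pki

# Show the current certificate validity
for i in *.crt; do echo "===== $i ====="; openssl x509 -in "$i" -text -noout | grep -A 3 'Validity'; done

# Back up the existing certificates and keys outside the pki directory
mkdir -p /etc/kubernetes/pki_backup; cp -rp ./* /etc/kubernetes/pki_backup/

git clone https://github.com/yuyicai/update-kube-cert.git
cd update-kube-cert/
bash update-kubeadm-cert.sh all

# If the project cannot be cloned, open it in a browser, copy the contents of update-kubeadm-cert.sh to the machine, and run it there

Verify the cluster

Nodes: run on the master node

kubectl get nodes # check that all cluster nodes are Ready

Create a test nginx service

kubectl run test-nginx --image=nginx:alpine

Check that the pod was created, then access the pod IP to confirm that it responds: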

$ kubectl get po -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-nginx-5bd8859b98-5nnnw 1/1 Running 0 9s 10.244.1.2 k8s-slave1 <none> <none>

$ curl 10.244.1.2

...

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

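</html>

Introduction to containerd clients

Nodes: all nodes

Newer versions of k8s use containerd directly as the container runtime, so services created from now on cannot be queried with docker commands. To operate on the containers on a node, use one of the containerd command-line tools instead. There are currently three:

- ctr

- crictl

- nerdctl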
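
Before choosing among the three, it can be useful to see which of them is actually installed on a node. A minimal sketch follows; the function name `report_tools` is invented for illustration and relies only on the shell builtin `command -v`.

```shell
#!/usr/bin/env bash
# Report, for each named CLI, whether it is on PATH and where.
report_tools() {
  local tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "$tool: $(command -v "$tool")"
    else
      echo "$tool: not installed"
    fi
  done
}

report_tools ctr crictl nerdctl
```

On a node set up as described in this article, ctr and crictl would be expected to resolve to a path, while nerdctl shows as not installed until it is downloaded separately.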

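ctr

ctr is the most basic containerd command-line tool. It is installed together with containerd, so no separate installation is needed.

ctr offers few commands and is not user-friendly, so using it is strongly discouraged.

containerd also has the concept of namespaces, which it uses to support higher-level orchestration systems. The ctr client mainly distinguishes three namespaces: k8s.io, moby and default.

For example, to work with containers and images:

# List the containerd namespaces
ctr ns ls
# List the containers started by containerd
ctr -n k8s.io container ls
# List images
ctr -n k8s.io image ls
# Import an image
ctr -n=k8s.io image import dashboard.tar
# Pull an image from a private registry; this assumes the insecure registry has already been configured under /etc/containerd/certs.d
ctr images pull --user admin:admin --hosts-dir "/etc/containerd/certs.d" 192.168.1.11:5000/eladmin/eladmin-api:v1-rc1
# ctr has no command for viewing container logs, and exec is only available in a low-level form (ctr tasks exec)

crictl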

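crictl is a command-line tool that follows the CRI interface specification. It is typically used to inspect and manage the container runtime and images on kubelet nodes.

Once k8s is installed on a host, the crictl command is available; no separate installation is needed.

crictl uses the k8s.io namespace by default, so there is no need to specify it. Before first use, add a configuration file with the following content:

cat /etc/crictl.yaml
runtime-endpoint: unix:///run/containerd/containerd.sock
image-endpoint: unix:///run/containerd/containerd.sock
timeout: 10
debug: false

Common operations: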

# List containers
crictl ps
# List images
crictl images
# Remove an image
crictl rmi 192.168.1.11:5000/eladmin/eladmin-api:v1-rc1
# Pull an image. Pulling private images requires adding credentials to the containerd configuration, which is cumbersome and insecure
crictl pull nginx:alpine
# Run exec
crictl ps
CONTAINER       IMAGE           CREATED       STATE     NAME         ATTEMPT   POD ID          POD
d23fe516d2eeb   8b0e63fd4fec6   5 hours ago   Running   nginx-test   0         5dbae572dcb6b   nginx-test-5d979bb778-tc5kz
# Note: only the container ID can be used
crictl exec -ti d23fe516d2eeb bash
# View container logs
crictl logs -f d23fe516d2eeb
# Remove unused images
crictl rmi --prune

nerdctl

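nerdctl is the recommended tool; its syntax is essentially the same as that of the docker command. Project page: https://github.com/containerd/nerdctl

Installation:

# Download the minimal package; with the minimal package alone, nerdctl cannot build images
wget https://github.com/containerd/nerdctl/releases/download/v0.23.0/nerdctl-0.23.0-linux-amd64.tar.gz
# Extract it, then copy the nerdctl binary to a directory in $PATH
tar -xzf nerdctl-0.23.0-linux-amd64.tar.gz
cp nerdctl /usr/bin/

Common operations: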

# List containers
nerdctl -n k8s.io ps -a
# Run exec
nerdctl -n k8s.io exec -ti e2cd02190005 sh
# Log in to the image registry
nerdctl login 192.168.1.11:5000
# Pull an image. If k8s should use it, be sure to add -n k8s.io; otherwise it is pulled into the default namespace, and k8s only uses k8s.io
nerdctl -n k8s.io pull 192.168.1.11:5000/eladmin/eladmin-api:v1-rc1
# Start a container
nerdctl -n k8s.io run -d --name test nginx:alpine
# exec
nerdctl -n k8s.io exec -ti test sh
# View logs. Note: nerdctl can only show logs of containers created with nerdctl; containers created by the kubelet in k8s cannot be viewed
nerdctl -n k8s.io logs -f test
# Build an image; this requires installing the buildkit package separately
nerdctl build . -t xxxx:tag -f Dockerfile

Usage tips

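- Once k8s is in use, more than 90% of the basic operations on business applications can be done with the kubectl command line

- For building images, docker build is still recommended; after the image is pushed to a registry, containerd can use it directly

- To view the logs of containers in containerd, use crictl logs, because ctr has no logs command and nerdctl cannot show logs of containers created by the kubelet

- For other routine containerd container operations, nerdctl is recommended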
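
Because crictl logs only accepts a container ID, looking up the ID for a given pod is a frequent first step. The sketch below shows one way to do that by filtering the text output of crictl ps. The helper name `container_ids_for_pod` is invented, and the code assumes the POD column is the last column, as in the sample output shown in the crictl section.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `crictl ps` output on stdin and prints the
# container IDs (first column) of rows whose last column (POD) starts with
# the given prefix. The header row is skipped.
container_ids_for_pod() {
  awk -v prefix="$1" 'NR > 1 && index($NF, prefix) == 1 {print $1}'
}

# On a node (needs root and /etc/crictl.yaml in place):
#   crictl logs -f "$(crictl ps | container_ids_for_pod nginx-test | head -n 1)"
# Demo against the sample row from the crictl section:
container_ids_for_pod nginx-test <<'EOF'
CONTAINER       IMAGE           CREATED       STATE     NAME         ATTEMPT   POD ID          POD
d23fe516d2eeb   8b0e63fd4fec6   5 hours ago   Running   nginx-test   0         5dbae572dcb6b   nginx-test-5d979bb778-tc5kz
EOF
```

The demo prints d23fe516d2eeb. Matching on the last column avoids having to count fields, which is unreliable here because the CREATED column contains spaces.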

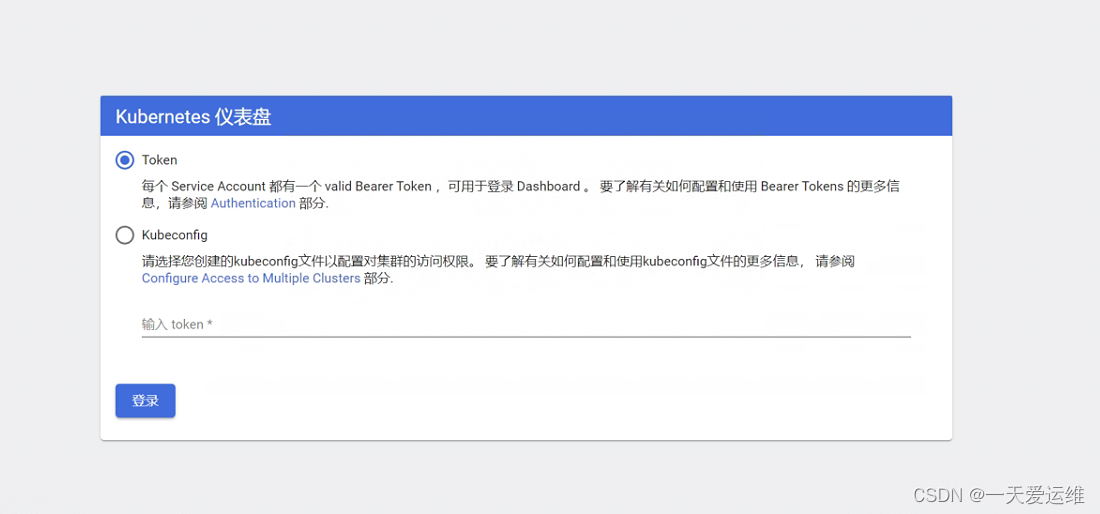

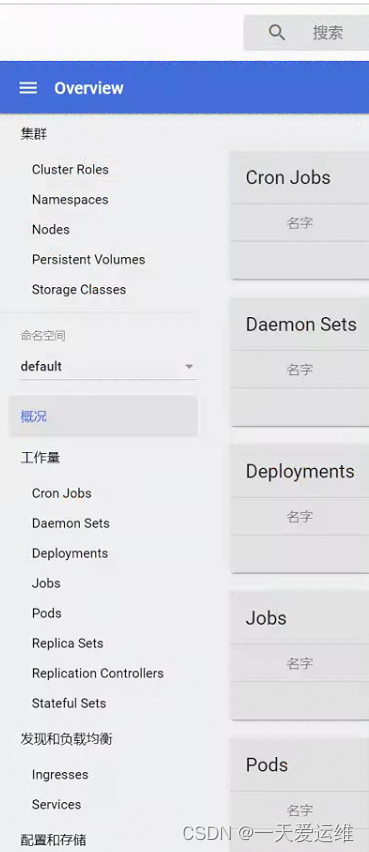

Deploy the dashboard

Deploy the service:

# The following approach is recommended
$ wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.2.0/aio/deploy/recommended.yaml
$ vi recommended.yaml
# Change the Service to type NodePort, around line 45 of the file
......
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort # add type: NodePort to turn this into a NodePort service
......

- Look up the access address; the port is 30133 in this example

$ kubectl apply -f recommended.yaml
$ kubectl -n kubernetes-dashboard get svc
NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
dashboard-metrics-scraper   ClusterIP   10.105.62.124   <none>        8000/TCP        31m
kubernetes-dashboard        NodePort    10.103.74.46    <none>        443:30133/TCP   31m

- Create a ServiceAccount for access

$ vi dashboard-admin.conf
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kubernetes-dashboard

$ kubectl apply -f dashboard-admin.conf

# Create an access token; the command prints the token used to log in to the dashboard
$ kubectl -n kubernetes-dashboard create token admin
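
To open the dashboard, the NodePort has to be combined with a node IP. As a small illustration, the sketch below pulls the NodePort out of a PORT(S) value such as the one in the service listing above and prints the resulting URL. The helper name `node_port_of` is invented, and using the master IP 192.168.1.11 is an assumption; any node IP should work for a NodePort service.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extracts the NodePort from a PORT(S) value
# such as "443:30133/TCP".
node_port_of() {
  local ports="$1"
  ports="${ports#*:}"   # drop the service port and colon, e.g. "443:"
  echo "${ports%%/*}"   # drop the protocol suffix, e.g. "/TCP"
}

# On a live cluster, the PORT(S) column could be read with:
#   kubectl -n kubernetes-dashboard get svc kubernetes-dashboard --no-headers | awk '{print $5}'
# or the NodePort queried directly with:
#   kubectl -n kubernetes-dashboard get svc kubernetes-dashboard -o jsonpath='{.spec.ports[0].nodePort}'
port=$(node_port_of "443:30133/TCP")
echo "https://192.168.1.11:${port}"
```

With the value from this example the snippet prints https://192.168.1.11:30133. The dashboard serves HTTPS with a self-signed certificate, so the browser will show a certificate warning before the login page, where the token created above is pasted.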

Clean up the cluster

If you run into other problems while installing the cluster, you can reset it with the following commands:

# Run on all cluster nodes
kubeadm reset
ifconfig cni0 down && ip link delete cni0
ifconfig flannel.1 down && ip link delete flannel.1
rm -rf /run/flannel/subnet.env
rm -rf /var/lib/cni/
mv /etc/kubernetes/ /tmp
mv /var/lib/etcd /tmp
mv ~/.kube /tmp
iptables -F
iptables -t nat -F
ipvsadm -C
ip link del kube-ipvs0
ip link del dummy0
