综合了以下特点:

1、MobileNetV1的深度可分离卷积DW(depthwise separable convolutions)。

2、MobileNetV2的具有线性瓶颈的逆残差结构(the inverted residual with linear bottleneck)

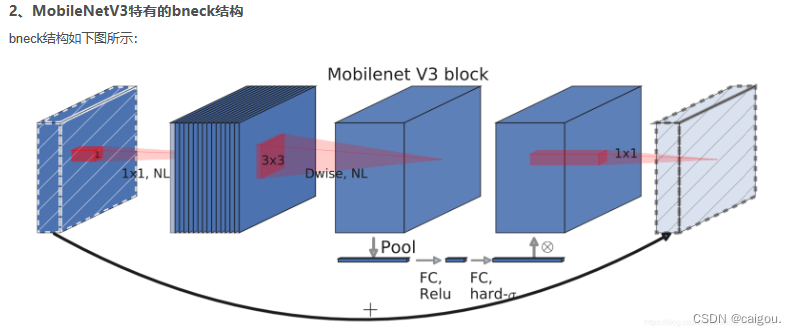

相比V2的创新(如上图)

1. 轻量级的注意力模型:提取用于DW卷积的池化后一维向量,两个fc全连接层,第一个全连接把和特征图通道数一样长度的一维向量缩短为1/4,之后第二个全连接把通道数还原回原来的长度,经过训练的一维向量每个位置的值就是经过DW卷积后的特征矩阵的每一层的权重,把这个每一层的权重和每个通道上的数值相乘就是加入了注意力机制。

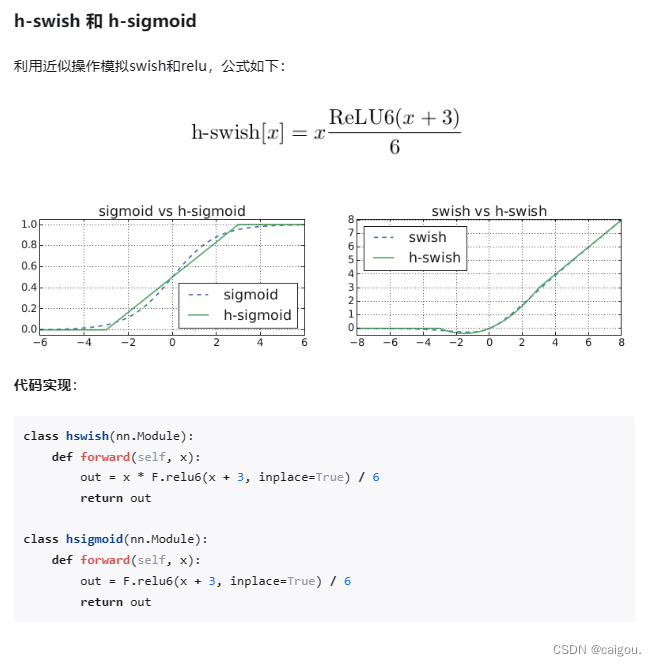

2. 利用h-swish代替swish函数(激活函数,对速度有帮助)。减少运算量,提高性能。

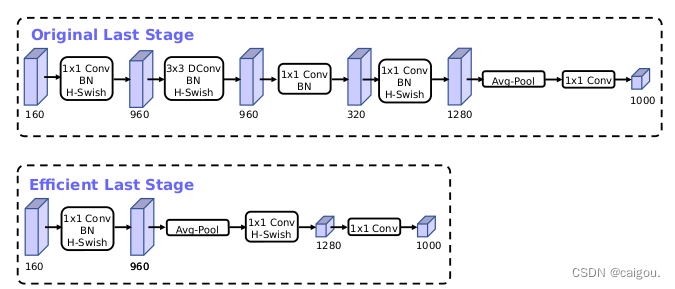

3. 重新设计耗时层结构,减少第一层卷积层的卷积核个数(32->16)(节省2ms),精简最后的全连接层结构(节省7ms,11%)。

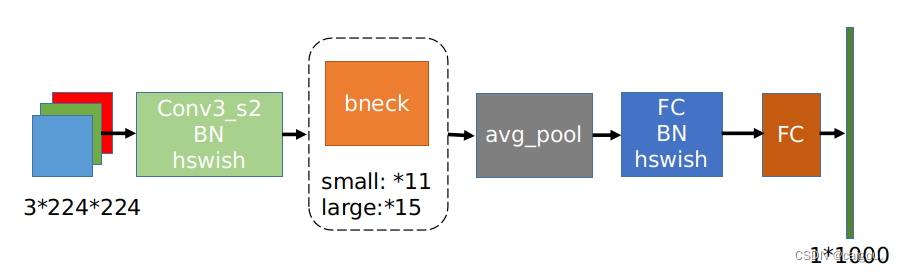

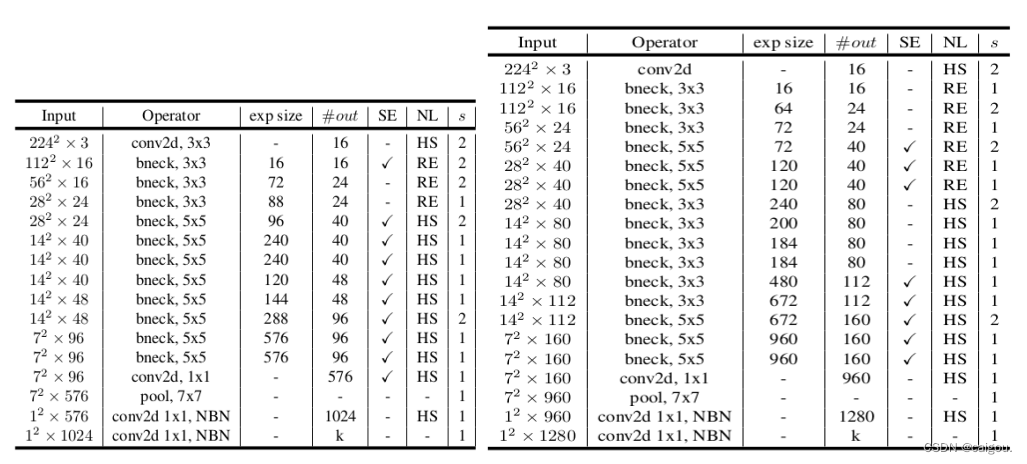

MobilenetV3整体架构:

small和large两个版本的MobileNetV3的参数表:

from typing import Callable, List, Optional

import torch

from torch import nn, Tensor

from torch.nn import functional as F

from functools import partial

def _make_divisible(ch, divisor=8, min_ch=None):

"""

This function is taken from the original tf repo.

It ensures that all layers have a channel number that is divisible by 8

It can be seen here:

https://github.com/tensorflow/models/blob/master/research/slim/nets/mobilenet/mobilenet.py

"""

if min_ch is None:

min_ch = divisor

new_ch = max(min_ch, int(ch + divisor / 2) // divisor * divisor)

# Make sure that round down does not go down by more than 10%.

if new_ch < 0.9 * ch:

new_ch += divisor

return new_ch

class ConvBNActivation(nn.Sequential):

#卷积BN激活层,一个小模块

def __init__(self,

in_planes: int,

out_planes: int,

kernel_size: int = 3,

stride: int = 1,

groups: int = 1,

norm_layer: Optional[Callable[..., nn.Module]] = None,

activation_layer: Optional[Callable[..., nn.Module]] = None):

# groups:控制group参数实现DW卷积,

padding = (kernel_size - 1) // 2

if norm_layer is None:

norm_layer = nn.BatchNorm2d

if activation_layer is None:

activation_layer = nn.ReLU6

#构造ConvBNActivation结构,包括卷积,标准化,激活的结构

super(ConvBNActivation, self).__init__(nn.Conv2d(in_channels=in_planes,

out_channels=out_planes,

kernel_size=kernel_size,

stride=stride,

padding=padding,

groups=groups,

bias=False),

norm_layer(out_planes),

activation_layer(inplace=True))

class SqueezeExcitation(nn.Module):

#注意力机制模块,只需要一个输入:经过DW卷积后的特征图的通道数

def __init__(self, input_c: int, squeeze_factor: int = 4):

super(SqueezeExcitation, self).__init__()

#squeeze_c:注意力机制第一个全连接层处理后的一维矩阵的长度是原来特征图的1/4

squeeze_c = _make_divisible(input_c // squeeze_factor, 8)

self.fc1 = nn.Conv2d(input_c, squeeze_c, 1)

self.fc2 = nn.Conv2d(squeeze_c, input_c, 1)

def forward(self, x: Tensor) -> Tensor:

scale = F.adaptive_avg_pool2d(x, output_size=(1, 1))

scale = self.fc1(scale)

scale = F.relu(scale, inplace=True)

scale = self.fc2(scale)

scale = F.hardsigmoid(scale, inplace=True)

#x是输入特征图,scale是经过训练注意力机制后的参数,把这个类似特征图的通道权重的参数乘上每一个通道的数值,就是引入了注意力机制

return scale * x

class InvertedResidualConfig:

#MobileNetV3中每一个 bneck 模块的结构参数配置

def __init__(self,

input_c: int,

kernel: int,

expanded_c: int,

out_c: int,

use_se: bool,

activation: str,

stride: int,

width_multi: float):

#width_multi是alpha系数,决定模型整体的参数

#对input_c,expanded_c进行alpha因子的调整,这个expand_c是PW升维卷积后的维度,

# 和MobileNetV2中的t的通道缩放倍数不一样,这里直接是升维后的通道数,不是一个倍数

self.input_c = self.adjust_channels(input_c, width_multi)

self.kernel = kernel

self.expanded_c = self.adjust_channels(expanded_c, width_multi)

self.out_c = self.adjust_channels(out_c, width_multi)

#se是是否使用注意力机制参数,一个布尔变量

self.use_se = use_se

self.use_hs = activation == "HS" # whether using h-swish activation

self.stride = stride

@staticmethod

#引入alpha参数width_multi后,模型依然是8的整数倍,和计算机底层实现原理有关

def adjust_channels(channels: int, width_multi: float):

return _make_divisible(channels * width_multi, 8)

class InvertedResidual(nn.Module):

#构造MobileNerV3的 bneck 模块,总体也是倒残差模块

def __init__(self,

cnf: InvertedResidualConfig,

norm_layer: Callable[..., nn.Module]):

super(InvertedResidual, self).__init__()

#整个模型stride只能是1和2,不是这两个直接抛出错误

if cnf.stride not in [1, 2]:

raise ValueError("illegal stride value.")

#判断是否使用shortcut连接,满足两个条件才使用shortcut连接

self.use_res_connect = (cnf.stride == 1 and cnf.input_c == cnf.out_c)

#layers是一个元素类型为nn.Module的列表,根据bneck配置参数r判断activation_layer激活层使用哪种激活函数

layers: List[nn.Module] = []

activation_layer = nn.Hardswish if cnf.use_hs else nn.ReLU

# expand 或者叫pw升维

#expanded_c是PW卷积升维后的维度,如果和输入通道数不相等,说明要用PW卷积升维,

#以下就是把PW升维模块加入layers

if cnf.expanded_c != cnf.input_c:

layers.append(ConvBNActivation(cnf.input_c,

cnf.expanded_c,

kernel_size=1,

norm_layer=norm_layer,

activation_layer=activation_layer))

# depthwise dw卷积,再MobileNetV3中卷积核大小不全是3*3

layers.append(ConvBNActivation(cnf.expanded_c,

cnf.expanded_c,

kernel_size=cnf.kernel,

stride=cnf.stride,

groups=cnf.expanded_c,

norm_layer=norm_layer,

activation_layer=activation_layer))

#决定是否引入注意力机制,注意力机制只需传入DW卷积后特征图的维度

#即PW升维后的维度

if cnf.use_se:

layers.append(SqueezeExcitation(cnf.expanded_c))

# project

#这个是PW降维操作,这里改变通道维度作为这一层bneck结构的输出维度

#同时注意这一层的激活函数是线性激活,这是MobileNetV2的一个亮点

#MobileNetV2的另一个亮点就是倒残差结构,PW+DW+PW

layers.append(ConvBNActivation(cnf.expanded_c,

cnf.out_c,

kernel_size=1,

norm_layer=norm_layer,

activation_layer=nn.Identity))

#注意:block只是一个到残差bneck模块,这也是这个模块前向传播里shortcut连接的依据

self.block = nn.Sequential(*layers)

self.out_channels = cnf.out_c

self.is_strided = cnf.stride > 1

def forward(self, x: Tensor) -> Tensor:

result = self.block(x)

#判断是否使用shortcut捷径连接

if self.use_res_connect:

result += x

return result

class MobileNetV3(nn.Module):

#构建MobileNetV3模型

def __init__(self,

inverted_residual_setting: List[InvertedResidualConfig],

last_channel: int,

num_classes: int = 1000,

block: Optional[Callable[..., nn.Module]] = None,

norm_layer: Optional[Callable[..., nn.Module]] = None):

# inverted_residual_setting 这个是bneck模块参数配置列表,每一行表示一个bneck的参数配置

# 并且每一行的元素都是InvertedResidualConfig 类型

super(MobileNetV3, self).__init__()

#判断是否有inverted_residual_setting参数,没有bneck配置参数是没办法构建模型的

if not inverted_residual_setting:

raise ValueError("The inverted_residual_setting should not be empty.")

#检查 inverted_residual_setting 是否为列表类型,并且列表中的每个元素是否都是 InvertedResidualConfig 类型

elif not (isinstance(inverted_residual_setting, List) and

all([isinstance(s, InvertedResidualConfig) for s in inverted_residual_setting])):

raise TypeError("The inverted_residual_setting should be List[InvertedResidualConfig]")

if block is None:

# 定义block为倒残差块

block = InvertedResidual

if norm_layer is None:

norm_layer = partial(nn.BatchNorm2d, eps=0.001, momentum=0.01)

# layers是一个元素类型为nn.Module的列表,根据bneck配置参数r判断activation_layer激活层使用哪种激活函数

layers: List[nn.Module] = []

# building first layer

# 注意firstconv_output_c是得到第一个卷积层的输出通道数,利用inverted_residual_setting列表里第一个

# InvertedResidualConfig 类型参数的输入通道数推出整个模型第一个卷积块的输出通道数

firstconv_output_c = inverted_residual_setting[0].input_c

#第一层conv2d卷积操作

layers.append(ConvBNActivation(3,

firstconv_output_c,

kernel_size=3,

stride=2,

norm_layer=norm_layer,

activation_layer=nn.Hardswish))

# building inverted residual blocks

#遍历配置参数,根据每个bneck模块参数构建接下来的MobileNetV3模型

for cnf in inverted_residual_setting:

layers.append(block(cnf, norm_layer))

# building last several layers

# 构建特征层feature的最后一个卷积层

# 最后卷积层的输入通道数是最后bneck的输出通道数,

# 最后卷积层的输出通道数是输入通道数的6倍

lastconv_input_c = inverted_residual_setting[-1].out_c

lastconv_output_c = 6 * lastconv_input_c

layers.append(ConvBNActivation(lastconv_input_c,

lastconv_output_c,

kernel_size=1,

norm_layer=norm_layer,

activation_layer=nn.Hardswish))

#打包以上所有卷积为特征层,features,这是官方的代码,

#官方的训练权重也是在这个结构的代码上得到的符合这个结构的权重

self.features = nn.Sequential(*layers)

#自适应池化,7*7*960 -> 1*1*960

self.avgpool = nn.AdaptiveAvgPool2d(1)

#nn.Hardswish 改进的 Swish 激活函数

self.classifier = nn.Sequential(nn.Linear(lastconv_output_c, last_channel),

nn.Hardswish(inplace=True),

nn.Dropout(p=0.2, inplace=True),

nn.Linear(last_channel, num_classes))

# initial weights

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.kaiming_normal_(m.weight, mode="fan_out")

if m.bias is not None:

nn.init.zeros_(m.bias)

elif isinstance(m, (nn.BatchNorm2d, nn.GroupNorm)):

nn.init.ones_(m.weight)

nn.init.zeros_(m.bias)

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight, 0, 0.01)

nn.init.zeros_(m.bias)

def _forward_impl(self, x: Tensor) -> Tensor:

x = self.features(x)

x = self.avgpool(x)

x = torch.flatten(x, 1)

x = self.classifier(x)

return x

def forward(self, x: Tensor) -> Tensor:

return self._forward_impl(x)

def mobilenet_v3_large(num_classes: int = 1000,

reduced_tail: bool = False) -> MobileNetV3:

"""

Constructs a large MobileNetV3 architecture from

"Searching for MobileNetV3" <https://arxiv.org/abs/1905.02244>.

weights_link:

https://download.pytorch.org/models/mobilenet_v3_large-8738ca79.pth

Args:

num_classes (int): number of classes

reduced_tail (bool): If True, reduces the channel counts of all feature layers

between C4 and C5 by 2. It is used to reduce the channel redundancy in the

backbone for Detection and Segmentation.

"""

width_multi = 1.0 #alpha系数

#partial是python的一个语法,初始化默认参数,提高网络训练速度

bneck_conf = partial(InvertedResidualConfig, width_multi=width_multi)

adjust_channels = partial(InvertedResidualConfig.adjust_channels, width_multi=width_multi)

# 这个残差对最后3个bneck结构进行通道减少参数,可以设置False,不使用

reduce_divider = 2 if reduced_tail else 1

# 倒残差层的参数设计

inverted_residual_setting = [

# input_c, kernel, expanded_c, out_c, use_se, activation, stride

bneck_conf(16, 3, 16, 16, False, "RE", 1),

bneck_conf(16, 3, 64, 24, False, "RE", 2), # C1

bneck_conf(24, 3, 72, 24, False, "RE", 1),

bneck_conf(24, 5, 72, 40, True, "RE", 2), # C2

bneck_conf(40, 5, 120, 40, True, "RE", 1),

bneck_conf(40, 5, 120, 40, True, "RE", 1),

bneck_conf(40, 3, 240, 80, False, "HS", 2), # C3

bneck_conf(80, 3, 200, 80, False, "HS", 1),

bneck_conf(80, 3, 184, 80, False, "HS", 1),

bneck_conf(80, 3, 184, 80, False, "HS", 1),

bneck_conf(80, 3, 480, 112, True, "HS", 1),

bneck_conf(112, 3, 672, 112, True, "HS", 1),

bneck_conf(112, 5, 672, 160 // reduce_divider, True, "HS", 2), # C4

bneck_conf(160 // reduce_divider, 5, 960 // reduce_divider, 160 // reduce_divider, True, "HS", 1),

bneck_conf(160 // reduce_divider, 5, 960 // reduce_divider, 160 // reduce_divider, True, "HS", 1),

]

last_channel = adjust_channels(1280 // reduce_divider) # C5

#参数传入MobileNetV3,构建mobilenet_v3_large模型

return MobileNetV3(inverted_residual_setting=inverted_residual_setting,

last_channel=last_channel,

num_classes=num_classes)

def mobilenet_v3_small(num_classes: int = 1000,

reduced_tail: bool = False) -> MobileNetV3:

"""

Constructs a large MobileNetV3 architecture from

"Searching for MobileNetV3" <https://arxiv.org/abs/1905.02244>.

weights_link:

https://download.pytorch.org/models/mobilenet_v3_small-047dcff4.pth

Args:

num_classes (int): number of classes

reduced_tail (bool): If True, reduces the channel counts of all feature layers

between C4 and C5 by 2. It is used to reduce the channel redundancy in the

backbone for Detection and Segmentation.

"""

width_multi = 1.0

bneck_conf = partial(InvertedResidualConfig, width_multi=width_multi)

adjust_channels = partial(InvertedResidualConfig.adjust_channels, width_multi=width_multi)

reduce_divider = 2 if reduced_tail else 1

inverted_residual_setting = [

# input_c, kernel, expanded_c, out_c, use_se, activation, stride

bneck_conf(16, 3, 16, 16, True, "RE", 2), # C1

bneck_conf(16, 3, 72, 24, False, "RE", 2), # C2

bneck_conf(24, 3, 88, 24, False, "RE", 1),

bneck_conf(24, 5, 96, 40, True, "HS", 2), # C3

bneck_conf(40, 5, 240, 40, True, "HS", 1),

bneck_conf(40, 5, 240, 40, True, "HS", 1),

bneck_conf(40, 5, 120, 48, True, "HS", 1),

bneck_conf(48, 5, 144, 48, True, "HS", 1),

bneck_conf(48, 5, 288, 96 // reduce_divider, True, "HS", 2), # C4

bneck_conf(96 // reduce_divider, 5, 576 // reduce_divider, 96 // reduce_divider, True, "HS", 1),

bneck_conf(96 // reduce_divider, 5, 576 // reduce_divider, 96 // reduce_divider, True, "HS", 1)

]

last_channel = adjust_channels(1024 // reduce_divider) # C5

return MobileNetV3(inverted_residual_setting=inverted_residual_setting,

last_channel=last_channel,

num_classes=num_classes)

train.py

MobileNetV3_large模型也是冻结预训练权重进行训练的

import os

import sys

import json

import torch

import torch.nn as nn

import torch.optim as optim

from torchvision import transforms, datasets

from tqdm import tqdm

from model_v2 import MobileNetV2

from model_v3 import mobilenet_v3_large

def main():

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print("using {} device.".format(device))

batch_size = 16

epochs = 5

data_transform = {

"train": transforms.Compose([transforms.RandomResizedCrop(224),

transforms.RandomHorizontalFlip(),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])]),

"val": transforms.Compose([transforms.Resize(256),

transforms.CenterCrop(224),

transforms.ToTensor(),

transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])}

data_root = os.path.abspath(os.path.join(os.getcwd(), "../..")) # get data root path

image_path = os.path.join(data_root, "data_set", "flower_data") # flower data set path

assert os.path.exists(image_path), "{} path does not exist.".format(image_path)

train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),

transform=data_transform["train"])

train_num = len(train_dataset)

# {'daisy':0, 'dandelion':1, 'roses':2, 'sunflower':3, 'tulips':4}

flower_list = train_dataset.class_to_idx

cla_dict = dict((val, key) for key, val in flower_list.items())

# write dict into json file

json_str = json.dumps(cla_dict, indent=4)

with open('class_indices.json', 'w') as json_file:

json_file.write(json_str)

nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8]) # number of workers

print('Using {} dataloader workers every process'.format(nw))

train_loader = torch.utils.data.DataLoader(train_dataset,

batch_size=batch_size, shuffle=True,

num_workers=nw)

validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),

transform=data_transform["val"])

val_num = len(validate_dataset)

validate_loader = torch.utils.data.DataLoader(validate_dataset,

batch_size=batch_size, shuffle=False,

num_workers=nw)

print("using {} images for training, {} images for validation.".format(train_num,

val_num))

# create model

net = mobilenet_v3_large(num_classes=5)

# load pretrain weights

# download url: https://download.pytorch.org/models/mobilenet_v2-b0353104.pth

model_weight_path = "./mobilenet_v3_large-pre.pth"

assert os.path.exists(model_weight_path), "file {} dose not exist.".format(model_weight_path)

pre_weights = torch.load(model_weight_path, map_location='cpu')

# delete classifier weights

pre_dict = {k: v for k, v in pre_weights.items() if net.state_dict()[k].numel() == v.numel()}

missing_keys, unexpected_keys = net.load_state_dict(pre_dict, strict=False)

# freeze features weights

for param in net.features.parameters():

param.requires_grad = False

net.to(device)

# define loss function

loss_function = nn.CrossEntropyLoss()

# construct an optimizer

params = [p for p in net.parameters() if p.requires_grad]

optimizer = optim.Adam(params, lr=0.0001)

best_acc = 0.0

save_path = './MobileNetV3_large.pth'

train_steps = len(train_loader)

for epoch in range(epochs):

# train

net.train()

running_loss = 0.0

train_bar = tqdm(train_loader, file=sys.stdout)

for step, data in enumerate(train_bar):

images, labels = data

optimizer.zero_grad()

logits = net(images.to(device))

loss = loss_function(logits, labels.to(device))

loss.backward()

optimizer.step()

# print statistics

running_loss += loss.item()

train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1,

epochs,

loss)

# validate

net.eval()

acc = 0.0 # accumulate accurate number / epoch

with torch.no_grad():

val_bar = tqdm(validate_loader, file=sys.stdout)

for val_data in val_bar:

val_images, val_labels = val_data

outputs = net(val_images.to(device))

# loss = loss_function(outputs, test_labels)

predict_y = torch.max(outputs, dim=1)[1]

acc += torch.eq(predict_y, val_labels.to(device)).sum().item()

val_bar.desc = "valid epoch[{}/{}]".format(epoch + 1,

epochs)

val_accurate = acc / val_num

print('[epoch %d] train_loss: %.3f val_accuracy: %.3f' %

(epoch + 1, running_loss / train_steps, val_accurate))

if val_accurate > best_acc:

best_acc = val_accurate

torch.save(net.state_dict(), save_path)

print('Finished Training')

if __name__ == '__main__':

main()

训练结果

using cuda:0 device.

Using 8 dataloader workers every process

using 3306 images for training, 364 images for validation.

train epoch[1/5] loss:0.878: 100%|██████████| 207/207 [00:22<00:00, 9.17it/s]

valid epoch[1/5]: 100%|██████████| 23/23 [00:13<00:00, 1.64it/s]

[epoch 1] train_loss: 0.892 val_accuracy: 0.876

train epoch[2/5] loss:0.280: 100%|██████████| 207/207 [00:19<00:00, 10.66it/s]

valid epoch[2/5]: 100%|██████████| 23/23 [00:13<00:00, 1.67it/s]

[epoch 2] train_loss: 0.512 val_accuracy: 0.898

train epoch[3/5] loss:0.714: 100%|██████████| 207/207 [00:19<00:00, 10.76it/s]

valid epoch[3/5]: 100%|██████████| 23/23 [00:13<00:00, 1.66it/s]

[epoch 3] train_loss: 0.452 val_accuracy: 0.890

train epoch[4/5] loss:0.507: 100%|██████████| 207/207 [00:18<00:00, 11.07it/s]

valid epoch[4/5]: 100%|██████████| 23/23 [00:13<00:00, 1.70it/s]

[epoch 4] train_loss: 0.415 val_accuracy: 0.901

train epoch[5/5] loss:0.400: 100%|██████████| 207/207 [00:18<00:00, 11.04it/s]

valid epoch[5/5]: 100%|██████████| 23/23 [00:13<00:00, 1.71it/s]

[epoch 5] train_loss: 0.391 val_accuracy: 0.909

Finished Training

9万+

9万+

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?