Implementing the Streaming Output Effect

1. Overview

To get streaming output, the API requires setting stream=True.

Surprise!!! Even the official documentation has a bug!!!

The official streaming example (API Guides ==> Reasoning Model ==> streaming output) is as follows:

from openai import OpenAI

client = OpenAI(api_key="sk-123456", base_url="https://api.deepseek.com")

# Round 1
messages = [{"role": "user", "content": "9.11 and 9.8, which is greater?"}]
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages,
    stream=True
)

reasoning_content = ""
content = ""

for chunk in response:
    if chunk.choices[0].delta.reasoning_content:
        reasoning_content += chunk.choices[0].delta.reasoning_content
    else:
        content += chunk.choices[0].delta.content

# Round 2
messages.append({"role": "assistant", "content": content})
messages.append({'role': 'user', 'content': "How many Rs are there in the word 'strawberry'?"})
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages,
    stream=True
)
# ...

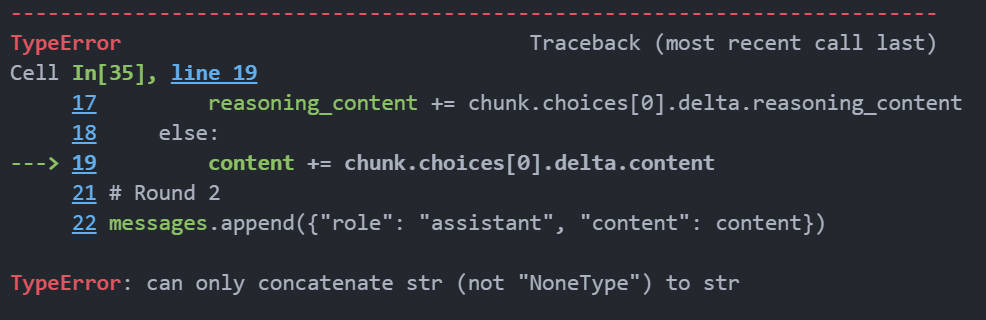

Running this code, however, raises an error.

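Note: in a multi-round conversation, the difference between streaming and non-streaming output is that, with streaming, the previous round's reply arrives in pieces. Those pieces must be concatenated into one string before it is appended to messages for the next round.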

1.1 The error in the official docs and how to fix it

1.1.1 The faulty code and why it fails

for chunk in response:
    if chunk.choices[0].delta.reasoning_content:
        reasoning_content += chunk.choices[0].delta.reasoning_content
    else:
        content += chunk.choices[0].delta.content

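This is the faulty part. The error message says that a str can only be concatenated with another str (TypeError: can only concatenate str (not "NoneType") to str). Why?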

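Because a chunk does not always carry either reasoning_content or content: some chunks are empty, with content set to None. Such a chunk falls into the bare else branch, which then evaluates content += None and raises the TypeError. A plain else is therefore not enough.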
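The failure can be reproduced without calling the API. This is a minimal sketch that assumes, as described above, that a chunk may carry neither field; the hypothetical fake_chunk helper builds types.SimpleNamespace objects that only imitate the chunk.choices[0].delta shape of the SDK's real chunk objects.

```python
from types import SimpleNamespace


def fake_chunk(reasoning_content=None, content=None):
    """Hypothetical offline stand-in shaped like chunk.choices[0].delta."""
    delta = SimpleNamespace(reasoning_content=reasoning_content, content=content)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


# Simulated stream: an empty chunk first, then reasoning, then the answer
chunks = [
    fake_chunk(),
    fake_chunk(reasoning_content="Compare 9.11 and 9.8..."),
    fake_chunk(content="9.8 is greater."),
]

reasoning_content = ""
content = ""
error = None
try:
    for chunk in chunks:
        if chunk.choices[0].delta.reasoning_content:
            reasoning_content += chunk.choices[0].delta.reasoning_content
        else:
            # The empty chunk lands here, so this evaluates content += None
            content += chunk.choices[0].delta.content
except TypeError as exc:
    error = str(exc)

print(error)
```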

1.1.2 Fixing the official example

for chunk in response:
    if chunk.choices[0].delta.reasoning_content:
        reasoning_content += chunk.choices[0].delta.reasoning_content
    elif chunk.choices[0].delta.content:
        content += chunk.choices[0].delta.content

With this change, the code runs to completion without errors.
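With the corrected check, the Round 2 part of the official example can proceed. Below is an offline sketch: the hypothetical helper collect_stream applies the same if/elif logic to fake chunks (types.SimpleNamespace stand-ins, not the SDK's objects) and returns both strings; as in the original example, only the final answer (content) is appended to messages for the next round.

```python
from types import SimpleNamespace


def fake_chunk(reasoning_content=None, content=None):
    """Hypothetical offline stand-in shaped like chunk.choices[0].delta."""
    delta = SimpleNamespace(reasoning_content=reasoning_content, content=content)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


def collect_stream(response):
    """Join a streamed reply using the corrected if/elif check."""
    reasoning_content = ""
    content = ""
    for chunk in response:
        delta = chunk.choices[0].delta
        if delta.reasoning_content:
            reasoning_content += delta.reasoning_content
        elif delta.content:  # chunks where both fields are None or "" are skipped
            content += delta.content
    return reasoning_content, content


# Round 1, with a simulated stream instead of a real API call
messages = [{"role": "user", "content": "9.11 and 9.8, which is greater?"}]
round1 = [fake_chunk(), fake_chunk(reasoning_content="Compare decimals."),
          fake_chunk(content="9.8 "), fake_chunk(content="is greater."),
          fake_chunk(content="")]
reasoning, answer = collect_stream(round1)

# Round 2: append the joined answer, then the next question
messages.append({"role": "assistant", "content": answer})
messages.append({"role": "user", "content": "How many Rs are there in the word 'strawberry'?"})
print(messages)
```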

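2. The streaming examples below use the DeepSeek-R1 model. The V3 model also works, but it produces no chain of thought, which makes it too simple for this demonstration.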

3. Implementing streaming output

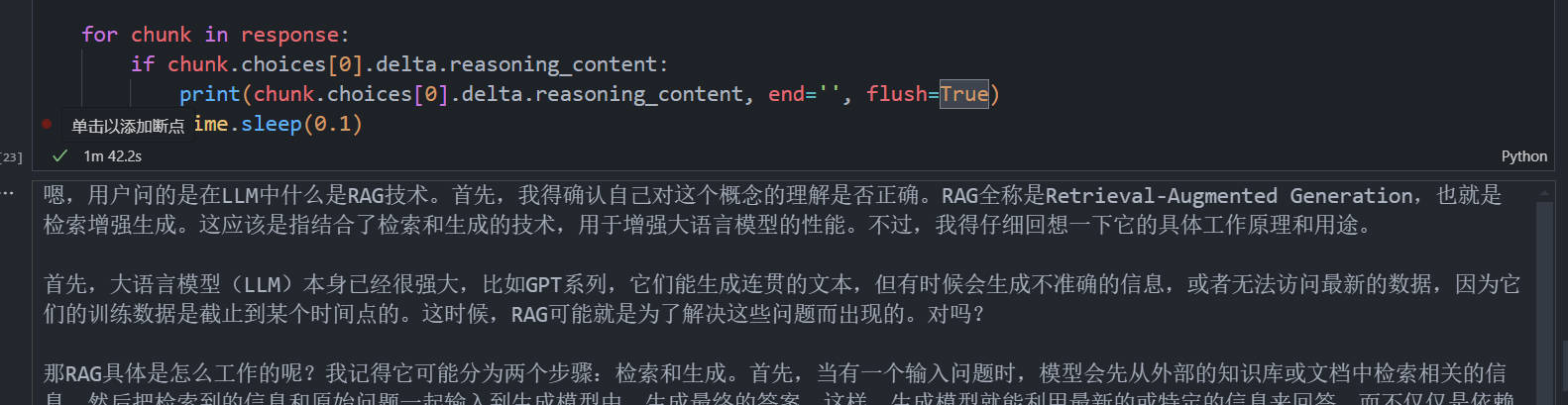

3.1 Streaming only the chain of thought

The code is as follows:

from openai import OpenAI
import time

client = OpenAI(api_key="sk-123456", base_url="https://api.deepseek.com")

messages = [{"role": "user", "content": "In LLMs, what is RAG?"}]
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages,
    stream=True)

for chunk in response:
    # Only print chunks that carry chain-of-thought text
    if chunk.choices[0].delta.reasoning_content:
        print(chunk.choices[0].delta.reasoning_content, end='', flush=True)
        time.sleep(0.1)

Explanation:

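- Imports the time module.

- Takes each chunk from response and checks whether it carries reasoning_content: if chunk.choices[0].delta.reasoning_content:

- In print(), end='' keeps the program from starting a new line after every piece of text (the default is end='\n'). flush=True (the default is False) forces the buffer to be written out immediately, so the text appears in real time.

- time.sleep(0.1) controls the output speed: after each chunk is printed, the program pauses for 0.1 seconds. This simulates a word-by-word typing effect and keeps the content from appearing all at once, which improves the user experience.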
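The end='' / flush=True / time.sleep() pattern can be wrapped in a small function. This is only a sketch: type_out is a hypothetical helper, and its file parameter (defaulting to sys.stdout) is an addition that lets the effect be checked with io.StringIO; delay=0 turns the artificial pause off.

```python
import io
import sys
import time


def type_out(pieces, delay=0.1, file=None):
    """Print each piece on the same line, flush it immediately, then pause."""
    out = file if file is not None else sys.stdout
    for piece in pieces:
        print(piece, end='', flush=True, file=out)  # no newline, no buffering delay
        time.sleep(delay)                           # simulated typing speed


buf = io.StringIO()
type_out(["RAG ", "combines ", "retrieval ", "with generation."], delay=0, file=buf)
print(buf.getvalue())
```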

Result:

3.2 Streaming only the final answer

Likewise, the code is as follows:

from openai import OpenAI
import time

client = OpenAI(api_key="sk-123456", base_url="https://api.deepseek.com")

messages = [{"role": "user", "content": "In LLMs, what is RAG?"}]
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages,
    stream=True)

for chunk in response:
    # Only print chunks that carry answer text
    if chunk.choices[0].delta.content:
        print(chunk.choices[0].delta.content, end='', flush=True)
        time.sleep(0.1)

The only change is that reasoning_content is replaced with content.

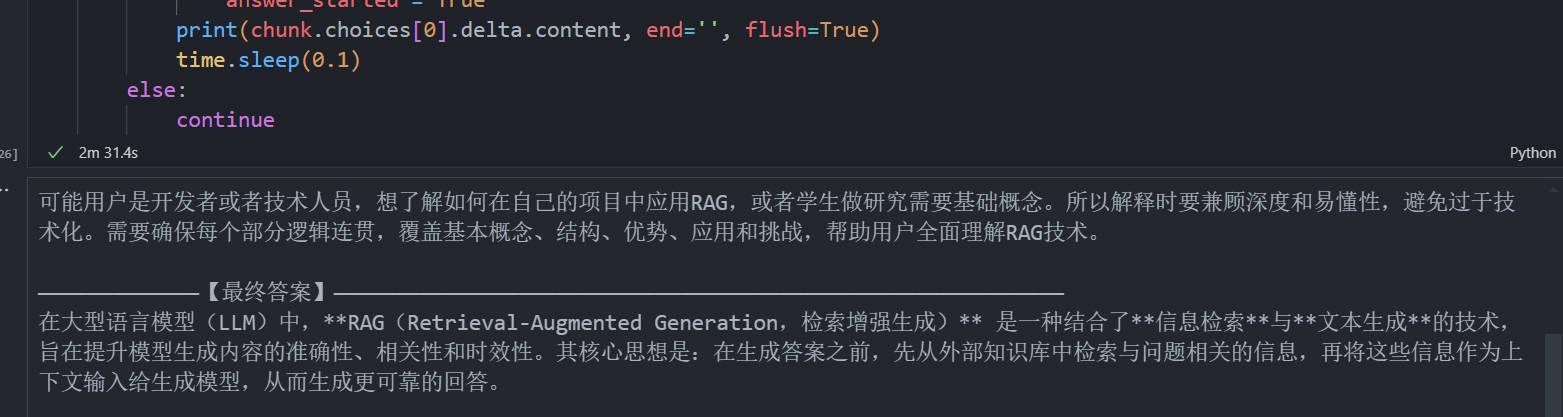
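Since 3.1 and 3.2 differ only in the field name, a single hypothetical helper can cover both by reading the field with getattr. This offline sketch runs on fake chunks (types.SimpleNamespace objects imitating chunk.choices[0].delta), not on the SDK's real objects.

```python
from types import SimpleNamespace


def fake_chunk(reasoning_content=None, content=None):
    """Hypothetical offline stand-in shaped like chunk.choices[0].delta."""
    delta = SimpleNamespace(reasoning_content=reasoning_content, content=content)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


def stream_field(response, field):
    """Yield the non-empty values of one delta field ("reasoning_content" or "content")."""
    for chunk in response:
        text = getattr(chunk.choices[0].delta, field, None)
        if text:  # skip None and "" just like the if-checks in 3.1 and 3.2
            yield text


chunks = [fake_chunk(), fake_chunk(reasoning_content="Think..."),
          fake_chunk(content="RAG = "), fake_chunk(content="retrieval + generation")]

for piece in stream_field(chunks, "content"):
    print(piece, end='', flush=True)
print()
```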

3.3 Streaming both the chain of thought and the answer

The code is as follows:

from openai import OpenAI
import time

client = OpenAI(api_key="sk-123456", base_url="https://api.deepseek.com")

answer_started = False

messages = [{"role": "user", "content": "In LLMs, what is RAG?"}]
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages,
    stream=True
)

for chunk in response:
    if chunk.choices[0].delta.reasoning_content:
        print(chunk.choices[0].delta.reasoning_content, end='', flush=True)
        time.sleep(0.1)
    else:
        if not answer_started and chunk.choices[0].delta.content:
            print("\n\n==================== [Final Answer] ====================\n", end='')
            answer_started = True
        # Bug: when content is None, this prints the text None
        print(chunk.choices[0].delta.content, end='', flush=True)
        time.sleep(0.1)

A flag serves as a switch:

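answer_started starts as False, meaning the program is still printing the chain of thought. Once the answer text begins, the chain of thought is finished, so answer_started is set to True, the "Final Answer" separator line is printed, and then the answer text follows.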

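However, this version has a problem: the text None is printed just before the chain of thought. The cause is that the chunk received before the reasoning begins is empty. It has no reasoning_content, so it falls into the else branch, where print(chunk.choices[0].delta.content, end='', flush=True) is called with content equal to None and prints it literally.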
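The stray None is simply how print() renders a None argument. This tiny sketch captures the output in io.StringIO to make that visible, and shows that guarding with a truthiness check prints nothing for such a chunk; the variable content stands in for delta.content of the empty chunk.

```python
import io

content = None  # stands in for delta.content of the empty chunk

buf = io.StringIO()
print(content, end='', flush=True, file=buf)  # print() turns None into the text "None"
unguarded = buf.getvalue()

buf = io.StringIO()
if content:  # None and "" are falsy, so nothing is printed
    print(content, end='', flush=True, file=buf)
guarded = buf.getvalue()

print(repr(unguarded), repr(guarded))
```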

3.4 Fixing the stray None: the complete streaming code

from openai import OpenAI
import time

client = OpenAI(api_key="sk-123456", base_url="https://api.deepseek.com")

answer_started = False  # False while the chain of thought is being printed

messages = [{"role": "user", "content": "In LLMs, what is RAG?"}]
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages,
    stream=True
)

for chunk in response:
    if chunk.choices[0].delta.reasoning_content:
        print(chunk.choices[0].delta.reasoning_content, end='', flush=True)
        time.sleep(0.1)
    elif chunk.choices[0].delta.content:
        if not answer_started:
            # First piece of the answer: print the separator once
            print("\n\n==================== [Final Answer] ====================\n", end='')
            answer_started = True
        print(chunk.choices[0].delta.content, end='', flush=True)
        time.sleep(0.1)
    else:
        # Neither field is set (e.g. the empty chunk before the reasoning): skip it
        continue

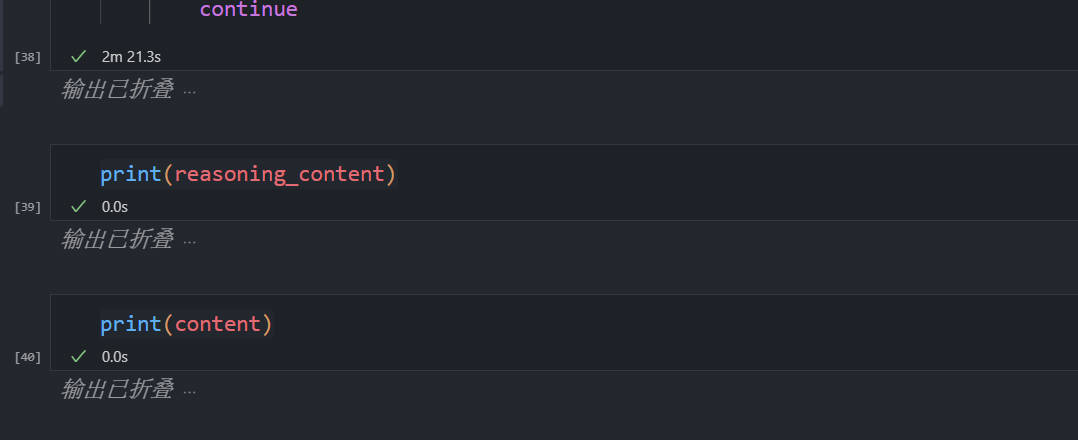
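The answer_started switch from 3.4 can be checked offline. This sketch assumes the same chunk.choices[0].delta shape; render_stream, its out parameter, the fake_chunk helper and the separator text are hypothetical, and the 0.1 s pause is left out so the check runs instantly.

```python
import io
import sys
from types import SimpleNamespace


def fake_chunk(reasoning_content=None, content=None):
    """Hypothetical offline stand-in shaped like chunk.choices[0].delta."""
    delta = SimpleNamespace(reasoning_content=reasoning_content, content=content)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


def render_stream(response, out=None, header="\n\n===== Final Answer =====\n"):
    """Print the reasoning first, then the header once, then the answer."""
    out = out if out is not None else sys.stdout
    answer_started = False
    for chunk in response:
        delta = chunk.choices[0].delta
        if delta.reasoning_content:
            print(delta.reasoning_content, end='', flush=True, file=out)
        elif delta.content:
            if not answer_started:  # first answer piece: print the separator once
                print(header, end='', file=out)
                answer_started = True
            print(delta.content, end='', flush=True, file=out)
        # chunks with neither field fall through and are skipped


chunks = [fake_chunk(), fake_chunk(reasoning_content="Thinking."),
          fake_chunk(content="Answer "), fake_chunk(content="text."),
          fake_chunk(content="")]
buf = io.StringIO()
render_stream(chunks, out=buf)
print(buf.getvalue())
```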

4. Summary

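This article fixed the bug in the official documentation and implemented streaming output of both the chain of thought and the final answer.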

The final code is:

from openai import OpenAI
import time

client = OpenAI(api_key="sk-123456", base_url="https://api.deepseek.com")

answer_started = False  # False while the chain of thought is being printed

messages = [{"role": "user", "content": "In LLMs, what is RAG?"}]
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages,
    stream=True
)

# Also accumulate the full texts, e.g. for the next round of a conversation
reasoning_content = ""
content = ""

for chunk in response:
    if chunk.choices[0].delta.reasoning_content:
        reasoning_content += chunk.choices[0].delta.reasoning_content
        print(chunk.choices[0].delta.reasoning_content, end='', flush=True)
        time.sleep(0.1)
    elif chunk.choices[0].delta.content:
        if not answer_started:
            # First piece of the answer: print the separator once
            print("\n\n==================== [Final Answer] ====================\n", end='')
            answer_started = True
        content += chunk.choices[0].delta.content
        print(chunk.choices[0].delta.content, end='', flush=True)
        time.sleep(0.1)
    else:
        # Neither field is set (e.g. the empty chunk before the reasoning): skip it
        continue

# The complete texts are available after the loop
print(reasoning_content)
print(content)
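Two extra guards may be worth adding; they are assumptions, not documented DeepSeek behavior. First, a chunk whose choices list is empty: OpenAI-compatible APIs can send one (for example a usage-only chunk when stream_options={"include_usage": True} is requested), and chunk.choices[0] would then raise IndexError. Second, a delta with no reasoning_content attribute at all, e.g. if the backend never sends that field; getattr(..., None) avoids a possible AttributeError. A sketch with a hypothetical collect function and hand-built fake chunks:

```python
from types import SimpleNamespace


def collect(response):
    """Accumulate both texts, tolerating empty choices and missing fields."""
    reasoning_content = ""
    content = ""
    for chunk in response:
        if not chunk.choices:  # e.g. a usage-only chunk: nothing to read
            continue
        delta = chunk.choices[0].delta
        reasoning = getattr(delta, "reasoning_content", None)  # may be absent
        text = getattr(delta, "content", None)
        if reasoning:
            reasoning_content += reasoning
        elif text:
            content += text
    return reasoning_content, content


chunks = [
    SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(reasoning_content="Plan. ", content=None))]),
    SimpleNamespace(choices=[SimpleNamespace(delta=SimpleNamespace(content="Done."))]),  # no reasoning_content
    SimpleNamespace(choices=[]),  # usage-style chunk without choices
]
print(collect(chunks))
```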
