
I. A MapReduce Program Written on Hadoop

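import java.io.IOException;
import java.util.Iterator;
import java.util.StringTokenizer;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapred.FileInputFormat;
import org.apache.hadoop.mapred.FileOutputFormat;
import org.apache.hadoop.mapred.JobClient;
import org.apache.hadoop.mapred.JobConf;
import org.apache.hadoop.mapred.MapReduceBase;
import org.apache.hadoop.mapred.Mapper;
import org.apache.hadoop.mapred.OutputCollector;
import org.apache.hadoop.mapred.Reducer;
import org.apache.hadoop.mapred.Reporter;
import org.apache.hadoop.mapred.TextInputFormat;
import org.apache.hadoop.mapred.TextOutputFormat;

public class mapreduce {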

// define the input/output paths

public static String INPUT_PATH="hdfs://##/hdfs_test.txt";

public static String OUTPUT_PATH="hdfs://##/mapreduce/output";

public static void main(String[] args) throws IOException {

//define Job config

JobConf conf=new JobConf(mapreduce.class);

conf.setJobName("wordcount");

// delete the output path if it already exists; the job fails when the output directory is present

Configuration configuration=new Configuration();

configuration.set("fs.defaultFS", "hdfs://#:9000/");

System.setProperty("HADOOP_USER_NAME","root");

FileSystem fileSystem= FileSystem.get(configuration);

fileSystem.delete(new Path(OUTPUT_PATH),true);

//set Map config

conf.setMapperClass(MyMapper.class);

// types of the intermediate (map output) key/value pairs

conf.setMapOutputKeyClass(Text.class);

conf.setMapOutputValueClass(LongWritable.class);

conf.setInputFormat(TextInputFormat.class);

//set Reduce config

conf.setReducerClass(MyReducer.class);

conf.setOutputKeyClass(Text.class);

conf.setOutputValueClass(LongWritable.class);

conf.setOutputFormat(TextOutputFormat.class);

//set input/output path

FileInputFormat.setInputPaths(conf,new Path(INPUT_PATH));

FileOutputFormat.setOutputPath(conf,new Path(OUTPUT_PATH));

//start job

JobClient.runJob(conf);

}

//map

public static class MyMapper extends MapReduceBase

implements Mapper<LongWritable, Text,Text,LongWritable>{

private Text word=new Text();

private LongWritable writable=new LongWritable(1);

@Override

public void map(LongWritable longWritable, Text text, OutputCollector<Text, LongWritable> outputCollector, Reporter reporter) throws IOException {

if (text!=null){

String line=text.toString();

StringTokenizer tokenizer=new StringTokenizer(line);

while (tokenizer.hasMoreTokens()){

// reuse the Text instance instead of allocating a new one per token

word.set(tokenizer.nextToken());

outputCollector.collect(word,writable);

}

}

}

}

//reduce

public static class MyReducer extends MapReduceBase implements

Reducer<Text,LongWritable,Text,LongWritable>{

@Override

public void reduce(Text text, Iterator<LongWritable> iterator, OutputCollector<Text, LongWritable> outputCollector, Reporter reporter) throws IOException {

long sum=0;

while (iterator.hasNext()){

LongWritable value=iterator.next();

sum+=value.get();

}

outputCollector.collect(text,new LongWritable(sum));

}

}

}

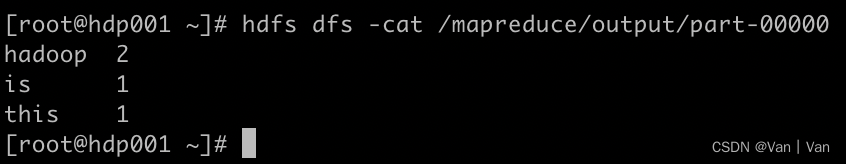

This program runs on a Hadoop cluster deployed on a server. It has three main parts: (1) the job configuration conf, (2) the Map phase, and (3) the Reduce phase.

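The word count works as follows. The job first reads the file on HDFS given by INPUT_PATH. The input is divided into splits, and each Map task tokenizes its lines and emits a (word, 1) pair for every word. The shuffle phase then partitions, sorts, and merges these pairs by key. Finally, the Reduce phase sums the counts for each word and writes the result to OUTPUT_PATH.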
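The three stages described above can be imitated in plain Python to make the data flow concrete. This is only a single-process sketch for illustration, not how Hadoop is implemented; the function names and the num_partitions parameter are made up for this example.

```python
from collections import defaultdict


def map_phase(line):
    """Emit a (word, 1) pair for every whitespace-separated token, like MyMapper."""
    return [(word, 1) for word in line.split()]


def shuffle_phase(pairs, num_partitions=2):
    """Partition pairs by key hash, then group the values of each key, sorted by key."""
    partitions = [defaultdict(list) for _ in range(num_partitions)]
    for key, value in pairs:
        partitions[hash(key) % num_partitions][key].append(value)
    return [sorted(partition.items()) for partition in partitions]


def reduce_phase(key, values):
    """Sum all counts collected for one word, like MyReducer."""
    return key, sum(values)


def word_count(lines, num_partitions=2):
    """Run map -> shuffle -> reduce over the lines and return {word: count}."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    result = {}
    for partition in shuffle_phase(pairs, num_partitions):
        for key, values in partition:
            word, total = reduce_phase(key, values)
            result[word] = total
    return result


if __name__ == "__main__":
    print(word_count(["hello hadoop", "hello spark"]))
```

In a real cluster the map and reduce calls run as separate tasks on different nodes and the shuffle moves data across the network; here everything happens in one process, so only the ordering of the steps carries over.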

II. A WordCount Program Written on Spark

Scala version:

import org.apache.commons.lang3.StringUtils
import org.apache.spark.{SparkConf, SparkContext}

object WordCountScala {

def main(args: Array[String]): Unit = {

val conf = new SparkConf().setAppName("spark").setMaster("local[*]")

val sc = new SparkContext(conf)

sc.setLogLevel("WARN")

// read the data

val textFile = sc.textFile("/Users/van/Desktop/脚本/hdfs_test.txt")

// process and count

textFile.filter(StringUtils.isNotBlank)

.flatMap(_.split(" "))

.map((_, 1))

.reduceByKey(_ + _)

.foreach(s => println(s._1 + " " + s._2))

}

}

Java version:

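import java.util.Arrays;
import org.apache.commons.lang3.StringUtils;
import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;
import scala.Tuple2;

public class Spark{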

public static void main(String[] args) {

SparkConf conf = new SparkConf().setAppName("spark").setMaster("local[*]");

JavaSparkContext sc = new JavaSparkContext(conf);

sc.setLogLevel("WARN");

// read the data

JavaRDD<String> textFile = sc.textFile("/Users/van/Desktop/脚本/hdfs_test.txt");

// process and count

textFile.filter(StringUtils::isNotBlank)

.flatMap(s -> Arrays.asList(s.split(" ")).iterator())

.mapToPair(s -> new Tuple2<>(s, 1))

.reduceByKey(Integer::sum)

.foreach(s-> System.out.println(s._1 + " " + s._2));

}

}

Python version:

import pyspark

from operator import add

sc=pyspark.SparkContext("local")

f=sc.textFile("data.txt")

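# collect() returns the (word, count) pairs to the driver; print them so a script shows the result

print(f.flatMap(lambda line : line.split(" ")).map(lambda word : (word,1)).reduceByKey(add).collect())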

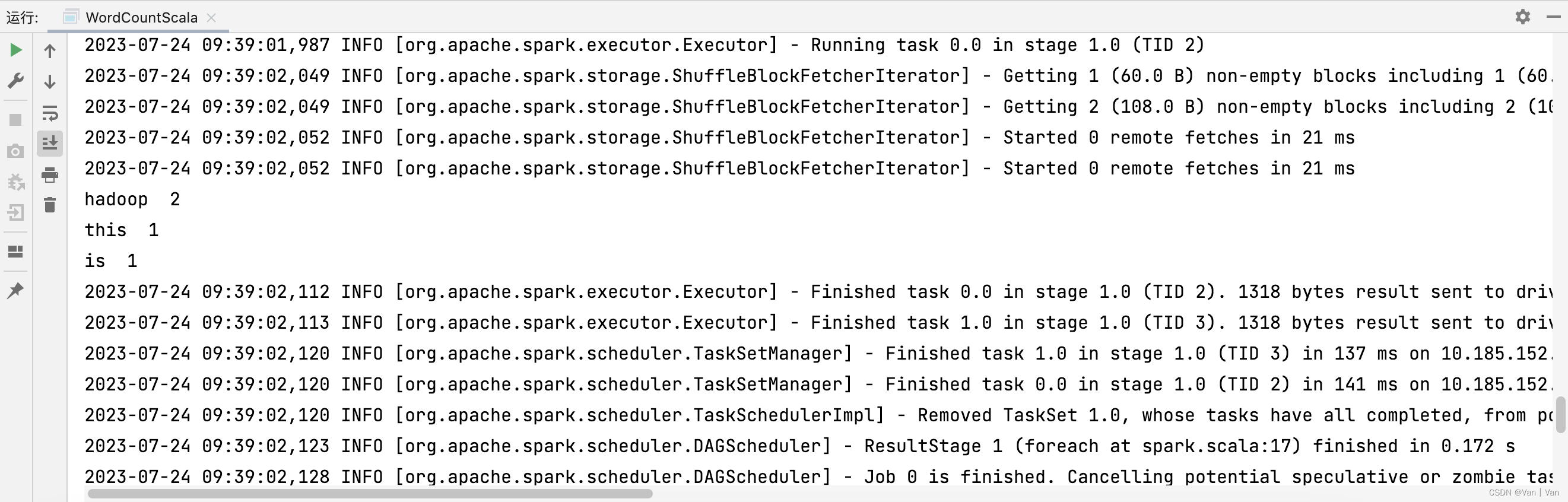
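One detail worth checking in the Python version above: unlike the Scala and Java versions, it does not filter out blank lines, and split(" ") yields an empty string for a blank line or between consecutive spaces, so the empty string can end up counted as a word. The plain-Python sketch below (no Spark required; the two helper names are made up for illustration) compares it with the no-argument split(), which splits on any run of whitespace.

```python
def tokenize_on_space(line):
    """Split on a single space, as the PySpark example does."""
    return line.split(" ")


def tokenize_on_whitespace(line):
    """Split on runs of whitespace; blank lines produce no tokens."""
    return line.split()


if __name__ == "__main__":
    for sample in ["hello  spark", ""]:
        print(repr(sample), tokenize_on_space(sample), tokenize_on_whitespace(sample))
```

If the input may contain blank lines or repeated spaces, passing lambda line: line.split() to flatMap is one possible way to avoid counting empty tokens.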

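Compared with MapReduce, the Spark word count is far more concise, and it also tends to run faster, largely because Spark keeps intermediate data in memory. This reflects a broader difference between the two engines: Spark can also be used for near-real-time stream processing, whereas MapReduce is limited to batch processing of static data.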

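Of the three Spark versions, I find the Python code written against the PySpark API the simplest and easiest to follow; it can even be written as a single statement. The pipeline split -> map -> reduceByKey reads exactly as it executes.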

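Passing the built-in add function (from the operator module) to reduceByKey sums the counts for each word, and collect then returns the result to the driver.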
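To see what reduceByKey(add) computes, the sketch below mimics it on an ordinary Python list of pairs. The reduce_by_key helper is a hypothetical stand-in written for this illustration, not part of PySpark; the real reduceByKey combines values within each partition first and then across partitions, which is why the function passed in should be associative and commutative.

```python
from functools import reduce
from operator import add


def reduce_by_key(pairs, func):
    """Group values by key, then fold each group with func (local stand-in for RDD.reduceByKey)."""
    grouped = {}
    for key, value in pairs:
        grouped.setdefault(key, []).append(value)
    return {key: reduce(func, values) for key, values in grouped.items()}


# the same split -> map -> reduceByKey steps as the PySpark one-liner
pairs = [(word, 1) for word in "to be or not to be".split(" ")]
counts = reduce_by_key(pairs, add)
print(counts)  # {'to': 2, 'be': 2, 'or': 1, 'not': 1}
```

Here add(1, 1) is applied repeatedly to the ones collected for each word, which is exactly the summation the paragraph above describes.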
