Contents

SummaryWriter class: using add_image() and add_scalar()

Using torchvision datasets with DataLoader, combined with transforms

2D convolution (conv2d): F.conv2d (function) and nn.Conv2d (class)

Using an optimizer (optim.zero_grad(), loss.backward(), optim.step())


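Using dir() and help():

import torch

# dir(): list the attributes of a package, module or object
dir(torch)
dir(torch.cuda)
dir(torch.cuda.is_available)

# help(): pass the function object itself, without the call parentheses
help(torch.cuda.is_available)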
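The same two calls work on any Python object. Below is a small standard-library sketch, with `math` standing in for `torch` so it runs anywhere. Filtering out names that start with an underscore leaves the public API. `pydoc.render_doc` returns essentially the text that `help()` prints, so you can search it as a string.

```python
import math
import pydoc

# dir() returns every attribute name; dropping "_"-prefixed names leaves the public API
public_names = [name for name in dir(math) if not name.startswith("_")]
print(public_names[:5])

# help(math.sqrt) prints documentation; render_doc returns it as a string instead.
# renderer=pydoc.plaintext avoids the backspace-based bold used for terminals.
doc_text = pydoc.render_doc(math.sqrt, renderer=pydoc.plaintext)
print(doc_text.splitlines()[0])
```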


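Hands-on with the Dataset class

Processing images with the PIL package:

from PIL import Image

img_path = "D:\\PycharmProjects\\learn_pytorch\\dataset\\train\\ants\\0013035.jpg"
img = Image.open(img_path)
img.size  # (width, height)
# Out[6]: (768, 512)
img.show()  # display the image

Handling paths with the os package: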

import os

dir_path = "dataset/train/ants"
img_path_list = os.listdir(dir_path)  # list the file names in the directory
# os.listdir: "Return a list containing the names of the files in the directory."
img_path_list[0]
# Out[11]: '0013035.jpg'

Reading the data:

from torch.utils.data import Dataset
from PIL import Image
import os

class MyData(Dataset):

    # store the image directory and list the files in it
    def __init__(self, root_dir, label_dir):
        self.root_dir = root_dir
        self.label_dir = label_dir
        self.path = os.path.join(self.root_dir, self.label_dir)
        self.img_path = os.listdir(self.path)

    # return one image and its label
    def __getitem__(self, idx):
        img_name = self.img_path[idx]
        img_item_path = os.path.join(self.root_dir, self.label_dir, img_name)
        img = Image.open(img_item_path)
        label = self.label_dir  # the folder name doubles as the label
        return img, label

    # return the length of the dataset
    def __len__(self):
        return len(self.img_path)

root_dir = "dataset/train"
ants_label_dir = "ants"
bees_label_dir = "bees"
ants_dataset = MyData(root_dir, ants_label_dir)
bees_dataset = MyData(root_dir, bees_label_dir)

# concatenate the two datasets (Dataset.__add__ returns a ConcatDataset)
train_dataset = ants_dataset + bees_dataset
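`ants_dataset + bees_dataset` works because `Dataset` defines `__add__`, which wraps both datasets in a `ConcatDataset`. Below is a rough pure-Python sketch of how such a concatenation maps a global index back to one dataset and a local index, using running totals and a binary search. `ConcatSketch` and the file names are hypothetical. Plain lists stand in for `MyData`, so it runs without image files.

```python
import bisect
from itertools import accumulate


class ConcatSketch:
    """Hypothetical stand-in for torch.utils.data.ConcatDataset.

    Works with any objects that support len() and integer indexing.
    """

    def __init__(self, datasets):
        self.datasets = list(datasets)
        # Running totals of the lengths, e.g. [3, 2] -> [3, 5]
        self.cumulative_sizes = list(accumulate(len(d) for d in self.datasets))

    def __len__(self):
        return self.cumulative_sizes[-1] if self.cumulative_sizes else 0

    def __getitem__(self, idx):
        if idx < 0:
            idx += len(self)
        if not 0 <= idx < len(self):
            raise IndexError("index out of range")
        # First dataset whose running total is greater than idx
        ds_idx = bisect.bisect_right(self.cumulative_sizes, idx)
        start = self.cumulative_sizes[ds_idx - 1] if ds_idx > 0 else 0
        return self.datasets[ds_idx][idx - start]


ants = [("ant_0.jpg", "ants"), ("ant_1.jpg", "ants"), ("ant_2.jpg", "ants")]
bees = [("bee_0.jpg", "bees"), ("bee_1.jpg", "bees")]
train = ConcatSketch([ants, bees])
print(len(train))  # 5
print(train[3])    # ('bee_0.jpg', 'bees')
```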

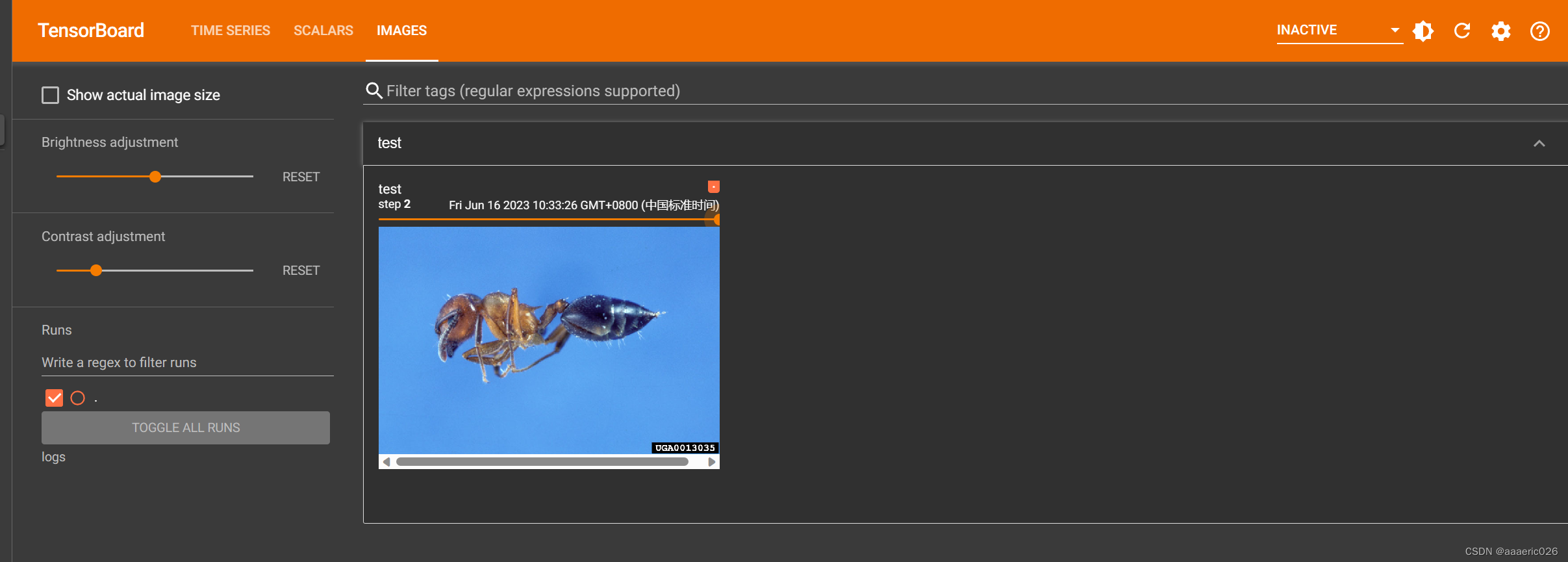

Using TensorBoard

SummaryWriter class: add_image() and add_scalar()

from torch.utils.tensorboard import SummaryWriter
import numpy as np
from PIL import Image

writer = SummaryWriter("logs")
image_path = "data/train/ants_image/0013035.jpg"
img_PIL = Image.open(image_path)
img_array = np.array(img_PIL)
print(type(img_array))
print(img_array.shape)
# add_image expects CHW by default; a NumPy array from PIL is HWC, so say so with dataformats='HWC'
writer.add_image("test", img_array, 2, dataformats='HWC')

# y = 2x; delete the old files in logs/ before rerunning, otherwise runs with the same tag overlap in the plot
for i in range(0, 100):
    writer.add_scalar("y=2x", 2 * i, i)

writer.close()
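If you would rather keep `add_image`'s default CHW layout, you can transpose the array yourself instead of passing `dataformats`. Below is a minimal NumPy sketch. A made-up 2×4 RGB array stands in for the photo.

```python
import numpy as np

# A made-up 2 x 4 RGB image in HWC layout, like np.array(PIL_image) returns:
# shape (height, width, channels)
hwc = np.arange(2 * 4 * 3, dtype=np.uint8).reshape(2, 4, 3)

# Move the channel axis to the front: (H, W, C) -> (C, H, W)
chw = hwc.transpose(2, 0, 1)

print(hwc.shape)  # (2, 4, 3)
print(chw.shape)  # (3, 2, 4)
# Same pixels, different layout: channel 0 of the CHW array is the red plane
print(np.array_equal(hwc[:, :, 0], chw[0]))  # True
```

With an array in this layout, `writer.add_image("test", chw, 2)` can rely on the default `dataformats='CHW'`.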

To view the logged images and scalars:

D:\PycharmProjects\learn_pytorch>tensorboard --logdir=logs
# Serving TensorBoard on localhost; to expose to the network, use a proxy or pass --bind_all
# TensorBoard 2.13.0 at http://localhost:6006/ (Press CTRL+C to quit)

## The default port 6006 can be changed:
tensorboard --logdir=logs --port=6007  # switch to port 6007

Open the link in a browser to view the dashboard.

The transforms classes

from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

# Usage in Python: PIL image -> tensor
img_path = "dataset/train/ants/6743948_2b8c096dda.jpg"
img_PIL = Image.open(img_path)
# print(img_PIL)

write = SummaryWriter("logs")  # creates a logs directory

# 1. How to use a transform
tensor_trans = transforms.ToTensor()  # ToTensor is a class; in PyCharm, Ctrl+P inside the parentheses shows the expected arguments
tensor_img = tensor_trans(img_PIL)
print(tensor_img)

write.add_image("tensor_img", tensor_img)
write.close()
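Per the torchvision documentation, `ToTensor` converts a PIL image or an HWC `uint8` array with values in [0, 255] into a CHW float tensor with values in [0.0, 1.0]. Below is a rough NumPy imitation for the common `uint8` RGB case. `to_tensor_sketch` is a hypothetical name. The real class also handles other image modes and returns a torch tensor, not a NumPy array.

```python
import numpy as np


def to_tensor_sketch(hwc_uint8):
    """Rough NumPy imitation of transforms.ToTensor for a uint8 image array:
    (H, W, C) values in [0, 255] -> (C, H, W) float32 values in [0.0, 1.0]."""
    chw = hwc_uint8.transpose(2, 0, 1)       # move channels first
    return chw.astype(np.float32) / 255.0    # rescale to [0, 1]


img = np.array([[[0, 128, 255], [255, 255, 255]]], dtype=np.uint8)  # H=1, W=2, C=3
out = to_tensor_sketch(img)
print(out.shape)     # (3, 1, 2)
print(out[2, 0, 0])  # 1.0: the first pixel's blue value was 255
```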

Common transforms and how to use them:

from PIL import Image
from torch.utils.tensorboard import SummaryWriter
from torchvision import transforms

writer = SummaryWriter("logs")
img = Image.open("image/pytorch.jpg")  # read the image with PIL
print(img)

# ToTensor
trans_totensor = transforms.ToTensor()
img_tensor = trans_totensor(img)
writer.add_image("ToTensor", img_tensor)

# Normalize: output[channel] = (input[channel] - mean[channel]) / std[channel]
print(img_tensor[0][0][0])
# this image is RGBA (4 channels; A is alpha, i.e. transparency), so 4 means and 4 stds are needed
trans_norm = transforms.Normalize([0.4, 0.5, 0.8, 0.2], [0.3, 0.5, 0.7, 0.9])
img_norm = trans_norm(img_tensor)
print(img_norm[0][0][0])
writer.add_image("trans_norm", img_norm, 2)

# Resize
print(img.size)
# PIL image -> Resize -> PIL image
trans_resize = transforms.Resize((512, 512))
img_resize = trans_resize(img)
# PIL image -> ToTensor -> tensor
img_resize = trans_totensor(img_resize)
print(img_resize)
writer.add_image("trans_resize", img_resize, 0)

# Compose: Resize with a single int, then ToTensor
trans_resize2 = transforms.Resize(512)  # an int scales the shorter edge to 512 and keeps the aspect ratio
# PIL -> PIL -> tensor
trans_compose = transforms.Compose([trans_resize2, trans_totensor])  # the transforms run in list order
img_resize2 = trans_compose(img)
writer.add_image("trans_resize", img_resize2, 1)

# RandomCrop
trans_random = transforms.RandomCrop(50)
trans_compose2 = transforms.Compose([trans_random, trans_totensor])
for i in range(10):
    img_crop = trans_compose2(img)
    writer.add_image("RandomCrop", img_crop, i)

writer.close()

Summary notes: watch each transform's input and output types; read the official documentation for the required parameters; when unsure what something returns, use print(), print(type()) or the debugger.
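Two of the transforms above are easy to check by hand. Below is a plain-Python sketch of `Normalize`'s per-channel formula and of how `Resize` with a single int picks the output size. The function names are hypothetical. Truncating the long edge to an int is an assumption about rounding, so treat the exact pixel count as approximate.

```python
def normalize(channels, mean, std):
    """Per-channel (x - mean) / std, the formula documented for transforms.Normalize.
    `channels` is a list of channels, each a flat list of pixel values."""
    return [[(x - m) / s for x in channel] for channel, m, s in zip(channels, mean, std)]


def resize_short_edge(width, height, size):
    """Target (width, height) for Resize(size) with an int `size`: the shorter edge
    becomes `size`, the longer edge keeps the aspect ratio (assumed truncated to int)."""
    if width <= height:
        return size, int(size * height / width)
    return int(size * width / height), size


# mean = std = 0.5 maps the ToTensor range [0, 1] onto [-1, 1]
print(normalize([[0.0, 0.5, 1.0]], [0.5], [0.5]))  # [[-1.0, 0.0, 1.0]]

print(resize_short_edge(768, 512, 512))   # (768, 512): the short edge is already 512
print(resize_short_edge(1000, 500, 512))  # (1024, 512)
```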

Using torchvision datasets with DataLoader, combined with transforms

import torchvision
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

test_data = torchvision.datasets.CIFAR10("./dataset", train=False, transform=torchvision.transforms.ToTensor(),
                                         download=True)
test_loader = DataLoader(dataset=test_data, batch_size=64, shuffle=True, num_workers=0, drop_last=False)

# The first image in the test set and its target
img, target = test_data[0]
print(img.shape)
print(target)
print(test_data.classes[target])

writer = SummaryWriter('dataloader')
for epoch in range(2):
    step = 0
    for data in test_loader:
        imgs, targets = data
        # print(imgs.shape)
        # print(targets)
        writer.add_images('epoch:{}'.format(epoch), imgs, step)  # note: add_images (a whole batch), not add_image
        step = step + 1
writer.close()
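CIFAR-10 has 10,000 test images and 50,000 training images. With `batch_size=64`, the step counter above therefore tops out at a predictable value. Below is a small sketch of that arithmetic, including the effect of `drop_last`. The helper name is hypothetical.

```python
import math


def num_batches(dataset_len, batch_size, drop_last):
    """How many batches one pass over a DataLoader yields."""
    if drop_last:
        return dataset_len // batch_size        # the incomplete last batch is dropped
    return math.ceil(dataset_len / batch_size)  # the last batch may be smaller


print(num_batches(10000, 64, drop_last=False))  # 157: the last test batch has only 16 images
print(num_batches(10000, 64, drop_last=True))   # 156
print(num_batches(50000, 64, drop_last=False))  # 782: CIFAR-10 training set
```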

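# By default TensorBoard shows only a sample of the logged steps; raise the per-plugin limit to see all of them (use scalars=... instead of images=... for scalar plots)
tensorboard --logdir=dataloader --samples_per_plugin=images=100000

Neural networks (NN)

Subclassing nn.Module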
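The example below never calls `forward()` directly. `nn.Module` defines `__call__`, so calling the instance (`tudui(x1)`) runs `forward()`, plus hooks. Below is a stripped-down plain-Python sketch of that dispatch. `ModuleSketch` is hypothetical and leaves out hooks, parameter registration and everything else `nn.Module` does.

```python
class ModuleSketch:
    """Hypothetical, heavily simplified stand-in for torch.nn.Module."""

    def __call__(self, *args, **kwargs):
        # The real nn.Module.__call__ also runs registered hooks; the core idea
        # is that calling the instance dispatches to forward().
        return self.forward(*args, **kwargs)

    def forward(self, *args, **kwargs):
        raise NotImplementedError("subclasses must implement forward()")


class Tudui(ModuleSketch):
    def forward(self, input):
        return input + 1


tudui = Tudui()
print(tudui(1.0))  # 2.0
```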

import torch
from torch import nn

class Tudui(nn.Module):
    # In PyCharm: Code -> Generate -> Override Methods
    def __init__(self):
        super().__init__()  # run nn.Module's initializer

    def forward(self, input):
        output = input + 1
        return output

tudui = Tudui()  # instantiate
x1 = torch.tensor(1.0)
output1 = tudui(x1)
print(output1)

2D convolution (conv2d): F.conv2d (function) and nn.Conv2d (class)
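The first example below uses F.conv2d to slide a 3×3 kernel over a 5×5 input. At each position it sums the element-wise products. PyTorch's "convolution" is a cross-correlation, so the kernel is not flipped. Below is a plain-Python sketch of that computation for a single channel and a batch of one. `conv2d_single` is a hypothetical name. It reproduces the numbers the example should print.

```python
def conv2d_single(image, kernel, stride=1, padding=0):
    """Single-channel 2D cross-correlation (what F.conv2d computes; no kernel flip)."""
    if padding:
        width = len(image[0]) + 2 * padding
        padded = [[0] * width for _ in range(padding)]
        padded += [[0] * padding + list(row) + [0] * padding for row in image]
        padded += [[0] * width for _ in range(padding)]
        image = padded
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Multiply the window under the kernel element-wise with the kernel and sum
            row.append(sum(image[top + a][left + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        out.append(row)
    return out


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]
kernel = [[1, 2, 1],
          [0, 1, 0],
          [2, 1, 0]]

print(conv2d_single(image, kernel, stride=1))       # [[10, 12, 12], [18, 16, 16], [13, 9, 3]]
print(conv2d_single(image, kernel, stride=2))       # [[10, 12], [13, 3]]
print(len(conv2d_single(image, kernel, padding=1)))  # 5: padding=1 keeps the 5x5 size
```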

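1. F.conv2d

import torch
import torch.nn.functional as F

# float dtype: some PyTorch versions do not implement conv2d for integer (Long) tensors on the CPU
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
kernel = torch.tensor([[1, 2, 1],
                       [0, 1, 0],
                       [2, 1, 0]], dtype=torch.float32)

# F.conv2d expects the input as (batch, channels, H, W) and the weight as (out_channels, in_channels, kH, kW)
input = torch.reshape(input, (1, 1, 5, 5))
kernel = torch.reshape(kernel, (1, 1, 3, 3))
print(input.shape)
print(kernel.shape)

output = F.conv2d(input, kernel, stride=1, padding=0)
print(output)
# print(output.shape)  # torch.Size([1, 1, 3, 3])

2. nn.Conv2d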
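You can predict output shapes with the formula from the PyTorch Conv2d documentation: floor((n + 2p - d(k - 1) - 1) / s) + 1. Below is a small sketch of it. The helper name is hypothetical. It explains both the 30×30 output of the layer below and the reshape to (-1, 3, 30, 30).

```python
import math


def conv_output_size(n, kernel_size, stride=1, padding=0, dilation=1):
    """Spatial output size of Conv2d along one dimension."""
    return math.floor((n + 2 * padding - dilation * (kernel_size - 1) - 1) / stride) + 1


h = conv_output_size(32, kernel_size=3)  # CIFAR-10 image, 3x3 kernel, no padding
print(h)  # 30

# The layer below outputs 6 channels, which add_images cannot display as a color image.
# Reshaping (64, 6, 30, 30) to (-1, 3, 30, 30) keeps the element count and
# turns every 6-channel output into two 3-channel images.
batch, out_channels = 64, 6
print(batch * out_channels * h * h // (3 * h * h))  # 128 images of shape 3x30x30
```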

import torch
import torchvision
from torch import nn
from torch.nn import Conv2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

dataset = torchvision.datasets.CIFAR10("./data", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64, shuffle=True, drop_last=True)

# The nn.Module class our model inherits from has a __call__ method that calls forward()
# (among other things), so the model is called like a function and forward() is never called explicitly
class Tudui(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = Conv2d(in_channels=3, out_channels=6, kernel_size=3, stride=1, padding=0)

    def forward(self, x):
        x = self.conv1(x)
        return x

tudui = Tudui()
# print(tudui)

writer = SummaryWriter("all_logs/P18")
step = 0
for data in dataloader:
    imgs, targets = data
    output = tudui(imgs)
    print(output.shape)  # torch.Size([64, 6, 30, 30])
    writer.add_images("input", imgs, step)
    # 6 channels cannot be shown as an image; reshape to 3 channels to display a color image
    output = torch.reshape(output, (-1, 3, 30, 30))
    writer.add_images("output", output, step)
    step += 1

writer.close()

Pooling layers (MaxPool2d as an example)
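`MaxPool2d(kernel_size=3)` uses a stride of 3 by default and keeps the largest value in each window. `ceil_mode` decides whether a partial window at the border is kept. Below is a plain-Python sketch on the same 5×5 matrix used in the code. `max_pool2d` is a hypothetical function that handles a single channel with no padding. PyTorch also drops a ceil-mode window that would start outside the input. With stride equal to the kernel size and no padding that cannot happen, so the sketch skips that check.

```python
import math


def max_pool2d(image, kernel_size, ceil_mode=False):
    """Single-channel max pooling, no padding, stride = kernel_size (MaxPool2d's default).
    With ceil_mode=True a partial window at the right/bottom edge is kept and pooled
    over the values that exist; with ceil_mode=False it is dropped."""
    stride = kernel_size
    h, w = len(image), len(image[0])
    rounding = math.ceil if ceil_mode else math.floor
    out_h = rounding((h - kernel_size) / stride) + 1
    out_w = rounding((w - kernel_size) / stride) + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            rows = range(i * stride, min(i * stride + kernel_size, h))
            cols = range(j * stride, min(j * stride + kernel_size, w))
            row.append(max(image[r][c] for r in rows for c in cols))
        out.append(row)
    return out


image = [[1, 2, 0, 3, 1],
         [0, 1, 2, 3, 1],
         [1, 2, 1, 0, 0],
         [5, 2, 3, 1, 1],
         [2, 1, 0, 1, 1]]

print(max_pool2d(image, 3, ceil_mode=True))   # [[2, 3], [5, 1]]
print(max_pool2d(image, 3, ceil_mode=False))  # [[2]]
```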

import torch
import torchvision
from torch import nn
from torch.nn import MaxPool2d
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# float dtype: max pooling raises an error on integer (Long) tensors
input = torch.tensor([[1, 2, 0, 3, 1],
                      [0, 1, 2, 3, 1],
                      [1, 2, 1, 0, 0],
                      [5, 2, 3, 1, 1],
                      [2, 1, 0, 1, 1]], dtype=torch.float32)
input = torch.reshape(input, (-1, 1, 5, 5))
# print(input.shape)

dataset = torchvision.datasets.CIFAR10("./data", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64, shuffle=False)

class maxpooling(nn.Module):
    def __init__(self):
        super().__init__()
        # stride defaults to kernel_size; ceil_mode=True keeps partial windows at the border
        self.maxpool1 = MaxPool2d(kernel_size=3, ceil_mode=True)

    def forward(self, x):
        x = self.maxpool1(x)
        return x

mp = maxpooling()
# output = mp(input)
# print(mp)
# print(output)

writer = SummaryWriter("./all_logs/P19")
step = 0
for data in dataloader:
    imgs, target = data
    writer.add_images("P19_input", imgs, step)
    imgs = mp(imgs)
    # print(imgs)
    writer.add_images("P19_output", imgs, step)
    step += 1

writer.close()

Non-linear activations (ReLU, Sigmoid)
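The two activations used below are ReLU(x) = max(0, x) and Sigmoid(x) = 1 / (1 + e^(-x)). Below is a plain-Python sketch of both, applied to a few scalars. `nn.ReLU` and `nn.Sigmoid` apply the same formulas to every element of a tensor.

```python
import math


def relu(x):
    return max(0.0, x)                 # negatives become 0, positives pass through


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))  # squashes any real number into (0, 1)


for x in (-2.0, 0.0, 2.0):
    print(x, relu(x), round(sigmoid(x), 4))
# -2.0 0.0 0.1192
# 0.0 0.0 0.5
# 2.0 2.0 0.8808
```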

# Non-linear activations
import torch
import torchvision.datasets
from torch import nn
from torch.nn import ReLU, Sigmoid
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

input = torch.randn((2, 2))
print(f'------input:------\n{input}')
input = torch.reshape(input, (-1, 1, 2, 2))  # -1 lets PyTorch infer the batch size; the other dims are 1x2x2
print(input.shape)

dataset = torchvision.datasets.CIFAR10("./data", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=64, shuffle=True)

# Direct usage
# relu = nn.ReLU()  # note: nn.ReLU is a class, unlike the function F.relu
# output = relu(input)  # relu(input) and relu.forward(input) both work

# As a module
class fun(nn.Module):
    def __init__(self):
        super().__init__()  # initialize nn.Module so layers such as ReLU or Sigmoid can be registered as attributes
        self.sigmoid1 = Sigmoid()

    def forward(self, x):
        x = self.sigmoid1(x)
        return x

# relu1 = fun()
# output = relu1(input)
# print(f'\n\n-----output:------\n{output}')

sigmoid1 = fun()
writer = SummaryWriter("./all_logs/P20")
step = 0
for data in dataloader:
    imgs, target = data
    writer.add_images("P20relu_in", imgs, step)
    output = sigmoid1(imgs)
    writer.add_images("P20relu_out", output, step)
    step += 1

writer.close()

Linear layer
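`nn.Linear(in_features, out_features)` computes y = xWᵀ + b, so `in_features` must match the number of values fed in. The example below uses `in_features=196608` because `torch.flatten(imgs)` merges the whole batch of 64 images into one vector. `nn.Flatten()` has `start_dim=1` by default, so it keeps the batch dimension and gives 3072 features per image. Below is a plain-Python sketch of both sizes and of the linear formula for one sample. The helper names are hypothetical.

```python
def flattened_size(shape, start_dim=0):
    """Number of features after flattening `shape` from `start_dim` onwards."""
    size = 1
    for dim in shape[start_dim:]:
        size *= dim
    return size


def linear(x, weight, bias):
    """y = x W^T + b for one sample; weight has shape (out_features, in_features)."""
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) + b for row, b in zip(weight, bias)]


batch_shape = (64, 3, 32, 32)
print(flattened_size(batch_shape))               # 196608: like torch.flatten(imgs), batch merged in
print(flattened_size(batch_shape, start_dim=1))  # 3072: like nn.Flatten(), per image

print(linear([1.0, 2.0], weight=[[0.5, -1.0], [2.0, 0.0]], bias=[0.0, 1.0]))  # [-1.5, 3.0]
```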

import torch
import torchvision.datasets
from torch import nn
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("data", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
# drop_last=True: every batch has 64 images, so the flattened size is always 196608
dataloader = DataLoader(dataset, batch_size=64, drop_last=True)

class linear(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear1 = nn.Linear(in_features=196608, out_features=10)

    def forward(self, x):
        y = self.linear1(x)
        return y

linear1 = linear()
for data in dataloader:
    imgs, target = data
    print(imgs.shape)  # torch.Size([64, 3, 32, 32])
    # flatten = nn.Flatten()
    # imgs = flatten(imgs)  # torch.Size([64, 3072]): nn.Flatten keeps the batch dimension
    imgs = torch.flatten(imgs)  # torch.Size([196608]): no batch dimension, a single 1-D vector
    print(imgs.shape)
    imgs = linear1(imgs)
    print(imgs.shape)  # torch.Size([10])

Building a network model (using nn.Sequential)
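Here is where 64 * 4 * 4 comes from. With kernel 5, stride 1 and padding 2, each conv layer keeps the spatial size. Each `MaxPool2d(2)` halves it, so the size goes 32 → 16 → 8 → 4. Below is a plain-Python shape trace of the model in the code. The helper names are hypothetical. It tracks a single image and ignores the batch dimension.

```python
def conv_out(n, k, s=1, p=0):
    """Output size of a conv layer: (n + 2p - k) // s + 1."""
    return (n + 2 * p - k) // s + 1


def pool_out(n, k):
    """Output size of MaxPool2d(k) with its default stride k and no padding."""
    return (n - k) // k + 1


# (description, kind, out_channels, kernel size)
layers = [
    ("Conv2d(3, 32, 5, padding=2)", "conv", 32, 5),
    ("MaxPool2d(2)", "pool", None, 2),
    ("Conv2d(32, 32, 5, padding=2)", "conv", 32, 5),
    ("MaxPool2d(2)", "pool", None, 2),
    ("Conv2d(32, 64, 5, padding=2)", "conv", 64, 5),
    ("MaxPool2d(2)", "pool", None, 2),
]

channels, size = 3, 32  # one CIFAR-10 image: 3 x 32 x 32
for name, kind, out_channels, k in layers:
    if kind == "conv":
        channels, size = out_channels, conv_out(size, k, s=1, p=2)
    else:
        size = pool_out(size, k)  # pooling leaves the channel count unchanged
    print(f"{name:<30} -> {channels} x {size} x {size}")

flat = channels * size * size
print("Flatten ->", flat)  # 1024 = 64 * 4 * 4, the in_features of the first Linear layer
```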

import torch
import torch.nn as nn

class cifar10_model(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, stride=1, padding=2),  # use (n + 2p - f) / s + 1 to work out s and p
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Flatten(),  # dim 0 is the batch; the remaining three dims are flattened for the linear layer
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10),
        )

    def forward(self, x):
        x = self.model(x)
        return x

model = cifar10_model()
print(model)

Loss functions (used with the network)
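`nn.CrossEntropyLoss` takes the model's raw scores (logits) and an integer class index. For one sample with scores x and target class c, it computes loss = -x[c] + log(sum_j exp(x[j])). That is a softmax followed by the negative log-likelihood. Below is a plain-Python sketch of that formula. The helper is hypothetical. Subtracting the maximum score before `exp` is the usual guard against overflow and does not change the result.

```python
import math


def cross_entropy(logits, target):
    """One-sample cross-entropy from raw scores: -logits[target] + log(sum_j exp(logits[j]))."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -logits[target] + log_sum_exp


loss = cross_entropy([0.1, 0.2, 0.3], target=1)
print(round(loss, 4))  # 1.1019
```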

# Loss functions:
# 1. measure the gap between the output and the target
# 2. provide the basis for updating the parameters (backpropagation computes grad)
import torchvision.datasets
from torch import nn
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./data", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)

class Cifar10_model(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, stride=1, padding=2),  # use (n + 2p - f) / s + 1 to work out s and p
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Flatten(),  # dim 0 is the batch; the remaining three dims are flattened for the linear layer
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10),
        )

    def forward(self, x):
        x = self.model(x)
        return x

cifar10_model = Cifar10_model()
loss_cross = nn.CrossEntropyLoss()
for data in dataloader:
    imgs, target = data
    output = cifar10_model(imgs)
    # print(imgs)
    loss = loss_cross(output, target)
    print(f'output:{output}\n'
          f'target:{target}\n'
          f'loss:{loss}\n')
    loss.backward()  # compute the gradients
    print("ok")

Using an optimizer (optim.zero_grad(), loss.backward(), optim.step())
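For plain SGD with no momentum or weight decay, `optim.step()` applies w ← w - lr * w.grad to every parameter. `backward()` adds into `.grad` instead of overwriting it, which is why `zero_grad()` comes first in each iteration. Below is a plain-Python sketch on the toy loss (w - 3)². A single float stands in for one parameter, and all names are hypothetical.

```python
def grad(w):
    """Derivative of the toy loss (w - 3) ** 2 with respect to w."""
    return 2 * (w - 3)


lr = 0.1
w = 0.0       # stands in for one model parameter
w_grad = 0.0  # stands in for w.grad

for step in range(50):
    w_grad = 0.0       # like optim.zero_grad(): clear the old gradient
    w_grad += grad(w)  # like loss.backward(): the new gradient is *added* to .grad
    w -= lr * w_grad   # like optim.step() for plain SGD: w <- w - lr * grad

print(round(w, 4))  # 3.0, the minimum of (w - 3) ** 2
```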

import torch
import torchvision.datasets
from torch import nn
from torch.utils.data import DataLoader

dataset = torchvision.datasets.CIFAR10("./data", train=False, transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)

class Cifar10_model(nn.Module):
    def __init__(self):
        super().__init__()
        self.model = nn.Sequential(
            nn.Conv2d(3, 32, 5, stride=1, padding=2),  # use (n + 2p - f) / s + 1 to work out s and p
            nn.MaxPool2d(2),
            nn.Conv2d(32, 32, 5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Conv2d(32, 64, kernel_size=5, stride=1, padding=2),
            nn.MaxPool2d(2),
            nn.Flatten(),  # dim 0 is the batch; the remaining three dims are flattened for the linear layer
            nn.Linear(64 * 4 * 4, 64),
            nn.Linear(64, 10),
        )

    def forward(self, x):
        x = self.model(x)
        return x

cifar10_model = Cifar10_model()
loss_cross = nn.CrossEntropyLoss()
optim = torch.optim.SGD(params=cifar10_model.parameters(), lr=0.01)

for epoch in range(20):
    running_loss = 0.0
    for data in dataloader:
        imgs, target = data
        output = cifar10_model(imgs)
        # print(imgs)
        loss = loss_cross(output, target)
        # print(f'output:{output}\n'
        #       f'target:{target}\n'
        #       f'loss:{loss}\n')
        optim.zero_grad()  # reset the gradients to zero
        loss.backward()    # compute the gradients
        optim.step()       # update the parameters
        running_loss += loss.item()  # .item() avoids keeping every iteration's autograd graph alive
    print(running_loss)

Modifying an existing network (VGG16)

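# Modifying an existing network model: VGG16
import torchvision
from torch import nn

# train_data = torchvision.datasets.ImageNet("./data", split="train", download=True)  # fails: ImageNet cannot be downloaded automatically; the archives must be obtained manually

vgg16 = torchvision.models.vgg16()
vgg16_1 = torchvision.models.vgg16()
vgg16_2 = torchvision.models.vgg16()
vgg16_3 = torchvision.models.vgg16()
print(vgg16)  # the last layer has out_features=1000, but CIFAR-10 has 10 classes

# Option 1: add a layer at the end of the whole model
# Caveat: VGG.forward() never calls a module added this way; it only shows up in print()
vgg16_1.add_module("add_linear", nn.Linear(1000, 10))
print(vgg16_1)

# Option 2: add a layer at the end of the classifier (an nn.Sequential, so the new layer does run)
vgg16_2.classifier.add_module("add_linear", nn.Linear(1000, 10))
print(vgg16_2)

# Option 3: replace a layer inside the existing model
vgg16_3.classifier[6] = nn.Linear(4096, 10)
print(vgg16_3)

Saving and loading models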

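# Saving a model
import os

import torch
import torchvision

os.makedirs("./P26", exist_ok=True)  # torch.save does not create missing directories
vgg16 = torchvision.models.vgg16()
# Save method 1: model structure + parameters
torch.save(vgg16, "./P26/vgg16_method1.pth")
# Save method 2: parameters only (officially recommended)
torch.save(vgg16.state_dict(), "./P26/vgg16_method2.pth")

# Loading a model
import torch
import torchvision.models

# Load method 1
## Pitfall: for a model class you defined yourself, the class must be importable where you load
## (e.g. from P26_load import *), although you don't have to write the model out again
# weights_only=False is needed on PyTorch 2.6+ to unpickle a whole model; only load files you trust
model1 = torch.load("./P26/vgg16_method1.pth", weights_only=False)
# print(model1)

# Load method 2 (officially recommended)
model2 = torch.load("./P26/vgg16_method2.pth")  # loads only the parameters (a state_dict)
# print(model2)
vgg16 = torchvision.models.vgg16()  # the usual pattern: build the model, then hand it the parameters
vgg16.load_state_dict(torch.load("./P26/vgg16_method2.pth"))
print(vgg16)

Complete model training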

Training on the CPU:

import os

import torch
import torchvision.datasets
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from P27_model import *  # the author's local file that defines Cifar10_model

train_dataset = torchvision.datasets.CIFAR10("./data", train=True, transform=torchvision.transforms.ToTensor(),
                                             download=True)
test_dataset = torchvision.datasets.CIFAR10("./data", train=False, transform=torchvision.transforms.ToTensor(),
                                            download=False)

# Dataset lengths
len_train = len(train_dataset)
len_test = len(test_dataset)
print(f"train_dataset length: {len_train}")
print("test length: {}".format(len_test))

# Data loaders
train_dataloader = DataLoader(train_dataset, batch_size=64, shuffle=True, drop_last=False)
test_dataloader = DataLoader(test_dataset, batch_size=64, shuffle=True, drop_last=False)

# Create the model
model = Cifar10_model()

# Loss function
loss_fn = nn.CrossEntropyLoss()

# Optimizer
learning_rate = 1e-2
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0
total_test_step = 0
epoch = 10

# TensorBoard
writer = SummaryWriter("./all_logs/P28_train")
os.makedirs("./P27_model", exist_ok=True)  # torch.save does not create missing directories

for i in range(epoch):
    print("------ Epoch {} training starts ------".format(i + 1))

    # Training
    model.train()  # affects layers such as Dropout and BatchNorm
    for data in train_dataloader:  # 50,000 images / batch size 64 = 782 iterations per epoch
        imgs, target = data
        output = model(imgs)
        loss = loss_fn(output, target)

        # Optimize the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 100 == 0:
            print(f"Training step: {total_train_step}, loss: {loss.item()}")  # .item() turns a one-element tensor into a Python number
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Evaluation
    model.eval()  # affects layers such as Dropout and BatchNorm
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():  # no gradient tracking: saves memory and time
        for data in test_dataloader:
            imgs, target = data
            output = model(imgs)
            loss = loss_fn(output, target)
            total_test_loss += loss.item()
            accuracy = (output.argmax(1) == target).sum()  # argmax over dim 1 = across each row of class scores
            total_accuracy += accuracy.item()

    print("Total test loss: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / len_test))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / len_test, total_test_step)
    total_test_step += 1

    torch.save(model, "./P27_model/P27model_{}.pth".format(i))  # model saved after epoch i
    print("Model for epoch {} saved".format(i))

writer.close()
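`output.argmax(1)` returns, for each row of the (batch, 10) output, the index of the largest score, which is the predicted class. Comparing that with `target` and summing counts the correct predictions. Dividing the running total by `len_test` gives the accuracy. Below is a plain-Python sketch with made-up scores. The helper name is hypothetical.

```python
def count_correct(outputs, targets):
    """Pure-Python version of (output.argmax(1) == target).sum(): take the index of the
    largest score in each row (one image's class scores) and compare it with the label."""
    predictions = [max(range(len(row)), key=row.__getitem__) for row in outputs]
    return sum(int(p == t) for p, t in zip(predictions, targets))


outputs = [[0.1, 0.2],  # predicts class 1
           [0.3, 0.4],  # predicts class 1
           [0.9, 0.1]]  # predicts class 0
targets = [0, 1, 0]
correct = count_correct(outputs, targets)
print(correct, correct / len(targets))  # 2 0.6666666666666666
```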

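Training on the GPU

GPU training, method 1:

Call .cuda() on the network model, the loss function, and the data (inputs and labels):

if torch.cuda.is_available():
    xxxx = xxxx.cuda()

GPU training, method 2:

Device: device = torch.device("cpu"), or torch.device("cuda:0") / torch.device("cuda:1") to choose among one or several GPUs

# Shorthand
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

Then use the device: xxx = xxx.to(device). Tensors must be reassigned because .to() returns a new tensor; models and loss modules are moved in place, so reassigning them is optional.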

# Train the model on the GPU
# Move the network model, the loss function and the data (inputs and labels) with .to(device)
import os
import time

import torch
import torchvision.datasets
from torch import nn
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter
from P27_model import *  # the author's local file that defines Cifar10_model

train_dataset = torchvision.datasets.CIFAR10("./data", train=True, transform=torchvision.transforms.ToTensor(),
                                             download=True)
test_dataset = torchvision.datasets.CIFAR10("./data", train=False, transform=torchvision.transforms.ToTensor(),
                                            download=False)

# Dataset lengths
len_train = len(train_dataset)
len_test = len(test_dataset)
print(f"train_dataset length: {len_train}")
print("test length: {}".format(len_test))

# Define the training device
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Data loaders
train_dataloader = DataLoader(train_dataset, batch_size=64, shuffle=True, drop_last=False)
test_dataloader = DataLoader(test_dataset, batch_size=64, shuffle=True, drop_last=False)

# Create the model
model = Cifar10_model()
model = model.to(device)

# Loss function
loss_fn = nn.CrossEntropyLoss()
loss_fn = loss_fn.to(device)

# Optimizer
learning_rate = 1e-2
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

# Training bookkeeping
total_train_step = 0
total_test_step = 0
epoch = 10

# TensorBoard
writer = SummaryWriter("./all_logs/P30_train_gpu1")
os.makedirs("./P30_gpu1_train", exist_ok=True)  # torch.save does not create missing directories
start_time = time.time()

for i in range(epoch):
    print("------ Epoch {} training starts ------".format(i + 1))

    # Training
    model.train()  # affects layers such as Dropout and BatchNorm
    for data in train_dataloader:  # 50,000 images / batch size 64 = 782 iterations per epoch
        imgs, target = data
        imgs = imgs.to(device)
        target = target.to(device)
        output = model(imgs)
        loss = loss_fn(output, target)

        # Optimize the model
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        total_train_step += 1
        if total_train_step % 100 == 0:
            print(f"Training step: {total_train_step}, loss: {loss.item()}")  # .item() turns a one-element tensor into a Python number
            end_time = time.time()
            print(end_time - start_time)  # seconds elapsed since training started
            writer.add_scalar("train_loss", loss.item(), total_train_step)

    # Evaluation
    model.eval()  # affects layers such as Dropout and BatchNorm
    total_test_loss = 0
    total_accuracy = 0
    with torch.no_grad():  # no gradient tracking: saves memory and time
        for data in test_dataloader:
            imgs, target = data
            imgs = imgs.to(device)
            target = target.to(device)
            output = model(imgs)
            loss = loss_fn(output, target)
            total_test_loss += loss.item()
            accuracy = (output.argmax(1) == target).sum()  # argmax over dim 1 = across each row of class scores
            total_accuracy += accuracy.item()

    print("Total test loss: {}".format(total_test_loss))
    print("Accuracy on the whole test set: {}".format(total_accuracy / len_test))
    writer.add_scalar("test_loss", total_test_loss, total_test_step)
    writer.add_scalar("test_accuracy", total_accuracy / len_test, total_test_step)
    total_test_step += 1

    torch.save(model, "./P30_gpu1_train/P30_gpu1_model_{}.pth".format(i))  # model saved after epoch i
    print("Model for epoch {} saved".format(i + 1))

writer.close()

Complete model validation routine (test / demo)

Use a model that has already been trained and give it an input.
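The script below prints only a class index. To turn that index into a name, index the CIFAR-10 class list, which torchvision exposes as `test_data.classes` (used earlier). In the script that would look something like `classes[output.item()]`. Below is a plain-Python sketch with made-up scores. `predict_label` and `CIFAR10_CLASSES` are hypothetical names.

```python
# CIFAR-10 class names in label order (the same list torchvision's CIFAR10 exposes as .classes)
CIFAR10_CLASSES = ["airplane", "automobile", "bird", "cat", "deer",
                   "dog", "frog", "horse", "ship", "truck"]


def predict_label(scores):
    """Index of the highest score (what output.argmax(1) yields for one image)
    and the matching CIFAR-10 class name."""
    index = max(range(len(scores)), key=scores.__getitem__)
    return index, CIFAR10_CLASSES[index]


# Made-up scores for one image, standing in for the model's 10 outputs
scores = [0.1, -1.2, 0.3, 2.0, 0.5, 3.1, -0.4, 1.1, 0.0, -2.2]
print(predict_label(scores))  # (5, 'dog')
```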

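# Complete model validation routine (test / demo)
import torch
import torchvision.transforms
from PIL import Image

# Error seen otherwise: Input type (torch.FloatTensor) and weight type (torch.cuda.FloatTensor) should be the same or input should be a MKLDNN tensor and weight is a dense tensor
# Fix: put the input on the same device as the model, which was trained and saved on the GPU
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

img1_path = "image/dog1.png"  # use / as the path separator, even on Windows
img1 = Image.open(img1_path)
# print(img1)
img1 = img1.convert('RGB')  # PNGs may be RGBA (4 channels, incl. transparency); the model expects 3-channel RGB
# print(img1)

transform = torchvision.transforms.Compose([torchvision.transforms.Resize((32, 32)),
                                            torchvision.transforms.ToTensor()])
img1 = transform(img1).to(device)
# print(img1.shape)

# map_location puts the weights on the current device (needed when a GPU-saved model is loaded on a CPU-only machine);
# weights_only=False is needed on PyTorch 2.6+ to unpickle a whole model; only load files you trust
model = torch.load("./P30_gpu1_train/P30_gpu1_model_9.pth", map_location=device, weights_only=False)
print(model)

# RuntimeError: mat1 and mat2 shapes cannot be multiplied (64x16 and 1024x64)
# Cause: the model expects a batch dimension, so reshape the single image to (1, 3, 32, 32)
img1 = torch.reshape(img1, (1, 3, 32, 32))

model.eval()  # switch the model to evaluation mode
with torch.no_grad():  # no gradient tracking: saves memory and time
    output = model(img1)
    output = output.argmax(1)
print(output)

Open-source projects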

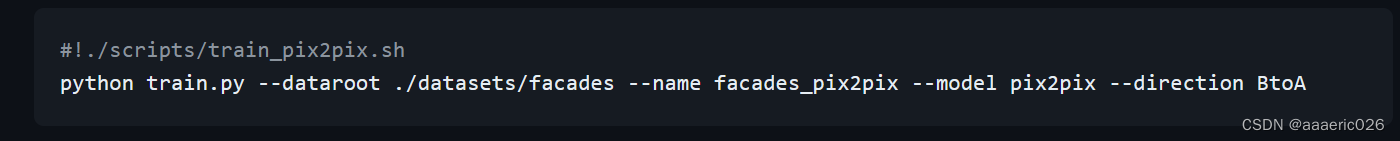

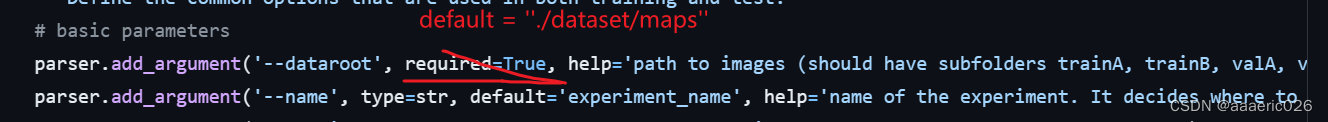

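When running an open-source project, some training arguments are declared with required=True; you can delete that and give them a default=xxx instead.

--dataroot, --name and --model are argument names: find where they are defined in the source code and change them there.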
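Options like these are usually declared with Python's `argparse`. Below is a minimal sketch of the edit, assuming the project uses `argparse`. The default values are hypothetical placeholders.

```python
import argparse

parser = argparse.ArgumentParser(description="hypothetical training options")
# Before the edit, an option might be declared as:
#   parser.add_argument("--dataroot", required=True, help="path to the images")
# After: drop required=True and give it a default, so the script runs without the flag
parser.add_argument("--dataroot", default="./datasets/my_data", help="path to the images")
parser.add_argument("--name", default="my_experiment", help="experiment name")
parser.add_argument("--model", default="my_model", help="which model to use")

args = parser.parse_args([])  # simulate running the script with no flags
print(args.dataroot, args.name, args.model)  # ./datasets/my_data my_experiment my_model

args = parser.parse_args(["--name", "run2"])  # a flag on the command line still wins
print(args.name)  # run2
```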
