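Base environment: three nodes (master, slave1, slave2). Prerequisites: /etc/hosts lists all three hostnames on every node, and master can SSH into every node, itself included, without a password. Upload the JDK and Hadoop packages to master and extract both under /usr/local/src. Then append the following to the end of /etc/profile (vim /etc/profile):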

#set java environment
export JAVA_HOME=/usr/local/src/jdk
export JRE_HOME=$JAVA_HOME/jre
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JRE_HOME/lib
export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin
#set hadoop environment
export HADOOP_HOME=/usr/local/src/hadoop
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
#set zookeeper
export ZOOKEEPER_HOME=/usr/local/src/zookeeper
export PATH=$PATH:$ZOOKEEPER_HOME/bin

Reload the profile with source /etc/profile, then verify with java -version && hadoop version
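The check after reloading can also be scripted. The sketch below uses check_env, a hypothetical helper that is not part of Hadoop or the JDK. It confirms that the named variables point at existing directories and that java and hadoop resolve on PATH. It checks only JAVA_HOME and HADOOP_HOME by default, because ZooKeeper is installed later.

```shell
# check_env [VAR...]: hypothetical helper. Returns non-zero if any named
# variable is unset or not a directory, or if java/hadoop is not on PATH.
check_env() {
  local rc=0 var dir cmd
  if [ "$#" -eq 0 ]; then
    set -- JAVA_HOME HADOOP_HOME          # defaults; add ZOOKEEPER_HOME later
  fi
  for var in "$@"; do
    eval "dir=\${$var}"                   # value of the variable named by $var
    if [ -z "$dir" ] || [ ! -d "$dir" ]; then
      echo "MISSING: $var='$dir' is not a directory" >&2
      rc=1
    fi
  done
  for cmd in java hadoop; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "MISSING: $cmd is not on PATH" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

After ZooKeeper is installed, run check_env JAVA_HOME HADOOP_HOME ZOOKEEPER_HOME on each node.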


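Set the hostname on each node in /etc/sysconfig/network (vim /etc/sysconfig/network):

HOSTNAME=master (slave1 and slave2 on the other two nodes)

On CentOS 7 and later this HOSTNAME setting is ignored. Run hostnamectl set-hostname master (slave1, slave2 respectively) instead.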
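Every configuration file below refers to the nodes by name, so it is worth confirming that each name is mapped in /etc/hosts on every node. check_hosts_file is a hypothetical helper. It only reads the file; it does not resolve names through DNS.

```shell
# check_hosts_file FILE HOST...: hypothetical helper. Prints each HOST that has
# no entry in FILE (comment lines skipped) and returns 1 if any is missing.
check_hosts_file() {
  local file=$1 host missing=0
  shift
  for host in "$@"; do
    # A host may appear in any name column after the IP address.
    if ! awk -v h="$host" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
                           END { exit !found }' "$file"; then
      echo "no entry for $host in $file" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, run check_hosts_file /etc/hosts master slave1 slave2 on each of the three nodes.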

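ZooKeeper HA cluster

Download the binary release (see the note below on -bin vs. source tarballs) and extract it to /usr/local/src/zookeeper:

wget http://dlcdn.apache.org/zookeeper/zookeeper-3.6.3/apache-zookeeper-3.6.3-bin.tar.gz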

[root@master ~]# mkdir /usr/local/src/zookeeper/data
[root@master ~]# mkdir /usr/local/src/zookeeper/logs
[root@master ~]# echo "1" > /usr/local/src/zookeeper/data/myid
[root@master ~]# cp /usr/local/src/zookeeper/conf/zoo_sample.cfg /usr/local/src/zookeeper/conf/zoo.cfg
[root@master ~]# vim /usr/local/src/zookeeper/conf/zoo.cfg

In zoo.cfg, replace the sample's dataDir=/tmp/zookeeper line and add the rest:

dataDir=/usr/local/src/zookeeper/data
dataLogDir=/usr/local/src/zookeeper/logs
server.1=master:2888:3888
server.2=slave1:2888:3888
server.3=slave2:2888:3888
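You can also generate the same zoo.cfg instead of editing the sample by hand. This sketch uses write_zoo_cfg, a hypothetical helper. It assumes the defaults shipped in zoo_sample.cfg (tickTime=2000, initLimit=10, syncLimit=5, clientPort=2181) and numbers the servers in the order the hosts are given. That order must match each node's myid.

```shell
# write_zoo_cfg CFG DATA_DIR LOG_DIR HOST...: hypothetical helper that writes a
# minimal zoo.cfg; server N is the N-th HOST argument.
write_zoo_cfg() {
  local cfg=$1 data_dir=$2 log_dir=$3 id=1 host
  shift 3
  {
    echo "tickTime=2000"     # values as in zoo_sample.cfg
    echo "initLimit=10"
    echo "syncLimit=5"
    echo "clientPort=2181"
    echo "dataDir=$data_dir"
    echo "dataLogDir=$log_dir"
    for host in "$@"; do
      # server.N=host:peerPort:electionPort; N must equal that host's myid
      echo "server.$id=$host:2888:3888"
      id=$((id + 1))
    done
  } > "$cfg"
}
```

Usage: write_zoo_cfg /usr/local/src/zookeeper/conf/zoo.cfg /usr/local/src/zookeeper/data /usr/local/src/zookeeper/logs master slave1 slave2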

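Note (pitfall): starting with ZooKeeper 3.5.5, each release ships two tarballs: apache-zookeeper-x.y.z-bin.tar.gz (the binary release) and apache-zookeeper-x.y.z.tar.gz (the source release). Use the -bin one. I picked the source tarball, and zkServer.sh start failed. Older releases such as zookeeper-3.4.5.tar.gz, the version the textbook uses, ship a single tarball, so they do not have this problem.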
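The extraction step is not shown above. The sketch below unpacks the tarball straight into /usr/local/src/zookeeper and flags the source-release pitfall. install_zk_tarball is a hypothetical helper, and its check is a heuristic based on one assumption: the binary release has a top-level lib/ directory of jars and the source release does not.

```shell
# install_zk_tarball TARBALL DEST: hypothetical helper. Extracts TARBALL into
# DEST (dropping the apache-zookeeper-x.y.z[-bin]/ top directory) and returns 2
# if DEST/lib is missing, which suggests the source release was downloaded.
install_zk_tarball() {
  local tarball=$1 dest=$2
  mkdir -p "$dest" || return 1
  tar -xzf "$tarball" -C "$dest" --strip-components=1 || return 1
  if [ ! -d "$dest/lib" ]; then
    echo "WARNING: no $dest/lib; $tarball looks like the source release, use the -bin.tar.gz" >&2
    return 2
  fi
}
```

Usage: install_zk_tarball apache-zookeeper-3.6.3-bin.tar.gz /usr/local/src/zookeeper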

scp -r /usr/local/src/zookeeper slave1:/usr/local/src

scp -r /usr/local/src/zookeeper slave2:/usr/local/src

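Each node's myid must match its server.N line in zoo.cfg, so on the other two nodes:

[root@slave1 ~]# echo "2" > /usr/local/src/zookeeper/data/myid

[root@slave2 ~]# echo "3" > /usr/local/src/zookeeper/data/myid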
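Typing a different myid on each node invites mistakes. This sketch derives the value from zoo.cfg instead. write_myid is a hypothetical helper. It assumes the node's hostname appears exactly as written in the server.N= lines and that zoo.cfg has a dataDir line.

```shell
# write_myid ZOO_CFG [HOST]: hypothetical helper. Finds server.N=HOST:... in
# ZOO_CFG and writes N to <dataDir>/myid. HOST defaults to `hostname`.
write_myid() {
  local cfg=$1 host=${2:-$(hostname)} id data_dir
  id=$(sed -n "s/^server\.\([0-9][0-9]*\)=$host:.*/\1/p" "$cfg")
  data_dir=$(sed -n 's/^dataDir=//p' "$cfg")
  if [ -z "$id" ] || [ -z "$data_dir" ]; then
    echo "no server entry for $host or no dataDir in $cfg" >&2
    return 1
  fi
  mkdir -p "$data_dir"
  echo "$id" > "$data_dir/myid"
}
```

Run write_myid /usr/local/src/zookeeper/conf/zoo.cfg on each node after the scp.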

Start ZooKeeper on each of the three nodes in turn:

[root@master ~]# cd /usr/local/src/zookeeper/
[root@master zookeeper]# bin/zkServer.sh start
[root@master zookeeper]# bin/zkServer.sh status
ZooKeeper JMX enabled by default
Using config: /usr/local/src/zookeeper/bin/../conf/zoo.cfg
Client port found: 2181. Client address: localhost. Client SSL: false.
Mode: follower
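In a healthy three-node ensemble, one node reports Mode: leader and the other two report Mode: follower. The hypothetical helpers below pull the mode out of zkServer.sh status output and count the leaders.

```shell
# zk_mode: hypothetical helper; reads `zkServer.sh status` output on stdin
# and prints the value after "Mode:" (leader, follower, standalone, ...).
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# count_leaders MODE...: prints how many of the given modes are "leader".
count_leaders() {
  local n=0 m
  for m in "$@"; do
    if [ "$m" = leader ]; then
      n=$((n + 1))
    fi
  done
  echo "$n"
}
```

From master, you can collect the modes with ssh "$h" '. /etc/profile; /usr/local/src/zookeeper/bin/zkServer.sh status' 2>&1 | zk_mode for each of master, slave1 and slave2, then pass them to count_leaders, which should print 1. The script sources /etc/profile first because a non-interactive ssh session does not read it.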


Hadoop HA cluster configuration files (edited on master):

1. hadoop-env.sh: vim /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh and set
export JAVA_HOME=/usr/local/src/jdk

2. core-site.xml: vim /usr/local/src/hadoop/etc/hadoop/core-site.xml

core-site.xml names the HDFS nameservice ns (fs.defaultFS = hdfs://ns). This name must be identical to dfs.nameservices in hdfs-site.xml (pitfall).

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://ns</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/local/src/hadoop/tmp</value>
  </property>
  <property>
    <name>ha.zookeeper.quorum</name>
    <value>master:2181,slave1:2181,slave2:2181</value>
  </property>
</configuration>

3. hdfs-site.xml: vim /usr/local/src/hadoop/etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.nameservices</name>
    <value>ns</value>
  </property>
  <property>
    <name>dfs.ha.namenodes.ns</name>
    <value>nn1,nn2</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns.nn1</name>
    <value>master:9000</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns.nn1</name>
    <value>master:50070</value>
  </property>
  <property>
    <name>dfs.namenode.rpc-address.ns.nn2</name>
    <value>slave1:9000</value>
  </property>
  <property>
    <name>dfs.namenode.http-address.ns.nn2</name>
    <value>slave1:50070</value>
  </property>
  <property>
    <name>dfs.namenode.shared.edits.dir</name>
    <value>qjournal://master:8485;slave1:8485;slave2:8485/ns</value>
  </property>
  <property>
    <name>dfs.journalnode.edits.dir</name>
    <value>/usr/local/src/hadoop/journal</value>
  </property>
  <property>
    <name>dfs.ha.automatic-failover.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.client.failover.proxy.provider.ns</name>
    <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
  </property>
  <property>
    <name>dfs.ha.fencing.methods</name>
    <value>shell(/bin/true)</value>
  </property>
  <property>
    <name>dfs.ha.fencing.ssh.private-key-files</name>
    <value>/root/.ssh/id_rsa</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/usr/local/src/hadoop/tmp/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/usr/local/src/hadoop/tmp/dfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
</configuration>
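You can check the "ns" pitfall mechanically by comparing the nameservice in fs.defaultFS with dfs.nameservices. This is a sketch built on hypothetical helpers (xml_prop, check_ns). It uses naive text matching that assumes each <name> is directly followed by its <value>, so it is not a real XML parser.

```shell
# xml_prop FILE NAME: hypothetical helper; prints the <value> that follows
# <name>NAME</name> in a Hadoop *-site.xml file (naive text match).
xml_prop() {
  local file=$1 name
  name=$(printf '%s' "$2" | sed 's/\./\\./g')    # escape dots for the regex
  tr -d '\n' < "$file" |
    sed -n "s|.*<name>$name</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}

# check_ns CORE_SITE HDFS_SITE: returns 1 unless fs.defaultFS is hdfs://<dfs.nameservices>.
check_ns() {
  local default_fs ns
  default_fs=$(xml_prop "$1" fs.defaultFS)
  ns=$(xml_prop "$2" dfs.nameservices)
  if [ -z "$ns" ] || [ "${default_fs#hdfs://}" != "$ns" ]; then
    echo "mismatch: fs.defaultFS=$default_fs but dfs.nameservices=$ns" >&2
    return 1
  fi
}
```

Usage: check_ns /usr/local/src/hadoop/etc/hadoop/core-site.xml /usr/local/src/hadoop/etc/hadoop/hdfs-site.xml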

4. mapred-site.xml (Hadoop 2.x ships only mapred-site.xml.template, so copy it to mapred-site.xml first)

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>master:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>master:19888</value>
  </property>
</configuration>

5. yarn-site.xml

<configuration>
  <property>
    <name>yarn.resourcemanager.ha.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.ha.rm-ids</name>
    <value>rm1,rm2</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm1</name>
    <value>master</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname.rm2</name>
    <value>slave1</value>
  </property>
  <property>
    <name>yarn.resourcemanager.recovery.enabled</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.resourcemanager.store.class</name>
    <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
  </property>
  <property>
    <name>yarn.resourcemanager.zk-address</name>
    <value>master:2181,slave1:2181,slave2:2181</value>
  </property>
  <property>
    <name>yarn.resourcemanager.cluster-id</name>
    <value>yarn-ha</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>master</value>
  </property>
  <property>
    <name>yarn.nodemanager.vmem-check-enabled</name>
    <value>false</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <!-- Needed on newer versions (added while troubleshooting an error in the final wordcount run) -->
  <property>
    <name>yarn.resourcemanager.webapp.address.rm1</name>
    <value>master</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address.rm2</name>
    <value>slave1</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address.rm2</name>
    <value>slave1</value>
  </property>
</configuration>
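The per-ResourceManager settings are easy to get out of step. In the file above, for example, scheduler.address is set for rm2 but not for rm1. The hypothetical helper below lists, for every id in yarn.resourcemanager.ha.rm-ids, the hostname, webapp.address and scheduler.address values the file sets, so any asymmetry is visible at a glance. It uses the same naive text matching as a grep would, not a real XML parser.

```shell
# xml_prop FILE NAME: prints the <value> following <name>NAME</name> (naive match).
xml_prop() {
  local file=$1 name
  name=$(printf '%s' "$2" | sed 's/\./\\./g')    # escape dots for the regex
  tr -d '\n' < "$file" |
    sed -n "s|.*<name>$name</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}

# rm_report YARN_SITE: hypothetical helper; one "<id> <key> <value>" line per
# RM id and key, with <unset> where the property is absent.
rm_report() {
  local file=$1 ids id key val
  ids=$(xml_prop "$file" yarn.resourcemanager.ha.rm-ids)
  for id in $(printf '%s' "$ids" | tr ',' ' '); do
    for key in hostname webapp.address scheduler.address; do
      val=$(xml_prop "$file" "yarn.resourcemanager.$key.$id")
      echo "$id $key ${val:-<unset>}"
    done
  done
}
```

Usage: rm_report /usr/local/src/hadoop/etc/hadoop/yarn-site.xml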

After changing yarn-site.xml, restart YARN like this:

[root@master sbin]# ./yarn-daemon.sh stop resourcemanager
[root@master sbin]# yarn-daemons.sh stop nodemanager

[root@master sbin]# ./yarn-daemon.sh start resourcemanager
[root@master sbin]# yarn-daemons.sh start nodemanager

[root@slave1 sbin]# yarn-daemon.sh stop resourcemanager

[root@slave1 sbin]# yarn-daemon.sh start resourcemanager

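6. masters and slaves files, and the data directories

vim /usr/local/src/hadoop/etc/hadoop/masters
master
slave1

vim /usr/local/src/hadoop/etc/hadoop/slaves
master
slave1
slave2

Create the directories used in core-site.xml and hdfs-site.xml (dfs.namenode.name.dir, dfs.datanode.data.dir, dfs.journalnode.edits.dir):

mkdir -p /usr/local/src/hadoop/tmp/dfs/name
mkdir -p /usr/local/src/hadoop/tmp/dfs/data
mkdir -p /usr/local/src/hadoop/journal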
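You can script the directory and slaves-file steps. This sketch uses prepare_hadoop_dirs, a hypothetical helper. It creates the directories that core-site.xml and hdfs-site.xml point at, relative to a given Hadoop home, and writes one worker host per line to etc/hadoop/slaves. That is the file name Hadoop 2.x uses; Hadoop 3 renamed it to workers.

```shell
# prepare_hadoop_dirs HADOOP_HOME HOST...: hypothetical helper.
prepare_hadoop_dirs() {
  local home=$1 host
  shift
  mkdir -p "$home/tmp/dfs/name" "$home/tmp/dfs/data" \
           "$home/journal" "$home/etc/hadoop" || return 1
  : > "$home/etc/hadoop/slaves"            # truncate, then one host per line
  for host in "$@"; do
    echo "$host" >> "$home/etc/hadoop/slaves"
  done
}
```

Usage: prepare_hadoop_dirs /usr/local/src/hadoop master slave1 slave2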

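Change the owner of the hadoop directory to the user that will run it, then scp it to slave1 and slave2 the same way as ZooKeeper above.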
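You can combine the ownership change and the copy. In this sketch, distribute_hadoop and run_cmd are hypothetical helpers. It makes two assumptions: HADOOP_USER defaults to root, which the prompts in this post use, and a group with the same name exists. Setting DRY_RUN=1 only prints the commands.

```shell
# run_cmd CMD...: print CMD when DRY_RUN=1, otherwise execute it.
run_cmd() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# distribute_hadoop SRC HOST...: chown SRC to $HADOOP_USER:$HADOOP_USER
# (assumed), then scp it into the same parent directory on each HOST.
distribute_hadoop() {
  local src=$1 user=${HADOOP_USER:-root} host
  shift
  run_cmd chown -R "$user:$user" "$src"
  for host in "$@"; do
    run_cmd scp -r "$src" "$host:$(dirname "$src")"
  done
}
```

Usage: DRY_RUN=1 distribute_hadoop /usr/local/src/hadoop slave1 slave2 to preview, then run it again without DRY_RUN.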

1. Start the JournalNode service (in these steps, ① means master and ② means slave1)

① /usr/local/src/hadoop/sbin/hadoop-daemons.sh start journalnode (starts a JournalNode on every host listed in slaves)

2. NameNode

① /usr/local/src/hadoop/bin/hdfs namenode -format (format the metadata)
/usr/local/src/hadoop/sbin/hadoop-daemon.sh start namenode

② /usr/local/src/hadoop/bin/hdfs namenode -bootstrapStandby (copy nn1's metadata to the standby)
/usr/local/src/hadoop/sbin/hadoop-daemon.sh start namenode

3. ZKFC

On either NameNode (① or ②):
/usr/local/src/hadoop/bin/hdfs zkfc -formatZK (format the ZKFC state in ZooKeeper)
sbin/start-dfs.sh (starts two NameNodes, three DataNodes, three JournalNodes and two DFSZKFailoverControllers)

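4. YARN

① sbin/start-yarn.sh starts one ResourceManager and three NodeManagers
② sbin/yarn-daemon.sh start resourcemanager (the second ResourceManager; start-yarn.sh starts only the local one)

Once all steps, including step 5, are done, jps shows 9, 8 and 5 processes (Jps itself included) on master, slave1 and slave2 respectively.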

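5. MapReduce history server

sbin/yarn-daemon.sh start proxyserver
sbin/mr-jobhistory-daemon.sh start historyserver

The web proxy server only stays up when yarn.web-proxy.address is set in yarn-site.xml. This configuration does not set it, which is why no WebAppProxyServer appears in the jps output below.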
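The first-start order above can be collected into one script driven from master. This is a sketch with hypothetical helpers (on, first_start). It assumes nn1/rm1 run on master, nn2/rm2 run on slave1, and Hadoop lives in /usr/local/src/hadoop, as in this post. It omits the proxy server. Run it only once, because namenode -format wipes existing NameNode metadata. The JournalNodes come first because formatting also initializes the shared edits on them. DRY_RUN=1 prints the steps instead of running them.

```shell
H=/usr/local/src/hadoop

# on HOST CMD: run CMD on HOST over ssh, sourcing /etc/profile first because
# non-interactive ssh sessions do not read it. DRY_RUN=1 only prints.
on() {
  local host=$1
  shift
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "[$host] $*"
  else
    ssh "$host" ". /etc/profile; $*"
  fi
}

first_start() {
  on master "$H/sbin/hadoop-daemons.sh start journalnode"          # 1:  JournalNodes
  on master "$H/bin/hdfs namenode -format"                         # 2①: format nn1
  on master "$H/sbin/hadoop-daemon.sh start namenode"
  on slave1 "$H/bin/hdfs namenode -bootstrapStandby"               # 2②: sync nn2
  on slave1 "$H/sbin/hadoop-daemon.sh start namenode"
  on master "$H/bin/hdfs zkfc -formatZK"                           # 3:  HA state in ZooKeeper
  on master "$H/sbin/start-dfs.sh"                                 #     NN, DN, JN, ZKFC
  on master "$H/sbin/start-yarn.sh"                                # 4①: rm1 + NodeManagers
  on slave1 "$H/sbin/yarn-daemon.sh start resourcemanager"         # 4②: rm2
  on master "$H/sbin/mr-jobhistory-daemon.sh start historyserver"  # 5:  history server
}
```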

[root@master hadoop]# jps
9952 QuorumPeerMain
12816 DataNode
13232 ResourceManager
13761 JobHistoryServer
12324 JournalNode
13798 Jps
13337 NodeManager
13115 DFSZKFailoverController
12461 NameNode

[root@slave1 hadoop]# jps
11440 DataNode
11809 ResourceManager
11251 NameNode
11124 JournalNode
9621 QuorumPeerMain
11576 DFSZKFailoverController
11660 NodeManager
11900 Jps

[root@slave2 hadoop]# jps
10802 DataNode
11077 Jps
10935 NodeManager
10681 JournalNode
9389 QuorumPeerMain
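Checking these lists by eye on three nodes is tedious. The hypothetical helper below reads jps output on stdin and prints every expected daemon that is not running. The expected lists come from the jps output above.

```shell
# missing_daemons DAEMON...: hypothetical helper; reads `jps` output on stdin,
# prints each DAEMON not present, and returns 1 if any is missing.
missing_daemons() {
  local running name rc=0
  running=$(awk '{print $2}')          # second column = main class name
  for name in "$@"; do
    if ! printf '%s\n' "$running" | grep -qx "$name"; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, run ssh slave2 '. /etc/profile; jps' | missing_daemons QuorumPeerMain DataNode JournalNode NodeManager. On master, also pass NameNode, DFSZKFailoverController, ResourceManager and JobHistoryServer.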

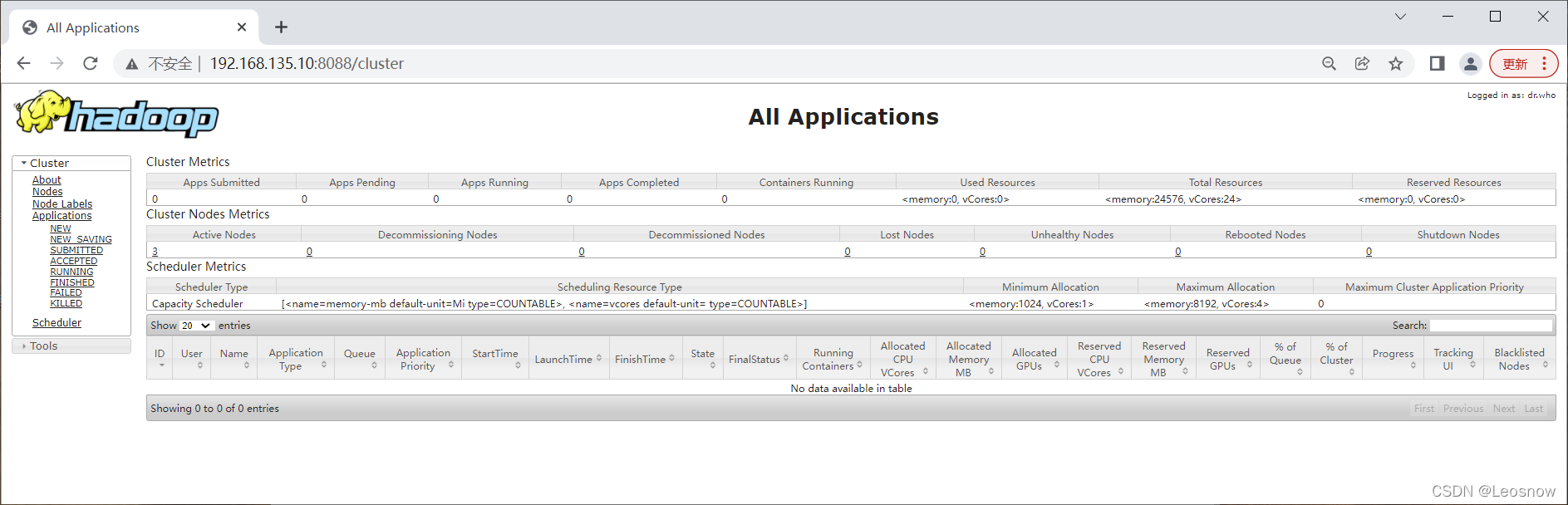

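Web UI ports: the NameNodes at http://master:50070 and http://slave1:50070 (dfs.namenode.http-address.ns.nn1/nn2), and the JobHistory server at http://master:19888 (mapreduce.jobhistory.webapp.address).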

Test run:

service firewalld stop
systemctl disable firewalld.service

[root@master ~]# vim a.txt
[root@master ~]# hadoop fs -mkdir /input        (or: hdfs dfs -mkdir /input)
[root@master ~]# hadoop fs -put a.txt /input
[root@master mapreduce]# hadoop fs -ls /        (or: hdfs dfs -ls /)
Found 2 items
drwxr-xr-x   - root supergroup          0 2022-04-06 10:34 /input
drwxrwx---   - root supergroup          0 2022-04-06 09:52 /tmp
[root@master ~]# hdfs dfs -cat /input/a.txt
hello world hello wangzihao
[root@master ~]# cd /usr/local/src/hadoop/share/hadoop/mapreduce/
[root@master mapreduce]# ls
hadoop-mapreduce-client-app-2.10.1.jar              hadoop-mapreduce-client-shuffle-2.10.1.jar
hadoop-mapreduce-client-common-2.10.1.jar           hadoop-mapreduce-examples-2.10.1.jar
hadoop-mapreduce-client-core-2.10.1.jar             jdiff
hadoop-mapreduce-client-hs-2.10.1.jar               lib
hadoop-mapreduce-client-hs-plugins-2.10.1.jar       lib-examples
hadoop-mapreduce-client-jobclient-2.10.1.jar        sources
hadoop-mapreduce-client-jobclient-2.10.1-tests.jar

The output directory must not already exist:

[root@master mapreduce]# hadoop jar hadoop-mapreduce-examples-2.10.1.jar wordcount /input/a.txt /output
22/04/07 12:10:21 INFO mapreduce.Job:  map 0% reduce 0%
22/04/07 12:10:29 INFO mapreduce.Job:  map 100% reduce 0%
22/04/07 12:10:35 INFO mapreduce.Job:  map 100% reduce 100%
22/04/07 12:10:36 INFO mapreduce.Job: Job job_1649304081222_0002 completed successfully

The listing below shows /out and words that are not in a.txt, so it comes from a different run. For the command above, read /output/part-r-00000 instead.

[root@master mapreduce]# hadoop fs -ls -R /out
-rw-r--r--   3 root supergroup          0 2022-04-07 12:10 /out/_SUCCESS
-rw-r--r--   3 root supergroup         17 2022-04-07 12:10 /out/part-r-00000
[root@master mapreduce]# hadoop fs -cat /out/part-r-00000
aa 1
leosnow 1
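To cross-check the job's result, you can compute the same counts locally in the word<TAB>count layout of part-r-00000. This is a sketch, and local_wordcount is a hypothetical helper. It splits on whitespace, like the example WordCount's tokenizer, and sorts in byte order (LC_ALL=C). That should match the job's key order for ASCII input, which is an assumption for other text.

```shell
# local_wordcount [FILE]: hypothetical helper; word counts of FILE (or stdin)
# as "word<TAB>count" lines sorted by word.
local_wordcount() {
  cat "${1:--}" |
    tr -s '[:space:]' '\n' |          # one token per line
    awk 'NF' |                        # drop empty lines
    LC_ALL=C sort |
    uniq -c |
    awk '{ printf "%s\t%s\n", $2, $1 }'
}
```

For example, in bash: diff <(hadoop fs -cat /output/part-r-00000) <(local_wordcount a.txt) should print nothing.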

Manual failover

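The first attempt fails: automatic failover is already enabled, and --forcefence and --forceactive are not supported with it, so drop those two flags: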

[root@master hadoop]# hdfs haadmin -failover --forcefence -forceactive nn1 nn2
forcefence and forceactive flags not supported with auto-failover enabled.
[root@master hadoop]# hdfs haadmin -failover nn1 nn2
Failover to NameNode at slave1/192.168.135.20:9000 successful
[root@master hadoop]# hdfs haadmin -getServiceState nn1
standby
[root@master hadoop]# hdfs haadmin -getServiceState nn2
active
[root@master hadoop]# hdfs haadmin -failover nn2 nn1
Failover to NameNode at master/192.168.135.10:9000 successful
[root@master hadoop]# hdfs haadmin -getServiceState nn1
active
[root@master hadoop]# hdfs haadmin -getServiceState nn2
standby
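After each failover, exactly one NameNode should report active. The hypothetical helper below queries every given NameNode id with hdfs haadmin -getServiceState, prints the states, and succeeds only if exactly one is active. A NameNode that cannot be reached is reported as unreachable. The HDFS_CMD override is an assumption of this sketch, used only so it can be exercised without a cluster.

```shell
# check_single_active NN_ID...: hypothetical helper; prints "<id> <state>" per
# NameNode and returns 0 only if exactly one reports "active".
check_single_active() {
  local id state active=0
  for id in "$@"; do
    state=$(${HDFS_CMD:-hdfs} haadmin -getServiceState "$id" 2>/dev/null) || state=unreachable
    echo "$id $state"
    if [ "$state" = active ]; then
      active=$((active + 1))
    fi
  done
  [ "$active" -eq 1 ]
}
```

Usage: check_single_active nn1 nn2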

Automatic failover: stop the active NameNode (nn1 on master), and nn2 takes over.

[root@master sbin]# hadoop-daemon.sh stop namenode
stopping namenode
[root@master sbin]# hdfs haadmin -getServiceState nn1
22/04/08 10:12:17 INFO ipc.Client: Retrying connect to server: master/192.168.135.10:9000. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=1, sleepTime=1000 MILLISECONDS)
Operation failed: Call From master/192.168.135.10 to master:9000 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused
[root@master sbin]# hdfs haadmin -getServiceState nn2
active
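To see how long the automatic takeover takes, you can poll the standby until it reports active. In this sketch, wait_for_active is a hypothetical helper, the one-second interval and default 60-second timeout are arbitrary choices, and HDFS_CMD is an assumed override for testing.

```shell
# wait_for_active NN_ID [TIMEOUT_SECONDS]: hypothetical helper; polls
# `hdfs haadmin -getServiceState` once per second until NN_ID is active.
wait_for_active() {
  local id=$1 timeout=${2:-60} waited=0
  while [ "$waited" -lt "$timeout" ]; do
    if [ "$(${HDFS_CMD:-hdfs} haadmin -getServiceState "$id" 2>/dev/null)" = active ]; then
      echo "$id active after ${waited}s"
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "$id not active after ${timeout}s" >&2
  return 1
}
```

Usage: run hadoop-daemon.sh stop namenode on master, then wait_for_active nn2.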
