1 编写执行入口

# 1.导包

from pyspark import SparkConf, SparkContext

# 2. 创建SparkConf类对象

conf = SparkConf().setMaster("local[*]").setAppName("test_spark_app")

# 3. 基于SparkConf类对象创建SparkContext对象

sc = SparkContext(conf=conf) # 执行入口

# 4.打印pySpark的运行版本

# print(sc.version)

# 5.停止SparkContext对象的运行

sc.stop()pySpark大数据分析过程分为3步:数据输入、数据计算、数据输出 ,以下内容将重点介绍这三个过程

2 数据输入

在数据输入完成后,都会得到一个RDD类的对象(RDD全称为弹性分布式数据集)

# 1.构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

sc = SparkContext(conf=conf)

# 2.通过parallelize方法将Python对象加载到Spark内,成为RDD对象

# 通过sc对象构建RDD

rdd1 = sc.parallelize([1, 2, 3, 4, 5])

rdd2 = sc.parallelize((6, 7, 8, 9, 10))

rdd3 = sc.parallelize("adjsjfjsg")

rdd4 = sc.parallelize({1, 2, 3, 4})

rdd5 = sc.parallelize({"key1": "value1", "key2": "value2"})

# 如果要查看RDD对象的内容,可以通过collect方法

print(rdd1.collect())

print(rdd2.collect())

print(rdd3.collect())

print(rdd4.collect())

print(rdd5.collect())

# 3.用textFiled方法,读取文件数据加载到Spark内,成为RDD对象

rdd6 = sc.textFile("D:/hello.txt")

print(rdd6.collect())

sc.stop()

3 数据计算

3.1 map算子

map算子是将RDD的数据进行一条条处理(处理的逻辑基于map算子接收的处理函数),返回新的RDD

import os

os.environ["PYSPARK_PYTHON"] = "D:/python3.7/python.exe" # 设置环境变量,因为Spark找不到python解释器在什么地方

# 构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

sc = SparkContext(conf=conf)

# 1. map 算子

rdd = sc.parallelize([1, 2, 3, 4])

# 通过map方法将全部的元素都乘10

rdd_map = rdd.map(lambda x: x * 10)

print(rdd_map.collect())

# 链式调用

rdd_map1 = rdd.map(lambda x: x * 10).map(lambda x: x + 5)

print(rdd_map1.collect())

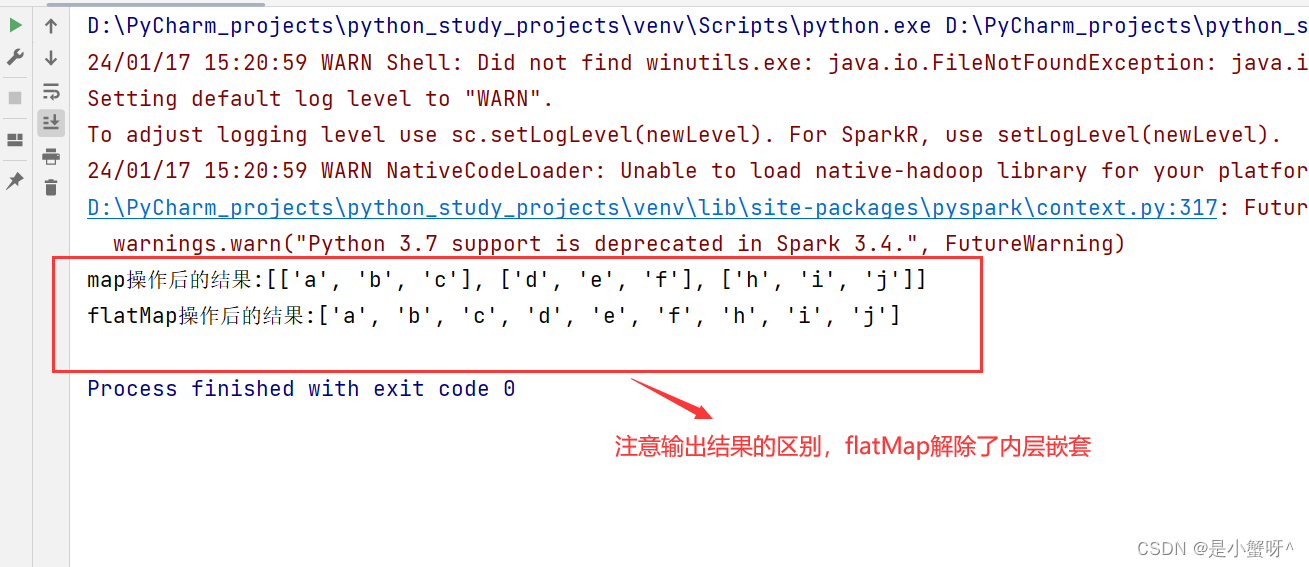

3.2 flatMap算子

对RDD进行map操作后,进行解除嵌套的作用

import os

os.environ["PYSPARK_PYTHON"] = "D:/python3.7/python.exe" # 设置环境变量

# 构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

sc = SparkContext(conf=conf)

rdd = sc.parallelize(["a b c", "d e f", "h i j"])

# 需求:将RDD数据里面的一个个单词都提取出来

rdd2 = rdd.map(lambda x: x.split(" "))

print(f"map操作后的结果:{rdd2.collect()}")

#解嵌套

rdd3 = rdd.flatMap(lambda x: x.split(" "))

print(f"flatMap操作后的结果:{rdd3.collect()}")

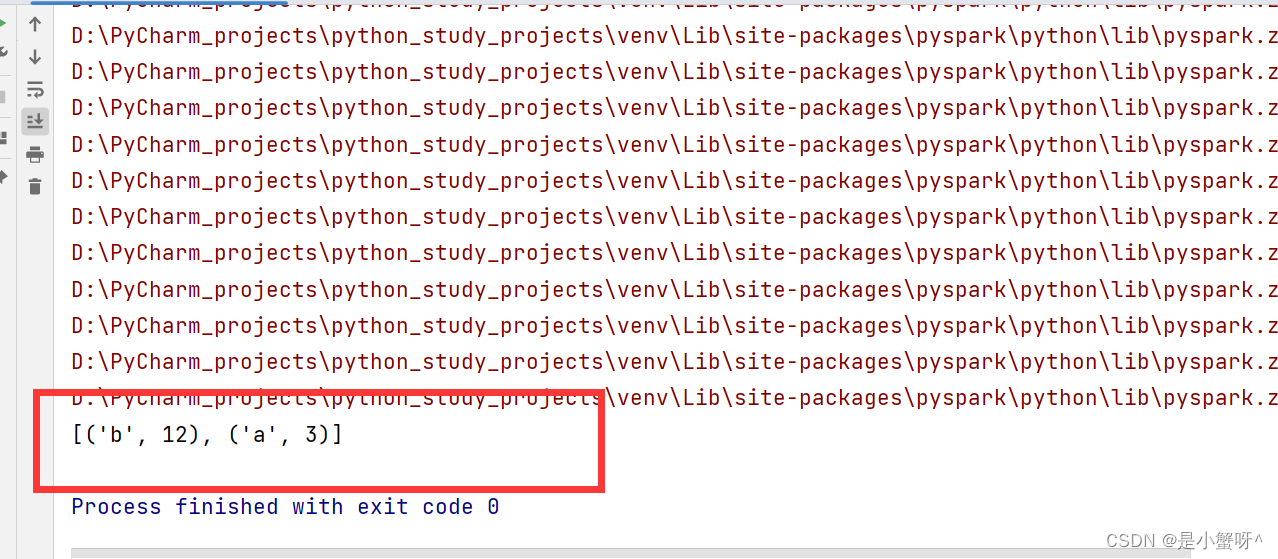

3.3 reduceByKey算子

reduceByKey算子:

功能:针对(K,V)类型的数据,按照K进行分组,然后根据你提供的聚合逻辑,完成

组内数据(value)的聚合操作。(K,V)类型的数据 -> 二元元组

import os

os.environ["PYSPARK_PYTHON"] = "D:/python3.7/python.exe" # 设置环境变量

# 构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

sc = SparkContext(conf=conf)

rdd = sc.parallelize([("a", 1), ("a", 2), ("b", 3), ("b", 4), ("b", 5)])

result = rdd.reduceByKey(lambda x, y: x + y)

print(result.collect())

3.4 单词计数案例

import os

os.environ["PYSPARK_PYTHON"] = "D:/python3.7/python.exe" # 设置环境变量

# 1.构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

sc = SparkContext(conf=conf)

# 2.读取数据文件

rdd = sc.textFile("D:/hello.txt")

word_rdd = rdd.flatMap(lambda line: line.split(" "))

# print(word_rdd.collect())

# 3.对数据进行转换为二元元组

word_count_rdd = word_rdd.map(lambda word: (word, 1))

# 4. 对二元元组进行聚合

word_count_rdd_result = word_count_rdd.reduceByKey(lambda a, b: a + b)

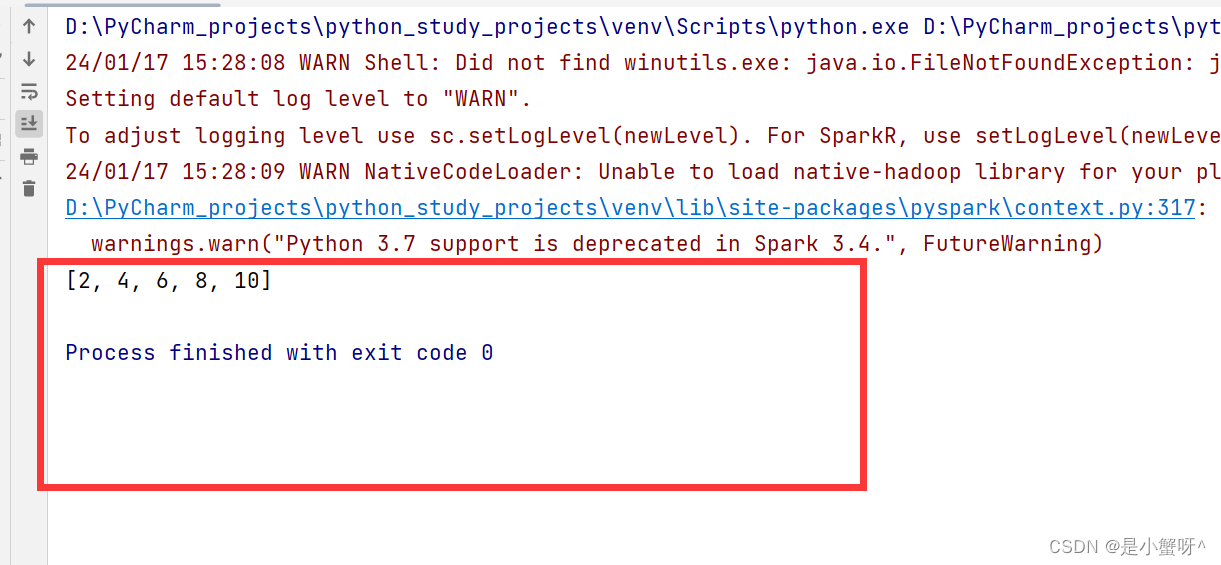

print(word_count_rdd_result.collect())3.5 filter算子

过滤想要的数据,进行保留

import os

os.environ["PYSPARK_PYTHON"] = "D:/python3.7/python.exe" # 设置环境变量

# 1.构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

sc = SparkContext(conf=conf)

rdd = sc.parallelize([1, 2, 3, 4, 5, 6, 7, 8, 9,10])

filter_rdd = rdd.filter(lambda x: x % 2 == 0) # 得到True则保留

print(filter_rdd.collect())

3.6 distinct算子

对RDD数据进行去重,返回新的RDD

import os

os.environ["PYSPARK_PYTHON"] = "D:/python3.7/python.exe" # 设置环境变量

# 1.构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

sc = SparkContext(conf=conf)

rdd = sc.parallelize([1, 2, 3, 4, 1, 2, 3, 4])

distinct_rdd = rdd.distinct()

print(distinct_rdd.collect())

3.7 sortBy算子

对RDD数据进行排序,基于你指定的排序依据

import os

os.environ["PYSPARK_PYTHON"] = "D:/python3.7/python.exe" # 设置环境变量

# 1.构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

sc = SparkContext(conf=conf)

# 2.读取数据文件

rdd = sc.textFile("D:/hello.txt")

word_rdd = rdd.flatMap(lambda line: line.split(" "))

# print(word_rdd.collect())

# 3.对数据进行转换为二元元组

word_count_rdd = word_rdd.map(lambda word: (word, 1))

# 4. 对二元元组进行聚合

word_count_rdd_result = word_count_rdd.reduceByKey(lambda a, b: a + b)

# 5.对步骤四求的结果进行排序

word_count_rdd_result_sort = word_count_rdd_result.sortBy(lambda x: x[1], ascending=False, numPartitions=1)

# 参数1设置排序的依据;参数2设置升序还是降序;参数3全局排序需要设置分区数为1

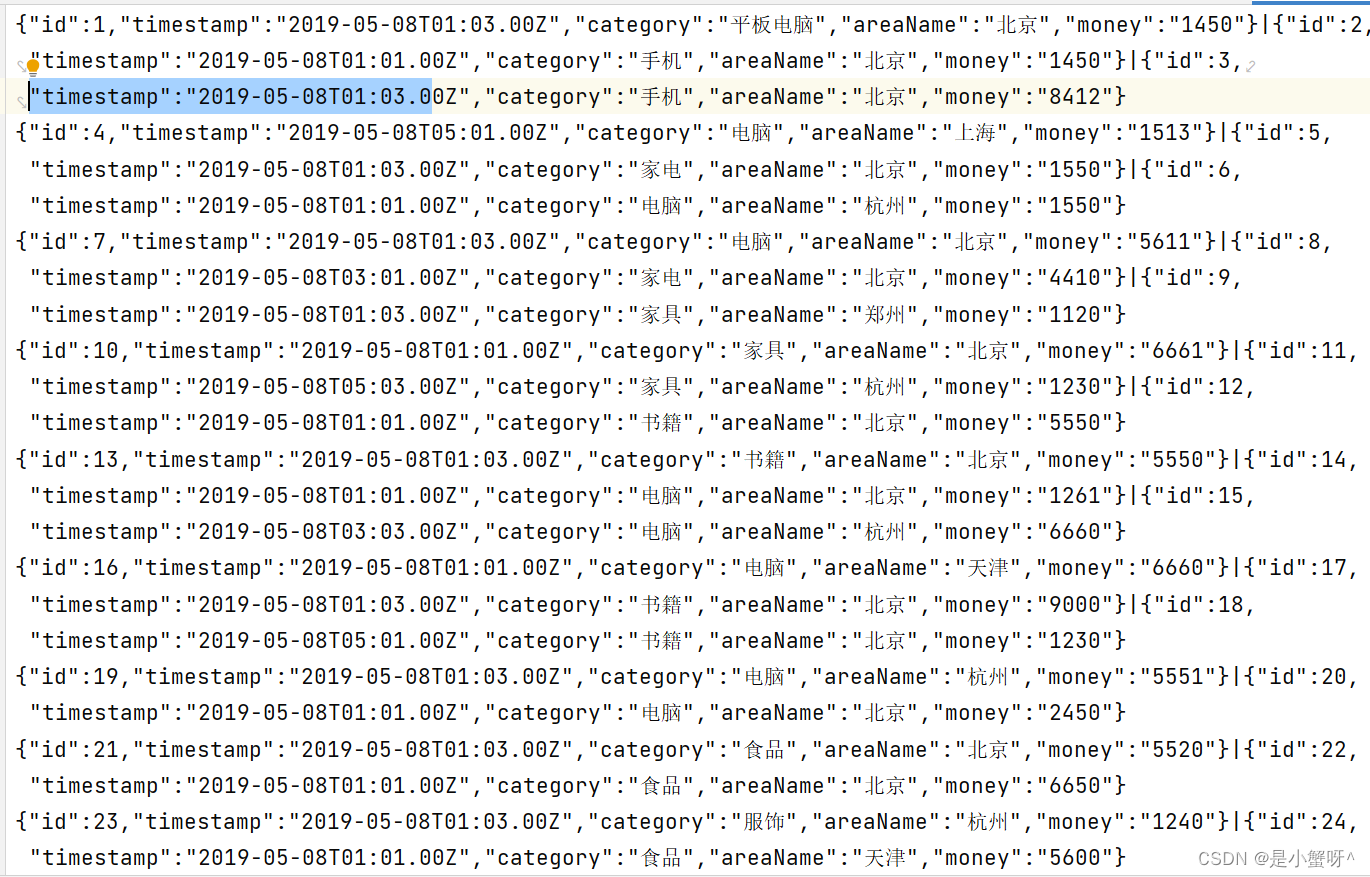

print(word_count_rdd_result_sort.collect())3.8 数据计算综合案例

准备需要的文件

import json

import os

os.environ["PYSPARK_PYTHON"] = "D:/python3.7/python.exe" # 设置环境变量

# 1.构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

sc = SparkContext(conf=conf)

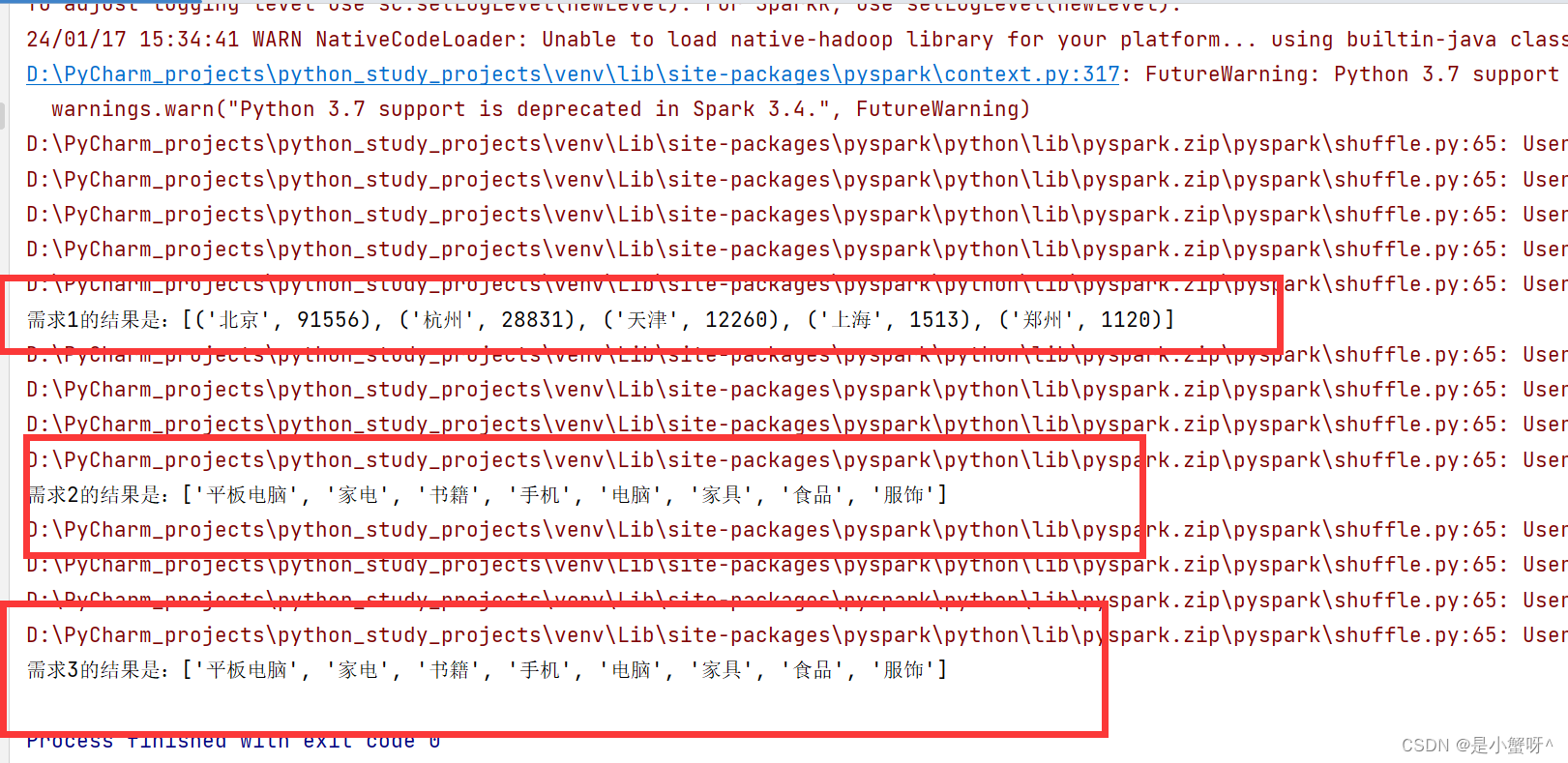

# TODO 需求1:城市销售额排名

# 1.1 读取文件得到RDD

rdd = sc.textFile("D:/PyCharm_projects/python_study_projects/text/orders.txt")

# 1.2 取出JSON字符串

rdd_json = rdd.flatMap(lambda x: x.split("|"))

# print(rdd_json.collect())

# 1.3 json字符串转为字典

rdd_dict = rdd_json.map(lambda x: json.loads(x))

# print(rdd_dict.collect())

# 1.4 取出城市和销售额数据

# (城市, 销售额)

rdd_city_with_money = rdd_dict.map(lambda x: (x["areaName"], int(x["money"])))

# 1.5 按照城市分组

rdd_group = rdd_city_with_money.reduceByKey(lambda x, y: x + y)

# 1.6 按照销售额降序排序

result_rdd1 = rdd_group.sortBy(lambda x: x[1], ascending=False, numPartitions=1)

print(f"需求1的结果是:{result_rdd1.collect()}")

# TODO 需求2:全部城市有哪些商品类别在售卖

# 2.1 取出所有的商品类别

category_rdd = rdd_dict.map(lambda x: x["category"]).distinct()

print(f"需求2的结果是:{category_rdd.collect()}")

# TODO 需求3:北京市有哪些商品类别在售卖

# 3.1 过滤北京市的数据

beijing_data_rdd = rdd_dict.filter(lambda x: x["areaName"] == "北京")

# 3.2 取出所有商品类别

beijing_category_data_rdd = beijing_data_rdd.map(lambda x: x["category"]).distinct()

print(f"需求3的结果是:{beijing_category_data_rdd.collect()}")

4 数据输出

import os

os.environ["PYSPARK_PYTHON"] = "D:/python3.7/python.exe" # 设置环境变量

os.environ["HADOOP_HOME"] = "D:/Hadoop/hadoop-3.0.0" # 输出为文件需要的配置

# 1.构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

# conf.set("spark.default.parallelism", 1) # 设置全局的并行度为1

sc = SparkContext(conf=conf)

# 准备RDD

rdd = sc.parallelize([1, 2, 3, 4, 5, 6, 7, 8, 9])

# 1. 将RDD数据输出为Python对象

"""

collect 算子: -> 将RDD输出为list对象

功能:将RDD各个分区内的数据统一收集到Driver中,形成一个List对象

用法:rdd.collect()

"""

# print(rdd.collect())

"""

reduce 算子:

功能:将RDD数据按照你传入的逻辑进行聚合

用法:rdd.reduce(func)

# func: (T, T) -> T 返回值和参数要求类型相同

"""

# result = rdd.reduce(lambda x, y: x + y)

# print(result)

"""

take 算子:

功能:取RDD的前N个元素,组合成list返回给你

用法:rdd.take(N)

"""

# result1 = rdd.take(3)

# print(result1)

"""

count 算子:

功能:计算RDD有多少条数据,返回值是一个数字

用法:rdd.count()

"""

# result2 = rdd.count()

# print(result2)

# 2. 将RDD数据输出为文件

"""

saveAsTextFile 算子:

功能:将RDD的数据写入文本文件中

用法:rdd.saveAsTextFile(path)

"""

rdd.saveAsTextFile("D:/output")5 pySaprk综合案例

\ 表示当前行还未写完,下一行仍是这行的内容

以下都采取链式的写法:

import os

os.environ["PYSPARK_PYTHON"] = "D:/python3.7/python.exe" # 设置环境变量

# 1.构建执行环境入口对象

from pyspark import SparkConf, SparkContext

conf = SparkConf().setMaster("local[*]").setAppName("test_spark")

conf.set("spark.default.parallelism", 1) # 设置全局的并行度为1

sc = SparkContext(conf=conf)

# 读取文件

file_rdd = sc.textFile("D:/PyCharm_projects/python_study_projects/text/search_log.txt")

# TODO 需求1:热门搜索时间段Top3 (小时精度)

# 1.1 取出所有的时间并转换为小时

# 1.2 转换为(小时,1)的二元元组

# 1.3 Key分组,集合Value

# 1.4 降序排序,取前3

# \表示当前行还未写完,下一行仍是这行的内容

result1 = file_rdd.map(lambda x: x.split("\t")).\

map(lambda x: x[0][:2]).\

map(lambda x: (x, 1)).\

reduceByKey(lambda a, b: a + b).\

sortBy(lambda x: x[1], ascending=False, numPartitions=1).\

take(3)

print(f"需求1的结果是:{result1}")

# TODO 需求2:热门搜索词Top3

# 2.1 取出全部的搜索词

# 2.2 (词,1) 二元元组

# 2.3 分组集合

# 2.4 排序,取Top3

result2 = file_rdd.map(lambda x: (x.split("\t")[2], 1)).\

reduceByKey(lambda a, b: a + b).\

sortBy(lambda x: x[1], ascending=False, numPartitions=1).\

take(3)

print(f"需求2的结果是:{result2}")

# TODO 需求3:统计黑马程序员关键字在什么时段被搜索的最多

# 3.1 过滤内容,只保留黑马程序员关键字

# 3.2 转换为(小时, 1) 的二元元组

# 3.3 Key 分组聚合Value

# 3.4 排序,取前1

result3 = file_rdd.map(lambda x: x.split("\t")).\

filter(lambda x: x[2] == "黑马程序员").\

map(lambda x: (x[0][:2], 1)).\

reduceByKey(lambda a, b: a + b).\

sortBy(lambda x: x[1], ascending=False, numPartitions=1).\

take(1)

print(f"需求3的结果是:{result3}")

# TODO 需求4:将数据转换为JSON格式,写到文件中

# 4.1 转换为JSON格式的RDD

# 4.2 写出到文件

file_rdd.map(lambda x: x.split("\t")).\

map(lambda x: {"time": x[0], "user_id": x[1], "key_word": x[2], "rank1": x[3], "rank2": x[4], "url": x[5]}).\

saveAsTextFile("D:\output_json") # hadoop报错,无法实现,是我自己的环境问题,代码没有问题

sc.stop()

1215

1215

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?