Surname Classification with Feedforward Neural Networks

I. Feedforward Neural Networks

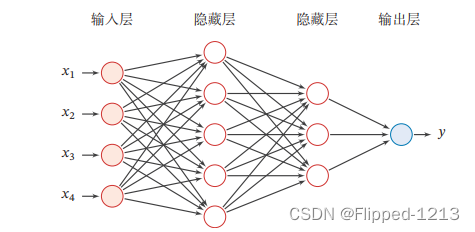

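1. Network Structure

In a feedforward network, neurons are grouped by the order in which they receive information, and each group forms a layer. The neurons in a layer receive the outputs of the previous layer and pass their own outputs on to the next layer. Information flows in one direction only, with no feedback connections, so the whole network can be drawn as a directed acyclic graph. Fully connected feedforward networks and convolutional neural networks both belong to this family. A feedforward network can be viewed as a function that maps the input space to the output space by composing simple nonlinear functions many times. The structure is simple and easy to implement.

Layer 0 is called the input layer, the last layer is called the output layer, and the layers in between are called hidden layers.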

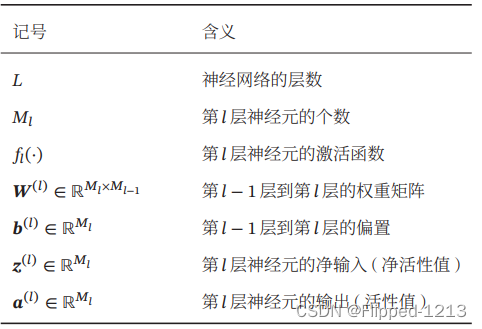

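Let $\boldsymbol{a}^{(0)} = \boldsymbol{x}$. A feedforward network propagates information by iterating the following two equations layer by layer:

$$\boldsymbol{z}^{(l)} = \boldsymbol{W}^{(l)} \boldsymbol{a}^{(l-1)} + \boldsymbol{b}^{(l)},$$

$$\boldsymbol{a}^{(l)} = f_l\left(\boldsymbol{z}^{(l)}\right).$$

First, the net activation $\boldsymbol{z}^{(l)}$ of layer $l$ is computed from the activation $\boldsymbol{a}^{(l-1)}$ of layer $l-1$, where $\boldsymbol{W}^{(l)}$ and $\boldsymbol{b}^{(l)}$ are the weight matrix and bias of layer $l$. The net activation is then passed through an activation function $f_l$ to obtain the activation $\boldsymbol{a}^{(l)}$ of layer $l$. Each layer can therefore be seen as an affine transformation followed by a nonlinear transformation.

By passing information from layer to layer in this way, the feedforward network produces its final output.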
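The layer-by-layer propagation described above can be sketched in plain Python. This is a minimal illustration rather than the article's implementation: the helper names (`relu`, `affine`, `forward`) and the tiny weight values are assumptions chosen so the arithmetic is easy to follow by hand.

```python
def relu(v):
    # Element-wise ReLU activation.
    return [max(0.0, x) for x in v]

def identity(v):
    # No nonlinearity; used here for the output layer.
    return list(v)

def affine(W, a, b):
    # Net activation z = W a + b for one layer.
    return [sum(w * x for w, x in zip(row, a)) + bias
            for row, bias in zip(W, b)]

def forward(x, layers):
    # Start from a^(0) = x, then apply z = W a + b and a = f(z) per layer.
    a = x
    for W, b, f in layers:
        a = f(affine(W, a, b))
    return a

# Hypothetical 2-2-1 network with hand-picked weights.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0], relu),
    ([[2.0, 1.0]], [0.5], identity),
]
output = forward([3.0, 1.0], layers)
print(output)  # [5.5]
```

The hidden layer yields the activations [2.0, 1.0], and the output layer maps them to 2*2 + 1*1 + 0.5 = 5.5.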

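2. Multilayer Perceptron (MLP)

A multilayer perceptron (MLP) is a basic artificial neural network (ANN) architecture, usually built from several fully connected layers. Each fully connected layer consists of a set of neurons, and every neuron is connected to all neurons in the previous layer.

An MLP consists of an input layer, one or more hidden layers, and an output layer. The input layer receives the input data, the hidden layers learn feature representations of the data, and the output layer produces the final prediction. Each hidden layer usually contains many neurons, and each neuron applies an activation function that introduces nonlinearity.

MLPs perform well on many tasks, especially classification and regression. They fit data well and can capture complex patterns. They also have limitations: training on large or high-dimensional datasets requires substantial compute, and they are prone to overfitting.

Several techniques can improve an MLP, such as adding regularization terms, using more sophisticated activation functions, adjusting the network structure, or using a more advanced optimizer. MLPs can also be combined with other architectures, such as convolutional neural networks (CNN) or recurrent neural networks (RNN), to address problems in specific domains.

The code examples below put these ideas into practice.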

Example 1:

    from argparse import Namespace
    from collections import Counter
    import json
    import os
    import string
    import collections
    import numpy as np
    import pandas as pd
    import re
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim
    from torch.utils.data import Dataset, DataLoader
    from tqdm import tqdm_notebook
    import matplotlib.pyplot as plt

    seed = 1337
    torch.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)


    class MultilayerPerceptron(nn.Module):  # a subclass of nn.Module
        def __init__(self, input_dim, hidden_dim, output_dim):
            """
            Args:
                input_dim (int): the size of the input vectors
                hidden_dim (int): the output size of the first Linear layer
                output_dim (int): the output size of the second Linear layer
            """
            super(MultilayerPerceptron, self).__init__()  # call nn.Module.__init__()
            # fc1 and fc2 are the two fully connected (linear) layers
            self.fc1 = nn.Linear(input_dim, hidden_dim)
            self.fc2 = nn.Linear(hidden_dim, output_dim)

        def forward(self, x_in, apply_softmax=False):
            """The forward pass of the MLP

            Args:
                x_in (torch.Tensor): an input data tensor.
                    x_in.shape should be (batch, input_dim)
                apply_softmax (bool): a flag for the softmax activation
                    should be false if used with the Cross Entropy losses
            Returns:
                the resulting tensor. tensor.shape should be (batch, output_dim)
            """
            # ReLU is applied to the output of fc1; the result is the input to fc2
            intermediate = F.relu(self.fc1(x_in))
            output = self.fc2(intermediate)

            if apply_softmax:  # optionally normalize the outputs into probabilities
                output = F.softmax(output, dim=1)
            return output

Example 2:

An example instantiation of an MLP

    batch_size = 2  # number of samples input at once
    input_dim = 3  # input dimension
    hidden_dim = 100  # hidden layer dimension
    output_dim = 4  # output dimension

    # Initialize model
    mlp = MultilayerPerceptron(input_dim, hidden_dim, output_dim)
    print(mlp)  # prints the fully connected layers of the model

Output:

    MultilayerPerceptron(
      (fc1): Linear(in_features=3, out_features=100, bias=True)
      (fc2): Linear(in_features=100, out_features=4, bias=True)
    )

Example 3:

Testing the MLP with random inputs

    import torch

    def describe(x):  # print the type, shape, and values of a tensor
        print("Type: {}".format(x.type()))
        print("Shape/size: {}".format(x.shape))
        print("Values: \n{}".format(x))

    x_input = torch.rand(batch_size, input_dim)
    describe(x_input)

Output:

    Type: torch.FloatTensor
    Shape/size: torch.Size([2, 3])
    Values:
    tensor([[0.8329, 0.4277, 0.4363],
            [0.9686, 0.6316, 0.8494]])

Running the forward pass of mlp on x_input gives y_output:

    y_output = mlp(x_input, apply_softmax=False)
    describe(y_output)

Output:

    Type: torch.FloatTensor
    Shape/size: torch.Size([2, 4])
    Values:
    tensor([[-0.2456,  0.0723,  0.1589, -0.3294],
            [-0.3497,  0.0828,  0.3391, -0.4271]], grad_fn=<AddmmBackward>)

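To turn the prediction vector into probabilities, apply the softmax function, which converts a vector of real values into a probability distribution.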

Example 4:

MLP with apply_softmax=True

    # Same forward pass as before, with softmax applied to the output layer
    y_output = mlp(x_input, apply_softmax=True)
    describe(y_output)

Output:

    Type: torch.FloatTensor
    Shape/size: torch.Size([2, 4])
    Values:
    tensor([[0.2087, 0.2868, 0.3127, 0.1919],
            [0.1832, 0.2824, 0.3649, 0.1696]], grad_fn=<SoftmaxBackward>)
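The relation between the raw outputs of Example 3 and the probabilities of Example 4 can be checked by hand. The sketch below is a plain-Python softmax, not PyTorch's implementation; the function name `softmax` and the max-subtraction step for numerical stability are choices made here. The input is the first row of the Example 3 output as printed, so the result should agree with the first row of Example 4 only up to the rounding of those printed values.

```python
import math

def softmax(logits):
    # Subtract the maximum before exponentiating for numerical stability;
    # this does not change the result.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

# First row of the raw prediction vector printed in Example 3.
logits = [-0.2456, 0.0723, 0.1589, -0.3294]
probs = softmax(logits)

print([round(p, 3) for p in probs])
print(sum(probs))  # the probabilities sum to 1 (up to floating-point error)
```

Softmax keeps the ordering of the inputs, so the largest raw score (index 2) also receives the largest probability.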

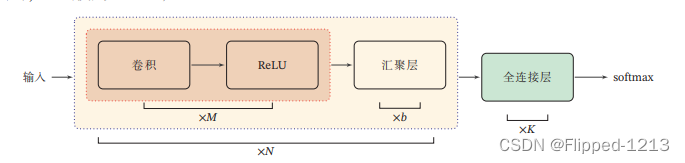

3.CNN卷积神经网络

整体结构

一个典型的卷积网络是由卷积层、汇聚层、全连接层交叉堆叠而成。目前常用的卷积网络整体结构如图3.1所示.一个卷积块为连续 𝑀 个卷积层和 𝑏 个汇聚层(𝑀 通常设置为2 ∼ 5,𝑏为0或1)。一个卷积网络中可以堆叠𝑁 个连续的卷积块,然后在后面接着 𝐾 个全连接层(𝑁 的取值区间比较大,比如 1 ∼ 100 或者更大;𝐾 一般为0 ∼ 2).

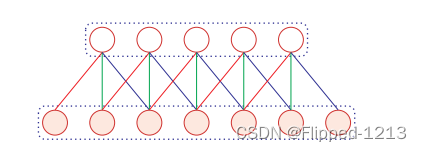

卷积层

输入特征映射组:

为三维张量(Tensor),其中每个切片(Slice)矩阵

为一个输入特征映射,1 ≤ 𝑑 ≤ 𝐷; (2)

输出特征映射组:

为三维张量,其中每个切片矩阵

为一个输出特征映射,1 ≤ 𝑝 ≤ 𝑃

卷积核:

为四维张量,其中每个切片矩阵

为一个二维卷积核,1 ≤ 𝑝 ≤ 𝑃, 1 ≤ 𝑑 ≤ 𝐷.

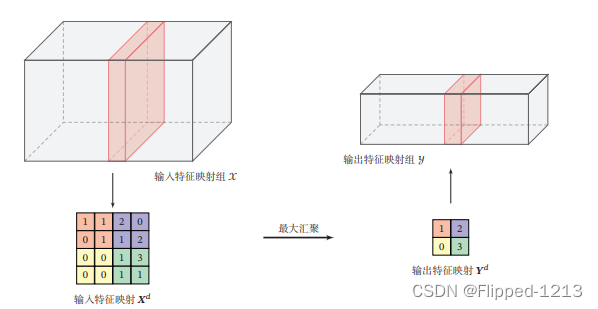

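Pooling layer

The pooling layer, also called the subsampling layer, performs feature selection and reduces the number of features, which in turn reduces the number of parameters in the layers that follow. A convolutional layer greatly reduces the number of connections in the network, but the number of neurons in the feature maps does not shrink much. If a classifier is attached directly afterwards, its input dimension is still high and overfitting is likely. Adding a pooling layer after the convolutional layer lowers the feature dimension and helps mitigate overfitting.

Suppose the input feature maps of the pooling layer are $\mathcal{X} \in \mathbb{R}^{M \times N \times D}$. Each feature map $\boldsymbol{X}^{d} \in \mathbb{R}^{M \times N}$, $1 \le d \le D$, is divided into regions $R^{d}_{m,n}$, $1 \le m \le M'$, $1 \le n \le N'$, which may or may not overlap. Pooling means down sampling each region to a single value that summarizes the region.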
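As a concrete illustration of pooling over regions, the following plain-Python sketch applies max pooling and mean pooling to a single 4x4 feature map with non-overlapping 2x2 regions. The function name `pool2d`, the region size, and the input values are assumptions made for this example; real frameworks also offer strides and padding, which are left out here.

```python
def pool2d(feature_map, size, reduce_fn):
    # Split the map into non-overlapping size x size regions and
    # summarize each region with reduce_fn (e.g. max or mean).
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, size):
        pooled_row = []
        for c in range(0, cols - size + 1, size):
            region = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            pooled_row.append(reduce_fn(region))
        pooled.append(pooled_row)
    return pooled

def mean(values):
    return sum(values) / len(values)

x = [[1, 3, 2, 0],
     [4, 2, 1, 1],
     [0, 1, 5, 6],
     [2, 3, 7, 8]]

max_pooled = pool2d(x, 2, max)
mean_pooled = pool2d(x, 2, mean)
print(max_pooled)   # [[4, 2], [3, 8]]
print(mean_pooled)  # [[2.5, 1.0], [1.5, 6.5]]
```

The 16 input values are reduced to 4, one per region, which is the reduction in feature dimension the text describes.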

Example 1:

Artificial data and using a Conv1d class

    import torch
    import torch.nn as nn

    batch_size = 2  # number of samples in the batch
    one_hot_size = 10  # size of each one-hot vector (number of input channels)
    sequence_width = 7  # length of each sequence
    data = torch.randn(batch_size, one_hot_size, sequence_width)

    # A 1D convolution with one_hot_size input channels,
    # 16 output channels, and a kernel of size 3
    conv1 = nn.Conv1d(in_channels=one_hot_size, out_channels=16,
                      kernel_size=3)

    intermediate1 = conv1(data)  # apply the convolution
    print(data.size())
    print(intermediate1.size())

Output:

    torch.Size([2, 10, 7])
    torch.Size([2, 16, 5])

Example 2:

The iterative application of convolutions to data

    conv2 = nn.Conv1d(in_channels=16, out_channels=32, kernel_size=3)
    conv3 = nn.Conv1d(in_channels=32, out_channels=64, kernel_size=3)

    intermediate2 = conv2(intermediate1)
    intermediate3 = conv3(intermediate2)

    print(intermediate2.size())
    print(intermediate3.size())

Output:

    torch.Size([2, 32, 3])
    torch.Size([2, 64, 1])
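The shrinking sequence lengths in the outputs above (7, 5, 3, 1) follow from the output-length formula given in the PyTorch `Conv1d` documentation: L_out = floor((L_in + 2*padding - dilation*(kernel_size - 1) - 1) / stride) + 1. The helper below is a small sketch of that formula; the function name `conv1d_output_length` is made up for this example, and the defaults mirror the `Conv1d` defaults (stride 1, padding 0, dilation 1).

```python
def conv1d_output_length(length_in, kernel_size, stride=1, padding=0, dilation=1):
    # Output-length formula for a 1D convolution.
    return (length_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

# Three stacked convolutions with kernel_size=3, starting from length 7.
lengths = [7]
for _ in range(3):
    lengths.append(conv1d_output_length(lengths[-1], kernel_size=3))
print(lengths)  # [7, 5, 3, 1]
```

With a kernel of size 3 and no padding, each layer removes two positions, so three layers are enough to reduce a length-7 sequence to a single feature vector per sample.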

Example 3:

Two additional methods for reducing to feature vectors

    # Method 2 of reducing to feature vectors
    print(intermediate1.view(batch_size, -1).size())

    # Method 3 of reducing to feature vectors
    print(torch.mean(intermediate1, dim=2).size())
    # print(torch.max(intermediate1, dim=2).size())
    # print(torch.sum(intermediate1, dim=2).size())

Output:

    torch.Size([2, 80])
    torch.Size([2, 16])

II. Surname Classification

1. MLP

The SurnameDataset

    from torch.utils.data import Dataset

    class SurnameDataset(Dataset):
        # Implementation is nearly identical to Section 3.5

        def __getitem__(self, index):
            row = self._target_df.iloc[index]  # row of the active split at this index
            surname_vector = \
                self._vectorizer.vectorize(row.surname)  # vectorized surname
            nationality_index = \
                self._vectorizer.nationality_vocab.lookup_token(row.nationality)  # index of its nationality

            return {'x_surname': surname_vector,
                    'y_nationality': nationality_index}

A vocabulary, a vectorizer, and a DataLoader are used to turn surname strings into vectorized minibatches.

    class SurnameVectorizer(object):
        """ The Vectorizer which coordinates the Vocabularies and puts them to use"""
        def __init__(self, surname_vocab, nationality_vocab):
            """
            Args:
                surname_vocab (Vocabulary): maps characters to integers
                nationality_vocab (Vocabulary): maps nationalities to integers
            """
            self.surname_vocab = surname_vocab
            self.nationality_vocab = nationality_vocab

        def vectorize(self, surname):
            """
            Args:
                surname (str): the surname

            Returns:
                one_hot (np.ndarray): a collapsed one-hot encoding
            """
            vocab = self.surname_vocab
            one_hot = np.zeros(len(vocab), dtype=np.float32)
            for token in surname:
                one_hot[vocab.lookup_token(token)] = 1

            return one_hot

        @classmethod
        def from_dataframe(cls, surname_df):
            """Instantiate the vectorizer from the dataset dataframe

            Args:
                surname_df (pandas.DataFrame): the surnames dataset
            Returns:
                an instance of the SurnameVectorizer
            """
            surname_vocab = Vocabulary(unk_token="@")
            nationality_vocab = Vocabulary(add_unk=False)

            for index, row in surname_df.iterrows():
                for letter in row.surname:
                    surname_vocab.add_token(letter)
                nationality_vocab.add_token(row.nationality)

            return cls(surname_vocab, nationality_vocab)

        @classmethod
        def from_serializable(cls, contents):
            surname_vocab = Vocabulary.from_serializable(contents['surname_vocab'])
            nationality_vocab = Vocabulary.from_serializable(contents['nationality_vocab'])
            return cls(surname_vocab=surname_vocab, nationality_vocab=nationality_vocab)

        def to_serializable(self):
            return {'surname_vocab': self.surname_vocab.to_serializable(),
                    'nationality_vocab': self.nationality_vocab.to_serializable()}


    class Vocabulary(object):
        """Class to process text and extract vocabulary for mapping"""

        def __init__(self, token_to_idx=None, add_unk=True, unk_token="<UNK>"):
            """
            Args:
                token_to_idx (dict): a pre-existing map of tokens to indices
                add_unk (bool): a flag that indicates whether to add the UNK token
                unk_token (str): the UNK token to add into the Vocabulary
            """
            if token_to_idx is None:
                token_to_idx = {}
            self._token_to_idx = token_to_idx

            self._idx_to_token = {idx: token
                                  for token, idx in self._token_to_idx.items()}

            self._add_unk = add_unk
            self._unk_token = unk_token

            self.unk_index = -1
            if add_unk:
                self.unk_index = self.add_token(unk_token)

        def to_serializable(self):
            """ returns a dictionary that can be serialized """
            return {'token_to_idx': self._token_to_idx,
                    'add_unk': self._add_unk,
                    'unk_token': self._unk_token}

        @classmethod
        def from_serializable(cls, contents):
            """ instantiates the Vocabulary from a serialized dictionary """
            return cls(**contents)

        def add_token(self, token):
            """Update mapping dicts based on the token.

            Args:
                token (str): the item to add into the Vocabulary
            Returns:
                index (int): the integer corresponding to the token
            """
            try:
                index = self._token_to_idx[token]
            except KeyError:
                index = len(self._token_to_idx)
                self._token_to_idx[token] = index
                self._idx_to_token[index] = token
            return index

        def add_many(self, tokens):
            """Add a list of tokens into the Vocabulary

            Args:
                tokens (list): a list of string tokens
            Returns:
                indices (list): a list of indices corresponding to the tokens
            """
            return [self.add_token(token) for token in tokens]

        def lookup_token(self, token):
            """Retrieve the index associated with the token
              or the UNK index if token isn't present.

            Args:
                token (str): the token to look up
            Returns:
                index (int): the index corresponding to the token
            Notes:
                `unk_index` needs to be >=0 (having been added into the Vocabulary)
                  for the UNK functionality
            """
            if self.unk_index >= 0:
                return self._token_to_idx.get(token, self.unk_index)
            else:
                return self._token_to_idx[token]

        def lookup_index(self, index):
            """Return the token associated with the index

            Args:
                index (int): the index to look up
            Returns:
                token (str): the token corresponding to the index
            Raises:
                KeyError: if the index is not in the Vocabulary
            """
            if index not in self._idx_to_token:
                raise KeyError("the index (%d) is not in the Vocabulary" % index)
            return self._idx_to_token[index]

        def __str__(self):
            return "<Vocabulary(size=%d)>" % len(self)

        def __len__(self):
            return len(self._token_to_idx)
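To see what the collapsed one-hot encoding produced by `vectorize` keeps and what it discards, here is a stripped-down sketch that uses a plain dict instead of the `Vocabulary` class. The helper names `build_vocab` and `collapsed_one_hot` and the sample surnames are made up for illustration; only the "@" unknown token mirrors the code above.

```python
def build_vocab(surnames, unk_token="@"):
    # Map each character to an index; index 0 is reserved for unknown characters.
    token_to_idx = {unk_token: 0}
    for surname in surnames:
        for ch in surname:
            token_to_idx.setdefault(ch, len(token_to_idx))
    return token_to_idx

def collapsed_one_hot(surname, token_to_idx, unk_token="@"):
    # One slot per vocabulary entry; a slot is 1.0 if the character occurs at all.
    vec = [0.0] * len(token_to_idx)
    for ch in surname:
        vec[token_to_idx.get(ch, token_to_idx[unk_token])] = 1.0
    return vec

vocab = build_vocab(["Abe", "Bea"])
v1 = collapsed_one_hot("Abe", vocab)
v2 = collapsed_one_hot("eAbb", vocab)   # same characters, different order and counts
v3 = collapsed_one_hot("Abz", vocab)    # 'z' is not in the vocabulary

print(vocab)
print(v1, v2, v3)
```

`v1` and `v2` are identical, which shows that this representation ignores character order and repetition. That limitation is one reason to try a model that looks at character sequences, such as the CNN used later.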

SurnameClassifier is an MLP. The first linear layer maps the input vector to an intermediate vector, and a nonlinearity (ReLU) is applied to that vector. The second linear layer maps the intermediate vector to the prediction vector.

    import torch.nn as nn
    import torch.nn.functional as F

    class SurnameClassifier(nn.Module):
        """ A 2-layer Multilayer Perceptron for classifying surnames """
        def __init__(self, input_dim, hidden_dim, output_dim):
            """
            Args:
                input_dim (int): the size of the input vectors
                hidden_dim (int): the output size of the first Linear layer
                output_dim (int): the output size of the second Linear layer
            """
            super(SurnameClassifier, self).__init__()
            self.fc1 = nn.Linear(input_dim, hidden_dim)
            self.fc2 = nn.Linear(hidden_dim, output_dim)

        def forward(self, x_in, apply_softmax=False):
            """The forward pass of the classifier

            Args:
                x_in (torch.Tensor): an input data tensor.
                    x_in.shape should be (batch, input_dim)
                apply_softmax (bool): a flag for the softmax activation
                    should be false if used with the Cross Entropy losses
            Returns:
                the resulting tensor. tensor.shape should be (batch, output_dim)
            """
            intermediate_vector = F.relu(self.fc1(x_in))
            prediction_vector = self.fc2(intermediate_vector)

            if apply_softmax:
                prediction_vector = F.softmax(prediction_vector, dim=1)

            return prediction_vector

    from torch.utils.data import Dataset, DataLoader

    class SurnameDataset(Dataset):
        def __init__(self, surname_df, vectorizer):
            """
            Args:
                surname_df (pandas.DataFrame): the dataset
                vectorizer (SurnameVectorizer): vectorizer instantiated from dataset
            """
            self.surname_df = surname_df
            self._vectorizer = vectorizer

            self.train_df = self.surname_df[self.surname_df.split == 'train']
            self.train_size = len(self.train_df)

            self.val_df = self.surname_df[self.surname_df.split == 'val']
            self.validation_size = len(self.val_df)

            self.test_df = self.surname_df[self.surname_df.split == 'test']
            self.test_size = len(self.test_df)

            self._lookup_dict = {'train': (self.train_df, self.train_size),
                                 'val': (self.val_df, self.validation_size),
                                 'test': (self.test_df, self.test_size)}

            self.set_split('train')

            # Class weights: inverse class frequency, ordered by nationality index
            class_counts = surname_df.nationality.value_counts().to_dict()

            def sort_key(item):
                return self._vectorizer.nationality_vocab.lookup_token(item[0])

            sorted_counts = sorted(class_counts.items(), key=sort_key)
            frequencies = [count for _, count in sorted_counts]
            self.class_weights = 1.0 / torch.tensor(frequencies, dtype=torch.float32)

        @classmethod
        def load_dataset_and_make_vectorizer(cls, surname_csv):
            """Load dataset and make a new vectorizer from scratch

            Args:
                surname_csv (str): location of the dataset
            Returns:
                an instance of SurnameDataset
            """
            surname_df = pd.read_csv(surname_csv)
            train_surname_df = surname_df[surname_df.split == 'train']
            return cls(surname_df, SurnameVectorizer.from_dataframe(train_surname_df))

        @classmethod
        def load_dataset_and_load_vectorizer(cls, surname_csv, vectorizer_filepath):
            """Load dataset and the corresponding vectorizer.
            Used in the case in which the vectorizer has been cached for re-use

            Args:
                surname_csv (str): location of the dataset
                vectorizer_filepath (str): location of the saved vectorizer
            Returns:
                an instance of SurnameDataset
            """
            surname_df = pd.read_csv(surname_csv)
            vectorizer = cls.load_vectorizer_only(vectorizer_filepath)
            return cls(surname_df, vectorizer)

        @staticmethod
        def load_vectorizer_only(vectorizer_filepath):
            """a static method for loading the vectorizer from file

            Args:
                vectorizer_filepath (str): the location of the serialized vectorizer
            Returns:
                an instance of SurnameVectorizer
            """
            with open(vectorizer_filepath) as fp:
                return SurnameVectorizer.from_serializable(json.load(fp))

        def save_vectorizer(self, vectorizer_filepath):
            """saves the vectorizer to disk using json

            Args:
                vectorizer_filepath (str): the location to save the vectorizer
            """
            with open(vectorizer_filepath, "w") as fp:
                json.dump(self._vectorizer.to_serializable(), fp)

        def get_vectorizer(self):
            """ returns the vectorizer """
            return self._vectorizer

        def set_split(self, split="train"):
            """ selects the splits in the dataset using a column in the dataframe """
            self._target_split = split
            self._target_df, self._target_size = self._lookup_dict[split]

        def __len__(self):
            return self._target_size

        def __getitem__(self, index):
            """the primary entry point method for PyTorch datasets

            Args:
                index (int): the index to the data point
            Returns:
                a dictionary holding the data point's:
                    features (x_surname)
                    label (y_nationality)
            """
            row = self._target_df.iloc[index]

            surname_vector = \
                self._vectorizer.vectorize(row.surname)

            nationality_index = \
                self._vectorizer.nationality_vocab.lookup_token(row.nationality)

            return {'x_surname': surname_vector,
                    'y_nationality': nationality_index}

        def get_num_batches(self, batch_size):
            """Given a batch size, return the number of batches in the dataset

            Args:
                batch_size (int)
            Returns:
                number of batches in the dataset
            """
            return len(self) // batch_size


    def generate_batches(dataset, batch_size, shuffle=True,
                         drop_last=True, device="cpu"):
        """
        A generator function which wraps the PyTorch DataLoader. It will
          ensure each tensor is on the right device location.
        """
        dataloader = DataLoader(dataset=dataset, batch_size=batch_size,
                                shuffle=shuffle, drop_last=drop_last)

        for data_dict in dataloader:
            out_data_dict = {}
            for name, tensor in data_dict.items():
                out_data_dict[name] = data_dict[name].to(device)
            yield out_data_dict
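The class weights computed in `SurnameDataset.__init__` are inverse class frequencies ordered by nationality index. The sketch below reproduces that calculation with the standard library only; the label counts and the `label_to_idx` ordering are invented for illustration and stand in for the real dataset and `nationality_vocab`.

```python
from collections import Counter

# Hypothetical, heavily imbalanced label column.
labels = ["English"] * 6 + ["Russian"] * 3 + ["Irish"]
# Hypothetical vocabulary order (stands in for nationality_vocab.lookup_token).
label_to_idx = {"English": 0, "Russian": 1, "Irish": 2}

counts = Counter(labels)
# Sort the counts by class index so weight i belongs to class i.
ordered = sorted(counts.items(), key=lambda item: label_to_idx[item[0]])
frequencies = [count for _, count in ordered]
class_weights = [1.0 / f for f in frequencies]

print(frequencies)    # [6, 3, 1]
print(class_weights)
```

Rare classes receive larger weights, so when these weights are passed to a weighted loss, a mistake on an "Irish" example here counts six times as much as a mistake on an "English" one.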

Initialize the training-state dictionary train_state, and define the helpers that update it and compute accuracy.

    def make_train_state(args):
        return {'stop_early': False,
                'early_stopping_step': 0,
                'early_stopping_best_val': 1e8,
                'learning_rate': args.learning_rate,
                'epoch_index': 0,
                'train_loss': [],
                'train_acc': [],
                'val_loss': [],
                'val_acc': [],
                'test_loss': -1,
                'test_acc': -1,
                'model_filename': args.model_state_file}


    def update_train_state(args, model, train_state):
        """Handle the training state updates.

        Components:
         - Early Stopping: Prevent overfitting.
         - Model Checkpoint: Model is saved if the model is better

        :param args: main arguments
        :param model: model to train
        :param train_state: a dictionary representing the training state values
        :returns:
            a new train_state
        """

        # Save one model at least
        if train_state['epoch_index'] == 0:
            torch.save(model.state_dict(), train_state['model_filename'])
            train_state['stop_early'] = False

        # Save model if performance improved
        elif train_state['epoch_index'] >= 1:
            loss_tm1, loss_t = train_state['val_loss'][-2:]

            # If loss worsened
            if loss_t >= train_state['early_stopping_best_val']:
                # Update step
                train_state['early_stopping_step'] += 1
            # Loss decreased
            else:
                # Save the best model and record its validation loss.
                # Without this update the best value stays at 1e8 and
                # early stopping can never trigger.
                if loss_t < train_state['early_stopping_best_val']:
                    torch.save(model.state_dict(), train_state['model_filename'])
                    train_state['early_stopping_best_val'] = loss_t

                # Reset early stopping step
                train_state['early_stopping_step'] = 0

            # Stop early ?
            train_state['stop_early'] = \
                train_state['early_stopping_step'] >= args.early_stopping_criteria

        return train_state


    def compute_accuracy(y_pred, y_target):
        _, y_pred_indices = y_pred.max(dim=1)
        n_correct = torch.eq(y_pred_indices, y_target).sum().item()
        return n_correct / len(y_pred_indices) * 100
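The early-stopping rule in `update_train_state` can be isolated from the model and studied on a list of validation losses. This is a simplified sketch of patience-based early stopping, not the exact function above: the name `early_stopping_epoch`, the loss values, and the choice to track the best loss inside the loop are assumptions for illustration, and `patience` plays the role of `args.early_stopping_criteria`.

```python
def early_stopping_epoch(val_losses, patience):
    # Return (epoch at which training stops, best validation loss seen).
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss        # new best: this is where a checkpoint would be saved
            bad_epochs = 0     # reset the counter
        else:
            bad_epochs += 1    # no improvement this epoch
        if bad_epochs >= patience:
            return epoch, best
    return len(val_losses) - 1, best

# Hypothetical validation losses per epoch.
losses = [2.0, 1.5, 1.6, 1.4, 1.45, 1.5, 1.41, 1.3]
stop_epoch, best_loss = early_stopping_epoch(losses, patience=3)
print(stop_epoch, best_loss)  # 6 1.4
```

Training stops at epoch 6 after three epochs in a row without beating the best loss of 1.4, so the later value 1.3 is never reached. A larger patience trades longer training for a lower risk of stopping too soon.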

Set the random seeds so that experiments are reproducible, and create the output directory if it does not exist.

    def set_seed_everywhere(seed, cuda):
        np.random.seed(seed)
        torch.manual_seed(seed)
        if cuda:
            torch.cuda.manual_seed_all(seed)

    def handle_dirs(dirpath):
        if not os.path.exists(dirpath):
            os.makedirs(dirpath)

Define the namespace args, which holds the paths, hyperparameters, and runtime options.

    args = Namespace(
        # Data and path information
        surname_csv="surnames_with_splits.csv",
        vectorizer_file="vectorizer.json",
        model_state_file="model.pth",
        save_dir="surname_mlp",
        # Model hyper parameters
        hidden_dim=300,
        # Training hyper parameters
        seed=1337,
        num_epochs=100,
        early_stopping_criteria=5,
        learning_rate=0.001,
        batch_size=64,
        # Runtime options
        cuda=False,
        reload_from_files=False,
        expand_filepaths_to_save_dir=True,
        raw_dataset_csv="surnames.csv",
        train_proportion=0.7,
        val_proportion=0.15,
        test_proportion=0.15,
        output_munged_csv="surnames_with_splits.csv",
        device='cpu'
        # Runtime options omitted for space
    )

    if args.expand_filepaths_to_save_dir:
        args.vectorizer_file = os.path.join(args.save_dir,
                                            args.vectorizer_file)

        args.model_state_file = os.path.join(args.save_dir,
                                             args.model_state_file)

        print("Expanded filepaths: ")
        print("\t{}".format(args.vectorizer_file))
        print("\t{}".format(args.model_state_file))

    # Check CUDA
    if not torch.cuda.is_available():
        args.cuda = False

    args.device = torch.device("cuda" if args.cuda else "cpu")

    print("Using CUDA: {}".format(args.cuda))

    # Set seed for reproducibility
    set_seed_everywhere(args.seed, args.cuda)

    # handle dirs
    handle_dirs(args.save_dir)

    surnames = pd.read_csv(args.raw_dataset_csv, header=0)
    print(surnames.head())

Output:

    Expanded filepaths:
        surname_mlp/vectorizer.json
        surname_mlp/model.pth
    Using CUDA: False
        surname nationality
    0  Woodford     English
    1      Coté      French
    2      Kore     English
    3     Koury      Arabic
    4    Lebzak     Russian

The set of unique classes:

    # Unique classes
    print(set(surnames.nationality))

Output:

    {'Russian', 'Italian', 'Korean', 'French', 'Vietnamese', 'Czech', 'Japanese', 'German', 'Irish', 'Polish', 'Scottish', 'Chinese', 'Portuguese', 'English', 'Arabic', 'Spanish', 'Dutch', 'Greek'}

Split the surname dataset into train, validation, and test sets within each nationality, and save the result to a CSV file.

    # Splitting train by nationality
    # Create dict
    by_nationality = collections.defaultdict(list)
    for _, row in surnames.iterrows():
        by_nationality[row.nationality].append(row.to_dict())

    # Create split data
    final_list = []
    np.random.seed(args.seed)
    for _, item_list in sorted(by_nationality.items()):
        np.random.shuffle(item_list)
        n = len(item_list)
        n_train = int(args.train_proportion * n)
        n_val = int(args.val_proportion * n)
        n_test = int(args.test_proportion * n)

        # Give data point a split attribute
        for item in item_list[:n_train]:
            item['split'] = 'train'
        for item in item_list[n_train:n_train + n_val]:
            item['split'] = 'val'
        for item in item_list[n_train + n_val:]:
            item['split'] = 'test'

        # Add to final list
        final_list.extend(item_list)

    # Write split data to file
    final_surnames = pd.DataFrame(final_list)
    print(final_surnames.split.value_counts())
    print()
    print(final_surnames.head())

    # Write munged data to CSV
    final_surnames.to_csv(args.output_munged_csv, index=False)

Output:

    train    7680
    test     1660
    val      1640
    Name: split, dtype: int64

      nationality  split   surname
    0      Arabic  train     Totah
    1      Arabic  train    Abboud
    2      Arabic  train  Fakhoury
    3      Arabic  train     Srour
    4      Arabic  train    Sayegh
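The per-nationality split above is a stratified split: each class is shuffled and cut separately, so every split keeps roughly the same class mix. The sketch below shows the same idea without pandas or NumPy. The function name `stratified_split`, the toy rows, and the proportions 0.5/0.25 are assumptions; the proportions are chosen as exact binary fractions to keep the arithmetic exact, whereas the article uses 0.7/0.15/0.15.

```python
import random
from collections import Counter, defaultdict

def stratified_split(rows, train_p=0.5, val_p=0.25, seed=1337):
    # rows: list of (surname, label). Returns list of (surname, label, split).
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for surname, label in rows:
        by_label[label].append(surname)

    result = []
    for label in sorted(by_label):
        items = by_label[label]
        rng.shuffle(items)
        n_train = int(train_p * len(items))
        n_val = int(val_p * len(items))
        for i, surname in enumerate(items):
            if i < n_train:
                split = "train"
            elif i < n_train + n_val:
                split = "val"
            else:
                split = "test"   # everything left over goes to test
            result.append((surname, label, split))
    return result

# Toy data: 20 rows of class "A" and 8 rows of class "B".
rows = [("name%d" % i, "A") for i in range(20)] + \
       [("other%d" % i, "B") for i in range(8)]
splits = stratified_split(rows)

per_class = Counter((label, split) for _, label, split in splits)
print(per_class)
```

Both classes end up with the same 50/25/25 proportions, which a single shuffle of the whole dataset would not guarantee for small classes.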

Depending on the arguments, either reload the dataset together with a saved vectorizer, or create a new dataset and vectorizer from scratch. A SurnameClassifier is then created, with its input and output sizes taken from the vectorizer's vocabularies.

    if args.reload_from_files:
        # training from a checkpoint
        print("Reloading!")
        dataset = SurnameDataset.load_dataset_and_load_vectorizer(args.surname_csv,
                                                                  args.vectorizer_file)
    else:
        # create dataset and vectorizer
        print("Creating fresh!")
        dataset = SurnameDataset.load_dataset_and_make_vectorizer(args.surname_csv)
        dataset.save_vectorizer(args.vectorizer_file)

    # The vectorizer determines the input and output dimensions
    vectorizer = dataset.get_vectorizer()
    classifier = SurnameClassifier(input_dim=len(vectorizer.surname_vocab),
                                   hidden_dim=args.hidden_dim,
                                   output_dim=len(vectorizer.nationality_vocab))

Train the classifier and evaluate it on the validation set after each epoch.

    classifier = classifier.to(args.device)
    dataset.class_weights = dataset.class_weights.to(args.device)

    loss_func = nn.CrossEntropyLoss(dataset.class_weights)
    optimizer = optim.Adam(classifier.parameters(), lr=args.learning_rate)
    scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer=optimizer,
                                                     mode='min', factor=0.5,
                                                     patience=1)

    train_state = make_train_state(args)

    epoch_bar = tqdm_notebook(desc='training routine',
                              total=args.num_epochs,
                              position=0)

    dataset.set_split('train')
    train_bar = tqdm_notebook(desc='split=train',
                              total=dataset.get_num_batches(args.batch_size),
                              position=1,
                              leave=True)
    dataset.set_split('val')
    val_bar = tqdm_notebook(desc='split=val',
                            total=dataset.get_num_batches(args.batch_size),
                            position=1,
                            leave=True)

    try:
        for epoch_index in range(args.num_epochs):
            train_state['epoch_index'] = epoch_index

            # Iterate over training dataset

            # setup: batch generator, set loss and acc to 0, set train mode on
            dataset.set_split('train')
            batch_generator = generate_batches(dataset,
                                               batch_size=args.batch_size,
                                               device=args.device)
            running_loss = 0.0
            running_acc = 0.0
            classifier.train()

            for batch_index, batch_dict in enumerate(batch_generator):
                # the training routine is these 5 steps:

                # --------------------------------------
                # step 1. zero the gradients
                optimizer.zero_grad()

                # step 2. compute the output
                y_pred = classifier(batch_dict['x_surname'])

                # step 3. compute the loss
                loss = loss_func(y_pred, batch_dict['y_nationality'])
                loss_t = loss.item()
                running_loss += (loss_t - running_loss) / (batch_index + 1)

                # step 4. use loss to produce gradients
                loss.backward()

                # step 5. use optimizer to take gradient step
                optimizer.step()
                # -----------------------------------------
                # compute the accuracy
                acc_t = compute_accuracy(y_pred, batch_dict['y_nationality'])
                running_acc += (acc_t - running_acc) / (batch_index + 1)

                # update bar
                train_bar.set_postfix(loss=running_loss, acc=running_acc,
                                      epoch=epoch_index)
                train_bar.update()

            train_state['train_loss'].append(running_loss)
            train_state['train_acc'].append(running_acc)

            # Iterate over val dataset

            # setup: batch generator, set loss and acc to 0; set eval mode on
            dataset.set_split('val')
            batch_generator = generate_batches(dataset,
                                               batch_size=args.batch_size,
                                               device=args.device)
            running_loss = 0.
            running_acc = 0.
            classifier.eval()

            for batch_index, batch_dict in enumerate(batch_generator):
                # compute the output
                y_pred = classifier(batch_dict['x_surname'])

                # compute the loss
                loss = loss_func(y_pred, batch_dict['y_nationality'])
                loss_t = loss.to("cpu").item()
                running_loss += (loss_t - running_loss) / (batch_index + 1)

                # compute the accuracy
                acc_t = compute_accuracy(y_pred, batch_dict['y_nationality'])
                running_acc += (acc_t - running_acc) / (batch_index + 1)
                val_bar.set_postfix(loss=running_loss, acc=running_acc,
                                    epoch=epoch_index)
                val_bar.update()

            train_state['val_loss'].append(running_loss)
            train_state['val_acc'].append(running_acc)

            train_state = update_train_state(args=args, model=classifier,
                                             train_state=train_state)

            scheduler.step(train_state['val_loss'][-1])

            if train_state['stop_early']:
                break

            train_bar.n = 0
            val_bar.n = 0
            epoch_bar.update()
    except KeyboardInterrupt:
        print("Exiting loop")
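The update `running_loss += (loss_t - running_loss) / (batch_index + 1)` used throughout the loop is an incremental way to compute the arithmetic mean of all batch losses seen so far, without storing them. The short sketch below checks this on made-up numbers; the function name `running_mean` and the loss values are only for illustration.

```python
def running_mean(values):
    # Same update rule as running_loss / running_acc in the training loop.
    running = 0.0
    for index, value in enumerate(values):
        running += (value - running) / (index + 1)
    return running

batch_losses = [2.0, 1.0, 3.0, 2.0]   # hypothetical per-batch losses
result = running_mean(batch_losses)
plain_mean = sum(batch_losses) / len(batch_losses)
print(result, plain_mean)  # 2.0 2.0
```

Because every batch has the same size here (`drop_last=True` in `generate_batches`), the mean over batches is also the mean over samples in the batches that were used.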

Evaluate how well the best model found during training generalizes to the test set.

    # compute the loss & accuracy on the test set using the best available model

    classifier.load_state_dict(torch.load(train_state['model_filename']))

    classifier = classifier.to(args.device)
    dataset.class_weights = dataset.class_weights.to(args.device)
    loss_func = nn.CrossEntropyLoss(dataset.class_weights)

    dataset.set_split('test')
    batch_generator = generate_batches(dataset,
                                       batch_size=args.batch_size,
                                       device=args.device)
    running_loss = 0.
    running_acc = 0.
    classifier.eval()

    for batch_index, batch_dict in enumerate(batch_generator):
        # compute the output
        y_pred = classifier(batch_dict['x_surname'])

        # compute the loss
        loss = loss_func(y_pred, batch_dict['y_nationality'])
        loss_t = loss.item()
        running_loss += (loss_t - running_loss) / (batch_index + 1)

        # compute the accuracy
        acc_t = compute_accuracy(y_pred, batch_dict['y_nationality'])
        running_acc += (acc_t - running_acc) / (batch_index + 1)

    train_state['test_loss'] = running_loss
    train_state['test_acc'] = running_acc

    print("Test loss: {};".format(train_state['test_loss']))
    print("Test Accuracy: {}".format(train_state['test_acc']))

Output:

    Test loss: 1.819154896736145;
    Test Accuracy: 46.68749999999999

Predict the nationality of a new surname.

    def predict_nationality(surname, classifier, vectorizer):
        """Predict the nationality from a new surname

        Args:
            surname (str): the surname to classify
            classifier (SurnameClassifier): an instance of the classifier
            vectorizer (SurnameVectorizer): the corresponding vectorizer
        Returns:
            a dictionary with the most likely nationality and its probability
        """
        vectorized_surname = vectorizer.vectorize(surname)
        vectorized_surname = torch.tensor(vectorized_surname).view(1, -1)
        result = classifier(vectorized_surname, apply_softmax=True)

        probability_values, indices = result.max(dim=1)
        index = indices.item()

        predicted_nationality = vectorizer.nationality_vocab.lookup_index(index)
        probability_value = probability_values.item()

        return {'nationality': predicted_nationality, 'probability': probability_value}


    new_surname = input("Enter a surname to classify: ")
    classifier = classifier.to("cpu")
    prediction = predict_nationality(new_surname, classifier, vectorizer)
    print("{} -> {} (p={:0.2f})".format(new_surname,
                                        prediction['nationality'],
                                        prediction['probability']))

Output:

    Enter a surname to classify: McMahan
    McMahan -> Irish (p=0.41)

Predict the nationality of a given surname and print the k most likely nationalities with their probabilities.

    vectorizer.nationality_vocab.lookup_index(8)

    def predict_topk_nationality(name, classifier, vectorizer, k=5):
        vectorized_name = vectorizer.vectorize(name)
        vectorized_name = torch.tensor(vectorized_name).view(1, -1)
        prediction_vector = classifier(vectorized_name, apply_softmax=True)
        # torch.topk returns the k largest probabilities and their indices
        probability_values, indices = torch.topk(prediction_vector, k=k)

        # returned size is 1,k
        probability_values = probability_values.detach().numpy()[0]
        indices = indices.detach().numpy()[0]

        results = []
        for prob_value, index in zip(probability_values, indices):
            nationality = vectorizer.nationality_vocab.lookup_index(index)
            results.append({'nationality': nationality,
                            'probability': prob_value})

        return results


    new_surname = input("Enter a surname to classify: ")
    classifier = classifier.to("cpu")

    k = int(input("How many of the top predictions to see? "))
    if k > len(vectorizer.nationality_vocab):
        print("Sorry! That's more than the # of nationalities we have.. defaulting you to max size :)")
        k = len(vectorizer.nationality_vocab)

    predictions = predict_topk_nationality(new_surname, classifier, vectorizer, k=k)

    print("Top {} predictions:".format(k))
    print("===================")
    for prediction in predictions:
        print("{} -> {} (p={:0.2f})".format(new_surname,
                                            prediction['nationality'],
                                            prediction['probability']))

Output:

    Enter a surname to classify: McMahan
    How many of the top predictions to see? 5
    Top 5 predictions:
    ===================
    McMahan -> Irish (p=0.41)
    McMahan -> Scottish (p=0.25)
    McMahan -> Czech (p=0.08)
    McMahan -> Vietnamese (p=0.06)
    McMahan -> German (p=0.05)
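The top-k selection itself is simple to express without PyTorch: sort the class indices by probability and keep the first k. The sketch below does this in plain Python. The function name `top_k` is made up here, and the labels and probabilities are the five values printed in the output above, listed in an arbitrary order.

```python
def top_k(probabilities, labels, k):
    # Indices sorted by descending probability, truncated to k entries.
    order = sorted(range(len(probabilities)),
                   key=lambda i: probabilities[i], reverse=True)
    return [(labels[i], probabilities[i]) for i in order[:k]]

labels = ["Czech", "German", "Irish", "Scottish", "Vietnamese"]
probs = [0.08, 0.05, 0.41, 0.25, 0.06]

best = top_k(probs, labels, k=3)
for label, prob in best:
    print("{} (p={:0.2f})".format(label, prob))
```

Looking at several candidates rather than only the first is useful for this task, since the top two classes here (Irish and Scottish) together account for most of the probability mass.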

2. CNN

    from argparse import Namespace
    from collections import Counter
    import json
    import os
    import string

    import numpy as np
    import pandas as pd
    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torch.optim as optim
    from torch.utils.data import Dataset, DataLoader
    from tqdm import tqdm_notebook

class SurnameVectorizer(object):

""" The Vectorizer which coordinates the Vocabularies and puts them to use"""

def __init__(self, surname_vocab, nationality_vocab, max_surname_length):

"""

Args:

surname_vocab (Vocabulary): maps characters to integers

nationality_vocab (Vocabulary): maps nationalities to integers

max_surname_length (int): the length of the longest surname

"""

self.surname_vocab = surname_vocab

self.nationality_vocab = nationality_vocab

self._max_surname_length = max_surname_length

def vectorize(self, surname):

"""

Args:

surname (str): the surname

Returns:

one_hot_matrix (np.ndarray): a matrix of one-hot vectors

"""

one_hot_matrix_size = (len(self.surname_vocab), self._max_surname_length)

one_hot_matrix = np.zeros(one_hot_matrix_size, dtype=np.float32)

for position_index, character in enumerate(surname):

character_index = self.surname_vocab.lookup_token(character)

one_hot_matrix[character_index][position_index] = 1

return one_hot_matrix

@classmethod

def from_dataframe(cls, surname_df):

"""Instantiate the vectorizer from the dataset dataframe

Args:

surname_df (pandas.DataFrame): the surnames dataset

Returns:

an instance of the SurnameVectorizer

"""

surname_vocab = Vocabulary(unk_token="@")

nationality_vocab = Vocabulary(add_unk=False)

max_surname_length = 0

for index, row in surname_df.iterrows():

max_surname_length = max(max_surname_length, len(row.surname))

for letter in row.surname:

surname_vocab.add_token(letter)

nationality_vocab.add_token(row.nationality)

return cls(surname_vocab, nationality_vocab, max_surname_length)

@classmethod

def from_serializable(cls, contents):

surname_vocab = Vocabulary.from_serializable(contents['surname_vocab'])

nationality_vocab = Vocabulary.from_serializable(contents['nationality_vocab'])

return cls(surname_vocab=surname_vocab, nationality_vocab=nationality_vocab,

max_surname_length=contents['max_surname_length'])

def to_serializable(self):

return {'surname_vocab': self.surname_vocab.to_serializable(),

'nationality_vocab': self.nationality_vocab.to_serializable(),

'max_surname_length': self._max_surname_length}

class SurnameDataset(Dataset):

def __init__(self, surname_df, vectorizer):

"""

Args:

surname_df (pandas.DataFrame): the dataset

vectorizer (SurnameVectorizer): vectorizer instantiated from dataset

"""

self.surname_df = surname_df

self._vectorizer = vectorizer

self.train_df = self.surname_df[self.surname_df.split == 'train']

self.train_size = len(self.train_df)

self.val_df = self.surname_df[self.surname_df.split == 'val']

self.validation_size = len(self.val_df)

self.test_df = self.surname_df[self.surname_df.split == 'test']

self.test_size = len(self.test_df)

self._lookup_dict = {'train': (self.train_df, self.train_size),

'val': (self.val_df, self.validation_size),

'test': (self.test_df, self.test_size)}

self.set_split('train')

# Class weights

class_counts = surname_df.nationality.value_counts().to_dict()

def sort_key(item):

return self._vectorizer.nationality_vocab.lookup_token(item[0])

sorted_counts = sorted(class_counts.items(), key=sort_key)

frequencies = [count for _, count in sorted_counts]

self.class_weights = 1.0 / torch.tensor(frequencies, dtype=torch.float32)

@classmethod

def load_dataset_and_make_vectorizer(cls, surname_csv):

"""Load dataset and make a new vectorizer from scratch

Args:

surname_csv (str): location of the dataset

Returns:

an instance of SurnameDataset

"""

surname_df = pd.read_csv(surname_csv)

train_surname_df = surname_df[surname_df.split == 'train']

return cls(surname_df, SurnameVectorizer.from_dataframe(train_surname_df))

@classmethod

def load_dataset_and_load_vectorizer(cls, surname_csv, vectorizer_filepath):

"""Load dataset and the corresponding vectorizer.

Used in the case that the vectorizer has been cached for re-use

Args:

surname_csv (str): location of the dataset

vectorizer_filepath (str): location of the saved vectorizer

Returns:

an instance of SurnameDataset

"""

surname_df = pd.read_csv(surname_csv)

vectorizer = cls.load_vectorizer_only(vectorizer_filepath)

return cls(surname_df, vectorizer)

@staticmethod

def load_vectorizer_only(vectorizer_filepath):

"""a static method for loading the vectorizer from file

Args:

vectorizer_filepath (str): the location of the serialized vectorizer

Returns:

an instance of SurnameVectorizer

"""

with open(vectorizer_filepath) as fp:

return SurnameVectorizer.from_serializable(json.load(fp))

def save_vectorizer(self, vectorizer_filepath):

"""saves the vectorizer to disk using json

Args:

vectorizer_filepath (str): the location to save the vectorizer

"""

with open(vectorizer_filepath, "w") as fp:

json.dump(self._vectorizer.to_serializable(), fp)

def get_vectorizer(self):

""" returns the vectorizer """

return self._vectorizer

def set_split(self, split="train"):

""" selects the splits in the dataset using a column in the dataframe """

self._target_split = split

self._target_df, self._target_size = self._lookup_dict[split]

def __len__(self):

return self._target_size

def __getitem__(self, index):

"""the primary entry point method for PyTorch datasets

Args:

index (int): the index to the data point

Returns:

a dictionary holding the data point's features (x_data) and label (y_target)

"""

row = self._target_df.iloc[index]

surname_matrix = \

self._vectorizer.vectorize(row.surname)

nationality_index = \

self._vectorizer.nationality_vocab.lookup_token(row.nationality)

return {'x_surname': surname_matrix,

'y_nationality': nationality_index}

def get_num_batches(self, batch_size):

"""Given a batch size, return the number of batches in the dataset

Args:

batch_size (int)

Returns:

number of batches in the dataset

"""

return len(self) // batch_size

def generate_batches(dataset, batch_size, shuffle=True,

drop_last=True, device="cpu"):

"""

A generator function which wraps the PyTorch DataLoader. It will

ensure each tensor is on the right device location.

"""

dataloader = DataLoader(dataset=dataset, batch_size=batch_size,

shuffle=shuffle, drop_last=drop_last)

for data_dict in dataloader:

out_data_dict = {}

for name, tensor in data_dict.items():

out_data_dict[name] = data_dict[name].to(device)

yield out_data_dict
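To make the vectorizer's output concrete, here is a minimal pure-Python sketch of the one-hot matrix that `vectorize` builds: one row per vocabulary entry, one column per character position. The toy vocabulary and the helper name `one_hot_matrix` are hypothetical and only serve as an illustration; the real code uses `np.zeros` and the `Vocabulary` class.

```python
def one_hot_matrix(surname, token_to_idx, max_len, unk_token="@"):
    """Return a (vocab_size x max_len) nested list with a single 1.0 per used column."""
    matrix = [[0.0] * max_len for _ in range(len(token_to_idx))]
    for position, character in enumerate(surname):
        # Unknown characters fall back to the UNK row, as lookup_token does.
        row = token_to_idx.get(character, token_to_idx[unk_token])
        matrix[row][position] = 1.0
    return matrix


# Hypothetical toy vocabulary; index 0 is the unknown-character token.
toy_vocab = {"@": 0, "L": 1, "e": 2}
matrix = one_hot_matrix("Lee", toy_vocab, max_len=4)
for row in matrix:
    print(row)
```

Columns beyond the surname's length stay all-zero, which is how shorter surnames are padded up to `max_surname_length`.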

Define the neural network model for the surname classifier

class SurnameClassifier(nn.Module):

def __init__(self, initial_num_channels, num_classes, num_channels):

"""

Args:

initial_num_channels (int): size of the incoming feature vector

num_classes (int): size of the output prediction vector

num_channels (int): constant channel size to use throughout network

"""

super(SurnameClassifier, self).__init__()

self.convnet = nn.Sequential(

nn.Conv1d(in_channels=initial_num_channels,

out_channels=num_channels, kernel_size=3),

nn.ELU(),

nn.Conv1d(in_channels=num_channels, out_channels=num_channels,

kernel_size=3, stride=2),

nn.ELU(),

nn.Conv1d(in_channels=num_channels, out_channels=num_channels,

kernel_size=3, stride=2),

nn.ELU(),

nn.Conv1d(in_channels=num_channels, out_channels=num_channels,

kernel_size=3),

nn.ELU()

)

self.fc = nn.Linear(num_channels, num_classes)

def forward(self, x_surname, apply_softmax=False):

"""The forward pass of the classifier

Args:

x_surname (torch.Tensor): an input data tensor.

x_surname.shape should be (batch, initial_num_channels, max_surname_length)

apply_softmax (bool): a flag for the softmax activation

should be false if used with the Cross Entropy losses

Returns:

the resulting tensor. tensor.shape should be (batch, num_classes)

"""

features = self.convnet(x_surname).squeeze(dim=2)

prediction_vector = self.fc(features)

if apply_softmax:

prediction_vector = F.softmax(prediction_vector, dim=1)

return prediction_vector
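The `squeeze(dim=2)` in `forward` only removes the length dimension if the convolution stack shrinks the sequence to length 1. The sketch below traces the sequence length through the four `Conv1d` layers using the standard output-length formula. The input length of 17 is an assumption about the dataset's longest surname, not a value stated in this post.

```python
def conv1d_out_len(length, kernel_size, stride=1, padding=0, dilation=1):
    """Output length of a 1-D convolution (the formula documented for nn.Conv1d)."""
    return (length + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# (kernel_size, stride) of the four Conv1d layers in SurnameClassifier.
LAYERS = [(3, 1), (3, 2), (3, 2), (3, 1)]


def trace_lengths(input_len):
    """Return the sequence length before and after every convolution."""
    lengths = [input_len]
    for kernel_size, stride in LAYERS:
        lengths.append(conv1d_out_len(lengths[-1], kernel_size, stride))
    return lengths


print(trace_lengths(17))  # assumed max_surname_length
print(trace_lengths(25))  # a longer input leaves a length greater than 1
```

With an input length of 17 the trace ends at 1, so the squeeze yields a `(batch, num_channels)` tensor; with 25 it ends at 3, and the following `nn.Linear` would then receive a tensor of the wrong shape.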

Create and return a dictionary that holds the training state

def make_train_state(args):

return {'stop_early': False,

'early_stopping_step': 0,

'early_stopping_best_val': 1e8,

'learning_rate': args.learning_rate,

'epoch_index': 0,

'train_loss': [],

'train_acc': [],

'val_loss': [],

'val_acc': [],

'test_loss': -1,

'test_acc': -1,

'model_filename': args.model_state_file}

Manage and update the training state

def update_train_state(args, model, train_state):
    """Handle the training state updates.

    Components:
     - Early Stopping: Prevent overfitting.
     - Model Checkpoint: Model is saved if the model is better

    :param args: main arguments
    :param model: model to train
    :param train_state: a dictionary representing the training state values
    :returns:
        a new train_state
    """
    # Save one model at least
    if train_state['epoch_index'] == 0:
        torch.save(model.state_dict(), train_state['model_filename'])
        train_state['stop_early'] = False

    # Save model if performance improved
    elif train_state['epoch_index'] >= 1:
        loss_tm1, loss_t = train_state['val_loss'][-2:]

        # If loss worsened
        if loss_t >= train_state['early_stopping_best_val']:
            # Update step
            train_state['early_stopping_step'] += 1
        # Loss decreased
        else:
            # Save the best model and remember its validation loss;
            # without this update the threshold stays at 1e8 and
            # early stopping can never trigger
            torch.save(model.state_dict(), train_state['model_filename'])
            train_state['early_stopping_best_val'] = loss_t

            # Reset early stopping step
            train_state['early_stopping_step'] = 0

        # Stop early ?
        train_state['stop_early'] = \
            train_state['early_stopping_step'] >= args.early_stopping_criteria

    return train_state
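The early-stopping rule can be isolated from PyTorch and checked on a plain list of validation losses. This is a minimal sketch, assuming the usual "patience" formulation (stop after a given number of consecutive epochs without improvement); the function name and the sample losses are made up for illustration.

```python
def early_stopping_trace(val_losses, patience):
    """Return (epoch at which training stops, best validation loss seen)."""
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss        # new best: this is where a checkpoint would be saved
            bad_epochs = 0
        else:
            bad_epochs += 1    # no improvement in this epoch
        if bad_epochs >= patience:
            return epoch, best
    return len(val_losses) - 1, best


stop_epoch, best_loss = early_stopping_trace(
    [1.0, 0.8, 0.9, 0.85, 0.95, 0.7], patience=3)
print(stop_epoch, best_loss)
```

Note that the run stops before the last value (0.7) is ever seen; a larger patience trades extra epochs for a smaller chance of stopping too early.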

Compute the prediction accuracy

def compute_accuracy(y_pred, y_target):

y_pred_indices = y_pred.max(dim=1)[1]

n_correct = torch.eq(y_pred_indices, y_target).sum().item()

return n_correct / len(y_pred_indices) * 100

Configure and prepare the training environment for the convolutional neural network (CNN) surname classifier

args = Namespace(

# Data and Path information

surname_csv="surnames_with_splits.csv",

vectorizer_file="vectorizer.json",

model_state_file="model.pth",

save_dir="model_storage/ch4/cnn",

# Model hyper parameters

hidden_dim=100,

num_channels=256,

# Training hyper parameters

seed=1337,

learning_rate=0.001,

batch_size=128,

num_epochs=100,

early_stopping_criteria=5,

dropout_p=0.1,

# Runtime options

cuda=False,

reload_from_files=False,

expand_filepaths_to_save_dir=True,

catch_keyboard_interrupt=True

)

if args.expand_filepaths_to_save_dir:

args.vectorizer_file = os.path.join(args.save_dir,

args.vectorizer_file)

args.model_state_file = os.path.join(args.save_dir,

args.model_state_file)

print("Expanded filepaths: ")

print("\t{}".format(args.vectorizer_file))

print("\t{}".format(args.model_state_file))

# Check CUDA

if not torch.cuda.is_available():

args.cuda = False

args.device = torch.device("cuda" if args.cuda else "cpu")

print("Using CUDA: {}".format(args.cuda))

def set_seed_everywhere(seed, cuda):

np.random.seed(seed)

torch.manual_seed(seed)

if cuda:

torch.cuda.manual_seed_all(seed)

def handle_dirs(dirpath):

if not os.path.exists(dirpath):

os.makedirs(dirpath)

# Set seed for reproducibility

set_seed_everywhere(args.seed, args.cuda)

# handle dirs

handle_dirs(args.save_dir)

Output:

Expanded filepaths:
	model_storage/ch4/cnn/vectorizer.json
	model_storage/ch4/cnn/model.pth
Using CUDA: False

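Prepare and initialize training: the dataset, the model, the loss function, the optimizer, and the learning-rate scheduler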

if args.reload_from_files:

# training from a checkpoint

dataset = SurnameDataset.load_dataset_and_load_vectorizer(args.surname_csv,

args.vectorizer_file)

else:

# create dataset and vectorizer

dataset = SurnameDataset.load_dataset_and_make_vectorizer(args.surname_csv)

dataset.save_vectorizer(args.vectorizer_file)

vectorizer = dataset.get_vectorizer()

classifier = SurnameClassifier(initial_num_channels=len(vectorizer.surname_vocab),

num_classes=len(vectorizer.nationality_vocab),

num_channels=args.num_channels)

classifier = classifier.to(args.device)

dataset.class_weights = dataset.class_weights.to(args.device)

loss_func = nn.CrossEntropyLoss(weight=dataset.class_weights)

optimizer = optim.Adam(classifier.parameters(), lr=args.learning_rate)

scheduler = optim.lr_scheduler.ReduceLROnPlateau(optimizer=optimizer,

mode='min', factor=0.5,

patience=1)

train_state = make_train_state(args)

Build the complete training and validation loop

epoch_bar = tqdm_notebook(desc='training routine',

total=args.num_epochs,

position=0)

dataset.set_split('train')

train_bar = tqdm_notebook(desc='split=train',

total=dataset.get_num_batches(args.batch_size),

position=1,

leave=True)

dataset.set_split('val')

val_bar = tqdm_notebook(desc='split=val',

total=dataset.get_num_batches(args.batch_size),

position=1,

leave=True)

try:

for epoch_index in range(args.num_epochs):

train_state['epoch_index'] = epoch_index

# Iterate over training dataset

# setup: batch generator, set loss and acc to 0, set train mode on

dataset.set_split('train')

batch_generator = generate_batches(dataset,

batch_size=args.batch_size,

device=args.device)

running_loss = 0.0

running_acc = 0.0

classifier.train()

for batch_index, batch_dict in enumerate(batch_generator):

# the training routine is these 5 steps:

# --------------------------------------

# step 1. zero the gradients

optimizer.zero_grad()

# step 2. compute the output

y_pred = classifier(batch_dict['x_surname'])

# step 3. compute the loss

loss = loss_func(y_pred, batch_dict['y_nationality'])

loss_t = loss.item()

running_loss += (loss_t - running_loss) / (batch_index + 1)

# step 4. use loss to produce gradients

loss.backward()

# step 5. use optimizer to take gradient step

optimizer.step()

# -----------------------------------------

# compute the accuracy

acc_t = compute_accuracy(y_pred, batch_dict['y_nationality'])

running_acc += (acc_t - running_acc) / (batch_index + 1)

# update bar

train_bar.set_postfix(loss=running_loss, acc=running_acc,

epoch=epoch_index)

train_bar.update()

train_state['train_loss'].append(running_loss)

train_state['train_acc'].append(running_acc)

# Iterate over val dataset

# setup: batch generator, set loss and acc to 0; set eval mode on

dataset.set_split('val')

batch_generator = generate_batches(dataset,

batch_size=args.batch_size,

device=args.device)

running_loss = 0.

running_acc = 0.

classifier.eval()

for batch_index, batch_dict in enumerate(batch_generator):

# compute the output

y_pred = classifier(batch_dict['x_surname'])

# step 3. compute the loss

loss = loss_func(y_pred, batch_dict['y_nationality'])

loss_t = loss.item()

running_loss += (loss_t - running_loss) / (batch_index + 1)

# compute the accuracy

acc_t = compute_accuracy(y_pred, batch_dict['y_nationality'])

running_acc += (acc_t - running_acc) / (batch_index + 1)

val_bar.set_postfix(loss=running_loss, acc=running_acc,

epoch=epoch_index)

val_bar.update()

train_state['val_loss'].append(running_loss)

train_state['val_acc'].append(running_acc)

train_state = update_train_state(args=args, model=classifier,

train_state=train_state)

scheduler.step(train_state['val_loss'][-1])

if train_state['stop_early']:

break

train_bar.n = 0

val_bar.n = 0

epoch_bar.update()

except KeyboardInterrupt:

print("Exiting loop")
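The loop above tracks `running_loss` and `running_acc` with the update `running += (value - running) / (batch_index + 1)`. A short sketch, using made-up batch losses, shows that this incremental rule reproduces the ordinary arithmetic mean without storing the individual values.

```python
def running_mean(values):
    """Incrementally updated mean, using the same update rule as the training loop."""
    running = 0.0
    for index, value in enumerate(values):
        running += (value - running) / (index + 1)
    return running


batch_losses = [2.0, 1.0, 3.0, 2.0]  # hypothetical per-batch losses
incremental = running_mean(batch_losses)
direct = sum(batch_losses) / len(batch_losses)
print(incremental, direct)
```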

Evaluate the model on the test set and compute the test loss and accuracy

classifier.load_state_dict(torch.load(train_state['model_filename']))

classifier = classifier.to(args.device)

dataset.class_weights = dataset.class_weights.to(args.device)

loss_func = nn.CrossEntropyLoss(dataset.class_weights)

dataset.set_split('test')

batch_generator = generate_batches(dataset,

batch_size=args.batch_size,

device=args.device)

running_loss = 0.

running_acc = 0.

classifier.eval()

for batch_index, batch_dict in enumerate(batch_generator):

# compute the output

y_pred = classifier(batch_dict['x_surname'])

# compute the loss

loss = loss_func(y_pred, batch_dict['y_nationality'])

loss_t = loss.item()

running_loss += (loss_t - running_loss) / (batch_index + 1)

# compute the accuracy

acc_t = compute_accuracy(y_pred, batch_dict['y_nationality'])

running_acc += (acc_t - running_acc) / (batch_index + 1)

train_state['test_loss'] = running_loss

train_state['test_acc'] = running_acc

print("Test loss: {};".format(train_state['test_loss']))

print("Test Accuracy: {}".format(train_state['test_acc']))

Output:

Test loss: 1.9216371824343998;
Test Accuracy: 60.7421875

Predict the most likely nationality for an input surname and return it together with its probability

def predict_nationality(surname, classifier, vectorizer):

"""Predict the nationality from a new surname

Args:

surname (str): the surname to classify

classifier (SurnameClassifier): an instance of the classifier

vectorizer (SurnameVectorizer): the corresponding vectorizer

Returns:

a dictionary with the most likely nationality and its probability

"""

vectorized_surname = vectorizer.vectorize(surname)

vectorized_surname = torch.tensor(vectorized_surname).unsqueeze(0)

result = classifier(vectorized_surname, apply_softmax=True)

probability_values, indices = result.max(dim=1)

index = indices.item()

predicted_nationality = vectorizer.nationality_vocab.lookup_index(index)

probability_value = probability_values.item()

return {'nationality': predicted_nationality, 'probability': probability_value}

new_surname = input("Enter a surname to classify: ")

classifier = classifier.cpu()

prediction = predict_nationality(new_surname, classifier, vectorizer)

print("{} -> {} (p={:0.2f})".format(new_surname,

prediction['nationality'],

prediction['probability']))

Output:

Enter a surname to classify: McMahan
McMahan -> Irish (p=1.00)

III. References

Attention-Based Machine Translation

一、编码器—解码器

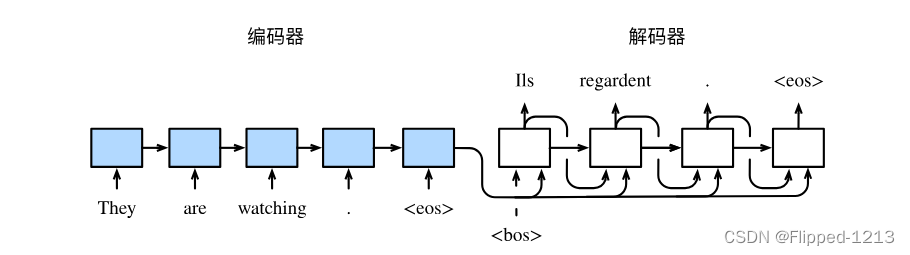

当输入和输出都是不定长序列时,我们可以使用编码器—解码器(encoder-decoder) 或者seq2seq模型。这两个模型本质上都用到了两个循环神经网络,分别叫做编码器和解码器。编码器用来分析输入序列,解码器用来生成输出序列。

例子:

英语输入:“They”、“are”、“watching”、“.”

法语输出:“Ils”、“regardent”、“.”

图1描述了使用编码器—解码器将英语句子翻译成法语句子的一种方法。在训练数据集中,我们可以在每个句子后附上特殊符号“<eos>”(end of sequence)以表示序列的终止。编码器每个时间步的输入依次为英语句子中的单词、标点和特殊符号“<eos>”。图10.8中使用了编码器在最终时间步的隐藏状态作为输入句子的表征或编码信息。解码器在各个时间步中使用输入句子的编码信息和上个时间步的输出以及隐藏状态作为输入。我们希望解码器在各个时间步能正确依次输出翻译后的法语单词、标点和特殊符号"<eos>"。需要注意的是,解码器在最初时间步的输入用到了一个表示序列开始的特殊符号"<bos>"(beginning of sequence)。

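1. Encoder

The encoder transforms a variable-length input sequence into a fixed-length context variable $c$ and encodes the information of the input sequence in it. A recurrent neural network is a common choice of encoder.

Consider a time-series sample with batch size 1. Suppose the input sequence is $x_1, \ldots, x_T$, where, for example, $x_i$ is the $i$-th word of the input sentence. At time step $t$, the recurrent neural network transforms the feature vector $x_t$ of the input and the hidden state $h_{t-1}$ of the previous time step into the hidden state $h_t$ of the current time step. We can express the transformation of the recurrent hidden layer with a function $f$:

$$h_t = f(x_t, h_{t-1}).$$

Next, the encoder uses a custom function $q$ to transform the hidden states of all time steps into the context variable:

$$c = q(h_1, \ldots, h_T).$$

For example, choosing $q(h_1, \ldots, h_T) = h_T$ makes the context variable the hidden state $h_T$ of the final time step of the input sequence.

The encoder described above is a unidirectional recurrent neural network: the hidden state at each time step depends only on the input subsequence up to and including that time step. We can also build the encoder from a bidirectional recurrent neural network. In that case, the hidden state at each time step depends on the subsequences both before and after that time step (including the current input), so it encodes information about the whole sequence.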

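2. Decoder

Given the output sequence $y_1, y_2, \ldots, y_{T'}$ of a training sample, for each time step $t'$ (the symbol differs from the time step $t$ of the input sequence or encoder), the conditional probability of the decoder output $y_{t'}$ is based on the previous outputs $y_1, \ldots, y_{t'-1}$ and the context variable $c$, that is, $P(y_{t'} \mid y_1, \ldots, y_{t'-1}, c)$.

To this end, we can use another recurrent neural network as the decoder. At time step $t'$ of the output sequence, the decoder takes the output $y_{t'-1}$ of the previous time step and the context variable $c$ as input, and transforms them together with the previous hidden state $s_{t'-1}$ into the hidden state $s_{t'}$ of the current time step. We can therefore express the transformation of the decoder's hidden layer with a function $g$:

$$s_{t'} = g(y_{t'-1}, c, s_{t'-1}).$$

With the decoder's hidden state available, we can use a custom output layer and a softmax operation to compute $P(y_{t'} \mid y_1, \ldots, y_{t'-1}, c)$, for example by computing the probability distribution of the current output $y_{t'}$ from the decoder hidden state $s_{t'}$ of the current time step, the output $y_{t'-1}$ of the previous time step, and the context variable $c$.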

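II. Beam Search

2.1 Greedy search

At any time step $t'$ of the output sequence, greedy search picks, from the $|\mathcal{Y}|$ words of the output vocabulary $\mathcal{Y}$, the word with the highest conditional probability

$$y_{t'} = \operatorname{argmax}_{y \in \mathcal{Y}} P(y \mid y_1, \ldots, y_{t'-1}, c)$$

as the output. The output is complete as soon as the symbol "<eos>" is found or the output sequence reaches the maximum length $T'$.

As mentioned when describing the decoder, the conditional probability of generating an output sequence from the input sequence is $\prod_{t'=1}^{T'} P(y_{t'} \mid y_1, \ldots, y_{t'-1}, c)$. We call the output sequence that maximizes this conditional probability the optimal output sequence. The main problem with greedy search is that it is not guaranteed to find the optimal output sequence.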

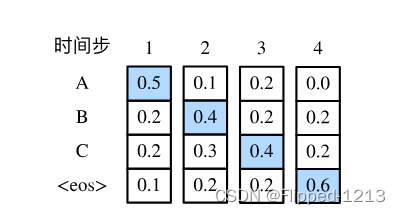

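Let us look at an example. Suppose the output vocabulary contains the four words "A", "B", "C", and "<eos>". The four numbers under each time step in Figure 2.1 are the conditional probabilities of generating "A", "B", "C", and "<eos>" at that time step. At every time step, greedy search selects the word with the highest conditional probability, so Figure 2.1 generates the output sequence "A", "B", "C", "<eos>". The conditional probability of this output sequence is 0.5×0.4×0.4×0.6=0.048.

Next, consider the example in Figure 2.2. Unlike Figure 2.1, Figure 2.2 selects the word with the second-highest conditional probability, "C", at time step 2. Because the output subsequence of time steps 1 and 2, on which time step 3 is based, changes from "A", "B" in Figure 2.1 to "A", "C" in Figure 2.2, the conditional probabilities of the words at time step 3 change as well. We select the word with the highest conditional probability, "B". The output subsequence of the first three time steps, on which time step 4 is based, is now "A", "C", "B", which differs from "A", "B", "C" in Figure 2.1. The conditional probabilities at time step 4 in Figure 2.2 therefore also differ from those in Figure 2.1. The conditional probability of the output sequence "A", "C", "B", "<eos>" is 0.5×0.3×0.6×0.6=0.054, which is greater than that of the sequence found by greedy search. Hence the sequence "A", "B", "C", "<eos>" found by greedy search is not the optimal output sequence.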

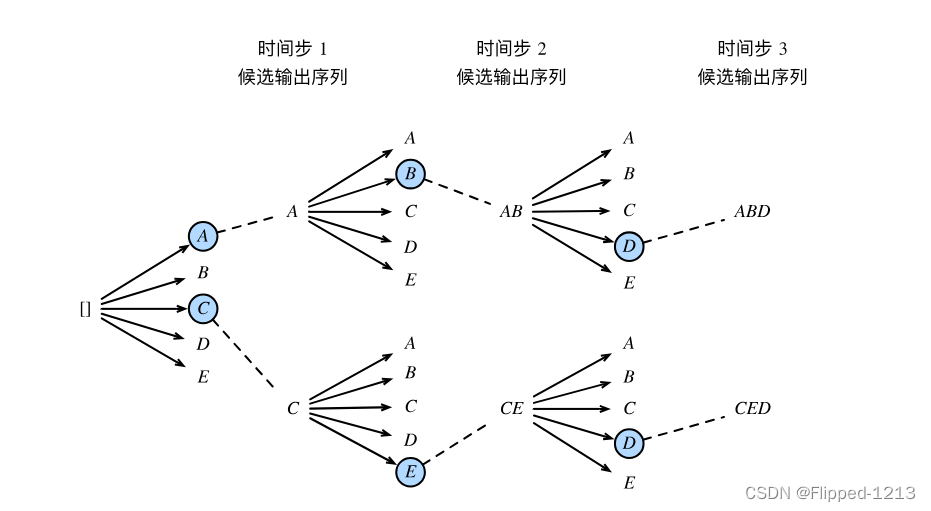

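2.2 Beam search

Beam search is an improvement on greedy search. It has a hyperparameter called the beam size, which we denote by $k$. At time step 1, we select the $k$ words with the highest conditional probability at that time step; they become the first words of $k$ candidate output sequences. At each subsequent time step, based on the $k$ candidate output sequences of the previous time step, we select from the $k|\mathcal{Y}|$ possible output sequences the $k$ with the highest conditional probability as the candidate output sequences of that time step. Finally, from the candidate output sequences of all time steps we keep those that contain the special symbol "<eos>" and discard everything after "<eos>" in each of them, which gives the set of final candidate output sequences.

Figure 10.11 demonstrates beam search with an example. Suppose the vocabulary of the output sequence contains only five elements, $\mathcal{Y} = \{A, B, C, D, E\}$, one of which is the special symbol "<eos>". Let the beam size be 2 and the maximum output length be 3. At time step 1 of the output sequence, suppose the two words with the highest conditional probability $P(y_1 \mid c)$ are $A$ and $C$. At time step 2, we compute $P(y_2 \mid A, c)$ and $P(y_2 \mid C, c)$ for all $y_2 \in \mathcal{Y}$ and take the two largest of the 10 resulting conditional probabilities, say $P(B \mid A, c)$ and $P(E \mid C, c)$. At time step 3, we then compute $P(y_3 \mid A, B, c)$ and $P(y_3 \mid C, E, c)$ for all $y_3 \in \mathcal{Y}$ and again take the two largest of the 10 conditional probabilities, say $P(D \mid A, B, c)$ and $P(D \mid C, E, c)$. This gives six candidate output sequences: (1) $A$; (2) $C$; (3) $A$, $B$; (4) $C$, $E$; (5) $A$, $B$, $D$; and (6) $C$, $E$, $D$. From these six sequences we then derive the set of final candidate output sequences.

From the set of final candidate output sequences, we take the sequence with the highest value of the following score as the output sequence:

$$\frac{1}{L^\alpha} \log P(y_1, \ldots, y_L) = \frac{1}{L^\alpha} \sum_{t'=1}^{L} \log P(y_{t'} \mid y_1, \ldots, y_{t'-1}, c),$$

where $L$ is the length of the final candidate sequence and $\alpha$ is usually set to 0.75. The $L^\alpha$ in the denominator penalizes the larger number of log terms that longer sequences contribute to the score. The computational cost of beam search is $\mathcal{O}(k|\mathcal{Y}|T')$, which lies between the costs of greedy search and exhaustive search. Greedy search can be seen as beam search with beam size 1. Through the adjustable beam size $k$, beam search trades off computational cost against search quality.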
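The procedure can be sketched in a few lines of pure Python. This is a simplified illustration, not the implementation used later in this post: finished sequences are set aside as soon as they end in "<eos>", and the toy probability table is hypothetical except for the values taken from the greedy-search example (0.5, 0.4, 0.4, 0.6 and 0.5, 0.3, 0.6, 0.6). The names `beam_search`, `TABLE`, and `toy_next_probs` are assumptions made for the sketch.

```python
import math


def beam_search(next_probs, beam_size, max_len, eos="<eos>", alpha=0.75):
    """Return the (sequence, log-probability) pair with the best length-normalized score."""
    beams = [((), 0.0)]  # (prefix, summed log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for prefix, logp in beams:
            for token, p in next_probs(prefix).items():
                if p > 0:
                    candidates.append((prefix + (token,), logp + math.log(p)))
        candidates.sort(key=lambda item: item[1], reverse=True)
        beams = []
        for seq, logp in candidates[:beam_size]:
            # Sequences that end in <eos> leave the beam and become final candidates.
            (finished if seq[-1] == eos else beams).append((seq, logp))
        if not beams:
            break
    finished.extend(beams)  # sequences cut off at max_len
    return max(finished, key=lambda item: item[1] / len(item[0]) ** alpha)


# Hypothetical conditional probabilities; unlisted prefixes are uniform.
TABLE = {
    (): {"A": 0.5, "B": 0.1, "C": 0.3, "<eos>": 0.1},
    ("A",): {"A": 0.1, "B": 0.4, "C": 0.3, "<eos>": 0.2},
    ("A", "B"): {"A": 0.2, "B": 0.2, "C": 0.4, "<eos>": 0.2},
    ("A", "C"): {"A": 0.1, "B": 0.6, "C": 0.2, "<eos>": 0.1},
    ("A", "B", "C"): {"A": 0.2, "B": 0.1, "C": 0.1, "<eos>": 0.6},
    ("A", "C", "B"): {"A": 0.2, "B": 0.2, "C": 0.0, "<eos>": 0.6},
}
UNIFORM = {"A": 0.25, "B": 0.25, "C": 0.25, "<eos>": 0.25}


def toy_next_probs(prefix):
    return TABLE.get(prefix, UNIFORM)


greedy = beam_search(toy_next_probs, beam_size=1, max_len=4)
beam = beam_search(toy_next_probs, beam_size=2, max_len=4)
print(greedy[0], round(math.exp(greedy[1]), 3))
print(beam[0], round(math.exp(beam[1]), 3))
```

On this toy table, beam size 1 reproduces the greedy sequence with probability 0.048, while beam size 2 keeps the "A", "C" prefix alive long enough to find the sequence with probability 0.054.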

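III. Attention Mechanism

Taking recurrent neural networks as an example, the attention mechanism obtains the context variable as a weighted average of the encoder's hidden states over all time steps. The decoder adjusts these weights, called attention weights, at every time step, so that it can focus on different parts of the input sequence at different time steps and encode them into the context variable of the corresponding time step.

3.1 Computing the context variable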

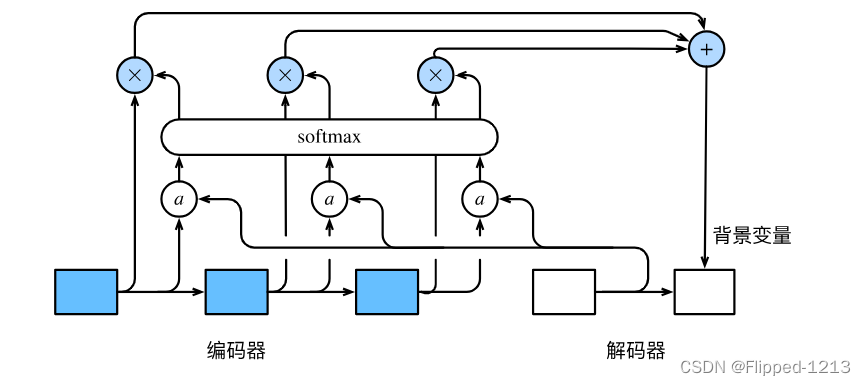

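Figure 3.1 depicts how the attention mechanism computes the context variable for the decoder at time step 2. First, the function $a$ computes the inputs of the softmax operation from the decoder's hidden state at time step 1 and the encoder's hidden states at all time steps. The softmax operation outputs a probability distribution, which is used to take a weighted average of the encoder's hidden states and thereby obtain the context variable.

Specifically, let $h_t$ be the encoder's hidden state at time step $t$ and $T$ the total number of time steps. The context variable of the decoder at time step $t'$ is then the weighted average of all encoder hidden states:

$$c_{t'} = \sum_{t=1}^{T} \alpha_{t't} h_t,$$

where, for a given $t'$, the weights $\alpha_{t't}$ over $t = 1, \ldots, T$ form a probability distribution. To obtain a probability distribution, we can use the softmax operation:

$$\alpha_{t't} = \frac{\exp(e_{t't})}{\sum_{k=1}^{T} \exp(e_{t'k})}, \quad t = 1, \ldots, T.$$

Now we need to define how to compute the softmax input $e_{t't}$ in the expression above. Since $e_{t't}$ depends on both the decoder time step $t'$ and the encoder time step $t$, we take the decoder hidden state $s_{t'-1}$ at time step $t'-1$ and the encoder hidden state $h_t$ at time step $t$ as inputs and compute $e_{t't}$ with a function $a$:

$$e_{t't} = a(s_{t'-1}, h_t).$$

There are many choices for the function $a$. If the two input vectors have the same length, a simple choice is their inner product $a(s, h) = s^\top h$. The paper that first proposed the attention mechanism instead concatenates the inputs and passes them through a multilayer perceptron with a single hidden layer [1]:

$$a(s, h) = v^\top \tanh(W_s s + W_h h),$$

where $v$, $W_s$, and $W_h$ are learnable model parameters.

The attention mechanism can also be computed more efficiently in vectorized form. Broadly speaking, the inputs of an attention mechanism are a query and a set of keys and values in one-to-one correspondence, where the values are the items to be averaged. In the weighted average, the weight of a value is computed from the query and the key that corresponds to that value.

In the example above, the query is the decoder's hidden state, and the keys and values are both the encoder's hidden states. Consider a common simple case in which the encoder and the decoder both have $h$ hidden units and $a(s, h) = s^\top h$. Suppose we want to compute the context vector $c_{t'} \in \mathbb{R}^{h}$ from a single decoder hidden state $s_{t'-1} \in \mathbb{R}^{h}$ and all encoder hidden states $h_t \in \mathbb{R}^{h}$, $t = 1, \ldots, T$. We can set the query matrix $Q \in \mathbb{R}^{1 \times h}$ to $s_{t'-1}^\top$, and let the key matrix $K \in \mathbb{R}^{T \times h}$ and the value matrix $V \in \mathbb{R}^{T \times h}$ be identical, with $h_t^\top$ as their $t$-th row. We then only need the vectorized computation

$$\operatorname{softmax}(QK^\top)V$$

to obtain the transposed context vector $c_{t'}^\top$. When the query matrix $Q$ has $n$ rows, the expression yields an output matrix with $n$ rows, whose rows correspond one-to-one to the rows of the query matrix.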
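As a small numerical check of the weighted average, the following pure-Python sketch computes dot-product attention for a single query. It is only an illustration under the simple inner-product scoring function; the helper names and the toy encoder states are made up.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(query, keys, values):
    """Return (attention weights, context vector) for one query vector."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return weights, context


# Hypothetical encoder hidden states for T = 3 time steps, hidden size 2.
enc_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
dec_state = [0.0, 0.0]  # a zero query scores every key equally
weights, context = dot_product_attention(dec_state, enc_states, enc_states)
print(weights, context)
```

With a zero query all scores are equal, so the weights are uniform and the context vector is the plain average of the encoder states; a query aligned with one key would shift the weight toward it.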

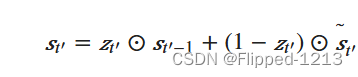

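3.2 Updating the hidden state

Taking the gated recurrent unit (GRU) as an example, we can slightly modify the GRU design in the decoder so that it transforms the output $y_{t'-1}$ of the previous time step $t'-1$, the hidden state $s_{t'-1}$, and the attention-based context variable $c_{t'}$ of the current time step $t'$. The decoder's hidden state at time step $t'$ is

$$s_{t'} = z_{t'} \odot s_{t'-1} + (1 - z_{t'}) \odot \tilde{s}_{t'},$$

where the reset gate, the update gate, and the candidate hidden state are

$$\begin{aligned}
r_{t'} &= \sigma(W_{yr} y_{t'-1} + W_{sr} s_{t'-1} + W_{cr} c_{t'} + b_r),\\
z_{t'} &= \sigma(W_{yz} y_{t'-1} + W_{sz} s_{t'-1} + W_{cz} c_{t'} + b_z),\\
\tilde{s}_{t'} &= \tanh(W_{ys} y_{t'-1} + W_{ss} (s_{t'-1} \odot r_{t'}) + W_{cs} c_{t'} + b_s),
\end{aligned}$$

where the subscripted $W$ and $b$ are the weight and bias parameters of the GRU.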

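IV. Machine Translation

Machine translation means automatically translating a piece of text from one language into another. Because a text sequence does not necessarily have the same length in different languages, we use machine translation as the example for applying the encoder-decoder and the attention mechanism.

4.1 Reading and preprocessing the data

We first define some special symbols. The "<pad>" (padding) symbol is appended to shorter sequences until all sequences have the same length, and the "<bos>" and "<eos>" symbols mark the beginning and the end of a sequence.

!tar -xf d2lzh_pytorch.tar  # extract the archive

Set up the environment needed to run the deep learning model: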

import collections

import os

import io

import math

import torch

from torch import nn

import torch.nn.functional as F

import torchtext.vocab as Vocab

import torch.utils.data as Data

import sys

# sys.path.append("..")

import d2lzh_pytorch as d2l

PAD, BOS, EOS = '<pad>', '<bos>', '<eos>'

os.environ["CUDA_VISIBLE_DEVICES"] = "0"

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

print(torch.__version__, device)

1.5.0 cpu

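Next, define two helper functions that preprocess the data read later.

# Record every token of a sequence in all_tokens so the vocabulary can be built later,
# then append EOS and pad with PAD until the sequence length is max_seq_len,
# and store the sequence in all_seqs
def process_one_seq(seq_tokens, all_tokens, all_seqs, max_seq_len):
    all_tokens.extend(seq_tokens)  # add this sequence's tokens to the global token list
    # append the EOS marker, then pad with PAD up to the maximum sequence length
    seq_tokens += [EOS] + [PAD] * (max_seq_len - len(seq_tokens) - 1)
    all_seqs.append(seq_tokens)  # add the processed sequence to the global sequence list

# Build the vocabulary from all tokens, then map the tokens of every sequence
# to their indices and build a Tensor
def build_data(all_tokens, all_seqs):
    vocab = Vocab.Vocab(collections.Counter(all_tokens),
                        specials=[PAD, BOS, EOS])
    indices = [[vocab.stoi[w] for w in seq] for seq in all_seqs]
    return vocab, torch.tensor(indices)  # token indices as a PyTorch tensor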

In this dataset, every line is a pair consisting of a French sentence and its English counterpart, separated by '\t'. When reading the data, we append the "<eos>" symbol to each sentence and, where needed, add "<pad>" symbols so that every sequence has length max_seq_len. We build separate vocabularies for the French words and the English words; the French and English indices are independent of each other.

def read_data(max_seq_len):
    # in and out are short for input and output
    in_tokens, out_tokens, in_seqs, out_seqs = [], [], [], []
    with io.open('fr-en-small.txt') as f:
        lines = f.readlines()  # read all lines of fr-en-small.txt
    for line in lines:
        in_seq, out_seq = line.rstrip().split('\t')  # split each line at the tab
        # split both sentences into lists of words
        in_seq_tokens, out_seq_tokens = in_seq.split(' '), out_seq.split(' ')
        if max(len(in_seq_tokens), len(out_seq_tokens)) > max_seq_len - 1:
            continue  # skip the sample if it would exceed max_seq_len once EOS is added
        # add the words to the token lists and the sequences to the sequence lists
        process_one_seq(in_seq_tokens, in_tokens, in_seqs, max_seq_len)
        process_one_seq(out_seq_tokens, out_tokens, out_seqs, max_seq_len)
    in_vocab, in_data = build_data(in_tokens, in_seqs)
    out_vocab, out_data = build_data(out_tokens, out_seqs)
    return in_vocab, out_vocab, Data.TensorDataset(in_data, out_data)

Set the maximum sequence length to 7 and look at the first sample that was read. It contains a French word-index sequence and an English word-index sequence.

max_seq_len = 7
in_vocab, out_vocab, dataset = read_data(max_seq_len)
dataset[0]

Output:

(tensor([ 5, 4, 45, 3, 2, 0, 0]), tensor([ 8, 4, 27, 3, 2, 0, 0]))
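The trailing `2, 0, 0` in both tensors are the indices of one "<eos>" followed by two "<pad>" symbols. The padding step itself can be sketched without torchtext; the helper name `pad_with_eos` is hypothetical and, unlike `process_one_seq`, it returns a new list instead of modifying its argument.

```python
PAD, BOS, EOS = "<pad>", "<bos>", "<eos>"


def pad_with_eos(tokens, max_seq_len):
    """Append EOS and pad with PAD so the result has exactly max_seq_len items."""
    if len(tokens) > max_seq_len - 1:
        raise ValueError("sequence too long once EOS is added")
    return tokens + [EOS] + [PAD] * (max_seq_len - len(tokens) - 1)


padded = pad_with_eos("ils regardent .".split(" "), 7)
print(padded)
```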

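4.2 Encoder-decoder

We will use an encoder-decoder with attention to translate a short French passage into English.

4.2.1 Encoder

In the encoder, we pass the word indices of the input language through a word-embedding layer to obtain word representations and feed them into a multi-layer gated recurrent unit. As mentioned in Section 6.5 (concise implementation of recurrent neural networks), a PyTorch nn.GRU instance returns, after the forward computation, both the output and the multi-layer hidden state of the final time step. The output here is the hidden state of the last hidden layer at every time step; no output-layer computation is involved. The attention mechanism uses these outputs as keys and values.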

class Encoder(nn.Module):
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                 drop_prob=0, **kwargs):
        super(Encoder, self).__init__(**kwargs)
        # word-embedding layer: maps word indices to dense vectors of fixed size
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.GRU(embed_size, num_hiddens, num_layers, dropout=drop_prob)

    def forward(self, inputs, state):
        # inputs has shape (batch size, time steps); cast it to long (word indices),
        # embed it, and swap the batch and time-step dimensions
        embedding = self.embedding(inputs.long()).permute(1, 0, 2)  # (seq_len, batch, input_size)
        return self.rnn(embedding, state)

    def begin_state(self):
        return None

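Next we create a mini-batch of sequence inputs with batch size 4 and 7 time steps. Let the GRU have 2 hidden layers and 16 hidden units. After the forward computation, the encoder returns an output of shape (time steps, batch size, hidden units). The multi-layer hidden state of the GRU at the final time step has shape (hidden layers, batch size, hidden units). For a GRU, state is a single element, the hidden state; with a long short-term memory (LSTM), state is a tuple of two elements, the hidden state and the memory cell.

encoder = Encoder(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
output, state = encoder(torch.zeros((4, 7)), encoder.begin_state())
output.shape, state.shape  # the GRU state is h, whereas the LSTM state is a tuple (h, c)

Output:

(torch.Size([7, 4, 16]), torch.Size([2, 4, 16]))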

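4.2.2 Attention mechanism

We now implement the function $a$ defined earlier: concatenate the inputs and pass them through a multilayer perceptron with a single hidden layer. The input of the hidden layer is the concatenation of the decoder's hidden state with each of the encoder's hidden states over all time steps, and tanh is used as the activation function. The output layer has a single output. Neither of the two Linear instances uses a bias. The length of the vector $v$ in the definition of $a$ is a hyperparameter, attention_size.

def attention_model(input_size, attention_size):
    # The first linear layer maps the input_size features to a hidden
    # representation of size attention_size; the second maps it to one score.
    # bias=False means the layers have no bias parameters.
    model = nn.Sequential(nn.Linear(input_size, attention_size, bias=False),
                          nn.Tanh(),
                          nn.Linear(attention_size, 1, bias=False))
    # return the whole model, wrapped in an nn.Sequential container
    return model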

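The inputs of the attention mechanism are the query, the keys, and the values. Assume the encoder and the decoder have the same number of hidden units. The query is the decoder's hidden state at the previous time step, with shape (batch size, hidden units); the keys and values are both the encoder's hidden states at all time steps, with shape (time steps, batch size, hidden units). The attention mechanism returns the context variable of the current time step, with shape (batch size, hidden units).

def attention_forward(model, enc_states, dec_state):
    """
    enc_states: (time steps, batch size, hidden units)
    dec_state: (batch size, hidden units)
    """
    # broadcast the decoder hidden state to the shape of the encoder hidden states, then concatenate
    dec_states = dec_state.unsqueeze(dim=0).expand_as(enc_states)
    enc_and_dec_states = torch.cat((enc_states, dec_states), dim=2)
    # model is the attention network that computes the scores; shape (time steps, batch size, 1)
    e = model(enc_and_dec_states)
    alpha = F.softmax(e, dim=0)  # softmax over the time-step dimension
    return (alpha * enc_states).sum(dim=0)  # return the context variable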

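In the following example, the encoder has 10 time steps, the batch size is 4, and both the encoder and the decoder have 8 hidden units. The attention mechanism returns a mini-batch of context vectors, each as long as the number of encoder hidden units, so the output has shape (4, 8).

seq_len, batch_size, num_hiddens = 10, 4, 8
# attention model: input size is twice the number of hidden units, attention_size is 10
model = attention_model(2*num_hiddens, 10)
enc_states = torch.zeros((seq_len, batch_size, num_hiddens))  # encoder hidden states
dec_state = torch.zeros((batch_size, num_hiddens))  # decoder hidden state
# run the attention forward pass and inspect the shape of the result
attention_forward(model, enc_states, dec_state).shape

Output:

torch.Size([4, 8])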

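4.2.3 Decoder with attention

We use the encoder's hidden state at the final time step directly as the decoder's initial hidden state. This requires the recurrent neural networks of the encoder and the decoder to have the same number of hidden layers and hidden units.

In the decoder's forward computation, we first use the attention mechanism just introduced to compute the context vector of the current time step. Since the decoder's input comes from the word indices of the output language, we pass the input through a word-embedding layer to obtain its representation and concatenate it with the context vector along the feature dimension. The concatenated result and the hidden state of the previous time step go through the GRU to produce the output and hidden state of the current time step. Finally, a fully connected layer transforms the output into predictions over the output words, with shape (batch size, output vocabulary size).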

# Decoder model
class Decoder(nn.Module):
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                 attention_size, drop_prob=0):
        super(Decoder, self).__init__()
        # word-embedding layer: maps word indices to dense vectors
        self.embedding = nn.Embedding(vocab_size, embed_size)
        # attention model: input size is twice the number of hidden units,
        # hidden size is attention_size
        self.attention = attention_model(2*num_hiddens, attention_size)
        # the GRU input is the attention output c plus the actual input,
        # so its size is num_hiddens + embed_size
        self.rnn = nn.GRU(num_hiddens + embed_size, num_hiddens,
                          num_layers, dropout=drop_prob)
        # linear layer that maps the GRU output to vocabulary-sized scores,
        # one per output word
        self.out = nn.Linear(num_hiddens, vocab_size)

    def forward(self, cur_input, state, enc_states):
        """
        cur_input shape: (batch, )
        state shape: (num_layers, batch, num_hiddens)
        """
        # compute the context vector with the attention mechanism
        c = attention_forward(self.attention, enc_states, state[-1])
        # concatenate the embedded input and the context vector along the
        # feature dimension: (batch size, num_hiddens + embed_size)
        input_and_c = torch.cat((self.embedding(cur_input), c), dim=1)
        # add a time-step dimension of size 1 to the concatenation
        output, state = self.rnn(input_and_c.unsqueeze(0), state)
        # remove the time-step dimension; output shape is (batch size, output vocabulary size)
        output = self.out(output).squeeze(dim=0)
        return output, state

    def begin_state(self, enc_state):
        # use the encoder's final hidden state directly as the decoder's initial hidden state
        return enc_state

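4.3 Training the model

We first implement the batch_loss function, which computes the loss of one mini-batch. The decoder's input at the first time step is the special symbol BOS. After that, the decoder's input at each time step is the word of the sample's output sequence at the previous time step, that is, teacher forcing. A mask variable keeps the padding items from affecting the loss.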

def batch_loss(encoder, decoder, X, Y, loss):
    batch_size = X.shape[0]
    enc_state = encoder.begin_state()
    enc_outputs, enc_state = encoder(X, enc_state)
    # initialize the decoder's hidden state
    dec_state = decoder.begin_state(enc_state)
    # the decoder's input at the first time step is BOS
    dec_input = torch.tensor([out_vocab.stoi[BOS]] * batch_size)
    # the mask ignores the loss of labels that are padding (PAD); it starts as all ones
    mask, num_not_pad_tokens = torch.ones(batch_size,), 0
    l = torch.tensor([0.0])
    for y in Y.permute(1,0):  # Y shape: (batch, seq_len)
        dec_output, dec_state = decoder(dec_input, dec_state, enc_outputs)
        l = l + (mask * loss(dec_output, y)).sum()
        dec_input = y  # teacher forcing
        num_not_pad_tokens += mask.sum().item()
        # everything after EOS is PAD; the next line keeps the mask at 0
        # for all remaining steps once EOS has been seen
        mask = mask * (y != out_vocab.stoi[EOS]).float()
    return l / num_not_pad_tokens
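The masking logic can be followed on a single sequence without tensors. The sketch below is a simplified, hypothetical single-sample version of the same bookkeeping: the loss of the EOS step still counts, and every step after EOS is ignored. The per-step losses are made up; the targets reuse the index pattern of the sample shown earlier (EOS is 2, PAD is 0).

```python
def masked_mean_loss(token_losses, targets, eos_index):
    """Average the per-step losses up to and including the EOS step."""
    total, count, mask = 0.0, 0.0, 1.0
    for loss_t, target in zip(token_losses, targets):
        total += mask * loss_t   # padded steps contribute 0
        count += mask            # number of non-padding steps so far
        if target == eos_index:
            mask = 0.0           # everything after EOS is padding
    return total / count


targets = [8, 4, 27, 3, 2, 0, 0]                   # ..., EOS, PAD, PAD
token_losses = [1.0, 1.0, 1.0, 1.0, 1.0, 9.0, 9.0]  # large losses on PAD steps
average = masked_mean_loss(token_losses, targets, eos_index=2)
print(average)
```

The two large losses on the padding steps do not affect the result, which is the behavior the mask in `batch_loss` is meant to guarantee.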

In the training function, we need to update the model parameters of the encoder and the decoder together.

def train(encoder, decoder, dataset, lr, batch_size, num_epochs):
    # Adam optimizers that update the encoder and decoder parameters
    enc_optimizer = torch.optim.Adam(encoder.parameters(), lr=lr)
    dec_optimizer = torch.optim.Adam(decoder.parameters(), lr=lr)

    loss = nn.CrossEntropyLoss(reduction='none')  # per-element cross-entropy loss
    data_iter = Data.DataLoader(dataset, batch_size, shuffle=True)  # shuffle=True shuffles the data
    for epoch in range(num_epochs):
        l_sum = 0.0
        for X, Y in data_iter:
            # clear the gradient buffers of the encoder and the decoder
            enc_optimizer.zero_grad()
            dec_optimizer.zero_grad()
            l = batch_loss(encoder, decoder, X, Y, loss)  # loss of the current batch
            l.backward()
            # update the encoder and decoder parameters from their gradients
            enc_optimizer.step()
            dec_optimizer.step()
            l_sum += l.item()
        if (epoch + 1) % 10 == 0:
            print("epoch %d, loss %.3f" % (epoch + 1, l_sum / len(data_iter)))

Next, we create the model instances and set the hyperparameters. Then we can train the model.

# embed_size is the embedding dimension, num_hiddens the number of hidden units,
# num_layers the number of GRU layers
embed_size, num_hiddens, num_layers = 64, 64, 2
# attention hidden size, dropout probability, learning rate, batch size, number of epochs
attention_size, drop_prob, lr, batch_size, num_epochs = 10, 0.5, 0.01, 2, 50
# initialize the encoder
encoder = Encoder(len(in_vocab), embed_size, num_hiddens, num_layers,
                  drop_prob)
# initialize the decoder
decoder = Decoder(len(out_vocab), embed_size, num_hiddens, num_layers,
                  attention_size, drop_prob)
# call the training function with the encoder, decoder, dataset, learning rate,
# batch size, and number of epochs
train(encoder, decoder, dataset, lr, batch_size, num_epochs)

Output:

epoch 10, loss 0.466
epoch 20, loss 0.238
epoch 30, loss 0.113
epoch 40, loss 0.108
epoch 50, loss 0.029

4.4预测不定长的序列

贪婪搜索

def translate(encoder, decoder, input_seq, max_seq_len):
    in_tokens = input_seq.split(' ')  # split the input string on spaces into the word list in_tokens
    in_tokens += [EOS] + [PAD] * (max_seq_len - len(in_tokens) - 1)
    # EOS is the end-of-sequence token that marks the end of the input
    # PAD is the padding token that fills the input up to max_seq_len
    enc_input = torch.tensor([[in_vocab.stoi[tk] for tk in in_tokens]])  # batch=1
    # in_vocab.stoi[tk] maps the word tk to its index in the input vocabulary in_vocab; the indices form the input tensor
    enc_state = encoder.begin_state()
    enc_output, enc_state = encoder(enc_input, enc_state)  # run the encoder to get its outputs and final state
    dec_input = torch.tensor([out_vocab.stoi[BOS]])
    dec_state = decoder.begin_state(enc_state)  # the encoder's final state initializes the decoder state
    output_tokens = []
    for _ in range(max_seq_len):  # generate one output word per iteration, at most max_seq_len times
        dec_output, dec_state = decoder(dec_input, dec_state, enc_output)
        pred = dec_output.argmax(dim=1)  # index of the highest-scoring token
        pred_token = out_vocab.itos[int(pred.item())]  # map the predicted index back to a word of the output vocabulary
        if pred_token == EOS:  # the output sequence is complete as soon as EOS is produced
            break
        else:
            output_tokens.append(pred_token)
            dec_input = pred
    return output_tokens
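The greedy strategy used by translate can be isolated from the model itself. The following is a minimal sketch, assuming a hypothetical `toy_step` function and score table that stand in for the trained decoder (neither is part of the original code): at each step the highest-scoring token is kept, and decoding stops at the end-of-sequence token or after `max_len` steps.

```python
from typing import Callable, Dict, List, Tuple

EOS = "<eos>"  # assumed end-of-sequence marker, playing the role of EOS above


def greedy_decode(step: Callable[[str, int], Tuple[Dict[str, float], int]],
                  first_token: str, state: int, max_len: int) -> List[str]:
    """Keep the highest-scoring token at every step until EOS or max_len."""
    output, token = [], first_token
    for _ in range(max_len):
        scores, state = step(token, state)
        token = max(scores, key=scores.get)  # argmax over the vocabulary
        if token == EOS:
            break
        output.append(token)
    return output


# Hypothetical stand-in for the decoder: one fixed score table per time step.
TABLE = [
    {"they": 0.7, "are": 0.2, EOS: 0.1},
    {"they": 0.1, "are": 0.8, EOS: 0.1},
    {"watching": 0.6, "are": 0.3, EOS: 0.1},
    {"watching": 0.2, ".": 0.3, EOS: 0.5},
]


def toy_step(prev_token: str, t: int) -> Tuple[Dict[str, float], int]:
    # Ignores prev_token; a real decoder would condition on it and on its state.
    return TABLE[min(t, len(TABLE) - 1)], t + 1


decoded = greedy_decode(toy_step, "<bos>", 0, max_len=10)
truncated = greedy_decode(toy_step, "<bos>", 0, max_len=2)
print(decoded, truncated)
```

Greedy search commits to the locally best token at each step, so it is cheap but is not guaranteed to find the most probable full sequence.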

A quick test of the model: given the French sentence "ils regardent .", the English translation should be "they are watching .".

input_seq = 'ils regardent .'  # define the input sequence
translate(encoder, decoder, input_seq, max_seq_len)

Output

['they', 'are', 'watching', '.']

4.5 Evaluating translation results

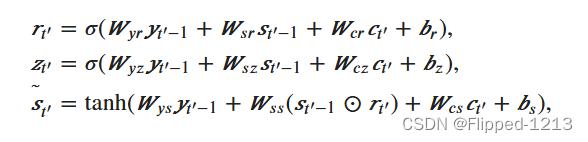

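Machine translation output is usually evaluated with BLEU (Bilingual Evaluation Understudy). For every subsequence (n-gram) of the predicted sequence, BLEU checks whether that subsequence also appears in the label sequence.

Specifically, let $p_n$ be the precision of subsequences of $n$ words: the number of $n$-word subsequences of the predicted sequence that are matched in the label sequence, divided by the total number of $n$-word subsequences in the predicted sequence. For example, if the label sequence is $A, B, C, D, E, F$ and the predicted sequence is $A, B, B, C, D$, then $p_1 = 4/5$, $p_2 = 3/4$, $p_3 = 1/3$ and $p_4 = 0$. Let $len_{\text{label}}$ and $len_{\text{pred}}$ be the number of words in the label sequence and in the predicted sequence, respectively. BLEU is then defined as

$$\exp\left(\min\left(0,\ 1 - \frac{len_{\text{label}}}{len_{\text{pred}}}\right)\right) \prod_{n=1}^{k} p_n^{1/2^n},$$

where $k$ is the largest subsequence length we want to match. When the predicted sequence and the label sequence are identical, BLEU equals 1.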
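The precisions in the example above can be verified mechanically. This is a small sketch, not the document's own implementation: `ngram_precision` is a hypothetical helper that counts clipped n-gram matches with `Counter` and returns exact fractions.

```python
from collections import Counter
from fractions import Fraction


def ngram_precision(pred, label, n):
    """Clipped n-gram precision p_n, returned as an exact fraction."""
    pred_grams = Counter(tuple(pred[i:i + n]) for i in range(len(pred) - n + 1))
    label_grams = Counter(tuple(label[i:i + n]) for i in range(len(label) - n + 1))
    # A predicted n-gram is matched at most as often as it occurs in the label.
    matches = sum(min(count, label_grams[gram]) for gram, count in pred_grams.items())
    return Fraction(matches, sum(pred_grams.values()))


label = list("ABCDEF")
pred = list("ABBCD")
precisions = [ngram_precision(pred, label, n) for n in range(1, 5)]
print([str(p) for p in precisions])  # ['4/5', '3/4', '1/3', '0']
```

The second `B` in the prediction is not counted for $p_1$ because the label contains `B` only once, which is why $p_1$ is 4/5 rather than 1.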

Implementing the BLEU computation

import collections
import math

def bleu(pred_tokens, label_tokens, k):  # k is the largest n-gram size considered
    len_pred, len_label = len(pred_tokens), len(label_tokens)
    score = math.exp(min(0, 1 - len_label / len_pred))  # length penalty from the ratio of the label length to the prediction length
    for n in range(1, k + 1):  # n-gram precision for each n
        num_matches, label_subs = 0, collections.defaultdict(int)
        for i in range(len_label - n + 1):
            label_subs[''.join(label_tokens[i: i + n])] += 1  # count n-gram occurrences in the reference sequence
        for i in range(len_pred - n + 1):  # count predicted n-grams that match the reference
            if label_subs[''.join(pred_tokens[i: i + n])] > 0:
                num_matches += 1
                label_subs[''.join(pred_tokens[i: i + n])] -= 1
        score *= math.pow(num_matches / (len_pred - n + 1), math.pow(0.5, n))  # multiply in the n-gram precision raised to the weight 0.5^n
    return score

Define a helper function that prints the score

def score(input_seq, label_seq, k):
    pred_tokens = translate(encoder, decoder, input_seq, max_seq_len)  # word list of the predicted sequence, produced by translate
    label_tokens = label_seq.split(' ')  # split the reference string on spaces into a word list
    print('bleu %.3f, predict: %s' % (bleu(pred_tokens, label_tokens, k),
                                      ' '.join(pred_tokens)))

A correct prediction scores 1.

score('ils regardent .', 'they are watching .', k=2)  # input sequence and reference sequence

Output

bleu 1.000, predict: they are watching .

score('ils sont canadienne .', 'they are canadian .', k=2)

Output

bleu 0.658, predict: they are russian .
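The 0.658 figure can be reproduced by hand. The sketch below assumes the predicted token list ends with the "." token, as in the list printed in section 4.4; `bleu_sketch` is a hypothetical re-implementation that uses tuple keys instead of joined strings, written only to check the arithmetic.

```python
import math
from collections import Counter


def bleu_sketch(pred_tokens, label_tokens, k):
    """BLEU with weights 1/2^n and the length penalty exp(min(0, 1 - len_label/len_pred))."""
    score = math.exp(min(0, 1 - len(label_tokens) / len(pred_tokens)))
    for n in range(1, k + 1):
        pred_grams = Counter(tuple(pred_tokens[i:i + n])
                             for i in range(len(pred_tokens) - n + 1))
        label_grams = Counter(tuple(label_tokens[i:i + n])
                              for i in range(len(label_tokens) - n + 1))
        matches = sum(min(c, label_grams[g]) for g, c in pred_grams.items())
        score *= (matches / sum(pred_grams.values())) ** (0.5 ** n)
    return score


# Unigrams: 'they', 'are', '.' match (3 of 4); bigrams: only ('they', 'are') matches (1 of 3).
value = bleu_sketch("they are russian .".split(), "they are canadian .".split(), k=2)
perfect = bleu_sketch("they are watching .".split(), "they are watching .".split(), k=2)
print("%.3f %.3f" % (value, perfect))  # 0.658 1.000
```

With equal lengths the penalty is 1, so the score is $(3/4)^{1/2} \cdot (1/3)^{1/4} \approx 0.658$.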

5. Machine translation with a Transformer (Japanese to Chinese)

5.1 Importing the required packages

import math

import torchtext

import torch

import torch.nn as nn

from torch import Tensor

from torch.nn.utils.rnn import pad_sequence

from torch.utils.data import DataLoader

from collections import Counter

from torchtext.vocab import Vocab

from torch.nn import TransformerEncoder, TransformerDecoder, TransformerEncoderLayer, TransformerDecoderLayer

import io

import time

import pandas as pd

import numpy as np

import pickle

import tqdm

import sentencepiece as spm

torch.manual_seed(0)

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# print(torch.cuda.get_device_name(0))  ## if you have a GPU, try running this code on your own machine

device

device(type='cpu')

5.2 Obtaining the parallel dataset

df = pd.read_csv('./zh-ja/zh-ja.bicleaner05.txt', sep='\\t', engine='python', header=None)
# Read the file into a DataFrame with pandas, using '\t' as the separator

trainen = df[2].values.tolist()#[:10000]  # values of the third column (index 2) as a list
trainja = df[3].values.tolist()#[:10000]  # values of the fourth column (index 3) as a list

# trainen.pop(5972)

# trainja.pop(5972)

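After importing all the Japanese sentences and their Chinese counterparts (held in trainen), the last entry of the dataset is meant to be deleted because it has a missing value. Note that the two pop calls above are commented out, so no entry is actually removed here.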
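Rows with missing values can also be filtered out while reading, rather than popped by index afterwards. The following sketch uses only the standard library and a hypothetical in-memory sample; the contents of columns 0 and 1 are invented placeholders, and only the column positions 2 and 3 follow the code above.

```python
import csv
import io

# Hypothetical sample in a four-column, tab-separated layout.
sample = ("d1\t0.9\t你好\tこんにちは\n"
          "d2\t0.8\t谢谢\tありがとう\n"
          "d3\t0.7\t再见\n")  # the last row lacks the Japanese column

rows = list(csv.reader(io.StringIO(sample), delimiter="\t", quoting=csv.QUOTE_NONE))

# Keep only rows where both sentence columns are present and non-empty.
complete = [r for r in rows if len(r) >= 4 and r[2] and r[3]]
zh_sentences = [r[2] for r in complete]
ja_sentences = [r[3] for r in complete]
print(len(rows), len(complete))
```

Filtering by content avoids hard-coding a row index, which would silently point at a different sentence if the file changed.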

Below is an example of the sentences contained in the dataset.

print(trainen[500])  # element at index 500 of trainen, the Chinese sentence
print(trainja[500])  # element at index 500 of trainja, the Japanese sentence

Chinese HS Code Harmonized Code System < HS编码 2905 无环醇及其卤化、磺化、硝化或亚硝化衍生物 HS Code List (Harmonized System Code) for US, UK, EU, China, India, France, Japan, Russia, Germany, Korea, Canada ...

Japanese HS Code Harmonized Code System < HSコード 2905 非環式アルコール並びにそのハロゲン化誘導体、スルホン化誘導体、ニトロ化誘導体及びニトロソ化誘導体 HS Code List (Harmonized System Code) for US, UK, EU, China, India, France, Japan, Russia, Germany, Korea, Canada ...

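Preparing the tokenizers

Unlike English and other alphabetic languages, Japanese sentences contain no spaces to separate words. We can use the tokenizers provided by JParaCrawl, which were created with SentencePiece for Japanese and English.

en_tokenizer = spm.SentencePieceProcessor(model_file='enja_spm_models/spm.en.nopretok.model')  # load the pretrained English model
ja_tokenizer = spm.SentencePieceProcessor(model_file='enja_spm_models/spm.ja.nopretok.model')  # load the pretrained Japanese model

After loading the tokenizers, test them.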

en_tokenizer.encode("All residents aged 20 to 59 years who live in Japan must enroll in public pension system.", out_type='str')

# Encode the English input text with en_tokenizer

ja_tokenizer.encode("年金 日本に住んでいる20歳~60歳の全ての人は、公的年金制度に加入しなければなりません。", out_type='str')

# Encode the Japanese input text with ja_tokenizer

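5.3 Building TorchText Vocab objects and converting sentences to Torch tensors

Next, using the tokenizers and the raw sentences, we build Vocab objects imported from TorchText. This can take anywhere from a few seconds to a few minutes, depending on the size of the dataset and the available computing power. The choice of tokenizer also affects how long the vocabulary takes to build; SentencePiece is used here.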

def build_vocab(sentences, tokenizer):
    counter = Counter()  # counter for token frequencies
    for sentence in sentences:
        # Tokenize the sentence and update the counter
        counter.update(tokenizer.encode(sentence, out_type=str))
    # Build the vocabulary from the token frequencies and add the special symbols
    return Vocab(counter, specials=['<unk>', '<pad>', '<bos>', '<eos>'])

ja_vocab = build_vocab(trainja, ja_tokenizer)
en_vocab = build_vocab(trainen, en_tokenizer)  # vocabularies for the trainja and trainen sentence lists
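The `Vocab(counter, specials=...)` call above appears to follow the older TorchText interface, which may not be available in every installed version. As a dependency-free illustration of the same idea, the sketch below builds a token-to-index mapping with the special symbols placed first; `build_simple_vocab` and `encode` are hypothetical helpers, and the ordering rule (frequency, then alphabetical) is an assumption of this sketch.

```python
from collections import Counter

SPECIALS = ['<unk>', '<pad>', '<bos>', '<eos>']


def build_simple_vocab(tokenized_sentences, specials=SPECIALS):
    """Return (stoi, itos) with the specials first, then tokens by descending frequency."""
    counter = Counter()
    for tokens in tokenized_sentences:
        counter.update(tokens)
    ordered = sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))
    itos = list(specials) + [tok for tok, _ in ordered if tok not in specials]
    stoi = {tok: idx for idx, tok in enumerate(itos)}
    return stoi, itos


def encode(tokens, stoi):
    """Map tokens to indices; unknown tokens fall back to '<unk>'."""
    unk = stoi['<unk>']
    return [stoi.get(tok, unk) for tok in tokens]


# Already-tokenized toy corpus (the '▁' prefix imitates SentencePiece pieces).
corpus = [['▁I', '▁like', '▁tea'], ['▁I', '▁like', '▁coffee']]
stoi, itos = build_simple_vocab(corpus)
ids = encode(['▁I', '▁drink', '▁tea'], stoi)
print(ids)  # [4, 0, 7]
```

The out-of-vocabulary piece `▁drink` maps to index 0, the position of `<unk>`.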

Use the vocabularies and the tokenizer objects to build the tensors for the training data.

def data_process(ja, en):
    data = []  # list that collects the processed (source, target) tensor pairs
    for (raw_ja, raw_en) in zip(ja, en):
        ja_tensor_ = torch.tensor([ja_vocab[token] for token in ja_tokenizer.encode(raw_ja.rstrip("\n"), out_type=str)],
                                  dtype=torch.long)
        en_tensor_ = torch.tensor([en_vocab[token] for token in en_tokenizer.encode(raw_en.rstrip("\n"), out_type=str)],
                                  dtype=torch.long)
        data.append((ja_tensor_, en_tensor_))
    return data

train_data = data_process(trainja, trainen)

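5.4 Creating the DataLoader object to iterate over during training

BATCH_SIZE is set to 8 here to avoid "CUDA out of memory" errors; in practice, change the batch size freely to suit your needs.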

BATCH_SIZE = 8
PAD_IDX = ja_vocab['<pad>']
BOS_IDX = ja_vocab['<bos>']
EOS_IDX = ja_vocab['<eos>']

def generate_batch(data_batch):
    ja_batch, en_batch = [], []
    for (ja_item, en_item) in data_batch:
        # Add the start and end symbols around each sentence tensor
        ja_batch.append(torch.cat([torch.tensor([BOS_IDX]), ja_item, torch.tensor([EOS_IDX])], dim=0))
        en_batch.append(torch.cat([torch.tensor([BOS_IDX]), en_item, torch.tensor([EOS_IDX])], dim=0))
    # Pad the sequences of the batch to a common length
    ja_batch = pad_sequence(ja_batch, padding_value=PAD_IDX)
    en_batch = pad_sequence(en_batch, padding_value=PAD_IDX)
    return ja_batch, en_batch

train_iter = DataLoader(train_data, batch_size=BATCH_SIZE,
                        shuffle=True, collate_fn=generate_batch)
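To see what the collate function produces, the sketch below mimics its layout with plain lists. `pad_sequence` with its default `batch_first=False` returns a tensor of shape (longest sequence, batch size); `pad_time_major` is a hypothetical list-based stand-in, and the index values 1, 2, 3 for the pad, start and end symbols are assumptions for illustration.

```python
def pad_time_major(sequences, pad_value):
    """Pad to the longest sequence; row t holds the t-th token of every sequence."""
    max_len = max(len(s) for s in sequences)
    return [[s[t] if t < len(s) else pad_value for s in sequences]
            for t in range(max_len)]


PAD, BOS, EOS = 1, 2, 3           # assumed special-symbol indices
sentences = [[10, 11, 12], [20]]  # two already-indexed sentences of different length

wrapped = [[BOS] + s + [EOS] for s in sentences]  # add start and end symbols
batch = pad_time_major(wrapped, PAD)

for row in batch:
    print(row)
# [2, 2]
# [10, 20]
# [11, 3]
# [12, 1]
# [3, 1]
```

Each column is one sentence, so the shorter sentence ends with its end symbol followed by padding.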

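5.5 Sequence-to-sequence Transformer

The Transformer is the Seq2Seq model introduced in the paper "Attention is all you need" for machine translation. It consists of an encoder block and a decoder block, each containing a fixed number of layers.

The encoder processes the input sequence by passing it through a stack of multi-head attention and feed-forward layers. The encoder output, called the memory, is fed to the decoder together with the target tensor. The encoder and decoder are trained end to end using teacher forcing.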
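Teacher forcing means the decoder receives the ground-truth target shifted by one position and is trained to predict the next token, while a causal mask hides future positions. The following sketch shows both pieces with plain lists; the helper names and token indices are hypothetical.

```python
def teacher_forcing_pair(tgt):
    """Decoder input drops the last token; the expected output drops the first."""
    return tgt[:-1], tgt[1:]


def causal_mask(size):
    """True marks positions that must not be attended to (future tokens)."""
    return [[col > row for col in range(size)] for row in range(size)]


BOS, EOS = 2, 3                  # assumed special-symbol indices
target = [BOS, 40, 41, 42, EOS]  # one indexed target sentence

decoder_input, expected_output = teacher_forcing_pair(target)
mask = causal_mask(len(decoder_input))

print(decoder_input)    # [2, 40, 41, 42]
print(expected_output)  # [40, 41, 42, 3]
print(mask[0])          # [False, True, True, True]
```

At position 0 the decoder sees only the start symbol and must predict the first real token; at the last position it sees everything before it and must predict the end symbol.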

from torch.nn import (TransformerEncoder, TransformerDecoder,
                      TransformerEncoderLayer, TransformerDecoderLayer)

class Seq2SeqTransformer(nn.Module):
    def __init__(self, num_encoder_layers: int, num_decoder_layers: int,
                 emb_size: int, src_vocab_size: int, tgt_vocab_size: int,
                 dim_feedforward: int = 512, dropout: float = 0.1):
        super(Seq2SeqTransformer, self).__init__()
        # Define the TransformerEncoder stack (NHEAD is expected to be defined at module level)
        encoder_layer = TransformerEncoderLayer(d_model=emb_size, nhead=NHEAD,
                                                dim_feedforward=dim_feedforward)
        self.transformer_encoder = TransformerEncoder(encoder_layer, num_layers=num_encoder_layers)
        # Define the TransformerDecoder stack
        decoder_layer = TransformerDecoderLayer(d_model=emb_size, nhead=NHEAD,
                                                dim_feedforward=dim_feedforward)
        self.transformer_decoder = TransformerDecoder(decoder_layer, num_layers=num_decoder_layers)
        self.generator = nn.Linear(emb_size, tgt_vocab_size)
        self.src_tok_emb = TokenEmbedding(src_vocab_size, emb_size)
        self.tgt_tok_emb = TokenEmbedding(tgt_vocab_size, emb_size)  # token embedding layers