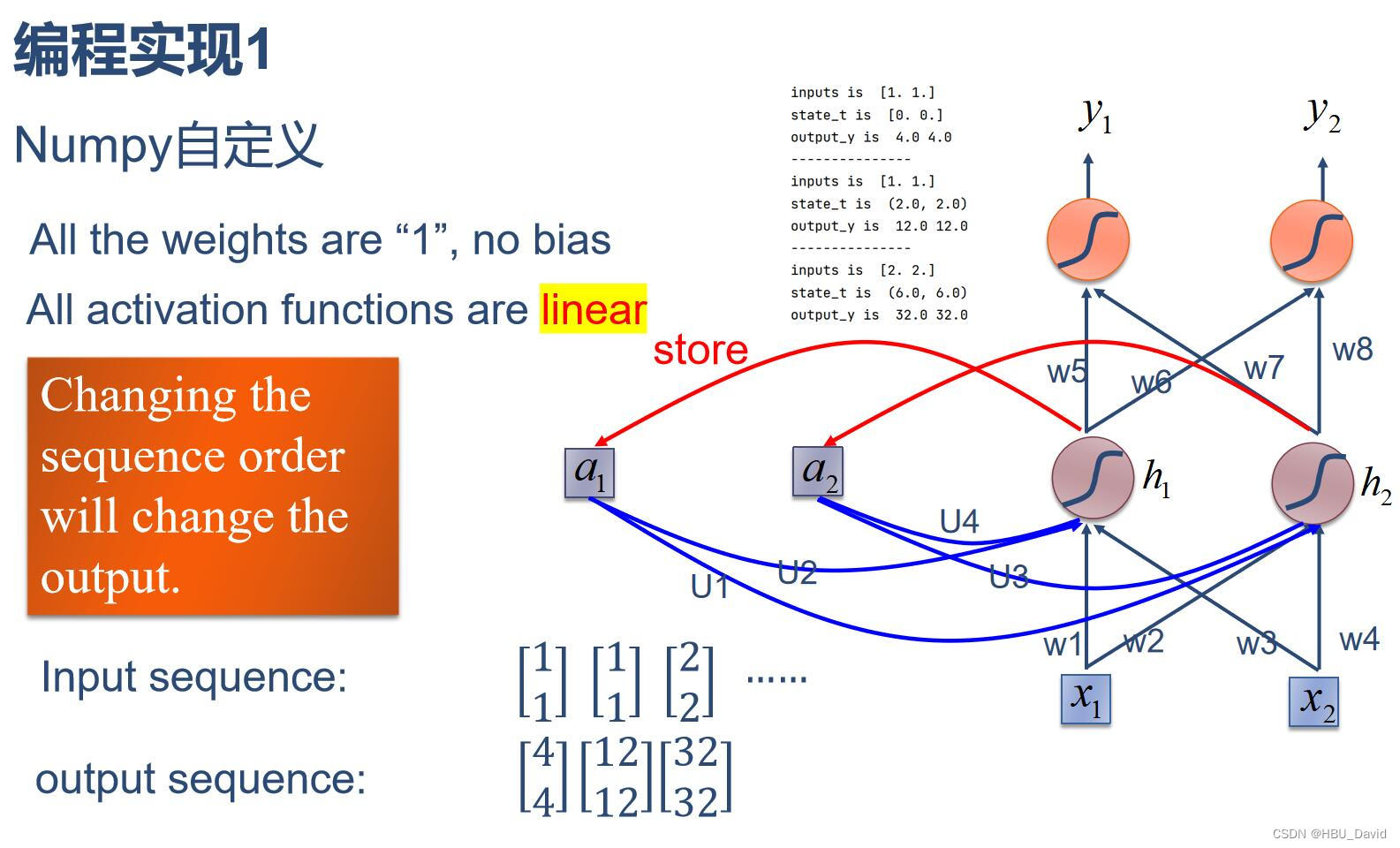

1. 实现SRN

(1)使用Numpy

import numpy as np

inputs = np.array([[1., 1.],

[1., 1.],

[2., 2.]]) # 初始化输入序列

print('inputs is ', inputs)

state_t = np.zeros(2, ) # 初始化存储器

print('state_t is ', state_t)

w1, w2, w3, w4, w5, w6, w7, w8 = 1., 1., 1., 1., 1., 1., 1., 1.

U1, U2, U3, U4 = 1., 1., 1., 1.

print('--------------------------------------')

for input_t in inputs:

print('inputs is ', input_t)

print('state_t is ', state_t)

in_h1 = np.dot([w1, w3], input_t) + np.dot([U2, U4], state_t)

in_h2 = np.dot([w2, w4], input_t) + np.dot([U1, U3], state_t)

state_t = in_h1, in_h2

output_y1 = np.dot([w5, w7], [in_h1, in_h2])

output_y2 = np.dot([w6, w8], [in_h1, in_h2])

print('output_y is ', output_y1, output_y2)

print('---------------')

(2)在1的基础上,增加激活函数tanh

import numpy as np

inputs = np.array([[1., 1.],

[1., 1.],

[2., 2.]]) # 初始化输入序列

print('inputs is ', inputs)

state_t = np.zeros(2, ) # 初始化存储器

print('state_t is ', state_t)

w1, w2, w3, w4, w5, w6, w7, w8 = 1., 1., 1., 1., 1., 1., 1., 1.

U1, U2, U3, U4 = 1., 1., 1., 1.

print('--------------------------------------')

for input_t in inputs:

print('inputs is ', input_t)

print('state_t is ', state_t)

in_h1 = np.tanh(np.dot([w1, w3], input_t) + np.dot([U2, U4], state_t))

in_h2 = np.tanh(np.dot([w2, w4], input_t) + np.dot([U1, U3], state_t))

state_t = in_h1, in_h2

output_y1 = np.dot([w5, w7], [in_h1, in_h2])

output_y2 = np.dot([w6, w8], [in_h1, in_h2])

print('output_y is ', output_y1, output_y2)

print('---------------')

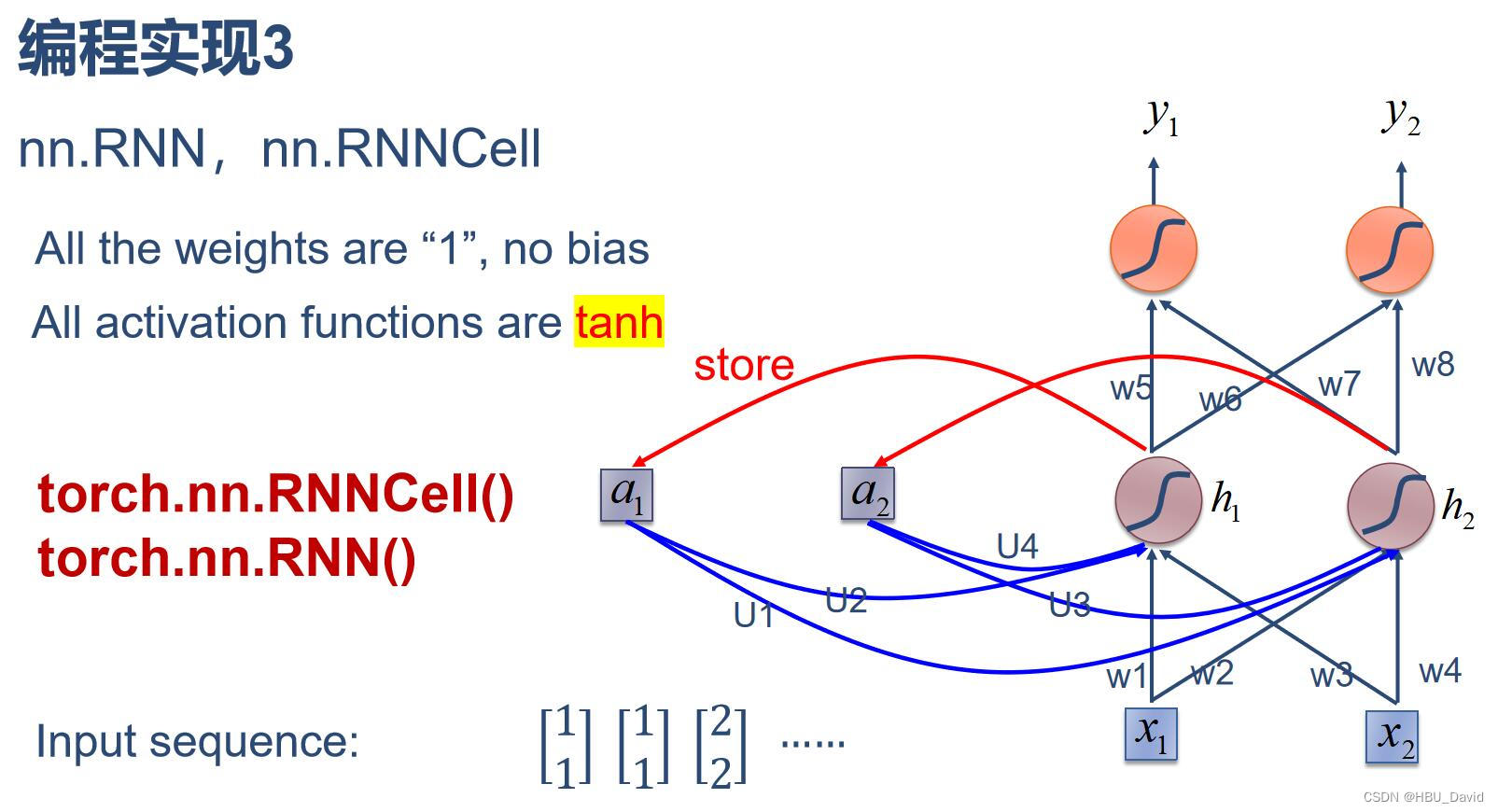

(3)使用nn.RNNCell实现

import torch

batch_size = 1

seq_len = 3 # 序列长度

input_size = 2 # 输入序列维度

hidden_size = 2 # 隐藏层维度

output_size = 2 # 输出层维度

# RNNCell

cell = torch.nn.RNNCell(input_size=input_size, hidden_size=hidden_size)

# 初始化参数 https://zhuanlan.zhihu.com/p/342012463

for name, param in cell.named_parameters():

if name.startswith("weight"):

torch.nn.init.ones_(param)

else:

torch.nn.init.zeros_(param)

# 线性层

liner = torch.nn.Linear(hidden_size, output_size)

liner.weight.data = torch.Tensor([[1, 1], [1, 1]])

liner.bias.data = torch.Tensor([0.0])

seq = torch.Tensor([[[1, 1]],

[[1, 1]],

[[2, 2]]])

hidden = torch.zeros(batch_size, hidden_size)

output = torch.zeros(batch_size, output_size)

for idx, input in enumerate(seq):

print('=' * 20, idx, '=' * 20)

print('Input :', input)

print('hidden :', hidden)

hidden = cell(input, hidden)

output = liner(hidden)

print('output :', output)

(4)使用nn.RNN实现

import torch

batch_size = 1

seq_len = 3

input_size = 2

hidden_size = 2

num_layers = 1

output_size = 2

cell = torch.nn.RNN(input_size=input_size, hidden_size=hidden_size, num_layers=num_layers)

for name, param in cell.named_parameters(): # 初始化参数

if name.startswith("weight"):

torch.nn.init.ones_(param)

else:

torch.nn.init.zeros_(param)

# 线性层

liner = torch.nn.Linear(hidden_size, output_size)

liner.weight.data = torch.Tensor([[1, 1], [1, 1]])

liner.bias.data = torch.Tensor([0.0])

inputs = torch.Tensor([[[1, 1]],

[[1, 1]],

[[2, 2]]])

hidden = torch.zeros(num_layers, batch_size, hidden_size)

out, hidden = cell(inputs, hidden)

print('Input :', inputs[0])

print('hidden:', 0, 0)

print('Output:', liner(out[0]))

print('--------------------------------------')

print('Input :', inputs[1])

print('hidden:', out[0])

print('Output:', liner(out[1]))

print('--------------------------------------')

print('Input :', inputs[2])

print('hidden:', out[1])

print('Output:', liner(out[2]))

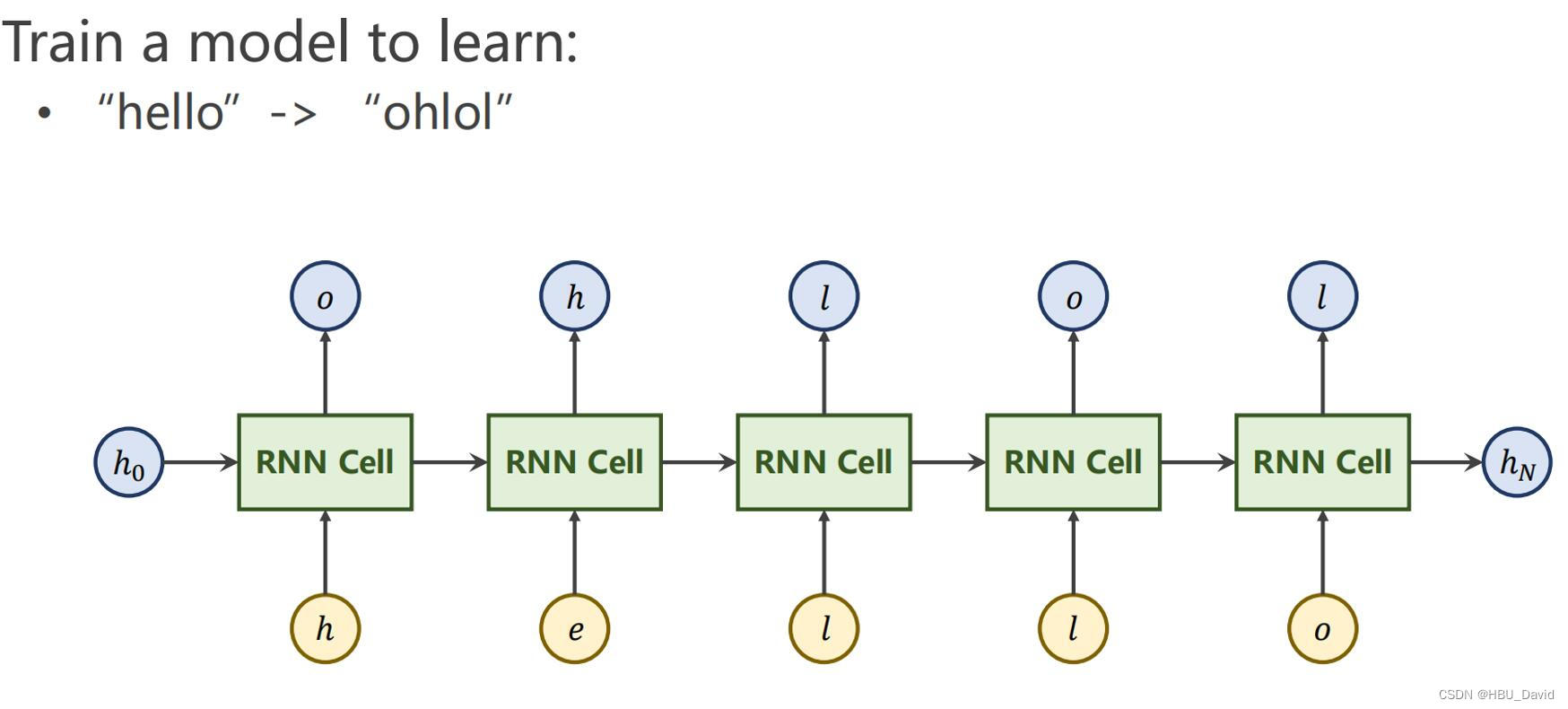

2. 实现“序列到序列”

观看视频,学习RNN原理,并实现视频P12中的教学案例

案例

import torch

batch_size = 1

seq_len = 3

input_size = 4

hidden_size = 2

num_layers = 1

cell = torch.nn.RNN(input_size=input_size, hidden_size=hidden_size,

num_layers=num_layers, batch_first=True)

# (seqLen, batchSize, inputSize)

inputs = torch.randn(batch_size, seq_len, input_size)

hidden = torch.zeros(num_layers, batch_size, hidden_size)

out, hidden = cell(inputs, hidden)

print('Output size:', out.shape)

print('Output:', out)

print('Hidden size: ', hidden.shape)

print('Hidden: ', hidden)

import torch

input_size=4

hidden_size=4

batch_size=1

idx2char=['e','h','l','o']

x_data=[1,0,2,2,3]

y_data=[3,1,2,3,2]

one_hot_lookup=[[1,0,0,0],[0,1,0,0],[0,0,1,0],[0,0,0,1]]

x_one_hot=[one_hot_lookup[x] for x in x_data]

inputs=torch.Tensor(x_one_hot).view(-1,batch_size,input_size)

labels=torch.LongTensor(y_data).view(-1,1)

class Model(torch.nn.Module):

def __init__(self,input_size,hidden_size,batch_size):

super(Model,self).__init__()

self.batch_size=batch_size

self.input_size=input_size

self.hidden_size=hidden_size

self.rnncell=torch.nn.RNNCell(input_size=self.input_size,hidden_size=self.hidden_size)

def forward(self,input,hidden):

hidden=self.rnncell(input,hidden)

return hidden

def init_hidden(self):

return torch.zeros(self.batch_size,self.hidden_size)

net=Model(input_size,hidden_size,batch_size)

criterion=torch.nn.CrossEntropyLoss()

optimizer=torch.optim.Adam(net.parameters(),lr=0.1)

for epoch in range(15):

loss=0

optimizer.zero_grad()

hidden=net.init_hidden()

print('Predicted string:',end='')

for input,label in zip(inputs,labels):

hidden=net(input,hidden)

loss+=criterion(hidden,label)

_,idx=hidden.max(dim=1)

print(idx2char[idx.item()],end='')

loss.backward()

optimizer.step()

print(',Epoch [%d/15] loss=%.4f'%(epoch+1,loss.item()))

3. “编码器-解码器”的简单实现

seq2seq的PyTorch实现_哔哩哔哩_bilibili

import torch

import numpy as np

import torch.nn as nn

import torch.utils.data as Data

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

letter = [c for c in 'SE?abcdefghijklmnopqrstuvwxyz']

letter2idx = {n: i for i, n in enumerate(letter)}

seq_data = [['man', 'women'], ['black', 'white'], ['king', 'queen'], ['girl', 'boy'], ['up', 'down'], ['high', 'low']]

n_step = max([max(len(i), len(j)) for i, j in seq_data]) # max_len(=5)

n_hidden = 128

n_class = len(letter2idx)

batch_size = 3

def make_data(seq_data):

enc_input_all, dec_input_all, dec_output_all = [], [], []

for seq in seq_data:

for i in range(2):

seq[i] = seq[i] + '?' * (n_step - len(seq[i]))

enc_input = [letter2idx[n] for n in (seq[0] + 'E')]

dec_input = [letter2idx[n] for n in ('S' + seq[1])]

dec_output = [letter2idx[n] for n in (seq[1] + 'E')]

enc_input_all.append(np.eye(n_class)[enc_input])

dec_input_all.append(np.eye(n_class)[dec_input])

dec_output_all.append(dec_output)

return torch.Tensor(enc_input_all), torch.Tensor(dec_input_all), torch.LongTensor(dec_output_all)

enc_input_all, dec_input_all, dec_output_all = make_data(seq_data)

class TranslateDataSet(Data.Dataset):

def __init__(self, enc_input_all, dec_input_all, dec_output_all):

self.enc_input_all = enc_input_all

self.dec_input_all = dec_input_all

self.dec_output_all = dec_output_all

def __len__(self):

return len(self.enc_input_all)

def __getitem__(self, idx):

return self.enc_input_all[idx], self.dec_input_all[idx], self.dec_output_all[idx]

loader = Data.DataLoader(TranslateDataSet(enc_input_all, dec_input_all, dec_output_all), batch_size, True)

class Seq2Seq(nn.Module):

def __init__(self):

super(Seq2Seq, self).__init__()

self.encoder = nn.RNN(input_size=n_class, hidden_size=n_hidden, dropout=0.5)

self.decoder = nn.RNN(input_size=n_class, hidden_size=n_hidden, dropout=0.5)

self.fc = nn.Linear(n_hidden, n_class)

def forward(self, enc_input, enc_hidden, dec_input):

enc_input = enc_input.transpose(0, 1)

dec_input = dec_input.transpose(0, 1)

_, h_t = self.encoder(enc_input, enc_hidden)

outputs, _ = self.decoder(dec_input, h_t)

model = self.fc(outputs)

return model

model = Seq2Seq().to(device)

criterion = nn.CrossEntropyLoss().to(device)

optimizer = torch.optim.Adam(model.parameters(), lr=0.001)

for epoch in range(5000):

for enc_input_batch, dec_input_batch, dec_output_batch in loader:

h_0 = torch.zeros(1, batch_size, n_hidden).to(device)

(enc_input_batch, dec_intput_batch, dec_output_batch) = (

enc_input_batch.to(device), dec_input_batch.to(device), dec_output_batch.to(device))

pred = model(enc_input_batch, h_0, dec_intput_batch)

pred = pred.transpose(0, 1)

loss = 0

for i in range(len(dec_output_batch)):

loss += criterion(pred[i], dec_output_batch[i])

if (epoch + 1) % 1000 == 0:

print('Epoch:', '%04d' % (epoch + 1), 'cost =', '{:.6f}'.format(loss))

optimizer.zero_grad()

loss.backward()

optimizer.step()

def translate(word):

enc_input, dec_input, _ = make_data([[word, '?' * n_step]])

enc_input, dec_input = enc_input.to(device), dec_input.to(device)

hidden = torch.zeros(1, 1, n_hidden).to(device)

output = model(enc_input, hidden, dec_input)

predict = output.data.max(2, keepdim=True)[1]

decoded = [letter[i] for i in predict]

translated = ''.join(decoded[:decoded.index('E')])

return translated.replace('?', '')

print('test')

print('man ->', translate('man'))

print('mans ->', translate('mans'))

print('king ->', translate('king'))

print('black ->', translate('black'))

print('up ->', translate('up'))

4.简单总结nn.RNNCell、nn.RNN

具体来说,nn.RNNCell是RNN的一个单操作,它接受一个输入端的输入和一个隐藏状态作为输入,并输出一个新的隐藏状态。nn.RNNCell可以通过堆叠多个单元来构建多层的RNN。

nn.RNN则是通过重复调用nn.RNNCell来实现整个循环过程。它接受一个序列作为输入,并在序列的每个时间步应用nn.RNNCell。nn.RNN还可以支持双向RNN,它在每个时间步同时应用一个前向和一个反向的RNNCell。

概括起来就是,nn.RNNCell用于定义循环神经网络的一个单元操作,而nn.RNN利用nn.RNNCell来构建整个循环神经网络。

5.谈一谈对“序列”、“序列到序列”的理解

序列是指一系列按照顺序排列的元素或事件。在计算机领域,序列一般都是一组数据、文本或符号号串,按照排序组合成的一种结构。

“序列到序列”(sequence-to-sequence简seq2seq),该模型包括两个主要部分:编码器(encoder)和解码器(decoder)。编码器将输入序列(如一个句子)转换为固定长度的向量表示,然后解码器将这个向量表示转换为输出序列(如另一种语言的句子)。序列到序列模型可以用来训练循环神经网络(RNN)或者长短期记忆网络LSTM,有效地处理输入和输出序列之间的复杂关系。

6.总结本周理论课和作业,写心得体会

理论课上的学习初步了解到了循环的原理,结合视频理解了“时间”在循环神经网络的具体体现,循环神经网络中的“循环”让其内部的一或多个节点保存上一时刻的状态,并将其作为当前时刻的输入,因此网络会“记忆”之前的信息并将其应用于当前的计算。【循环神经网络】5分钟搞懂RNN,3D动画深入浅出_哔哩哔哩_bilibili

本次实验也有很多含金量高的视频,对视频里嵌入大概的理解——举例子是:

有一个向量(a,b),其中,a表示对A的喜爱程度,b表示对B的喜爱程度,其中数值越大,表示喜爱程度越大,负数表示厌恶。现有甲乙丙丁四人,分别表示的向量可以是甲(-1,5)代表甲轻微厌恶A且很喜欢B,乙(1,5),丙(3,-1),丁(-3,-2),通过这样的方式同样定义一组词嵌入,然后我们就可以通过向量的夹角来判断他们的相似度,而当维度过高时,嵌入维度的定义也可以让神经网络去更新,而不需要举例子来探究实际意义,只需要根据夹角来判断语义相似度即可。

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?