4. 查询语句

4.1 语法规则与数据准备

官方网址

https://cwiki.apache.org/confluence/display/Hive/LanguageManual+Select

基本查询语句语法:

SELECT [ALL | DISTINCT] select_expr, select_expr, ...

FROM table_reference

[WHERE where_condition]

[GROUP BY col_list]

[ORDER BY col_list]

[CLUSTER BY col_list

| [DISTRIBUTE BY col_list] [SORT BY col_list]

]

[LIMIT [offset,] rows]

select [all | distinct] select_expr, select_expr, ...

from table_reference

[where where_condition]

[group by col_list]

[order by col_list]

[cluster by col_list

| [distribute by col_list] [sort by col_list]

]

[limit [offset,] rows]

数据准备:

原始数据

部门表:dept

10 accounting 1700

20 research 1800

30 sales 1900

40 operations 1700

雇员表emp:

7369 smith clerk 7902 1980-12-17 800.00 20

7499 allen salesman 7698 1981-2-20 1600.00 300.00 30

7521 ward salesman 7698 1981-2-22 1250.00 500.00 30

7566 jones manager 7839 1981-4-2 2975.00 20

7654 martin salesman 7698 1981-9-28 1250.00 1400.00 30

7698 blake manager 7839 1981-5-1 2850.00 30

7782 clark manager 7839 1981-6-9 2450.00 10

7788 scott analyst 7566 1987-4-19 3000.00 20

7839 king president 1981-11-17 5000.00 10

7844 turner salesman 7698 1981-9-8 1500.00 0.00 30

7876 adams clerk 7788 1987-5-23 1100.00 20

7900 james clerk 7698 1981-12-3 950.00 30

7902 ford analyst 7566 1981-12-3 3000.00 20

7934 miller clerk 7782 1982-1-23 1300.00 10

插入数据

创建部门表

create table if not exists dept(

deptno int,

dname string,

loc int

)

row format delimited fields terminated by '\t';

创建员工表

create table if not exists emp(

empno int,

ename string,

job string,

mgr int,

hiredate string,

sal double,

comm double,

deptno int)

row format delimited fields terminated by '\t';

在node4将数据写到/root/data/下面的两个文件中

[root@node4 data]# vim dept.txt

[root@node4 data]# vim emp.txt

[root@node4 data]# cat dept.txt

10 ccounting 1700

20 research 1800

30 sales 1900

40 operations 1700

[root@node4 data]# cat emp.txt

7369 mith clerk 7902 1980-12-17 800.00 20

7499 allen salesman 7698 1981-2-20 1600.00 300.00 30

7521 ward salesman 7698 1981-2-22 1250.00 500.00 30

7566 jones manager 7839 1981-4-2 2975.00 20

7654 martin salesman 7698 1981-9-28 1250.00 1400.00 30

7698 blake manager 7839 1981-5-1 2850.00 30

7782 clark manager 7839 1981-6-9 2450.00 10

7788 scott analyst 7566 1987-4-19 3000.00 20

7839 king president 1981-11-17 5000.00 10

7844 turner salesman 7698 1981-9-8 1500.00 0.00 30

7876 adams clerk 7788 1987-5-23 1100.00 20

7900 james clerk 7698 1981-12-3 950.00 30

7902 ford analyst 7566 1981-12-3 3000.00 20

7934 miller clerk 7782 1982-1-23 1300.00 10

[root@node4 data]# pwd

/root/data

hive上操作:创建表+导

hive中创建表结构,hive中导入txt进入表

hive> create table if not exists dept(

> deptno int,

> dname string,

> loc int

> )

> row format delimited fields terminated by '\t';

OK

Time taken: 0.829 seconds

hive> load data local inpath '/root/data/dept.txt' into table dept;

Loading data to table default.dept

OK

Time taken: 0.916 seconds

hive> create table if not exists emp(

> empno int,

> ename string,

> job string,

> mgr int,

> hiredate string,

> sal double,

> comm double,

> deptno int)

> row format delimited fields terminated by '\t';

OK

Time taken: 0.226 seconds

hive> load data local inpath '/root/data/emp.txt' into table emp;

Loading data to table default.emp

OK

Time taken: 0.599 seconds

4.2 基本查询

4.2.1 全表和指定列查询

全表查询:使用*表示所有的列

hive> select * from dept;

OK

10 ccounting 1700

20 research 1800

30 sales 1900

40 operations 1700

Time taken: 0.424 seconds, Fetched: 4 row(s)

hive> select * from emp;

OK

7369 smith clerk 7902 1980-12-17 800.0 NULL 20

7499 allen salesman 7698 NULL 1600.00 300.0 30.0 NULL

7521 ward salesman 7698 NULL 1250.00 500.00 30.0 NULL NULL

Time taken: 0.401 seconds, Fetched: 15 row(s)

hive中查询指定列:select后加上具体列名称

hive> select deptno,dname from dept;

OK

10 ccounting

20 research

30 sales

40 operations

Time taken: 0.442 seconds, Fetched: 4 row(s)

SQL语言对大小写不敏感

可以写在一行or多行都行

关键词不能被缩写,不能被分行

复杂sql一般得分行来写

使用缩进符提高语句可读性

4.2.2 列别名

--查询雇员的名称和部门

hive> select ename name, deptno as no from emp;

OK

smith 20

allen salesman NULL

ward salesman NULL

jones manager 7839 NULL

martin salesman NULL

blake manager 7839 NULL

clark manager 7839 NULL

scott analyst 7566 NULL

king president NULL

turner salesman NULL

adams clerk 7788 1987-5-23 NULL

james clerk 7698 1981-12-3 NULL

ford analyst 7566 1981-12-3 NULL

miller clerk 7782 1982-1-23 NULL

NULL NULL

Time taken: 0.443 seconds, Fetched: 15 row(s)

......

hive列别名如何使用:在列名后面直接跟别名或使用as关键字跟列别名

使用列别名的好处:简化使用。

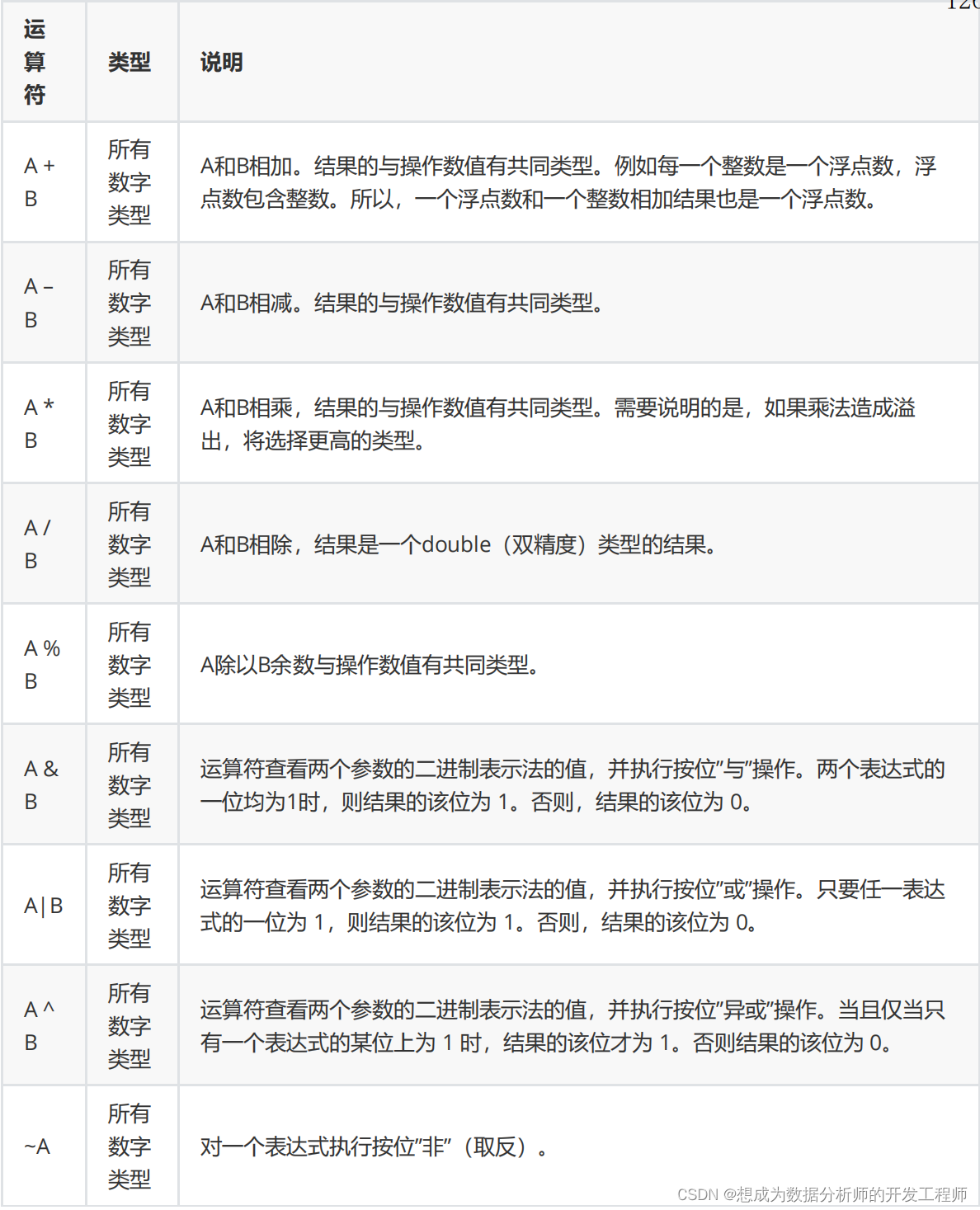

4.2.3 算术运算符

演示

hive> select ename,sal*12 from emp;

OK

smith 9600.0

allen 19200.0

ward 15000.0

jones 35700.0

martin 15000.0

blake 34200.0

clark 29400.0

scott 36000.0

king 60000.0

turner 18000.0

adams 13200.0

james 11400.0

ford 36000.0

miller 15600.0

Time taken: 0.993 seconds, Fetched: 14 row(s)

hive> select ename,sal*12

year_money,sal*12+2000 year_all_money from emp;

OK

smith 9600.0 11600.0

allen 19200.0 21200.0

ward 15000.0 17000.0

jones 35700.0 37700.0

martin 15000.0 17000.0

blake 34200.0 36200.0

clark 29400.0 31400.0

scott 36000.0 38000.0

king 60000.0 62000.0

turner 18000.0 20000.0

adams 13200.0 15200.0

james 11400.0 13400.0

ford 36000.0 38000.0

miller 15600.0 17600.0

Time taken: 0.322 seconds, Fetched: 14 row(s)

4.2.4 常用的聚合函数

# 查询总共多少行

hive> select count(*) from emp;

hive> select count(empno) from emp;

# 查询最低工资

hive> select min(sal) from emp;

# 查询最高工资

hive> select max(sal) from emp;

# 查询平均工资

hive> select avg(sal) from emp;

# 查询和

hive> select sum(sal) from emp;

4.2.5 where语句

使用where语句,作用:将不符合条件的数据过滤掉。

#查询工资大于2500的所有雇员。

hive> select * from emp where sal>2500;

OK

7566 jones manager 7839 1981-4-2

2975.0 NULL 20

7698 blake manager 7839 1981-5-1

2850.0 NULL 30

7788 scott analyst 7566 1987-4-19

3000.0 NULL 20

7839 king president NULL 1981-11-17

5000.0 NULL 10

7902 ford analyst 7566 1981-12-3

3000.0 NULL 20

hive> select * from emp where sal>2500 and

deptno=20;

OK

128

7566 jones manager 7839 1981-4-2

2975.0 NULL 20

7788 scott analyst 7566 1987-4-19

3000.0 NULL 20

7902 ford analyst 7566 1981-12-3

3000.0 NULL 20

Time taken: 0.504 seconds, Fetched: 3 row(s)

hive> select * from emp where sal>2500 and

deptno!=20;

OK

7698 blake manager 7839 1981-5-1

2850.0 NULL 30

7839 king president NULL 1981-11-17

5000.0 NULL 10

Time taken: 0.391 seconds, Fetched: 2 row(s)

4.2.6 limit语句

使用limit实现查询指定的行数。

hive> select * from emp limit 8;

OK

7369 smith clerk 7902 1980-12-17

800.0 NULL 20

7499 allen salesman 7698 1981-2-20

1600.0 300.0 30

7521 ward salesman 7698 1981-2-22

1250.0 500.0 30

7566 jones manager 7839 1981-4-2

2975.0 NULL 20

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

129

130

7698 blake manager 7839 1981-5-1

2850.0 NULL 30

7782 clark manager 7839 1981-6-9

2450.0 NULL 10

7788 scott analyst 7566 1987-4-19

3000.0 NULL 20

Time taken: 0.434 seconds, Fetched: 8 row(s)

hive> select * from emp limit 0,5;

OK

7369 smith clerk 7902 1980-12-17

800.0 NULL 20

7499 allen salesman 7698 1981-2-20

1600.0 300.0 30

7521 ward salesman 7698 1981-2-22

1250.0 500.0 30

7566 jones manager 7839 1981-4-2

2975.0 NULL 20

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

Time taken: 0.308 seconds, Fetched: 5 row(s)

hive> select * from emp limit 5,5;

OK

7698 blake manager 7839 1981-5-1

2850.0 NULL 30

7782 clark manager 7839 1981-6-9

2450.0 NULL 10

7788 scott analyst 7566 1987-4-19

3000.0 NULL 20

7839 king president NULL 1981-11-17

5000.0 NULL 10

7844 turner salesman 7698 1981-9-8

1500.0 0.0 30

Time taken: 0.293 seconds, Fetched: 5 row(s)

# limit语句和where语句可以一起使用,一起使用时,需要放在where语句的后面

hive> select * from emp where sal>1000 limit 5;

OK

7499 allen salesman 7698 1981-2-20

1600.0 300.0 30

7521 ward salesman 7698 1981-2-22

1250.0 500.0 30

7566 jones manager 7839 1981-4-2

2975.0 NULL 20

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

7698 blake manager 7839 1981-5-1

2850.0 NULL 30

Time taken: 0.307 seconds, Fetched: 5 row(s)

4.2.7 比较运算符

通常用在where语句、having语句、join … on

# 查询工资等于1600的

hive> select * from emp where sal = 1600;

OK

7499 allen salesman 7698 1981-2-20

1600.0 300.0 30

Time taken: 0.313 seconds, Fetched: 1 row(s)

# 查询工资 [1000,2000]所有雇员

hive> select * from emp where sal between 1000

and 2000;

OK

133

134

7499 allen salesman 7698 1981-2-20

1600.0 300.0 30

7521 ward salesman 7698 1981-2-22

1250.0 500.0 30

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

7844 turner salesman 7698 1981-9-8

1500.0 0.0 30

7876 adams clerk 7788 1987-5-23

1100.0 NULL 20

7934 miller clerk 7782 1982-1-23

1300.0 NULL 10

Time taken: 0.31 seconds, Fetched: 6 row(s)

# 查询工资不在 [1000,2000]区间的所有雇员 <1000 和

>2000的

hive> select * from emp where sal not between

1000 and 2000;

OK

7369 smith clerk 7902 1980-12-17

800.0 NULL 20

7566 jones manager 7839 1981-4-2

2975.0 NULL 20

7698 blake manager 7839 1981-5-1

2850.0 NULL 30

7782 clark manager 7839 1981-6-9

2450.0 NULL 10

7788 scott analyst 7566 1987-4-19

3000.0 NULL 20

7839 king president NULL 1981-11-17

5000.0 NULL 10

7900 james clerk 7698 1981-12-3

950.0 NULL 30

7902 ford analyst 7566 1981-12-3

3000.0 NULL 20

Time taken: 0.305 seconds, Fetched: 8 row(s)

#查询 comm列的值为null的

hive> select * from emp where comm is null;

OK

7369 smith clerk 7902 1980-12-17

800.0 NULL 20

7566 jones manager 7839 1981-4-2

2975.0 NULL 20

7698 blake manager 7839 1981-5-1

2850.0 NULL 30

7782 clark manager 7839 1981-6-9

2450.0 NULL 10

7788 scott analyst 7566 1987-4-19

3000.0 NULL 20

7839 king president NULL 1981-11-17

5000.0 NULL 10

7876 adams clerk 7788 1987-5-23

1100.0 NULL 20

7900 james clerk 7698 1981-12-3

950.0 NULL 30

7902 ford analyst 7566 1981-12-3

3000.0 NULL 20

7934 miller clerk 7782 1982-1-23

1300.0 NULL 10

Time taken: 0.335 seconds, Fetched: 10 row(s)

#查询 comm列的值不为null的

hive> select * from emp where comm is not null;

OK

7499 allen salesman 7698 1981-2-20

1600.0 300.0 30

136

7521 ward salesman 7698 1981-2-22

1250.0 500.0 30

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

7844 turner salesman 7698 1981-9-8

1500.0 0.0 30

# 查询工资1500或1600的所有雇员

hive> select * from emp where sal in

(1500,1600);

OK

7499 allen salesman 7698 1981-2-20

1600.0 300.0 30

7844 turner salesman 7698 1981-9-8

1500.0 0.0 30

like和rlike:模糊查询,查询指定列中包含关键字的。

通配符:

% 代表0个或多个任意字符

_ 代表1个任意字符

rlike:是hive中的一个扩展功能,可以通过正则表达式指定匹配的条件。

案例实战:

#查询名称以字符m开头的所有雇员

hive> select * from emp where ename like 'm%';

OK

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

7934 miller clerk 7782 1982-1-23

1300.0 NULL 10

137

Time taken: 0.282 seconds, Fetched: 2 row(s)

#查询名称中第二字符是m的所有雇员的信息

hive> select * from emp where ename like '_m%';

OK

7369 smith clerk 7902 1980-12-17

800.0 NULL 20

Time taken: 0.335 seconds, Fetched: 1 row(s)

# like查询名称中包含m的所有雇员的信息

hive> select * from emp where ename like '%m%';

OK

7369 smith clerk 7902 1980-12-17

800.0 NULL 20

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

7876 adams clerk 7788 1987-5-23

1100.0 NULL 20

7900 james clerk 7698 1981-12-3

950.0 NULL 30

7934 miller clerk 7782 1982-1-23

1300.0 NULL 10

Time taken: 0.293 seconds, Fetched: 5 row(s)

# rlike查询名称中包含m的所有雇员的信息

hive> select * from emp where ename rlike

'[m]';

OK

7369 smith clerk 7902 1980-12-17

800.0 NULL 20

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

7876 adams clerk 7788 1987-5-23

1100.0 NULL 20

7900 james clerk 7698 1981-12-3

950.0 NULL 30

7934 miller clerk 7782 1982-1-23

1300.0 NULL 10

Time taken: 0.262 seconds, Fetched: 5 row(s)

4.2.8 逻辑运算符

案例实战:

查询薪资大于1000,并且奖金大于等于500

hive> select * from emp where sal>1000 and comm >=500;

OK

7521 ward salesman 7698 1981-2-22

1250.0 500.0 30

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

查询薪资大于1000,或者奖金大于等于500

hive> select * from emp where sal>1000 or comm>=500;

OK

7499 allen salesman 7698 1981-2-20

1600.0 300.0 30

7521 ward salesman 7698 1981-2-22

1250.0 500.0 30

7566 jones manager 7839 1981-4-2

2975.0 NULL 20

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

7698 blake manager 7839 1981-5-1

2850.0 NULL 30

7782 clark manager 7839 1981-6-9

2450.0 NULL 10

7788 scott analyst 7566 1987-4-19

3000.0 NULL 20

7839 king president NULL 1981-11-17

5000.0 NULL 10

7844 turner salesman 7698 1981-9-8

1500.0 0.0 30

7876 adams clerk 7788 1987-5-23

1100.0 NULL 20

7902 ford analyst 7566 1981-12-3

3000.0 NULL 20

7934 miller clerk 7782 1982-1-23

1300.0 NULL 10

查询除了10和30部门下的所有雇员

4.3 分组

4.3.1 group by语句

group by通常和聚合函数一起使用,按照一个或者多个列进行分组,然后在对每组数据进行聚合操作。

案例实战:

查询每个部门的平均工资、最高工资、最低工资

hive> select deptno,avg(sal) avg_sal,max(sal)

max_sal,min(sal) min_sal from emp group by

deptno;

Query ID = root_20211115150801_985c1ac7-9260-

423b-a9b0-72eae86b3b92

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified. Estimated

from input data size: 1

In order to change the average load for a

reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

<number>

In order to limit the maximum number of

reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1636950452604_0003, Tracking

URL =

http://node4:8088/proxy/application_16369504526

04_0003/

Kill Command = /opt/hadoop-3.1.3/bin/mapred job

-kill job_1636950452604_0003

Hadoop job information for Stage-1: number of

mappers: 1; number of reducers: 1

10 2916.6666666666665 5000.0 1300.0

20 2175.0 3000.0 800.0

30 1566.6666666666667 2850.0 950.0

使用group by语句后,select语句中执行出现group by语句中的列名和聚合函数

hive> select deptno,avg(sal) avg_sal,max(sal) max_sal,min(sal) min_sal,ename from emp group by deptno;

FAILED: SemanticException [Error 10025]: Line

1:65 Expression not in GROUP BY key 'ename'

4.3.2 having语句

where语句中不能出现聚合函数,或者通过聚合函数计算的结果。

having语句只能用在group by语句的后面,在一个没有group by语句的sql中不能出现having语句。

案例实战:

查询每个部门平均薪资大于1800的部门的平均工资、最高工资、最低工资。

hive> select deptno,avg(sal) avg_sal,max(sal)

max_sal,min(sal) min_sal from emp group by

deptno where avg_sal>1800;

FAILED: ParseException line 1:90 missing EOF at

'where' near 'deptno'

hive> select deptno,avg(sal) avg_sal,max(sal)

max_sal,min(sal) min_sal from emp group by

deptno having avg_sal>1800;

Query ID = root_20211115151258_0b1728f2-27f6-

4b33-84aa-15a93421212b

Total jobs = 1

Launching Job 1 out of 1

4.4 关联查询

4.4.1 表别名

好处:简化查询和提交查询效率

案例实战:

hive> select e.empno,e.ename,d.deptno,d.dname

> from emp e,dept as d

> where e.deptno = d.deptno;

Query ID = root_20211115153044_53c8363f-fe06-

40ec-95ab-4d85e4c0d521

Total jobs = 1

Execution completed successfully

7369 smith 20 research

7499 allen 30 sales

7521 ward 30 sales

7566 jones 20 research

7654 martin 30 sales

7698 blake 30 sales

7782 clark 10 accounting

7788 scott 20 research

7839 king 10 accounting

7844 turner 30 sales

7876 adams 20 research

7900 james 30 sales

7902 ford 20 research

7934 miller 10 accounting

4.4.2 笛卡尔积

hive> select e.empno,e.ename,d.deptno,d.dname

> from emp e,dept as d;

Warning: Map Join MAPJOIN[9][bigTable=?] in

task 'Stage-3:MAPRED' is a cross product

Query ID = root_20211115153651_bd271024-3bd7-

4355-acad-2c74042c5938

Total jobs = 1

Execution completed successfully

7900 james 10 accounting

7900 james 20 research

7900 james 30 sales

7900 james 40 operations

7902 ford 10 accounting

7902 ford 20 research

7902 ford 30 sales

7902 ford 40 operations

7934 miller 10 accounting

7934 miller 20 research

7934 miller 30 sales

7934 miller 40 operations

Time taken: 43.827 seconds, Fetched: 56 row(s)

笛卡尔积:在省略了连接条件或连接条件无效是会出现笛卡尔积。

编写sql时要避免出现笛卡尔积。将第一个表的每一条数据和第二表中的数据依次连接,第一个表中m行数据,第二表中有n行数据,笛卡尔积的行数为m*n行。

4.4.3 join语句

- 内连接

只有进行连接的两张表的数据都存在连接条件相匹配时才会查询出来。

hive> select e.empno,e.ename,d.dname from

emp e join dept d on e.deptno=d.deptno;

Query ID = root_20211118102413_dda90f63-

c584-4d88-997c-318ddfd18b6b

Total jobs = 1

7369 smith research

7499 allen sales

7521 ward sales

7566 jones research

7654 martin sales

7698 blake sales

7782 clark accounting

7788 scott research

7839 king accounting

7844 turner sales

7876 adams research

7900 james sales

7902 ford research

7934 miller accounting

- 右外连接

右外连接:join关键字右边的表所有的记录都会返回。

hive>select e.empno,e.ename,d.dname from

emp e right join dept d on

e.deptno=d.deptno;

7782 clark accounting

7839 king accounting

7934 miller accounting

7369 smith research

7566 jones research

7788 scott research

7876 adams research

7902 ford research

7499 allen sales

7521 ward sales

7654 martin sales

7698 blake sales

7844 turner sales

7900 james sales

NULL NULL operations #使用null替代

- 左外连接

左外连接:join关键字左边的表所有的记录都会返回。

hive>select e.empno,e.ename,d.dname from

emp e left join dept d on

e.deptno=d.deptno;

- 全外连接

hive>select e.empno,e.ename,d.dname from

emp e full join dept d on

e.deptno=d.deptno;

第一部分:两张有对应关联关系数据。

第二部分:左表中在右边表找不到匹配的数据。

第三部分:右表中在左边表找不到匹配的数据

4.5 排序

4.5.1 order by

全局排序,hql转换后的mr左右只有一个reduce任务。当数据量比较大时order by就要慎用,很有可能导致reduce需要较长的时间才能完成,或者完不成。

格式: order by 字段名 [asc|desc]

默认是asc 升序,desc表示降序

位置: order by语句通常防止hql语句的最后。

hive> select * from emp order by sal;

Query ID = root_20211118103903_c519efbd-9615-

431b-9a56-730616a097ba

Total jobs = 1

......

Hadoop job information for Stage-1: number of

mappers: 1; number of reducers: 1

......

7369 smith clerk 7902 1980-12-17

800.0 NULL 20

7900 james clerk 7698 1981-12-3

950.0 NULL 30

7876 adams clerk 7788 1987-5-23

1100.0 NULL 20

7521 ward salesman 7698 1981-2-22

1250.0 500.0 30

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

7934 miller clerk 7782 1982-1-23

1300.0 NULL 10

7844 turner salesman 7698 1981-9-8

1500.0 0.0 30

7499 allen salesman 7698 1981-2-20

1600.0 300.0 30

7782 clark manager 7839 1981-6-9

2450.0 NULL 10

7698 blake manager 7839 1981-5-1

2850.0 NULL 30

7566 jones manager 7839 1981-4-2

2975.0 NULL 20

148

7788 scott analyst 7566 1987-4-19

3000.0 NULL 20

7902 ford analyst 7566 1981-12-3

3000.0 NULL 20

7839 king president NULL 1981-11-17

5000.0 NULL 10

Time taken: 33.587 seconds, Fetched: 14 row(s)

# 可以使用列的别名进行排序

hive> select empno,ename,sal*12 year_sal from

emp order by year_sal;

7369 smith 9600.0

7900 james 11400.0

7876 adams 13200.0

7521 ward 15000.0

7654 martin 15000.0

......

# 多列排序

hive> select empno,ename,deptno,sal from emp

order by deptno,sal;

7934 miller 10 1300.0

7782 clark 10 2450.0

7839 king 10 5000.0

7369 smith 20 800.0

7876 adams 20 1100.0

7566 jones 20 2975.0

7788 scott 20 3000.0

7902 ford 20 3000.0

7900 james 30 950.0

7654 martin 30 1250.0

7521 ward 30 1250.0

7844 turner 30 1500.0

7499 allen 30 1600

7698 blake 30 2850.0

#先按照部门编号从小到大排序,部门相同时,在按照sal从小到大排序

hive> select empno,ename,deptno,sal from emp

order by sal,deptno;

7369 smith 20 800.0

7900 james 30 950.0

7876 adams 20 1100.0

7521 ward 30 1250.0

7654 martin 30 1250.0

7934 miller 10 1300.0

7844 turner 30 1500.0

7499 allen 30 1600.0

7782 clark 10 2450.0

7698 blake 30 2850.0

7566 jones 20 2975.0

7788 scott 20 3000.0

7902 ford 20 3000.0

7839 king 10 5000.0

#先按照sal从小到大排序,sal相同时,在按照deptno从小到大排序

4.5.2 sort by

**sort by作用:**在每一个reduce task任务内部排序,在大量数据集时使用order by存在着效率低下的问题,很多场景中并不需要全局排序。

每个reduce任务都会对应的结果文件part-r-xxxxxx,在每一个结果文件中都是有序的,全局是无序的。

通过set命令设置reduce任务的数量,有效期是直到下次修改该参数的值或hive连接关闭:

# set 参数=value; 设置参数的值

hive> set mapreduce.job.reduces=3;

# set 参数; 查看reduce产生的值

hive> set mapreduce.job.reduces;

mapreduce.job.reduces=3

hive> select * from emp sort by deptno desc;

Query ID = root_20211118105647_6964a47b-fc4b-4907-8f35-ea3c9465ed59

Total jobs = 1

Launching Job 1 out of 1

......

Hadoop job information for Stage-1: number of

mappers: 1; number of reducers: 3

7844 turner salesman 7698 1981-9-8

1500.0 0.0 30

7698 blake manager 7839 1981-5-1

2850.0 NULL 30

7654 martin salesman 7698 1981-9-28

1250.0 1400.0 30

7788 scott analyst 7566 1987-4-19

3000.0 NULL 20

7839 king president NULL 1981-11-17

5000.0 NULL 10

7782 clark manager 7839 1981-6-9

2450.0 NULL 10

7521 ward salesman 7698 1981-2-22

1250.0 500.0 30

7499 allen salesman 7698 1981-2-20

1600.0 300.0 30

7900 james clerk 7698 1981-12-3

950.0 NULL 30

7876 adams clerk 7788 1987-5-23

1100.0 NULL 20

7566 jones manager 7839 1981-4-2

2975.0 NULL 20

7934 miller clerk 7782 1982-1-23

1300.0 NULL 10

7902 ford analyst 7566 1981-12-3

3000.0 NULL 20

7369 smith clerk 7902 1980-12-17

800.0 NULL 20

结果不够直观,将之后的结果文件下载到本地。

hive>insert overwrite local directory

'/opt/sortbyresult'

select * from emp sort by deptno desc;

node4查看文件:

[root@node4 ~]# cd /opt/sortbyresult/

[root@node4 sortbyresult]# pwd

/opt/sortbyresult

[root@node4 sortbyresult]# ls

000000_0 000001_0 000002_0

[root@node4 sortbyresult]# ll

总用量 12

-rw-r--r-- 1 root root 288 11月 18 11:01

000000_0

-rw-r--r-- 1 root root 282 11月 18 11:01

000001_0

-rw-r--r-- 1 root root 91 11月 18 11:01

000002_0

[root@node4 sortbyresult]# cat -A 000000_0

7844^Aturner^Asalesman^A7698^A1981-9-

8^A1500.0^A0.0^A30$

7698^Ablake^Amanager^A7839^A1981-5-

1^A2850.0^A\N^A30$

7654^Amartin^Asalesman^A7698^A1981-9-

28^A1250.0^A1400.0^A30$

7788^Ascott^Aanalyst^A7566^A1987-4-

19^A3000.0^A\N^A20$

7839^Aking^Apresident^A\N^A1981-11-

17^A5000.0^A\N^A10$

7782^Aclark^Amanager^A7839^A1981-6-

9^A2450.0^A\N^A10$

[root@node4 sortbyresult]# cat -A 000001_0

7521^Award^Asalesman^A7698^A1981-2-

22^A1250.0^A500.0^A30$

7499^Aallen^Asalesman^A7698^A1981-2-

20^A1600.0^A300.0^A30$

7900^Ajames^Aclerk^A7698^A1981-12-

3^A950.0^A\N^A30$

7876^Aadams^Aclerk^A7788^A1987-5-

23^A1100.0^A\N^A20$

7566^Ajones^Amanager^A7839^A1981-4-

2^A2975.0^A\N^A20$

7934^Amiller^Aclerk^A7782^A1982-1-

23^A1300.0^A\N^A10$

[root@node4 sortbyresult]# cat -A 000002_0

7902^Aford^Aanalyst^A7566^A1981-12-3^A3000.0^A\N^A20$

7369^Asmith^Aclerk^A7902^A1980-12-17^A800.0^A\N^A20$

4.5.3 distribute by 分区

**distribute by:**对应MR作业的partition(自定义分区),通常结合sort by一起使用。在某些情况下需要控制特定的行应该到哪个reduce任务中,为了后续的聚合操作。分区有对应reduce任务,有几个分区就有几个reduce任务;否则就看不到distribute by的效果。

实战演示:

先按照部门编号分区,再按照雇员编号的降序排序

hive> set mapreduce.job.reduces=4;

hive> insert overwrite local directory

'/opt/distributebyresult'

select * from emp distribute by deptno sort by

empno desc;

Query ID = root_20211118111723_4afc790e-a7e8-

4d5c-ba7a-8d790a379ea5

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks not specified.

Defaulting to jobconf value of: 4

......

Hadoop job information for Stage-1: number of

mappers: 1; number of reducers: 4

在node4中查看结果文件:

[root@node4 distributebyresult]# pwd

/opt/distributebyresult

[root@node4 distributebyresult]# ll

总用量 8

-rw-r--r-- 1 root root 229 11月 18 11:18

000000_0

-rw-r--r-- 1 root root 0 11月 18 11:18

000001_0

-rw-r--r-- 1 root root 432 11月 18 11:18

000002_0

-rw-r--r-- 1 root root 0 11月 18 11:18

000003_0

[root@node4 distributebyresult]# cat -A

000000_0

7902^Aford^Aanalyst^A7566^A1981-12-

3^A3000.0^A\N^A20$

7876^Aadams^Aclerk^A7788^A1987-5-

23^A1100.0^A\N^A20$

7788^Ascott^Aanalyst^A7566^A1987-4-

19^A3000.0^A\N^A20$

7566^Ajones^Amanager^A7839^A1981-4-

2^A2975.0^A\N^A20$

7369^Asmith^Aclerk^A7902^A1980-12-

17^A800.0^A\N^A20$

[root@node4 distributebyresult]# cat -A

000001_0

[root@node4 distributebyresult]# cat -A

000002_0

7934^Amiller^Aclerk^A7782^A1982-1-

23^A1300.0^A\N^A10$

7900^Ajames^Aclerk^A7698^A1981-12-

3^A950.0^A\N^A30$

7844^Aturner^Asalesman^A7698^A1981-9-

8^A1500.0^A0.0^A30$

7839^Aking^Apresident^A\N^A1981-11-

17^A5000.0^A\N^A10$

7782^Aclark^Amanager^A7839^A1981-6-

9^A2450.0^A\N^A10$

7698^Ablake^Amanager^A7839^A1981-5-

1^A2850.0^A\N^A30$

7654^Amartin^Asalesman^A7698^A1981-9-

28^A1250.0^A1400.0^A30$

7521^Award^Asalesman^A7698^A1981-2-

22^A1250.0^A500.0^A30$

7499^Aallen^Asalesman^A7698^A1981-2-

20^A1600.0^A300.0^A30$

[root@node4 distributebyresult]# cat -A

000003_0

distribute by分区规则是根据分区字段的hash值与分区数(reduce任务的总数)进行除模后,余数相同的分到一个分区中。

要求:distribute by语句写在sort by语句的前面。

4.5.4 cluster by

当distribute by和sort by后面的字段相同时,可以使用cluster by进行简化。功能是等价的;但是只能使用升序排序,不能指定排序规则为asc或者desc。

distribute by是分区;sort by是排序

hive>select * from emp distribute by deptno sort by deptno;

#可以简化为

hive>select * from emp cluster by deptno;

hive>insert overwrite local directory

'/opt/clusterbyresult'

select * from emp cluster by deptno;

按照部门的编号进行分区,在reduce任务内部再按照部门的编号进行升序排序。使用部门编号求hash值%分区的数量 取余数,结果相同的数据被分到一个分区中。

node4上查看结果:

[root@node4 distributebyresult]# cd

/opt/clusterbyresult/

[root@node4 clusterbyresult]# ll

总用量 8

-rw-r--r-- 1 root root 229 11月 18 11:28

000000_0

-rw-r--r-- 1 root root 0 11月 18 11:28

000001_0

-rw-r--r-- 1 root root 432 11月 18 11:28

000002_0

-rw-r--r-- 1 root root 0 11月 18 11:28

000003_0

[root@node4 clusterbyresult]# cat -A 000000_0

7902^Aford^Aanalyst^A7566^A1981-12-

3^A3000.0^A\N^A20$

7788^Ascott^Aanalyst^A7566^A1987-4-

19^A3000.0^A\N^A20$

7566^Ajones^Amanager^A7839^A1981-4-

2^A2975.0^A\N^A20$

7876^Aadams^Aclerk^A7788^A1987-5-

23^A1100.0^A\N^A20$

7369^Asmith^Aclerk^A7902^A1980-12-

17^A800.0^A\N^A20$

[root@node4 clusterbyresult]# cat -A 000002_0

7934^Amiller^Aclerk^A7782^A1982-1-

23^A1300.0^A\N^A10$

7839^Aking^Apresident^A\N^A1981-11-

17^A5000.0^A\N^A10$

7782^Aclark^Amanager^A7839^A1981-6-

9^A2450.0^A\N^A10$

7698^Ablake^Amanager^A7839^A1981-5-

1^A2850.0^A\N^A30$

7654^Amartin^Asalesman^A7698^A1981-9-

28^A1250.0^A1400.0^A30$

7900^Ajames^Aclerk^A7698^A1981-12-

3^A950.0^A\N^A30$

7521^Award^Asalesman^A7698^A1981-2-

22^A1250.0^A500.0^A30$

7499^Aallen^Asalesman^A7698^A1981-2-

20^A1600.0^A300.0^A30$

7844^Aturner^Asalesman^A7698^A1981-9-

8^A1500.0^A0.0^A30$

4.6 基站掉话率分析实战

需求:找出掉话率最高的前10基站

创建原始数据表:

create table jizhan(

record_time string,

imei int,

cell string,

ph_num int,

call_num int,

drop_num int,

duration int,

drop_rate double,

net_type string,

erl int)

row format delimited fields terminated by ',';

字段描述

record_time:通话时间

imei:基站编号

cell:手机编号

drop_num:掉话的秒数

duration:通话持续总秒数

创建结果表:

create table jizhan_result(

imei string,

drop_num int,

duration int,

drop_rate double

);

首先将软件/data/cdr_summ_imei_cell_info.csv上传到node4上/root/data目录下,将第一行的表头数据删除

然后load到hive的jizhan表中

hive> load data local inpath

'/root/data/cdr_summ_imei_cell_info.csv' into

table jizhan;

Loading data to table default.jizhan

OK

Time taken: 2.734 seconds

hive> select * from jizhan limit 10;

OK

2011-07-13 00:00:00+08 356966 29448-37062 0

0 0 0 0.0 0

2011-07-13 00:00:00+08 352024 29448-51331 0

0 0 0 0.0 0

2011-07-13 00:00:00+08 353736 29448-51331 0

0 0 0 0.0 0

2011-07-13 00:00:00+08 353736 29448-51333 0

0 0 0 0.0 0

2011-07-13 00:00:00+08 351545 29448-51333 0

0 0 0 0.0 0

2011-07-13 00:00:00+08 353736 29448-51343 1

0 0 8 0.0 0

2011-07-13 00:00:00+08 359681 29448-51462 0

0 0 0 0.0 0

2011-07-13 00:00:00+08 354707 29448-51462 0

0 0 0 0.0 0

2011-07-13 00:00:00+08 356137 29448-51470 0

0 0 0 0.0 0

2011-07-13 00:00:00+08 352739 29448-51971 0

0 0 0 0.0 0

hive> select count(*) from jizhan;

976305

编写分析的sql语句

select imei,sum(drop_num) sdrop,sum(duration) sdura,sum(drop_num)/sum(duration) drop_rate

from jizhan

group by imei

order by drop_rate desc;

将分析的结果写入到jizhan_result表中:

from jizhan

insert into jizhan_result

select imei,sum(drop_num) sdrop,sum(duration) sdura,sum(drop_num)/sum(duration) drop_rate

group by imei

order by drop_rate desc;

查询结果表,获取前10条数据,也就掉话率最高的前10个基站:

hive> select * from jizhan_result limit 10;

OK

639876 1 734 0.0013623978201634877

356436 1 1028 9.727626459143969E-4

351760 1 1232 8.116883116883117E-4

368883 1 1448 6.906077348066298E-4

358849 1 1469 6.807351940095302E-4

358231 1 1613 6.199628022318661E-4

863738 2 3343 5.982650314089142E-4

865011 1 1864 5.36480686695279E-4

862242 1 1913 5.227391531625719E-4

350301 2 3998 5.002501250625312E-4

1650

1650

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?