Kubernetes

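Introduction to Kubernetes

Kubernetes is an open-source container orchestration engine originally developed at Google and released as an open-source project in 2014. It automates the deployment, scaling, and management of containerized applications across private, public, and hybrid clouds. Building on container technology such as Docker, it provides deployment, resource scheduling, service discovery, and dynamic scaling for containerized applications, which makes large container clusters much easier to manage.

Its main features include automated container deployment and scaling, container health checks, automatic failure recovery, horizontal autoscaling, service discovery, and load balancing. It also exposes a rich API for managing and monitoring containerized applications.

Kubernetes Concepts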

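- Master: the cluster's control node. Every cluster needs at least one master node to manage the cluster.
- Node: a worker node. The master assigns containers to these nodes, and the container runtime on each node (Docker in this description) runs them.
- Pod: the smallest unit Kubernetes manages. Containers always run inside a Pod, and a Pod can hold one or more containers.
- Controller: manages Pods, for example starting them, stopping them, and scaling their number.
- Service: a single entry point through which Pods are exposed; one Service can front multiple Pods of the same kind.
- Label: a tag used to classify Pods; Pods of the same kind carry the same labels.
- Namespace: isolates the environments in which Pods run.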

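After the cluster starts, Kubernetes creates several namespaces by default: default, kube-node-lease, kube-public, and kube-system.

By default, all Pods in a Kubernetes cluster can reach one another. Assigning resources to different namespaces forms logical groups, so that each group's resources can be used and managed separately (names, quotas, and access control are scoped per namespace). Moving two Pods into different namespaces does not by itself block traffic between them; restricting Pod-to-Pod traffic additionally requires a NetworkPolicy that the cluster's network plugin enforces.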
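Namespaces group resources logically, but blocking traffic between Pods needs a NetworkPolicy on top. The following is a minimal sketch of how a namespace and a default-deny ingress policy for it could be generated. The function name `render_default_deny`, the namespace `dev`, and the policy name `default-deny-ingress` are illustrative assumptions, and the policy only has an effect when the network plugin enforces NetworkPolicy (flannel on its own does not).

```shell
# Print a Namespace manifest plus a default-deny-ingress NetworkPolicy for it.
# "render_default_deny" is a hypothetical helper name used only in this sketch.
render_default_deny() {
  local ns="$1"
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: ${ns}
---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: ${ns}
spec:
  podSelector: {}
  policyTypes:
  - Ingress
EOF
}
```

On a working cluster the output could be applied with `render_default_deny dev | kubectl apply -f -`. The empty `podSelector` selects every Pod in the namespace, and listing `Ingress` without any rules denies all inbound traffic to them.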

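Kubernetes Components

master: the cluster's control plane, responsible for cluster-wide decisions

- ApiServer: the single entry point for operating on resources. It receives user commands and provides authentication, authorization, API registration, and discovery.
- Scheduler: schedules cluster resources, placing Pods onto nodes according to the configured scheduling policy.
- ControllerManager: maintains cluster state, including deployment orchestration, failure detection, autoscaling, and rolling updates.
- Etcd: a key-value store that holds the information for every resource object; all k8s cluster data lives here.
- kubectl: the command-line configuration tool.

node: the cluster's data plane, responsible for providing the runtime environment for containers

- Kubelet: manages the container lifecycle, creating, updating, and destroying containers through the container runtime (Docker in this description). It also checks node health at a fixed interval and reports it to the APIServer, which records it in the status of the Node object.
- KubeProxy: provides in-cluster service discovery and load balancing. It mainly serves Services, handling internal access from Pods to a Service and external access from a NodePort to a Service.
- Docker: performs the container operations on the node.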

Deploying Kubernetes

Environment Preparation

| Hostname | IP address | Installed components |
|---|---|---|
| controller | 192.168.200.10 | docker, kubeadm, kubelet |
| node1 | 192.168.200.20 | docker, kubeadm, kubelet |
| node2 | 192.168.200.30 | docker, kubeadm, kubelet |

Preparation:

Disable the firewall and SELinux (on all hosts)

[root@controller ~]# systemctl disable --now firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@controller ~]#

[root@controller ~]# setenforce 0

[root@controller ~]#

[root@controller ~]# sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

[root@controller ~]#

Configure the required yum repositories (on all hosts)

[root@controller ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-vault-8.5.2111.repo

[root@controller ~]#

[root@controller ~]# curl -o /etc/yum.repos.d/docker-ce.repo https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/centos/docker-ce.repo

[root@controller ~]# sed -i 's@https://download.docker.com@https://mirrors.tuna.tsinghua.edu.cn/docker-ce@g' /etc/yum.repos.d/docker-ce.repo

[root@controller ~]#

[root@controller ~]# vi /etc/yum.repos.d/kubernetes.repo

[root@controller ~]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

[root@controller ~]#

[root@controller ~]# ls /etc/yum.repos.d/

CentOS-Base.repo docker-ce.repo kubernetes.repo

[root@controller ~]#

Disable swap: comment out the swap line in /etc/fstab, then reboot (on all hosts)

[root@controller ~]# vi /etc/fstab

[root@controller ~]#

[root@controller ~]# cat /etc/fstab

...

UUID=dbe042c2-a785-4b94-a4e9-dc50dd80ef1a /boot xfs defaults 0 0

#/dev/mapper/cs-swap none swap defaults 0 0

[root@controller ~]# reboot
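The manual fstab edit above can also be scripted, which helps when the same change has to be repeated on three hosts. This is a minimal sketch, assuming swap entries are uncommented lines with `swap` as a whitespace-delimited field; the function name `comment_out_swap` is hypothetical.

```shell
# Comment out every active swap entry in an fstab-style file.
# A backup of the original is kept next to it with a .bak suffix.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Match lines that do not start with '#' and contain a "swap" field,
  # then prefix them with '#'. Already-commented lines are left alone.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

Run as root with no argument, it edits `/etc/fstab`. Following it with `swapoff -a` turns swap off in the running system as well, as an alternative to the reboot used above.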

Map hostnames to IP addresses (on all hosts)

[root@controller ~]# vi /etc/hosts

[root@controller ~]#

[root@controller ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.200.10 controller

192.168.200.20 node1

192.168.200.30 node2

[root@controller ~]#

Pass bridged IPv4 traffic to the iptables chains (on all hosts, since every node that runs kubelet and kube-proxy needs these settings):

[root@controller ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

[root@controller ~]#

[root@controller ~]# sysctl --system

...

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.ipv4.ip_forward = 1

* Applying /etc/sysctl.conf ...

[root@controller ~]#
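The `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded; in the output above only `net.ipv4.ip_forward` is listed under k8s.conf, which is what one would expect if the module was not loaded at that point. The sketch below writes both the module list and the sysctl file. The function name `write_k8s_kernel_config` and its optional root-prefix argument are assumptions made for illustration and testing.

```shell
# Write the kernel module list and the sysctl settings used in this walkthrough.
# The optional first argument is a root prefix (hypothetical, handy for dry runs);
# leave it empty to write under /etc.
write_k8s_kernel_config() {
  local root="${1:-}"
  mkdir -p "$root/etc/modules-load.d" "$root/etc/sysctl.d"
  # br_netfilter provides the net.bridge.* sysctl keys and is loaded at boot
  # from modules-load.d.
  printf '%s\n' br_netfilter > "$root/etc/modules-load.d/k8s.conf"
  cat > "$root/etc/sysctl.d/k8s.conf" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

As root, `write_k8s_kernel_config && modprobe br_netfilter && sysctl --system` would write the files, load the module immediately, and apply the settings.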

Configure time synchronization (on all hosts)

On controller, synchronize against the Alibaba Cloud NTP server

[root@controller ~]# yum -y install chrony

[root@controller ~]# systemctl enable --now chronyd

[root@controller ~]#

[root@controller ~]# vi /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

pool time1.aliyun.com iburst

...

[root@controller ~]#

[root@controller ~]# systemctl restart chronyd

[root@controller ~]#

[root@controller ~]# timedatectl set-timezone Asia/Shanghai

[root@controller ~]# chronyc sources

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^* 203.107.6.88 2 6 17 32 +12ms[ +12ms] +/- 34ms

[root@controller ~]#

On node1 and node2, configure time synchronization by changing the pool entry to controller's hostname

[root@node1 ~]# yum -y install chrony

[root@node1 ~]# systemctl enable --now chronyd

[root@node1 ~]#

[root@node1 ~]# vi /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

pool controller iburst

...

[root@node1 ~]#

[root@node1 ~]# systemctl restart chronyd

[root@node1 ~]#

[root@node1 ~]# chronyc sources

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^? controller 0 6 0 - +0ns[ +0ns] +/- 0ns

[root@node1 ~]#
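The `^?` marker and a Reach of 0 in the output above mean node1 has not yet received a usable reply from controller. chronyd only answers NTP clients that an `allow` directive in its configuration permits, so controller's `/etc/chrony.conf` needs one for the cluster subnet. A minimal sketch follows; the function name `allow_chrony_clients` is hypothetical, and the subnet `192.168.200.0/24` is an assumption inferred from the host table.

```shell
# Append an "allow <subnet>" directive to a chrony config unless it is already there.
allow_chrony_clients() {
  local subnet="$1"
  local conf="${2:-/etc/chrony.conf}"
  # -F: fixed string, -x: whole-line match, so dots in the subnet are literal.
  grep -Fxq "allow $subnet" "$conf" || printf 'allow %s\n' "$subnet" >> "$conf"
}
```

On controller this could be used as `allow_chrony_clients 192.168.200.0/24 && systemctl restart chronyd`; after a short wait, `chronyc sources` on the nodes should show `^*` next to controller.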

Configure passwordless SSH login

[root@controller ~]# ssh-keygen -t rsa

[root@controller ~]# ssh-copy-id root@192.168.200.10

[root@controller ~]# ssh-copy-id root@192.168.200.20

[root@controller ~]# ssh-copy-id root@192.168.200.30

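Install Docker, kubeadm, and kubelet on all nodes

Kubernetes drives containers through the CRI (Container Runtime Interface). Built-in Docker support (dockershim) was removed in v1.24, so the v1.28 cluster built here uses containerd as its runtime. The docker-ce package installs containerd.io as a dependency, so Docker is installed first.

Install Docker (on all hosts)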

[root@controller ~]# yum -y install docker-ce

[root@controller ~]# systemctl enable --now docker

Created symlink /etc/systemd/system/multi-user.target.wants/docker.service → /usr/lib/systemd/system/docker.service.

[root@controller ~]#

Configure a Docker registry mirror (on all hosts)

[root@controller ~]# vi /etc/docker/daemon.json

[root@controller ~]#

[root@controller ~]# cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]

}

[root@controller ~]#

[root@controller ~]# systemctl restart docker

Install kubeadm, kubelet, and kubectl

Install kubeadm, kubelet, and kubectl on all hosts and enable kubelet at boot, but do not start the service yet.

[root@controller ~]# yum -y install kubelet kubectl kubeadm

[root@controller ~]# systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

[root@controller ~]#

[root@controller ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: inactive (dead)

Docs: https://kubernetes.io/docs/

[root@controller ~]#

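Configure containerd

For cluster initialization and node joins to succeed later, edit containerd's configuration file, /etc/containerd/config.toml, so that the sandbox (pause) image is pulled from a reachable registry.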

[root@controller ~]# containerd config default > /etc/containerd/config.toml

[root@controller ~]#

[root@controller ~]# vi /etc/containerd/config.toml

...

sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.6"

...

[root@controller ~]#

[root@controller ~]# systemctl restart containerd

[root@controller ~]# systemctl enable containerd

Created symlink /etc/systemd/system/multi-user.target.wants/containerd.service → /usr/lib/systemd/system/containerd.service.

[root@controller ~]#
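The `sandbox_image` edit made in vi above can be expressed as a one-line substitution, which is easier to repeat or review. This is a sketch under the assumption that the generated config.toml contains exactly one `sandbox_image = "..."` line; the function name `set_sandbox_image` is hypothetical.

```shell
# Point containerd's sandbox (pause) image at the given image reference.
# A backup of the original file is kept with a .bak suffix.
set_sandbox_image() {
  local image="$1"
  local conf="${2:-/etc/containerd/config.toml}"
  # Keep the indentation and the "sandbox_image =" prefix, replace only the value.
  sed -i.bak -E "s|^([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*).*|\1\"${image}\"|" "$conf"
}
```

For the value used here, the call would be `set_sandbox_image registry.aliyuncs.com/google_containers/pause:3.6`, followed by `systemctl restart containerd`. The Kubernetes container runtime documentation also recommends `SystemdCgroup = true` under the runc options when the kubelet uses the systemd cgroup driver; that change is not part of this walkthrough.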

Copy the modified configuration file to node1 and node2, then restart containerd on both hosts and enable it at boot.

[root@controller ~]# cd /etc/containerd/

[root@controller containerd]#

[root@controller containerd]# scp config.toml 192.168.200.20:/etc/containerd/

config.toml 100% 6996 2.0MB/s 00:00

[root@controller containerd]#

[root@controller containerd]# scp config.toml 192.168.200.30:/etc/containerd/

config.toml 100% 6996 1.5MB/s 00:00

[root@controller containerd]#

[root@node1 ~]# systemctl restart containerd

[root@node1 ~]# systemctl enable containerd

[root@node2 ~]# systemctl restart containerd

[root@node2 ~]# systemctl enable containerd

Deploy the Kubernetes Master

Run the following on controller

Initialize the cluster

[root@controller containerd]# kubeadm init \

--apiserver-advertise-address=192.168.200.10 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.28.2 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16

...

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Pulling images required for setting up a Kubernetes cluster

Your Kubernetes control-plane has initialized successfully!

...

kubeadm join 192.168.200.10:6443 --token jrf9rj.68hf330j5b4b493n \

--discovery-token-ca-cert-hash sha256:75bc31eb21f696ae412ef7451d610081f6d527ab0ae1d8c4b5e412c5cf67f6a6

[root@controller containerd]#

Save the initialization output, starting from "To start using", to a file; it contains the steps that follow.

[root@controller containerd]# vi init

[root@controller containerd]#

[root@controller containerd]# cat init

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.200.10:6443 --token jrf9rj.68hf330j5b4b493n \

--discovery-token-ca-cert-hash sha256:75bc31eb21f696ae412ef7451d610081f6d527ab0ae1d8c4b5e412c5cf67f6a6

[root@controller containerd]#
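Since the join command is needed again on each worker, it can be pulled back out of the saved file instead of being copied by hand. A minimal sketch, assuming the file has the layout shown above (a `kubeadm join` line ending in a backslash, continued on the next non-empty line); the function name `extract_join_command` is hypothetical.

```shell
# Print the "kubeadm join ..." command from saved kubeadm init output,
# with backslash continuations folded into a single line.
extract_join_command() {
  local file="$1"
  awk '
    /^[ \t]*kubeadm join/ { grab = 1 }
    grab && NF {
      cont = ($0 ~ /\\[ \t]*$/)        # does this line end with a backslash?
      sub(/[ \t]*\\[ \t]*$/, "")       # strip the continuation marker
      sub(/^[ \t]+/, "")               # strip leading indentation
      cmd = (cmd == "" ? $0 : cmd " " $0)
      if (!cont) { print cmd; exit }
    }
  ' "$file"
}
```

For example, `extract_join_command /etc/containerd/init` prints the full command on one line. Bootstrap tokens expire (24 hours by default), and `kubeadm token create --print-join-command` on controller generates a fresh join command once the saved one no longer works.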

Add the environment variable

[root@controller ~]# export KUBECONFIG=/etc/kubernetes/admin.conf

[root@controller ~]# echo 'export KUBECONFIG=/etc/kubernetes/admin.conf' > /etc/profile.d/k8s.sh

[root@controller ~]#

[root@controller ~]# echo $KUBECONFIG

/etc/kubernetes/admin.conf

[root@controller ~]#

Deploy flannel

Install the Pod network plugin by applying the flannel manifest

[root@controller ~]# wget https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

[root@controller ~]# ls

anaconda-ks.cfg kube-flannel.yml

[root@controller ~]#

[root@controller ~]# kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

serviceaccount/flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

[root@controller ~]#

Check the status

[root@controller ~]# kubectl get -f kube-flannel.yml

NAME STATUS AGE

namespace/kube-flannel Active 4m54s

NAME SECRETS AGE

serviceaccount/flannel 0 4m54s

NAME CREATED AT

clusterrole.rbac.authorization.k8s.io/flannel 2023-11-16T13:47:51Z

NAME ROLE AGE

clusterrolebinding.rbac.authorization.k8s.io/flannel ClusterRole/flannel 4m54s

NAME DATA AGE

configmap/kube-flannel-cfg 2 4m54s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/kube-flannel-ds 1 1 0 1 0 <none> 4m54s

[root@controller ~]#

Join node1 and node2 to the cluster (run on node1 and node2)

[root@node1 ~]# kubeadm join 192.168.200.10:6443 --token jrf9rj.68hf330j5b4b493n \

> --discovery-token-ca-cert-hash sha256:75bc31eb21f696ae412ef7451d610081f6d527ab0ae1d8c4b5e412c5cf67f6a6

[root@node2 ~]# kubeadm join 192.168.200.10:6443 --token jrf9rj.68hf330j5b4b493n \

> --discovery-token-ca-cert-hash sha256:75bc31eb21f696ae412ef7451d610081f6d527ab0ae1d8c4b5e412c5cf67f6a6

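Check that the nodes joined successfully. A node may show NotReady right after joining because the flannel component is still being pulled and started, so wait a moment; the author suggests a VPN to speed up the download.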

[root@controller ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

controller Ready control-plane 12m v1.28.2

node1 Ready <none> 20s v1.28.2

node2 Ready <none> 8s v1.28.2

[root@controller ~]#

[root@controller ~]# kubectl get pods -n kube-flannel -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-flannel-ds-27fl9 1/1 Running 0 11m 192.168.200.10 controller <none> <none>

kube-flannel-ds-bs7jx 1/1 Running 0 41s 192.168.200.30 node2 <none> <none>

kube-flannel-ds-s8t6d 1/1 Running 0 53s 192.168.200.20 node1 <none> <none>

[root@controller ~]#

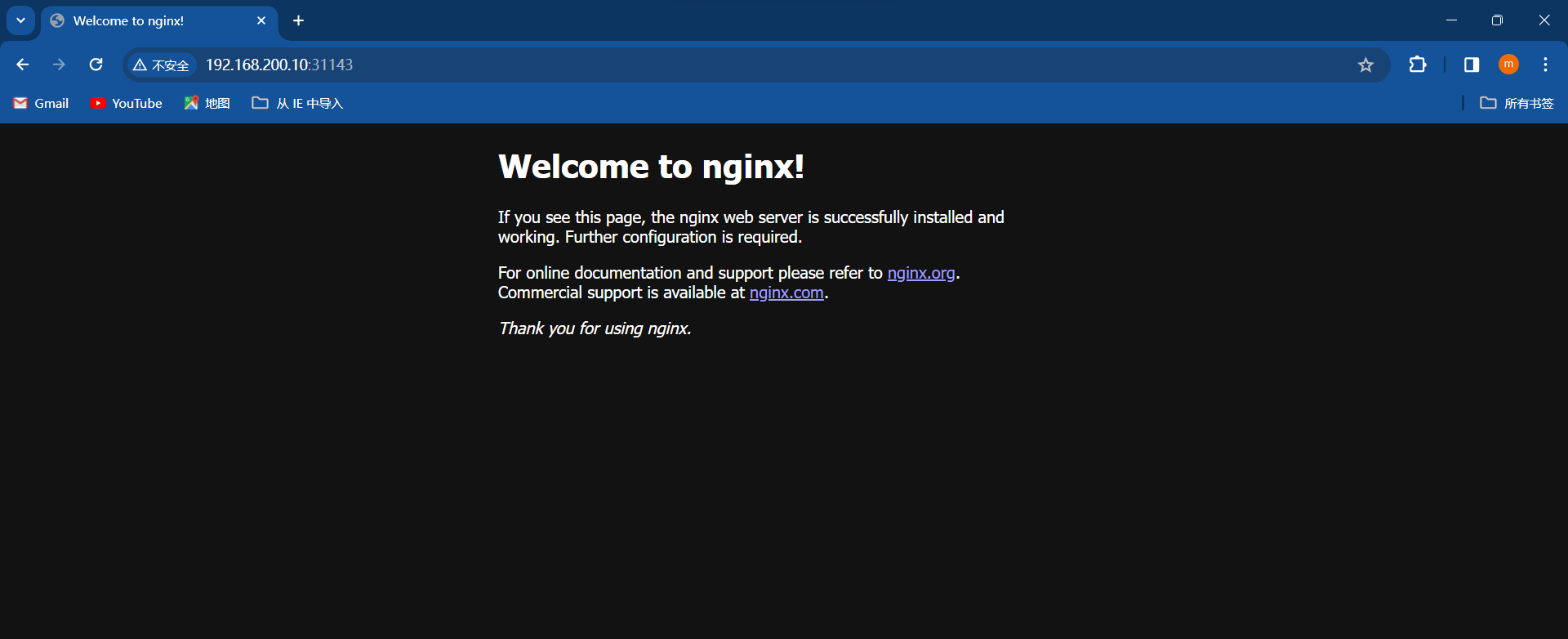

Test the Kubernetes Cluster

Create a Pod in the cluster and verify that it runs normally

[root@controller ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@controller ~]#

[root@controller ~]# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-7854ff8877-4nz6n 1/1 Running 0 86s

[root@controller ~]#

Expose the nginx port with a Service of type NodePort so that the host machine can reach the nginx page

[root@controller ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@controller ~]#

[root@controller ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25m

nginx NodePort 10.104.239.26 <none> 80:31143/TCP 10s

[root@controller ~]#

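Access the web page

In a browser on the host machine, open a node's IP address followed by the port on which the nginx Service is exposed, for example http://192.168.200.10:31143 for the Service shown above.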
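The NodePort is assigned at random from the cluster's NodePort range, so it differs between runs. The sketch below reads it out of `kubectl get svc` output rather than hard-coding it; the function name `node_port_of` is hypothetical, and it assumes the default column layout shown above with a single port per Service.

```shell
# Read "kubectl get svc" output on stdin and print the NodePort of one Service.
node_port_of() {
  local svc="$1"
  awk -v name="$svc" '
    $1 == name {
      # PORT(S) looks like "80:31143/TCP": keep the part between ":" and "/".
      split($5, a, ":"); split(a[2], b, "/"); print b[1]
    }'
}
```

A possible use is `port=$(kubectl get svc | node_port_of nginx)` followed by `curl -I "http://192.168.200.10:${port}"`. The same value is also available directly through `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'`.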
