1. Create the namespace

kubectl create ns elk
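`kubectl create ns` fails if the namespace already exists, which is awkward when the steps are re-run. A minimal sketch of an idempotent variant follows; the helper name `ensure_namespace` is an illustration, not part of the original post.

```shell
# Create the namespace only if it is missing, so the step can be re-run safely.
# ensure_namespace is a hypothetical helper name.
ensure_namespace() {
  ns="$1"
  if kubectl get namespace "$ns" >/dev/null 2>&1; then
    echo "namespace $ns already exists"
  else
    kubectl create namespace "$ns"
  fi
}
```

Calling `ensure_namespace elk` would then stand in for the plain create command above.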

2. Create the Elasticsearch Service and StatefulSet

vim es-sts.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
  namespace: elk
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Elasticsearch"
spec:
  ports:
  - port: 9200
    protocol: TCP
    targetPort: db
  selector:
    k8s-app: elasticsearch-logging
---
# RBAC authn and authz
apiVersion: v1
kind: ServiceAccount
metadata:
  name: elasticsearch-logging
  namespace: elk
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
rules:
- apiGroups:
  - ""
  resources:
  - "services"
  - "namespaces"
  - "endpoints"
  verbs:
  - "get"
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: elk
  name: elasticsearch-logging
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
- kind: ServiceAccount
  name: elasticsearch-logging
  namespace: elk
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: elasticsearch-logging
  apiGroup: ""
---
# Elasticsearch deployment itself
apiVersion: apps/v1
kind: StatefulSet # create the pod with a StatefulSet
metadata:
  name: elasticsearch-logging # StatefulSet name; its pods get ordered, numbered names (elasticsearch-logging-0, ...)
  namespace: elk # namespace
  labels:
    k8s-app: elasticsearch-logging
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    srv: srv-elasticsearch
spec:
  serviceName: elasticsearch-logging # governing Service; with a headless Service each pod is reachable by DNS as elasticsearch-logging-N.elasticsearch-logging.elk.svc.cluster.local
  replicas: 1 # replica count: single node
  selector:
    matchLabels:
      k8s-app: elasticsearch-logging # must match the labels in the pod template
  template:
    metadata:
      labels:
        k8s-app: elasticsearch-logging
        kubernetes.io/cluster-service: "true"
    spec:
      serviceAccountName: elasticsearch-logging
      containers:
      - image: docker.io/library/elasticsearch:7.9.3
        name: elasticsearch-logging
        resources:
          # need more cpu upon initialization, therefore burstable class
          limits:
            cpu: 1000m
            memory: 2Gi
          requests:
            cpu: 100m
            memory: 500Mi
        ports:
        - containerPort: 9200
          name: db
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: elasticsearch-logging
          mountPath: /usr/share/elasticsearch/data/ # mount point
        env:
        - name: "NAMESPACE"
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: "discovery.type" # run as a single-node cluster
          value: "single-node"
        - name: ES_JAVA_OPTS # JVM heap size; the original -Xmx2g equalled the 2Gi container limit, so it is lowered here; raise both together if needed
          value: "-Xms1g -Xmx1g"
      volumes:
      - name: elasticsearch-logging
        hostPath:
          path: /data/es/
      nodeSelector: # pins the pod to the node that holds the data directory; that node must carry the label es=data
        es: data
      tolerations:
      - effect: NoSchedule
        operator: Exists
      # Elasticsearch requires vm.max_map_count to be at least 262144.
      # If your OS already sets up this number to a higher value, feel free
      # to remove this init container.
      initContainers: # run before the main container starts
      - name: elasticsearch-logging-init
        image: alpine:3.6
        command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"] # raise the mmap count limit; too low a value can cause out-of-memory errors
        securityContext: # applies only to this container and does not affect volumes
          privileged: true # run as a privileged container
      - name: increase-fd-ulimit
        image: busybox
        imagePullPolicy: IfNotPresent
        command: ["sh", "-c", "ulimit -n 65536"] # raise the maximum number of file descriptors (takes effect only inside this init container's shell)
        securityContext:
          privileged: true
      - name: elasticsearch-volume-init # prepare the data directory by giving it 777 permissions
        image: alpine:3.6
        command:
        - chmod
        - -R
        - "777"
        - /usr/share/elasticsearch/data/
        volumeMounts:
        - name: elasticsearch-logging
          mountPath: /usr/share/elasticsearch/data/
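The `nodeSelector` in the manifest only matches nodes labelled `es=data`, so the pod stays Pending until some node carries that label. A minimal sketch of labelling a node and listing the matches follows; the helper names and the node name `worker-1` in the usage note are hypothetical.

```shell
# Label a node so that the StatefulSet's nodeSelector (es: data) can match it.
# label_es_node and list_es_nodes are hypothetical helper names.
label_es_node() {
  node="$1"
  kubectl label node "$node" es=data --overwrite
}

# List the nodes that currently carry the label.
list_es_nodes() {
  kubectl get nodes -l es=data -o name
}
```

For example, `label_es_node worker-1` followed by `list_es_nodes` should print that node. Because the volume is a `hostPath`, the data lives in `/data/es/` on whichever node is labelled.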

Create the StatefulSet:

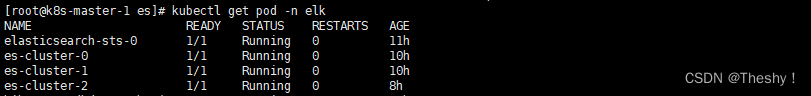

kubectl apply -f es-sts.yaml

Check whether Elasticsearch has started:

kubectl get pod -n elk

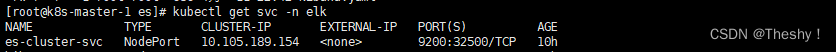
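Instead of re-running `kubectl get pod` until the pod shows Running, the rollout can be waited on. This is a sketch that assumes the StatefulSet name and namespace from the manifest above; the helper name `wait_for_es` and the default timeout are illustrative choices.

```shell
# Block until the StatefulSet's pods are ready, or give up after a timeout.
# wait_for_es is a hypothetical helper; 300s is an arbitrary default.
wait_for_es() {
  timeout="${1:-300s}"
  kubectl rollout status statefulset/elasticsearch-logging -n elk --timeout="$timeout"
}
```

`wait_for_es` returns non-zero if the pod is not ready in time, so it can gate later steps in a script.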

Check the Service:

kubectl get svc -n elk

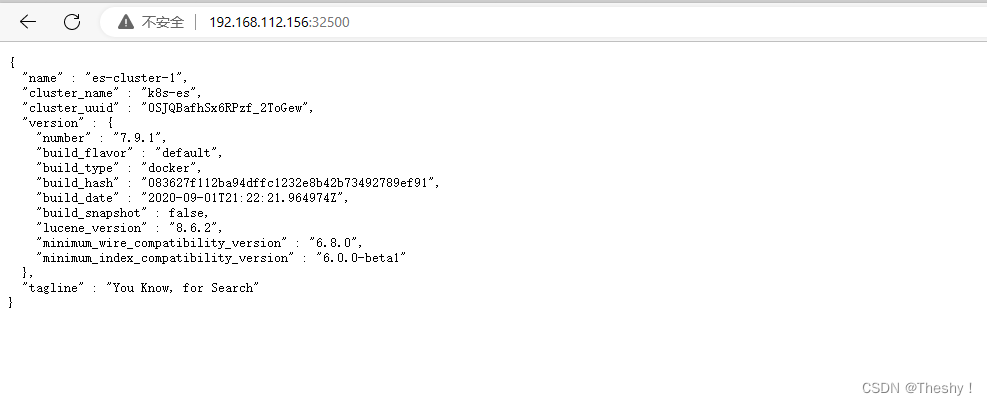

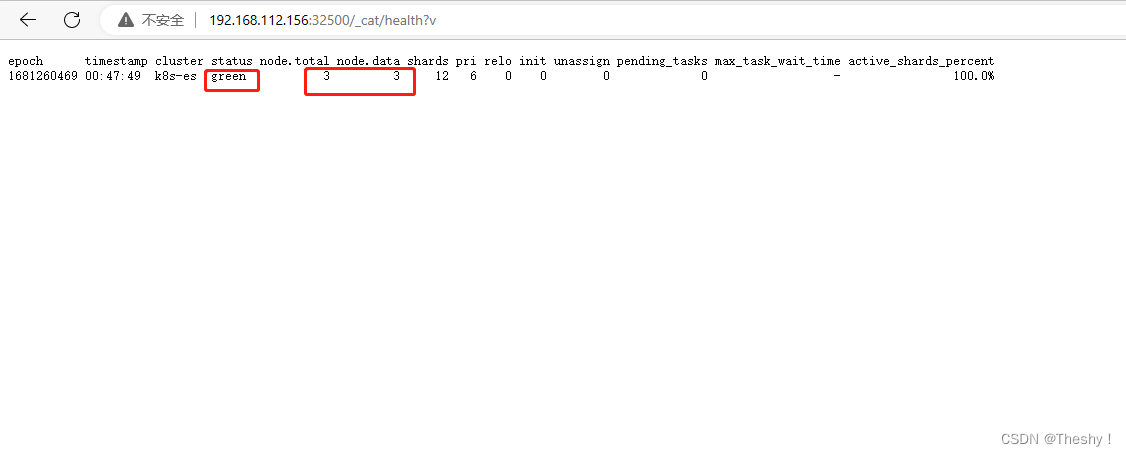
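The node port a Service exposes can also be read with a JSONPath query rather than from the table output. A small sketch, with `svc_node_port` as a hypothetical helper name:

```shell
# Print the node port of a Service's first port entry.
# Prints nothing when the Service has no node port (for example, type ClusterIP).
svc_node_port() {
  svc="$1"
  ns="$2"
  kubectl get svc "$svc" -n "$ns" -o jsonpath='{.spec.ports[0].nodePort}'
}
```

`svc_node_port elasticsearch-logging elk` would show which port, if any, is reachable on the node IP.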

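3. Check the Elasticsearch cluster

Open the node IP and port in a browser. This assumes the elasticsearch-logging Service is exposed as a NodePort on 32500; the manifest in step 2 sets no type, so as written it creates a ClusterIP Service with no node port.

192.168.112.156:32500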

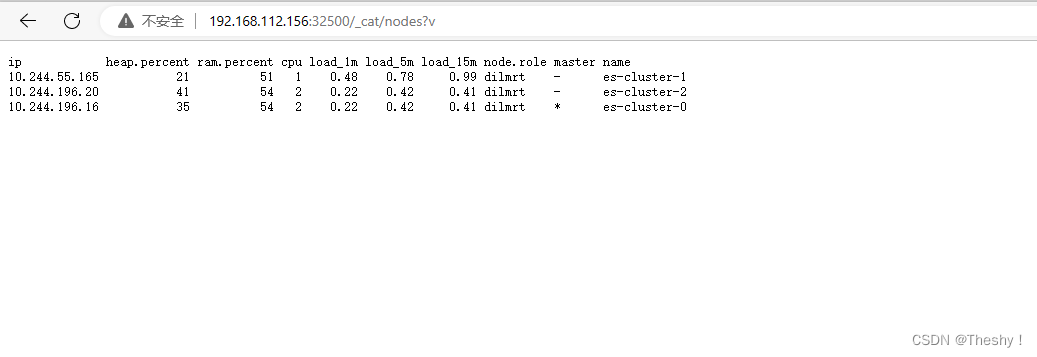

List the cluster nodes:

http://192.168.112.156:32500/_cat/nodes?v

Check the cluster health:

http://192.168.112.156:32500/_cat/health?v
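The same check can be scripted against the `_cluster/health` endpoint, which returns JSON. The sketch below only extracts the `status` field with `sed`; the helper name `es_health_status` is hypothetical, and the address in the comment is the one used in this post.

```shell
# Read a _cluster/health JSON document on stdin and print its "status" value
# (green, yellow or red).
es_health_status() {
  sed -n 's/.*"status" *: *"\([a-z]*\)".*/\1/p'
}

# Example, using the address from this post:
#   curl -s http://192.168.112.156:32500/_cluster/health | es_health_status
```

On a single-node cluster a `yellow` status is common once indices with replicas exist, since replica shards have no second node to be assigned to.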

4. Create Kibana

vim kibana.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: elk
spec:
  selector:
    matchLabels:
      app: kibana
  replicas: 1
  template:
    metadata:
      labels:
        app: kibana
    spec:
      restartPolicy: Always
      containers:
      - name: kibana
        image: kibana:7.9.1
        imagePullPolicy: Always
        ports:
        - containerPort: 5601
        env:
        - name: ELASTICSEARCH_HOSTS
          value: http://elasticsearch-logging:9200 # the Elasticsearch Service created in step 2 (the original pointed at es-cluster-svc, which is not defined here)
        - name: I18N_LOCALE # maps to the i18n.locale setting; the Kibana image expects underscores in variable names
          value: zh-CN # Chinese UI
        resources:
          requests:
            memory: 1024Mi
            cpu: 50m
          limits:
            memory: 1024Mi
            cpu: 1000m
---
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: elk
spec:
  type: NodePort
  ports:
  - name: kibana
    port: 5601
    targetPort: 5601
    nodePort: 32681
  selector:
    app: kibana

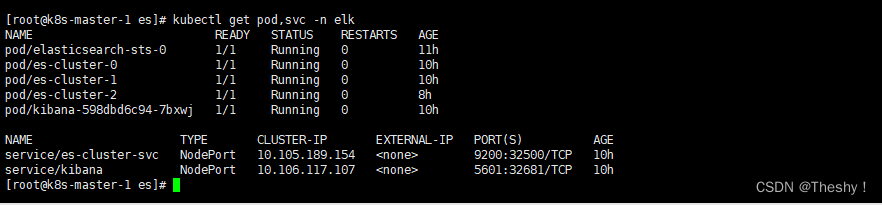

kubectl apply -f kibana.yaml

Check the pod and Service:

kubectl get pod,svc -n elk

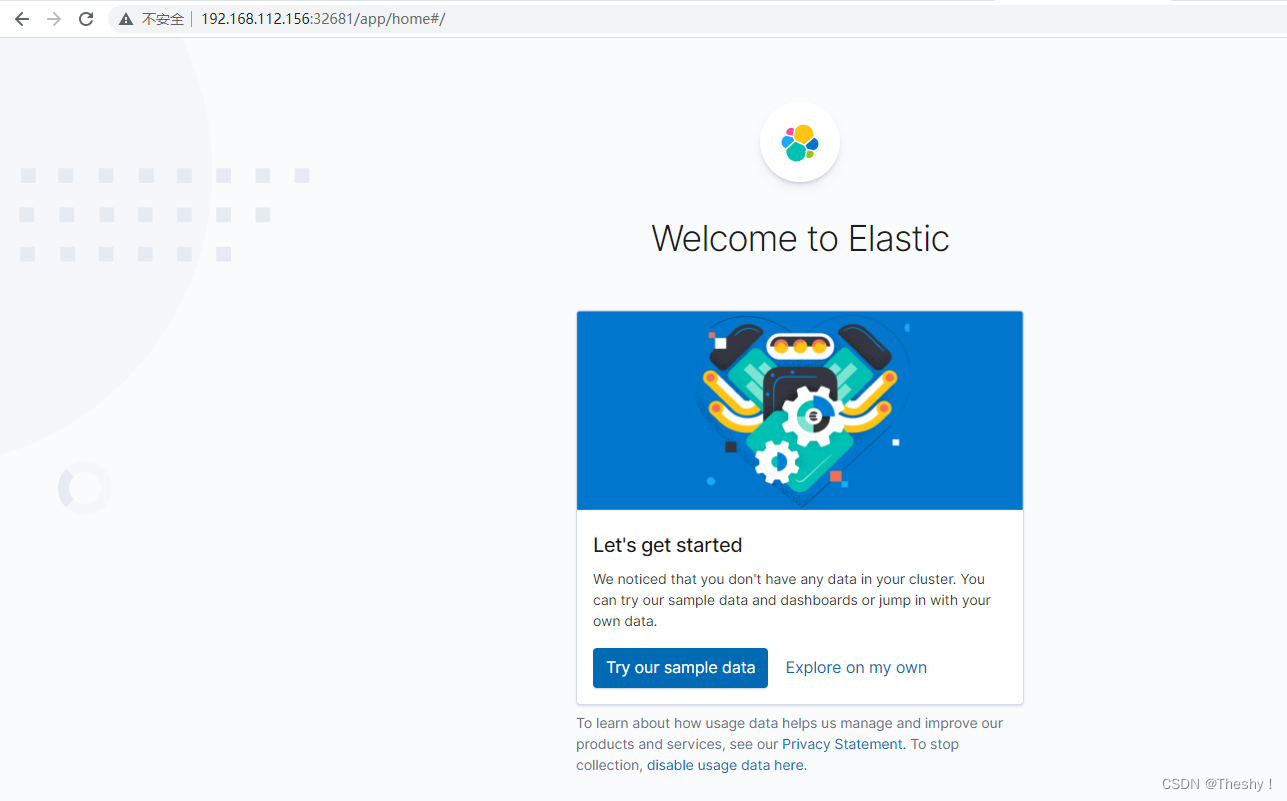

5. Access Kibana

192.168.112.156:32681
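Kibana can take a while to come up after the pod is Running, so the browser may initially get an error. The sketch below polls a URL until it answers with HTTP 200; the helper name `wait_http_ok`, the retry count and the delay are illustrative assumptions, and `/api/status` is Kibana's status endpoint.

```shell
# Poll a URL until it answers with HTTP 200 or the attempts run out.
# Arguments: URL, number of attempts (default 30), delay in seconds (default 5).
wait_http_ok() {
  url="$1"
  tries="${2:-30}"
  delay="${3:-5}"
  i=0
  while [ "$i" -lt "$tries" ]; do
    # -w prints only the status code; the response body is discarded.
    code=$(curl -s -o /dev/null -w '%{http_code}' "$url" || true)
    if [ "$code" = "200" ]; then
      echo "ready: $url"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "not ready after $tries attempts: $url" >&2
  return 1
}
```

With the address from this post, the call would be `wait_http_ok http://192.168.112.156:32681/api/status`.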
