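【The first part covers deployment and packaging; the code examples, including the Maven configuration, are at the end of the article】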

First install ZooKeeper, HDFS, YARN and Redis 【plenty of guides are available online; set these up yourself】

Install Flink:

Flink's flink-conf.yaml:

jobmanager.rpc.address: xxxxxxx

jobmanager.rpc.port: 6123

jobmanager.memory.process.size: 1600m

taskmanager.memory.process.size: 1728m

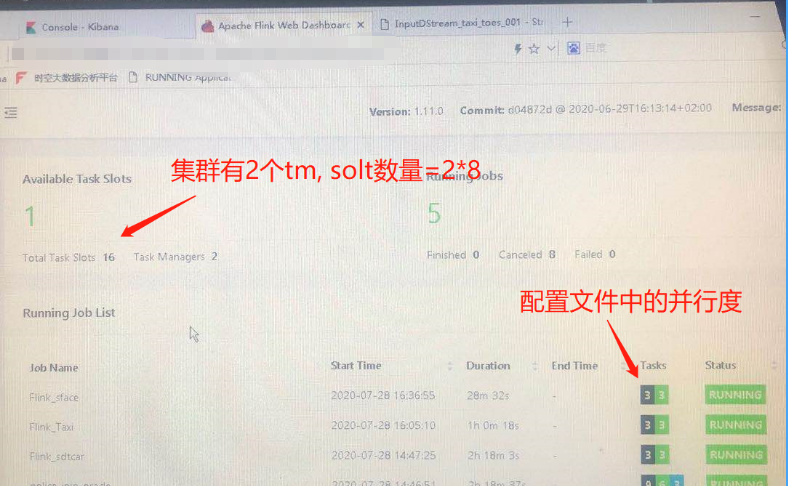

taskmanager.numberOfTaskSlots: 8 【Note: by default a YARN NodeManager offers 8 vcores (yarn.nodemanager.resource.cpu-vcores), so keep this in mind in YARN mode】

parallelism.default: 3

high-availability: zookeeper

high-availability.storageDir: hdfs://FounderHdfs/flink

high-availability.zookeeper.quorum: xxxxxx01:2181,xxxxxx02:2181,xxxxxx03:2181

high-availability.zookeeper.path.root: /flink

high-availability.cluster-id: /cluster_one

jobmanager.execution.failover-strategy: region

yarn.application-attempts: 10

Then edit:

vim conf/masters

xxxxx02:8081

xxxxx01:8081

Edit:

vim conf/workers

xxxxxx01

xxxxxx02

xxxxxx03

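YARN's yarn-site.xml

Add the following. yarn.resourcemanager.am.max-attempts is YARN's upper limit on ApplicationMaster attempts, and Flink's yarn.application-attempts cannot exceed it: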

<property>

<name>yarn.resourcemanager.am.max-attempts</name>

<value>4</value>

</property>

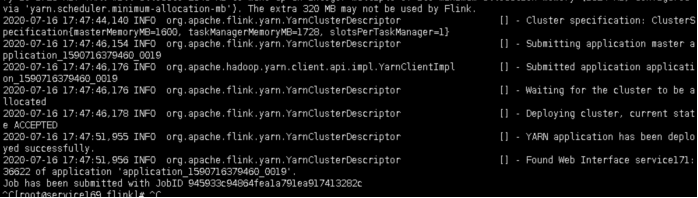
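Below is a quick arithmetic check of the settings above. It is a plain-Java sketch with a hypothetical class name (YarnSizingCheck), not part of the original project.

It makes these assumptions:
- Flink uses its default of one vcore per slot for each TaskManager container (yarn.containers.vcores).
- Each YARN NodeManager has its default of 8 vcores. Whether vcores are actually enforced depends on the scheduler's resource calculator.

It also encodes YARN's rule for attempts. An application's requested attempt count, here Flink's yarn.application-attempts, falls back to yarn.resourcemanager.am.max-attempts when it is above that limit. So with 10 and 4, the effective value is 4.

```java
// Hypothetical helper (not part of the original project): arithmetic behind the
// flink-conf.yaml and yarn-site.xml values used in this article.
public class YarnSizingCheck {

    // YARN uses the application's requested attempts unless it is <= 0 or above
    // yarn.resourcemanager.am.max-attempts, in which case the global limit applies.
    static int effectiveAttempts(int requestedAttempts, int yarnMaxAttempts) {
        if (requestedAttempts <= 0 || requestedAttempts > yarnMaxAttempts) {
            return yarnMaxAttempts;
        }
        return requestedAttempts;
    }

    // TaskManagers needed so that every parallel subtask gets a slot (ceiling division).
    static int taskManagersNeeded(int parallelism, int slotsPerTaskManager) {
        return (parallelism + slotsPerTaskManager - 1) / slotsPerTaskManager;
    }

    // Assumes one vcore per slot per TaskManager container (Flink's default for yarn.containers.vcores).
    static int taskManagersPerNodeManager(int nodeManagerVcores, int slotsPerTaskManager) {
        return nodeManagerVcores / slotsPerTaskManager;
    }

    public static void main(String[] args) {
        System.out.println("effective attempts: " + effectiveAttempts(10, 4));
        System.out.println("TaskManagers for parallelism 3: " + taskManagersNeeded(3, 8));
        System.out.println("TaskManagers per NodeManager: " + taskManagersPerNodeManager(8, 8));
    }
}
```

With parallelism.default: 3 and 8 slots, a single TaskManager is enough. At 8 slots per TaskManager, a default NodeManager fits only one such container if vcores are enforced.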

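There are generally two ways to start jobs:

Option 1:

flink/bin/yarn-session.sh -n 3 -jm 1024 -tm 1024

(-jm and -tm are the JobManager and TaskManager memory in MB. Since Flink 1.5, TaskManagers are allocated on demand, so -n is deprecated and may be rejected by newer versions.)

Simply put, this first sets up a long-running session (a block of YARN resources), and all Flink jobs then run inside that session.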

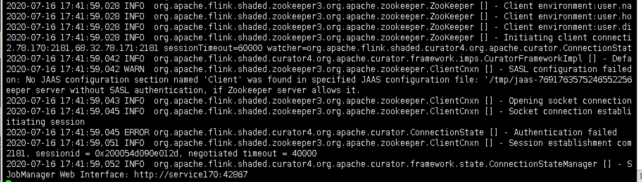

Example of a successful run:

-------------------------------------------------------------------------------------------

【Code】

The code is at the bottom of the article, and so is the full Maven pom.

------------------------------------------------------------------------------------------------------------------------------------------

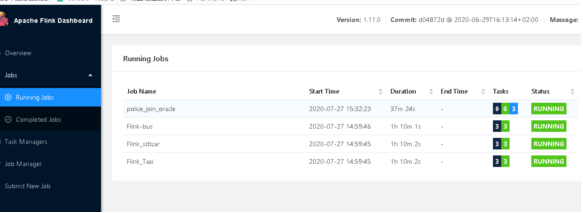

【Deploying multiple jobs】

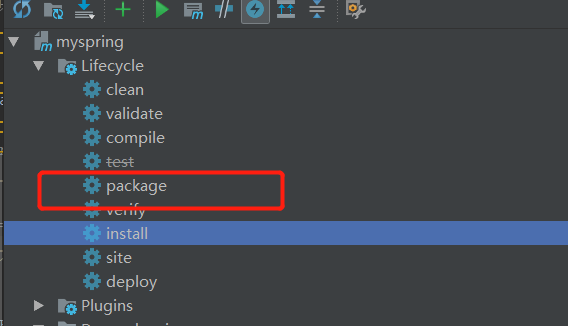

- Notes on packaging and submitting the code:

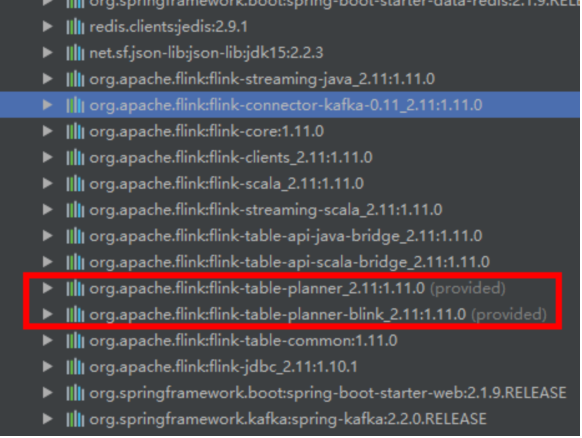

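For SQL queries, the jars Flink loads locally (in your IDE, e.g. IntelliJ IDEA) differ from those loaded on the cluster. Following the official documentation, running locally requires these two packages: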

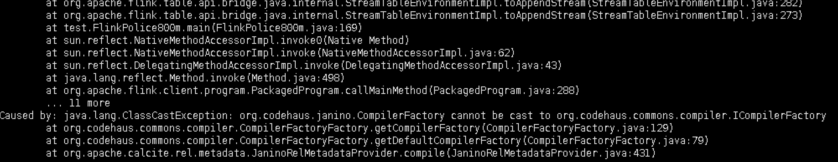

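However, when packaging for the remote Flink cluster, these two packages must be left out of the jar, otherwise the job fails with an error. The Flink distribution's lib/ directory already contains the table planner. For that reason, the pom at the end marks flink-table-planner_2.11 and flink-table-common as <scope>provided</scope>.

Adjust the pom accordingly, then package: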

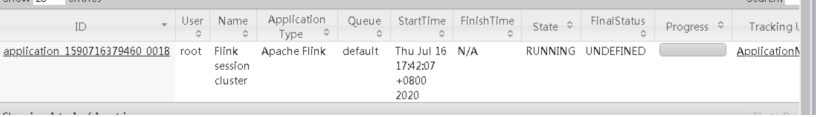

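- First, look up the YARN application ID of the Flink session, for example with the command yarn application -list or in the YARN ResourceManager web UI:

- When submitting the job, pass that ID with -yid: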

/home/flink/bin/flink run -yid application_1595236736519_0039 \

-yD env.java.opts="-Djava.security.auth.login.config=/home/flink/kafka_client_jaas.conf" \

--class test.FlinkPolice800m \

myspring-0.0.1-SNAPSHOT-jar-with-dependencies.jar

Example of a successful run:

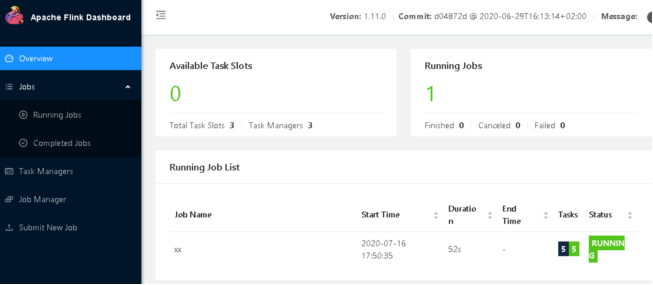
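The application ID passed to -yid can be read from the output of yarn application -list. Below is a small sketch for scripting that step. It assumes the session keeps Flink's default YARN application type, "Apache Flink". The class name and the sample output are made up for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: extracts Flink application IDs from the text printed by `yarn application -list`.
public class YarnAppIdFinder {

    // YARN application IDs look like application_<clusterTimestamp>_<sequenceNumber>.
    private static final Pattern APP_ID = Pattern.compile("application_\\d+_\\d+");

    static List<String> flinkAppIds(String yarnListOutput) {
        List<String> ids = new ArrayList<>();
        for (String line : yarnListOutput.split("\\R")) {
            // Assumption: the session uses the default application type "Apache Flink".
            if (!line.contains("Apache Flink")) {
                continue;
            }
            Matcher m = APP_ID.matcher(line);
            if (m.find()) {
                ids.add(m.group());
            }
        }
        return ids;
    }

    public static void main(String[] args) {
        // Made-up sample in the tab-separated layout of `yarn application -list`.
        String sample = "Application-Id\tApplication-Name\tApplication-Type\n"
                + "application_1595236736519_0039\tFlink session cluster\tApache Flink\n"
                + "application_1595236736519_0040\tsome-spark-job\tSPARK\n";
        System.out.println(flinkAppIds(sample)); // prints [application_1595236736519_0039]
    }
}
```

If several Flink sessions are running, the list contains more than one ID. Pick the right one by its application name before using it with -yid.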

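Option 2:

Request YARN resources directly when the job is submitted (per-job mode) 【not recommended here: each submission brings up its own cluster for just that one job, so it is of little use in this scenario】: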

./bin/flink run -m yarn-cluster \

-yD env.java.opts="-Djava.security.auth.login.config=/home/flink/kafka_client_jaas.conf" \

--class test.FlinkTest \

myspring-0.0.1-SNAPSHOT-jar-with-dependencies.jar

YARN resources are requested only when the job is run.

Example of a successful run:

【Code implementation】:

Main program:

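The program sets java.security.auth.login.config to an absolute Linux path, /home/flink/mytest/kafka_client_jaas.conf, but strangely this does not take effect! There is also an unresolved issue described at the end; pointers from anyone experienced are welcome.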
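Before the main program itself, here is one likely explanation for the static block having no effect. It is an assumption, not something verified here:
- main() and the static block run in the client JVM that executes flink run, while the Kafka consumer runs in the TaskManager JVMs.
- On a TaskManager, FlinkPolice800m is initialized only lazily, for example when the MapFunction first calls its static removeZero(). By then the Kafka consumer has already been set up.

The plain-Java demo below shows the underlying rule: loading a nested class does not run the enclosing class's static block. It uses hypothetical class names (StaticInitDemo, Job, Mapper) and needs no Flink or Kafka.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical demo: a class's static block runs when that class is first initialized
// (e.g. one of its static methods is called), not when a nested class inside it is loaded.
public class StaticInitDemo {

    static final List<String> EVENTS = new ArrayList<>();

    // Stands in for FlinkPolice800m and its System.setProperty(...) static block.
    static class Job {
        static {
            EVENTS.add("Job static block ran");
        }

        static String removeZero(String s) {
            return s.replaceFirst("^0*", "");
        }

        // Stands in for a MapFunction declared inside the job class and shipped to a TaskManager.
        static class Mapper {
            String map(String s) {
                return removeZero(s); // first use of a static method of Job initializes Job
            }
        }
    }

    public static void main(String[] args) {
        Job.Mapper mapper = new Job.Mapper();   // does not initialize Job
        EVENTS.add("Mapper created");
        String mapped = mapper.map("0001234");  // Job's static block runs here
        EVENTS.add("mapped: " + mapped);
        System.out.println(EVENTS); // [Mapper created, Job static block ran, mapped: 1234]
    }
}
```

This is also consistent with the sasl.jaas.config workaround used in the code: that value is part of the consumer's Properties, so it is serialized together with the consumer and reaches every TaskManager.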

public class FlinkPolice800m {

static {

// System.setProperty("java.security.auth.login.config",

// "C:\\Users\\Administrator\\Desktop\\machen\\myspring001\\kafka_client_jaas.conf");

/**

Setting the property like this may have no effect, even with an absolute path

*/

System.setProperty("java.security.auth.login.config",

"/home/flink/mytest/kafka_client_jaas.conf");

}

public static void main(String[] args) throws SQLException {

//tableEnv

StreamExecutionEnvironment streamExecutionEnv = StreamExecutionEnvironment.getExecutionEnvironment();

StreamTableEnvironment tableEnv = StreamTableEnvironment.create(streamExecutionEnv,TableConfig.getDefault());

//

//oracle data

TypeInformation[] fieldTypes = new TypeInformation[]{

BasicTypeInfo.BIG_DEC_TYPE_INFO,

BasicTypeInfo.STRING_TYPE_INFO

};

RowTypeInfo rowTypeInfo = new RowTypeInfo(fieldTypes);

JDBCInputFormat jdbcInputFormat = JDBCInputFormat.buildJDBCInputFormat()

.setDrivername("oracle.jdbc.OracleDriver")

.setDBUrl("jdbc:oracle:thin:@68.32.78.153:1521/skjck")

.setUsername("U_SKPT_GA")

.setPassword("U_SKPT_GA")

.setQuery("select termid,plateno from u_skpt_ga.g_vehicle_all")

.setRowTypeInfo(rowTypeInfo)

.finish();

DataStreamSource<Row> dataStreamSource = streamExecutionEnv.createInput(jdbcInputFormat);

DataStream<Row> newRowData = dataStreamSource.map(new MapFunction<Row,Row>() {

@Override

public Row map(Row row) throws Exception {

Row row1 = new Row(2);

String id = Integer.valueOf(row.getField(0).toString()).toString();

String name = row.getField(1).toString();

row1.setField(0,id);

row1.setField(1,name);

// System.out.println("oracle data: "+row1.toString());

return row1;

}

}).returns(new RowTypeInfo(Types.STRING(),Types.STRING()));

tableEnv.registerDataStream("orcTable",newRowData,"carid,carname");

// Redis configuration

FlinkJedisClusterConfig clusterConfig = FlinkClusterConfig.clusterConfig();

// Checkpoint configuration (Kafka offsets are committed on checkpoints)

streamExecutionEnv.setStreamTimeCharacteristic(TimeCharacteristic.ProcessingTime);

streamExecutionEnv.enableCheckpointing(60000);

streamExecutionEnv.getCheckpointConfig().setCheckpointingMode(CheckpointingMode.EXACTLY_ONCE);

// Kafka

String topicName = "pgis_dw_data";

Properties props = KafkaProperty.consumerProps("flink_police_800m_groupid_01");

/***

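* Because pointing to the Kafka SASL JAAS file from the command line did not take effect on the Linux server,

* the credentials are set in code here instead, which is not very convenient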

*/

props.put(CommonClientConfigs.SECURITY_PROTOCOL_CONFIG, "SASL_PLAINTEXT");

props.put(SaslConfigs.SASL_MECHANISM, "PLAIN");

props.put("sasl.jaas.config",

"org.apache.kafka.common.security.plain.PlainLoginModule required username=\"machen\" password=\"machen\";");

FlinkKafkaConsumer010<String> consumer =

new FlinkKafkaConsumer010<>(

topicName,

new SimpleStringSchema(),

props);

consumer.setCommitOffsetsOnCheckpoints(true);

consumer.setStartFromGroupOffsets();

DataStream<String> stream = streamExecutionEnv.addSource(consumer);

DataStream<String> filterStream = stream.filter(new FilterFunction<String>() {

@Override

public boolean filter(String s) throws Exception {

String[] values = s.split("\\|");

if((values.length >=15)

&& !values[1].equals("") && !values[2].equals("")

&& values[8].length()==19 && values[9].length()==19){

return true;

}

return false;

}

});

DataStream<Row> kafkaStream = filterStream.map(new MapFunction<String, Row>() {

@Override

public Row map(String s) throws Exception {

Row row = new Row(2);

String[] array = s.split("\\|");

float lon = 0f;

float lat = lon;

try {

lon = Math.abs(Float.valueOf(array[1])/1000000);

lat = Math.abs(Float.valueOf(array[2])/1000000);

} catch (NumberFormatException e) {

e.printStackTrace();

}

if(lon<90 && lat >90){

float temp = 0f;

temp = lat;

lat = lon;

lon = temp;

}

if(lon <-180 ||lon>180){

lon= 0f;

}

if(lat<-90 || lat >90){

lat = 0f;

}

String location = lat+","+lon;

String collecttime = DateFunction.compareDate(array[8],array[9])? array[8] : array[9];

// collecttime = DateFunction.compareNowTime(collecttime);

String redisValue = collecttime+"|"+location;

String idcardnoid = removeZero(array[0]);

row.setField(0,idcardnoid);

row.setField(1,redisValue);

return row;

}

}).returns(new RowTypeInfo(Types.STRING(),Types.STRING()));

tableEnv.registerDataStream("kafkaTable",kafkaStream,"carid,redisValue");

//--------------- for testing only ---------------------

// DataStream<Row> result = tableEnv.toAppendStream(kafkaTable,Row.class);

// result.print();

//---------------------------------------

String joinSQL = "select o.carname,k.redisValue from kafkaTable as k join orcTable as o on o.carid=k.carid ";

Table joinTable = tableEnv.sqlQuery(joinSQL);

DataStream<Row> result = tableEnv.toAppendStream(joinTable,Row.class);

result.flatMap(new FlatMapFunction<Row, Tuple2<String,String>>() {

@Override

public void flatMap(Row row, Collector<Tuple2<String,String>> collector) throws Exception {

System.out.println("join data: "+row.toString());

collector.collect(new Tuple2<String,String>(row.getField(0).toString(),row.getField(1).toString()));

}

}).addSink(new RedisSink<Tuple2<String, String>>(clusterConfig,new RedisExampleMapper()))

.name("spolice");

try {

streamExecutionEnv.execute("police_join_oracle");

} catch (Exception e) {

e.printStackTrace();

}

}

public static String removeZero(String ss){

ss = ss.replaceFirst("^0*","");

return ss;

}

}
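The filtering and coordinate cleaning above are buried inside anonymous Flink functions. Below is a sketch that copies the same rules into a plain, Flink-free class (hypothetical name PgisRecordParser), so they can be unit-tested locally. The field layout (lon in field 1, lat in field 2, timestamps in fields 8 and 9) is taken from the code above. The sample record is made up.

```java
// Hypothetical, Flink-free copy of the parsing rules used in FlinkPolice800m's filter and map,
// so they can be unit-tested without a cluster.
public class PgisRecordParser {

    // Same checks as the FilterFunction: >= 15 fields, non-empty lon/lat, 19-char timestamps.
    static boolean isValid(String line) {
        String[] v = line.split("\\|");
        return v.length >= 15
                && !v[1].isEmpty() && !v[2].isEmpty()
                && v[8].length() == 19 && v[9].length() == 19;
    }

    // Same coordinate cleaning as the MapFunction: scale by 1e6, swap reversed pairs,
    // and replace out-of-range values with 0. Returns "lat,lon".
    static String location(String lonField, String latField) {
        float lon = 0f;
        float lat = 0f;
        try {
            lon = Math.abs(Float.parseFloat(lonField) / 1000000);
            lat = Math.abs(Float.parseFloat(latField) / 1000000);
        } catch (NumberFormatException e) {
            // Keep whatever was parsed so far (0 otherwise), as the original code does.
        }
        if (lon < 90 && lat > 90) { // dirty records with longitude and latitude swapped
            float temp = lat;
            lat = lon;
            lon = temp;
        }
        if (lon < -180 || lon > 180) {
            lon = 0f;
        }
        if (lat < -90 || lat > 90) {
            lat = 0f;
        }
        return lat + "," + lon;
    }

    // Strips leading zeros, e.g. 000001234 -> 1234.
    static String removeZero(String s) {
        return s.replaceFirst("^0*", "");
    }

    public static void main(String[] args) {
        String line = "0001234|116000000|40000000|a|b|c|d|e|2020-07-20 10:00:00|2020-07-20 10:00:05|k|l|m|n|o";
        if (isValid(line)) {
            String[] f = line.split("\\|");
            System.out.println(removeZero(f[0]) + " -> " + location(f[1], f[2])); // 1234 -> 40.0,116.0
        }
    }
}
```

Because of Math.abs, the negative-range checks can never trigger; they are kept only to mirror the original logic.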

Redis cluster configuration:

public class FlinkClusterConfig {

public static FlinkJedisClusterConfig clusterConfig(){

Set<InetSocketAddress> nodes = new HashSet<>();

nodes.add(new InetSocketAddress("xxxxx",8001));

nodes.add(new InetSocketAddress("xxxxxx",8002));

nodes.add(new InetSocketAddress("xxxxxx",8003));

nodes.add(new InetSocketAddress("xxxxxx",8004));

nodes.add(new InetSocketAddress("xxxxxx",8005));

nodes.add(new InetSocketAddress("xxxxxx",8006));

FlinkJedisClusterConfig clusterConfig = new FlinkJedisClusterConfig.Builder()

.setNodes(nodes).setTimeout(2000)

.setMaxRedirections(2).setMaxIdle(8)

.setMaxTotal(8).setMinIdle(0)

.build();

return clusterConfig;

}

}

Kafka JAAS authentication file: kafka_client_jaas.conf

KafkaClient {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="machen"

password="machen";

};

Some default Kafka settings used to define the consumer:

public class KafkaProperty {

public static Properties consumerProps(String groupid){

Properties props = new Properties();

props.setProperty("bootstrap.servers", "kafka01:9092,kafka02:9092,kafka03:9092,kafka04:9092");

props.setProperty("group.id", groupid);

props.put("enable.auto.commit", "true");

props.put("auto.commit.interval.ms", "100000");

props.put("session.timeout.ms", "30000");

props.put("auto.offset.reset","latest");//latest earliest

props.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

props.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");

// Kafka authentication:

props.put("security.protocol","SASL_PLAINTEXT");

props.put("sasl.mechanism","PLAIN");

return props;

}

}

Transformation class for the Kafka data 【adapt it to your own business logic】:

public class Pollice800mDataFunction implements FlatMapFunction<String,Tuple2<String,String>> {

@Override

public void flatMap(String s, Collector<Tuple2<String, String>> collector) throws Exception {

// System.out.println("incoming data: "+ s);

if(null!=s){

String[] array = s.split("\\|");

// initial coordinates

float lon = 0f;

float lat = lon;

try {

lon = Math.abs(Float.valueOf(array[1])/1000000);

lat = Math.abs(Float.valueOf(array[2])/1000000);

} catch (NumberFormatException e) {

e.printStackTrace();

}

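// The data is expected to lie within China, so coordinates have a known valid range; some dirty records have longitude and latitude swapped, so swap them back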

if(lon<90 && lat >90){

float temp = 0f;

temp = lat;

lat = lon;

lon = temp;

}

if(lon <-180 ||lon>180){

lon= 0f;

}

if(lat<-90 || lat >90){

lat = 0f;

}

String location = lat+","+lon;

String collecttime = DateFunction.compareDate(array[8],array[9])? array[8] : array[9];

String idcardno = removeZero(array[0]);

try {

idcardno = RedisService.getRedisValue(idcardno);

} catch (Exception e) {

e.printStackTrace();

}

String redisValue = collecttime+"|"+location;

System.out.println(idcardno+" "+redisValue);

collector.collect(new Tuple2<>(idcardno,redisValue));

}

}

// Strip leading zeros, e.g. 000001234 becomes 1234

public static String removeZero(String ss){

ss = ss.replaceFirst("^0*","");

return ss;

}

}

Date/time helper class:

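import java.time.LocalDateTime;

import java.time.format.DateTimeFormatter;

public class DateFunction {

// DateTimeFormatter is immutable and thread-safe, so a single shared instance is enough

private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

// Returns true if date1 is strictly earlier than date2 (both "yyyy-MM-dd HH:mm:ss").

// LocalDateTime (not LocalDate) is needed so that the time of day is compared as well

public static boolean compareDate(String date1,String date2){

try {

LocalDateTime d1 = LocalDateTime.parse(date1, FORMAT);

LocalDateTime d2 = LocalDateTime.parse(date2, FORMAT);

return d1.isBefore(d2);

} catch (Exception e) {

e.printStackTrace();

}

return false;

}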

}

Redis mapper class:

public class RedisExampleMapper implements RedisMapper<Tuple2<String,String>> {

@Override

public RedisCommandDescription getCommandDescription() {

System.out.println("save data...");

return new RedisCommandDescription(RedisCommand.SET, null);

}

@Override

public String getKeyFromData(Tuple2<String, String> data) {

// System.out.println(data.f0);

return data.f0;

}

@Override

public String getValueFromData(Tuple2<String, String> data) {

// System.out.println(data.f1.toString());

return data.f1.toString();

}

}

【Open issue】: if anyone has already solved this, please let me know in the comments.

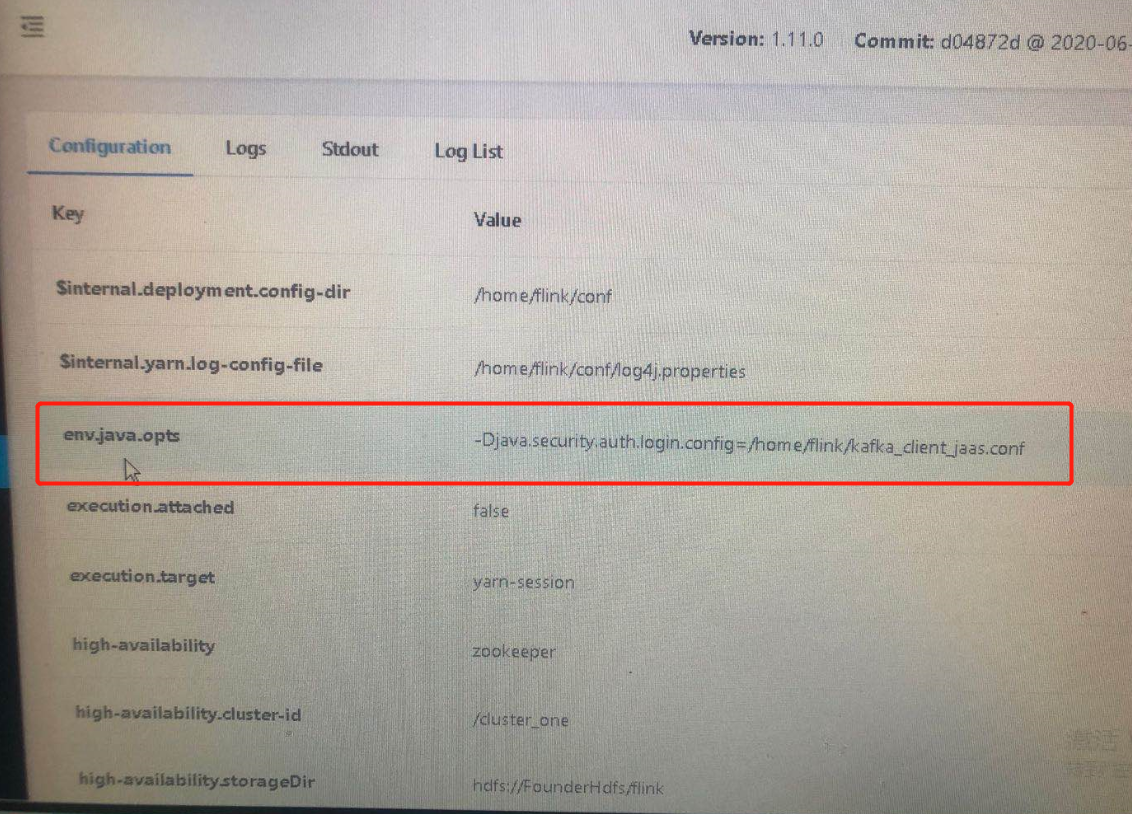

The command option

-yD env.java.opts="-Djava.security.auth.login.config=/home/flink/kafka_client_jaas.conf" \

does not take effect in yarn-session mode, but it does in yarn-cluster mode.

In other words, with the following command the Flink web UI shows an env.java.opts entry:

./bin/flink run -m yarn-cluster \

-yD env.java.opts="-Djava.security.auth.login.config=/home/flink/kafka_client_jaas.conf" \

--class test.FlinkTest \

myspring-0.0.1-SNAPSHOT-jar-with-dependencies.jar

But with yarn-session, the Flink web UI has no env.java.opts entry:

/home/flink/bin/flink run -yid application_1595236736519_0039 \

-yD env.java.opts="-Djava.security.auth.login.config=/home/flink/kafka_client_jaas.conf" \

--class test.FlinkPolice800m \

myspring-0.0.1-SNAPSHOT-jar-with-dependencies.jar

Even passing -D env.java.opts="-Djava.security.auth.login.config=/home/flink/kafka_client_jaas.conf" instead does not take effect.

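So I put the JAAS option into flink-conf.yaml instead. That works well enough, and the Flink web UI then shows the env.java.opts entry:

env.java.opts: -Djava.security.auth.login.config=/home/flink/kafka_client_jaas.conf

The credentials can also be supplied in code, but loading them from a file is better suited to production.

A likely explanation (unverified): in session mode the JobManager is already running, and TaskManagers are launched with the session's own configuration. JVM options therefore only apply if they are set when the session starts, either in flink-conf.yaml or with -D on yarn-session.sh. yarn-cluster mode, by contrast, deploys fresh JVMs for every job. The JAAS file must also exist at that path on every node that runs a TaskManager.

If anyone has solved this properly, please leave a comment.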
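If the in-code route is kept, one option is to stop hardcoding the credentials. Below is a sketch under stated assumptions:
- The helper class KafkaSaslProps and its method names are hypothetical.
- Credentials arrive at runtime, e.g. as program arguments after the jar name in flink run.
- The values contain no double quotes.

It produces the same sasl.jaas.config string as FlinkPolice800m. Because that string lives in the consumer's Properties, it travels with the serialized consumer to the TaskManagers, independently of any JVM options.

```java
import java.util.Properties;

// Hypothetical helper (not from the original project): adds SASL/PLAIN settings to a copy
// of the consumer properties, with credentials supplied at runtime instead of hardcoded.
public class KafkaSaslProps {

    // Same value format as the inline sasl.jaas.config in FlinkPolice800m.
    // Assumes username and password contain no double quotes.
    static String plainJaasConfig(String username, String password) {
        return "org.apache.kafka.common.security.plain.PlainLoginModule required"
                + " username=\"" + username + "\""
                + " password=\"" + password + "\";";
    }

    static Properties withSasl(Properties base, String username, String password) {
        Properties props = new Properties();
        props.putAll(base); // leave the caller's Properties untouched
        props.setProperty("security.protocol", "SASL_PLAINTEXT");
        props.setProperty("sasl.mechanism", "PLAIN");
        props.setProperty("sasl.jaas.config", plainJaasConfig(username, password));
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical: credentials as the first two program arguments, placeholders otherwise.
        String user = args.length > 1 ? args[0] : "user";
        String password = args.length > 1 ? args[1] : "secret";
        Properties base = new Properties();
        base.setProperty("bootstrap.servers", "kafka01:9092");
        System.out.println(withSasl(base, user, password).getProperty("sasl.jaas.config"));
    }
}
```

Program arguments may show up in process listings, so this is a trade-off rather than a full replacement for a JAAS file on each node.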

Maven file (pom.xml):

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0</modelVersion>

<parent>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-parent</artifactId>

<version>2.1.9.RELEASE</version>

<relativePath/> <!-- lookup parent from repository -->

</parent>

<groupId>com.example</groupId>

<artifactId>myspring</artifactId>

<version>0.0.1-SNAPSHOT</version>

<name>myspring</name>

<description>Demo project for Spring Boot</description>

<properties>

<java.version>1.8</java.version>

<flink.version>1.11.0</flink.version>

<flink.scala.version>2.11</flink.scala.version>

<spring-kafka.version>2.2.0.RELEASE</spring-kafka.version>

</properties>

<dependencies>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-redis_2.10</artifactId>

<version>1.1.5</version>

<exclusions>

<exclusion>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-data-redis</artifactId>

<exclusions>

<exclusion>

<groupId>ch.qos.logback</groupId>

<artifactId>logback-classic</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>2.9.1</version>

</dependency>

<dependency>

<groupId>net.sf.json-lib</groupId>

<artifactId>json-lib</artifactId>

<version>2.2.3</version>

<classifier>jdk15</classifier>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-java</artifactId>

<version>${flink.version}</version>

<!--<scope>provided</scope>-->

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-java_2.11</artifactId>

<version>${flink.version}</version>

<!--<scope>provided</scope>-->

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-kafka-0.11_2.11</artifactId>

<version>${flink.version}</version>

<!--<scope>provided</scope>-->

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-core</artifactId>

<version>${flink.version}</version>

<!--<scope>provided</scope>-->

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-clients_${flink.scala.version}</artifactId>

<version>${flink.version}</version>

<!--<scope>provided</scope>-->

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-scala_${flink.scala.version}</artifactId>

<version>${flink.version}</version>

<!--<scope>provided</scope>-->

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-streaming-scala_${flink.scala.version}</artifactId>

<version>${flink.version}</version>

<!--<scope>provided</scope>-->

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-java-bridge_2.11</artifactId>

<version>1.11.0</version>

<!--<scope>provided</scope>-->

</dependency>

<!-- or... -->

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-api-scala-bridge_2.11</artifactId>

<version>1.11.0</version>

<!--<scope>provided</scope>-->

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-planner_2.11</artifactId>

<version>1.11.0</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-table-common</artifactId>

<version>1.11.0</version>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-jdbc_2.11</artifactId>

<version>1.10.1</version> <!-- note: from Flink 1.11 on, this module is published as flink-connector-jdbc -->

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-web</artifactId>

<!--<exclusions>-->

<!--<exclusion>-->

<!--<groupId>ch.qos.logback</groupId>-->

<!--<artifactId>logback-classic</artifactId>-->

<!--</exclusion>-->

<!--</exclusions>-->

</dependency>

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-tomcat</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter</artifactId>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-devtools</artifactId>

<scope>runtime</scope>

<optional>true</optional>

</dependency>

<!--<dependency>-->

<!--<groupId>org.springframework.boot</groupId>-->

<!--<artifactId>spring-boot-configuration-processor</artifactId>-->

<!--<optional>true</optional>-->

<!--</dependency>-->

<dependency>

<groupId>org.projectlombok</groupId>

<artifactId>lombok</artifactId>

<optional>true</optional>

</dependency>

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-test</artifactId>

<scope>test</scope>

<exclusions>

<exclusion>

<groupId>org.junit.vintage</groupId>

<artifactId>junit-vintage-engine</artifactId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka-test</artifactId>

<scope>test</scope>

</dependency>

<!-- for Spring tests -->

<dependency>

<groupId>org.junit.jupiter</groupId>

<artifactId>junit-jupiter-api</artifactId>

<version>5.3.1</version>

<scope>test</scope>

</dependency>

</dependencies>

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-surefire-plugin</artifactId>

<version>2.8.1</version>

<configuration>

<includes>

<include>**/*.java</include>

<include>**/*.scala</include>

</includes>

</configuration>

</plugin>

<!--<plugin>-->

<!--<groupId>org.springframework.boot</groupId>-->

<!--<artifactId>spring-boot-maven-plugin</artifactId>-->

<!--</plugin>-->

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-dependency-plugin</artifactId>

<executions>

<execution>

<id>copy</id>

<phase>package</phase>

<goals>

<goal>copy-dependencies</goal>

</goals>

<configuration>

<outputDirectory>${project.build.directory}/lib</outputDirectory>

</configuration>

</execution>

</executions>

</plugin>

<plugin>

<artifactId>maven-assembly-plugin</artifactId>

<version>2.6</version>

<configuration>

<descriptorRefs>

<descriptorRef>jar-with-dependencies</descriptorRef>

</descriptorRefs>

</configuration>

<executions>

<execution>

<id>make-assembly</id>

<phase>package</phase>

<goals>

<goal>single</goal>

</goals>

</execution>

</executions>

</plugin>

</plugins>

</build>

</project>
