Hive Study Notes

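Hive needs to be installed on only one machine. It can be accessed through the web UI, the console, or the Thrift interface (including JDBC and ODBC). It is suited only to offline (batch) data analysis, and it lowers the cost of analysis because you do not have to write MapReduce programs.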

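Hive advantages

1. Simple and easy to learn: HQL is SQL-like.

2. Computation and scalability for large data sets: MapReduce is the compute engine and HDFS is the storage system.

3. Unified metadata management: the metastore can be shared with other tools such as Pig and Presto.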

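Hive disadvantages

1. Limited expressiveness: iterative algorithms and complex computations are hard to express in HQL.

2. Low efficiency: the MapReduce jobs Hive generates are not very smart, HQL is hard to tune, and there is little fine-grained control.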

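Accessing Hive

1. JDBC and ODBC access.

2. A client/server model built on the open-source Thrift framework, so clients can be written in any language Thrift supports.

3. The web UI.

4. The console (command line).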

Using the Hive Web Interface (HWI)

Add the following three properties to hive-site.xml in the $HIVE_HOME/conf directory:

<property>
  <name>hive.hwi.listen.host</name>
  <value>0.0.0.0</value>
</property>
<property>
  <name>hive.hwi.listen.port</name>
  <value>9999</value>
</property>
<property>
  <name>hive.hwi.war.file</name>
  <value>lib/hive-hwi-0.13.1.war</value>
</property>
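A typo in a property name is easy to miss. To confirm the three properties are actually present, here is a minimal sketch that lists the property name/value pairs of a hive-site.xml-style file using only the JDK's DOM parser. The class HiveSiteReader and its methods are hypothetical helpers and not part of Hive. Hive itself reads this file through its own HiveConf class.

```java
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: lists name/value pairs from a Hadoop/Hive-style configuration file
final class HiveSiteReader {
    private HiveSiteReader() {}

    /** Collects every property's name and value, in document order. */
    static Map<String, String> readProperties(InputStream xml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(xml);
        NodeList props = doc.getElementsByTagName("property");
        Map<String, String> result = new LinkedHashMap<>();
        for (int i = 0; i < props.getLength(); i++) {
            Element prop = (Element) props.item(i);
            String name = childText(prop, "name");
            if (name != null) {
                result.put(name, childText(prop, "value"));
            }
        }
        return result;
    }

    // Text of the first descendant element with the given tag, or null if there is none
    private static String childText(Element parent, String tag) {
        NodeList nodes = parent.getElementsByTagName(tag);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        // Usage: java HiveSiteReader $HIVE_HOME/conf/hive-site.xml
        try (InputStream in = Files.newInputStream(Paths.get(args[0]))) {
            for (Map.Entry<String, String> e : readProperties(in).entrySet()) {
                if (e.getKey().startsWith("hive.hwi.")) {
                    System.out.println(e.getKey() + " = " + e.getValue());
                }
            }
        }
    }
}
```

The sketch prints only the hive.hwi.* entries, so you can see at a glance whether the host, port, and WAR path were picked up.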

Here lib/hive-hwi-0.13.1.war is the WAR package that contains the Hive web pages. The 0.13.1 release does not ship this WAR, so you have to build it yourself:

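wget http://apache.fayea.com/apache-mirror/hive/hive-0.13.1/apache-hive-0.13.1-src.tar.gz
tar -zxvf apache-hive-0.13.1-src.tar.gz
cd apache-hive-0.13.1-src
cd hwi/web
zip -r hive-hwi-0.13.1.zip ./*    # package the web pages, including subdirectories, into a .zip file
mv hive-hwi-0.13.1.zip hive-hwi-0.13.1.war    # a WAR is a ZIP archive with a .war extension
scp hive-hwi-0.13.1.war db96:/usr/local/hive/lib/    # copy it into the lib directory of the Hive installation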
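If the zip tool is not installed on the build machine, the same archive can be produced from Java. A WAR file is an ordinary ZIP archive, so packaging the hwi/web directory recursively and giving the result a .war name is enough. The following is a minimal sketch using java.util.zip. The class WarPacker is a hypothetical helper, and it assumes the output file is not placed inside the directory being packed.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;
import java.util.zip.ZipEntry;
import java.util.zip.ZipOutputStream;

// Hypothetical helper: zips a web directory (recursively) into a .war file
final class WarPacker {
    private WarPacker() {}

    /** Adds every regular file under webDir to warFile, with paths relative to webDir. */
    static void pack(Path webDir, Path warFile) throws IOException {
        List<Path> files;
        try (Stream<Path> walk = Files.walk(webDir)) {
            files = walk.filter(Files::isRegularFile).sorted().collect(Collectors.toList());
        }
        try (ZipOutputStream zip = new ZipOutputStream(Files.newOutputStream(warFile))) {
            for (Path file : files) {
                // ZIP entry names always use '/' as the separator
                String entryName = webDir.relativize(file).toString().replace('\\', '/');
                zip.putNextEntry(new ZipEntry(entryName));
                Files.copy(file, zip);
                zip.closeEntry();
            }
        }
    }

    public static void main(String[] args) throws IOException {
        // Usage: java WarPacker apache-hive-0.13.1-src/hwi/web hive-hwi-0.13.1.war
        pack(Paths.get(args[0]), Paths.get(args[1]));
    }
}
```

Walking the tree explicitly makes sure files in subdirectories such as WEB-INF end up in the archive.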

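Start HWI:

hive --service hwi

Then open http://hive-host:9999/hwi/ in a browser. Here, hive-host is the machine running HWI, and 9999 is the port set by hive.hwi.listen.port.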

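If you get the following error:

Problem accessing /hwi/. Reason:
Unable to find a javac compiler;
com.sun.tools.javac.Main is not on the classpath.
Perhaps JAVA_HOME does not point to the JDK.
It is currently set to "/usr/java/jdk1.7.0_55/jre"

Solution: JAVA_HOME points to the JRE inside the JDK. The class com.sun.tools.javac.Main lives in the JDK's lib/tools.jar, which is not on Hive's classpath. Copy tools.jar into Hive's lib directory:

cp /usr/java/jdk1.7.0_55/lib/tools.jar /usr/local/hive/lib/

Then restart with hive --service hwi.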
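To check in advance whether a given classpath can see the compiler class the error complains about, here is a minimal sketch that tries to load com.sun.tools.javac.Main through the application class loader, which mirrors the condition in the error message. The class JavacCheck is a hypothetical helper. Run it with Hive's lib directory on the classpath, for example java -cp "/usr/local/hive/lib/*:." JavacCheck. This only approximates what HWI sees, because the hive launcher script builds its own classpath.

```java
// Hypothetical helper: checks whether com.sun.tools.javac.Main is loadable
final class JavacCheck {
    private JavacCheck() {}

    /** True if com.sun.tools.javac.Main can be loaded from the application classpath. */
    static boolean javacMainOnClasspath() {
        try {
            // initialize = false: only locate and load the class, do not run its static initializers
            Class.forName("com.sun.tools.javac.Main", false, ClassLoader.getSystemClassLoader());
            return true;
        } catch (ClassNotFoundException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("java.home = " + System.getProperty("java.home"));
        System.out.println(javacMainOnClasspath()
            ? "com.sun.tools.javac.Main found"
            : "com.sun.tools.javac.Main not found: add the JDK's lib/tools.jar to the classpath");
    }
}
```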

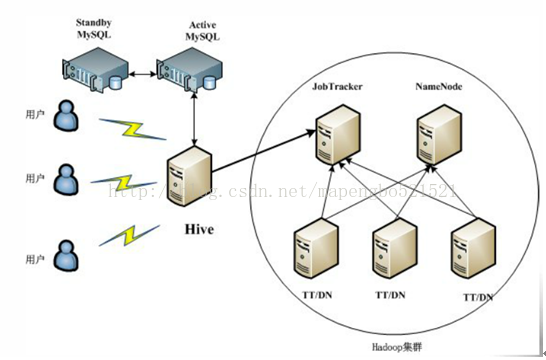

Typical deployment: the metadata is stored in MySQL, set up as a primary/standby pair.

Calling Hive from Java via JDBC

To connect to Hive over JDBC, HiveServer must be running first. It listens on port 10000 by default, and you can specify a different port:

bin/hive --service hiveserver -p 10002

If the output shows "Starting Hive Thrift Server", the server started successfully.
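Before running the JDBC client, it can save time to confirm that the server port is reachable from the client machine. A wrong port or a firewall are common causes of connection errors. The following is a minimal sketch using a plain TCP connect. PortCheck is a hypothetical helper. A successful connect only shows that something is listening on that port, not that it is HiveServer.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: tests whether a TCP port accepts connections
final class PortCheck {
    private PortCheck() {}

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isListening(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Covers refused connections, timeouts, and unknown hosts
            return false;
        }
    }

    public static void main(String[] args) {
        // Defaults are examples; pass the host and the port HiveServer was started with
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 10000;
        boolean ok = isListening(host, port, 2000);
        System.out.println(host + ":" + port + (ok ? " is reachable" : " is not reachable"));
    }
}
```

For the example command above, this would be run as java PortCheck hadoop3 10002.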

Create a Java project in Eclipse and add all jars under hive/lib to it, plus the following three Hadoop jars:

hadoop-2.5.0/share/hadoop/common/hadoop-common-2.5.0.jar

hadoop-2.5.0/share/hadoop/common/lib/slf4j-api-1.7.5.jar

hadoop-2.5.0/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar

In theory, only the following Hive jars are needed:

$HIVE_HOME/lib/hive-exec-0.13.1.jar

$HIVE_HOME/lib/hive-jdbc-0.13.1.jar

$HIVE_HOME/lib/hive-metastore-0.13.1.jar

$HIVE_HOME/lib/hive-service-0.13.1.jar

$HIVE_HOME/lib/libfb303-0.9.0.jar

$HIVE_HOME/lib/commons-logging-1.1.3.jar
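When you use the minimal jar list instead of everything in hive/lib, a missing file usually shows up only later as a ClassNotFoundException. The following is a minimal sketch that reports which of the jars listed above are absent from a lib directory. HiveJarCheck is a hypothetical helper, and the file names are the Hive 0.13.1 ones from these notes.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: reports which required jars are missing from a directory
final class HiveJarCheck {
    private HiveJarCheck() {}

    // The minimal jar list from these notes (Hive 0.13.1 file names)
    static final List<String> REQUIRED = Arrays.asList(
        "hive-exec-0.13.1.jar", "hive-jdbc-0.13.1.jar", "hive-metastore-0.13.1.jar",
        "hive-service-0.13.1.jar", "libfb303-0.9.0.jar", "commons-logging-1.1.3.jar");

    /** Returns the names in required that are not regular files inside libDir. */
    static List<String> missingJars(Path libDir, List<String> required) {
        List<String> missing = new ArrayList<>();
        for (String jar : required) {
            if (!Files.isRegularFile(libDir.resolve(jar))) {
                missing.add(jar);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        String hiveHome = System.getenv("HIVE_HOME");
        if (hiveHome == null) {
            System.err.println("HIVE_HOME is not set");
            return;
        }
        List<String> missing = missingJars(Paths.get(hiveHome, "lib"), REQUIRED);
        System.out.println(missing.isEmpty() ? "All required jars found" : "Missing: " + missing);
    }
}
```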

The test data file /home/hadoop01/data contains the following (fields separated by a tab):

1 abd

2 2sdf

3 Fdd
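Tabs are easily turned into spaces when this data is copied from a web page. The table in the code is declared with fields terminated by '\t', so a space-separated file would not be split into key and value as intended. The following is a minimal sketch that writes the same three rows with explicit tab characters. TestDataWriter is a hypothetical helper, and the default path is the one used in these notes.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: writes the sample rows with real tab separators
final class TestDataWriter {
    private TestDataWriter() {}

    /** Writes the three sample rows as "key<TAB>value" lines, overwriting the file. */
    static void write(Path file) throws IOException {
        List<String> lines = Arrays.asList("1\tabd", "2\t2sdf", "3\tFdd");
        Files.write(file, lines, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        write(Paths.get(args.length > 0 ? args[0] : "/home/hadoop01/data"));
    }
}
```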

The Java code:

package org.apache.hadoop.hive;

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

import org.apache.log4j.Logger;

public class HiveJdbcCli {

    // JDBC driver for the original HiveServer started with "hive --service hiveserver"
    private static String driverName = "org.apache.hadoop.hive.jdbc.HiveDriver";
    // The port must match the one HiveServer listens on (10000 by default)
    private static String url = "jdbc:hive://hadoop3:10000/default";
    private static String user = "";
    private static String password = "";
    private static String sql = "";
    private static ResultSet res;
    private static final Logger log = Logger.getLogger(HiveJdbcCli.class);

    public static void main(String[] args) {
        Connection conn = null;
        Statement stmt = null;
        int exitCode = 0;
        try {
            conn = getConn();
            stmt = conn.createStatement();
            // Step 1: drop the table if it already exists
            String tableName = dropTable(stmt);
            // Step 2: create the table
            createTable(stmt, tableName);
            // Step 3: show the table that was just created
            showTables(stmt, tableName);
            // Run "describe table"
            describeTables(stmt, tableName);
            // Run "load data into table"
            loadData(stmt, tableName);
            // Run a "select *" query
            selectData(stmt, tableName);
            // Run a regular Hive query that counts the rows
            countData(stmt, tableName);
        } catch (ClassNotFoundException e) {
            e.printStackTrace();
            log.error(driverName + " not found!", e);
            exitCode = 1;
        } catch (SQLException e) {
            e.printStackTrace();
            log.error("SQL error!", e);
            exitCode = 1;
        } finally {
            // Close the statement before the connection that created it
            try {
                if (stmt != null) {
                    stmt.close();
                }
                if (conn != null) {
                    conn.close();
                }
            } catch (SQLException e) {
                e.printStackTrace();
            }
        }
        // Exit only after the finally block, so the cleanup above always runs
        if (exitCode != 0) {
            System.exit(exitCode);
        }
    }

    private static void countData(Statement stmt, String tableName)
            throws SQLException {
        sql = "select count(1) from " + tableName;
        System.out.println("Running: " + sql);
        res = stmt.executeQuery(sql);
        System.out.println("Result of the regular Hive query:");
        while (res.next()) {
            System.out.println("count ------> " + res.getString(1));
        }
    }

    private static void selectData(Statement stmt, String tableName)
            throws SQLException {
        sql = "select * from " + tableName;
        System.out.println("Running: " + sql);
        res = stmt.executeQuery(sql);
        System.out.println("Result of the select * query:");
        while (res.next()) {
            System.out.println(res.getInt(1) + "\t" + res.getString(2));
        }
    }

    private static void loadData(Statement stmt, String tableName)
            throws SQLException {
        // "local" refers to the file system of the machine running HiveServer, not the client
        String filepath = "/home/hadoop01/data";
        sql = "load data local inpath '" + filepath + "' into table " + tableName;
        System.out.println("Running: " + sql);
        res = stmt.executeQuery(sql);
    }

    private static void describeTables(Statement stmt, String tableName)
            throws SQLException {
        sql = "describe " + tableName;
        System.out.println("Running: " + sql);
        res = stmt.executeQuery(sql);
        System.out.println("Result of describe table:");
        while (res.next()) {
            System.out.println(res.getString(1) + "\t" + res.getString(2));
        }
    }

    private static void showTables(Statement stmt, String tableName)
            throws SQLException {
        sql = "show tables '" + tableName + "'";
        System.out.println("Running: " + sql);
        res = stmt.executeQuery(sql);
        System.out.println("Result of show tables:");
        if (res.next()) {
            System.out.println(res.getString(1));
        }
    }

    private static void createTable(Statement stmt, String tableName)
            throws SQLException {
        sql = "create table "
                + tableName
                + " (key int, value string) row format delimited fields terminated by '\t'";
        stmt.executeQuery(sql);
    }

    private static String dropTable(Statement stmt) throws SQLException {
        // Name of the table to create
        String tableName = "testHive";
        sql = "drop table if exists " + tableName;
        stmt.executeQuery(sql);
        return tableName;
    }

    private static Connection getConn() throws ClassNotFoundException,
            SQLException {
        Class.forName(driverName);
        Connection conn = DriverManager.getConnection(url, user, password);
        return conn;
    }
}
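The client builds every HQL statement by string concatenation. A single missing space (for example "from" + tableName) silently produces invalid HQL, and an unchecked table name could smuggle in extra SQL. The following is a minimal sketch that centralizes these statements and validates identifiers. HiveQl is a hypothetical helper. The identifier rule (letters, digits, underscore, not starting with a digit) is a conservative assumption, not Hive's full grammar.

```java
import java.util.regex.Pattern;

// Hypothetical helper: builds the HQL strings used by the client, with basic validation
final class HiveQl {
    private HiveQl() {}

    // Conservative identifier rule (an assumption, stricter than Hive's own)
    private static final Pattern IDENTIFIER = Pattern.compile("[A-Za-z_][A-Za-z0-9_]*");

    private static String checkTable(String name) {
        if (name == null || !IDENTIFIER.matcher(name).matches()) {
            throw new IllegalArgumentException("Invalid table name: " + name);
        }
        return name;
    }

    static String dropTableIfExists(String table) {
        return "drop table if exists " + checkTable(table);
    }

    static String createKeyValueTable(String table) {
        // "\\t" puts the two characters \t into the HQL text; Hive reads them as a tab
        return "create table " + checkTable(table)
            + " (key int, value string) row format delimited fields terminated by '\\t'";
    }

    static String loadLocalData(String path, String table) {
        if (path.indexOf('\'') >= 0) {
            throw new IllegalArgumentException("Path must not contain a single quote: " + path);
        }
        return "load data local inpath '" + path + "' into table " + checkTable(table);
    }

    static String count(String table) {
        return "select count(1) from " + checkTable(table);
    }
}
```

In the client, countData could then call stmt.executeQuery(HiveQl.count(tableName)), and the other helper methods could be adapted the same way.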
