xxl-job是一个很好用的分布式任务调度平台,不过它本身是用的mysql, 如果想在其它数据库就会有或多或少的问题,在这里我归纳总结一下。

xxl-job适配数据库总共有三大点:

一,导入对应数据库的驱动包

二,对于数据库分页的适配,

三,对于数据库保存之后返回自增ID的适配

在网上面也能看到一些xxl-job对于国产数据适配,基本都是导一个驱动包就完事儿了,但是实际用的时候会有些问题。一个是有的数据库分页不支持LIMIT 语法例如oracle, OceanBase的oracle模式。还有一个是数据保存之后返回自增ID问题,具体表现就是执行任务之后任务的执行结果不能正常显示出来。

这里我们挨个解决。

一驱动包,

驱动包,直接在maven仓库里弄或者去官网下载就可以了。

然后配置一下数据库连接信息。我这边贴几个maven配置信息

<!-- 达梦数据库驱动 -->

<dependency>

<groupId>com.dameng</groupId>

<artifactId>Dm8JdbcDriver18</artifactId>

<version>8.1.1.49</version>

</dependency>

<dependency>

<groupId>com.dameng</groupId>

<artifactId>DmDialect-for-hibernate5.3</artifactId>

<version>8.1.1.49</version>

</dependency>

<!-- 神通数据库驱动 -->

<dependency>

<groupId>com.shentong</groupId> <!--自定义-->

<artifactId>oscarJDBC</artifactId> <!--自定义-->

<version>1.0</version> <!--自定义-->

<scope>system</scope>

<systemPath>${pom.basedir}/lib/oscarJDBC.jar</systemPath> <!--项目根目录下的lib文件夹下-->

</dependency>

<dependency>

<groupId>com.shentong</groupId> <!--自定义-->

<artifactId>oscarHibernate54</artifactId> <!--自定义-->

<version>1.0</version> <!--自定义-->

<scope>system</scope>

<systemPath>${pom.basedir}/lib/oscarHibernate54.jar</systemPath> <!--项目根目录下的lib文件夹下-->

</dependency>

<!-- 人大金仓数据库驱动 -->

<dependency>

<groupId>com.kingbase</groupId> <!--自定义-->

<artifactId>hibernate-dialect</artifactId> <!--自定义-->

<version>4.0</version> <!--自定义-->

<scope>system</scope>

<systemPath>${pom.basedir}/lib/hibernate-4.dialect.jar</systemPath> <!--项目根目录下的lib文件夹下-->

</dependency>

<!-- 人大金仓V8R6 -->

<dependency>

<groupId>com.kingbase</groupId>

<artifactId>kingbase8</artifactId>

<version>8.6.0</version>

<scope>system</scope>

<systemPath>${pom.basedir}/lib/kingbase8-8.6.0.jar</systemPath>

</dependency>

<!-- 人大金仓V8R3 -->

<!-- <dependency>-->

<!-- <groupId>com.kingbase</groupId>-->

<!-- <artifactId>kingbase8</artifactId>-->

<!-- <version>8.2.0</version>-->

<!-- <scope>system</scope>-->

<!-- <systemPath>${pom.basedir}/lib/kingbase8-8.2.0.jar</systemPath>-->

<!-- </dependency>-->

<!-- 高斯数据库驱动 -->

<dependency>

<groupId>org.postgresql</groupId> <!--自定义-->

<artifactId>gauss</artifactId> <!--自定义-->

<version>1.0</version> <!--自定义-->

<scope>system</scope>

<systemPath>${pom.basedir}/lib/gsjdbc4.jar</systemPath> <!--项目根目录下的lib文件夹下-->

</dependency>

<!-- oceanBase数据库驱动包 -->

<!-- https://mvnrepository.com/artifact/com.alipay.oceanbase/oceanbase-client -->

<dependency>

<groupId>com.alipay.oceanbase</groupId>

<artifactId>oceanbase-client</artifactId>

<version>1.1.10</version>

<scope>system</scope>

<systemPath>${pom.basedir}/lib/oceanbase-client-1.1.10.2.jar</systemPath>

</dependency>

<!-- 瀚高数据库 -->

<dependency>

<groupId>com.highgo</groupId>

<artifactId>HgdbJdbc</artifactId>

<version>6.2.2</version>

</dependency>

<!-- 瀚高数据库 end -->

<!--pagehelper-->

<dependency>

<groupId>com.github.pagehelper</groupId>

<artifactId>pagehelper-spring-boot-starter</artifactId>

<version>1.3.0</version>

<exclusions>

<exclusion>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

</exclusion>

</exclusions>

</dependency> datasource:

driver-class-name: dm.jdbc.driver.DmDriver

url: jdbc:dm://127.0.0.1:1111/DMNAME?zeroDateTimeBehavior=convertToNull&useUnicode=true&characterEncoding=utf-8

username: SYSDBA

password: 123456

platform: dm

# mysql dm pg kingbase oscar

# driver-class-name: com.kingbase8.Driver

# url: jdbc:kingbase8://127.0.0.1:1111/DMNAME

# username: SYSTEM

# password: 123456

# platform: kingbase

# driver-class-name: com.oscar.Driver

# url: jdbc:oscar://127.0.0.1:1111/DMNAME

# username: sysdba

# password: 123456

# platform: pg

# driver-class-name: com.mysql.cj.jdbc.Driver

# url: jdbc:mysql://127.0.0.1:1111/DMNAME?useUnicode=true&characterEncoding=utf8&useSSL=false&serverTimezone=GMT%2B8&allowMultiQueries=true&jdbcCompliantTruncation=false&zeroDateTimeBehavior=convertToNull

# username: root

# password: 123456

# platform: mysql

# driver-class-name: com.alipay.oceanbase.jdbc.Driver

# url: jdbc:oceanbase:oracle://127.0.0.1:1111/SYS?zeroDateTimeBehavior=convertToNull&useUnicode=true&characterEncoding=utf-8&clobAsString=true&allowMultiQueries=true&rewriteBatchedStatements=true

# username: SYS@ziguang_oracle#isv_pansoft:100005

# password: 123456

# platform: oracle

# url: jdbc:highgo://127.0.0.1:1111/dbname?strToNull=true&productNameCompatible=true

# driver-class-name: com.highgo.jdbc.Driver

# username: sysdba

# password: 123456

# platform: highgo二,对于数据库分页的适配

对于数据库的分页,由于不用数据库,方言不同。这边引入了pageHelper组件。

不同数据库分分页方式不一样,mysql用的是limit, oracle这里用的是rownum. 在这里用了一个分页插件PageHelper. 它可以根据不同的数据库来生成对应的分页语句。具体应用为

<!--pagehelper-->

<dependency>

<groupId>com.github.pagehelper</groupId>

<artifactId>pagehelper-spring-boot-starter</artifactId>

<version>1.3.0</version>

<exclusions>

<exclusion>

<groupId>org.mybatis.spring.boot</groupId>

<artifactId>mybatis-spring-boot-starter</artifactId>

</exclusion>

</exclusions>

</dependency>在配置文件设置对应参数

### resources

pagehelper:

reasonable: true

support-methods-arguments: true

params: count=countSql

# 关于pagehelper支持哪些数据库方言参考:com.github.pagehelper.page.PageAutoDialect

helper-dialect: postgresql #达梦

# helper-dialect: postgresql #人大金仓

# helper-dialect: oscar #神通

# helper-dialect: mysql #mysql

# helper-dialect: oracle #OB

# helperDialect: postgresql #瀚高配置号之后就可以使用了。 使用方法很简单。

PageInfo<Map<String, Object>> pageInfo =

PageHelper.startPage(pageDTO.getPage(), pageDTO.getLimit())

.doSelectPageInfo(() ->accessRecordMapper.findAccessRecordByPageDTO(pageDTO));

这个组件在另一篇里面也说到过

项目适配oceanBase oracle数据库 mysql适配oracle_maqinghui的博客-CSDN博客

配置好之后,就需要找到xxl-job代码里面用到分页的地方进行修改了。需要改地方不算多总共有10个地方需要修改。

这里列举两,地方:

XxlJobServiceImpl.pageList

@Override

public Map<String, Object> pageList(int start, int length, int jobGroup, int triggerStatus, String jobDesc, String executorHandler, String author) {

// page list 这里注意页数的计算, 如果计算不对分页会有问题。

Integer startpage = start/length + 1;

PageInfo<XxlJobInfo> xxlJobInfoPageInfo = PageHelper.startPage(startpage, length)

.doSelectPageInfo(() -> xxlJobInfoDao.pageList(jobGroup, triggerStatus, jobDesc, executorHandler, author));

List<XxlJobInfo> list = xxlJobInfoPageInfo.getList();

Long list_count = xxlJobInfoPageInfo.getTotal();

//List<XxlJobInfo> list = xxlJobInfoDao.pageList(start, length, jobGroup, triggerStatus, jobDesc, executorHandler, author);

//int list_count = xxlJobInfoDao.pageListCount(start, length, jobGroup, triggerStatus, jobDesc, executorHandler, author);

// package result

Map<String, Object> maps = new HashMap<String, Object>();

maps.put("recordsTotal", list_count); // 总记录数

maps.put("recordsFiltered", list_count); // 过滤后的总记录数

maps.put("data", list); // 分页列表

return maps;

}2,xxl-job里面删除日志的时候也是用分页删的。 在这里只吧一个sql分成两个,先查询,再删除

JobLogReportHelper.start()

package com.xxl.job.admin.core.thread;

import com.github.pagehelper.PageHelper;

import com.github.pagehelper.PageInfo;

import com.xxl.job.admin.core.conf.XxlJobAdminConfig;

import com.xxl.job.admin.core.model.XxlJobLogReport;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.*;

import java.util.concurrent.TimeUnit;

/**

* job log report helper

*

* @author xuxueli 2019-11-22

*/

public class JobLogReportHelper {

private static Logger logger = LoggerFactory.getLogger(JobLogReportHelper.class);

private static JobLogReportHelper instance = new JobLogReportHelper();

public static JobLogReportHelper getInstance(){

return instance;

}

private Thread logrThread;

private volatile boolean toStop = false;

public void start(){

logrThread = new Thread(new Runnable() {

@Override

public void run() {

// last clean log time

long lastCleanLogTime = 0;

while (!toStop) {

// 1、log-report refresh: refresh log report in 3 days

try {

for (int i = 0; i < 3; i++) {

// today

Calendar itemDay = Calendar.getInstance();

itemDay.add(Calendar.DAY_OF_MONTH, -i);

itemDay.set(Calendar.HOUR_OF_DAY, 0);

itemDay.set(Calendar.MINUTE, 0);

itemDay.set(Calendar.SECOND, 0);

itemDay.set(Calendar.MILLISECOND, 0);

Date todayFrom = itemDay.getTime();

itemDay.set(Calendar.HOUR_OF_DAY, 23);

itemDay.set(Calendar.MINUTE, 59);

itemDay.set(Calendar.SECOND, 59);

itemDay.set(Calendar.MILLISECOND, 999);

Date todayTo = itemDay.getTime();

// refresh log-report every minute

XxlJobLogReport xxlJobLogReport = new XxlJobLogReport();

xxlJobLogReport.setTriggerDay(todayFrom);

xxlJobLogReport.setRunningCount(0);

xxlJobLogReport.setSucCount(0);

xxlJobLogReport.setFailCount(0);

Map<String, Object> triggerCountMap = XxlJobAdminConfig.getAdminConfig().getXxlJobLogDao().findLogReport(todayFrom, todayTo);

if (triggerCountMap!=null && triggerCountMap.size()>0) {

int triggerDayCount = triggerCountMap.containsKey("triggerDayCount")?Integer.valueOf(String.valueOf(triggerCountMap.get("triggerDayCount"))):0;

int triggerDayCountRunning = triggerCountMap.containsKey("triggerDayCountRunning")?Integer.valueOf(String.valueOf(triggerCountMap.get("triggerDayCountRunning"))):0;

int triggerDayCountSuc = triggerCountMap.containsKey("triggerDayCountSuc")?Integer.valueOf(String.valueOf(triggerCountMap.get("triggerDayCountSuc"))):0;

int triggerDayCountFail = triggerDayCount - triggerDayCountRunning - triggerDayCountSuc;

xxlJobLogReport.setRunningCount(triggerDayCountRunning);

xxlJobLogReport.setSucCount(triggerDayCountSuc);

xxlJobLogReport.setFailCount(triggerDayCountFail);

}

// do refresh

int ret = XxlJobAdminConfig.getAdminConfig().getXxlJobLogReportDao().update(xxlJobLogReport);

if (ret < 1) {

XxlJobAdminConfig.getAdminConfig().getXxlJobLogReportDao().save(xxlJobLogReport);

}

}

} catch (Exception e) {

if (!toStop) {

logger.error(">>>>>>>>>>> xxl-job, job log report thread error:{}", e);

}

}

// 2、log-clean: switch open & once each day

if (XxlJobAdminConfig.getAdminConfig().getLogretentiondays()>0

&& System.currentTimeMillis() - lastCleanLogTime > 24*60*60*1000) {

// expire-time

Calendar expiredDay = Calendar.getInstance();

expiredDay.add(Calendar.DAY_OF_MONTH, -1 * XxlJobAdminConfig.getAdminConfig().getLogretentiondays());

expiredDay.set(Calendar.HOUR_OF_DAY, 0);

expiredDay.set(Calendar.MINUTE, 0);

expiredDay.set(Calendar.SECOND, 0);

expiredDay.set(Calendar.MILLISECOND, 0);

Date clearBeforeTime = expiredDay.getTime();

// clean expired log

List<Long> logIds = null;

do {

//1先查出来,

PageInfo<Long> xxlJobLogPageInfo = PageHelper.startPage(0, 1000)

.doSelectPageInfo(() -> XxlJobAdminConfig.getAdminConfig().getXxlJobLogDao().findClearLogIds(0, 0, clearBeforeTime, new ArrayList<>()));

logIds = xxlJobLogPageInfo.getList();

//这里原来是直接在查询里面有分页。

//logIds = XxlJobAdminConfig.getAdminConfig().getXxlJobLogDao().findClearLogIds(0, 0, clearBeforeTime, 0, 1000);

if (logIds!=null && logIds.size()>0) {

//再删除

XxlJobAdminConfig.getAdminConfig().getXxlJobLogDao().clearLog(logIds);

}

} while (logIds!=null && logIds.size()>0);

// update clean time

lastCleanLogTime = System.currentTimeMillis();

}

try {

TimeUnit.MINUTES.sleep(1);

} catch (Exception e) {

if (!toStop) {

logger.error(e.getMessage(), e);

}

}

}

logger.info(">>>>>>>>>>> xxl-job, job log report thread stop");

}

});

logrThread.setDaemon(true);

logrThread.setName("xxl-job, admin JobLogReportHelper");

logrThread.start();

}

public void toStop(){

toStop = true;

// interrupt and wait

logrThread.interrupt();

try {

logrThread.join();

} catch (InterruptedException e) {

logger.error(e.getMessage(), e);

}

}

}

<select id="findClearLogIds" resultType="long" >

SELECT id FROM xxl_job_log

<trim prefix="WHERE" prefixOverrides="AND | OR" >

<if test="jobGroup gt 0">

AND job_group = #{jobGroup}

</if>

<if test="jobId gt 0">

AND job_id = #{jobId}

</if>

<if test="clearBeforeTime != null">

AND trigger_time <![CDATA[ <= ]]> #{clearBeforeTime}

</if>

<if test="logIds.size gt 0">

AND id NOT in

<foreach collection="logIds" item="item" open="(" close=")" separator="," >

#{item}

</foreach>

<!-- (

SELECT id FROM(

SELECT id FROM xxl_job_log t

<trim prefix="WHERE" prefixOverrides="AND | OR" >

<if test="jobGroup gt 0">

AND t.job_group = #{jobGroup}

</if>

<if test="jobId gt 0">

AND t.job_id = #{jobId}

</if>

</trim>

ORDER BY t.trigger_time desc

LIMIT 0, #{clearBeforeNum}

) t1

) -->

</if>

</trim>

order by id asc

<!-- LIMIT #{pagesize} -->

</select>

<select id="findNotClearLogIds" resultType="long" >

SELECT id FROM xxl_job_log t

<trim prefix="WHERE" prefixOverrides="AND | OR" >

<if test="jobGroup gt 0">

AND t.job_group = #{jobGroup}

</if>

<if test="jobId gt 0">

AND t.job_id = #{jobId}

</if>

</trim>

ORDER BY t.trigger_time desc

</select>三,对于数据库保存之后返回自增ID的适配

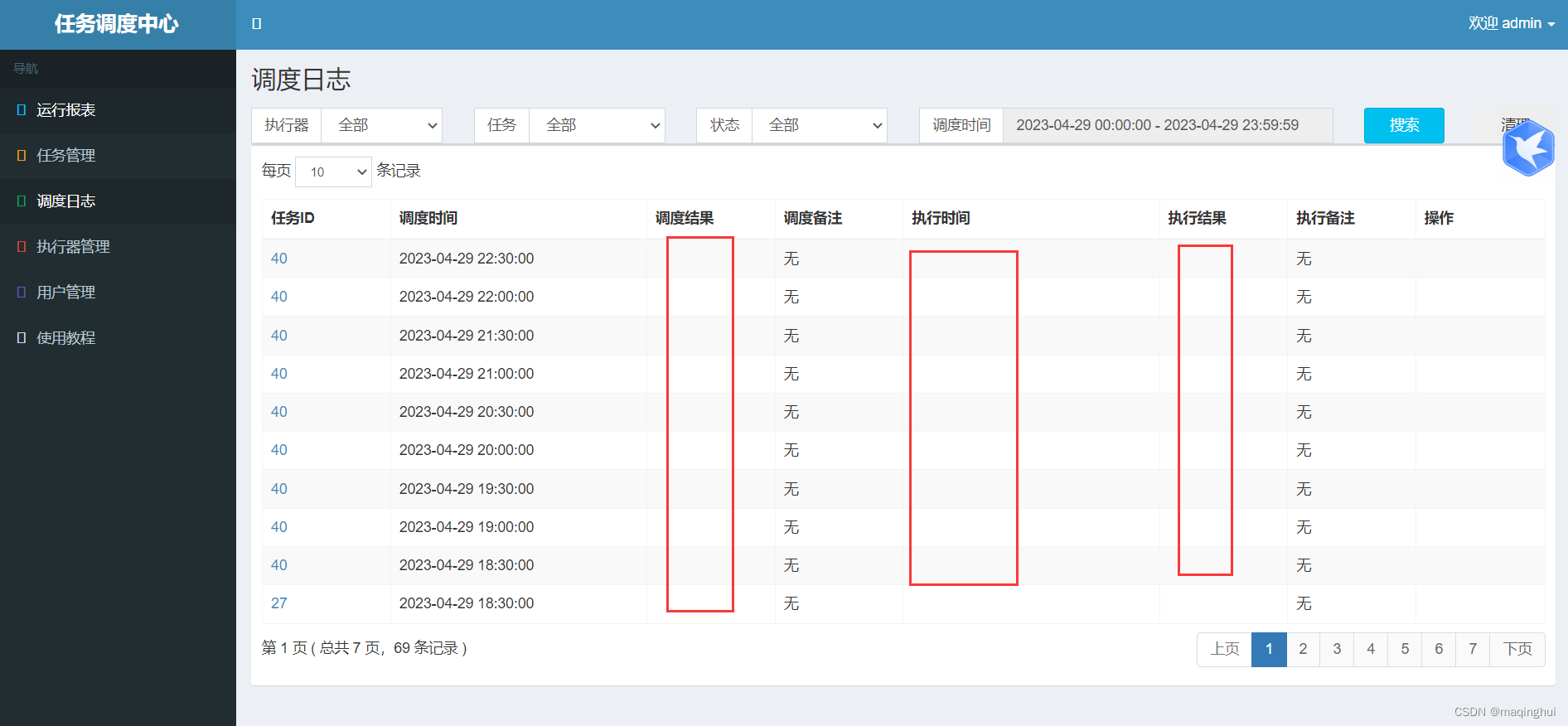

前两个适配好之后,基本就可以正常用了, 但是使用中会发现,,执行的任务没有返回结果。

然后就找了一下日志的保存方法。

发现这个

XxlJobTrigger.processTrigger()

发现XxlJobLog保存之后又用到了它的ID。 但是XxlJobLog 的ID是自增的,实际保存的时候没有给ID赋值,保存之后,数据库生成的ID又没有正常返回,导致执行结果失败。针对这个

看了一下xxl-job的保存方法。在里面加了返回自增ID的片段。

<insert id="save" parameterType="com.xxl.job.admin.core.model.XxlJobLog" >

INSERT INTO xxl_job_log (

job_group,

job_id,

trigger_time,

trigger_code,

handle_code

) VALUES (

#{jobGroup},

#{jobId},

#{triggerTime},

#{triggerCode},

#{handleCode}

);

<selectKey resultType="java.lang.Integer" order="AFTER" keyProperty="id">

<if test="_databaseId == 'dm'">

SELECT @@IDENTITY AS id

</if>

<if test="_databaseId == 'pg'">

select XXL_JOB_LOG_ID_SEQ.currval

</if>

<if test="_databaseId == 'kingbase'">

SELECT lastval()

</if>

<if test="_databaseId == 'highgo'">

SELECT lastval()

</if>

<if test="_databaseId == 'gs'">

set enable_beta_features=true;SELECT lastval()

</if>

<if test="_databaseId == 'mysql'">

SELECT LAST_INSERT_ID()

</if>

</selectKey>

</insert>这里面不同的数据库 返回自增ID的写法也不一样,

里面的

_databaseId是通过mybatis参数设置进去的。

### mybatis

mybatis:

configuration:

database-id: dm #达梦

# database-id: mysql #mysql

# database-id: kingbase #人大金仓

# database-id: oracle #OB

# database-id: highgo #瀚高

# database-id: gs #高斯

# database-id: vast #海量然后看了一下xxl-job里面的保存方法,只有XxlJobLog 保存之后后面又用到了它的ID,然后就只改XxlJobLog的save方法就可以了。

到此适配工作就结束了。

如果后面再有新的数据库只需要把驱动jar包导进去,然后适配一下返回的自增ID,就可以用了。

1439

1439

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?