Original article URL (please credit the source when reposting):

Source code based on: Android Q


1.Introduction

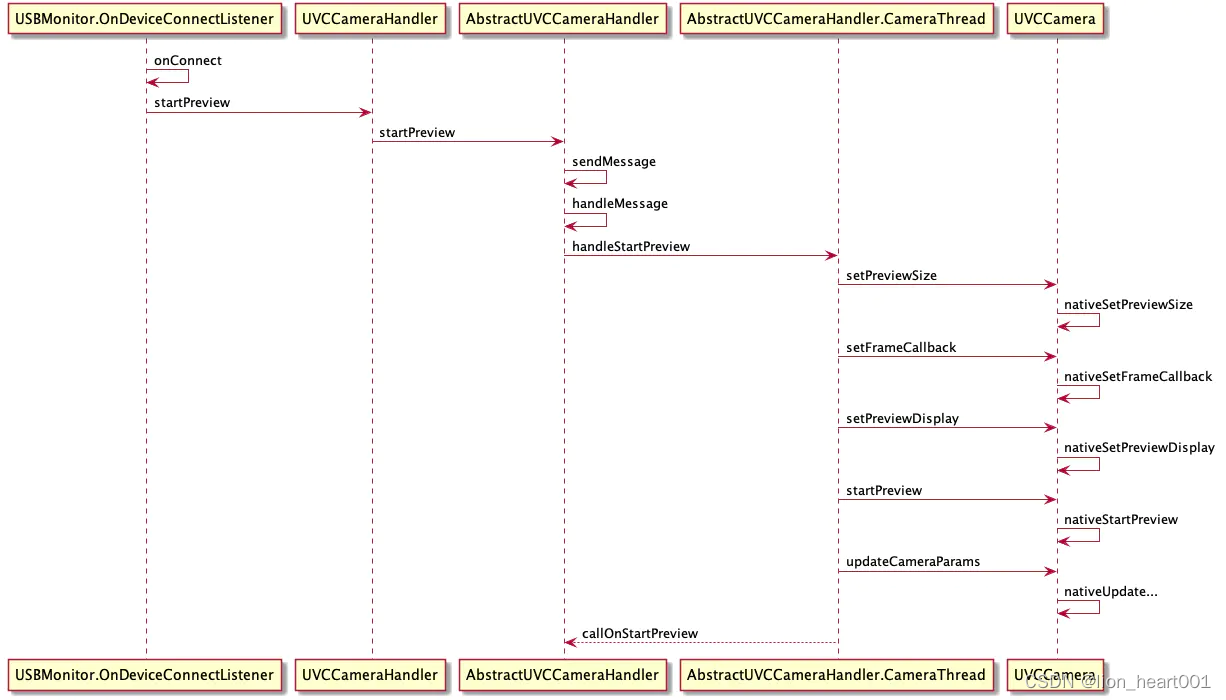

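Once UVCCamera's series of open operations has completed successfully, we can move on to the startPreview stage. The upper-layer call logic of this stage is fairly simple, so let's first look at a rough sequence diagram: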

2.Sequence diagram

In the onConnect callback of USBMonitor.OnDeviceConnectListener (after openCamera), we first call the startPreview method of UVCCameraHandler.

The source shows that UVCCameraHandler simply calls super.startPreview, so the real work happens in its superclass, AbstractUVCCameraHandler.

3.Java layer (usbcameratest7)

3.1.UVCCameraHandler.java

@Override

public void startPreview(final Object surface) {

super.startPreview(surface);

}

3.2.AbstractUVCCameraHandler.java

protected void startPreview(final Object surface) {

checkReleased();

if (!((surface instanceof SurfaceHolder) || (surface instanceof Surface) || (surface instanceof SurfaceTexture))) {

throw new IllegalArgumentException("surface should be one of SurfaceHolder, Surface or SurfaceTexture");

}

sendMessage(obtainMessage(MSG_PREVIEW_START, surface));

}

...

@Override

public void handleMessage(final Message msg) {

final CameraThread thread = mWeakThread.get();

if (thread == null) return;

switch (msg.what) {

...

case MSG_PREVIEW_START:

thread.handleStartPreview(msg.obj);

break;

...

public void handleStartPreview(final Object surface) {

if (DEBUG) Log.v(TAG_THREAD, "handleStartPreview:");

if ((mUVCCamera == null) || mIsPreviewing) return;

try {

mUVCCamera.setPreviewSize(mWidth, mHeight, 1, 31, mPreviewMode, mBandwidthFactor); // 4.1

} catch (final IllegalArgumentException e) {

try {

// fallback to YUV mode

mUVCCamera.setPreviewSize(mWidth, mHeight, 1, 31, UVCCamera.DEFAULT_PREVIEW_MODE, mBandwidthFactor);

} catch (final IllegalArgumentException e1) {

callOnError(e1);

return;

}

}

if (surface instanceof SurfaceHolder) {

mUVCCamera.setPreviewDisplay((SurfaceHolder)surface);// 4.2

} else if (surface instanceof Surface) { // "else if": without it, a SurfaceHolder falls through to the SurfaceTexture cast below

mUVCCamera.setPreviewDisplay((Surface)surface);

} else {

mUVCCamera.setPreviewTexture((SurfaceTexture)surface);

}

mUVCCamera.startPreview();// 4.3

mUVCCamera.updateCameraParams();

synchronized (mSync) {

mIsPreviewing = true;

}

callOnStartPreview();

}

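The camera workflow can roughly be divided into "data capture" and "rendering", and startPreview is where the two meet. startPreview takes a surface parameter of type Object: this is the texture (render target) needed for rendering, and every frame the camera captures is drawn onto it. Taking photos, recording video and even image editing (cropping, filters, etc.) all work with this texture later on; we will cover it in detail when we discuss the rendering flow. For now it is enough to know that the texture is passed in from outside, and, as the check in AbstractUVCCameraHandler.startPreview shows, it must be a SurfaceHolder, a Surface or a SurfaceTexture. In Android UI such a drawing target is wrapped by SurfaceView or TextureView; in UVCCamera it is UVCCameraTextureView, which inherits from android.view.TextureView.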

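Once AbstractUVCCameraHandler has the surface passed in from outside, it follows the same pattern as openCamera: camera operations are scheduled through the message mechanism. Here it sends an MSG_PREVIEW_START message to tell AbstractUVCCameraHandler.CameraThread to start the preview.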
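The "post a message, let the camera thread do the work" pattern can be illustrated outside Android with a tiny message loop. The C++ sketch below is only an analogy for Handler/Looper, assuming std::thread and a mutex-protected std::deque; CameraThreadSketch, the Msg codes and the counter inside handleStartPreview are hypothetical names, not part of UVCCamera.

```cpp
#include <cassert>
#include <condition_variable>
#include <deque>
#include <mutex>
#include <thread>

// Hypothetical message codes, mirroring the idea of MSG_PREVIEW_START.
enum Msg { MSG_PREVIEW_START, MSG_QUIT };

// Minimal stand-in for a Handler/Looper pair: callers post messages and
// a single worker thread handles them in FIFO order.
class CameraThreadSketch {
public:
    CameraThreadSketch() : worker_(&CameraThreadSketch::loop, this) {}
    ~CameraThreadSketch() { quit(); }

    // Callable from any thread; returns immediately, like sendMessage.
    void post(Msg what) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push_back(what);
        }
        cond_.notify_one();
    }

    // Posts MSG_QUIT and waits until the worker has drained the queue.
    void quit() {
        if (worker_.joinable()) {
            post(MSG_QUIT);
            worker_.join();
        }
    }

    // Only read this after quit(): join() makes the worker's writes visible.
    int previewStarts() const { return previewStarts_; }

private:
    void loop() {
        for (;;) {
            Msg what;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                cond_.wait(lock, [this] { return !queue_.empty(); });
                what = queue_.front();
                queue_.pop_front();
            }
            switch (what) {
            case MSG_PREVIEW_START:
                handleStartPreview();   // always runs on the worker thread
                break;
            case MSG_QUIT:
                return;
            }
        }
    }

    // Placeholder for setPreviewSize / setPreviewDisplay / startPreview.
    void handleStartPreview() { ++previewStarts_; }

    std::mutex mutex_;
    std::condition_variable cond_;
    std::deque<Msg> queue_;
    int previewStarts_ = 0;   // touched only by the worker thread
    std::thread worker_;      // declared last so it starts after the members above
};
```

Because every camera operation is serialized on one thread, a start request can never run concurrently with another open or close request, which is the point of routing startPreview through a message.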

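handleStartPreview in CameraThread roughly performs these steps: set the preview parameters (falling back to the default YUV mode if the requested mode is rejected), bind the surface, start the preview and update the camera parameters. The method names make the call logic fairly easy to follow. From here on the code enters the JNI layer, so let's analyze the steps one by one.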
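The try-then-fallback logic around setPreviewSize can be sketched in C++ as follows. This is an analogy under stated assumptions: FakeCamera is hypothetical, std::invalid_argument stands in for Java's IllegalArgumentException, and mode 0 is taken to mean YUYV, matching the native mapping used later in this article.

```cpp
#include <cassert>
#include <set>
#include <stdexcept>

// Assumed mode codes: 0 = YUYV (the default/fallback), non-zero = MJPEG.
constexpr int MODE_YUYV = 0;
constexpr int MODE_MJPEG = 1;

// Hypothetical camera that throws on unsupported settings,
// the way UVCCamera.setPreviewSize throws IllegalArgumentException.
struct FakeCamera {
    std::set<int> supportedModes;
    int currentMode = -1;
    void setPreviewSize(int width, int height, int mode) {
        if (width <= 0 || height <= 0 || !supportedModes.count(mode))
            throw std::invalid_argument("unsupported preview size/mode");
        currentMode = mode;
    }
};

// Try the requested mode first, then fall back to YUYV.
// Returns the mode actually applied, or -1 if both attempts failed
// (where handleStartPreview would call callOnError and return).
int setPreviewSizeWithFallback(FakeCamera &cam, int width, int height, int mode) {
    try {
        cam.setPreviewSize(width, height, mode);
        return mode;
    } catch (const std::invalid_argument &) {
        try {
            cam.setPreviewSize(width, height, MODE_YUYV);   // fallback to YUV mode
            return MODE_YUYV;
        } catch (const std::invalid_argument &) {
            return -1;
        }
    }
}
```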

4.Native layer

4.1.setPreviewSize

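In the Java-layer UVCCamera, this method actually calls the JNI method nativeSetPreviewSize. In the previous article we saw that UVCCamera's open method also calls nativeSetPreviewSize. nativeSetPreviewSize sets the parameters the preview needs, such as width, height and fps. When it is called from open, it only assigns default values; in setPreviewSize, the values are the actual ones passed in from the upper-layer business code. The C-layer code is not very interesting: it is mostly assignments, followed by a stream-format negotiation via uvc_get_stream_ctrl_format_size_fps when the width, height or mode has changed.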

int UVCPreview::setPreviewSize(int width, int height, int min_fps, int max_fps, int mode, float bandwidth) {

ENTER();

int result = 0;

if ((requestWidth != width) || (requestHeight != height) || (requestMode != mode)) {

requestWidth = width;

requestHeight = height;

requestMinFps = min_fps;

requestMaxFps = max_fps;

requestMode = mode;

requestBandwidth = bandwidth;

uvc_stream_ctrl_t ctrl;

result = uvc_get_stream_ctrl_format_size_fps(mDeviceHandle, &ctrl,

!requestMode ? UVC_FRAME_FORMAT_YUYV : UVC_FRAME_FORMAT_MJPEG,

requestWidth, requestHeight, requestMinFps, requestMaxFps);

}

RETURN(result, int);

}
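The quoted setPreviewSize only stores new values (and renegotiates) when the width, height or mode differs from the current request. The C++ sketch below isolates that rule; PreviewRequest, formatForMode and updateRequest are hypothetical names, and the mode-to-format mapping is copied from the "!requestMode ? UVC_FRAME_FORMAT_YUYV : UVC_FRAME_FORMAT_MJPEG" expression above.

```cpp
#include <cassert>

// Hypothetical mirror of UVCPreview's request* fields.
struct PreviewRequest {
    int width = 0, height = 0, minFps = 0, maxFps = 0, mode = 0;
    float bandwidth = 1.0f;
};

enum class FrameFormat { YUYV, MJPEG };

// Same mapping as the native code: mode 0 -> YUYV, anything else -> MJPEG.
FrameFormat formatForMode(int mode) {
    return mode == 0 ? FrameFormat::YUYV : FrameFormat::MJPEG;
}

// Returns true when the request was updated, i.e. when the native code would
// call uvc_get_stream_ctrl_format_size_fps again. As in the quoted code,
// a change of fps range or bandwidth alone does not update anything.
bool updateRequest(PreviewRequest &req, int width, int height,
                   int minFps, int maxFps, int mode, float bandwidth) {
    if (req.width == width && req.height == height && req.mode == mode)
        return false;
    req.width = width;
    req.height = height;
    req.minFps = minFps;
    req.maxFps = maxFps;
    req.mode = mode;
    req.bandwidth = bandwidth;
    return true;
}
```

One consequence worth noticing: calling setPreviewSize again with the same size and mode but a different fps range leaves the old fps values in place.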

4.2.setPreviewDisplay

The call chain of this method is:

Java layer: UVCCamera.setPreviewDisplay

C layer: serenegiant_usb_UVCCamera -> nativeSetPreviewDisplay

C layer: UVCCamera -> setPreviewDisplay

C layer: UVCPreview -> setPreviewDisplay

> UVCCamera.java

public synchronized void setPreviewDisplay(final Surface surface) {

nativeSetPreviewDisplay(mNativePtr, surface);

}

> serenegiant_usb_UVCCamera.cpp

static jint nativeSetPreviewDisplay(JNIEnv *env, jobject thiz,

ID_TYPE id_camera, jobject jSurface) {

jint result = JNI_ERR;

ENTER();

UVCCamera *camera = reinterpret_cast<UVCCamera *>(id_camera);

if (LIKELY(camera)) {

ANativeWindow *preview_window = jSurface ? ANativeWindow_fromSurface(env, jSurface) : NULL;

result = camera->setPreviewDisplay(preview_window);

}

RETURN(result, jint);

}

> UVCCamera.cpp

int UVCCamera::setPreviewDisplay(ANativeWindow *preview_window) {

ENTER();

int result = EXIT_FAILURE;

if (mPreview) {

result = mPreview->setPreviewDisplay(preview_window);

}

RETURN(result, int);

}

> UVCPreview.cpp

int UVCPreview::setPreviewDisplay(ANativeWindow *preview_window) {

ENTER();

pthread_mutex_lock(&preview_mutex);

{

if (mPreviewWindow != preview_window) {

if (mPreviewWindow)

ANativeWindow_release(mPreviewWindow);

mPreviewWindow = preview_window;

if (LIKELY(mPreviewWindow)) {

ANativeWindow_setBuffersGeometry(mPreviewWindow,

frameWidth, frameHeight, previewFormat);

}

}

}

pthread_mutex_unlock(&preview_mutex);

RETURN(0, int);

}

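The ANativeWindow passed into this method is the texture mentioned earlier: JNI obtains it from the Java Surface with ANativeWindow_fromSurface, and in this sample that Surface ultimately comes from UVCCameraTextureView in the UVC library. The main job of the method is to store the window in the member variable mPreviewWindow, releasing the previously held window if it is a different one. It then calls ANativeWindow_setBuffersGeometry to set the size and format of the window buffers, which the Android documentation describes in detail: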

> Change the format and size of the window buffers.
>
> The width and height control the number of pixels in the buffers, not the dimensions of the window on screen. If these are different than the window’s physical size, then its buffer will be scaled to match that size when compositing it to the screen. The width and height must be either both zero or both non-zero.
>
> For all of these parameters, if 0 is supplied then the window’s base value will come back in force.

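The ownership rules here can be exercised off-device with a fake window. ANativeWindow_fromSurface returns a window with a reference already acquired, which is why setPreviewDisplay releases the old window when a new one arrives. In the C++ sketch below, FakeWindow, fakeRelease, fakeSetBuffersGeometry and PreviewSketch are all hypothetical; only the swap-under-lock logic and the documented "both zero or both non-zero" rule are taken from the text above.

```cpp
#include <cassert>
#include <mutex>

// Hypothetical stand-in for ANativeWindow: a reference count plus geometry.
struct FakeWindow {
    int refs = 1;   // as if returned by ANativeWindow_fromSurface (already acquired)
    int bufWidth = 0, bufHeight = 0, format = 0;
};

void fakeRelease(FakeWindow *w) { --w->refs; }

// Enforces the documented rule: width and height are both zero or both non-zero.
int fakeSetBuffersGeometry(FakeWindow *w, int width, int height, int format) {
    if ((width == 0) != (height == 0)) return -1;
    w->bufWidth = width;
    w->bufHeight = height;
    w->format = format;
    return 0;
}

// Sketch of UVCPreview::setPreviewDisplay: swap the window under a lock,
// release the previous reference, then size the new window's buffers.
class PreviewSketch {
public:
    PreviewSketch(int frameWidth, int frameHeight, int format)
        : frameWidth_(frameWidth), frameHeight_(frameHeight), format_(format) {}

    void setPreviewDisplay(FakeWindow *window) {
        std::lock_guard<std::mutex> lock(mutex_);
        if (window_ == window) return;
        if (window_) fakeRelease(window_);
        window_ = window;
        if (window_) fakeSetBuffersGeometry(window_, frameWidth_, frameHeight_, format_);
    }

private:
    std::mutex mutex_;
    FakeWindow *window_ = nullptr;
    int frameWidth_, frameHeight_, format_;
};
```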

4.3.startPreview

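Continuing the preview flow: it ends up in the handleStartPreview method of AbstractUVCCameraHandler.CameraThread, which calls UVCCamera's startPreview. As shown in the sequence diagram above, UVCCamera's startPreview eventually reaches the startPreview method of the C-layer UVCPreview.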

> AbstractUVCCameraHandler.java

public void handleStartPreview(final Object surface) {

...

mUVCCamera.startPreview();

mUVCCamera.updateCameraParams();

synchronized (mSync) {

mIsPreviewing = true;

}

callOnStartPreview();

}

> UVCCamera.java

public synchronized void startPreview() {

if (mCtrlBlock != null) {

nativeStartPreview(mNativePtr);

}

}

> serenegiant_usb_UVCCamera.cpp

static jint nativeStartPreview(JNIEnv *env, jobject thiz,

ID_TYPE id_camera) {

ENTER();

UVCCamera *camera = reinterpret_cast<UVCCamera *>(id_camera);

if (LIKELY(camera)) {

return camera->startPreview();

}

RETURN(JNI_ERR, jint);

}

> UVCCamera.cpp

int UVCCamera::startPreview() {

ENTER();

int result = EXIT_FAILURE;

if (mDeviceHandle) {

return mPreview->startPreview();

}

RETURN(result, int);

}

> UVCPreview.cpp

int UVCPreview::startPreview() {

ENTER();

int result = EXIT_FAILURE;

if (!isRunning()) {

mIsRunning = true;

pthread_mutex_lock(&preview_mutex);

{

if (LIKELY(mPreviewWindow)) {

result = pthread_create(&preview_thread, NULL, preview_thread_func, (void *)this);

}

}

pthread_mutex_unlock(&preview_mutex);

if (UNLIKELY(result != EXIT_SUCCESS)) {

LOGW("UVCCamera::window does not exist/already running/could not create thread etc.");

mIsRunning = false;

pthread_mutex_lock(&preview_mutex);

{

pthread_cond_signal(&preview_sync);

}

pthread_mutex_unlock(&preview_mutex);

}

}

RETURN(result, int);

}

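startPreview starts a thread with pthread_create, and that thread runs preview_thread_func. If there is no preview window or the thread cannot be created, it resets mIsRunning and signals preview_sync. So let's continue with preview_thread_func: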
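The start-once guard in UVCPreview::startPreview (refuse if already running, roll the running flag back on failure) can be sketched with std::thread in place of pthread_create. This is a hedged analogy: PreviewStarter and the hasWindow flag (standing in for mPreviewWindow != NULL) are hypothetical, the worker body is a placeholder for prepare_preview plus do_preview, and an atomic exchange is used where the original does a plain check followed by a set.

```cpp
#include <atomic>
#include <cassert>
#include <chrono>
#include <system_error>
#include <thread>

class PreviewStarter {
public:
    // Returns 0 on success, -1 if already running, no window, or thread creation failed.
    int startPreview(bool hasWindow) {
        if (running_.exchange(true)) return -1;   // already running
        if (!hasWindow) {
            running_ = false;                     // roll back, like mIsRunning = false
            return -1;
        }
        try {
            thread_ = std::thread([this] {
                // Placeholder for prepare_preview() followed by do_preview().
                while (running_)
                    std::this_thread::sleep_for(std::chrono::milliseconds(1));
            });
        } catch (const std::system_error &) {
            running_ = false;                     // thread could not be created
            return -1;
        }
        return 0;
    }

    // Hypothetical stop: clear the flag so the loop exits, then join.
    void stopPreview() {
        running_ = false;
        if (thread_.joinable()) thread_.join();
    }

    bool isRunning() const { return running_; }

    ~PreviewStarter() { stopPreview(); }

private:
    std::atomic<bool> running_{false};
    std::thread thread_;
};
```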

4.4.preview_thread_func

void *UVCPreview::preview_thread_func(void *vptr_args) {

int result;

ENTER();

UVCPreview *preview = reinterpret_cast<UVCPreview *>(vptr_args);

if (LIKELY(preview)) {

uvc_stream_ctrl_t ctrl;

result = preview->prepare_preview(&ctrl);

if (LIKELY(!result)) {

preview->do_preview(&ctrl);

}

}

PRE_EXIT();

pthread_exit(NULL);

}

Here we only care about two methods, prepare_preview and do_preview. Let's start with prepare_preview:

4.5.prepare_preview

int UVCPreview::prepare_preview(uvc_stream_ctrl_t *ctrl) {

uvc_error_t result;

ENTER();

result = uvc_get_stream_ctrl_format_size_fps(mDeviceHandle, ctrl,

!requestMode ? UVC_FRAME_FORMAT_YUYV : UVC_FRAME_FORMAT_MJPEG,

requestWidth, requestHeight, requestMinFps, requestMaxFps

);

if (LIKELY(!result)) {

#if LOCAL_DEBUG

uvc_print_stream_ctrl(ctrl, stderr);

#endif

uvc_frame_desc_t *frame_desc;

result = uvc_get_frame_desc(mDeviceHandle, ctrl, &frame_desc);

if (LIKELY(!result)) {

frameWidth = frame_desc->wWidth;

frameHeight = frame_desc->wHeight;

LOGI("frameSize=(%d,%d)@%s", frameWidth, frameHeight, (!requestMode ? "YUYV" : "MJPEG"));

pthread_mutex_lock(&preview_mutex);

if (LIKELY(mPreviewWindow)) {

ANativeWindow_setBuffersGeometry(mPreviewWindow,

frameWidth, frameHeight, previewFormat);

}

pthread_mutex_unlock(&preview_mutex);

} else {

frameWidth = requestWidth;

frameHeight = requestHeight;

}

frameMode = requestMode;

frameBytes = frameWidth * frameHeight * (!requestMode ? 2 : 4);

previewBytes = frameWidth * frameHeight * PREVIEW_PIXEL_BYTES;

} else {

LOGE("could not negotiate with camera:err=%d", result);

}

RETURN(result, int);

}

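This method mainly sets up the preview parameters: it negotiates the stream format, reads the actual frame width and height from the frame descriptor (falling back to the requested size if that fails), and computes the buffer sizes from the color mode (frameBytes for the raw camera frame, previewBytes for the preview image). It also calls ANativeWindow_setBuffersGeometry to update the native window's parameters. Next, let's look at do_preview: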
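The buffer-size arithmetic in prepare_preview is easy to check by hand. The sketch below reproduces the two expressions; it assumes PREVIEW_PIXEL_BYTES is 4 (RGBX), which matches the 4 bytes per pixel passed to draw_preview_one in do_preview, and the helper names are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Assumption: 4 bytes per preview pixel (RGBX).
constexpr std::size_t PREVIEW_PIXEL_BYTES = 4;

// Same expression as prepare_preview: 2 bytes/pixel for YUYV (mode 0),
// 4 bytes/pixel reserved for MJPEG (any non-zero mode).
std::size_t frameBytesFor(std::size_t width, std::size_t height, int mode) {
    return width * height * (mode == 0 ? 2 : 4);
}

// previewBytes = frameWidth * frameHeight * PREVIEW_PIXEL_BYTES
std::size_t previewBytesFor(std::size_t width, std::size_t height) {
    return width * height * PREVIEW_PIXEL_BYTES;
}
```

For a 640x480 YUYV stream this gives frameBytes = 614400 and previewBytes = 1228800. frameBytes is the value that uvc_preview_frame_callback later compares actual_bytes against to reject short non-MJPEG frames.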

4.6.do_preview

void UVCPreview::do_preview(uvc_stream_ctrl_t *ctrl) {

ENTER();

uvc_frame_t *frame = NULL;

uvc_frame_t *frame_mjpeg = NULL;

uvc_error_t result = uvc_start_streaming_bandwidth(

mDeviceHandle, ctrl, uvc_preview_frame_callback, (void *)this, requestBandwidth, 0);

if (LIKELY(!result)) {

clearPreviewFrame();

pthread_create(&capture_thread, NULL, capture_thread_func, (void *)this);

#if LOCAL_DEBUG

LOGI("Streaming...");

#endif

if (frameMode) {

// MJPEG mode

for ( ; LIKELY(isRunning()) ; ) {

frame_mjpeg = waitPreviewFrame();

if (LIKELY(frame_mjpeg)) {

frame = get_frame(frame_mjpeg->width * frame_mjpeg->height * 2);

result = uvc_mjpeg2yuyv(frame_mjpeg, frame); // MJPEG => yuyv

recycle_frame(frame_mjpeg);

if (LIKELY(!result)) {

frame = draw_preview_one(frame, &mPreviewWindow, uvc_any2rgbx, 4);

addCaptureFrame(frame);

} else {

recycle_frame(frame);

}

}

}

} else {

// YUYV mode

for ( ; LIKELY(isRunning()) ; ) {

frame = waitPreviewFrame();

if (LIKELY(frame)) {

frame = draw_preview_one(frame, &mPreviewWindow, uvc_any2rgbx, 4);

addCaptureFrame(frame);

}

}

}

pthread_cond_signal(&capture_sync);

#if LOCAL_DEBUG

LOGI("preview_thread_func:wait for all callbacks complete");

#endif

uvc_stop_streaming(mDeviceHandle);

#if LOCAL_DEBUG

LOGI("Streaming finished");

#endif

} else {

uvc_perror(result, "failed start_streaming");

}

EXIT();

}

In this method we see a call to uvc_start_streaming_bandwidth, which is defined in libuvc's stream.c.

4.7.uvc_start_streaming_bandwidth

> stream.c

/** Begin streaming video from the camera into the callback function.

* @ingroup streaming

*

* @param devh UVC device

* @param ctrl Control block, processed using {uvc_probe_stream_ctrl} or

* {uvc_get_stream_ctrl_format_size}

* @param cb User callback function. See {uvc_frame_callback_t} for restrictions.

* @param bandwidth_factor [0.0f, 1.0f]

* @param flags Stream setup flags, currently undefined. Set this to zero. The lower bit

* is reserved for backward compatibility.

*/

uvc_error_t uvc_start_streaming_bandwidth(uvc_device_handle_t *devh,

uvc_stream_ctrl_t *ctrl, uvc_frame_callback_t *cb, void *user_ptr,

float bandwidth_factor,

uint8_t flags) {

uvc_error_t ret;

uvc_stream_handle_t *strmh;

ret = uvc_stream_open_ctrl(devh, &strmh, ctrl);

if (UNLIKELY(ret != UVC_SUCCESS))

return ret;

ret = uvc_stream_start_bandwidth(strmh, cb, user_ptr, bandwidth_factor, flags);

if (UNLIKELY(ret != UVC_SUCCESS)) {

uvc_stream_close(strmh);

return ret;

}

return UVC_SUCCESS;

}

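According to the comment, this function starts streaming video from the camera and delivers each frame to the callback function. It first opens a stream with uvc_stream_open_ctrl, then starts it with uvc_stream_start_bandwidth, closing the stream again if starting fails. So let's look at the callback that was passed in: uvc_preview_frame_callback.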
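The control flow of uvc_start_streaming_bandwidth (open, then start, and undo the open if starting fails) is a common rollback pattern. The C++ sketch below keeps only that flow; the Status codes, FakeDevice, FakeStream and the fake* functions are hypothetical stand-ins, not libuvc API.

```cpp
#include <cassert>

// Hypothetical status codes.
enum Status { STATUS_OK = 0, STATUS_ERROR = -1 };

struct FakeStream {
    bool opened = false, started = false;
};

// Hypothetical device that can be told to fail either step.
struct FakeDevice {
    bool failOpen = false, failStart = false;
    int closeCalls = 0;
};

Status fakeOpen(FakeDevice &dev, FakeStream &s) {
    if (dev.failOpen) return STATUS_ERROR;
    s.opened = true;
    return STATUS_OK;
}

Status fakeStart(FakeDevice &dev, FakeStream &s) {
    if (dev.failStart) return STATUS_ERROR;
    s.started = true;
    return STATUS_OK;
}

void fakeClose(FakeDevice &dev, FakeStream &s) {
    s.opened = false;
    ++dev.closeCalls;
}

// Open, then start; if starting fails, close what was opened before returning.
Status startStreaming(FakeDevice &dev, FakeStream &s) {
    Status ret = fakeOpen(dev, s);
    if (ret != STATUS_OK) return ret;   // nothing to undo yet
    ret = fakeStart(dev, s);
    if (ret != STATUS_OK) {
        fakeClose(dev, s);              // roll back the open
        return ret;
    }
    return STATUS_OK;
}
```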

4.8.uvc_preview_frame_callback

> UVCPreview.cpp

void UVCPreview::uvc_preview_frame_callback(uvc_frame_t *frame, void *vptr_args) {

UVCPreview *preview = reinterpret_cast<UVCPreview *>(vptr_args);

if UNLIKELY(!preview->isRunning() || !frame || !frame->frame_format || !frame->data || !frame->data_bytes) return;

if (UNLIKELY(

((frame->frame_format != UVC_FRAME_FORMAT_MJPEG) && (frame->actual_bytes < preview->frameBytes))

|| (frame->width != preview->frameWidth) || (frame->height != preview->frameHeight) )) {

#if LOCAL_DEBUG

LOGD("broken frame!:format=%d,actual_bytes=%d/%d(%d,%d/%d,%d)",

frame->frame_format, frame->actual_bytes, preview->frameBytes,

frame->width, frame->height, preview->frameWidth, preview->frameHeight);

#endif

return;

}

if (LIKELY(preview->isRunning())) {

uvc_frame_t *copy = preview->get_frame(frame->data_bytes);// take a frame from the pool (see get_frame)

if (UNLIKELY(!copy)) {

#if LOCAL_DEBUG

LOGE("uvc_callback:unable to allocate duplicate frame!");

#endif

return;

}

uvc_error_t ret = uvc_duplicate_frame(frame, copy);

if (UNLIKELY(ret)) {

preview->recycle_frame(copy);

return;

}

preview->addPreviewFrame(copy);

}

}

The checks at the beginning of this callback can be ignored for now. What we care about is how a frame is handled, starting with the call uvc_frame_t *copy = preview->get_frame(frame->data_bytes);:

4.9.get_frame

/**

* get uvc_frame_t from frame pool

* if pool is empty, create new frame

* this function does not confirm the frame size

* and you may need to confirm the size

*/

uvc_frame_t *UVCPreview::get_frame(size_t data_bytes) {

uvc_frame_t *frame = NULL;

pthread_mutex_lock(&pool_mutex);

{

if (!mFramePool.isEmpty()) {

frame = mFramePool.last();

}

}

pthread_mutex_unlock(&pool_mutex);

if UNLIKELY(!frame) {

LOGW("allocate new frame");

frame = uvc_allocate_frame(data_bytes);

}

return frame;

}

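get_frame first tries to take a frame from the member variable mFramePool and only allocates a new one with uvc_allocate_frame when the pool is empty. The callback then calls uvc_duplicate_frame from libuvc's frame_original.c to copy the frame data received from the camera into copy, the frame just obtained from mFramePool.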
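The pool-or-allocate idea behind get_frame and recycle_frame can be sketched as below. The article's get_frame reads mFramePool.last(); this sketch assumes that call also removes the element from the pool. Frame and FramePool are hypothetical simplifications of uvc_frame_t and the ObjectArray-based pool.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <mutex>
#include <vector>

// Hypothetical simplified frame: only a byte buffer.
struct Frame {
    std::vector<unsigned char> data;
};

// Reuse a pooled frame when one is available, otherwise allocate.
// Like get_frame, the size of a reused frame is NOT adjusted here.
class FramePool {
public:
    Frame *get(std::size_t bytes) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            if (!pool_.empty()) {
                Frame *f = pool_.back();   // take (and remove) the last pooled frame
                pool_.pop_back();
                return f;
            }
        }
        ++allocations_;                    // pool empty: allocate a new frame
        Frame *f = new Frame;
        f->data.resize(bytes);
        return f;
    }

    void recycle(Frame *f) {
        std::lock_guard<std::mutex> lock(mutex_);
        pool_.push_back(f);
    }

    int allocations() const { return allocations_.load(); }

    // Frames still handed out when the pool is destroyed are not freed here.
    ~FramePool() {
        for (Frame *f : pool_) delete f;
    }

private:
    std::mutex mutex_;
    std::vector<Frame *> pool_;
    std::atomic<int> allocations_{0};
};
```

Because a reused frame keeps whatever size it had, the copy step has to make sure the buffer is large enough, which is what uvc_duplicate_frame does with uvc_ensure_frame_size before its memcpy.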

4.10.uvc_duplicate_frame

uvc_error_t uvc_duplicate_frame(uvc_frame_t *in, uvc_frame_t *out) {

if (uvc_ensure_frame_size(out, in->data_bytes) < 0)

return UVC_ERROR_NO_MEM;

out->width = in->width;

out->height = in->height;

out->frame_format = in->frame_format;

out->step = in->step;

out->sequence = in->sequence;

out->capture_time = in->capture_time;

out->source = in->source;

memcpy(out->data, in->data, in->data_bytes);

return UVC_SUCCESS;

}
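uvc_duplicate_frame follows a simple order: make sure the destination is big enough, copy the metadata, then copy the payload. The C++ sketch below models "ensure" as growing the buffer only when it is too small; libuvc's real uvc_ensure_frame_size may reallocate differently, and FrameSketch carries only a hypothetical subset of uvc_frame_t's fields.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical subset of uvc_frame_t.
struct FrameSketch {
    std::vector<std::uint8_t> data;   // the allocated buffer
    std::size_t dataBytes = 0;        // bytes of valid payload
    int width = 0, height = 0, format = 0;
    std::uint32_t sequence = 0;
};

// Grow the buffer only if it is too small (assumed behavior).
void ensureFrameSize(FrameSketch &f, std::size_t need) {
    if (f.data.size() < need) f.data.resize(need);
    f.dataBytes = need;
}

// Same order as uvc_duplicate_frame: size check, metadata, then payload.
void duplicateFrame(const FrameSketch &in, FrameSketch &out) {
    ensureFrameSize(out, in.dataBytes);
    out.width = in.width;
    out.height = in.height;
    out.format = in.format;
    out.sequence = in.sequence;
    if (in.dataBytes) std::memcpy(out.data.data(), in.data.data(), in.dataBytes);
}
```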

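Finally, UVCPreview's addPreviewFrame puts the current frame into previewFrames and signals the preview thread; if the preview has stopped or previewFrames already holds MAX_FRAME frames, the frame is recycled instead.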

4.11.addPreviewFrame

void UVCPreview::addPreviewFrame(uvc_frame_t *frame) {

pthread_mutex_lock(&preview_mutex);

if (isRunning() && (previewFrames.size() < MAX_FRAME)) {

previewFrames.put(frame);

frame = NULL;

pthread_cond_signal(&preview_sync);

}

pthread_mutex_unlock(&preview_mutex);

if (frame) {

recycle_frame(frame);

}

}
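addPreviewFrame is the producer half of a bounded queue: when the queue is full, the newest frame is dropped (recycled) rather than blocking the USB callback. The C++ sketch below shows that policy with plain ints as frames; PreviewQueue and Recycler are hypothetical, and take() is only a rough stand-in for waitPreviewFrame, whose body is not shown in this article.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>

// Hypothetical stand-in for recycle_frame: just counts recycled frames.
struct Recycler {
    int recycled = 0;
    void recycle(int) { ++recycled; }
};

class PreviewQueue {
public:
    PreviewQueue(std::size_t maxFrames, Recycler &r) : max_(maxFrames), recycler_(r) {}

    // Enqueue while fewer than maxFrames are pending; otherwise drop the new frame.
    void add(int frame) {
        bool queued = false;
        {
            std::lock_guard<std::mutex> lock(mutex_);
            if (frames_.size() < max_) {
                frames_.push_back(frame);
                queued = true;
                cond_.notify_one();   // wake the consumer, like pthread_cond_signal
            }
        }
        if (!queued) recycler_.recycle(frame);   // recycled outside the lock
    }

    // Blocks until a frame is queued (FIFO order in this sketch). A real
    // implementation also needs a way to wake up when the preview stops.
    int take() {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return !frames_.empty(); });
        int frame = frames_.front();
        frames_.pop_front();
        return frame;
    }

    std::size_t size() {
        std::lock_guard<std::mutex> lock(mutex_);
        return frames_.size();
    }

private:
    std::size_t max_;
    Recycler &recycler_;
    std::mutex mutex_;
    std::condition_variable cond_;
    std::deque<int> frames_;
};
```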

That covers the preview-callback handling in UVCPreview's do_preview. Now let's return to the rest of do_preview, whose core is this code:

if (frameMode) {

// MJPEG mode

for ( ; LIKELY(isRunning()) ; ) {

frame_mjpeg = waitPreviewFrame();

if (LIKELY(frame_mjpeg)) {

frame = get_frame(frame_mjpeg->width * frame_mjpeg->height * 2);

result = uvc_mjpeg2yuyv(frame_mjpeg, frame); // MJPEG => yuyv

recycle_frame(frame_mjpeg);

if (LIKELY(!result)) {

frame = draw_preview_one(frame, &mPreviewWindow, uvc_any2rgbx, 4);

addCaptureFrame(frame);

} else {

recycle_frame(frame);

}

}

}

} else {

// YUYV mode

for ( ; LIKELY(isRunning()) ; ) {

frame = waitPreviewFrame();

if (LIKELY(frame)) {

frame = draw_preview_one(frame, &mPreviewWindow, uvc_any2rgbx, 4);

addCaptureFrame(frame);

}

}

}

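In short, frames are processed according to the configured mode. MJPEG mode only adds one conversion step compared with YUYV mode, calling uvc_mjpeg2yuyv from libuvc's frame-mjpeg.c. Back in do_preview, in both MJPEG and YUYV modes the frame eventually goes to draw_preview_one (with uvc_any2rgbx as the conversion function and 4 bytes per pixel). As the name suggests, this is where the captured data is finally drawn onto the native window: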

// changed to return original frame instead of returning converted frame even if convert_func is not null.

uvc_frame_t *UVCPreview::draw_preview_one(uvc_frame_t *frame, ANativeWindow **window, convFunc_t convert_func, int pixcelBytes) {

// ENTER();

int b = 0;

pthread_mutex_lock(&preview_mutex);

{

b = *window != NULL;

}

pthread_mutex_unlock(&preview_mutex);

if (LIKELY(b)) {

uvc_frame_t *converted;

if (convert_func) {

converted = get_frame(frame->width * frame->height * pixcelBytes);

if LIKELY(converted) {

b = convert_func(frame, converted);

if (!b) {

pthread_mutex_lock(&preview_mutex);

copyToSurface(converted, window);

pthread_mutex_unlock(&preview_mutex);

} else {

LOGE("failed converting");

}

recycle_frame(converted);

}

} else {

pthread_mutex_lock(&preview_mutex);

copyToSurface(frame, window);

pthread_mutex_unlock(&preview_mutex);

}

}

return frame; //RETURN(frame, uvc_frame_t *);

}
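draw_preview_one is called with uvc_any2rgbx as convert_func and 4 bytes per pixel, so each YUYV frame (2 bytes per pixel, packed as Y0 U Y1 V for every two pixels) is expanded to RGBX before it reaches the window. The article does not show uvc_any2rgbx itself, so the C++ sketch below uses the commonly published BT.601 integer coefficients as an assumption; libuvc's actual coefficients and padding value may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

static std::uint8_t clamp255(int v) {
    return static_cast<std::uint8_t>(v < 0 ? 0 : (v > 255 ? 255 : v));
}

// One Y/U/V sample to RGBX (BT.601 limited-range integer approximation).
static void yuvToRgbx(int y, int u, int v, std::uint8_t *out) {
    const int c = y - 16, d = u - 128, e = v - 128;
    out[0] = clamp255((298 * c + 409 * e + 128) >> 8);            // R
    out[1] = clamp255((298 * c - 100 * d - 208 * e + 128) >> 8);  // G
    out[2] = clamp255((298 * c + 516 * d + 128) >> 8);            // B
    out[3] = 0xFF;                                                // X (padding, assumed 0xFF)
}

// Every 4 source bytes (Y0 U Y1 V) become 8 output bytes (two RGBX pixels).
void yuyvToRgbx(const std::uint8_t *src, std::uint8_t *dst, int width, int height) {
    assert(width % 2 == 0);   // YUYV pairs pixels horizontally
    const std::size_t pairs = static_cast<std::size_t>(width) * height / 2;
    for (std::size_t i = 0; i < pairs; ++i) {
        const std::uint8_t *p = src + i * 4;
        yuvToRgbx(p[0], p[1], p[3], dst + i * 8);       // first pixel uses Y0
        yuvToRgbx(p[2], p[1], p[3], dst + i * 8 + 4);   // second pixel uses Y1
    }
}
```

With U = V = 128 (no chroma), Y = 235 maps to white and Y = 16 to black, which is a quick sanity check for any YUYV converter.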

The core of draw_preview_one is the call to copyToSurface, which draws the prepared frame onto the ANativeWindow:

// transfer specific frame data to the Surface(ANativeWindow)

int copyToSurface(uvc_frame_t *frame, ANativeWindow **window) {

// ENTER();

int result = 0;

if (LIKELY(*window)) {

ANativeWindow_Buffer buffer;

if (LIKELY(ANativeWindow_lock(*window, &buffer, NULL) == 0)) {

// source = frame data

const uint8_t *src = (uint8_t *)frame->data;

const int src_w = frame->width * PREVIEW_PIXEL_BYTES;

const int src_step = frame->width * PREVIEW_PIXEL_BYTES;

// destination = Surface(ANativeWindow)

uint8_t *dest = (uint8_t *)buffer.bits;

const int dest_w = buffer.width * PREVIEW_PIXEL_BYTES;

const int dest_step = buffer.stride * PREVIEW_PIXEL_BYTES;

// use lower transfer bytes

const int w = src_w < dest_w ? src_w : dest_w;

// use lower height

const int h = frame->height < buffer.height ? frame->height : buffer.height;

// transfer from frame data to the Surface

copyFrame(src, dest, w, h, src_step, dest_step);

ANativeWindow_unlockAndPost(*window);

} else {

result = -1;

}

} else {

result = -1;

}

return result; //RETURN(result, int);

}
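The key detail in copyToSurface is that source and destination rows can have different lengths: ANativeWindow_Buffer's stride is measured in pixels and may be larger than its width, so the code multiplies it by the pixel size and copies only the smaller width and height. The C++ sketch below isolates that clipping logic on plain byte arrays; copyClipped and kPixelBytes are hypothetical names, and 4 bytes per pixel is assumed.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <cstring>

constexpr int kPixelBytes = 4;   // assumed RGBX

// Copy min(width) x min(height) pixels from a tightly packed source into a
// destination whose rows are dstStride pixels apart.
void copyClipped(const std::uint8_t *src, int srcWidth, int srcHeight,
                 std::uint8_t *dst, int dstWidth, int dstHeight, int dstStride) {
    const int rowBytes = std::min(srcWidth, dstWidth) * kPixelBytes;  // "use lower transfer bytes"
    const int rows = std::min(srcHeight, dstHeight);                  // "use lower height"
    const int srcStep = srcWidth * kPixelBytes;
    const int dstStep = dstStride * kPixelBytes;
    for (int y = 0; y < rows; ++y)
        std::memcpy(dst + y * dstStep, src + y * srcStep, rowBytes);
}
```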

static void copyFrame(const uint8_t *src, uint8_t *dest, const int width, int height, const int stride_src, const int stride_dest) {

const int h8 = height % 8;

for (int i = 0; i < h8; i++) {

memcpy(dest, src, width);

dest += stride_dest; src += stride_src;

}

for (int i = h8; i < height; i += 8) { // rows [0, h8) were already copied by the loop above

memcpy(dest, src, width);

dest += stride_dest; src += stride_src;

memcpy(dest, src, width);

dest += stride_dest; src += stride_src;

memcpy(dest, src, width);

dest += stride_dest; src += stride_src;

memcpy(dest, src, width);

dest += stride_dest; src += stride_src;

memcpy(dest, src, width);

dest += stride_dest; src += stride_src;

memcpy(dest, src, width);

dest += stride_dest; src += stride_src;

memcpy(dest, src, width);

dest += stride_dest; src += stride_src;

memcpy(dest, src, width);

dest += stride_dest; src += stride_src;

}

}
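copyFrame handles height % 8 rows one at a time and the rest eight rows per iteration. For the total to come out right, the eight-row pass must start at h8, not 0; starting at 0 copies height + 8 rows whenever height is not a multiple of 8 (common heights such as 480, 720 and 1080 happen to be multiples of 8). The C++ sketch below writes the same scheme with an inner loop instead of eight hand-written memcpy calls; copyFrameUnrolled is a hypothetical name.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Copy exactly `height` rows of `width` bytes: first the remainder rows,
// then groups of eight.
static void copyFrameUnrolled(const std::uint8_t *src, std::uint8_t *dest, int width,
                              int height, int strideSrc, int strideDest) {
    const int h8 = height % 8;
    for (int i = 0; i < h8; ++i) {
        std::memcpy(dest, src, width);
        dest += strideDest; src += strideSrc;
    }
    for (int i = h8; i < height; i += 8) {   // (height - h8) is a multiple of 8
        for (int k = 0; k < 8; ++k) {
            std::memcpy(dest, src, width);
            dest += strideDest; src += strideSrc;
        }
    }
}
```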
