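In the previous article, Swift - Video Recording Tutorial Series 1 (Recording video with the camera and saving it to the system photo album), I showed how to record video with the `AVCaptureSession` class from the `AVFoundation.framework` framework.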


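In that program, tapping "Start" began recording and tapping "Stop" saved the video. The whole video was recorded in one continuous take with no time limit. This article builds on it to implement short-video capture.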

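1, Features of the short-video recorder

(1) Video can be recorded in segments. Holding down the "Record" button captures video from the camera; releasing the button pauses recording.

(2) The combined length of all segments is limited (15 seconds in this sample). While recording, a progress bar at the top of the screen shows the elapsed time in real time.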

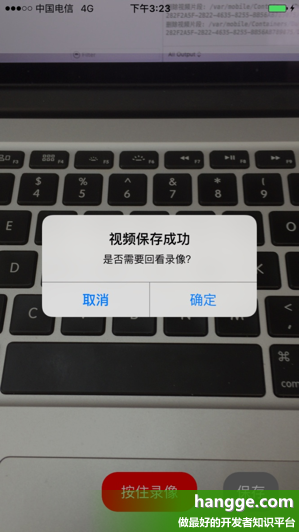

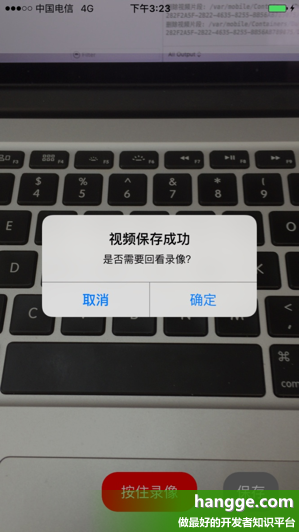

(3)点击“保存”按钮,或者总时长到达15秒时,则停止继续录像。程序会将各个视频片段进行合并,并保存到系统相册中。

(4)保存成功后,可以回看生成的录像。

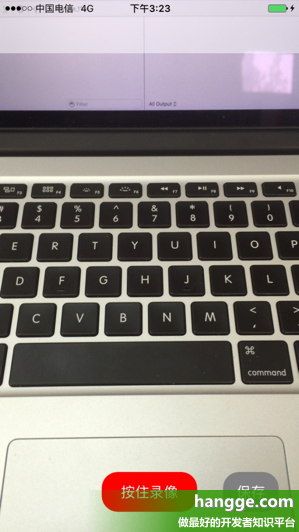

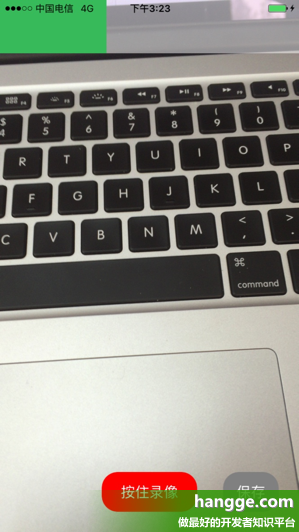

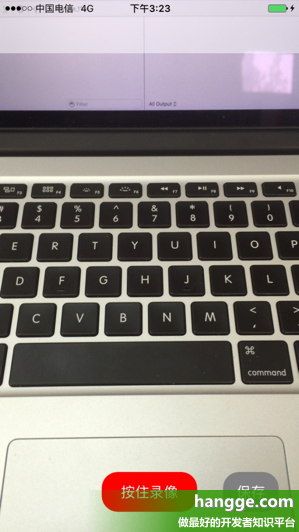

2,效果图如下
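The 15-second limit and the progress bar from section 1 come down to simple bookkeeping: each finished segment subtracts its measured length from the remaining budget, and every 0.05-second timer tick fills `0.05 / 15` of the bar's width. Below is a minimal sketch of that arithmetic in plain, modern Swift (no AVFoundation), so it can be checked without a device. The `SegmentBudget` type and its methods are hypothetical helpers for illustration, not part of the sample app; the values 15 s and 0.05 s mirror `totalSeconds` and `incInterval` in the sample code.

```swift
// Hypothetical helper (not part of the sample app): bookkeeping for a
// recording budget shared by several segments.
struct SegmentBudget {
    let totalSeconds: Double      // overall limit, like `totalSeconds` (15 s)
    let tickInterval: Double      // progress timer interval, like `incInterval` (0.05 s)
    private(set) var remaining: Double
    private(set) var segmentDurations: [Double] = []

    init(totalSeconds: Double, tickInterval: Double) {
        self.totalSeconds = totalSeconds
        self.tickInterval = tickInterval
        self.remaining = totalSeconds
    }

    // Record a finished segment; returns true once the budget is used up.
    mutating func finishSegment(duration: Double) -> Bool {
        segmentDurations.append(duration)
        remaining -= duration
        return remaining <= 0
    }

    // Width one timer tick adds to a bar of the given width
    // (same formula as `progress()`: barWidth * incInterval / totalSeconds).
    func widthPerTick(barWidth: Double) -> Double {
        return barWidth * (tickInterval / totalSeconds)
    }

    // Fraction of the budget already used, clamped to 0...1.
    var usedFraction: Double {
        return min(max((totalSeconds - remaining) / totalSeconds, 0), 1)
    }
}

var budget = SegmentBudget(totalSeconds: 15, tickInterval: 0.05)
let firstDone = budget.finishSegment(duration: 4)    // 11 s still left
let secondDone = budget.finishSegment(duration: 11)  // budget exhausted
print(budget.remaining, firstDone, secondDone)
print(budget.widthPerTick(barWidth: 300))
```

One caveat: the duration measured from a finished file can come out slightly shorter than the time the timer allowed, so `remaining` may stay a hair above zero. A real app may therefore want to trigger the merge from the timeout path as well, rather than relying on `remaining <= 0` alone.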

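3, How it works

(1) Each segment recorded while the button is held is, as before, written by `AVCaptureMovieFileOutput` into the Documents folder, named output-1.mov, output-2.mov, and so on.

(2) `AVMutableComposition` splices the video and audio tracks of all the segments together.

(3) `AVAssetExportSession` compresses the merged video into a single final file, mergeVideo-****.mov (this article uses the highest-quality preset), which is then saved to the system photo album.

(4) `AVPlayerViewController` plays the recording back.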
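Step (2) places the segments end to end: each segment's tracks are inserted at the point where the previous segment stopped, and that insertion point then advances by the segment's duration. The sketch below shows only that timeline arithmetic, using plain `Double` seconds instead of `CMTime` and no real `AVMutableComposition`, so it runs anywhere. `Clip`, `Placement`, `segmentFileName(index:)` and `layOut(_:)` are hypothetical names for illustration; the durations are made-up sample values.

```swift
// Hypothetical stand-in for a recorded segment: file name plus duration in seconds.
struct Clip {
    let fileName: String
    let duration: Double
}

// Where a segment ends up on the merged timeline.
struct Placement {
    let fileName: String
    let start: Double
    let end: Double
}

// Segment files are numbered in recording order: output-1.mov, output-2.mov, ...
func segmentFileName(index: Int) -> String {
    return "output-\(index).mov"
}

// Place clips end to end: each one starts where the previous one stopped,
// like `insertTime = CMTimeAdd(insertTime, asset.duration)` in the merge loop.
func layOut(_ clips: [Clip]) -> (placements: [Placement], total: Double) {
    var insertTime = 0.0
    var placements: [Placement] = []
    for clip in clips {
        placements.append(Placement(fileName: clip.fileName,
                                    start: insertTime,
                                    end: insertTime + clip.duration))
        insertTime += clip.duration
    }
    return (placements, insertTime)
}

// Three segments of 2.5 s, 4 s and 3.5 s.
let clips = [2.5, 4.0, 3.5].enumerated().map { pair in
    Clip(fileName: segmentFileName(index: pair.offset + 1), duration: pair.element)
}
let timeline = layOut(clips)
for p in timeline.placements {
    print("\(p.fileName): \(p.start) s to \(p.end) s")
}
print("total: \(timeline.total) s")
```

A related note on step (3): the sample names the merged file `mergeVideo-` plus `arc4random() % 1000`, so two exports can pick the same name, and `AVAssetExportSession` fails rather than overwriting an existing file. Putting something like `UUID().uuidString` in the name is one way to avoid the clash (a suggestion, not the author's choice).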

4, Console output when recording a video made of three segments:

5, Sample code (Swift 2.x syntax)

```swift
import UIKit
import AVFoundation
import Photos
import AVKit

class ViewController: UIViewController, AVCaptureFileOutputRecordingDelegate {
    
    // Capture session: the bridge between inputs and outputs.
    // It coordinates the flow of data from the inputs to the outputs
    let captureSession = AVCaptureSession()
    // Video input device (camera)
    let videoDevice = AVCaptureDevice.defaultDeviceWithMediaType(AVMediaTypeVideo)
    // Audio input device (microphone)
    let audioDevice = AVCaptureDevice.defaultDeviceWithMediaType(AVMediaTypeAudio)
    // Writes the captured video to a movie file
    let fileOutput = AVCaptureMovieFileOutput()
    
    // Record and save buttons
    var recordButton, saveButton : UIButton!
    
    // All recorded segments
    var videoAssets = [AVAsset]()
    // File paths of all recorded segments
    var assetURLs = [String]()
    // Index used to name each segment file
    var appendix: Int32 = 1
    
    // Maximum total recording time (seconds)
    let totalSeconds: Float64 = 15.00
    // Frames per second
    var framesPerSecond: Int32 = 30
    // Remaining recording time
    var remainingTime : NSTimeInterval = 15.0
    
    // Whether recording has been stopped because the time limit was reached
    var stopRecording: Bool = false
    // Timer that fires when the remaining time runs out
    var timer: NSTimer?
    // Timer that advances the progress bar
    var progressBarTimer: NSTimer?
    // Progress bar timer interval
    var incInterval: NSTimeInterval = 0.05
    // Progress bar
    var progressBar: UIView = UIView()
    // Current right edge of the filled part of the progress bar
    var oldX: CGFloat = 0
    
    override func viewDidLoad() {
        super.viewDidLoad()
        
        // Add the video and audio inputs
        let videoInput = try! AVCaptureDeviceInput(device: self.videoDevice)
        self.captureSession.addInput(videoInput)
        let audioInput = try! AVCaptureDeviceInput(device: self.audioDevice)
        self.captureSession.addInput(audioInput)
        
        // Add the movie file output. maxRecordedDuration caps a single segment
        // file; the 15-second total across segments is enforced by `timer`
        let maxDuration = CMTimeMakeWithSeconds(totalSeconds, framesPerSecond)
        self.fileOutput.maxRecordedDuration = maxDuration
        self.captureSession.addOutput(self.fileOutput)
        
        // AVCaptureVideoPreviewLayer shows the live camera feed in the view
        let videoLayer = AVCaptureVideoPreviewLayer(session: self.captureSession)
        videoLayer.frame = self.view.bounds
        videoLayer.videoGravity = AVLayerVideoGravityResizeAspectFill
        self.view.layer.addSublayer(videoLayer)
        
        // Create the buttons
        self.setupButton()
        
        // Start the session
        self.captureSession.startRunning()
        
        // Add the progress bar
        progressBar.frame = CGRect(x: 0, y: 0, width: self.view.bounds.width,
                                   height: self.view.bounds.height * 0.1)
        progressBar.backgroundColor = UIColor(red: 4, green: 3, blue: 3, alpha: 0.5)
        self.view.addSubview(progressBar)
    }
    
    // Create the buttons
    func setupButton(){
        // Record button
        self.recordButton = UIButton(frame: CGRectMake(0,0,120,50))
        self.recordButton.backgroundColor = UIColor.redColor()
        self.recordButton.layer.masksToBounds = true
        self.recordButton.setTitle("Hold to record", forState: .Normal)
        self.recordButton.layer.cornerRadius = 20.0
        self.recordButton.layer.position = CGPoint(x: self.view.bounds.width/2,
                                                   y: self.view.bounds.height-50)
        self.recordButton.addTarget(self, action: #selector(onTouchDownRecordButton(_:)),
                                    forControlEvents: .TouchDown)
        // Stop the segment whether the finger is lifted inside or outside the button
        self.recordButton.addTarget(self, action: #selector(onTouchUpRecordButton(_:)),
                                    forControlEvents: [.TouchUpInside, .TouchUpOutside])
        
        // Save button
        self.saveButton = UIButton(frame: CGRectMake(0,0,70,50))
        self.saveButton.backgroundColor = UIColor.grayColor()
        self.saveButton.layer.masksToBounds = true
        self.saveButton.setTitle("Save", forState: .Normal)
        self.saveButton.layer.cornerRadius = 20.0
        self.saveButton.layer.position = CGPoint(x: self.view.bounds.width - 60,
                                                 y: self.view.bounds.height-50)
        self.saveButton.addTarget(self, action: #selector(onClickStopButton(_:)),
                                  forControlEvents: .TouchUpInside)
        
        // Add the buttons to the view
        self.view.addSubview(self.recordButton)
        self.view.addSubview(self.saveButton)
    }
    
    // Record button pressed: start recording a segment
    func onTouchDownRecordButton(sender: UIButton){
        if !stopRecording {
            let paths = NSSearchPathForDirectoriesInDomains(.DocumentDirectory,
                                                            .UserDomainMask, true)
            let documentsDirectory = paths[0] as String
            let outputFilePath = "\(documentsDirectory)/output-\(appendix).mov"
            appendix += 1
            let outputURL = NSURL(fileURLWithPath: outputFilePath)
            let fileManager = NSFileManager.defaultManager()
            // Remove any leftover file with the same name
            if fileManager.fileExistsAtPath(outputFilePath) {
                do {
                    try fileManager.removeItemAtPath(outputFilePath)
                } catch _ {
                }
            }
            print("Start recording: \(outputFilePath)")
            fileOutput.startRecordingToOutputFileURL(outputURL, recordingDelegate: self)
        }
    }
    
    // Record button released: stop recording the segment
    func onTouchUpRecordButton(sender: UIButton){
        if !stopRecording {
            timer?.invalidate()
            progressBarTimer?.invalidate()
            fileOutput.stopRecording()
        }
    }
    
    // Delegate method: recording started
    func captureOutput(captureOutput: AVCaptureFileOutput!,
                       didStartRecordingToOutputFileAtURL fileURL: NSURL!,
                       fromConnections connections: [AnyObject]!) {
        startProgressBarTimer()
        startTimer()
    }
    
    // Delegate method: recording finished
    func captureOutput(captureOutput: AVCaptureFileOutput!,
                       didFinishRecordingToOutputFileAtURL outputFileURL: NSURL!,
                       fromConnections connections: [AnyObject]!, error: NSError!) {
        let asset : AVURLAsset = AVURLAsset(URL: outputFileURL, options: nil)
        let duration = CMTimeGetSeconds(asset.duration)
        print("Recorded segment: \(asset)")
        videoAssets.append(asset)
        assetURLs.append(outputFileURL.path!)
        remainingTime = remainingTime - duration
        
        // Time limit reached: merge automatically. stopRecording is checked too,
        // because the measured segment durations can add up to slightly less
        // than the limit, leaving remainingTime just above zero
        if stopRecording || remainingTime <= 0 {
            mergeVideos()
        }
    }
    
    // Remaining-time timer
    func startTimer() {
        // Fires once, when the remaining time runs out
        timer = NSTimer(timeInterval: remainingTime, target: self,
                        selector: #selector(ViewController.timeout), userInfo: nil,
                        repeats: false)
        NSRunLoop.currentRunLoop().addTimer(timer!, forMode: NSDefaultRunLoopMode)
    }
    
    // Maximum recording time reached
    func timeout() {
        stopRecording = true
        print("Time is up.")
        fileOutput.stopRecording()
        timer?.invalidate()
        progressBarTimer?.invalidate()
    }
    
    // Progress bar timer
    func startProgressBarTimer() {
        progressBarTimer = NSTimer(timeInterval: incInterval, target: self,
                                   selector: #selector(ViewController.progress),
                                   userInfo: nil, repeats: true)
        NSRunLoop.currentRunLoop().addTimer(progressBarTimer!,
                                            forMode: NSDefaultRunLoopMode)
    }
    
    // Advance the progress bar by one tick
    func progress() {
        // Fraction of the total time represented by one timer tick
        let progressProportion: CGFloat = CGFloat(incInterval / totalSeconds)
        let progressInc: UIView = UIView()
        progressInc.backgroundColor = UIColor(red: 55/255, green: 186/255, blue: 89/255,
                                              alpha: 1)
        let newWidth = progressBar.frame.width * progressProportion
        progressInc.frame = CGRect(x: oldX , y: 0, width: newWidth,
                                   height: progressBar.frame.height)
        oldX = oldX + newWidth
        progressBar.addSubview(progressInc)
    }
    
    // Save button tapped
    func onClickStopButton(sender: UIButton){
        mergeVideos()
    }
    
    // Merge the video segments
    func mergeVideos() {
        // Nothing recorded yet: nothing to merge
        guard !videoAssets.isEmpty else { return }
        
        let composition = AVMutableComposition()
        // One video track and one audio track; an ID of 0
        // (kCMPersistentTrackID_Invalid) lets the composition assign the IDs
        let firstTrack = composition.addMutableTrackWithMediaType(AVMediaTypeVideo,
                                         preferredTrackID: CMPersistentTrackID())
        let audioTrack = composition.addMutableTrackWithMediaType(AVMediaTypeAudio,
                                         preferredTrackID: CMPersistentTrackID())
        
        // Append each segment's video and audio right after the previous segment
        var insertTime: CMTime = kCMTimeZero
        for asset in videoAssets {
            print("Merging segment: \(asset)")
            let range = CMTimeRangeMake(kCMTimeZero, asset.duration)
            // Use .first instead of [0] so a missing track does not crash the app
            if let videoSource = asset.tracksWithMediaType(AVMediaTypeVideo).first {
                do {
                    try firstTrack.insertTimeRange(range, ofTrack: videoSource,
                                                   atTime: insertTime)
                } catch _ {
                }
            }
            if let audioSource = asset.tracksWithMediaType(AVMediaTypeAudio).first {
                do {
                    try audioTrack.insertTimeRange(range, ofTrack: audioSource,
                                                   atTime: insertTime)
                } catch _ {
                }
            }
            insertTime = CMTimeAdd(insertTime, asset.duration)
        }
        
        // Rotate the video 90 degrees so it is not displayed sideways
        firstTrack.preferredTransform = CGAffineTransformMakeRotation(CGFloat(M_PI_2))
        
        // Path of the merged video
        let documentsPath = NSSearchPathForDirectoriesInDomains(.DocumentDirectory,
                                                                .UserDomainMask, true)[0]
        let destinationPath = documentsPath + "/mergeVideo-\(arc4random()%1000).mov"
        print("Merged video: \(destinationPath)")
        // The export fails if a file already exists at the destination
        let fileManager = NSFileManager.defaultManager()
        if fileManager.fileExistsAtPath(destinationPath) {
            _ = try? fileManager.removeItemAtPath(destinationPath)
        }
        let videoPath: NSURL = NSURL(fileURLWithPath: destinationPath as String)
        let exporter = AVAssetExportSession(asset: composition,
                                            presetName: AVAssetExportPresetHighestQuality)!
        exporter.outputURL = videoPath
        exporter.outputFileType = AVFileTypeQuickTimeMovie
        exporter.shouldOptimizeForNetworkUse = true
        // Export the whole merged timeline (it may be shorter than totalSeconds)
        exporter.timeRange = CMTimeRangeMake(kCMTimeZero, composition.duration)
        exporter.exportAsynchronouslyWithCompletionHandler({
            // Save the merged video to the photo library
            self.exportDidFinish(exporter)
        })
    }
    
    // Save the merged video to the photo library
    func exportDidFinish(session: AVAssetExportSession) {
        // Continue only if the export actually succeeded
        guard let outputURL = session.outputURL where session.status == .Completed else {
            print("Video merge failed: \(session.error)")
            return
        }
        print("Video merged successfully!")
        // Save the recording to the photo library
        PHPhotoLibrary.sharedPhotoLibrary().performChanges({
            PHAssetChangeRequest.creationRequestForAssetFromVideoAtFileURL(outputURL)
        }, completionHandler: { (isSuccess: Bool, error: NSError?) in
            dispatch_async(dispatch_get_main_queue(), {
                // Reset state for the next recording
                self.reset()
                if !isSuccess {
                    print("Saving to the photo library failed: \(error)")
                    return
                }
                // Ask whether to play the recording back
                let alertController = UIAlertController(title: "Video saved",
                    message: "Do you want to play back the recording?",
                    preferredStyle: .Alert)
                let okAction = UIAlertAction(title: "OK", style: .Default, handler: {
                    action in
                    // Play back the recording
                    self.reviewRecord(outputURL)
                })
                let cancelAction = UIAlertAction(title: "Cancel", style: .Cancel,
                                                 handler: nil)
                alertController.addAction(okAction)
                alertController.addAction(cancelAction)
                self.presentViewController(alertController, animated: true,
                                           completion: nil)
            })
        })
    }
    
    // Video saved: reset all state to prepare for a new recording
    func reset() {
        // Delete the segment files
        for assetURL in assetURLs {
            if NSFileManager.defaultManager().fileExistsAtPath(assetURL) {
                do {
                    try NSFileManager.defaultManager().removeItemAtPath(assetURL)
                } catch _ {
                }
                print("Deleted segment: \(assetURL)")
            }
        }
        
        // Clear the progress bar
        let subviews = progressBar.subviews
        for subview in subviews {
            subview.removeFromSuperview()
        }
        
        // Restore the initial values
        videoAssets.removeAll(keepCapacity: false)
        assetURLs.removeAll(keepCapacity: false)
        appendix = 1
        oldX = 0
        stopRecording = false
        remainingTime = totalSeconds
    }
    
    // Play back the recording
    func reviewRecord(outputURL: NSURL) {
        // Create a player initialized with the local file URL
        let player = AVPlayer(URL: outputURL)
        let playerViewController = AVPlayerViewController()
        playerViewController.player = player
        self.presentViewController(playerViewController, animated: true) {
            playerViewController.player!.play()
        }
    }
}
```
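The button handlers in the sample form a small state machine: touch-down starts a segment unless the time limit has been reached, touch-up ends it, the timeout ends recording for good and triggers the merge, and Save merges what has been recorded so far. The sketch below restates that flow in modern Swift without UIKit or AVFoundation, so the transitions can be checked in isolation. `RecorderState`, `RecorderEvent` and `next(_:_:)` are hypothetical names; in the sample app the same role is played by the `stopRecording` flag together with the calls to `startRecordingToOutputFileURL` and `stopRecording()`.

```swift
// Hypothetical model of the hold-to-record flow (not the author's code).
enum RecorderState: Equatable {
    case idle       // waiting: no segment yet, or between segments
    case recording  // button held down, a segment is being written
    case merging    // segments are being merged, exported and saved
}

enum RecorderEvent {
    case touchDown, touchUp, timeout, save, exportFinished
}

func next(_ state: RecorderState, _ event: RecorderEvent) -> RecorderState {
    switch (state, event) {
    case (.idle, .touchDown):
        return .recording   // start a new segment file
    case (.recording, .touchUp):
        return .idle        // release: finish the current segment (pause)
    case (.recording, .timeout):
        return .merging     // total limit reached: stop and merge automatically
    case (.idle, .save):
        return .merging     // Save tapped between segments
    case (.merging, .exportFinished):
        return .idle        // like reset(): ready for a new video
    default:
        return state        // every other combination is ignored
    }
}

let events: [RecorderEvent] = [.touchDown, .touchUp, .touchDown, .timeout, .touchDown]
var state = RecorderState.idle
for event in events {
    state = next(state, event)
}
print(state)
```

This sketch deliberately ignores Save while a segment is still being recorded. In the sample app, `onClickStopButton` calls `mergeVideos()` directly, so a segment that is still being written is not part of `videoAssets` and is left out of the merge.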

Originally published at www.hangge.com. If you repost this article, please keep the link to the original: http://www.hangge.com/blog/cache/detail_1185.html
