Preface

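After installing the CM big data platform on the SUSE operating system and enabling Kerberos on the cluster, HDFS commands failed with the following error: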

hdfs dfs -ls /

19/05/29 18:06:15 WARN ipc.Client: Exception encountered while connecting to the server : org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]

ls: Failed on local exception: java.io.IOException: org.apache.hadoop.security.AccessControlException: Client cannot authenticate via:[TOKEN, KERBEROS]; Host Details : local host is: "hadoop001/172.17.239.230"; destination host is: "hadoop001":8020;

Environment

SUSE Linux Enterprise Server 12 Service Pack 1 (SLES 12 SP5)

Reproducing the problem

- Authenticate first

kinit -kt hdfs.keytab hdfs

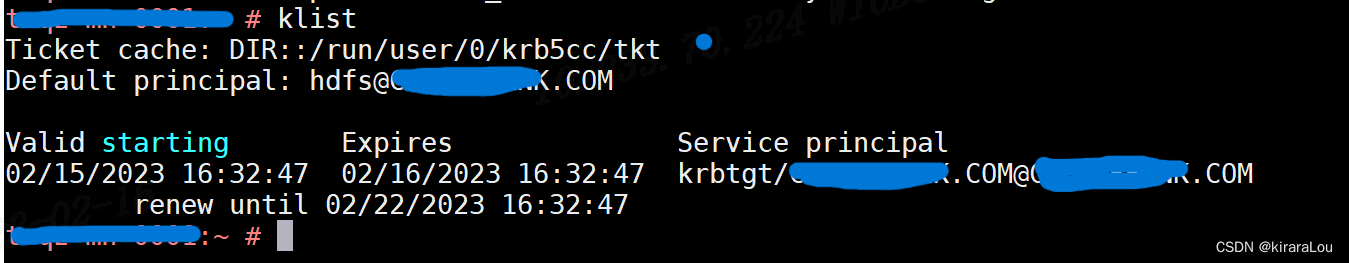

- View the ticket

klist

- Enable debug output, then run the HDFS command

export HADOOP_ROOT_LOGGER=DEBUG,console

export HADOOP_OPTS="-Dsun.security.krb5.debug=true -Djavax.net.debug=ssl"

hdfs dfs -ls /
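
With both debug switches enabled the output is very long, so it can help to filter it down to the one line that names the credential cache the Java client tries to read. The following is a minimal sketch, not part of the original troubleshooting session: the function name java_ccache_from_debug is hypothetical, and it assumes the debug line has the form ">>>KinitOptions cache name is <path>".

```shell
#!/bin/sh
# Sketch: pull the credential cache path out of the JDK Kerberos debug output.
# java_ccache_from_debug is a hypothetical helper name, not a Hadoop tool.

# Read debug output on stdin and print the path from the first line that
# looks like ">>>KinitOptions cache name is /tmp/krb5cc_0".
java_ccache_from_debug() {
    sed -n 's/^.*KinitOptions cache name is[[:space:]]*//p' | head -n 1
}

# Usage on a live host (commented out so the sketch has no side effects).
# stderr is merged in because the log and debug lines may use either stream:
#   hdfs dfs -ls / 2>&1 | java_ccache_from_debug
```

If nothing is printed, the debug line was not present in the output, for example because HADOOP_OPTS was not exported in the same shell.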

Root cause

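Look closely: the klist output has a Ticket cache entry of type DIR, and it points to /run/user/0/krb5cc/tkt

The debug output of the HDFS command, on the other hand, contains the line KinitOptions cache name is, and it points to /tmp/krb5cc_0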

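By default, the HDFS client (through the JDK's Kerberos implementation) looks for the Kerberos credential cache under /tmp. On SUSE, however, the credential cache is not stored under /tmp, so the HDFS client concludes that no Kerberos authentication has taken place and reports the error.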
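
The mismatch described above can be checked mechanically. The sketch below is an illustration under stated assumptions rather than the exact procedure used here: the helper names are hypothetical, and it assumes klist prints a line beginning with "Ticket cache:" and that the Java client falls back to /tmp/krb5cc_<uid>, as the debug output above suggests.

```shell
#!/bin/sh
# Sketch: compare the cache klist reports with the file the Java client reads.
# Both function names are hypothetical helpers introduced for illustration.

# Read klist output on stdin and print the cache named on the
# "Ticket cache:" line, e.g. "DIR::/run/user/0/krb5cc/tkt".
ccache_from_klist() {
    sed -n 's/^Ticket cache:[[:space:]]*//p' | head -n 1
}

# Print the cache the Java client is assumed to fall back to for the
# numeric uid passed as the first argument.
java_default_ccache() {
    printf 'FILE:/tmp/krb5cc_%s\n' "$1"
}

# Usage on a live host (commented out so the sketch has no side effects):
#   actual=$(klist | ccache_from_klist)
#   expected=$(java_default_ccache "$(id -u)")
#   [ "$actual" = "$expected" ] || echo "cache mismatch: $actual vs $expected"
```

On the affected SUSE host the two values differ (a DIR cache under /run/user versus a FILE cache under /tmp), which is exactly the situation in which the HDFS client cannot find the ticket.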

Solution

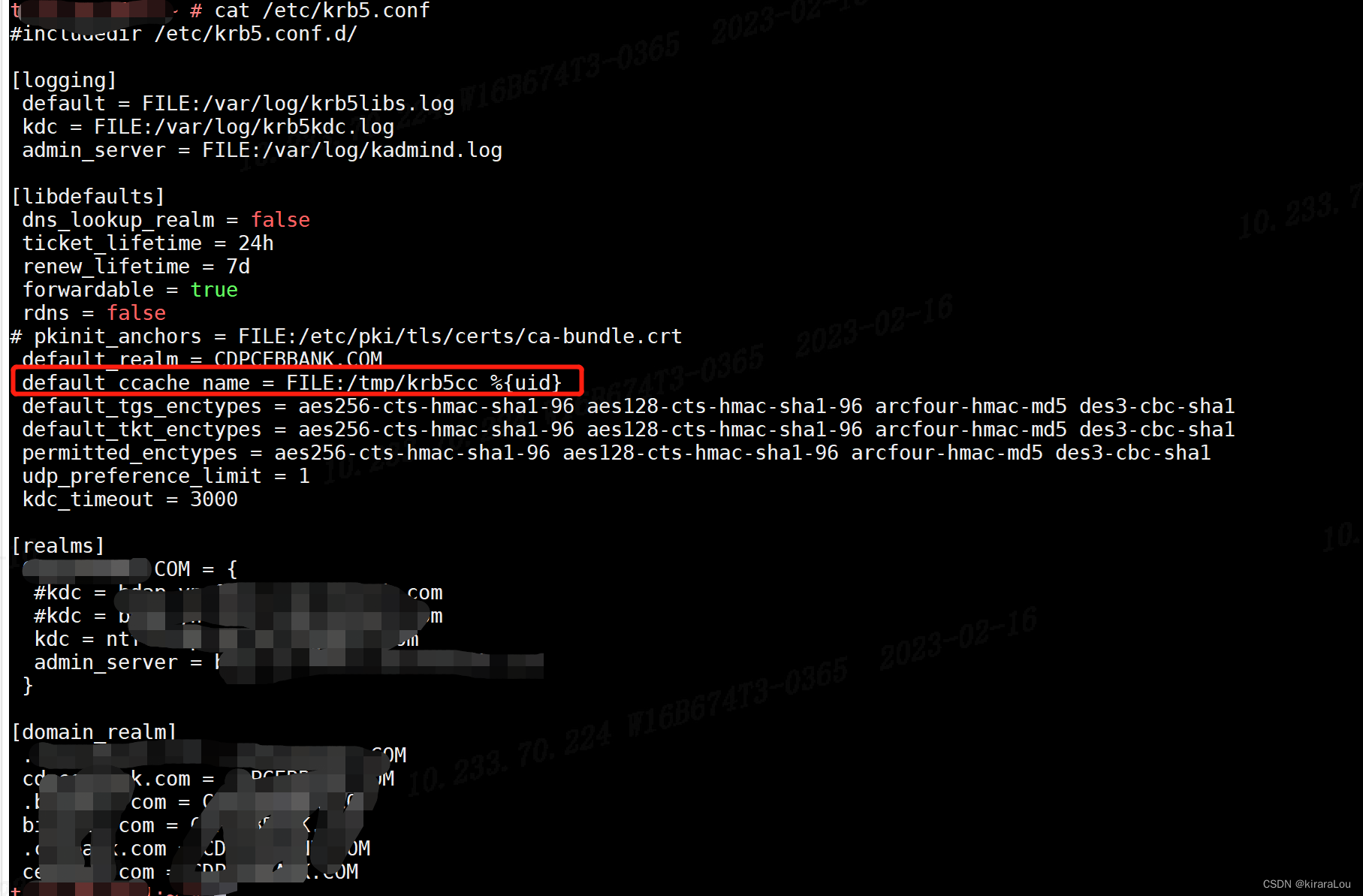

After adding the following parameter to /etc/krb5.conf, kinit works normally and hdfs commands run successfully.

default_ccache_name = FILE:/tmp/krb5cc_%{uid}
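
In MIT Kerberos this option belongs to the [libdefaults] section, and %{uid} expands to the numeric user ID. To confirm that the line is active and not commented out, something like the following sketch may help. The function name configured_ccache is hypothetical, and the check is deliberately simplified: it does not verify which section the line sits in and does not follow include directives.

```shell
#!/bin/sh
# Sketch: show the active default_ccache_name value from krb5.conf text.
# configured_ccache is a hypothetical helper name.

# Read krb5.conf content on stdin and print the value of the first
# uncommented default_ccache_name line. Lines starting with '#' or ';'
# do not match the pattern, so commented-out settings are ignored.
configured_ccache() {
    sed -n 's/^[[:space:]]*default_ccache_name[[:space:]]*=[[:space:]]*//p' | head -n 1
}

# Usage on a live host (commented out so the sketch has no side effects):
#   configured_ccache < /etc/krb5.conf
```

An empty result means no active default_ccache_name line was found, in which case the library's built-in default applies.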

- Destroy the credentials

kdestroy

- Re-authenticate

kinit -kt hdfs.keytab hdfs

- List HDFS

hdfs dfs -ls /

Run the command again and the problem is solved!

There are other solutions online as well, but none of them matched my situation. I am listing them here for reference:

Method 1:

Comment out default_ccache_name in krb5.conf, then run kdestroy and kinit again.

Method 2:

Complete the list of encryption types in /etc/krb5.conf; see:

https://www.cnblogs.com/tommyjiang/p/15008787.html

Method 3:

A problem in the application code; see:

https://blog.csdn.net/ifenggege/article/details/111243297
