Event interface implementation: client-go/tools/record/event.go

Entry point for event handling:

```go
func recordToSink(sink EventSink, event *v1.Event, eventCorrelator *EventCorrelator, randGen *rand.Rand, sleepDuration time.Duration) {
	// Make a copy before modification, because there could be multiple listeners.
	// Events are safe to copy like this.
	eventCopy := *event
	event = &eventCopy
	result, err := eventCorrelator.EventCorrelate(event)
	if err != nil {
		utilruntime.HandleError(err)
	}
	if result.Skip {
		// The correlator decided to skip this event: drop it.
		return
	}
	// ... (sending result.Event / result.Patch to the sink, with retries, omitted)
}
```

EventCorrelate filters, aggregates, counts, and de-duplicates all incoming events:

```go
func (c *EventCorrelator) EventCorrelate(newEvent *v1.Event) (*EventCorrelateResult, error) {
	if newEvent == nil {
		return nil, fmt.Errorf("event is nil")
	}
	aggregateEvent, ckey := c.aggregator.EventAggregate(newEvent)
	observedEvent, patch, err := c.logger.eventObserve(aggregateEvent, ckey)
	// filterFunc returns true if the event should be skipped.
	if c.filterFunc(observedEvent) {
		return &EventCorrelateResult{Skip: true}, nil
	}
	return &EventCorrelateResult{Event: observedEvent, Patch: patch}, err
}
```

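EventAggregate: EventCorrelate first calls c.aggregator.EventAggregate(newEvent) to aggregate events. Using the settings supplied when the EventCorrelator was initialized, it checks whether events similar to the incoming one (events that differ only in their message) have already been seen.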
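To make the aggregation rule concrete, here is a minimal, self-contained sketch of the idea; it is not the client-go code. It assumes a simplified event with only Object, Reason and Message, keys "similar" events by object and reason, uses a plain map instead of the LRU cache, and hard-codes the defaults of 10 distinct messages within 600 seconds. The names `aggregator`, `aggregateRecord` and `aggregate` are hypothetical.

```go
package main

import "fmt"

// Hypothetical constants mirroring defaultAggregateMaxEvents and
// defaultAggregateIntervalInSeconds.
const (
	maxEvents       = 10
	intervalSeconds = 600
)

// event is a simplified stand-in for v1.Event (assumption).
type event struct {
	Object, Reason, Message string
}

type aggregateRecord struct {
	messages map[string]struct{} // distinct messages seen for this aggregate key
	last     int64               // time (s) of the most recent similar event
}

type aggregator struct {
	cache map[string]*aggregateRecord // a plain map stands in for the LRU cache
}

// aggregate returns the event to record and the cache key to use for it.
func (a *aggregator) aggregate(e event, now int64) (event, string) {
	aggKey := e.Object + "/" + e.Reason // similar events: same object and reason
	fullKey := aggKey + "/" + e.Message // exact event: also the same message
	rec, ok := a.cache[aggKey]
	if !ok || now-rec.last > intervalSeconds {
		// No record yet, or the last similar event is too old: start fresh.
		rec = &aggregateRecord{messages: map[string]struct{}{}}
		a.cache[aggKey] = rec
	}
	rec.messages[e.Message] = struct{}{}
	rec.last = now
	if len(rec.messages) < maxEvents {
		return e, fullKey // below the threshold: record the event unchanged
	}
	// Threshold reached: emit a combined event under the aggregate key, so later
	// similar events update this one entry instead of creating new ones.
	combined := e
	combined.Message = "(combined from similar events): " + e.Message
	return combined, aggKey
}

func main() {
	a := &aggregator{cache: map[string]*aggregateRecord{}}
	for i := 1; i <= 10; i++ {
		msg := fmt.Sprintf("Readiness probe failed: attempt %d", i)
		out, key := a.aggregate(event{Object: "pod/tomcat-001", Reason: "Unhealthy", Message: msg}, int64(i))
		fmt.Println(key, "=>", out.Message)
	}
}
```

Only distinct messages count toward the threshold; repeats of exactly the same event are handled by the counting step in eventObserve instead.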

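eventObserve merges identical occurrences of the (possibly aggregated) event: if an event with the same key has been seen before, it reuses that Event's name, increments count by 1, and builds a patch so the existing Event is updated instead of a new one being created.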

```go
func (e *eventLogger) eventObserve(newEvent *v1.Event, key string) (*v1.Event, []byte, error) {
	var (
		patch []byte
		err   error
	)
	eventCopy := *newEvent
	event := &eventCopy

	e.Lock()
	defer e.Unlock()

	// Check if there is an existing event we should update
	lastObservation := e.lastEventObservationFromCache(key)

	// If we found a result, prepare a patch
	if lastObservation.count > 0 {
		// update the event based on the last observation so patch will work as desired
		event.Name = lastObservation.name
		event.ResourceVersion = lastObservation.resourceVersion
		event.FirstTimestamp = lastObservation.firstTimestamp
		event.Count = int32(lastObservation.count) + 1

		eventCopy2 := *event
		eventCopy2.Count = 0
		eventCopy2.LastTimestamp = metav1.NewTime(time.Unix(0, 0))
		eventCopy2.Message = ""

		newData, _ := json.Marshal(event)
		oldData, _ := json.Marshal(eventCopy2)
		patch, err = strategicpatch.CreateTwoWayMergePatch(oldData, newData, event)
	}

	// record our new observation
	e.cache.Add(
		key,
		eventLog{
			count:           uint(event.Count),
			firstTimestamp:  event.FirstTimestamp,
			name:            event.Name,
			resourceVersion: event.ResourceVersion,
		},
	)
	return event, patch, err
}
```
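The counting logic above can be reduced to a few lines. The sketch below is a hypothetical simplification: a map replaces the LRU cache, and instead of producing a strategic merge patch it only reports whether the existing Event would be patched. The key string, the candidate event names, and the types `eventLogger`, `eventLog` and `observed` are made up for illustration.

```go
package main

import "fmt"

// eventLog is a simplified cache entry (assumption): just the count and the
// name of the Event object already stored on the API server.
type eventLog struct {
	count int32
	name  string
}

type eventLogger struct {
	cache map[string]eventLog // a plain map stands in for the LRU cache
}

type observed struct {
	Name   string
	Count  int32
	Update bool // true: PATCH the existing Event; false: CREATE a new one
}

// observe mimics eventObserve's bookkeeping for one event key.
func (l *eventLogger) observe(key, candidateName string) observed {
	obs := observed{Name: candidateName, Count: 1}
	if last, ok := l.cache[key]; ok && last.count > 0 {
		// Seen before: reuse the stored Event's name and bump its count.
		obs.Name = last.name
		obs.Count = last.count + 1
		obs.Update = true
	}
	// Record the new observation so the next identical event increments again.
	l.cache[key] = eventLog{count: obs.Count, name: obs.Name}
	return obs
}

func main() {
	l := &eventLogger{cache: map[string]eventLog{}}
	for i, name := range []string{"tomcat-001.a1", "tomcat-001.b2", "tomcat-001.c3"} {
		o := l.observe("kubelet/tomcat-001/Unhealthy/Readiness probe failed", name)
		fmt.Printf("event %d -> name=%s count=%d update=%v\n", i+1, o.Name, o.Count, o.Update)
	}
}
```

Every identical occurrence after the first reuses the first Event's name, so the API server ends up with one Event object whose count grows rather than many separate objects.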

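Kubelet initialization sets up the event recorder, and with it the rate-limiting parameters. makeEventRecorder creates the broadcaster with record.NewBroadcaster() and no custom options, so the default correlator settings described below apply:

cmd/kubelet/app/server.go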

```go
// makeEventRecorder sets up kubeDeps.Recorder if it's nil. It's a no-op otherwise.
func makeEventRecorder(kubeDeps *kubelet.Dependencies, nodeName types.NodeName) {
	if kubeDeps.Recorder != nil {
		return
	}
	eventBroadcaster := record.NewBroadcaster()
	kubeDeps.Recorder = eventBroadcaster.NewRecorder(legacyscheme.Scheme, v1.EventSource{Component: componentKubelet, Host: string(nodeName)})
	eventBroadcaster.StartLogging(klog.V(3).Infof)
	if kubeDeps.EventClient != nil {
		klog.V(4).Infof("Sending events to api server.")
		eventBroadcaster.StartRecordingToSink(&v1core.EventSinkImpl{Interface: kubeDeps.EventClient.Events("")})
	} else {
		klog.Warning("No api server defined - no events will be sent to API server.")
	}
}
```

Event correlation: filtering, aggregating, and counting events.

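NewEventCorrelator returns an EventCorrelator configured with default values. The EventCorrelator is responsible for filtering, aggregating, and counting events before interacting with the API server to record them. The default behavior is as follows (from the official doc comment):

- Aggregation is performed if a similar event is recorded 10 times within a 10-minute rolling interval. A similar event is one that differs only in the Event.Message field. Rather than recording the precise event, aggregation creates a new event whose message reports that it has combined events with the same reason.
- If the exact same event is encountered multiple times, its count is incremented.
- A source may burst 25 events about an object, but has a refill budget of 1 event every 5 minutes per object, to control the long tail of spam.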
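The per-object budget in the last point behaves like one token bucket per event source and object. The sketch below is an illustration under stated assumptions, not client-go's implementation (which relies on a token-bucket rate limiter from k8s.io/client-go/util/flowcontrol): it keys buckets by a hypothetical "source/object" string, uses the defaults of burst 25 and one token per 300 seconds, and tracks time in whole seconds with fixed-point token units so the arithmetic is exact. `spamFilter`, `bucket` and `filter` are hypothetical names.

```go
package main

import "fmt"

// Defaults assumed from the text: burst 25, refill 1 token every 300 s.
// Tokens are stored in fixed-point units: 1 token = refillSeconds units,
// and a bucket gains 1 unit per elapsed second.
const (
	burst         = 25
	refillSeconds = 300
)

type bucket struct {
	units int64 // available tokens * refillSeconds
	last  int64 // second of the last update
}

type spamFilter struct {
	buckets map[string]*bucket // one bucket per (source, object) key
}

// filter reports true when the event should be SKIPPED, like filterFunc.
func (f *spamFilter) filter(source, object string, now int64) bool {
	key := source + "/" + object
	b, ok := f.buckets[key]
	if !ok {
		b = &bucket{units: burst * refillSeconds, last: now} // new buckets start full
		f.buckets[key] = b
	}
	b.units += now - b.last // refill for the elapsed time, capped at the burst
	if b.units > burst*refillSeconds {
		b.units = burst * refillSeconds
	}
	b.last = now
	if b.units < refillSeconds {
		return true // less than one whole token left: drop the event
	}
	b.units -= refillSeconds
	return false
}

func main() {
	f := &spamFilter{buckets: map[string]*bucket{}}
	recorded := 0
	for i := 0; i < 30; i++ { // 30 kubelet events about one pod at the same moment
		if !f.filter("kubelet/node-1", "pod/tomcat-001", 0) {
			recorded++
		}
	}
	if !f.filter("default-scheduler", "pod/tomcat-001", 0) {
		recorded++ // a different source draws on its own bucket
	}
	fmt.Println("recorded:", recorded)
}
```

Running it prints `recorded: 26`: 25 events from the kubelet's bucket plus one from the separate scheduler bucket.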

```go
func NewEventCorrelator(clock clock.Clock) *EventCorrelator {
	cacheSize := maxLruCacheEntries
	spamFilter := NewEventSourceObjectSpamFilter(cacheSize, defaultSpamBurst, defaultSpamQPS, clock)
	return &EventCorrelator{
		filterFunc: spamFilter.Filter,
		aggregator: NewEventAggregator(
			cacheSize,
			EventAggregatorByReasonFunc,
			EventAggregatorByReasonMessageFunc,
			defaultAggregateMaxEvents,
			defaultAggregateIntervalInSeconds,
			clock),
		logger: newEventLogger(cacheSize, clock),
	}
}
```

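The defaults set here are:

```go
const (
	defaultSpamBurst = 25
	defaultSpamQPS   = 1. / 300.
)
```

That is, each source can emit a burst of 25 events about an object, after which the budget refills at 1/300 event per second, i.e. one event every 300 seconds (5 minutes).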
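As a worked bound (our own derivation, assuming the bucket starts full with $b$ tokens and refills continuously at rate $r$), the number of events $N(T)$ that can be recorded for one source and object during the first $T$ seconds is at most the initial tokens plus the whole tokens refilled so far:

$$
\begin{aligned}
N(T) &\le b + \lfloor rT \rfloor, \qquad b = 25,\; r = \tfrac{1}{300}\,\mathrm{s}^{-1} \\
T < 300\,\mathrm{s} &\;\Rightarrow\; N(T) \le 25 + 0 = 25 \\
T = 600\,\mathrm{s} &\;\Rightarrow\; N(T) \le 25 + 2 = 27
\end{aligned}
$$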

How the numbers work out:

In the first 5 minutes, the pod tomcat-001 produced 100 liveness/readiness probe events, but only 25 events in total (liveness plus readiness) were recorded.

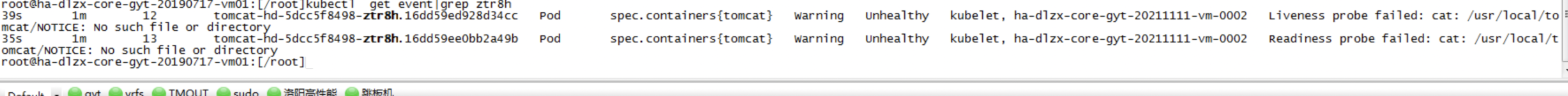

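Verification

With frequent readiness/liveness failures, the total number of recorded events is 25.

So why does the query in production return 26 events?

One of them comes from the scheduler; the other 25 come from the kubelet. The spam filter budgets events per source (component and host) as well as per object, so the scheduler's event does not count against the kubelet's 25.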

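What does the "one event per 5 minutes" parameter mean?

Once 25 events have been recorded within the first 5 minutes, the kubelet's budget for the pod is used up, so a new event such as a Killing event is dropped. Only a Killing event that occurs in the second 5-minute window, after a token has been refilled, is reported.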
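The timeline above can be replayed with a single token bucket. This is a hypothetical sketch, not client-go code: it assumes the bucket starts full with 25 tokens, regains one token every 300 seconds, and that time is measured in whole seconds. The arrival pattern (100 probe events every 3 seconds, then Killing events at 298 s and 330 s) and the names `bucket`, `arrival` and `replay` are invented for illustration.

```go
package main

import "fmt"

// Assumed defaults: burst 25, one token every 300 s. Fixed-point units
// (1 token = 300 units, +1 unit per second) keep the arithmetic exact.
const (
	burst         = 25
	refillSeconds = 300
)

type bucket struct{ units, last int64 }

// allow refills for the elapsed time and consumes one token if available.
func (b *bucket) allow(now int64) bool {
	b.units += now - b.last
	if b.units > burst*refillSeconds {
		b.units = burst * refillSeconds
	}
	b.last = now
	if b.units < refillSeconds {
		return false
	}
	b.units -= refillSeconds
	return true
}

type arrival struct {
	at     int64  // seconds since the first event
	reason string // informational only, e.g. Unhealthy or Killing
}

// replay feeds arrivals to a full bucket and reports which were recorded.
func replay(arrivals []arrival) []bool {
	b := &bucket{units: burst * refillSeconds}
	out := make([]bool, len(arrivals))
	for i, a := range arrivals {
		out[i] = b.allow(a.at)
	}
	return out
}

func main() {
	var arrivals []arrival
	for i := int64(0); i < 100; i++ { // 100 probe failures, one every 3 s, all before 300 s
		arrivals = append(arrivals, arrival{at: 3 * i, reason: "Unhealthy"})
	}
	arrivals = append(arrivals,
		arrival{at: 298, reason: "Killing"}, // still inside the first 5 minutes
		arrival{at: 330, reason: "Killing"}, // inside the second 5-minute window
	)
	got := replay(arrivals)
	probes := 0
	for _, ok := range got[:100] {
		if ok {
			probes++
		}
	}
	fmt.Println("probe events recorded:", probes)
	fmt.Println("Killing at 298 s recorded:", got[100])
	fmt.Println("Killing at 330 s recorded:", got[101])
}
```

It reports 25 probe events recorded, `false` for the Killing event at 298 s and `true` for the one at 330 s. In this model the first extra token becomes available 300 s after the first event, which is what "the second 5-minute window" corresponds to.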
