Deploying a MinIO cluster with Docker

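Prepare 3 nodes and create 2 mount points on each. Because MinIO in distributed mode refuses to use drives on the root disk, Docker volumes are used as the mount points here.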

In this 3-node cluster, the failure of any one node does not affect reads or writes.
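To make the fault-tolerance claim concrete, the quorum arithmetic can be sketched in shell. This is a sketch under stated assumptions: one erasure set spanning all 6 drives, parity equal to half the drives (the default for this layout in the late-2020 release used in this article), read quorum equal to the number of data drives, and write quorum one higher when parity equals data. The function name quorum_check is hypothetical, and newer MinIO releases may choose a different default parity.

```sh
#!/bin/sh
# quorum_check NODES DRIVES_PER_NODE (hypothetical helper)
# Assumption: a single erasure set over all drives with parity = total / 2.
quorum_check() {
    nodes=$1
    per_node=$2
    total=$((nodes * per_node))
    parity=$((total / 2))
    data=$((total - parity))
    # Reads need as many drives as there are data shards;
    # writes need one extra drive when parity == data.
    read_q=$data
    if [ "$parity" -eq "$data" ]; then
        write_q=$((data + 1))
    else
        write_q=$data
    fi
    # Drives still online after one whole node (all of its drives) is lost
    left=$((total - per_node))
    read_ok=no
    write_ok=no
    if [ "$left" -ge "$read_q" ]; then read_ok=yes; fi
    if [ "$left" -ge "$write_q" ]; then write_ok=yes; fi
    echo "drives=$total read_quorum=$read_q write_quorum=$write_q online_after_node_loss=$left read=$read_ok write=$write_ok"
}
```

quorum_check 3 2 prints "drives=6 read_quorum=3 write_quorum=4 online_after_node_loss=4 read=yes write=yes": losing one node leaves 4 drives, exactly the write quorum. Under the same assumptions, quorum_check 2 2 reports write=no, so a 2-node, 4-drive layout would stop accepting writes when one node fails.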

1. Configure hostname resolution on all nodes:

cat >> /etc/hosts << EOF
192.168.92.10 minio-1
192.168.92.11 minio-2
192.168.92.12 minio-3
EOF
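Running the cat >> command twice duplicates the entries. A minimal idempotent variant, assuming a POSIX shell and awk; add_host_entry is a hypothetical helper name, not part of any tool:

```sh
#!/bin/sh
# add_host_entry FILE IP NAME (hypothetical helper)
# Appends "IP NAME" to FILE unless a non-comment line already lists NAME
# as a hostname, so re-running the setup does not duplicate entries.
add_host_entry() {
    file=$1
    ip=$2
    name=$3
    # Fields 2..NF of a hosts line are hostnames/aliases; compare them exactly
    if awk -v n="$name" '$1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
                         END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage, as root on every node:
# for i in 1 2 3; do add_host_entry /etc/hosts "192.168.92.$((9 + i))" "minio-$i"; done
```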

2. Configure time synchronization, and disable the firewall and SELinux.
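The article does not list the commands for step 2. One possible sketch, assuming CentOS 7 with chrony for time sync and firewalld as the firewall; prepare_node and set_selinux_mode are hypothetical names. Note that setenforce 0 only switches SELinux to permissive mode; the "disabled" value written to the config file takes effect at the next reboot.

```sh
#!/bin/sh
# set_selinux_mode FILE MODE: rewrite the SELINUX= line of an SELinux config
# file. SELINUXTYPE= is untouched because it does not match "^SELINUX=".
set_selinux_mode() {
    sed -i "s/^SELINUX=.*/SELINUX=$2/" "$1"
}

# prepare_node: one way to do step 2 (assumes CentOS 7, chrony, firewalld)
prepare_node() {
    yum install -y chrony
    systemctl enable --now chronyd
    systemctl disable --now firewalld
    # Permissive right away; "disabled" in the config applies after reboot
    setenforce 0 || true
    set_selinux_mode /etc/selinux/config disabled
}

# Run as root on every node: prepare_node
```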

3. Install Docker on all nodes:

yum install -y yum-utils device-mapper-persistent-data lvm2
yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
yum -y install docker-ce
systemctl enable --now docker

4. Deploy the MinIO cluster: 3 nodes, with 2 directories mounted on each node.

Run on minio-1:

docker run -d --name minio \
  --restart=always --net=host \
  -e MINIO_ACCESS_KEY=minio \
  -e MINIO_SECRET_KEY=minio123 \
  -v minio-data1:/data1 \
  -v minio-data2:/data2 \
  minio/minio server \
  --address 192.168.92.10:9000 \
  http://minio-{1...3}/data{1...2}

Run on minio-2:

docker run -d --name minio \
  --restart=always --net=host \
  -e MINIO_ACCESS_KEY=minio \
  -e MINIO_SECRET_KEY=minio123 \
  -v minio-data1:/data1 \
  -v minio-data2:/data2 \
  minio/minio server \
  --address 192.168.92.11:9000 \
  http://minio-{1...3}/data{1...2}

Run on minio-3:

docker run -d --name minio \
  --restart=always --net=host \
  -e MINIO_ACCESS_KEY=minio \
  -e MINIO_SECRET_KEY=minio123 \
  -v minio-data1:/data1 \
  -v minio-data2:/data2 \
  minio/minio server \
  --address 192.168.92.12:9000 \
  http://minio-{1...3}/data{1...2}

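Note: when deploying the cluster with Docker you must pass --net=host so the containers use the host network; with port mapping the cluster could not be formed.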
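The three commands differ only in --address, so they can be folded into one function. A minimal sketch: minio_run is a hypothetical name, and DOCKER is a hypothetical override variable (DOCKER=echo gives a dry run). The endpoint is quoted because {1...3} is MinIO's own three-dot expansion syntax, which MinIO expands itself; quoting ensures no shell rewrites it first.

```sh
#!/bin/sh
# minio_run NODE_IP: start this node's MinIO container. Same flags as the
# commands above; only --address comes from the argument.
# DOCKER is a hypothetical override, e.g. DOCKER=echo for a dry run.
minio_run() {
    node_ip=$1
    ${DOCKER:-docker} run -d --name minio \
        --restart=always --net=host \
        -e MINIO_ACCESS_KEY=minio \
        -e MINIO_SECRET_KEY=minio123 \
        -v minio-data1:/data1 \
        -v minio-data2:/data2 \
        minio/minio server \
        --address "${node_ip}:9000" \
        'http://minio-{1...3}/data{1...2}'
}

# On minio-2, for example: minio_run 192.168.92.11
```

If you use a recent minio/minio image instead of the late-2020 one shown here, the credentials are passed as MINIO_ROOT_USER and MINIO_ROOT_PASSWORD, and the web console listens on a separate port chosen with --console-address.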

Check the container status:

[root@node1 ~]# docker ps -a

CONTAINER ID   IMAGE         COMMAND                  CREATED          STATUS          PORTS     NAMES
ab4c57281ab9   minio/minio   "/usr/bin/docker-ent…"   21 minutes ago   Up 21 minutes             minio

Check the container log: MinIO formats 1 zone with 1 set and brings all 6 drives online:

[root@minio-1 ~]# docker logs -f minio
......
Waiting for all other servers to be online to format the disks.
Formatting 1st zone, 1 set(s), 6 drives per set.
Waiting for all MinIO sub-systems to be initialized.. lock acquired
Attempting encryption of all config, IAM users and policies on MinIO backend
All MinIO sub-systems initialized successfully
Status: 6 Online, 0 Offline.
Endpoint: http://192.168.92.10:9000

Browser Access:
   http://192.168.92.10:9000

Object API (Amazon S3 compatible):
   Go: https://docs.min.io/docs/golang-client-quickstart-guide
   Java: https://docs.min.io/docs/java-client-quickstart-guide
   Python: https://docs.min.io/docs/python-client-quickstart-guide
   JavaScript: https://docs.min.io/docs/javascript-client-quickstart-guide
   .NET: https://docs.min.io/docs/dotnet-client-quickstart-guide
Waiting for all MinIO IAM sub-system to be initialized.. lock acquired
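Instead of watching the log, a script can poll each node until it answers. A minimal sketch, assuming curl is installed and relying on MinIO's liveness endpoint /minio/health/live on port 9000; retry and minio_live are hypothetical helper names:

```sh
#!/bin/sh
# retry ATTEMPTS DELAY CMD [ARGS...]: run CMD until it succeeds, sleeping
# DELAY seconds between attempts; give up (status 1) after ATTEMPTS tries.
retry() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while ! "$@"; do
        if [ "$n" -ge "$attempts" ]; then
            return 1
        fi
        n=$((n + 1))
        sleep "$delay"
    done
}

# minio_live HOST: succeed if MinIO's liveness endpoint returns HTTP 2xx
minio_live() {
    curl -fsS -o /dev/null "http://$1:9000/minio/health/live"
}

# Usage:
# for h in minio-1 minio-2 minio-3; do retry 30 2 minio_live "$h" || echo "$h is not up"; done
```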

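Check the cluster status with the mc client: all 3 nodes, and all drives on each node, are online (newer mc releases replace "mc config host add" with "mc alias set"):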

[root@minio-1 ~]# mc config host add minio http://minio-1:9000 minio minio123
Added `minio` successfully.
[root@minio-1 ~]# mc admin info minio
●  minio-2:9000
   Uptime: 3 minutes
   Version: 2020-12-18T03:27:42Z
   Network: 3/3 OK
   Drives: 2/2 OK

●  minio-3:9000
   Uptime: 2 minutes
   Version: 2020-12-18T03:27:42Z
   Network: 3/3 OK
   Drives: 2/2 OK

●  minio-1:9000
   Uptime: 3 minutes
   Version: 2020-12-18T03:27:42Z
   Network: 3/3 OK
   Drives: 2/2 OK

6 drives online, 0 drives offline
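For scripted checks, the summary line can be parsed. A minimal sketch that assumes the summary reads "N drives online, M drives offline" exactly as in the mc version shown above; other mc versions may word it differently. drives_all_online is a hypothetical helper name:

```sh
#!/bin/sh
# drives_all_online: read `mc admin info` output on stdin and succeed only if
# the summary line "N drives online, M drives offline" reports M = 0.
drives_all_online() {
    awk '/drives online,/ { found = 1; offline = $(NF - 2) }
         END { exit !(found && offline == 0) }'
}

# Usage: mc admin info minio | drives_all_online && echo "all drives online"
```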

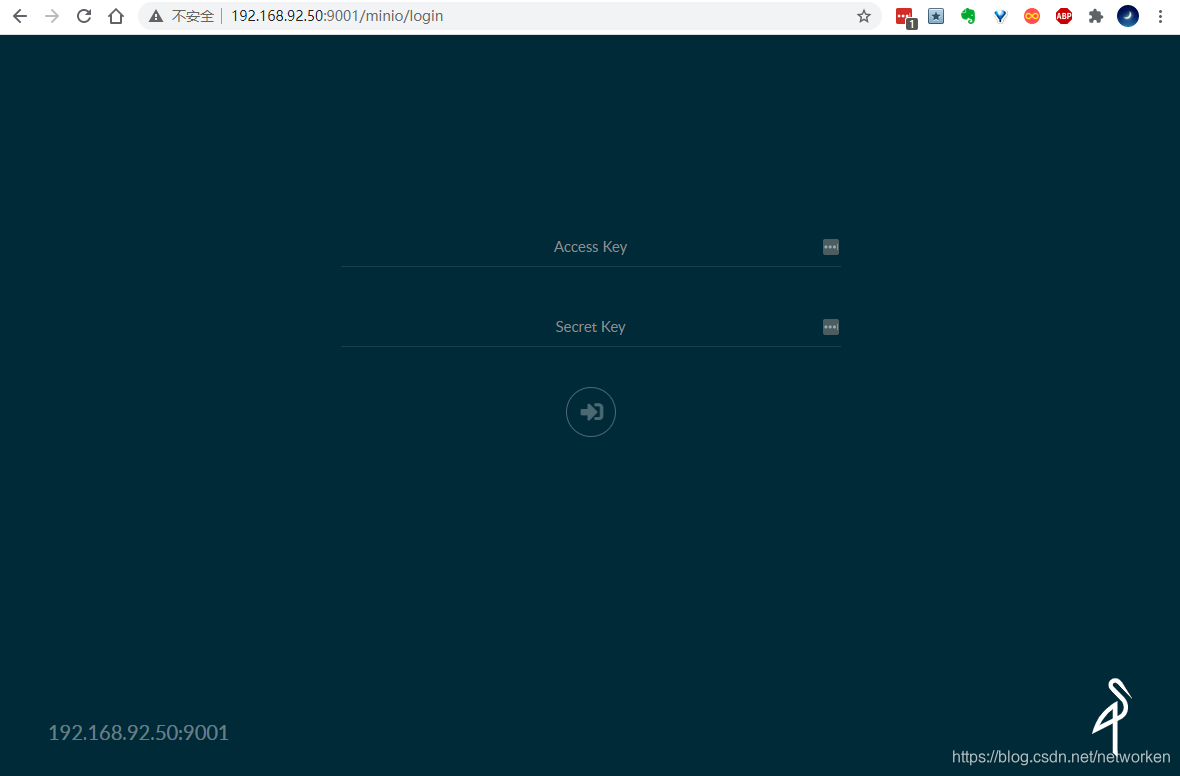

Open the MinIO UI in a browser using any node's IP:

http://192.168.92.10:9000

Failure scenarios:

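Scenario 1: simulate the failure of one node and upload data to verify that MinIO still reads and writes normally; once the node is back online, the cluster returns to normal automatically: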

[root@minio-3 ~]# docker stop minio
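The upload check in scenario 1 can be scripted as a write, read back, and compare round trip. A minimal sketch, assuming the "minio" alias configured above and mc's mb, cp and cat subcommands; rw_check, the bucket name and the object name rw-check.txt are hypothetical, and MC is a hypothetical override for the mc binary:

```sh
#!/bin/sh
# rw_check TARGET BUCKET: write a small object through MinIO, read it back
# and compare it byte for byte with the local copy.
# MC is a hypothetical override for the mc command (default: mc).
rw_check() {
    target=$1
    bucket=$2
    mc=${MC:-mc}
    tmp=$(mktemp)
    date > "$tmp"
    # mb -p: do not fail if the bucket already exists
    $mc mb -p "$target/$bucket" > /dev/null &&
        $mc cp "$tmp" "$target/$bucket/rw-check.txt" > /dev/null &&
        $mc cat "$target/$bucket/rw-check.txt" | cmp -s - "$tmp"
    rc=$?
    rm -f "$tmp"
    return $rc
}

# While minio-3 is stopped: rw_check minio test && echo "read/write OK"
# Then bring the node back on minio-3: docker start minio
```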

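Scenario 2: simulate a node that fails permanently and cannot be recovered. On one node, wipe its data completely with the cleanup commands below; then simply prepare a new node, configure its hosts entries, and run the same cluster start command on it.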

Clean up the MinIO container cluster:

docker stop minio
docker rm minio
docker volume rm minio-data1 minio-data2

Configure load balancing

Load balancing is built with nginx and keepalived. A real deployment needs 2 additional nodes for this; for testing, the first 2 MinIO nodes are reused here.

Perform the following steps on 192.168.92.10 and 192.168.92.11.

Prepare the nginx config file (on both nodes):

mkdir -p /etc/nginx/conf.d
cat > /etc/nginx/conf.d/minio-lb.conf << 'EOF'
upstream minio_server {
    server 192.168.92.10:9000;
    server 192.168.92.11:9000;
    server 192.168.92.12:9000;
}

server {
    listen 9001;
    server_name localhost;

    ignore_invalid_headers off;
    client_max_body_size 0;
    proxy_buffering off;

    location / {
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_set_header Host $http_host;

        proxy_connect_timeout 300;
        proxy_http_version 1.1;
        proxy_set_header Connection "";
        chunked_transfer_encoding off;

        proxy_pass http://minio_server;
    }
}
EOF
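When nodes are added or replaced, the upstream block is the part that changes. A small sketch that prints it from a list of hosts; gen_upstream is a hypothetical helper, and it only prints text, so you still paste or redirect the result into the config yourself:

```sh
#!/bin/sh
# gen_upstream NAME PORT HOST...: print an nginx upstream block with one
# "server HOST:PORT;" line per MinIO node.
gen_upstream() {
    name=$1
    port=$2
    shift 2
    printf 'upstream %s {\n' "$name"
    for host in "$@"; do
        printf '    server %s:%s;\n' "$host" "$port"
    done
    printf '}\n'
}

# Usage: gen_upstream minio_server 9000 192.168.92.10 192.168.92.11 192.168.92.12
```

After editing the config, "docker exec nginx nginx -t" checks its syntax inside the running container and "docker exec nginx nginx -s reload" applies it without restarting.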

Deploy the nginx container (on both nodes):

docker run -d --name nginx \
  --restart always -p 9001:9001 \
  -v /etc/nginx/conf.d:/etc/nginx/conf.d \
  nginx

Prepare the keepalived config file on both nodes, adjusting the interface and virtual_ipaddress parameters to your environment:

mkdir -p /etc/keepalived
cat > /etc/keepalived/keepalived.conf << EOF
! Configuration File for keepalived
global_defs {
    router_id minio
    vrrp_version 2
    vrrp_garp_master_delay 1
    script_user root
    enable_script_security
}

vrrp_script chk_nginx {
    script "/bin/sh -c 'curl -I http://127.0.0.1:9001 > /dev/null 2>&1'"
    timeout 3
    interval 1   # check every 1 second
    fall 2       # require 2 failures for KO
    rise 2       # require 2 successes for OK
}

vrrp_instance lb-minio {
    state BACKUP
    interface ens33
    virtual_router_id 51
    priority 100
    virtual_ipaddress {
        192.168.92.50
    }
    track_script {
        chk_nginx
    }
}
EOF
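The interface value must be the NIC that carries the node's own IP. A minimal sketch that finds it from "ip -o -4 addr show" output, assuming the one-line format where field 2 is the interface name and field 4 is ADDRESS/PREFIX; iface_for_ip is a hypothetical helper name:

```sh
#!/bin/sh
# iface_for_ip IP: read `ip -o -4 addr show` output on stdin and print the
# interface that carries IP (the value keepalived's "interface" needs).
iface_for_ip() {
    awk -v ip="$1" '$3 == "inet" { split($4, a, "/"); if (a[1] == ip) { print $2; exit } }'
}

# Usage on minio-1:
#   ifc=$(ip -o -4 addr show | iface_for_ip 192.168.92.10)
#   sed -i "s/interface ens33/interface $ifc/" /etc/keepalived/keepalived.conf
```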

Deploy the keepalived container (on both nodes):

docker run -d --name keepalived \
  --restart always \
  --cap-add=NET_ADMIN \
  --net=host \
  -v /etc/keepalived/keepalived.conf:/container/service/keepalived/assets/keepalived.conf \
  osixia/keepalived --copy-service

Check the created VIP:

[root@minio-1 ~]# ip a | grep 192.168.92
    inet 192.168.92.10/24 brd 192.168.92.255 scope global noprefixroute ens33
    inet 192.168.92.50/32 scope global ens33
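To see from a script which node currently holds the VIP (that node is the VRRP master), the same "ip -o -4 addr show" output can be tested. A minimal sketch under the same output-format assumption; holds_vip is a hypothetical helper name:

```sh
#!/bin/sh
# holds_vip VIP: read `ip -o -4 addr show` output on stdin and succeed if
# VIP is currently assigned to this host.
holds_vip() {
    awk -v vip="$1" '$3 == "inet" { split($4, a, "/"); if (a[1] == vip) found = 1 }
                     END { exit !found }'
}

# Usage: ip -o -4 addr show | holds_vip 192.168.92.50 && echo "VIP is on $(hostname)"
```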

Stop the nginx container on the node that holds the VIP to simulate a load balancer failure, and verify that the VIP fails over:

docker stop nginx

Check the keepalived log:

[root@minio-1 ~]# docker logs -f keepalived
......
Tue Dec 22 01:22:06 2020: Script `chk_nginx` now returning 7
Tue Dec 22 01:22:09 2020: VRRP_Script(chk_nginx) failed (exited with status 7)
Tue Dec 22 01:22:09 2020: (lb-minio) Entering FAULT STATE
Tue Dec 22 01:22:09 2020: (lb-minio) sent 0 priority
Tue Dec 22 01:22:09 2020: (lb-minio) removing VIPs.

The VIP moves to node 2 automatically:

[root@minio-2 ~]# ip a | grep 192.168.92
    inet 192.168.92.11/24 brd 192.168.92.255 scope global noprefixroute ens33
    inet 192.168.92.50/32 scope global ens33

Open the MinIO UI in a browser using the VIP and port 9001:

http://192.168.92.50:9001

docker-compose mode

Reference: https://docs.min.io/docs/deploy-minio-on-docker-compose.html

docker-compose can only deploy distributed MinIO on a single host: the distributed MinIO instances run as multiple containers on that one host.

After installing docker-compose, run:

mkdir -p /data/minio
cd /data/minio
wget https://raw.githubusercontent.com/minio/minio/master/docs/orchestration/docker-compose/docker-compose.yaml
wget https://raw.githubusercontent.com/minio/minio/master/docs/orchestration/docker-compose/nginx.conf
docker-compose up -d

Check the running containers:

[root@master minio]# docker-compose ps -a

Name             Command                          State          Ports
-----------------------------------------------------------------------------------------------
minio_minio1_1   /usr/bin/docker-entrypoint ...   Up (healthy)   9000/tcp
minio_minio2_1   /usr/bin/docker-entrypoint ...   Up (healthy)   9000/tcp
minio_minio3_1   /usr/bin/docker-entrypoint ...   Up (healthy)   9000/tcp
minio_minio4_1   /usr/bin/docker-entrypoint ...   Up (healthy)   9000/tcp
minio_nginx_1    /docker-entrypoint.sh ngin ...   Up             80/tcp, 0.0.0.0:9000->9000/tcp
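A startup script can wait until every MinIO container reports "(healthy)". A minimal sketch that parses the docker-compose v1 table above, assuming the container name is the first column; all_healthy is a hypothetical helper name. Compose v2 names containers with hyphens (for example minio-minio1-1), so the pattern would need adjusting there.

```sh
#!/bin/sh
# all_healthy PATTERN: read `docker-compose ps` output on stdin and succeed if
# at least one container name matches PATTERN and every matching row
# contains "(healthy)".
all_healthy() {
    awk -v p="$1" '$1 ~ p { seen = 1; if ($0 !~ /\(healthy\)/) bad = 1 }
                   END { exit !(seen && !bad) }'
}

# Usage: docker-compose ps | all_healthy '^minio_minio[0-9]+_1$' && echo "all MinIO instances healthy"
```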

nginx load-balances across the instances; open http://172.31.112.2:9000 (the Docker host's address) in a browser.

By default 4 MinIO instances are created; you can add more MinIO services (up to 16 in total) to your MinIO Compose deployment.

docker swarm mode

Reference: https://docs.min.io/docs/deploy-minio-on-docker-swarm.html

docker swarm is not recommended; use Kubernetes instead and deploy the cluster with the MinIO Operator.

Prepare 4 servers with Docker installed, and configure their hostnames and /etc/hosts entries:

minio-1 192.168.92.10
minio-2 192.168.92.11
minio-3 192.168.92.12
minio-4 192.168.92.13

Deploy the MinIO cluster from the first node as follows:

# On the first node (minio-1): initialize the swarm manager
docker swarm init --advertise-addr 192.168.92.10

# On the other 3 nodes: join the swarm as workers (use the token printed by "docker swarm init")
docker swarm join --token SWMTKN-1-0038kngb30k8orsj1lx7e5pa7opasf2ljdtj7uktct98x01e5o-7ufsfr5lc70u4g7lt7xzguc6m 192.168.92.10:2377

# All of the following steps run on the first node

# Create the access_key and secret_key secrets
echo "AKIAIOSFODNN7EXAMPLE" | docker secret create access_key -
echo "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY" | docker secret create secret_key -

# Label every node so that each MinIO instance is scheduled onto a different node
docker node update --label-add minio1=true minio-1
docker node update --label-add minio2=true minio-2
docker node update --label-add minio3=true minio-3
docker node update --label-add minio4=true minio-4
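The four labeling commands follow one pattern, so a loop covers any node count. A minimal sketch assuming the minio-N hostnames and minioN=true labels used above; label_nodes is a hypothetical name, and DOCKER is a hypothetical override (DOCKER=echo for a dry run):

```sh
#!/bin/sh
# label_nodes COUNT: add label minioN=true to node minio-N for N = 1..COUNT,
# equivalent to the four commands above for COUNT=4.
# DOCKER is a hypothetical override, e.g. DOCKER=echo for a dry run.
label_nodes() {
    i=1
    while [ "$i" -le "$1" ]; do
        ${DOCKER:-docker} node update --label-add "minio$i=true" "minio-$i"
        i=$((i + 1))
    done
}

# Usage on the swarm manager: label_nodes 4
```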

# Check the state of the swarm nodes
[root@minio-1 ~]# docker node ls
ID                            HOSTNAME   STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
1j8jo69z6herntddxhe2tsk06 *   minio-1    Ready     Active         Leader           20.10.1
fnbs8f0mfaxuf2enpryk6oxsx     minio-2    Ready     Active                          20.10.1
21w6nc29mjlk850psgqvip9rb     minio-3    Ready     Active                          20.10.1
pdjn34s0qrxv7xhovkmfscz1x     minio-4    Ready     Active                          20.10.1

# Download the MinIO compose file
wget https://raw.githubusercontent.com/minio/minio/master/docs/orchestration/docker-swarm/docker-compose-secrets.yaml

# Deploy MinIO
docker stack deploy --compose-file=docker-compose-secrets.yaml minio_stack

# Confirm that all 4 MinIO instances are running
[root@minio-1 ~]# docker service ls
ID             NAME                 MODE         REPLICAS   IMAGE                                      PORTS
bhfbh4lgpkyc   minio_stack_minio1   replicated   1/1        minio/minio:RELEASE.2020-12-26T01-35-54Z   *:9001->9000/tcp
mig3o6plv2b4   minio_stack_minio2   replicated   1/1        minio/minio:RELEASE.2020-12-26T01-35-54Z   *:9002->9000/tcp
tjp2kvsgzriv   minio_stack_minio3   replicated   1/1        minio/minio:RELEASE.2020-12-26T01-35-54Z   *:9003->9000/tcp
krwgyciyy4cn   minio_stack_minio4   replicated   1/1        minio/minio:RELEASE.2020-12-26T01-35-54Z   *:9004->9000/tcp
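The "all 4 instances are running" check can also be automated by comparing the two numbers in the REPLICAS column. A minimal sketch assuming the column order shown above (ID, NAME, MODE, REPLICAS, ...); replicas_converged is a hypothetical helper name:

```sh
#!/bin/sh
# replicas_converged PATTERN: read `docker service ls` output on stdin and
# succeed if some service name matches PATTERN and each matching service
# runs all desired replicas (REPLICAS column "N/N").
replicas_converged() {
    awk -v p="$1" '$2 ~ p { seen = 1; split($4, r, "/"); if (r[1] != r[2]) bad = 1 }
                   END { exit !(seen && !bad) }'
}

# Usage: docker service ls | replicas_converged '^minio_stack_' && echo "stack is up"
```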

# Open any node's IP in a browser
http://192.168.92.10:9001

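# Clean up the MinIO cluster
docker stack rm minio_stack

# Volumes are local to each node, so run this on every node
# (on Docker 23.0 and later, add --all so that unused named volumes are removed too)
docker volume prune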
