kubeadm is the official Kubernetes tool for quickly deploying a k8s cluster.

[root@qa1v130-26 ~]# cat /etc/redhat-release

CentOS Linux release 7.5.1804 (Core)

wget -O /etc/yum.repos.d/epel-7.repo http://mirrors.aliyun.com/repo/epel-7.rep

yum clean all

yum makecache

[root@qa1v130-26 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.130.26 master

192.168.130.28 node-1
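The host entries above have to exist on every machine in the cluster. As a minimal sketch, the hypothetical helper `add_host_entry` below (not part of any tool) appends an entry only when the host name is not already listed, so it can be rerun safely:

```shell
# add_host_entry FILE IP NAME
# Hypothetical helper: appends "IP NAME" to FILE unless NAME already appears
# as a host name on a non-comment line.
add_host_entry() (
    # The body runs in a subshell so these variables stay local.
    hosts_file=$1
    ip=$2
    name=$3
    # Fields 2..NF of a hosts line are host names; field 1 is the address.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts_file" 2>/dev/null; then
        exit 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
)

# Usage as root on each machine:
#   add_host_entry /etc/hosts 192.168.130.26 master
#   add_host_entry /etc/hosts 192.168.130.28 node-1
```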

[root@qa1v130-26 ~]# systemctl stop firewalld

[root@qa1v130-26 ~]# systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@qa1v130-26 ~]# setenforce 0

[root@qa1v130-26 ~]# vi /etc/selinux/config

SELINUX=disabled
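The same change to /etc/selinux/config can be made without opening an editor. This is a sketch only; `disable_selinux_in_config` is a hypothetical helper name, and it assumes the file already contains a `SELINUX=` line:

```shell
# disable_selinux_in_config FILE
# Rewrites the SELINUX= line to "disabled" and keeps a FILE.bak backup.
# SELINUXTYPE= is left alone because the pattern requires "=" right after SELINUX.
disable_selinux_in_config() {
    sed -i.bak 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Usage as root:
#   disable_selinux_in_config /etc/selinux/config
```

`setenforce 0` only switches the running system to permissive mode; the `SELINUX=disabled` setting takes effect at the next boot.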

Add the following to /etc/sysctl.d/k8s.conf:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

[root@qa1v130-26 ~]# modprobe br_netfilter

[root@qa1v130-26 ~]# sysctl -p /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1
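To confirm later that these settings are still in force (for example after a reboot, when br_netfilter may not be loaded), the file can be compared with the live values under /proc/sys. The sketch below is an illustration, not a standard tool: `check_sysctl_conf` is a hypothetical helper, and it only handles single-valued `key = value` lines like the three above.

```shell
# check_sysctl_conf CONF [PROC_ROOT]
# Compares each "key = value" line in CONF with the live value under
# PROC_ROOT (default /proc/sys). Prints mismatches and fails if there are any.
check_sysctl_conf() (
    # The body runs in a subshell so these variables stay local.
    conf=$1
    proc_root=${2:-/proc/sys}
    status=0
    while IFS='=' read -r key value || [ -n "$key" ]; do
        key=$(printf '%s' "$key" | tr -d '[:space:]')
        value=$(printf '%s' "$value" | tr -d '[:space:]')
        case $key in
            ''|'#'*) continue ;;   # skip blank lines and comments
        esac
        # A sysctl key maps to a path by replacing dots with slashes.
        path=$proc_root/$(printf '%s' "$key" | tr . /)
        actual=$(cat "$path" 2>/dev/null | tr -d '[:space:]')
        if [ "$actual" != "$value" ]; then
            printf 'MISMATCH %s: want %s, got %s\n' "$key" "$value" "${actual:-<unset>}"
            status=1
        fi
    done < "$conf"
    exit "$status"
)

# Usage:
#   check_sysctl_conf /etc/sysctl.d/k8s.conf
```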

Run the following script on every Kubernetes node:

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

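The script above creates `/etc/sysconfig/modules/ipvs.modules`, which ensures the required modules are loaded automatically after a node reboots. Run `lsmod | grep -e ip_vs -e nf_conntrack_ipv4` to check that the required kernel modules have been loaded.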
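The `lsmod | grep` check prints whatever matches, so a missing module is easy to overlook. As a rough sketch, the hypothetical helper `missing_ipvs_modules` below lists only the modules from the script that are absent; it reads /proc/modules, the file that `lsmod` formats.

```shell
# missing_ipvs_modules [MODULES_FILE]
# Prints each required module that is not listed in MODULES_FILE
# (default /proc/modules). Prints nothing when all are loaded.
missing_ipvs_modules() (
    # The body runs in a subshell so these variables stay local.
    modules_file=${1:-/proc/modules}
    for mod in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        # /proc/modules has one module per line with the name in field 1.
        awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file" \
            || printf '%s\n' "$mod"
    done
)

# Usage:
#   missing=$(missing_ipvs_modules)
#   [ -z "$missing" ] || echo "not loaded: $missing"
```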

yum install ipset ipvsadm

Add the Docker yum repository:

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum list docker-ce.x86_64 --showduplicates |sort -r

yum install -y --setopt=obsoletes=0 docker-ce-18.09.7-3.el7

[root@qa1v130-26 ~]# systemctl start docker

[root@qa1v130-26 ~]# systemctl enable docker

[root@qa1v130-26 ~]# iptables -nvL

Chain INPUT (policy ACCEPT 68 packets, 4740 bytes)

pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER-USER all -- * * 0.0.0.0/0 0.0.0.0/0

0 0 DOCKER-ISOLATION-STAGE-1 all -- * * 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

0 0 DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0

Chain OUTPUT (policy ACCEPT 36 packets, 3640 bytes)

pkts bytes target prot opt in out source destination

Chain DOCKER (1 references)

pkts bytes target prot opt in out source destination

Chain DOCKER-ISOLATION-STAGE-1 (1 references)

pkts bytes target prot opt in out source destination

0 0 DOCKER-ISOLATION-STAGE-2 all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0

0 0 RETURN all -- * * 0.0.0.0/0 0.0.0.0/0

Chain DOCKER-ISOLATION-STAGE-2 (1 references)

pkts bytes target prot opt in out source destination

0 0 DROP all -- * docker0 0.0.0.0/0 0.0.0.0/0

0 0 RETURN all -- * * 0.0.0.0/0 0.0.0.0/0

Chain DOCKER-USER (1 references)

pkts bytes target prot opt in out source destination

0 0 RETURN all -- * * 0.0.0.0/0 0.0.0.0/0

[root@qa1v130-26 ~]#

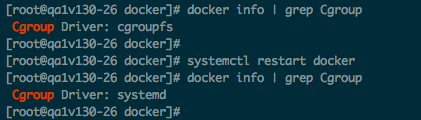

Add the following to /etc/docker/daemon.json:

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

[root@qa1v130-26 docker]# systemctl restart docker

[root@qa1v130-26 docker]# docker info | grep Cgroup
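After the restart, the `Cgroup Driver` line of `docker info` should report `systemd`. For use in a provisioning script, the check can be turned into a pass/fail test. The sketch below is one way to do it; `cgroup_driver_from_info` is a hypothetical helper that only parses text piped into it.

```shell
# cgroup_driver_from_info
# Reads `docker info` output on stdin and prints the value of the
# "Cgroup Driver" line (leading indentation is tolerated).
cgroup_driver_from_info() {
    sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}

# Usage on a Docker host:
#   driver=$(docker info 2>/dev/null | cgroup_driver_from_info)
#   [ "$driver" = systemd ] || echo "unexpected cgroup driver: $driver"
```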

2. Deploying Kubernetes with kubeadm

Install kubeadm and kubelet

If your network can reach packages.cloud.google.com directly, configure the repository as follows:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF

If it cannot, use the Aliyun mirror instead:

[root@qa1v130-26 ~]# cat /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

[root@qa1v130-26 ~]# yum makecache

yum install -y kubelet kubeadm kubectl

Installed:

kubeadm.x86_64 0:1.15.1-0 kubectl.x86_64 0:1.15.1-0 kubelet.x86_64 0:1.15.1-0

Dependency Installed:

conntrack-tools.x86_64 0:1.4.4-4.el7 cri-tools.x86_64 0:1.13.0-0 kubernetes-cni.x86_64 0:0.7.5-0 libnetfilter_cthelper.x86_64 0:1.0.0-9.el7 libnetfilter_cttimeout.x86_64 0:1.0.0-6.el7

libnetfilter_queue.x86_64 0:1.0.2-2.el7_2 socat.x86_64 0:1.7.3.2-2.el7

Complete!

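Three of the dependencies pulled in are cri-tools, kubernetes-cni, and socat.

- Starting with Kubernetes 1.14, the official packages upgraded the cni dependency to version 0.7.5

- socat is a dependency of kubelet

- cri-tools is the command-line tool for CRI (Container Runtime Interface)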

[root@qa1v130-26 ~]# swapoff -a

Run `free -m` to verify that swap is off. To keep it off after a reboot, edit /etc/fstab and comment out the swap mount entry.

[root@qa1v130-26 ~]# free -m

total used free shared buff/cache available

Mem: 7821 192 6293 8 1335 7334

Swap: 0 0 0

[root@qa1v130-26 ~]#
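The /etc/fstab edit can also be scripted. This is a minimal sketch, assuming swap entries are ordinary fstab lines with `swap` as a whitespace-delimited field; `comment_out_swap` is a hypothetical helper name.

```shell
# comment_out_swap FSTAB
# Prefixes every uncommented line that has "swap" as a whitespace-delimited
# field with "#", keeping a FSTAB.bak backup. Already commented lines are
# skipped, so running it twice does not add a second "#".
comment_out_swap() {
    sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Usage as root:
#   comment_out_swap /etc/fstab
```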

Add the following to /etc/sysctl.d/k8s.conf:

vm.swappiness=0

Apply the change:

sysctl -p /etc/sysctl.d/k8s.conf

To lift the requirement that swap be disabled, start kubelet with the flag --fail-swap-on=false; kubelet's startup arguments are defined in /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf.
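One way to pass the flag without editing the packaged unit file is through the `KUBELET_EXTRA_ARGS` variable. The sketch below assumes that 10-kubeadm.conf on this system loads /etc/sysconfig/kubelet and expands `$KUBELET_EXTRA_ARGS` on the kubelet command line, which is worth confirming by reading the file first. `set_kubelet_extra_args` is a hypothetical helper, and it does not escape `|` or `&` in the value.

```shell
# set_kubelet_extra_args ENV_FILE ARGS
# Sets KUBELET_EXTRA_ARGS=ARGS in ENV_FILE, replacing an existing line or
# appending one if the variable is not defined yet.
set_kubelet_extra_args() (
    # The body runs in a subshell so these variables stay local.
    env_file=$1
    args=$2
    if grep -q '^KUBELET_EXTRA_ARGS=' "$env_file" 2>/dev/null; then
        sed -i.bak "s|^KUBELET_EXTRA_ARGS=.*|KUBELET_EXTRA_ARGS=$args|" "$env_file"
    else
        printf 'KUBELET_EXTRA_ARGS=%s\n' "$args" >> "$env_file"
    fi
)

# Usage as root (path is an assumption, see above):
#   set_kubelet_extra_args /etc/sysconfig/kubelet --fail-swap-on=false
#   systemctl daemon-reload && systemctl restart kubelet
```

With swap left on, `kubeadm init` also runs a preflight check for swap; it can be skipped with `--ignore-preflight-errors=Swap`.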

Initializing the cluster with kubeadm init

Enable kubelet to start at boot:

[root@qa1v130-26 ~]# systemctl enable kubelet

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

`kubeadm config print init-defaults` prints the default configuration used for cluster initialization:

[root@qa1v130-26 ~]# kubeadm config print init-defaults

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: qa1v130-26
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.14.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}
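Two of the printed defaults do not match this environment: `advertiseAddress` is the placeholder 1.2.3.4, and `kubernetesVersion` is v1.14.0 although the installed packages are 1.15.1. As a rough sketch, the defaults can be saved to a file and adjusted before initialization. `set_yaml_scalar` below is a hypothetical, line-based helper (it is not a YAML parser and rewrites every line whose key matches), and the file name kubeadm.yaml is an arbitrary choice.

```shell
# set_yaml_scalar FILE KEY VALUE
# Replaces the value on every "KEY: ..." line of FILE, preserving the
# indentation, and keeps a FILE.bak backup.
set_yaml_scalar() (
    # The body runs in a subshell so these variables stay local.
    file=$1
    key=$2
    value=$3
    sed -i.bak -E "s|^([[:space:]]*${key}:[[:space:]]*).*|\1${value}|" "$file"
)

# Usage on the master (192.168.130.26 is the master address from /etc/hosts):
#   kubeadm config print init-defaults > kubeadm.yaml
#   set_yaml_scalar kubeadm.yaml advertiseAddress 192.168.130.26
#   set_yaml_scalar kubeadm.yaml kubernetesVersion v1.15.1
#   kubeadm init --config kubeadm.yaml
```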
