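A brief analysis of the Nutch crawl process. The MapReduce internals involved are not discussed here. Time was short, so many parts are not written in detail; I will revise them when I have more time. My work also requires me to analyze the Nutch configuration files right away, and I will post that analysis once it is organized. Please credit the source when reposting.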

1.1 Crawl directory analysis

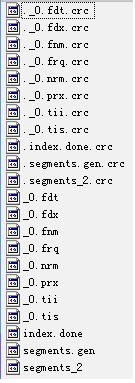

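Five directories are generated in total:

- crawldb: stores the downloaded URLs together with their download dates, used to decide when pages should be checked for updates.

- linkdb: stores the link relationships between URLs, obtained by analysis after downloading finishes.

- segments: stores the fetched pages. The number of subdirectories is related to the number of page levels fetched: usually each level gets its own subdirectory, named after a timestamp for easier management. For example, I fetched only one level here, so only the directory 20090508173137 was generated. Each subdirectory in turn contains six subfolders:

  - content: the content of each downloaded page.

  - crawl_fetch: the status of each downloaded URL.

  - crawl_generate: the set of URLs to be downloaded.

  - crawl_parse: the outlinks used to update the crawldb.

  - parse_data: the outlinks and metadata parsed from each URL.

  - parse_text: the text content of each parsed URL.

- indexes: a separate index directory for each download.

- index: the Lucene-format index directory; the complete index produced by merging all the indexes in indexes.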
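To make the layout concrete, here is a small Java sketch that builds the expected paths of one segment. The timestamp pattern yyyyMMddHHmmss is my reading of the directory name 20090508173137, and CrawlLayout and its methods are hypothetical helpers, not Nutch classes.

```java
import java.nio.file.Path;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the crawl directory layout described above (local paths only).
class CrawlLayout {
    // Segment names look like 20090508173137, i.e. yyyyMMddHHmmss (inferred from the example).
    static final DateTimeFormatter SEGMENT_NAME = DateTimeFormatter.ofPattern("yyyyMMddHHmmss");

    // The six subdirectories found in each segment.
    static final List<String> SEGMENT_PARTS = List.of(
        "content", "crawl_fetch", "crawl_generate", "crawl_parse", "parse_data", "parse_text");

    static String segmentName(LocalDateTime time) {
        return time.format(SEGMENT_NAME);
    }

    // Lists the expected subdirectories of <crawlDir>/segments/<segmentName>.
    static List<Path> segmentDirs(Path crawlDir, String segmentName) {
        Path segment = crawlDir.resolve("segments").resolve(segmentName);
        List<Path> dirs = new ArrayList<>();
        for (String part : SEGMENT_PARTS) {
            dirs.add(segment.resolve(part));
        }
        return dirs;
    }
}
```

For example, segmentName(LocalDateTime.of(2009, 5, 8, 17, 31, 37)) gives the directory name used in this crawl.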

1.2 Overview of the crawl process

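The main classes involved are the following nine:

1. nutch.crawl.Injector

The injector, which adds URLs to the crawl database.

2. nutch.crawl.Generator

The generator, which produces the list of URLs to download.

3. nutch.fetcher.Fetcher

The fetcher, which downloads the specified pages.

4. nutch.parse.ParseSegment

The parser, responsible for extracting page content and parsing out the next-level URLs.

5. nutch.crawl.CrawlDb

The database tool that manages the crawl database.

6. nutch.crawl.LinkDb

Responsible for link management.

7. nutch.indexer.Indexer

The indexer, which builds the index.

8. nutch.indexer.DeleteDuplicates

Removes duplicate documents.

9. nutch.indexer.IndexMerger

The index merger, which merges the local index of the current download with the historical indexes.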
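The order in which these tools run during one crawl can be sketched roughly as below. This is a simplified outline under my own assumptions, not the actual Nutch Crawl code: inject runs once, generate/fetch/update repeat once per depth level, and a separate parse step only runs when the fetcher does not parse pages itself (in Nutch 1.0 I believe this is controlled by the fetcher.parse property). The real loop also stops early when generate finds no URL due for fetching. CrawlPlan is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical outline of the tool order in a one-shot crawl; not the Nutch Crawl class itself.
class CrawlPlan {
    static List<String> plan(int depth, boolean fetcherParses) {
        List<String> steps = new ArrayList<>();
        steps.add("inject");                 // Injector: seed URLs -> crawldb
        for (int i = 0; i < depth; i++) {    // one new segment per depth level
            steps.add("generate");           // Generator: crawldb -> fetchlist
            steps.add("fetch");              // Fetcher: download the pages
            if (!fetcherParses) {
                steps.add("parse");          // ParseSegment, only if the fetcher did not parse
            }
            steps.add("update");             // CrawlDb: merge results back into crawldb
        }
        if (depth > 0) {
            steps.add("invert");             // LinkDb: build linkdb
            steps.add("index");              // Indexer: per-segment indexes
            steps.add("dedup");              // DeleteDuplicates
            steps.add("merge");              // IndexMerger: final index
        }
        return steps;
    }
}
```

With depth 1 and parsing inside the fetcher, plan(1, true) yields inject, generate, fetch, update, invert, index, dedup, merge.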

1.3 Crawl process analysis

1.3.1 The inject method

Description: initializes the crawldb, reads the URL seed files, and injects their contents into the crawl database.

It first locates the urls directory from which the URL seed files are read. If this directory has not been created, Nutch 1.0 reports an error.
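A minimal sketch of the seed-reading step, assuming one URL per line in every file under urls (such as the urls/site.txt that appears in the log below). Skipping blank lines and lines starting with # is an assumption of this sketch, and SeedReader is a hypothetical name; the real Injector reads these lines inside a MapReduce job and applies URL normalizers and filters.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of reading seed URLs from the urls directory before injection.
class SeedReader {
    static List<String> readSeeds(Path urlDir) throws IOException {
        if (!Files.isDirectory(urlDir)) {
            // the article notes that Nutch 1.0 also fails when this directory is missing
            throw new IOException("seed directory not found: " + urlDir);
        }
        List<String> seeds = new ArrayList<>();
        try (DirectoryStream<Path> files = Files.newDirectoryStream(urlDir)) {
            for (Path file : files) {
                if (!Files.isRegularFile(file)) continue;
                for (String line : Files.readAllLines(file)) {
                    String url = line.trim();
                    // assumption: blank lines and '#' comment lines are ignored
                    if (url.isEmpty() || url.startsWith("#")) continue;
                    seeds.add(url);
                }
            }
        }
        return seeds;
    }
}
```

A site.txt holding only http://www.163.com/ would be 19 bytes, which is consistent with the split site.txt:0+19 and Map input bytes=19 in the log below.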

It then obtains the temporary folder used by Hadoop:

/tmp/hadoop-Administrator/mapred/

The log output is:

2009-05-08 15:41:36,640 INFO Injector - Injector: starting

2009-05-08 15:41:37,031 INFO Injector - Injector: crawlDb: 20090508/crawldb

2009-05-08 15:41:37,781 INFO Injector - Injector: urlDir: urls

Next, some initialization settings are configured.

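It calls the JobClient.runJob method from the Hadoop package; stepping into it leads to JobClient's submitJob method, which submits the whole job. The underlying mechanism belongs to another open-source project, Hadoop, including its complex MapReduce architecture, and is not analyzed here.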
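I have not traced the exact loop inside Hadoop's JobClient, so the sketch below only shows the blocking "submit, then wait until complete" behaviour suggested by the Running job / Job complete lines in the logs. SimpleJob and JobRunner are hypothetical names, not Hadoop classes, and failing with an IOException is an assumption of the sketch.

```java
import java.io.IOException;

// Hypothetical stand-in for a submitted job; not Hadoop's RunningJob interface.
interface SimpleJob {
    String id();
    boolean isComplete();
    boolean isSuccessful();
}

class JobRunner {
    // Blocks until the already-submitted job finishes, polling every pollMillis milliseconds.
    static void runJob(SimpleJob job, long pollMillis) throws IOException, InterruptedException {
        System.out.println("Running job: " + job.id());
        while (!job.isComplete()) {
            Thread.sleep(pollMillis);   // wait before asking again
        }
        System.out.println("Job complete: " + job.id());
        if (!job.isSuccessful()) {
            throw new IOException("Job failed: " + job.id());
        }
    }
}
```

The point is that the caller (Injector here) only continues after the job has finished, which is why each step's log ends with the job counters before the next step starts.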

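Looking at submitJob: it first obtains a job id. After configureCommandLineOptions runs, a system folder is created under the temporary folder above, with a job_local_0001 folder beneath it. writeSplitsFile then creates a job.split file under job_local_0001, and writeXml writes job.xml. Finally, jobSubmitClient.submitJob formally submits the whole job. The log is as follows: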

2009-05-08 15:41:36,640 INFO Injector - Injector: starting

2009-05-08 15:41:37,031 INFO Injector - Injector: crawlDb: 20090508/crawldb

2009-05-08 15:41:37,781 INFO Injector - Injector: urlDir: urls

2009-05-08 15:52:41,734 INFO Injector - Injector: Converting injected urls to crawl db entries.

2009-05-08 15:56:22,203 INFO JvmMetrics - Initializing JVM Metrics with processName=JobTracker, sessionId=

2009-05-08 16:08:20,796 WARN JobClient - Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

2009-05-08 16:08:20,984 WARN JobClient - No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

2009-05-08 16:24:42,593 INFO FileInputFormat - Total input paths to process : 1

2009-05-08 16:38:29,437 INFO FileInputFormat - Total input paths to process : 1

2009-05-08 16:38:29,546 INFO MapTask - numReduceTasks: 1

2009-05-08 16:38:29,562 INFO MapTask - io.sort.mb = 100

2009-05-08 16:38:29,687 INFO MapTask - data buffer = 79691776/99614720

2009-05-08 16:38:29,687 INFO MapTask - record buffer = 262144/327680

2009-05-08 16:38:29,718 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

2009-05-08 16:38:29,921 INFO PluginRepository - Plugin Auto-activation mode: [true]

2009-05-08 16:38:29,921 INFO PluginRepository - Registered Plugins:

2009-05-08 16:38:29,921 INFO PluginRepository - the nutch core extension points (nutch-extensionpoints)

2009-05-08 16:38:29,921 INFO PluginRepository - Basic Query Filter (query-basic)

2009-05-08 16:38:29,921 INFO PluginRepository - Basic URL Normalizer (urlnormalizer-basic)

2009-05-08 16:38:29,921 INFO PluginRepository - Basic Indexing Filter (index-basic)

2009-05-08 16:38:29,921 INFO PluginRepository - Html Parse Plug-in (parse-html)

2009-05-08 16:38:29,921 INFO PluginRepository - Site Query Filter (query-site)

2009-05-08 16:38:29,921 INFO PluginRepository - Basic Summarizer Plug-in (summary-basic)

2009-05-08 16:38:29,921 INFO PluginRepository - HTTP Framework (lib-http)

2009-05-08 16:38:29,921 INFO PluginRepository - Text Parse Plug-in (parse-text)

2009-05-08 16:38:29,921 INFO PluginRepository - Pass-through URL Normalizer (urlnormalizer-pass)

2009-05-08 16:38:29,921 INFO PluginRepository - Regex URL Filter (urlfilter-regex)

2009-05-08 16:38:29,921 INFO PluginRepository - Http Protocol Plug-in (protocol-http)

2009-05-08 16:38:29,921 INFO PluginRepository - XML Response Writer Plug-in (response-xml)

2009-05-08 16:38:29,921 INFO PluginRepository - Regex URL Normalizer (urlnormalizer-regex)

2009-05-08 16:38:29,921 INFO PluginRepository - OPIC Scoring Plug-in (scoring-opic)

2009-05-08 16:38:29,921 INFO PluginRepository - CyberNeko HTML Parser (lib-nekohtml)

2009-05-08 16:38:29,921 INFO PluginRepository - Anchor Indexing Filter (index-anchor)

2009-05-08 16:38:29,921 INFO PluginRepository - JavaScript Parser (parse-js)

2009-05-08 16:38:29,921 INFO PluginRepository - URL Query Filter (query-url)

2009-05-08 16:38:29,921 INFO PluginRepository - Regex URL Filter Framework (lib-regex-filter)

2009-05-08 16:38:29,921 INFO PluginRepository - JSON Response Writer Plug-in (response-json)

2009-05-08 16:38:29,921 INFO PluginRepository - Registered Extension-Points:

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch Summarizer (org.apache.nutch.searcher.Summarizer)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch Protocol (org.apache.nutch.protocol.Protocol)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch Analysis (org.apache.nutch.analysis.NutchAnalyzer)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch Field Filter (org.apache.nutch.indexer.field.FieldFilter)

2009-05-08 16:38:29,921 INFO PluginRepository - HTML Parse Filter (org.apache.nutch.parse.HtmlParseFilter)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch Query Filter (org.apache.nutch.searcher.QueryFilter)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch Search Results Response Writer (org.apache.nutch.searcher.response.ResponseWriter)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch URL Normalizer (org.apache.nutch.net.URLNormalizer)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch URL Filter (org.apache.nutch.net.URLFilter)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch Online Search Results Clustering Plugin (org.apache.nutch.clustering.OnlineClusterer)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch Indexing Filter (org.apache.nutch.indexer.IndexingFilter)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch Content Parser (org.apache.nutch.parse.Parser)

2009-05-08 16:38:29,921 INFO PluginRepository - Nutch Scoring (org.apache.nutch.scoring.ScoringFilter)

2009-05-08 16:38:29,921 INFO PluginRepository - Ontology Model Loader (org.apache.nutch.ontology.Ontology)

2009-05-08 16:38:29,968 INFO Configuration - found resource crawl-urlfilter.txt at file:/D:/work/workspace/nutch_crawl/bin/crawl-urlfilter.txt

2009-05-08 16:38:29,984 WARN RegexURLNormalizer - can't find rules for scope 'inject', using default

2009-05-08 16:38:29,984 INFO MapTask - Starting flush of map output

2009-05-08 16:38:30,203 INFO MapTask - Finished spill 0

2009-05-08 16:38:30,203 INFO TaskRunner - Task:attempt_local_0001_m_000000_0 is done. And is in the process of commiting

2009-05-08 16:38:30,218 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/urls/site.txt:0+19

2009-05-08 16:38:30,218 INFO TaskRunner - Task 'attempt_local_0001_m_000000_0' done.

2009-05-08 16:38:30,234 INFO LocalJobRunner -

2009-05-08 16:38:30,250 INFO Merger - Merging 1 sorted segments

2009-05-08 16:38:30,265 INFO Merger - Down to the last merge-pass, with 1 segments left of total size: 53 bytes

2009-05-08 16:38:30,265 INFO LocalJobRunner -

2009-05-08 16:38:30,390 INFO TaskRunner - Task:attempt_local_0001_r_000000_0 is done. And is in the process of commiting

2009-05-08 16:38:30,390 INFO LocalJobRunner -

2009-05-08 16:38:30,390 INFO TaskRunner - Task attempt_local_0001_r_000000_0 is allowed to commit now

2009-05-08 16:38:30,406 INFO FileOutputCommitter - Saved output of task 'attempt_local_0001_r_000000_0' to file:/tmp/hadoop-Administrator/mapred/temp/inject-temp-474192304

2009-05-08 16:38:30,406 INFO LocalJobRunner - reduce > reduce

2009-05-08 16:38:30,406 INFO TaskRunner - Task 'attempt_local_0001_r_000000_0' done.

After execution, the returned running value is as follows:

Job: job_local_0001

file: file:/tmp/hadoop-Administrator/mapred/system/job_local_0001/job.xml

tracking URL: http://localhost:8080/

2009-05-08 16:47:14,093 INFO JobClient - Running job: job_local_0001

2009-05-08 16:49:51,859 INFO JobClient - Job complete: job_local_0001

2009-05-08 16:51:36,062 INFO JobClient - Counters: 11

2009-05-08 16:51:36,062 INFO JobClient - File Systems

2009-05-08 16:51:36,062 INFO JobClient - Local bytes read=51591

2009-05-08 16:51:36,062 INFO JobClient - Local bytes written=104337

2009-05-08 16:51:36,062 INFO JobClient - Map-Reduce Framework

2009-05-08 16:51:36,062 INFO JobClient - Reduce input groups=1

2009-05-08 16:51:36,062 INFO JobClient - Combine output records=0

2009-05-08 16:51:36,062 INFO JobClient - Map input records=1

2009-05-08 16:51:36,062 INFO JobClient - Reduce output records=1

2009-05-08 16:51:36,062 INFO JobClient - Map output bytes=49

2009-05-08 16:51:36,062 INFO JobClient - Map input bytes=19

2009-05-08 16:51:36,062 INFO JobClient - Combine input records=0

2009-05-08 16:51:36,062 INFO JobClient - Map output records=1

2009-05-08 16:51:36,062 INFO JobClient - Reduce input records=1
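The job and task names in these logs follow a regular pattern in local mode. The helper below simply reproduces the strings seen here (job_local_0001, attempt_local_0001_m_000000_0 and the job.xml path). It assumes that /tmp/hadoop-<user> is Hadoop's default temporary directory (hadoop.tmp.dir), and LocalJobPaths is a hypothetical name.

```java
import java.util.Locale;

// Hypothetical helper reproducing the local-mode names that appear in the logs.
class LocalJobPaths {
    // e.g. job_local_0001: the job number is zero-padded to four digits.
    static String jobId(int n) {
        return String.format(Locale.ROOT, "job_local_%04d", n);
    }

    // e.g. attempt_local_0001_m_000000_0 (map) or attempt_local_0001_r_000000_0 (reduce).
    static String attemptId(int job, boolean map, int task, int attempt) {
        return String.format(Locale.ROOT, "attempt_local_%04d_%s_%06d_%d",
                             job, map ? "m" : "r", task, attempt);
    }

    // /tmp/hadoop-<user>/mapred/system/<jobId>/<fileName>, where fileName is job.split or job.xml.
    static String jobFile(String user, int n, String fileName) {
        return "/tmp/hadoop-" + user + "/mapred/system/" + jobId(n) + "/" + fileName;
    }
}
```

For instance, jobFile("Administrator", 1, "job.xml") gives the file path printed in the running value above.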

This completes the first runJob call.

Summary: to be written.

Next, the crawldb folder is created, and the injected urls are merged into it.

JobClient.runJob(mergeJob);

CrawlDb.install(mergeJob, crawlDb);

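This process first creates a job_local_0002 directory under the temporary folder mentioned earlier, and, as before, job.split and job.xml are generated. It then creates the crawldb and finally deletes the files under the temp folder.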
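The crawldb creation step can be pictured as a directory swap: the merge job writes its output to a randomly named directory (crawldb/1896567745 in the log below), which then becomes crawldb/current, the directory that the generate step later reads (crawldb/current/part-00000). The sketch models this on the local file system with java.nio. Keeping the previous version as crawldb/old until the swap finishes is my assumption about how CrawlDb.install behaves, lock handling is left out, and CrawlDbInstall is a hypothetical name.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.stream.Stream;

// Hypothetical local-filesystem sketch: turn a job's output directory into crawldb/current.
class CrawlDbInstall {
    static void install(Path newDb, Path crawlDb) throws IOException {
        Path current = crawlDb.resolve("current");
        Path old = crawlDb.resolve("old");
        Files.createDirectories(crawlDb);
        if (Files.exists(current)) {
            deleteRecursively(old);      // drop any leftover backup
            Files.move(current, old);    // keep the previous db until the swap succeeds
        }
        Files.move(newDb, current);      // the job output becomes the live db
        deleteRecursively(old);          // backup no longer needed
    }

    static void deleteRecursively(Path dir) throws IOException {
        if (!Files.exists(dir)) return;
        try (Stream<Path> walk = Files.walk(dir)) {
            // reverse order deletes children before their parent directories
            for (Path p : (Iterable<Path>) walk.sorted(Comparator.reverseOrder())::iterator) {
                Files.delete(p);
            }
        }
    }
}
```

The same swap happens again after the update step, whose output is first saved to crawldb/132216774.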

This ends the inject process. The last part of the log is:

2009-05-08 17:03:57,250 INFO Injector - Injector: Merging injected urls into crawl db.

2009-05-08 17:10:01,015 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-08 17:10:15,953 WARN JobClient - Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

2009-05-08 17:10:16,156 WARN JobClient - No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

2009-05-08 17:12:15,296 INFO FileInputFormat - Total input paths to process : 1

2009-05-08 17:13:40,296 INFO FileInputFormat - Total input paths to process : 1

2009-05-08 17:13:40,406 INFO MapTask - numReduceTasks: 1

2009-05-08 17:13:40,406 INFO MapTask - io.sort.mb = 100

2009-05-08 17:13:40,515 INFO MapTask - data buffer = 79691776/99614720

2009-05-08 17:13:40,515 INFO MapTask - record buffer = 262144/327680

2009-05-08 17:13:40,546 INFO MapTask - Starting flush of map output

2009-05-08 17:13:40,765 INFO MapTask - Finished spill 0

2009-05-08 17:13:40,765 INFO TaskRunner - Task:attempt_local_0002_m_000000_0 is done. And is in the process of commiting

2009-05-08 17:13:40,765 INFO LocalJobRunner - file:/tmp/hadoop-Administrator/mapred/temp/inject-temp-474192304/part-00000:0+143

2009-05-08 17:13:40,765 INFO TaskRunner - Task 'attempt_local_0002_m_000000_0' done.

2009-05-08 17:13:40,796 INFO LocalJobRunner -

2009-05-08 17:13:40,796 INFO Merger - Merging 1 sorted segments

2009-05-08 17:13:40,796 INFO Merger - Down to the last merge-pass, with 1 segments left of total size: 53 bytes

2009-05-08 17:13:40,796 INFO LocalJobRunner -

2009-05-08 17:13:40,906 WARN NativeCodeLoader - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2009-05-08 17:13:40,906 INFO CodecPool - Got brand-new compressor

2009-05-08 17:13:40,906 INFO TaskRunner - Task:attempt_local_0002_r_000000_0 is done. And is in the process of commiting

2009-05-08 17:13:40,906 INFO LocalJobRunner -

2009-05-08 17:13:40,906 INFO TaskRunner - Task attempt_local_0002_r_000000_0 is allowed to commit now

2009-05-08 17:13:40,921 INFO FileOutputCommitter - Saved output of task 'attempt_local_0002_r_000000_0' to file:/D:/work/workspace/nutch_crawl/20090508/crawldb/1896567745

2009-05-08 17:13:40,921 INFO LocalJobRunner - reduce > reduce

2009-05-08 17:13:40,937 INFO TaskRunner - Task 'attempt_local_0002_r_000000_0' done.

2009-05-08 17:13:46,781 INFO JobClient - Running job: job_local_0002

2009-05-08 17:14:55,125 INFO JobClient - Job complete: job_local_0002

2009-05-08 17:14:59,328 INFO JobClient - Counters: 11

2009-05-08 17:14:59,328 INFO JobClient - File Systems

2009-05-08 17:14:59,328 INFO JobClient - Local bytes read=103875

2009-05-08 17:14:59,328 INFO JobClient - Local bytes written=209385

2009-05-08 17:14:59,328 INFO JobClient - Map-Reduce Framework

2009-05-08 17:14:59,328 INFO JobClient - Reduce input groups=1

2009-05-08 17:14:59,328 INFO JobClient - Combine output records=0

2009-05-08 17:14:59,328 INFO JobClient - Map input records=1

2009-05-08 17:14:59,328 INFO JobClient - Reduce output records=1

2009-05-08 17:14:59,328 INFO JobClient - Map output bytes=49

2009-05-08 17:14:59,328 INFO JobClient - Map input bytes=57

2009-05-08 17:14:59,328 INFO JobClient - Combine input records=0

2009-05-08 17:14:59,328 INFO JobClient - Map output records=1

2009-05-08 17:14:59,328 INFO JobClient - Reduce input records=1

2009-05-08 17:17:30,984 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-08 17:20:02,390 INFO Injector - Injector: done

1.3.2 The generate method

Description: creates a new segment from the crawl database and then generates the list of URLs to download (the fetchlist) from it.

LockUtil.createLockFile(fs, lock, force);

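Running the method above first creates a .locked file in the crawldb directory. Presumably it prevents the crawldb data from being modified while the job runs; its actual role remains to be verified.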
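To illustrate the guess above, here is a sketch of how such a lock file can stop two jobs from working on the same crawldb at once. It uses the local file system instead of Hadoop's FileSystem, treating force as "reuse an existing lock" is my assumption based only on the parameter name in LockUtil.createLockFile(fs, lock, force), and CrawlDbLock is a hypothetical name.

```java
import java.io.IOException;
import java.nio.file.FileAlreadyExistsException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical sketch of a crawldb/.locked style lock file on the local file system.
class CrawlDbLock {
    // Creates the lock; if it already exists, fail unless force is true.
    static void createLockFile(Path lock, boolean force) throws IOException {
        Files.createDirectories(lock.getParent());
        try {
            Files.createFile(lock);              // atomic: fails if the path already exists
        } catch (FileAlreadyExistsException e) {
            if (!force) {
                throw new IOException("lock file " + lock + " already exists.", e);
            }
            if (Files.isDirectory(lock)) {
                throw new IOException("lock file " + lock + " is a directory.", e);
            }
            // force == true: keep using the existing lock file
        }
    }

    // Returns true if a lock file was actually removed.
    static boolean removeLockFile(Path lock) throws IOException {
        return Files.deleteIfExists(lock);
    }
}
```

A second job calling createLockFile(lock, false) on the same crawldb gets an IOException until the first job removes the lock.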

The rest of the process is much the same as above, so the earlier steps can serve as a reference. The log is:

2009-05-08 17:37:18,218 INFO Generator - Generator: Selecting best-scoring urls due for fetch.

2009-05-08 17:37:18,625 INFO Generator - Generator: starting

2009-05-08 17:37:18,937 INFO Generator - Generator: segment: 20090508/segments/20090508173137

2009-05-08 17:37:19,468 INFO Generator - Generator: filtering: true

2009-05-08 17:37:22,312 INFO Generator - Generator: topN: 50

2009-05-08 17:37:51,203 INFO Generator - Generator: jobtracker is 'local', generating exactly one partition.

2009-05-08 17:39:57,609 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-08 17:40:05,234 WARN JobClient - Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

2009-05-08 17:40:05,406 WARN JobClient - No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

2009-05-08 17:40:05,437 INFO FileInputFormat - Total input paths to process : 1

2009-05-08 17:40:06,062 INFO FileInputFormat - Total input paths to process : 1

2009-05-08 17:40:06,109 INFO MapTask - numReduceTasks: 1

Plugin loading log omitted…

2009-05-08 17:40:06,312 INFO Configuration - found resource crawl-urlfilter.txt at file:/D:/work/workspace/nutch_crawl/bin/crawl-urlfilter.txt

2009-05-08 17:40:06,343 INFO FetchScheduleFactory - Using FetchSchedule impl: org.apache.nutch.crawl.DefaultFetchSchedule

2009-05-08 17:40:06,343 INFO AbstractFetchSchedule - defaultInterval=2592000

2009-05-08 17:40:06,343 INFO AbstractFetchSchedule - maxInterval=7776000

2009-05-08 17:40:06,343 INFO MapTask - io.sort.mb = 100

2009-05-08 17:40:06,437 INFO MapTask - data buffer = 79691776/99614720

2009-05-08 17:40:06,437 INFO MapTask - record buffer = 262144/327680

2009-05-08 17:40:06,453 WARN RegexURLNormalizer - can't find rules for scope 'partition', using default

2009-05-08 17:40:06,453 INFO MapTask - Starting flush of map output

2009-05-08 17:40:06,625 INFO MapTask - Finished spill 0

2009-05-08 17:40:06,640 INFO TaskRunner - Task:attempt_local_0003_m_000000_0 is done. And is in the process of commiting

2009-05-08 17:40:06,640 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/20090508/crawldb/current/part-00000/data:0+143

2009-05-08 17:40:06,640 INFO TaskRunner - Task 'attempt_local_0003_m_000000_0' done.

2009-05-08 17:40:06,656 INFO LocalJobRunner -

2009-05-08 17:40:06,656 INFO Merger - Merging 1 sorted segments

2009-05-08 17:40:06,656 INFO Merger - Down to the last merge-pass, with 1 segments left of total size: 78 bytes

2009-05-08 17:40:06,656 INFO LocalJobRunner -

Plugin loading log omitted…

2009-05-08 17:40:06,875 INFO Configuration - found resource crawl-urlfilter.txt at file:/D:/work/workspace/nutch_crawl/bin/crawl-urlfilter.txt

2009-05-08 17:40:06,906 INFO FetchScheduleFactory - Using FetchSchedule impl: org.apache.nutch.crawl.DefaultFetchSchedule

2009-05-08 17:40:06,906 INFO AbstractFetchSchedule - defaultInterval=2592000

2009-05-08 17:40:06,906 INFO AbstractFetchSchedule - maxInterval=7776000

2009-05-08 17:40:06,906 WARN RegexURLNormalizer - can't find rules for scope 'generate_host_count', using default

2009-05-08 17:40:06,906 INFO TaskRunner - Task:attempt_local_0003_r_000000_0 is done. And is in the process of commiting

2009-05-08 17:40:06,906 INFO LocalJobRunner -

2009-05-08 17:40:06,906 INFO TaskRunner - Task attempt_local_0003_r_000000_0 is allowed to commit now

2009-05-08 17:40:06,906 INFO FileOutputCommitter - Saved output of task 'attempt_local_0003_r_000000_0' to file:/tmp/hadoop-Administrator/mapred/temp/generate-temp-1241774893937

2009-05-08 17:40:06,921 INFO LocalJobRunner - reduce > reduce

2009-05-08 17:40:06,921 INFO TaskRunner - Task 'attempt_local_0003_r_000000_0' done.

2009-05-08 17:40:21,468 INFO JobClient - Running job: job_local_0003

2009-05-08 17:40:31,671 INFO JobClient - Job complete: job_local_0003

2009-05-08 17:40:34,046 INFO JobClient - Counters: 11

2009-05-08 17:40:34,046 INFO JobClient - File Systems

2009-05-08 17:40:34,046 INFO JobClient - Local bytes read=157400

2009-05-08 17:40:34,046 INFO JobClient - Local bytes written=316982

2009-05-08 17:40:34,046 INFO JobClient - Map-Reduce Framework

2009-05-08 17:40:34,046 INFO JobClient - Reduce input groups=1

2009-05-08 17:40:34,046 INFO JobClient - Combine output records=0

2009-05-08 17:40:34,046 INFO JobClient - Map input records=1

2009-05-08 17:40:34,046 INFO JobClient - Reduce output records=1

2009-05-08 17:40:34,046 INFO JobClient - Map output bytes=74

2009-05-08 17:40:34,046 INFO JobClient - Map input bytes=57

2009-05-08 17:40:34,046 INFO JobClient - Combine input records=0

2009-05-08 17:40:34,046 INFO JobClient - Map output records=1

2009-05-08 17:40:34,046 INFO JobClient - Reduce input records=1

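Next, submitJob is again called to submit the whole generate process, which creates the segments directory, deletes the temporary files, handles the lock file, and so on. At this point only one folder, crawl_generate, has been created under segments.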
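A simplified model of what "Selecting best-scoring urls due for fetch" with topN: 50 means: keep the entries whose fetch time has arrived, sort them by score from high to low, and keep at most topN. The interval value comes from the log (defaultInterval=2592000 seconds is 30 days; maxInterval=7776000 seconds is 90 days). Everything else is a hypothetical sketch: the real Generator also applies URL filters and normalizers and partitions the fetchlist, and GeneratorSelect is not a Nutch class.

```java
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical, simplified model of the generator's URL selection.
class GeneratorSelect {
    // defaultInterval from the log, in seconds: 2592000 s = 30 days.
    static final long DEFAULT_INTERVAL_SEC = 2_592_000L;

    static final class Entry {
        final String url;
        final float score;
        final long fetchTimeMillis;   // earliest time the URL is due for (re)fetching
        Entry(String url, float score, long fetchTimeMillis) {
            this.url = url;
            this.score = score;
            this.fetchTimeMillis = fetchTimeMillis;
        }
    }

    // Keeps entries due at `now`, sorts by score descending and returns at most topN URLs.
    static List<String> select(List<Entry> db, long now, int topN) {
        return db.stream()
                 .filter(e -> e.fetchTimeMillis <= now)
                 .sorted(Comparator.comparingDouble((Entry e) -> e.score).reversed())
                 .limit(topN)
                 .map(e -> e.url)
                 .collect(Collectors.toList());
    }

    // Simplified rescheduling: after a fetch, the URL is next due one default interval later.
    static long nextFetchTime(long fetchedAtMillis) {
        return fetchedAtMillis + DEFAULT_INTERVAL_SEC * 1000L;
    }
}
```

With a single injected URL, as in this crawl, the selection simply returns that URL, which matches Map input records=1 and Reduce output records=1 above.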

1.3.3 The fetch method

Description: performs the actual downloading.

2009-05-11 09:45:13,984 WARN Fetcher - Fetcher: Your 'http.agent.name' value should be listed first in 'http.robots.agents' property.

2009-05-11 09:45:34,796 INFO Fetcher - Fetcher: starting

2009-05-11 09:45:35,375 INFO Fetcher - Fetcher: segment: 20090508/segments/20090511094102

2009-05-11 09:49:23,984 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-11 09:49:58,046 WARN JobClient - Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

2009-05-11 09:49:58,234 WARN JobClient - No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

2009-05-11 09:49:58,265 INFO FileInputFormat - Total input paths to process : 1

2009-05-11 09:49:58,859 INFO FileInputFormat - Total input paths to process : 1

2009-05-11 09:49:58,906 INFO MapTask - numReduceTasks: 1

2009-05-11 09:49:58,906 INFO MapTask - io.sort.mb = 100

2009-05-11 09:49:59,015 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 09:49:59,015 INFO MapTask - record buffer = 262144/327680

2009-05-11 09:49:59,140 INFO Fetcher - Fetcher: threads: 5

2009-05-11 09:49:59,140 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

2009-05-11 09:49:59,250 INFO Fetcher - QueueFeeder finished: total 1 records.

Plugin loading log omitted…

2009-05-11 09:49:59,312 INFO Configuration - found resource crawl-urlfilter.txt at file:/D:/work/workspace/nutch_crawl/bin/crawl-urlfilter.txt

2009-05-11 09:49:59,328 INFO Configuration - found resource parse-plugins.xml at file:/D:/work/workspace/nutch_crawl/bin/parse-plugins.xml

2009-05-11 09:49:59,359 INFO Fetcher - fetching http://www.163.com/

2009-05-11 09:49:59,375 INFO Fetcher - -finishing thread FetcherThread, activeThreads=4

2009-05-11 09:49:59,375 INFO Fetcher - -finishing thread FetcherThread, activeThreads=3

2009-05-11 09:49:59,375 INFO Fetcher - -finishing thread FetcherThread, activeThreads=2

2009-05-11 09:49:59,375 INFO Fetcher - -finishing thread FetcherThread, activeThreads=1

2009-05-11 09:49:59,421 INFO Http - http.proxy.host = null

2009-05-11 09:49:59,421 INFO Http - http.proxy.port = 8080

2009-05-11 09:49:59,421 INFO Http - http.timeout = 10000

2009-05-11 09:49:59,421 INFO Http - http.content.limit = 65536

2009-05-11 09:49:59,421 INFO Http - http.agent = nutch/Nutch-1.0 (chinahui; http://www.163.com; zhonghui@028wy.com)

2009-05-11 09:49:59,421 INFO Http - protocol.plugin.check.blocking = false

2009-05-11 09:49:59,421 INFO Http - protocol.plugin.check.robots = false

2009-05-11 09:50:00,109 INFO Configuration - found resource tika-mimetypes.xml at file:/D:/work/workspace/nutch_crawl/bin/tika-mimetypes.xml

2009-05-11 09:50:00,156 WARN ParserFactory - ParserFactory:Plugin: org.apache.nutch.parse.html.HtmlParser mapped to contentType application/xhtml+xml via parse-plugins.xml, but its plugin.xml file does not claim to support contentType: application/xhtml+xml

2009-05-11 09:50:00,375 INFO Fetcher - -activeThreads=1, spinWaiting=0, fetchQueues.totalSize=0

2009-05-11 09:50:00,671 INFO SignatureFactory - Using Signature impl: org.apache.nutch.crawl.MD5Signature

2009-05-11 09:50:00,687 INFO Fetcher - -finishing thread FetcherThread, activeThreads=0

2009-05-11 09:50:01,375 INFO Fetcher - -activeThreads=0, spinWaiting=0, fetchQueues.totalSize=0

2009-05-11 09:50:01,375 INFO Fetcher - -activeThreads=0

2009-05-11 09:50:01,375 INFO MapTask - Starting flush of map output

2009-05-11 09:50:01,578 INFO MapTask - Finished spill 0

2009-05-11 09:50:01,578 INFO TaskRunner - Task:attempt_local_0005_m_000000_0 is done. And is in the process of commiting

2009-05-11 09:50:01,578 INFO LocalJobRunner - 0 threads, 1 pages, 0 errors, 0.5 pages/s, 256 kb/s,

2009-05-11 09:50:01,578 INFO TaskRunner - Task 'attempt_local_0005_m_000000_0' done.

2009-05-11 09:50:01,593 INFO LocalJobRunner -

2009-05-11 09:50:01,593 INFO Merger - Merging 1 sorted segments

2009-05-11 09:50:01,593 INFO Merger - Down to the last merge-pass, with 1 segments left of total size: 72558 bytes

2009-05-11 09:50:01,593 INFO LocalJobRunner -

2009-05-11 09:50:01,671 INFO CodecPool - Got brand-new compressor

2009-05-11 09:50:01,734 INFO CodecPool - Got brand-new compressor

2009-05-11 09:50:01,765 INFO CodecPool - Got brand-new compressor

2009-05-11 09:50:01,765 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

Plugin loading log omitted…

2009-05-11 09:50:01,921 INFO Configuration - found resource crawl-urlfilter.txt at file:/D:/work/workspace/nutch_crawl/bin/crawl-urlfilter.txt

2009-05-11 09:50:01,984 INFO CodecPool - Got brand-new compressor

2009-05-11 09:50:02,015 INFO CodecPool - Got brand-new compressor

2009-05-11 09:50:02,062 INFO CodecPool - Got brand-new compressor

2009-05-11 09:50:02,093 INFO CodecPool - Got brand-new compressor

2009-05-11 09:50:02,125 INFO CodecPool - Got brand-new compressor

2009-05-11 09:50:02,140 WARN RegexURLNormalizer - can't find rules for scope 'outlink', using default

2009-05-11 09:50:02,171 INFO TaskRunner - Task:attempt_local_0005_r_000000_0 is done. And is in the process of commiting

2009-05-11 09:50:02,171 INFO LocalJobRunner - reduce > reduce

2009-05-11 09:50:02,187 INFO TaskRunner - Task 'attempt_local_0005_r_000000_0' done.

2009-05-11 09:50:44,062 INFO JobClient - Running job: job_local_0005

2009-05-11 09:51:31,328 INFO JobClient - Job complete: job_local_0005

2009-05-11 09:51:32,984 INFO JobClient - Counters: 11

2009-05-11 09:51:33,000 INFO JobClient - File Systems

2009-05-11 09:51:33,000 INFO JobClient - Local bytes read=336424

2009-05-11 09:51:33,000 INFO JobClient - Local bytes written=700394

2009-05-11 09:51:33,000 INFO JobClient - Map-Reduce Framework

2009-05-11 09:51:33,000 INFO JobClient - Reduce input groups=1

2009-05-11 09:51:33,000 INFO JobClient - Combine output records=0

2009-05-11 09:51:33,000 INFO JobClient - Map input records=1

2009-05-11 09:51:33,000 INFO JobClient - Reduce output records=3

2009-05-11 09:51:33,000 INFO JobClient - Map output bytes=72545

2009-05-11 09:51:33,000 INFO JobClient - Map input bytes=78

2009-05-11 09:51:33,000 INFO JobClient - Combine input records=0

2009-05-11 09:51:33,000 INFO JobClient - Map output records=3

2009-05-11 09:51:33,000 INFO JobClient - Reduce input records=3

2009-05-11 09:51:47,750 INFO Fetcher - Fetcher: done
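The thread messages in this log (the QueueFeeder finishing after 1 record, then five FetcherThreads exiting one by one as activeThreads counts down) follow a producer/consumer pattern. The sketch below shows that pattern with a single shared queue. Nutch's Fetcher actually keeps per-host queues and enforces politeness delays, and the fetch function here is a hypothetical stand-in for the protocol plugin (protocol-http); MiniFetcher is not a Nutch class.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

// Hypothetical producer/consumer sketch: one feeder fills a queue, `threads` workers drain it.
class MiniFetcher {
    // `fetch` must return a non-null result for every URL.
    static Map<String, String> fetchAll(List<String> urls, int threads, Function<String, String> fetch) {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        Map<String, String> results = new ConcurrentHashMap<>();
        AtomicInteger activeThreads = new AtomicInteger(threads);
        CountDownLatch feederDone = new CountDownLatch(1);

        Thread feeder = new Thread(() -> {          // plays the role of the QueueFeeder
            queue.addAll(urls);
            System.out.println("QueueFeeder finished: total " + urls.size() + " records.");
            feederDone.countDown();
        });
        feeder.start();

        List<Thread> workers = new ArrayList<>();
        for (int i = 0; i < threads; i++) {
            Thread worker = new Thread(() -> {
                try {
                    while (true) {
                        String url = queue.poll(100, TimeUnit.MILLISECONDS);
                        if (url == null) {
                            // exit only when the feeder is done and nothing is left to take
                            if (feederDone.getCount() == 0 && queue.isEmpty()) break;
                            continue;
                        }
                        results.put(url, fetch.apply(url));
                    }
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    System.out.println("-finishing thread, activeThreads=" + activeThreads.decrementAndGet());
                }
            });
            workers.add(worker);
            worker.start();
        }
        joinAll(workers);
        joinAll(List.of(feeder));
        return results;
    }

    private static void joinAll(List<Thread> threads) {
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for fetcher threads", e);
            }
        }
    }
}
```

With one URL and five threads, four workers find nothing to do and exit almost immediately, which resembles the four early "-finishing thread" lines right after "fetching http://www.163.com/".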

1.3.4 The parse method

Description: parses the content of the downloaded pages.
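In this run parsing appears to have happened during fetching, since no separate parse log is shown and the update step below reads crawl_parse from the segment. To show roughly what ends up in parse_data (outlinks) and parse_text (text), here is a deliberately crude regex-based sketch. It is not how parse-html works (that plugin builds a DOM, and the CyberNeko HTML Parser appears in the plugin list), and OutlinkExtractor is a hypothetical name.

```java
import java.net.URI;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Crude, regex-based illustration of outlink and text extraction; not Nutch's parser.
class OutlinkExtractor {
    private static final Pattern HREF =
        Pattern.compile("<a\\s[^>]*href\\s*=\\s*[\"']([^\"']+)[\"']", Pattern.CASE_INSENSITIVE);

    // Returns outlinks resolved against the page URL; malformed hrefs are skipped.
    static List<String> outlinks(String pageUrl, String html) {
        URI base = URI.create(pageUrl);
        List<String> links = new ArrayList<>();
        Matcher m = HREF.matcher(html);
        while (m.find()) {
            try {
                links.add(base.resolve(m.group(1).trim()).toString());
            } catch (IllegalArgumentException e) {
                // href is not a valid URI reference: ignore it
            }
        }
        return links;
    }

    // Very rough text extraction: replace tags with spaces and collapse whitespace.
    static String text(String html) {
        return html.replaceAll("(?s)<[^>]*>", " ").replaceAll("\\s+", " ").trim();
    }
}
```

For a page at http://www.163.com/, a relative link such as /news/ resolves to http://www.163.com/news/.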

1.3.5 The update method

Description: adds the discovered child links to the crawl database.

2009-05-11 10:04:20,890 INFO CrawlDb - CrawlDb update: starting

2009-05-11 10:04:22,500 INFO CrawlDb - CrawlDb update: db: 20090508/crawldb

2009-05-11 10:05:53,593 INFO CrawlDb - CrawlDb update: segments: [20090508/segments/20090511094102]

2009-05-11 10:06:06,031 INFO CrawlDb - CrawlDb update: additions allowed: true

2009-05-11 10:06:07,296 INFO CrawlDb - CrawlDb update: URL normalizing: true

2009-05-11 10:06:09,031 INFO CrawlDb - CrawlDb update: URL filtering: true

2009-05-11 10:07:05,125 INFO CrawlDb - CrawlDb update: Merging segment data into db.

2009-05-11 10:08:11,031 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-11 10:09:00,187 WARN JobClient - Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

2009-05-11 10:09:00,375 WARN JobClient - No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

2009-05-11 10:10:03,531 INFO FileInputFormat - Total input paths to process : 3

2009-05-11 10:16:25,125 INFO FileInputFormat - Total input paths to process : 3

2009-05-11 10:16:25,203 INFO MapTask - numReduceTasks: 1

2009-05-11 10:16:25,203 INFO MapTask - io.sort.mb = 100

2009-05-11 10:16:25,343 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:16:25,343 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:16:25,343 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

Plugin loading log omitted…

2009-05-11 10:16:25,750 INFO Configuration - found resource crawl-urlfilter.txt at file:/D:/work/workspace/nutch_crawl/bin/crawl-urlfilter.txt

2009-05-11 10:16:25,796 WARN RegexURLNormalizer - can't find rules for scope 'crawldb', using default

2009-05-11 10:16:25,796 INFO MapTask - Starting flush of map output

2009-05-11 10:16:25,984 INFO MapTask - Finished spill 0

2009-05-11 10:16:26,000 INFO TaskRunner - Task:attempt_local_0006_m_000000_0 is done. And is in the process of commiting

2009-05-11 10:16:26,000 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/20090508/crawldb/current/part-00000/data:0+143

2009-05-11 10:16:26,000 INFO TaskRunner - Task 'attempt_local_0006_m_000000_0' done.

2009-05-11 10:16:26,031 INFO MapTask - numReduceTasks: 1

2009-05-11 10:16:26,031 INFO MapTask - io.sort.mb = 100

2009-05-11 10:16:26,140 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:16:26,140 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:16:26,156 INFO CodecPool - Got brand-new decompressor

2009-05-11 10:16:26,171 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

Plugin loading log omitted…

2009-05-11 10:16:26,687 INFO Configuration - found resource crawl-urlfilter.txt at file:/D:/work/workspace/nutch_crawl/bin/crawl-urlfilter.txt

2009-05-11 10:16:26,718 WARN RegexURLNormalizer - can't find rules for scope 'crawldb', using default

2009-05-11 10:16:26,734 INFO MapTask - Starting flush of map output

2009-05-11 10:16:26,750 INFO MapTask - Finished spill 0

2009-05-11 10:16:26,750 INFO TaskRunner - Task:attempt_local_0006_m_000002_0 is done. And is in the process of commiting

2009-05-11 10:16:26,750 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/20090508/segments/20090511094102/crawl_parse/part-00000:0+4026

2009-05-11 10:16:26,750 INFO TaskRunner - Task 'attempt_local_0006_m_000002_0' done.

2009-05-11 10:16:26,781 INFO LocalJobRunner -

2009-05-11 10:16:26,781 INFO Merger - Merging 3 sorted segments

2009-05-11 10:16:26,781 INFO Merger - Down to the last merge-pass, with 3 segments left of total size: 3706 bytes

2009-05-11 10:16:26,781 INFO LocalJobRunner -

2009-05-11 10:16:26,875 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

Plugin loading log omitted…

2009-05-11 10:16:27,031 INFO FetchScheduleFactory - Using FetchSchedule impl: org.apache.nutch.crawl.DefaultFetchSchedule

2009-05-11 10:16:27,031 INFO AbstractFetchSchedule - defaultInterval=2592000

2009-05-11 10:16:27,031 INFO AbstractFetchSchedule - maxInterval=7776000

2009-05-11 10:16:27,046 INFO TaskRunner - Task:attempt_local_0006_r_000000_0 is done. And is in the process of commiting

2009-05-11 10:16:27,046 INFO LocalJobRunner -

2009-05-11 10:16:27,046 INFO TaskRunner - Task attempt_local_0006_r_000000_0 is allowed to commit now

2009-05-11 10:16:27,062 INFO FileOutputCommitter - Saved output of task 'attempt_local_0006_r_000000_0' to file:/D:/work/workspace/nutch_crawl/20090508/crawldb/132216774

2009-05-11 10:16:27,062 INFO LocalJobRunner - reduce > reduce

2009-05-11 10:16:27,062 INFO TaskRunner - Task 'attempt_local_0006_r_000000_0' done.

2009-05-11 10:17:43,984 INFO JobClient - Running job: job_local_0006

2009-05-11 10:18:33,671 INFO JobClient - Job complete: job_local_0006

2009-05-11 10:18:35,906 INFO JobClient - Counters: 11

2009-05-11 10:18:35,906 INFO JobClient - File Systems

2009-05-11 10:18:35,906 INFO JobClient - Local bytes read=936164

2009-05-11 10:18:35,906 INFO JobClient - Local bytes written=1678861

2009-05-11 10:18:35,906 INFO JobClient - Map-Reduce Framework

2009-05-11 10:18:35,906 INFO JobClient - Reduce input groups=57

2009-05-11 10:18:35,906 INFO JobClient - Combine output records=0

2009-05-11 10:18:35,906 INFO JobClient - Map input records=63

2009-05-11 10:18:35,906 INFO JobClient - Reduce output records=57

2009-05-11 10:18:35,906 INFO JobClient - Map output bytes=3574

2009-05-11 10:18:35,906 INFO JobClient - Map input bytes=4079

2009-05-11 10:18:35,906 INFO JobClient - Combine input records=0

2009-05-11 10:18:35,906 INFO JobClient - Map output records=63

2009-05-11 10:18:35,906 INFO JobClient - Reduce input records=63

2009-05-11 10:19:48,078 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-11 10:22:51,437 INFO CrawlDb - CrawlDb update: done
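The update job reads three input paths (the log shows crawldb/current and the segment's crawl_parse among them) and groups records by URL: 63 map input records became 57 reduce groups. A heavily simplified model of that merge is sketched below, assuming that a fetch result overrides the stored state and that a newly discovered outlink enters as unfetched when additions are allowed ("additions allowed: true" in the log). The real CrawlDb reducer also merges scores, fetch times and retry counts; CrawlDbUpdate and its Status values are hypothetical.

```java
import java.util.Collection;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical, heavily simplified model of merging a segment's results into crawldb.
class CrawlDbUpdate {
    enum Status { UNFETCHED, FETCHED, GONE }

    // db: current crawldb; fetched: URL -> status from crawl_fetch; outlinks: URLs from crawl_parse.
    static Map<String, Status> update(Map<String, Status> db, Map<String, Status> fetched,
                                      Collection<String> outlinks, boolean additionsAllowed) {
        Map<String, Status> result = new TreeMap<>(db);   // one entry per URL, like reduce groups
        result.putAll(fetched);                          // fetch results override the old state
        if (additionsAllowed) {
            for (String link : outlinks) {
                // new URLs enter as unfetched; URLs already known keep their state
                result.putIfAbsent(link, Status.UNFETCHED);
            }
        }
        return result;
    }
}
```

Duplicate outlinks collapse into a single entry, which is one reason the number of reduce groups is smaller than the number of map input records.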

1.3.6 The invert method

Description: analyzes the link relationships and generates inverted links (inlinks).
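Inverting links means turning each page's list of outlinks into, for every target page, the set of pages that link to it. A minimal in-memory sketch follows. The ignoreInternal flag mirrors what I understand the db.ignore.internal.links property to do (skip links between pages on the same host), which is an assumption, and LinkInverter is a hypothetical name.

```java
import java.net.URI;
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical sketch of link inversion: "page -> outlinks" becomes "page -> inlinks".
class LinkInverter {
    static Map<String, Set<String>> invert(Map<String, List<String>> outlinks, boolean ignoreInternal) {
        Map<String, Set<String>> inlinks = new TreeMap<>();
        for (Map.Entry<String, List<String>> page : outlinks.entrySet()) {
            String from = page.getKey();
            for (String to : page.getValue()) {
                if (ignoreInternal && host(from).equals(host(to))) {
                    continue;   // optionally skip links within the same host
                }
                inlinks.computeIfAbsent(to, k -> new TreeSet<>()).add(from);
            }
        }
        return inlinks;
    }

    private static String host(String url) {
        String h = URI.create(url).getHost();
        return h == null ? "" : h.toLowerCase(Locale.ROOT);
    }
}
```

The result is keyed by the target URL, which is the shape of information a linkdb holds (for example, the anchor texts of incoming links used by index-anchor).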

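When it finishes, the linkdb directory is generated. (The log excerpt that follows is from the CrawlDb update job, job_local_0006, shown in 1.3.5, not from a LinkDb job.)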

2009-05-11 10:26:31,250 INFO LinkDb - LinkDb: starting

2009-05-11 10:26:31,250 INFO LinkDb - LinkDb: linkdb: 20090508/linkdb

2009-05-11 10:26:31,250 INFO LinkDb - LinkDb: URL normalize: true

2009-05-11 10:26:31,250 INFO LinkDb - LinkDb: URL filter: true

2009-05-11 10:26:31,281 INFO LinkDb - LinkDb: adding segment: file:/D:/work/workspace/nutch_crawl/20090508/segments/20090511094102

2009-05-11 10:26:31,281 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-11 10:26:31,296 WARN JobClient - Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

2009-05-11 10:26:31,453 WARN JobClient - No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

2009-05-11 10:26:31,484 INFO FileInputFormat - Total input paths to process : 1

2009-05-11 10:26:32,078 INFO JobClient - Running job: job_local_0007

2009-05-11 10:26:32,078 INFO FileInputFormat - Total input paths to process : 1

2009-05-11 10:26:32,125 INFO MapTask - numReduceTasks: 1

2009-05-11 10:26:32,125 INFO MapTask - io.sort.mb = 100

2009-05-11 10:26:32,234 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:26:32,234 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:26:32,250 INFO MapTask - Starting flush of map output

2009-05-11 10:26:32,437 INFO MapTask - Finished spill 0

2009-05-11 10:26:32,453 INFO TaskRunner - Task:attempt_local_0007_m_000000_0 is done. And is in the process of commiting

2009-05-11 10:26:32,453 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/20090508/segments/20090511094102/parse_data/part-00000/data:0+1382

2009-05-11 10:26:32,453 INFO TaskRunner - Task 'attempt_local_0007_m_000000_0' done.

2009-05-11 10:26:32,468 INFO LocalJobRunner -

2009-05-11 10:26:32,468 INFO Merger - Merging 1 sorted segments

2009-05-11 10:26:32,468 INFO Merger - Down to the last merge-pass, with 1 segments left of total size: 3264 bytes

2009-05-11 10:26:32,468 INFO LocalJobRunner -

2009-05-11 10:26:32,562 INFO TaskRunner - Task:attempt_local_0007_r_000000_0 is done. And is in the process of commiting

2009-05-11 10:26:32,562 INFO LocalJobRunner -

2009-05-11 10:26:32,562 INFO TaskRunner - Task attempt_local_0007_r_000000_0 is allowed to commit now

2009-05-11 10:26:32,578 INFO FileOutputCommitter - Saved output of task 'attempt_local_0007_r_000000_0' to file:/D:/work/workspace/nutch_crawl/linkdb-1900012851

2009-05-11 10:26:32,578 INFO LocalJobRunner - reduce > reduce

2009-05-11 10:26:32,578 INFO TaskRunner - Task 'attempt_local_0007_r_000000_0' done.

2009-05-11 10:26:33,078 INFO JobClient - Job complete: job_local_0007

2009-05-11 10:26:33,078 INFO JobClient - Counters: 11

2009-05-11 10:26:33,078 INFO JobClient - File Systems

2009-05-11 10:26:33,078 INFO JobClient - Local bytes read=535968

2009-05-11 10:26:33,078 INFO JobClient - Local bytes written=965231

2009-05-11 10:26:33,078 INFO JobClient - Map-Reduce Framework

2009-05-11 10:26:33,078 INFO JobClient - Reduce input groups=56

2009-05-11 10:26:33,078 INFO JobClient - Combine output records=56

2009-05-11 10:26:33,078 INFO JobClient - Map input records=1

2009-05-11 10:26:33,078 INFO JobClient - Reduce output records=56

2009-05-11 10:26:33,078 INFO JobClient - Map output bytes=3384

2009-05-11 10:26:33,078 INFO JobClient - Map input bytes=1254

2009-05-11 10:26:33,078 INFO JobClient - Combine input records=60

2009-05-11 10:26:33,078 INFO JobClient - Map output records=60

2009-05-11 10:26:33,078 INFO JobClient - Reduce input records=56

2009-05-11 10:26:33,078 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-11 10:26:33,125 INFO LinkDb - LinkDb: done

1.3.7 index method

Description: builds an index of the page content.

This step generates the indexes directory.
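The index job reads six input paths. The log reports `Total input paths to process : 6`, and the map tasks shown read crawl_parse, parse_data, parse_text, crawldb and linkdb. It then joins these inputs on the URL.

The sketch below is a simplified, in-memory model of that reduce-side join. It rests on an assumption from my reading of Nutch 1.0's IndexerMapReduce: a document is only built when the crawldb entry, the fetch status, and the parsed data and text are all present for a URL.

The `Part` enum, the `reduce`/`index` helpers and the field names are all hypothetical. Real Nutch builds a Lucene document through the indexing filters that appear in the log (BasicIndexingFilter, AnchorIndexingFilter).

```java
import java.util.*;

/** Hypothetical model of the index step's join on URL; not Nutch's IndexerMapReduce API. */
class IndexJoinSketch {

    // Which input a record came from (hypothetical tags for Nutch's record types).
    enum Part { CRAWLDB, FETCH, PARSE_DATA, PARSE_TEXT, INLINKS }

    // Parts that must all be present before a document is built (assumption).
    static final Set<Part> REQUIRED =
        EnumSet.of(Part.CRAWLDB, Part.FETCH, Part.PARSE_DATA, Part.PARSE_TEXT);

    /** Reduce: all parts for one URL in, one document out (null means "skip this URL"). */
    static Map<String, String> reduce(String url, Map<Part, String> parts) {
        if (!parts.keySet().containsAll(REQUIRED)) {
            return null; // e.g. a URL only known from crawldb/linkdb that was never fetched
        }
        Map<String, String> doc = new LinkedHashMap<>();
        doc.put("url", url);
        doc.put("content", parts.get(Part.PARSE_TEXT));
        doc.put("anchor", parts.getOrDefault(Part.INLINKS, "")); // inlinks are optional
        return doc;
    }

    /** Shuffle + reduce: group {url, part, payload} records by URL, then build documents. */
    static List<Map<String, String>> index(List<String[]> records) {
        Map<String, Map<Part, String>> byUrl = new TreeMap<>();
        for (String[] r : records) {
            byUrl.computeIfAbsent(r[0], k -> new EnumMap<Part, String>(Part.class))
                 .put(Part.valueOf(r[1]), r[2]);
        }
        List<Map<String, String>> docs = new ArrayList<>();
        for (Map.Entry<String, Map<Part, String>> e : byUrl.entrySet()) {
            Map<String, String> doc = reduce(e.getKey(), e.getValue());
            if (doc != null) {
                docs.add(doc);
            }
        }
        return docs;
    }

    public static void main(String[] args) {
        List<String[]> records = List.of(
            new String[] {"http://a.example/", "CRAWLDB", "db_fetched"},
            new String[] {"http://a.example/", "FETCH", "success"},
            new String[] {"http://a.example/", "PARSE_DATA", "title=A"},
            new String[] {"http://a.example/", "PARSE_TEXT", "hello nutch"},
            new String[] {"http://b.example/", "CRAWLDB", "db_unfetched"},
            new String[] {"http://b.example/", "INLINKS", "link text pointing to b"});
        System.out.println(index(records));
        // prints [{url=http://a.example/, content=hello nutch, anchor=}]
    }
}
```

In the example, two URL groups go in and one document comes out. Plausibly the same thing happens in the counters above (Reduce input groups=58, Reduce output records=1): in this one-level crawl, URLs that were only discovered as links were never fetched, so they yield no document.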

2009-05-11 10:31:22,250 INFO Indexer - Indexer: starting

2009-05-11 10:31:45,078 INFO IndexerMapReduce - IndexerMapReduce: crawldb: 20090508/crawldb

2009-05-11 10:31:45,078 INFO IndexerMapReduce - IndexerMapReduce: linkdb: 20090508/linkdb

2009-05-11 10:31:45,078 INFO IndexerMapReduce - IndexerMapReduces: adding segment: file:/D:/work/workspace/nutch_crawl/20090508/segments/20090511094102

2009-05-11 10:32:30,359 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-11 10:32:34,109 WARN JobClient - Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

2009-05-11 10:32:34,296 WARN JobClient - No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

2009-05-11 10:32:34,421 INFO FileInputFormat - Total input paths to process : 6

2009-05-11 10:32:35,078 INFO FileInputFormat - Total input paths to process : 6

2009-05-11 10:32:35,140 INFO MapTask - numReduceTasks: 1

2009-05-11 10:32:35,140 INFO MapTask - io.sort.mb = 100

2009-05-11 10:32:35,250 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:32:35,250 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:32:35,265 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

Plugin loading log omitted…

2009-05-11 10:32:35,937 INFO IndexingFilters - Adding org.apache.nutch.indexer.basic.BasicIndexingFilter

2009-05-11 10:32:35,937 INFO IndexingFilters - Adding org.apache.nutch.indexer.anchor.AnchorIndexingFilter

2009-05-11 10:32:35,953 INFO MapTask - Starting flush of map output

2009-05-11 10:32:35,968 INFO MapTask - Finished spill 0

2009-05-11 10:32:35,968 INFO TaskRunner - Task:attempt_local_0008_m_000001_0 is done. And is in the process of commiting

2009-05-11 10:32:35,968 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/20090508/segments/20090511094102/crawl_parse/part-00000:0+4026

2009-05-11 10:32:35,968 INFO TaskRunner - Task 'attempt_local_0008_m_000001_0' done.

2009-05-11 10:32:36,000 INFO MapTask - numReduceTasks: 1

2009-05-11 10:32:36,000 INFO MapTask - io.sort.mb = 100

2009-05-11 10:32:36,125 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:32:36,125 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:32:36,125 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

Plugin loading log omitted…

2009-05-11 10:32:36,281 INFO IndexingFilters - Adding org.apache.nutch.indexer.basic.BasicIndexingFilter

2009-05-11 10:32:36,281 INFO IndexingFilters - Adding org.apache.nutch.indexer.anchor.AnchorIndexingFilter

2009-05-11 10:32:36,281 INFO MapTask - Starting flush of map output

2009-05-11 10:32:36,296 INFO MapTask - Finished spill 0

2009-05-11 10:32:36,312 INFO TaskRunner - Task:attempt_local_0008_m_000002_0 is done. And is in the process of commiting

2009-05-11 10:32:36,312 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/20090508/segments/20090511094102/parse_data/part-00000/data:0+1382

2009-05-11 10:32:36,312 INFO TaskRunner - Task 'attempt_local_0008_m_000002_0' done.

2009-05-11 10:32:36,343 INFO MapTask - numReduceTasks: 1

2009-05-11 10:32:36,343 INFO MapTask - io.sort.mb = 100

2009-05-11 10:32:36,453 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:32:36,453 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:32:36,453 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

Plugin loading log omitted…

2009-05-11 10:32:36,609 INFO IndexingFilters - Adding org.apache.nutch.indexer.basic.BasicIndexingFilter

2009-05-11 10:32:36,609 INFO IndexingFilters - Adding org.apache.nutch.indexer.anchor.AnchorIndexingFilter

2009-05-11 10:32:36,625 INFO MapTask - Starting flush of map output

2009-05-11 10:32:36,625 INFO MapTask - Finished spill 0

2009-05-11 10:32:36,640 INFO TaskRunner - Task:attempt_local_0008_m_000003_0 is done. And is in the process of commiting

2009-05-11 10:32:36,640 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/20090508/segments/20090511094102/parse_text/part-00000/data:0+738

2009-05-11 10:32:36,640 INFO TaskRunner - Task 'attempt_local_0008_m_000003_0' done.

2009-05-11 10:32:36,671 INFO MapTask - numReduceTasks: 1

2009-05-11 10:32:36,671 INFO MapTask - io.sort.mb = 100

2009-05-11 10:32:36,781 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:32:36,781 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:32:36,796 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

Plugin loading log omitted…

2009-05-11 10:32:36,937 INFO IndexingFilters - Adding org.apache.nutch.indexer.basic.BasicIndexingFilter

2009-05-11 10:32:36,953 INFO IndexingFilters - Adding org.apache.nutch.indexer.anchor.AnchorIndexingFilter

2009-05-11 10:32:36,953 INFO MapTask - Starting flush of map output

2009-05-11 10:32:36,968 INFO MapTask - Finished spill 0

2009-05-11 10:32:36,968 INFO TaskRunner - Task:attempt_local_0008_m_000004_0 is done. And is in the process of commiting

2009-05-11 10:32:36,968 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/20090508/crawldb/current/part-00000/data:0+3772

2009-05-11 10:32:36,968 INFO TaskRunner - Task 'attempt_local_0008_m_000004_0' done.

2009-05-11 10:32:37,000 INFO MapTask - numReduceTasks: 1

2009-05-11 10:32:37,000 INFO MapTask - io.sort.mb = 100

2009-05-11 10:32:37,109 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:32:37,109 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:32:37,125 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

Plugin loading log omitted…

2009-05-11 10:32:37,281 INFO IndexingFilters - Adding org.apache.nutch.indexer.basic.BasicIndexingFilter

2009-05-11 10:32:37,281 INFO IndexingFilters - Adding org.apache.nutch.indexer.anchor.AnchorIndexingFilter

2009-05-11 10:32:37,296 INFO MapTask - Starting flush of map output

2009-05-11 10:32:37,296 INFO MapTask - Finished spill 0

2009-05-11 10:32:37,312 INFO TaskRunner - Task:attempt_local_0008_m_000005_0 is done. And is in the process of commiting

2009-05-11 10:32:37,312 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/20090508/linkdb/current/part-00000/data:0+4215

2009-05-11 10:32:37,312 INFO TaskRunner - Task 'attempt_local_0008_m_000005_0' done.

2009-05-11 10:32:37,343 INFO LocalJobRunner -

2009-05-11 10:32:37,359 INFO Merger - Merging 6 sorted segments

2009-05-11 10:32:37,359 INFO Merger - Down to the last merge-pass, with 6 segments left of total size: 13876 bytes

2009-05-11 10:32:37,359 INFO LocalJobRunner -

2009-05-11 10:32:37,359 INFO PluginRepository - Plugins: looking in: D:/work/workspace/nutch_crawl/bin/plugins

Plugin loading log omitted…

2009-05-11 10:32:37,515 INFO IndexingFilters - Adding org.apache.nutch.indexer.basic.BasicIndexingFilter

2009-05-11 10:32:37,515 INFO IndexingFilters - Adding org.apache.nutch.indexer.anchor.AnchorIndexingFilter

2009-05-11 10:32:37,546 INFO Configuration - found resource common-terms.utf8 at file:/D:/work/workspace/nutch_crawl/bin/common-terms.utf8

2009-05-11 10:32:38,500 INFO TaskRunner - Task:attempt_local_0008_r_000000_0 is done. And is in the process of commiting

2009-05-11 10:32:38,500 INFO LocalJobRunner - reduce > reduce

2009-05-11 10:32:38,500 INFO TaskRunner - Task 'attempt_local_0008_r_000000_0' done.

2009-05-11 10:33:19,703 INFO JobClient - Running job: job_local_0008

2009-05-11 10:33:50,156 INFO JobClient - Job complete: job_local_0008

2009-05-11 10:33:52,562 INFO JobClient - Counters: 11

2009-05-11 10:33:52,562 INFO JobClient - File Systems

2009-05-11 10:33:52,562 INFO JobClient - Local bytes read=2150441

2009-05-11 10:33:52,562 INFO JobClient - Local bytes written=3845733

2009-05-11 10:33:52,562 INFO JobClient - Map-Reduce Framework

2009-05-11 10:33:52,562 INFO JobClient - Reduce input groups=58

2009-05-11 10:33:52,562 INFO JobClient - Combine output records=0

2009-05-11 10:33:52,562 INFO JobClient - Map input records=177

2009-05-11 10:33:52,562 INFO JobClient - Reduce output records=1

2009-05-11 10:33:52,562 INFO JobClient - Map output bytes=13506

2009-05-11 10:33:52,562 INFO JobClient - Map input bytes=13661

2009-05-11 10:33:52,562 INFO JobClient - Combine input records=0

2009-05-11 10:33:52,562 INFO JobClient - Map output records=177

2009-05-11 10:33:52,562 INFO JobClient - Reduce input records=177

2009-05-11 10:33:57,656 INFO Indexer - Indexer: done

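1.3.8 dedup method

Description: removes duplicate documents from the indexes. The log below shows three jobs:

- job_local_0009 reads indexes/part-00000 and saves its output to dedup-urls-1843604809.
- job_local_0010 reads that output and saves to dedup-hash-291931517.
- job_local_0011 reads dedup-hash-291931517.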
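As far as I understand the DeleteDuplicates javadoc in Nutch 1.0, duplicates are resolved in two phases:

1. Documents with the same URL are reduced to the most recently fetched one.
2. Documents with the same content hash (MD5 digest) are reduced to the one with the highest score. If the scores are equal, the shorter URL wins.

These phases correspond to the two intermediate outputs named in the log. The sketch below models only this selection logic, in memory. The `Doc` class and its fields are hypothetical, and the real tool works on Lucene indexes, deleting documents there rather than building new lists.

```java
import java.util.*;

/** Hypothetical in-memory model of two-phase deduplication; not Nutch's DeleteDuplicates API. */
class DedupSketch {

    static final class Doc {
        final String url;
        final String digest;   // content hash, e.g. an MD5 of the page
        final long fetchTime;
        final float score;

        Doc(String url, String digest, long fetchTime, float score) {
            this.url = url;
            this.digest = digest;
            this.fetchTime = fetchTime;
            this.score = score;
        }

        @Override
        public String toString() {
            return url + "@" + fetchTime;
        }
    }

    /** Phase 1: among documents with the same URL keep only the most recently fetched one. */
    static List<Doc> dedupByUrl(List<Doc> docs) {
        Map<String, Doc> keep = new LinkedHashMap<>();
        for (Doc d : docs) {
            // merge(key, value, (oldValue, newValue) -> winner)
            keep.merge(d.url, d, (a, b) -> b.fetchTime > a.fetchTime ? b : a);
        }
        return new ArrayList<>(keep.values());
    }

    /** Phase 2: among documents with the same digest keep the highest score, then the shorter URL. */
    static List<Doc> dedupByDigest(List<Doc> docs) {
        Comparator<Doc> better = Comparator
            .comparingDouble((Doc d) -> d.score)                                    // higher score is better
            .thenComparing(Comparator.comparingInt((Doc d) -> d.url.length()).reversed()); // then shorter URL
        Map<String, Doc> keep = new LinkedHashMap<>();
        for (Doc d : docs) {
            keep.merge(d.digest, d, (a, b) -> better.compare(b, a) > 0 ? b : a);
        }
        return new ArrayList<>(keep.values());
    }

    public static void main(String[] args) {
        List<Doc> docs = List.of(
            new Doc("http://a.example/", "h1", 100, 1.0f),
            new Doc("http://a.example/", "h1", 200, 1.0f),           // same URL, newer fetch
            new Doc("http://a.example/index.html", "h1", 150, 1.0f), // same content, longer URL
            new Doc("http://b.example/", "h2", 100, 0.5f));
        System.out.println(dedupByDigest(dedupByUrl(docs)));
        // prints [http://a.example/@200, http://b.example/@100]
    }
}
```

The order of the phases matters. Resolving URL duplicates first means the content-hash comparison only ever sees one (the newest) copy of each URL.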

2009-05-11 10:38:53,671 INFO DeleteDuplicates - Dedup: starting

2009-05-11 10:39:32,890 INFO DeleteDuplicates - Dedup: adding indexes in: 20090508/indexes

2009-05-11 10:39:57,265 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-11 10:40:09,015 WARN JobClient - Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

2009-05-11 10:40:09,218 WARN JobClient - No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

2009-05-11 10:40:51,890 INFO FileInputFormat - Total input paths to process : 1

2009-05-11 10:42:56,203 INFO FileInputFormat - Total input paths to process : 1

2009-05-11 10:42:56,265 INFO MapTask - numReduceTasks: 1

2009-05-11 10:42:56,265 INFO MapTask - io.sort.mb = 100

2009-05-11 10:42:56,390 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:42:56,390 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:42:56,515 INFO MapTask - Starting flush of map output

2009-05-11 10:42:56,718 INFO MapTask - Finished spill 0

2009-05-11 10:42:56,718 INFO TaskRunner - Task:attempt_local_0009_m_000000_0 is done. And is in the process of commiting

2009-05-11 10:42:56,718 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/20090508/indexes/part-00000

2009-05-11 10:42:56,718 INFO TaskRunner - Task 'attempt_local_0009_m_000000_0' done.

2009-05-11 10:42:56,734 INFO LocalJobRunner -

2009-05-11 10:42:56,734 INFO Merger - Merging 1 sorted segments

2009-05-11 10:42:56,734 INFO Merger - Down to the last merge-pass, with 1 segments left of total size: 141 bytes

2009-05-11 10:42:56,734 INFO LocalJobRunner -

2009-05-11 10:42:56,781 INFO TaskRunner - Task:attempt_local_0009_r_000000_0 is done. And is in the process of commiting

2009-05-11 10:42:56,781 INFO LocalJobRunner -

2009-05-11 10:42:56,781 INFO TaskRunner - Task attempt_local_0009_r_000000_0 is allowed to commit now

2009-05-11 10:42:56,796 INFO FileOutputCommitter - Saved output of task 'attempt_local_0009_r_000000_0' to file:/D:/work/workspace/nutch_crawl/dedup-urls-1843604809

2009-05-11 10:42:56,796 INFO LocalJobRunner - reduce > reduce

2009-05-11 10:42:56,796 INFO TaskRunner - Task 'attempt_local_0009_r_000000_0' done.

2009-05-11 10:43:06,515 INFO JobClient - Running job: job_local_0009

2009-05-11 10:43:14,500 INFO JobClient - Job complete: job_local_0009

2009-05-11 10:43:16,296 INFO JobClient - Counters: 11

2009-05-11 10:43:16,296 INFO JobClient - File Systems

2009-05-11 10:43:16,296 INFO JobClient - Local bytes read=710951

2009-05-11 10:43:16,296 INFO JobClient - Local bytes written=1220879

2009-05-11 10:43:16,296 INFO JobClient - Map-Reduce Framework

2009-05-11 10:43:16,296 INFO JobClient - Reduce input groups=1

2009-05-11 10:43:16,296 INFO JobClient - Combine output records=0

2009-05-11 10:43:16,296 INFO JobClient - Map input records=1

2009-05-11 10:43:16,296 INFO JobClient - Reduce output records=1

2009-05-11 10:43:16,296 INFO JobClient - Map output bytes=137

2009-05-11 10:43:16,296 INFO JobClient - Map input bytes=2147483647

2009-05-11 10:43:16,296 INFO JobClient - Combine input records=0

2009-05-11 10:43:16,296 INFO JobClient - Map output records=1

2009-05-11 10:43:16,296 INFO JobClient - Reduce input records=1

2009-05-11 10:44:37,734 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-11 10:44:45,953 WARN JobClient - Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

2009-05-11 10:44:46,140 WARN JobClient - No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

2009-05-11 10:44:48,781 INFO FileInputFormat - Total input paths to process : 1

2009-05-11 10:45:46,546 INFO FileInputFormat - Total input paths to process : 1

2009-05-11 10:45:46,609 INFO MapTask - numReduceTasks: 1

2009-05-11 10:45:46,609 INFO MapTask - io.sort.mb = 100

2009-05-11 10:45:46,718 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:45:46,718 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:45:46,734 INFO MapTask - Starting flush of map output

2009-05-11 10:45:46,953 INFO MapTask - Finished spill 0

2009-05-11 10:45:46,953 INFO TaskRunner - Task:attempt_local_0010_m_000000_0 is done. And is in the process of commiting

2009-05-11 10:45:46,953 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/dedup-urls-1843604809/part-00000:0+247

2009-05-11 10:45:46,953 INFO TaskRunner - Task 'attempt_local_0010_m_000000_0' done.

2009-05-11 10:45:46,968 INFO LocalJobRunner -

2009-05-11 10:45:46,968 INFO Merger - Merging 1 sorted segments

2009-05-11 10:45:46,968 INFO Merger - Down to the last merge-pass, with 1 segments left of total size: 137 bytes

2009-05-11 10:45:46,968 INFO LocalJobRunner -

2009-05-11 10:45:47,015 INFO TaskRunner - Task:attempt_local_0010_r_000000_0 is done. And is in the process of commiting

2009-05-11 10:45:47,015 INFO LocalJobRunner -

2009-05-11 10:45:47,015 INFO TaskRunner - Task attempt_local_0010_r_000000_0 is allowed to commit now

2009-05-11 10:45:47,015 INFO FileOutputCommitter - Saved output of task 'attempt_local_0010_r_000000_0' to file:/D:/work/workspace/nutch_crawl/dedup-hash-291931517

2009-05-11 10:45:47,015 INFO LocalJobRunner - reduce > reduce

2009-05-11 10:45:47,015 INFO TaskRunner - Task 'attempt_local_0010_r_000000_0' done.

2009-05-11 10:45:52,187 INFO JobClient - Running job: job_local_0010

2009-05-11 10:46:03,984 INFO JobClient - Job complete: job_local_0010

2009-05-11 10:46:06,359 INFO JobClient - Counters: 11

2009-05-11 10:46:06,359 INFO JobClient - File Systems

2009-05-11 10:46:06,359 INFO JobClient - Local bytes read=764171

2009-05-11 10:46:06,359 INFO JobClient - Local bytes written=1327019

2009-05-11 10:46:06,359 INFO JobClient - Map-Reduce Framework

2009-05-11 10:46:06,359 INFO JobClient - Reduce input groups=1

2009-05-11 10:46:06,359 INFO JobClient - Combine output records=0

2009-05-11 10:46:06,359 INFO JobClient - Map input records=1

2009-05-11 10:46:06,359 INFO JobClient - Reduce output records=0

2009-05-11 10:46:06,359 INFO JobClient - Map output bytes=133

2009-05-11 10:46:06,359 INFO JobClient - Map input bytes=141

2009-05-11 10:46:06,359 INFO JobClient - Combine input records=0

2009-05-11 10:46:06,359 INFO JobClient - Map output records=1

2009-05-11 10:46:06,359 INFO JobClient - Reduce input records=1

2009-05-11 10:47:19,953 INFO JvmMetrics - Cannot initialize JVM Metrics with processName=JobTracker, sessionId= - already initialized

2009-05-11 10:47:19,953 WARN JobClient - Use GenericOptionsParser for parsing the arguments. Applications should implement Tool for the same.

2009-05-11 10:47:20,140 WARN JobClient - No job jar file set. User classes may not be found. See JobConf(Class) or JobConf#setJar(String).

2009-05-11 10:47:20,156 INFO FileInputFormat - Total input paths to process : 1

2009-05-11 10:47:20,765 INFO JobClient - Running job: job_local_0011

2009-05-11 10:47:20,765 INFO FileInputFormat - Total input paths to process : 1

2009-05-11 10:47:20,796 INFO MapTask - numReduceTasks: 1

2009-05-11 10:47:20,796 INFO MapTask - io.sort.mb = 100

2009-05-11 10:47:20,921 INFO MapTask - data buffer = 79691776/99614720

2009-05-11 10:47:20,921 INFO MapTask - record buffer = 262144/327680

2009-05-11 10:47:20,937 INFO MapTask - Starting flush of map output

2009-05-11 10:47:21,140 INFO MapTask - Index: (0, 2, 6)

2009-05-11 10:47:21,140 INFO TaskRunner - Task:attempt_local_0011_m_000000_0 is done. And is in the process of commiting

2009-05-11 10:47:21,140 INFO LocalJobRunner - file:/D:/work/workspace/nutch_crawl/dedup-hash-291931517/part-00000:0+103

2009-05-11 10:47:21,140 INFO TaskRunner - Task 'attempt_local_0011_m_000000_0' done.

2009-05-11 10:47:21,156 INFO LocalJobRunner -

2009-05-11 10:47:21,156 INFO Merger - Merging 1 sorted segments

2009-05-11 10:47:21,156 INFO Merger - Down to the last merge-pass, with 0 segments left of total size: 0 bytes

2009-05-11 10:47:21,156 INFO LocalJobRunner -

2009-05-11 10:47:21,171 INFO TaskRunner - Task:attempt_local_0011_r_000000_0 is done. And is in the process of commiting

2009-05-11 10:47:21,171 INFO LocalJobRunner - reduce > reduce

2009-05-11 10:47:21,171 INFO TaskRunner - Task 'attempt_local_0011_r_000000_0' done.

2009-05-11 10:47:21,765 INFO JobClient - Job complete: job_local_0011

2009-05-11 10:47:21,765 INFO JobClient - Counters: 11

2009-05-11 10:47:21,765 INFO JobClient - File Systems

2009-05-11 10:47:21,765 INFO JobClient - Local bytes read=816128

2009-05-11 10:47:21,765 INFO JobClient - Local bytes written=1430954

2009-05-11 10:47:21,765 INFO JobClient - Map-Reduce Framework

2009-05-11 10:47:21,765 INFO JobClient - Reduce input groups=0

2009-05-11 10:47:21,765 INFO JobClient - Combine output records=0

2009-05-11 10:47:21,765 INFO JobClient - Map input records=0

2009-05-11 10:47:21,765 INFO JobClient - Reduce output records=0

2009-05-11 10:47:21,765 INFO JobClient - Map output bytes=0

2009-05-11 10:47:21,765 INFO JobClient - Map input bytes=0

2009-05-11 10:47:21,765 INFO JobClient - Combine input records=0

2009-05-11 10:47:21,765 INFO JobClient - Map output records=0

2009-05-11 10:47:21,765 INFO JobClient - Reduce input records=0

2009-05-11 10:47:44,031 INFO DeleteDuplicates - Dedup: done

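1.3.9 merge method

Description: merges the index files.

First, a temporary folder, 20090511094057, is created under tmp/hadoop-Administrator/mapred/local/crawl. The indexes in the indexes directory are then merged into a single index written to the merge-output directory under 20090511094057.

The fs.completeLocalOutput method then writes the index from this temporary directory to the newly created index directory.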
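The merge step follows a "build locally, then publish" pattern: the merged index is written to a temporary merge-output directory and moved to its final place only once it is complete. The sketch below shows that pattern with plain `java.nio.file` rather than Hadoop's `FileSystem` API.

`StagedOutputSketch`, `mergeInto` and `mergeStaged` are hypothetical names. `mergeInto` simply concatenates text files as a stand-in, whereas the real IndexMerger merges Lucene indexes.

One design choice differs from Nutch: the temporary directory is created next to the target rather than under /tmp, so that `Files.move` is a cheap rename on the same file system. `Files.move` without options also fails if the target already exists, so an old index is never silently overwritten.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.*;
import java.util.*;

/** Hypothetical sketch of "merge into a temp dir, then publish"; not Nutch's IndexMerger API. */
class StagedOutputSketch {

    /** Stand-in for the real index merge: concatenates the lines of every input part. */
    static void mergeInto(List<Path> parts, Path out) throws IOException {
        List<String> lines = new ArrayList<>();
        for (Path p : parts) {
            lines.addAll(Files.readAllLines(p, StandardCharsets.UTF_8));
        }
        Files.createDirectories(out);
        Files.write(out.resolve("merged.txt"), lines, StandardCharsets.UTF_8);
    }

    /** Builds the result in <tmp>/merge-output, then moves it to finalDir in one step. */
    static Path mergeStaged(List<Path> parts, Path finalDir) throws IOException {
        Path parent = finalDir.toAbsolutePath().getParent();
        Files.createDirectories(parent);
        Path tmpRoot = Files.createTempDirectory(parent, "merge-"); // same file system as finalDir
        Path staged = tmpRoot.resolve("merge-output");
        mergeInto(parts, staged);
        Files.move(staged, finalDir); // throws FileAlreadyExistsException if finalDir exists
        Files.delete(tmpRoot);        // now empty
        return finalDir;
    }

    public static void main(String[] args) throws IOException {
        Path work = Files.createTempDirectory("nutch-demo");
        Path indexes = Files.createDirectories(work.resolve("indexes"));
        Path p0 = Files.write(indexes.resolve("part-00000"), List.of("doc1", "doc2"));
        Path p1 = Files.write(indexes.resolve("part-00001"), List.of("doc3"));
        Path index = mergeStaged(List.of(p0, p1), work.resolve("index"));
        System.out.println(Files.readAllLines(index.resolve("merged.txt")));
        // prints [doc1, doc2, doc3]
    }
}
```

Because readers only ever see the final directory after the move, a crash during the merge leaves behind a stray temporary folder rather than a half-written index.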

2009-05-11 10:53:56,156 INFO IndexMerger - merging indexes to: 20090508/index

2009-05-11 10:58:50,906 INFO IndexMerger - Adding file:/D:/work/workspace/nutch_crawl/20090508/indexes/part-00000

2009-05-11 11:04:36,562 INFO IndexMerger - done merging
