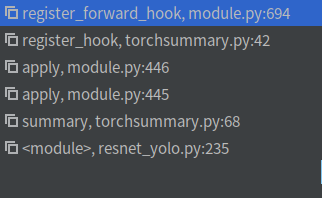

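Continued from the previous topic: register_hook(...) / module.register_forward_hook(hook) / ...

Purpose: collect parameter statistics. Steps: register the hooks (attach forward hooks to the model's submodules) / feed in the input and run the forward pass / remove the hooks / print the network structure and parameter information.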
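The four steps can be tried in isolation on a toy model. This is a minimal sketch, not torchsummary itself: the three-layer model is hypothetical, and it walks `model.modules()` directly instead of going through `model.apply` as summary() does.

```python
import torch
import torch.nn as nn

# Hypothetical toy model standing in for the ResNet-YOLO network.
model = nn.Sequential(nn.Conv2d(3, 8, kernel_size=3, padding=1), nn.ReLU(), nn.Flatten())

records = []  # (class name, output shape) appended by the hook
handles = []  # RemovableHandle objects returned by register_forward_hook

def hook(module, inputs, output):
    # Called by nn.Module right after module.forward() returns.
    records.append((type(module).__name__, tuple(output.shape)))

# 1. Register: hook every submodule except containers such as nn.Sequential.
for m in model.modules():
    if not isinstance(m, (nn.Sequential, nn.ModuleList)):
        handles.append(m.register_forward_hook(hook))

# 2. Forward pass with a dummy batch of 2 random 3x4x4 inputs.
with torch.no_grad():
    model(torch.rand(2, 3, 4, 4))

# 3. Remove the hooks so later forward passes are not recorded.
for h in handles:
    h.remove()

# 4. Report what was collected.
for name, shape in records:
    print(name, list(shape))
```

After the forward pass, `records` holds Conv2d (2, 8, 4, 4), ReLU (2, 8, 4, 4) and Flatten (2, 128), in execution order; a second forward pass adds nothing because the handles were removed.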

torchsummary.py : summary(...)

```python
import torch
import torch.nn as nn
from torch.autograd import Variable

from collections import OrderedDict
import numpy as np


def summary(model, input_size, batch_size=-1, device="cuda"):

    def register_hook(module):

        # Forward hook: runs after module.forward() returns and adds one row to `summary`
        # (the OrderedDict created further down, which shadows the function name).
        def hook(module, input, output):
            class_name = str(module.__class__).split(".")[-1].split("'")[0]
            module_idx = len(summary)

            m_key = "%s-%i" % (class_name, module_idx + 1)
            summary[m_key] = OrderedDict()
            summary[m_key]["input_shape"] = list(input[0].size())
            summary[m_key]["input_shape"][0] = batch_size
            if isinstance(output, (list, tuple)):
                summary[m_key]["output_shape"] = [
                    [-1] + list(o.size())[1:] for o in output
                ]
            else:
                summary[m_key]["output_shape"] = list(output.size())
                summary[m_key]["output_shape"][0] = batch_size

            # Only the module's own `weight` and `bias` are counted, so a module whose
            # parameters all live in its children reports 0.
            params = 0
            if hasattr(module, "weight") and hasattr(module.weight, "size"):
                params += torch.prod(torch.LongTensor(list(module.weight.size())))
                summary[m_key]["trainable"] = module.weight.requires_grad
            if hasattr(module, "bias") and hasattr(module.bias, "size"):
                params += torch.prod(torch.LongTensor(list(module.bias.size())))
            summary[m_key]["nb_params"] = params

        # Hook every module except containers and the top-level model itself.
        if (
            not isinstance(module, nn.Sequential)
            and not isinstance(module, nn.ModuleList)
            and not (module == model)
        ):
            hooks.append(module.register_forward_hook(hook))

    device = device.lower()
    assert device in [
        "cuda",
        "cpu",
    ], "Input device is not valid, please specify 'cuda' or 'cpu'"

    if device == "cuda" and torch.cuda.is_available():
        dtype = torch.cuda.FloatTensor
    else:
        dtype = torch.FloatTensor

    # multiple inputs to the network
    if isinstance(input_size, tuple):
        input_size = [input_size]

    # batch_size of 2 for batchnorm
    x = [torch.rand(2, *in_size).type(dtype) for in_size in input_size]
    # print(type(x[0]))

    # create properties
    summary = OrderedDict()
    hooks = []

    # register hook: model.apply calls register_hook on every module
    model.apply(register_hook)

    # make a forward pass
    # print(x.shape)
    model(*x)

    # remove these hooks
    for h in hooks:
        h.remove()

    print("----------------------------------------------------------------")
    line_new = "{:>20} {:>25} {:>15}".format("Layer (type)", "Output Shape", "Param #")
    print(line_new)
    print("================================================================")
    total_params = 0
    total_output = 0
    trainable_params = 0
    for layer in summary:
        # input_shape, output_shape, trainable, nb_params
        line_new = "{:>20} {:>25} {:>15}".format(
            layer,
            str(summary[layer]["output_shape"]),
            "{0:,}".format(summary[layer]["nb_params"]),
        )
        total_params += summary[layer]["nb_params"]
        total_output += np.prod(summary[layer]["output_shape"])
        if "trainable" in summary[layer]:
            if summary[layer]["trainable"] == True:
                trainable_params += summary[layer]["nb_params"]
        print(line_new)

    # assume 4 bytes/number (float on cuda).
    total_input_size = abs(np.prod(input_size) * batch_size * 4. / (1024 ** 2.))
    total_output_size = abs(2. * total_output * 4. / (1024 ** 2.))  # x2 for gradients
    total_params_size = abs(total_params.numpy() * 4. / (1024 ** 2.))
    total_size = total_params_size + total_output_size + total_input_size

    print("================================================================")
    print("Total params: {0:,}".format(total_params))
    print("Trainable params: {0:,}".format(trainable_params))
    print("Non-trainable params: {0:,}".format(total_params - trainable_params))
    print("----------------------------------------------------------------")
    print("Input size (MB): %0.2f" % total_input_size)
    print("Forward/backward pass size (MB): %0.2f" % total_output_size)
    print("Params size (MB): %0.2f" % total_params_size)
    print("Estimated Total Size (MB): %0.2f" % total_size)
    print("----------------------------------------------------------------")
    # return summary
```
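To see how the hook arrives at the Param # column, its counting logic can be lifted into a standalone helper. `count_like_torchsummary` is a hypothetical name, and the two layers are assumed to mirror the first Conv2d and BatchNorm2d of a torchvision-style ResNet stem (7x7 conv without bias), which matches the 9,408 and 128 reported for Conv2d-1 and BatchNorm2d-2 in the output of this post.

```python
import torch
import torch.nn as nn

def count_like_torchsummary(module):
    """Hypothetical helper mirroring the hook: counts only `weight` and `bias`."""
    params = 0
    if hasattr(module, "weight") and hasattr(module.weight, "size"):
        params += torch.prod(torch.LongTensor(list(module.weight.size())))
    if hasattr(module, "bias") and hasattr(module.bias, "size"):
        params += torch.prod(torch.LongTensor(list(module.bias.size())))
    return int(params)

# Assumed to mirror the first two rows of the table (ResNet stem, no conv bias).
conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3, bias=False)
bn1 = nn.BatchNorm2d(64)

print(count_like_torchsummary(conv1))      # 9408 = 64 * 3 * 7 * 7
print(count_like_torchsummary(bn1))        # 128 = 64 (weight) + 64 (bias)
print(count_like_torchsummary(nn.ReLU()))  # 0: ReLU has no weight or bias

# For a plain layer the result agrees with summing its parameter sizes directly.
print(count_like_torchsummary(conv1) == sum(p.numel() for p in conv1.parameters()))  # True
```

With `bias=False`, `conv1.bias` is `None`, so the `hasattr(module.bias, "size")` check quietly skips it. The same rule explains why rows such as Bottleneck-16 show 0: the block owns no `weight` or `bias` itself, only its child layers do.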

module.py : model.apply(...)

```python
def apply(self: T, fn: Callable[['Module'], None]) -> T:
    r"""Applies ``fn`` recursively to every submodule (as returned by ``.children()``)
    as well as self. Typical use includes initializing the parameters of a model
    (see also :ref:`nn-init-doc`).

    Args:
        fn (:class:`Module` -> None): function to be applied to each submodule

    Returns:
        Module: self

    Example::

        >>> @torch.no_grad()
        >>> def init_weights(m):
        >>>     print(m)
        >>>     if type(m) == nn.Linear:
        >>>         m.weight.fill_(1.0)
        >>>         print(m.weight)
        >>> net = nn.Sequential(nn.Linear(2, 2), nn.Linear(2, 2))
        >>> net.apply(init_weights)
        Linear(in_features=2, out_features=2, bias=True)
        Parameter containing:
        tensor([[ 1., 1.],
                [ 1., 1.]])
        Linear(in_features=2, out_features=2, bias=True)
        Parameter containing:
        tensor([[ 1., 1.],
                [ 1., 1.]])
        Sequential(
          (0): Linear(in_features=2, out_features=2, bias=True)
          (1): Linear(in_features=2, out_features=2, bias=True)
        )
        Sequential(
          (0): Linear(in_features=2, out_features=2, bias=True)
          (1): Linear(in_features=2, out_features=2, bias=True)
        )
    """
    for module in self.children():
        module.apply(fn)
    fn(self)
    return self
```

Note: the loop `for module in self.children(): module.apply(fn)` first recurses into every child; only after it finishes does `fn(self)` run. In summary(), fn is register_hook, so the call chain is:

apply(...) : fn(self) -> torchsummary.py : summary(...) : register_hook(module)

That is, every child is passed to register_hook before its parent, and the top-level model is passed last (where the `module == model` check skips it).
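Both properties of this step can be checked on a small model: apply() visits modules in post-order (children before their parent, the root last), and register_hook's filter leaves out containers and the model itself. In this sketch `Block` and `Net` are hypothetical classes (`Block` plays the role Bottleneck plays in the ResNet output), and the filter only records class names instead of calling `register_forward_hook`.

```python
import torch.nn as nn

class Block(nn.Module):
    """Hypothetical residual-style block; it owns no weight/bias of its own."""
    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(4, 4, 3, padding=1)
        self.bn = nn.BatchNorm2d(4)

    def forward(self, x):
        return x + self.bn(self.conv(x))

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.stem = nn.Sequential(nn.Conv2d(3, 4, 1), nn.ReLU())
        self.blocks = nn.ModuleList([Block(), Block()])

    def forward(self, x):
        x = self.stem(x)
        for block in self.blocks:
            x = block(x)
        return x

model = Net()
visited = []  # every module passed to fn, in apply() order
hooked = []   # modules that would receive a forward hook

def register_hook(module):
    visited.append(type(module).__name__)
    # Same filter as torchsummary's register_hook; records instead of hooking.
    if (
        not isinstance(module, nn.Sequential)
        and not isinstance(module, nn.ModuleList)
        and not (module == model)
    ):
        hooked.append(type(module).__name__)

model.apply(register_hook)
print(visited)
# ['Conv2d', 'ReLU', 'Sequential', 'Conv2d', 'BatchNorm2d', 'Block',
#  'Conv2d', 'BatchNorm2d', 'Block', 'ModuleList', 'Net']
print(hooked)
# ['Conv2d', 'ReLU', 'Conv2d', 'BatchNorm2d', 'Block', 'Conv2d', 'BatchNorm2d', 'Block']
```

The registration order does not decide the row order of the final table: rows are numbered inside the hook, so they follow the order in which modules finish their forward() during `model(*x)`.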


Next: / model(*x) / module.py: _call_impl(...)
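Calling `model(*x)` does not call forward() directly: nn.Module.__call__ dispatches to _call_impl, which runs the forward pre-hooks, then forward(), then the forward hooks, and the hooks registered by summary() fire in that last step. The sketch below makes the order visible on a toy layer. `TracedLinear`, `pre_hook` and `fwd_hook` are hypothetical names; the pre-hook zeroes the input and the forward hook adds 1 only to show that a non-None return value replaces the input or the output.

```python
import torch
import torch.nn as nn

events = []

class TracedLinear(nn.Linear):
    """Hypothetical nn.Linear that logs when its forward() runs."""
    def forward(self, x):
        events.append("forward")
        return super().forward(x)

def pre_hook(module, args):
    # Runs before forward(); a non-None return value replaces the input tuple.
    events.append("pre_hook")
    return (torch.zeros_like(args[0]),)

def fwd_hook(module, args, output):
    # Runs after forward(); a non-None return value replaces the output.
    events.append("forward_hook")
    return output + 1

lin = TracedLinear(3, 2)
h_pre = lin.register_forward_pre_hook(pre_hook)
h_fwd = lin.register_forward_hook(fwd_hook)

x = torch.rand(4, 3)
y = lin(x)     # nn.Module.__call__ -> _call_impl
print(events)  # ['pre_hook', 'forward', 'forward_hook']

# The pre-hook zeroed the input, so forward() returned only the bias;
# the forward hook then added 1 to that result.
print(torch.allclose(y, lin.bias.expand(4, 2) + 1))  # True

h_pre.remove()
h_fwd.remove()
lin(x)
print(events[-1])  # forward  (no hook entries were added this time)
```

torchsummary's hook returns None, so it only observes the output and never changes what the model returns.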

```
input torch.Size([2, 3, 418, 418])
tensor([[[[0.2709, 0.1753, 0.5693, ..., 0.1817, 0.8895, 0.8485],
          [0.2879, 0.5660, 0.3162, ..., 0.4502, 0.0285, 0.2262],
          [0.5451, 0.0369, 0.1112, ..., 0.8297, 0.6462, 0.2173],
          ...,
          [0.3083, 0.2746, 0.1428, ..., 0.4092, 0.8913, 0.6776],
          [0.0704, 0.4520, 0.1853, ..., 0.3169, 0.2486, 0.0153],
          [0.8637, 0.9614, 0.8856, ..., 0.5083, 0.0980, 0.4110]],

         [[0.2200, 0.6590, 0.0706, ..., 0.0349, 0.4435, 0.4459],
          [0.2895, 0.7844, 0.7320, ..., 0.2589, 0.2925, 0.2299],
          [0.0328, 0.3250, 0.2682, ..., 0.5436, 0.4696, 0.3192],
          ...,
          [0.9408, 0.7429, 0.8390, ..., 0.0753, 0.0214, 0.4611],
          [0.7398, 0.2577, 0.0237, ..., 0.3019, 0.5939, 0.2017],
          [0.1762, 0.5876, 0.3601, ..., 0.9460, 0.0997, 0.9222]],

         [[0.6818, 0.6513, 0.8082, ..., 0.0442, 0.2984, 0.9585],
          [0.9634, 0.8260, 0.9116, ..., 0.6349, 0.7930, 0.3128],
          [0.0029, 0.1439, 0.1321, ..., 0.4748, 0.0550, 0.0460],
          ...,
          [0.5563, 0.0182, 0.2356, ..., 0.6358, 0.0112, 0.0989],
          [0.8476, 0.9336, 0.0674, ..., 0.7531, 0.6115, 0.0313],
          [0.5901, 0.2817, 0.3999, ..., 0.9797, 0.0348, 0.0544]]],


        [[[0.3199, 0.0565, 0.7252, ..., 0.8113, 0.1974, 0.9692],
          [0.3234, 0.5321, 0.9094, ..., 0.6868, 0.7541, 0.2812],
          [0.6792, 0.3287, 0.7031, ..., 0.0393, 0.7585, 0.9073],
          ...,
          [0.6043, 0.4006, 0.8022, ..., 0.6846, 0.1578, 0.0674],
          [0.8830, 0.1915, 0.6430, ..., 0.8385, 0.7511, 0.8796],
          [0.2231, 0.4110, 0.3816, ..., 0.9334, 0.2590, 0.0708]],

         [[0.8419, 0.5102, 0.1765, ..., 0.9242, 0.6562, 0.3825],
          [0.7863, 0.5056, 0.2321, ..., 0.5666, 0.3428, 0.0472],
          [0.2211, 0.1237, 0.5578, ..., 0.9036, 0.8590, 0.8707],
          ...,
          [0.5512, 0.8258, 0.8552, ..., 0.5092, 0.0324, 0.9799],
          [0.3007, 0.9965, 0.5445, ..., 0.3631, 0.0745, 0.1484],
          [0.9852, 0.3907, 0.4071, ..., 0.0096, 0.1675, 0.2988]],

         [[0.8020, 0.4269, 0.6776, ..., 0.1981, 0.3181, 0.7629],
          [0.1655, 0.2805, 0.9518, ..., 0.7684, 0.3522, 0.0216],
          [0.3547, 0.9546, 0.1811, ..., 0.3153, 0.5066, 0.2176],
          ...,
          [0.4352, 0.9969, 0.4457, ..., 0.0439, 0.0609, 0.9854],
          [0.8673, 0.0833, 0.5800, ..., 0.4859, 0.1498, 0.6754],
          [0.1354, 0.3183, 0.1909, ..., 0.1720, 0.3356, 0.9053]]]],
       device='cuda:0')
```

```
output: torch.Size([2, 14, 14, 30])
tensor([[[[0.8454, 0.5001, 0.4481, ..., 0.3887, 0.5547, 0.4538],
          [0.3161, 0.2713, 0.1053, ..., 0.7058, 0.5122, 0.3770],
          [0.8674, 0.1722, 0.5894, ..., 0.3615, 0.5899, 0.1752],
          ...,
          [0.5117, 0.4987, 0.5604, ..., 0.5415, 0.6103, 0.2923],
          [0.7312, 0.5665, 0.5950, ..., 0.5056, 0.5276, 0.2977],
          [0.8911, 0.3422, 0.6511, ..., 0.5503, 0.7118, 0.3349]],

         [[0.5136, 0.5950, 0.6043, ..., 0.3629, 0.7913, 0.1259],
          [0.7269, 0.2455, 0.7077, ..., 0.5334, 0.1849, 0.8442],
          [0.3607, 0.2570, 0.4114, ..., 0.6796, 0.7183, 0.3902],
          ...,
          [0.4740, 0.1870, 0.6772, ..., 0.4313, 0.4529, 0.6758],
          [0.5104, 0.7938, 0.8614, ..., 0.8057, 0.2632, 0.5451],
          [0.7676, 0.5275, 0.4823, ..., 0.8349, 0.7249, 0.4327]],

         [[0.6351, 0.3136, 0.7343, ..., 0.5627, 0.8222, 0.2429],
          [0.6175, 0.7593, 0.3978, ..., 0.4780, 0.4118, 0.5988],
          [0.7639, 0.7412, 0.7270, ..., 0.6081, 0.2860, 0.4456],
          ...,
          [0.5305, 0.3259, 0.2790, ..., 0.4755, 0.2830, 0.4254],
          [0.2155, 0.7948, 0.6770, ..., 0.2614, 0.4303, 0.5881],
          [0.6324, 0.6040, 0.3321, ..., 0.8459, 0.5411, 0.2833]],

         ...,

         [[0.5986, 0.4945, 0.5617, ..., 0.5485, 0.8687, 0.5003],
          [0.4123, 0.2548, 0.5763, ..., 0.4321, 0.6643, 0.2194],
          [0.6033, 0.3960, 0.8730, ..., 0.3220, 0.3615, 0.4827],
          ...,
          [0.6209, 0.5610, 0.8390, ..., 0.9049, 0.2984, 0.3171],
          [0.6609, 0.3996, 0.3908, ..., 0.5779, 0.4216, 0.4093],
          [0.3762, 0.3825, 0.7023, ..., 0.6302, 0.5448, 0.2533]],

         [[0.6995, 0.3162, 0.5145, ..., 0.5722, 0.8078, 0.3267],
          [0.3358, 0.4398, 0.4114, ..., 0.5094, 0.6879, 0.5694],
          [0.8994, 0.7637, 0.7209, ..., 0.4898, 0.3235, 0.5609],
          ...,
          [0.6871, 0.4873, 0.2624, ..., 0.8552, 0.2644, 0.1981],
          [0.3292, 0.6031, 0.5475, ..., 0.8603, 0.3224, 0.5257],
          [0.6454, 0.6559, 0.3611, ..., 0.6053, 0.3233, 0.3911]],

         [[0.8353, 0.3757, 0.5170, ..., 0.5400, 0.7920, 0.3292],
          [0.4445, 0.1594, 0.1481, ..., 0.4408, 0.7380, 0.0804],
          [0.6494, 0.4928, 0.5922, ..., 0.5205, 0.4136, 0.1677],
          ...,
          [0.5073, 0.7941, 0.4370, ..., 0.4859, 0.6491, 0.1767],
          [0.5077, 0.4488, 0.5004, ..., 0.5549, 0.4578, 0.4015],
          [0.6580, 0.8020, 0.3235, ..., 0.5760, 0.7451, 0.3607]]],


        [[[0.7887, 0.3598, 0.6905, ..., 0.3169, 0.4231, 0.3283],
          [0.5104, 0.4611, 0.7260, ..., 0.5472, 0.2503, 0.1919],
          [0.7392, 0.5708, 0.3512, ..., 0.4495, 0.3325, 0.1758],
          ...,
          [0.4488, 0.1813, 0.4408, ..., 0.4989, 0.2345, 0.4532],
          [0.5168, 0.2066, 0.3749, ..., 0.5762, 0.3896, 0.2489],
          [0.6949, 0.4311, 0.4541, ..., 0.5924, 0.7709, 0.2230]],

         [[0.7194, 0.2229, 0.7320, ..., 0.7090, 0.5210, 0.5555],
          [0.6014, 0.4024, 0.7971, ..., 0.0637, 0.6264, 0.7605],
          [0.6757, 0.5992, 0.5865, ..., 0.3314, 0.5319, 0.4007],
          ...,
          [0.5528, 0.4832, 0.4008, ..., 0.4913, 0.3247, 0.3309],
          [0.4411, 0.3779, 0.4661, ..., 0.5987, 0.6658, 0.1835],
          [0.8791, 0.4104, 0.3369, ..., 0.7199, 0.6460, 0.2555]],

         [[0.5319, 0.5749, 0.5323, ..., 0.4296, 0.8030, 0.3956],
          [0.3608, 0.7142, 0.7013, ..., 0.2304, 0.3627, 0.4260],
          [0.5907, 0.7656, 0.8546, ..., 0.6153, 0.8012, 0.5844],
          ...,
          [0.3063, 0.4122, 0.3434, ..., 0.3949, 0.4604, 0.4554],
          [0.7616, 0.8810, 0.6775, ..., 0.3367, 0.4952, 0.4255],
          [0.5144, 0.7115, 0.5239, ..., 0.3875, 0.6694, 0.4869]],

         ...,

         [[0.5930, 0.4510, 0.7014, ..., 0.5092, 0.8747, 0.3856],
          [0.4378, 0.5118, 0.7562, ..., 0.4926, 0.2055, 0.7743],
          [0.2992, 0.3419, 0.3461, ..., 0.4813, 0.6985, 0.4168],
          ...,
          [0.7641, 0.2579, 0.5648, ..., 0.2922, 0.1993, 0.4663],
          [0.7158, 0.5681, 0.7143, ..., 0.7512, 0.2261, 0.5006],
          [0.7043, 0.8186, 0.6456, ..., 0.8127, 0.5741, 0.4387]],

         [[0.6965, 0.5033, 0.8124, ..., 0.7958, 0.5631, 0.2824],
          [0.4693, 0.5840, 0.4974, ..., 0.4357, 0.6017, 0.2164],
          [0.5586, 0.2834, 0.5982, ..., 0.7697, 0.4989, 0.6309],
          ...,
          [0.5489, 0.7579, 0.6713, ..., 0.6297, 0.7694, 0.2560],
          [0.6636, 0.5977, 0.6842, ..., 0.6410, 0.2517, 0.3146],
          [0.6226, 0.4115, 0.5543, ..., 0.8064, 0.6572, 0.2819]],

         [[0.8928, 0.5078, 0.3673, ..., 0.4940, 0.7749, 0.2013],
          [0.4794, 0.5522, 0.6444, ..., 0.2302, 0.3957, 0.3785],
          [0.7415, 0.5251, 0.2500, ..., 0.3829, 0.5733, 0.4928],
          ...,
          [0.6961, 0.7784, 0.4826, ..., 0.6172, 0.4769, 0.3475],
          [0.5428, 0.6430, 0.2980, ..., 0.6795, 0.8927, 0.2451],
          [0.7771, 0.6398, 0.3207, ..., 0.4437, 0.6768, 0.3847]]]],
       device='cuda:0', grad_fn=<PermuteBackward>)
```
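The input has batch size 2 because summary() builds it with `torch.rand(2, *in_size)` (the "batch_size of 2 for batchnorm" comment), which is also why its values are uniform random numbers. The output is channels-last, [2, 14, 14, 30], while the last row of the summary table is BatchNorm2d-197 with [-1, 30, 14, 14]; together with `grad_fn=<PermuteBackward>` this suggests that resnet_yolo.py (not shown in this post) ends its forward() with a permute like the one below. That is an assumption, as is reading the 30 values per cell as the YOLOv1 layout of 2 boxes × 5 values + 20 Pascal VOC classes.

```python
import torch

# Stand-in for the last feature map (BatchNorm2d-197): batch 2, 30 channels, 14x14 grid.
feat = torch.rand(2, 30, 14, 14, requires_grad=True)

# Assumed final step of the model's forward(): move channels to the last axis.
out = feat.permute(0, 2, 3, 1)

print(out.shape)                   # torch.Size([2, 14, 14, 30])
print(type(out.grad_fn).__name__)  # PermuteBackward (PermuteBackward0 on newer PyTorch)

# Each of the 14 x 14 grid cells now holds one 30-value vector.
cell = out[0, 3, 5]
print(cell.shape)                  # torch.Size([30])
# permute only reorders axes, so the cell equals the channel slice of the feature map.
print(torch.equal(cell, feat[0, :, 3, 5]))  # True
```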

```python
def _call_impl(self, *input, **kwargs):
    # 1. Forward pre-hooks: a non-None result replaces the input.
    for hook in itertools.chain(
            _global_forward_pre_hooks.values(),
            self._forward_pre_hooks.values()):
        result = hook(self, input)
        if result is not None:
            if not isinstance(result, tuple):
                result = (result,)
            input = result
    # 2. The module's own forward().
    if torch._C._get_tracing_state():
        result = self._slow_forward(*input, **kwargs)
    else:
        result = self.forward(*input, **kwargs)
    # 3. Forward hooks (torchsummary's hook runs here): a non-None result replaces the output.
    for hook in itertools.chain(
            _global_forward_hooks.values(),
            self._forward_hooks.values()):
        hook_result = hook(self, input, result)
        if hook_result is not None:
            result = hook_result
    # 4. Backward hooks are attached to the grad_fn of the first output tensor.
    if (len(self._backward_hooks) > 0) or (len(_global_backward_hooks) > 0):
        var = result
        while not isinstance(var, torch.Tensor):
            if isinstance(var, dict):
                var = next((v for v in var.values() if isinstance(v, torch.Tensor)))
            else:
                var = var[0]
        grad_fn = var.grad_fn
        if grad_fn is not None:
            for hook in itertools.chain(
                    _global_backward_hooks.values(),
                    self._backward_hooks.values()):
                wrapper = functools.partial(hook, self)
                functools.update_wrapper(wrapper, hook)
                grad_fn.register_hook(wrapper)
    return result
```

Running resnet_yolo.py:

```
/home/wangbin/anaconda3/envs/deep_learning/bin/python3.7 /media/wangbin/F/深度学习_程序/yolo_practice/resnet_yolo.py
```

```
----------------------------------------------------------------
Layer (type) Output Shape Param #
================================================================
Conv2d-1 [-1, 64, 209, 209] 9,408
BatchNorm2d-2 [-1, 64, 209, 209] 128
ReLU-3 [-1, 64, 209, 209] 0
MaxPool2d-4 [-1, 64, 105, 105] 0
Conv2d-5 [-1, 64, 105, 105] 4,096
BatchNorm2d-6 [-1, 64, 105, 105] 128
ReLU-7 [-1, 64, 105, 105] 0
Conv2d-8 [-1, 64, 105, 105] 36,864
BatchNorm2d-9 [-1, 64, 105, 105] 128
ReLU-10 [-1, 64, 105, 105] 0
Conv2d-11 [-1, 256, 105, 105] 16,384
BatchNorm2d-12 [-1, 256, 105, 105] 512
Conv2d-13 [-1, 256, 105, 105] 16,384
BatchNorm2d-14 [-1, 256, 105, 105] 512
ReLU-15 [-1, 256, 105, 105] 0
Bottleneck-16 [-1, 256, 105, 105] 0
Conv2d-17 [-1, 64, 105, 105] 16,384
BatchNorm2d-18 [-1, 64, 105, 105] 128
ReLU-19 [-1, 64, 105, 105] 0
Conv2d-20 [-1, 64, 105, 105] 36,864
BatchNorm2d-21 [-1, 64, 105, 105] 128
ReLU-22 [-1, 64, 105, 105] 0
Conv2d-23 [-1, 256, 105, 105] 16,384
BatchNorm2d-24 [-1, 256, 105, 105] 512
ReLU-25 [-1, 256, 105, 105] 0
Bottleneck-26 [-1, 256, 105, 105] 0
Conv2d-27 [-1, 64, 105, 105] 16,384
BatchNorm2d-28 [-1, 64, 105, 105] 128
ReLU-29 [-1, 64, 105, 105] 0
Conv2d-30 [-1, 64, 105, 105] 36,864
BatchNorm2d-31 [-1, 64, 105, 105] 128
ReLU-32 [-1, 64, 105, 105] 0
Conv2d-33 [-1, 256, 105, 105] 16,384
BatchNorm2d-34 [-1, 256, 105, 105] 512
ReLU-35 [-1, 256, 105, 105] 0
Bottleneck-36 [-1, 256, 105, 105] 0
Conv2d-37 [-1, 128, 105, 105] 32,768
BatchNorm2d-38 [-1, 128, 105, 105] 256
ReLU-39 [-1, 128, 105, 105] 0
Conv2d-40 [-1, 128, 53, 53] 147,456
BatchNorm2d-41 [-1, 128, 53, 53] 256
ReLU-42 [-1, 128, 53, 53] 0
Conv2d-43 [-1, 512, 53, 53] 65,536
BatchNorm2d-44 [-1, 512, 53, 53] 1,024
Conv2d-45 [-1, 512, 53, 53] 131,072
BatchNorm2d-46 [-1, 512, 53, 53] 1,024
ReLU-47 [-1, 512, 53, 53] 0
Bottleneck-48 [-1, 512, 53, 53] 0
Conv2d-49 [-1, 128, 53, 53] 65,536
BatchNorm2d-50 [-1, 128, 53, 53] 256
ReLU-51 [-1, 128, 53, 53] 0
Conv2d-52 [-1, 128, 53, 53] 147,456
BatchNorm2d-53 [-1, 128, 53, 53] 256
ReLU-54 [-1, 128, 53, 53] 0
Conv2d-55 [-1, 512, 53, 53] 65,536
BatchNorm2d-56 [-1, 512, 53, 53] 1,024
ReLU-57 [-1, 512, 53, 53] 0
Bottleneck-58 [-1, 512, 53, 53] 0
Conv2d-59 [-1, 128, 53, 53] 65,536
BatchNorm2d-60 [-1, 128, 53, 53] 256
ReLU-61 [-1, 128, 53, 53] 0
Conv2d-62 [-1, 128, 53, 53] 147,456
BatchNorm2d-63 [-1, 128, 53, 53] 256
ReLU-64 [-1, 128, 53, 53] 0
Conv2d-65 [-1, 512, 53, 53] 65,536
BatchNorm2d-66 [-1, 512, 53, 53] 1,024
ReLU-67 [-1, 512, 53, 53] 0
Bottleneck-68 [-1, 512, 53, 53] 0
Conv2d-69 [-1, 128, 53, 53] 65,536
BatchNorm2d-70 [-1, 128, 53, 53] 256
ReLU-71 [-1, 128, 53, 53] 0
Conv2d-72 [-1, 128, 53, 53] 147,456
BatchNorm2d-73 [-1, 128, 53, 53] 256
ReLU-74 [-1, 128, 53, 53] 0
Conv2d-75 [-1, 512, 53, 53] 65,536
BatchNorm2d-76 [-1, 512, 53, 53] 1,024
ReLU-77 [-1, 512, 53, 53] 0
Bottleneck-78 [-1, 512, 53, 53] 0
Conv2d-79 [-1, 256, 53, 53] 131,072
BatchNorm2d-80 [-1, 256, 53, 53] 512
ReLU-81 [-1, 256, 53, 53] 0
Conv2d-82 [-1, 256, 27, 27] 589,824
BatchNorm2d-83 [-1, 256, 27, 27] 512
ReLU-84 [-1, 256, 27, 27] 0
Conv2d-85 [-1, 1024, 27, 27] 262,144
BatchNorm2d-86 [-1, 1024, 27, 27] 2,048
Conv2d-87 [-1, 1024, 27, 27] 524,288
BatchNorm2d-88 [-1, 1024, 27, 27] 2,048
ReLU-89 [-1, 1024, 27, 27] 0
Bottleneck-90 [-1, 1024, 27, 27] 0
Conv2d-91 [-1, 256, 27, 27] 262,144
BatchNorm2d-92 [-1, 256, 27, 27] 512
ReLU-93 [-1, 256, 27, 27] 0
Conv2d-94 [-1, 256, 27, 27] 589,824
BatchNorm2d-95 [-1, 256, 27, 27] 512
ReLU-96 [-1, 256, 27, 27] 0
Conv2d-97 [-1, 1024, 27, 27] 262,144
BatchNorm2d-98 [-1, 1024, 27, 27] 2,048
ReLU-99 [-1, 1024, 27, 27] 0
Bottleneck-100 [-1, 1024, 27, 27] 0
Conv2d-101 [-1, 256, 27, 27] 262,144
BatchNorm2d-102 [-1, 256, 27, 27] 512
ReLU-103 [-1, 256, 27, 27] 0
Conv2d-104 [-1, 256, 27, 27] 589,824
BatchNorm2d-105 [-1, 256, 27, 27] 512
ReLU-106 [-1, 256, 27, 27] 0
Conv2d-107 [-1, 1024, 27, 27] 262,144
BatchNorm2d-108 [-1, 1024, 27, 27] 2,048
ReLU-109 [-1, 1024, 27, 27] 0
Bottleneck-110 [-1, 1024, 27, 27] 0
Conv2d-111 [-1, 256, 27, 27] 262,144
BatchNorm2d-112 [-1, 256, 27, 27] 512
ReLU-113 [-1, 256, 27, 27] 0
Conv2d-114 [-1, 256, 27, 27] 589,824
BatchNorm2d-115 [-1, 256, 27, 27] 512
ReLU-116 [-1, 256, 27, 27] 0
Conv2d-117 [-1, 1024, 27, 27] 262,144
BatchNorm2d-118 [-1, 1024, 27, 27] 2,048
ReLU-119 [-1, 1024, 27, 27] 0
Bottleneck-120 [-1, 1024, 27, 27] 0
Conv2d-121 [-1, 256, 27, 27] 262,144
BatchNorm2d-122 [-1, 256, 27, 27] 512
ReLU-123 [-1, 256, 27, 27] 0
Conv2d-124 [-1, 256, 27, 27] 589,824
BatchNorm2d-125 [-1, 256, 27, 27] 512
ReLU-126 [-1, 256, 27, 27] 0
Conv2d-127 [-1, 1024, 27, 27] 262,144
BatchNorm2d-128 [-1, 1024, 27, 27] 2,048
ReLU-129 [-1, 1024, 27, 27] 0
Bottleneck-130 [-1, 1024, 27, 27] 0
Conv2d-131 [-1, 256, 27, 27] 262,144
BatchNorm2d-132 [-1, 256, 27, 27] 512
ReLU-133 [-1, 256, 27, 27] 0
Conv2d-134 [-1, 256, 27, 27] 589,824
BatchNorm2d-135 [-1, 256, 27, 27] 512
ReLU-136 [-1, 256, 27, 27] 0
Conv2d-137 [-1, 1024, 27, 27] 262,144
BatchNorm2d-138 [-1, 1024, 27, 27] 2,048
ReLU-139 [-1, 1024, 27, 27] 0
Bottleneck-140 [-1, 1024, 27, 27] 0
Conv2d-141 [-1, 512, 27, 27] 524,288
BatchNorm2d-142 [-1, 512, 27, 27] 1,024
ReLU-143 [-1, 512, 27, 27] 0
Conv2d-144 [-1, 512, 14, 14] 2,359,296
BatchNorm2d-145 [-1, 512, 14, 14] 1,024
ReLU-146 [-1, 512, 14, 14] 0
Conv2d-147 [-1, 2048, 14, 14] 1,048,576
BatchNorm2d-148 [-1, 2048, 14, 14] 4,096
Conv2d-149 [-1, 2048, 14, 14] 2,097,152
BatchNorm2d-150 [-1, 2048, 14, 14] 4,096
ReLU-151 [-1, 2048, 14, 14] 0
Bottleneck-152 [-1, 2048, 14, 14] 0
Conv2d-153 [-1, 512, 14, 14] 1,048,576
BatchNorm2d-154 [-1, 512, 14, 14] 1,024
ReLU-155 [-1, 512, 14, 14] 0
Conv2d-156 [-1, 512, 14, 14] 2,359,296
BatchNorm2d-157 [-1, 512, 14, 14] 1,024
ReLU-158 [-1, 512, 14, 14] 0
Conv2d-159 [-1, 2048, 14, 14] 1,048,576
BatchNorm2d-160 [-1, 2048, 14, 14] 4,096
ReLU-161 [-1, 2048, 14, 14] 0
Bottleneck-162 [-1, 2048, 14, 14] 0
Conv2d-163 [-1, 512, 14, 14] 1,048,576
BatchNorm2d-164 [-1, 512, 14, 14] 1,024
ReLU-165 [-1, 512, 14, 14] 0
Conv2d-166 [-1, 512, 14, 14] 2,359,296
BatchNorm2d-167 [-1, 512, 14, 14] 1,024
ReLU-168 [-1, 512, 14, 14] 0
Conv2d-169 [-1, 2048, 14, 14] 1,048,576
BatchNorm2d-170 [-1, 2048, 14, 14] 4,096
ReLU-171 [-1, 2048, 14, 14] 0
Bottleneck-172 [-1, 2048, 14, 14] 0
Conv2d-173 [-1, 256, 14, 14] 524,288
BatchNorm2d-174 [-1, 256, 14, 14] 512
Conv2d-175 [-1, 256, 14, 14] 589,824
BatchNorm2d-176 [-1, 256, 14, 14] 512
Conv2d-177 [-1, 256, 14, 14] 65,536
BatchNorm2d-178 [-1, 256, 14, 14] 512
Conv2d-179 [-1, 256, 14, 14] 524,288
BatchNorm2d-180 [-1, 256, 14, 14] 512
detnet_bottleneck-181 [-1, 256, 14, 14] 0
Conv2d-182 [-1, 256, 14, 14] 65,536
BatchNorm2d-183 [-1, 256, 14, 14] 512
Conv2d-184 [-1, 256, 14, 14] 589,824
BatchNorm2d-185 [-1, 256, 14, 14] 512
Conv2d-186 [-1, 256, 14, 14] 65,536
BatchNorm2d-187 [-1, 256, 14, 14] 512
detnet_bottleneck-188 [-1, 256, 14, 14] 0
Conv2d-189 [-1, 256, 14, 14] 65,536
BatchNorm2d-190 [-1, 256, 14, 14] 512
Conv2d-191 [-1, 256, 14, 14] 589,824
BatchNorm2d-192 [-1, 256, 14, 14] 512
Conv2d-193 [-1, 256, 14, 14] 65,536
BatchNorm2d-194 [-1, 256, 14, 14] 512
detnet_bottleneck-195 [-1, 256, 14, 14] 0
Conv2d-196 [-1, 30, 14, 14] 69,120
BatchNorm2d-197 [-1, 30, 14, 14] 60
================================================================
Total params: 26,728,060
Trainable params: 26,728,060
Non-trainable params: 0
----------------------------------------------------------------
Input size (MB): 2.00
Forward/backward pass size (MB): 1038.47
Params size (MB): 101.96
Estimated Total Size (MB): 1142.43
----------------------------------------------------------------

Process finished with exit code 0
```
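Several numbers in this table can be reproduced by hand. The sketch below assumes torchvision-style ResNet-50 downsampling (7x7/stride 2/padding 3 stem conv, 3x3/stride 2/padding 1 max-pool, and stride 2 in the 3x3 conv that opens each later stage), which is consistent with the shapes and parameter counts shown but is not confirmed by the post. The memory lines follow the formulas in summary(): 4 bytes per number, and because batch_size defaults to -1, the abs() leaves the size of a single input sample.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a Conv2d/MaxPool2d along one axis (dilation 1, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1

# Spatial size after each downsampling layer, starting from the 418x418 input.
sizes = [418]
for kernel, stride, padding in [(7, 2, 3),   # Conv2d-1
                                (3, 2, 1),   # MaxPool2d-4
                                (3, 2, 1),   # Conv2d-40
                                (3, 2, 1),   # Conv2d-82
                                (3, 2, 1)]:  # Conv2d-144
    sizes.append(conv_out(sizes[-1], kernel, stride, padding))
print(sizes)  # [418, 209, 105, 53, 27, 14]

# Parameter counts of a few rows: conv weight = out_ch * in_ch * k * k (no bias),
# BatchNorm2d = weight + bias = 2 * channels.
print(64 * 3 * 7 * 7)     # 9408    (Conv2d-1)
print(512 * 512 * 3 * 3)  # 2359296 (Conv2d-144)
print(30 * 256 * 3 * 3)   # 69120   (Conv2d-196)
print(2 * 30)             # 60      (BatchNorm2d-197)

# Memory estimates as computed in summary(): 4 bytes per float32 value.
MB = 1024 ** 2
batch_size = -1                                       # summary()'s default
input_mb = abs(3 * 418 * 418 * batch_size * 4.0 / MB)
params_mb = 26_728_060 * 4.0 / MB
print(f"{input_mb:.2f}")   # 2.00
print(f"{params_mb:.2f}")  # 101.96
```

The estimated total is just the sum of the three size lines: 2.00 + 1038.47 + 101.96 = 1142.43 MB. The forward/backward figure doubles every hooked layer's output size "for gradients", so it is a rough upper-level estimate rather than a measured allocation.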
