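1. Displaying column headers

By default, query results in the Hive command line are printed without column names (a header row).

Enable the header with: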

set hive.cli.print.header=true;

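As the figure below shows, each column name is now printed with a table-name prefix. The prefix is unnecessary here and only makes the output harder to read.

Turn the prefix off with: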

set hive.resultset.use.unique.column.names=false;

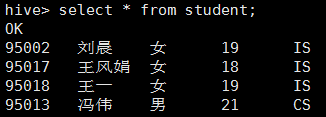

As the figure below shows, the query results are now much easier to read:

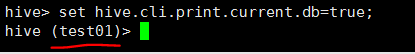

To have the prompt show the database you are currently working in, enter the following at the command line:

set hive.cli.print.current.db=true;

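The prompt then shows the current database in parentheses, for example hive (test01)> when "test01" is the database in use.

These settings make query output look the way we want, but they only last for the current session: exit the command line, start it again, and they are gone. Is there a way to make them permanent? There is.

Add the same settings to Hive's configuration file, hive-site.xml, inside its <configuration> element: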

<property>

<name>hive.cli.print.header</name>

<value>true</value>

<description>Whether to print the names of the columns in query output.</description>

</property>

<property>

<name>hive.resultset.use.unique.column.names</name>

<value>false</value>

<description>Print column names in query output without the table-name prefix.</description>

</property>

<property>

<name>hive.cli.print.current.db</name>

<value>true</value>

<description>Whether to include the current database in the Hive prompt.</description>

</property>
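After editing hive-site.xml it can be handy to confirm that the three properties are really there and spelled correctly. The following is a minimal sketch in Python, not part of Hive itself: the helper name `read_properties` and the embedded sample document are assumptions made for illustration, and a real check would read the actual hive-site.xml from your Hive conf directory instead.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample mirroring the properties above; a real hive-site.xml
# wraps all <property> elements in a single <configuration> root.
HIVE_SITE = """<configuration>
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.resultset.use.unique.column.names</name>
    <value>false</value>
  </property>
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
  </property>
</configuration>"""


def read_properties(xml_text):
    """Return a {name: value} dict for every <property> in a Hadoop-style config."""
    root = ET.fromstring(xml_text)
    return {
        prop.findtext("name"): prop.findtext("value")
        for prop in root.iter("property")
    }


props = read_properties(HIVE_SITE)
for name, value in sorted(props.items()):
    print(f"{name} = {value}")
```

Parsing the file also catches malformed XML, such as an unclosed <property> tag, before Hive is started.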

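2. Running Hive in local mode

Hive has supported local mode for task execution since version 0.7. Most Hadoop jobs need the full scalability that Hadoop provides in order to process large data sets. Sometimes, however, the input to a Hive query is very small, and in that case running the job locally saves a lot of time. For most such cases Hive can use local mode to run all of the tasks on a single machine, which noticeably shortens execution time for small data sets.

In other words, operations on small amounts of data can run locally, which is much faster than submitting them to the cluster.

Set the following parameter to enable Hive's automatic local mode: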

hive> set hive.exec.mode.local.auto=true;  -- default is false

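A job actually runs in local mode only if it meets all of the following conditions:

1. The job's total input size is smaller than hive.exec.mode.local.auto.inputbytes.max (default 128 MB).

2. The job's number of map tasks is smaller than hive.exec.mode.local.auto.tasks.max (default 4).

3. The job's number of reduce tasks is 0 or 1.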
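The three conditions can be written down as a single predicate. The sketch below is an illustration of the rule exactly as stated above, not Hive's actual implementation; the function name `eligible_for_local_mode` and its parameter names are hypothetical, and the defaults simply mirror the documented defaults of the two parameters.

```python
MB = 1024 * 1024


def eligible_for_local_mode(input_bytes, num_maps, num_reduces,
                            max_input_bytes=128 * MB, max_tasks=4):
    """Apply the three local-mode conditions listed above.

    max_input_bytes stands in for hive.exec.mode.local.auto.inputbytes.max
    and max_tasks for hive.exec.mode.local.auto.tasks.max.
    """
    return (
        input_bytes < max_input_bytes      # condition 1: small input
        and num_maps < max_tasks           # condition 2: few map tasks
        and num_reduces in (0, 1)          # condition 3: at most one reducer
    )


# A tiny job: a few KB of input, one mapper, one reducer (illustrative numbers).
print(eligible_for_local_mode(9636, 1, 1))
# A job with 1 GB of input stays on the cluster.
print(eligible_for_local_mode(1024 * MB, 1, 1))
# So does a job that needs two reducers, however small its input.
print(eligible_for_local_mode(9636, 1, 2))
```

All three conditions must hold at once, so a job with tiny input that still needs several reducers is submitted to the cluster as usual.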

hive (test01)> create table student02

> (id int, name string, gender string, age int, dept string);

OK

Time taken: 1.571 seconds

-- Submitted to the cluster, the job takes 329.992 seconds

hive (test01)> insert into table student02

> select * from student limit 3;

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = xh_20191113211935_6054855b-c713-4213-b371-217d7450bd2f

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Starting Job = job_1573609225964_0001, Tracking URL = http://hadoop:8088/proxy/application_1573609225964_0001/

Kill Command = /home/xh/hadoop/hadoop-2.7.7/bin/hadoop job -kill job_1573609225964_0001

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1

2019-11-13 21:21:36,447 Stage-1 map = 0%, reduce = 0%

2019-11-13 21:22:40,492 Stage-1 map = 0%, reduce = 0%

2019-11-13 21:23:25,780 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 2.84 sec

2019-11-13 21:24:18,076 Stage-1 map = 100%, reduce = 67%, Cumulative CPU 5.36 sec

2019-11-13 21:24:30,373 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 9.03 sec

MapReduce Total cumulative CPU time: 9 seconds 260 msec

Ended Job = job_1573609225964_0001

Loading data to table test01.student02

MapReduce Jobs Launched:

Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 9.26 sec HDFS Read: 9636 HDFS Write: 144 SUCCESS

Total MapReduce CPU Time Spent: 9 seconds 260 msec

OK

_col0 _col1 _col2 _col3 _col4

Time taken: 329.992 seconds

-- With local mode enabled, the same job takes 28.157 seconds, roughly 11.7 times faster

hive (test01)> set hive.exec.mode.local.auto=true;

hive (test01)> insert into table student02

> select * from student limit 3;

Automatically selecting local only mode for query

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = xh_20191113212658_17016e5a-f566-465d-b9ba-b2839d1d70a6

Total jobs = 1

Launching Job 1 out of 1

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=<number>

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=<number>

In order to set a constant number of reducers:

set mapreduce.job.reduces=<number>

Job running in-process (local Hadoop)

2019-11-13 21:27:13,641 Stage-1 map = 0%, reduce = 0%

2019-11-13 21:27:19,846 Stage-1 map = 100%, reduce = 0%

2019-11-13 21:27:24,909 Stage-1 map = 100%, reduce = 100%

Ended Job = job_local2084706803_0001

Loading data to table test01.student02

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 2160 HDFS Write: 69240154 SUCCESS

Total MapReduce CPU Time Spent: 0 msec

OK

_col0 _col1 _col2 _col3 _col4

Time taken: 28.157 seconds
