LightDB 事前SQL兼容迁移评估工具在线评估(恒生电子内网可访问)

0.简介

随着信创数据库兴起,对于开发人员而言,可能需要学习新的sql方言,在工程实践中,往往需要面对sql改写的问题,大部分都是Oracle、Mysql转到其他数据库,目前针对Oracle/Mysql 转到LightDB,开发了一款小工具,静态扫描工程中的sql文件和Mybatis的xml文件,对常用语法给出评估改写意见,使用说明如下,下载页面已经置顶。

离线扫描

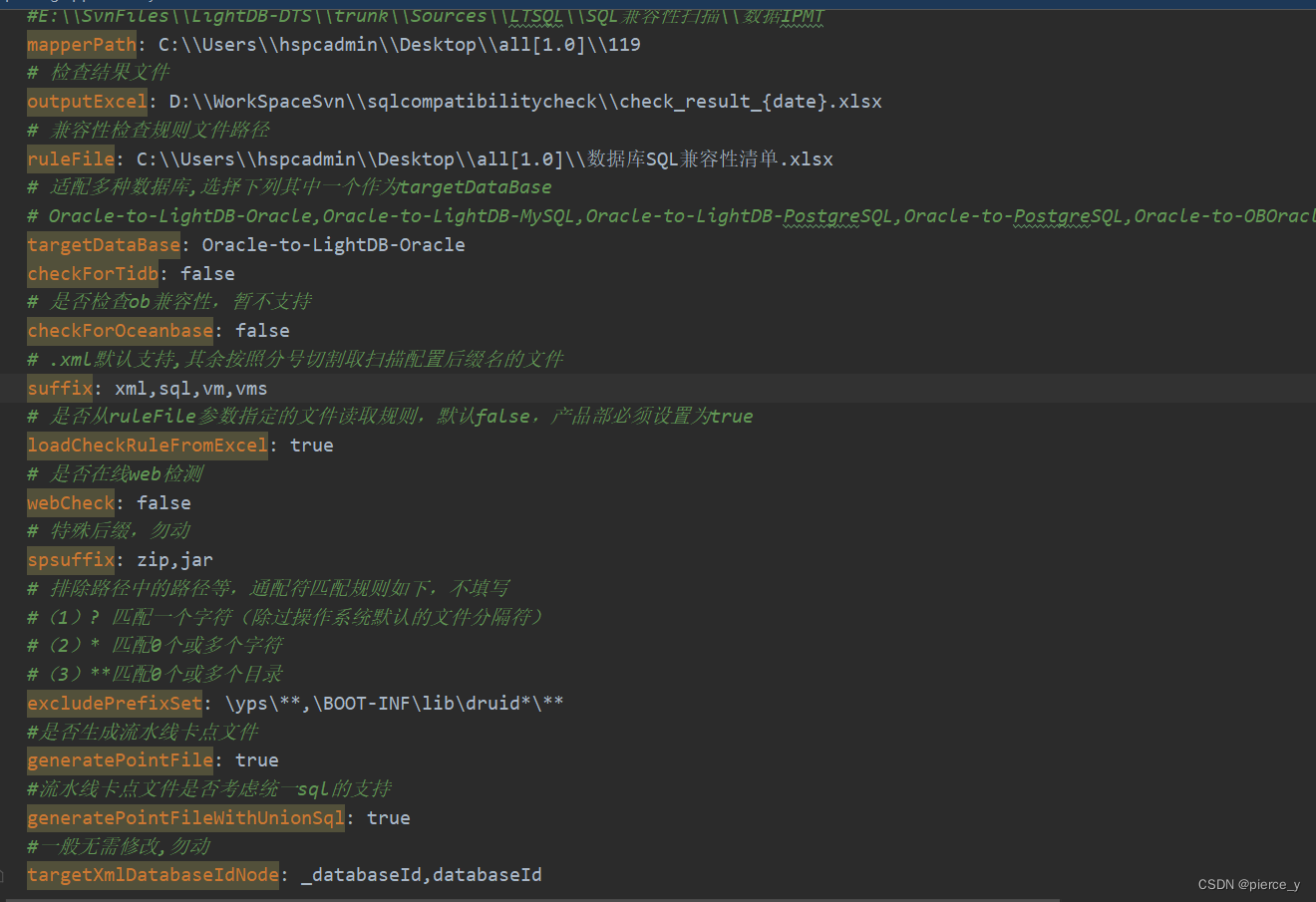

1.配置application.yaml

将yaml文件中sqlchecker.webCheck设置成false

配置工具包中的yaml文件:

修改mapperPath:主要是待扫描文件的目录,如果微服务比较多、模块多,可以配置工程的最外层目录

修改outputExcel: 主要是结果文件的位置,不要放在c盘就行

修改ruleFile:主要是扫描规则文件,就是工具包中数据库SQL兼容性清单.xlsx的文件绝对路径位置

suffix: 表示支持的文件的后缀名,注意尽量添加,xml比较特殊必扫,其他文件用的扫描规则都是以; 来分割文件,逐条扫描的

修改generatePointFile: true表示,对本次离线扫描会生成txt文件,里面记录一个值,0(代表本次扫描全部兼容),1(代表本次扫描有不兼容),方便流水线卡点文件

修改generatePointFileWithUnionSql: true表示,对本次离线扫描会生成txt文件,里面记录一个值,0(代表本次扫描全部兼容,或者统一SQL 可以解决替换),1(代表本次扫描有不兼容且统一SQL不支持解决的),方便流水线卡点文件。

注意是yaml文件,注意空格,所有的绝对路径不要有空格,其余数据勿动

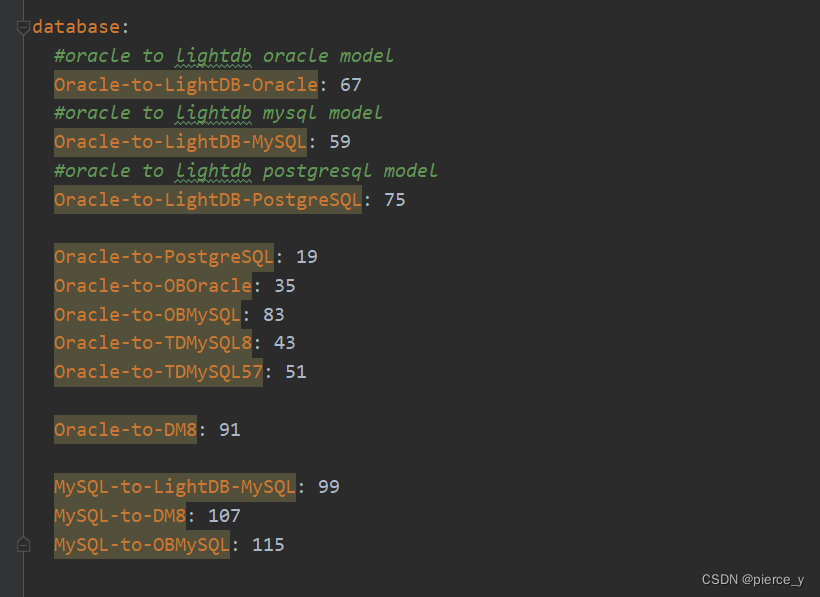

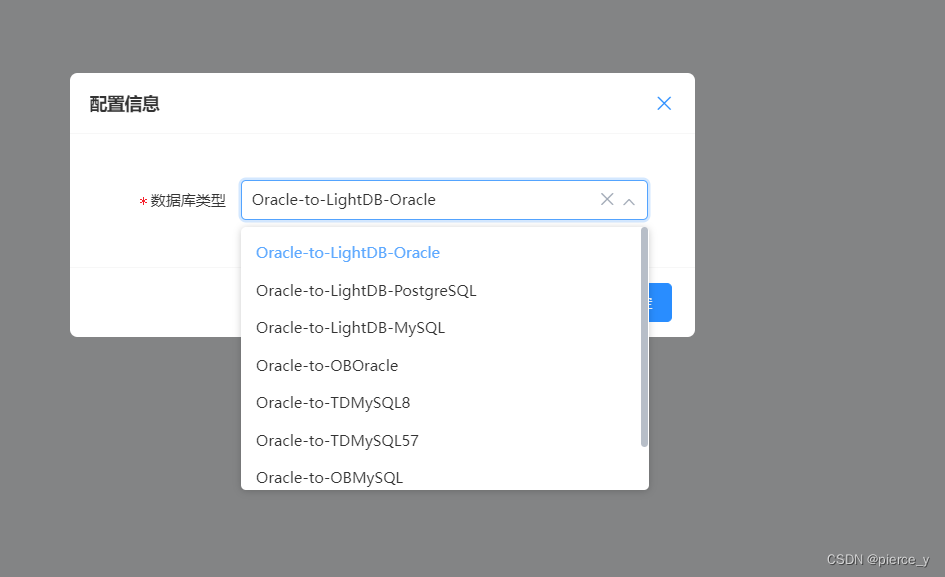

修改targetDataBase: 主要是兼容的目标信创库,名称来自与该文件的dataBase下数据库名称,如下图所示,其中Oracle-to-OBOracle,代表检测源端为Oracle语法去兼容目标端OceanBase的Oracle版本数据库的语法检测,Oracle-to-LightDB-Oracle表示检测源端Oracle语法去兼容目标端LightDB的Oracle模式数据库的语法检测,见名知意,同时注意后面配置的数字勿动。

targetXmlDatabaseIdNode:这个配置无需修改,只做说明,targetDataBase这个参数让我们选择了源端和目标端了,目前我们扫描的源端只能是Oracle或mysql了,对于扫描mybatis来说,mybatis里面会配置databaseId或者_databaseId来表示来区别不同的sql的支持,如果源端选择了Oracle-xxxxx,那么mybatis里面那些指明了databaseId并且非oracle的语句将不会被扫描的,当然了一般大家配置databaseId的值,为了便于理解都是写oracle或者mysql,不会写的太复杂的。

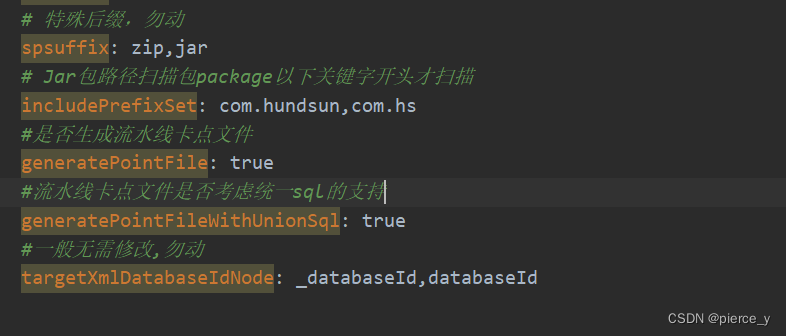

同时也可以扫描Jar包里面的.xml和.sql文件和.class文件,

在excludePrefixSet可配置路径,可剔除扫扫描路径无需扫描的文件等,例如不要扫描druid的jar里面的sql文件,在windows下可以配置 \BOOT-INF\lib\druid*\**等,规则可见yaml文件

如上图,可扫描含com.hundsun和com.hs开头的package的jar包,及其class上面的mybatis的select、update、insert、delete注解等

总结:可扫描

1. java代码文件夹中的.xml,.sql文件;

2. jar包里的.xml,.sql文件;

3. zip压缩包里的.xml, .sql文件;

4. jar中class里面Mybatis的select、update、insert、delete注解。

2.运行run.bat

运行停止之后获取outputExcel位置的文件,查看excel文件即可

在线扫描

1.配置application.yaml

将yaml文件中sqlchecker.webCheck设置成true

server.port配置对应的端口号,其余勿动.

2.使用

运行jar包(命令:java -jar LtMigrateAssist.jar),打开对应的页面(如:http://192.168.33.193:8766/),页面如下

使用说明见页面上Notice,目前支持扫描sql、xml文件,可支持扫描jar中java文件的mybatis注解。

同时在线扫描可以点击上图右上角的设置来配置目标库

例子:

扫描的sql文件如下,去兼容Postgresql数据库

create table t(col NUMBER(2));

create table t(col NUMBER(4));

create table t(col NUMBER(8));

create table t(col NUMBER(18));

create table t(col NUMBER(28));

create table t(col NUMBER(10,2));

create table t(col NUMBER);

create table t(col NUMBER(*));

create table t(col BFILE);

create table t(col BINARY_FLOAT);

create table t(col BINARY_DOUBLE);

create table t(col BLOB);

create table t(col CHAR(200));

create table t(col CHARACTER(200));

create table t(col CLOB);

create table t(col DATE);

create table t(col DECIMAL(10,2));

create table t(col DEC(10,2));

create table t(col DOUBLE PRECISION);

create table t(col FLOAT(10));

create table t(col INTEGER);

create table t(col INT);

create table t(col INTERVAL YEAR(4) TO MONTH);

create table t(col INTERVAL DAY(4) TO SECOND(2));

create table t(col LONG);

create table t(col LONG RAW);

create table t(col NCHAR(10));

create table t(col NCHAR VARYING(10));

create table t(col NCLOB);

create table t(col NUMERIC(10,2));

create table t(col NVARCHAR2(10));

create table t(col RAW(10));

create table t(col REAL);

create table t(col ROWID);

create table t(col SMALLINT);

create table t(col TIMESTAMP(6));

create table t(col TIMESTAMP(6) WITH TIME ZONE));

create table t(col UROWID(6));

create table t(col VARCHAR(10));

create table t(col VARCHAR2(10));

create table t(col XMLTYPE);

SELECT ABS(-15) "Absolute" FROM DUAL;

select bitand(2,1);

SELECT ceil(0) AS "ceil(0)", ceil(10) AS "ceil(10)", ceil(10.11) AS "ceil(10.11)", ceil(-10.11) AS "ceil(-10.11)" from dual;

SELECT exp(2) from dual;

SELECT floor(0) AS "floor(0)", floor(10) AS "floor(10)", floor(10.11) AS "floor(10.11)", floor(-10.11) AS "floor(-10.11)" from dual;

SELECT LN(3.0) AS "Natural Logarithm" from dual;

SELECT LOG(45) from dual;

SELECT MOD(29,3) from dual;

select power(2,3)from dual;

select DBMS_RANDOM.VALUE from dual SELECT DBMS_RANDOM.RANDOM from dual;

SELECT ROUND(3, 100) from dual;

select sign(20)from dual;

select sqrt(20)from dual;

select TRUNC(1.5) from dual;

select * from t where a is null;

select chr(65) from dual;

select concat('6','a')from dual;

select initcap( 'heeKKo') from dual;

SELECT LOWER('heeKKo')from dual;

select lpad('test',10,'ee') from dual;

select ltrim('abcccabddee','abc') from dual;

select regexp_replace('你好hello世界abc', '[A-Za-z]+') from dual;

SELECT REGEXP_SUBSTR('11a22A33a','[^A]+',1,1,'i') AS STR FROM DUAL;

select replace('123456789','4','88888888' ) from dual;

select rpad('tech on the net', 16, 'z') from dual;

select trim(leading 'a' from 'abYaYba') l1 from dual;

select substr('1234',0,3) as re from dual;

select trim(trailing 'x' from 'dylanx') "test_trim" from dual;

select upper('daHHqqq') from dual;

SELECT REVERSE('abc') FROM DUAL;

SELECT ASCII('a') FROM DUAL;

SELECT INSTR('high','ig') FROM DUAL ;

SELECT LENGTH('high') FROM DUAL;

SELECT REGEXP_INSTR('500 Oracle Parkway, Redwood Shores, CA', '[^ ]+', 1, 6) "REGEXP_INSTR" FROM DUAL;

SELECT ADD_MONTHS(SYSDATE , 1) FROM dual;

SELECT CURRENT_DATE FROM DUAL;

SELECT CURRENT_TIMESTAMP(6) FROM DUAL ;

SELECT EXTRACT(month FROM order_date) "Month", COUNT(order_date) "No. of Orders" FROM orders;

SELECT LAST_DAY(SYSDATE) "Last" FROM DUAL;

SELECT MONTHS_BETWEEN (TO_DATE('02-02-1995','MM-DD-YYYY'), TO_DATE('01-01-1995','MM-DD-YYYY') ) "Months" FROM DUAL;

SELECT ROUND (to_date('2002-08-26','yyyy-mm-dd')) "New Year" FROM DUAL;

SELECT SYSDATE FROM DUAL;

SELECT TO_TIMESTAMP ('10-Sept-02 14:10:10.123000' DEFAULT NULL ON CONVERSION ERROR, 'DD-Mon-RR HH24:MI:SS.FF 'NLS_DATE_LANGUAGE = American') "Value" FROM DUAL;

SELECT employee_id, last_name FROM employees WHERE hire_date + TO_DSINTERVAL('100 00:00:00') <= DATE '2002-11-01' ORDER BY employee_id;

SELECT TO_YMINTERVAL('1-2') FROM DUAL;

select trunc(sysdate) from dual;

SELECT GREATEST(2, 5, 12, 3, 16, 8, 9) A FROM DUAL;

SELECT LEAST(2, 5, 12, 3, 16, 8, 9) A FROM DUAL;

select bin_to_num(1,1,1,1) num from dual;

select to_number('123.45') + 2 from dual;

select sysdate, sysdate + numtodsinterval(3, 'day') as num_value from dual;

SELECT SYSDATE,SYSDATE + NUMTOYMINTERVAL(1, 'YEAR') FROM DUAL;

select to_timestamp(1628088734) from dual;

SELECT TIMESTAMP_TO_SCN(order_date) FROM orders WHERE order_id = 5000;

select to_char(timestamp '2002-04-20 17:31:12.66', 'HH12:MI:SS') FROM DUAL;

SELECT TO_CLOB(docu, 873, 'text/xml') FROM media_tab;

SELECT TO_DATE( 'January 15, 1989, 11:00 A.M.', 'Month dd, YYYY, HH:MI A.M.', 'NLS_DATE_LANGUAGE = American') FROM DUAL;

SELECT TO_CHAR(TIMESTAMP '2009-01-01 00:00:00' + TO_DSINTERVAL('P100DT05H'), 'YYYY-MM-DD HH24:MI:SS') "Time Stamp" FROM DUAL;

SELECT TO_NCHAR(cust_last_name) FROM customers WHERE customer_id=103;

SELECT TO_NCHAR(order_date) FROM orders WHERE order_status > 9;

SELECT TO_NCHAR(customer_id) "NCHAR_Customer_ID" FROM orders WHERE order_status > 9;

SELECT TO_TIMESTAMP ('10-Sep-02 14:10:10.123000', 'DD-Mon-RR HH24:MI:SS.FF') FROM DUAL;

SELECT hire_date, hire_date + TO_YMINTERVAL('01-02') "14 months" FROM employees;

SELECT LPAD(' ', 2*level-1)||SYS_CONNECT_BY_PATH(last_name, '/') FROM employees;

SELECT product_id, DECODE (warehouse_id, 1, 'Southlake', 2, 'San Francisco', 3, 'New Jersey', 4, 'Seattle', 'Non domestic') "Location of inventory" FROM inventories WHERE product_id < 1775;

SELECT product_id, list_price, min_price, COALESCE(0.9*list_price, min_price, 5) "Sale" FROM product_information WHERE supplier_id = 102050;

SELECT e.last_name, NULLIF(e.job_id, j.job_id) "Old Job ID" FROM employees e, job_history j WHERE e.employee_id = j.employee_id ORDER BY last_name;

SELECT last_name, NVL(TO_CHAR(commission_pct), 'Not Applicable') "COMMISSION" FROM employees WHERE last_name LIKE 'B%' ORDER BY last_name;

SELECT SYS_CONTEXT ('hr_apps', 'group_no') "User Group" FROM DUAL;

SELECT SYS_GUID() FROM DUAL;

SELECT USER, UID FROM DUAL;

SELECT USERENV('LANGUAGE') "Language" FROM DUAL;

SELECT AVG(salary) "Average" FROM employees;

SELECT weight_class, CORR(list_price, min_price) FROM product_information GROUP BY weight_class;

SELECT COUNT(*) count, CORR_S(salary, commission_pct) commission, CORR_S(salary, employee_id) empid FROM employees;

SELECT COUNT(*) "Total" FROM employees;

SELECT job_id, COVAR_POP(SYSDATE-hire_date, salary) AS covar_pop FROM employees WHERE department_id in (50, 80) GROUP BY job_id;

SELECT job_id, COVAR_SAMP(SYSDATE-hire_date, salary) AS covar_samp FROM employees WHERE department_id in (50, 80) GROUP BY job_id;

SELECT CUME_DIST(15500, .05) WITHIN GROUP (ORDER BY salary, commission_pct) "Cume-Dist of 15500" FROM employees;

SELECT DENSE_RANK(15500, .05) WITHIN GROUP (ORDER BY salary DESC, commission_pct) "Dense Rank" FROM employees;

SELECT department_id, MIN(salary) KEEP (DENSE_RANK FIRST ORDER BY commission_pct) "Worst" FROM employees GROUP BY department_id;

SELECT department_id, MAX(salary) KEEP (DENSE_RANK LAST ORDER BY commission_pct) "Best" FROM employees GROUP BY department_id;

SELECT MAX(salary) "Maximum" FROM employees;

SELECT department_id, MEDIAN(salary) FROM employees GROUP BY department_id;

SELECT MIN(hire_date) "Earliest" FROM employees;

SELECT department_id, PERCENTILE_CONT(0.5) WITHIN GROUP (ORDER BY salary DESC) "Median cont" FROM employees GROUP BY department_id;

SELECT department_id, PERCENTILE_DISC(0.5) WITHIN GROUP (ORDER BY salary DESC) "Median disc" FROM employees GROUP BY department_id;

SELECT PERCENT_RANK(15000, .05) WITHIN GROUP (ORDER BY salary, commission_pct) "Percent-Rank" FROM employees;

SELECT RANK(15500, .05) WITHIN GROUP (ORDER BY salary, commission_pct) "Rank" FROM employees;

SELECT STDDEV(salary) "Deviation" FROM employees;

SELECT STDDEV_POP(amount_sold) "Pop", STDDEV_SAMP(amount_sold) "Samp" FROM sales;

SELECT department_id, last_name, hire_date, salary, STDDEV_SAMP(salary) OVER (PARTITION BY department_id ORDER BY hire_date ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS cum_sdev FROM employees ORDER BY department_id, last_name, hire_date, salary, cum_sdev;

SELECT manager_id, last_name, salary, SUM(salary) OVER (PARTITION BY manager_id ORDER BY salary RANGE UNBOUNDED PRECEDING) l_csum FROM employees ORDER BY manager_id, last_name, salary, l_csum;

SELECT t.calendar_month_desc, VAR_POP(SUM(s.amount_sold)) OVER (ORDER BY t.calendar_month_desc) "Var_Pop", VAR_SAMP(SUM(s.amount_sold)) OVER (ORDER BY t.calendar_month_desc) "Var_Samp" FROM sales s, times t WHERE s.time_id = t.time_id AND t.calendar_year = 1998 GROUP BY t.calendar_month_desc ORDER BY t.calendar_month_desc, "Var_Pop", "Var_Samp";

SELECT VAR_SAMP(salary) FROM employees;

SELECT last_name, salary, VARIANCE(salary) OVER (ORDER BY hire_date) "Variance" FROM employees WHERE department_id = 30 ORDER BY last_name, salary, "Variance";

SELECT JSON_QUERY('[0,1,2,3,4]', '$[3]' WITH WRAPPER) AS value FROM DUAL;

SELECT t.* FROM j_purchaseOrder LEFT OUTER JOIN JSON_TABLE(po_document COLUMNS(PONumber, Reference, Requestor)) t ON 1=1;

SELECT JSON_VALUE('{firstname:"John"}', '$.lastname' DEFAULT 'No last name found' ON ERROR) AS "Last Name" FROM DUAL;

SELECT JSON_ARRAY ( JSON_OBJECT('percentage' VALUE .50), JSON_ARRAY(1,2,3), 100, 'California', null NULL ON NULL ) "JSON Array Example" FROM DUAL;

SELECT JSON_ARRAYAGG(id ORDER BY id RETURNING VARCHAR2(100)) ID_NUMBERS FROM id_table;

SELECT JSON_OBJECT(eMail) FROM employees WHERE employee_id = 140;

SELECT JSON_OBJECTAGG(KEY department_name VALUE department_id) "Department Numbers" FROM departments WHERE department_id <= 30;

SELECT department_id "Dept", hire_date "Date", last_name "Name", LISTAGG(last_name, '; ') WITHIN GROUP (ORDER BY hire_date, last_name) OVER (PARTITION BY department_id) as "Emp_list" FROM employees WHERE hire_date < '01-SEP-2003' ORDER BY "Dept", "Date", "Name";

SELECT last_name, salary, NTILE(4) OVER (ORDER BY salary DESC) AS quartile FROM employees WHERE department_id = 100 ORDER BY last_name, salary, quartile;

SELECT prod_id, channel_id, MIN(amount_sold), NTH_VALUE(MIN(amount_sold), 2) OVER (PARTITION BY prod_id ORDER BY channel_id ROWS BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) nv FROM sales WHERE prod_id BETWEEN 13 and 16 GROUP BY prod_id, channel_id;

SELECT employee_id, last_name, salary, hire_date, FIRST_VALUE(last_name) OVER (ORDER BY salary ASC RANGE UNBOUNDED PRECEDING) AS fv FROM (SELECT * FROM employees WHERE department_id = 90 ORDER BY hire_date DESC);

SELECT hire_date, last_name, salary, LAG(salary, 1, 0) OVER (ORDER BY hire_date) AS prev_sal FROM employees WHERE job_id = 'PU_CLERK' ORDER BY hire_date;

SELECT employee_id, last_name, salary, hire_date, LAST_VALUE(hire_date) OVER (ORDER BY salary DESC RANGE BETWEEN UNBOUNDED PRECEDING AND UNBOUNDED FOLLOWING) AS lv FROM (SELECT * FROM employees WHERE department_id = 90 ORDER BY hire_date DESC);

SELECT hire_date, last_name, LEAD(hire_date, 1) OVER (ORDER BY hire_date) AS "NextHired" FROM employees WHERE department_id = 30 ORDER BY hire_date;

SELECT department_id, first_name, last_name, salary FROM ( SELECT department_id, first_name, last_name, salary, ROW_NUMBER() OVER (PARTITION BY department_id ORDER BY salary desc) rn FROM employees ) WHERE rn <= 3 ORDER BY department_id, salary DESC, last_name;

SELECT A.dept,MAX(A.salaries) KEEP(DENSE_RANK FIRST ORDER BY A.age) AS max_sal FROM workers A GROUP BY A.dept;

call get_sal(7902);

select 1 from dual;

select deptno,job,sum(sal) from emp group by rollup(deptno,job) order by deptno;

SELECT distinct name, credit_limit,address FROM customers ORDER BY 2 DESC, 1;

SELECT customer_id, name FROM customers ORDER BY UPPER( name );

SELECT department_id, MIN(salary), MAX (salary) FROM employees WHERE job_id = 'PU_CLERK' GROUP BY department_id Having MIN(salary) > 1000 ORDER BY department_id OFFSET 10 ROWS FETCH NEXT 10 ROWS;

select * from employees partition(P1);

select * from employees for update;

select * from employees for update nowait;

select * from employees for update wait 3;

select * from employees for update skip locked;

LOCK TABLE employees IN SHARE MODE;

select * from employees for update of a,b;

SELECT * FROM orders INNER JOIN order_items ON order_items.order_id = orders.order_id;

SELECT * FROM orders,order_items where order_items.order_id = orders.order_id;

SELECT * FROM orders CROSS JOIN order_items;

SELECT * FROM orders,order_items;

SELECT * FROM orders LEFT JOIN order_items ON order_items.order_id = orders.order_id;

SELECT * FROM t1 LEFT JOIN t2 ON t1.a = t2.b UNION SELECT * FROM t1 RIGHT JOIN t2 ON t1.a = t2.b;

SELECT * FROM t1 LEFT JOIN (select * from t2) t2 ON t1.a = t2.b;

SELECT * FROM t1 RIGHT JOIN t2 ON t1.a = t2.b;

SELECT * FROM sq1 WHERE num = (SELECT MAX(num)/10 FROM sq2);

SELECT name,age FROM staff WHERE (name,age) IN (SELECT name,age FROM customer);

SELECT col1 FROM t1 WHERE EXISTS (SELECT * FROM t2 WHERE t1.id = t2.id);

SELECT * FROM sq1 WHERE NOT EXISTS (SELECT * FROM sq2 GROUP BY num2 HAVING MIN(num2)=40);

SELECT AVG(sq_sum) FROM (SELECT SUM(score) AS sq_sum FROM student GROUP BY name) t;

SELECT supplier_id FROM suppliers INTERSECT SELECT supplier_id FROM order_details;

SELECT * FROM a UNION SELECT * FROM b;

SELECT * FROM a UNION ALL SELECT * FROM b;

select * from A minus select * from B;

WITH ancestors AS ( SELECT * FROM folks WHERE name="Alex" UNION ALL SELECT f.* FROM folks f, ancestors a WHERE f.id = a.father OR f.id = a.mother ) SELECT * FROM ancestors;

select * from (with a as (select * from b) select * from a) c;

SELECT t1.a, t1.b FROM t1, t2 WHERE t1.a > t2.c AND t2.c IN(WITH t AS (SELECT * FROM t1 WHERE t1.a < 5) SELECT t2.c FROM t2, t WHERE t2.c = t.a);

WITH engineers AS ( SELECT * FROM employees WHERE dept IN('Development','Support') ), eu_engineers AS ( SELECT * FROM engineers WHERE country IN('NL') ) SELECT * FROM eu_engineers;

;

with org_chart ( employee_id, first_name, last_name, hire_date, manager_id, lvl ) as ( select employee_id, first_name, last_name, hire_date, manager_id, 1 lvl from employees where manager_id is null union all select e.employee_id, e.first_name, e.last_name, e.hire_date, e.manager_id, oc.lvl + 1 from org_chart oc join employees e on e.manager_id = oc.employee_id ) search depth first by hire_date set hire_seq select * from org_chart order by hire_seq;

;

;

SELECT country,prod,year,s FROM sales_view_ref MODEL PARTITION BY (country) DIMENSION BY (prod, year) MEASURES (sale s) IGNORE NAV UNIQUE DIMENSION RULES UPSERT SEQUENTIAL ORDER ( s[prod='Mouse Pad', year=2001] = s['Mouse Pad', 1999] + s['Mouse Pad', 2000], s['Standard Mouse', 2002] = s['Standard Mouse', 2001] ) ORDER BY country, prod, year;

TRUNCATE TABLE t;

ALTER INDEX idx DISABLE;

CREATE INDEX name_ix ON t (name);

CREATE TABLE new_t AS SELECT a, b FROM t WHERE b >= '2022-01-01';

CREATE PRIVATE TEMPORARY TABLE tmp_t AS SELECT a, b FROM t WHERE b >= '2022-01-01';

CREATE TABLE t (a NUMBER NULL, b NUMBER(5,2) NOT NULL, c NUMBER(4) DEFAULT 1 NOT NULL);

CREATE TABLE t (id NUMBER(18) PRIMARY KEY, a FLOAT NOT NULL, b BINARY_FLOAT);

CREATE TABLE sales_data ( product_id NUMBER(6), sale_time TIMESTAMP(6), sale_price NUMBER(10,2) ) PARTITION BY RANGE (sale_time) ( PARTITION q1_2023 VALUES LESS THAN (TO_DATE('2023/04/01', 'yyyy/mm/dd')), PARTITION q2_2023 VALUES LESS THAN (TO_DATE('2023/07/01', 'yyyy/mm/dd')), PARTITION q3_2023 VALUES LESS THAN (TO_DATE('2023/10/01', 'yyyy/mm/dd')), PARTITION q4_2023 VALUES LESS THAN (TO_DATE('2024/01/01', 'yyyy/mm/dd')) );

ALTER TABLE t ADD e NUMBER(4);

ALTER TABLE t ADD CONSTRAINT t_pk PRIMARY KEY (id);

ALTER TABLE t DROP CONSTRAINT t_pk;

ALTER TABLE sales_data TRUNCATE PARTITION q4_2023;

ALTER TABLE sales_data ADD PARTITION q1_2024 VALUES LESS THAN (TO_DATE('2024/04/01', 'yyyy/mm/dd'));

ALTER TABLE sales_data MERGE PARTITIONS q4_2023, q1_2024 INTO PARTITION q1_2024;

ALTER TABLE sales_data COALESCE PARTITION;

ALTER TABLE sales_data DROP PARTITION q1_2024;

ALTER TABLE t MODIFY a NUMBER(18,0);

ALTER TABLE t RENAME TO new_t;

DROP INDEX a_ix;

INSERT INTO t PARTITION (p1) (col,col2,col3) VALUES (1,2,3);

merge into t using (select '7' as id from dual) s on (id = s.id) when matched then update set name = 'xx' when not matched then insert (id, name) values ('7', 'xx');

UPDATE t SET col1 = 1, col2 = 2 WHERE id=100;

UPDATE t1 PARTITION (p1) SET col1 = 1 WHERE id = 1;

DELETE FROM t PARTITION (p1);

不支持;

delete from a where exists ( selexcel结果如下:

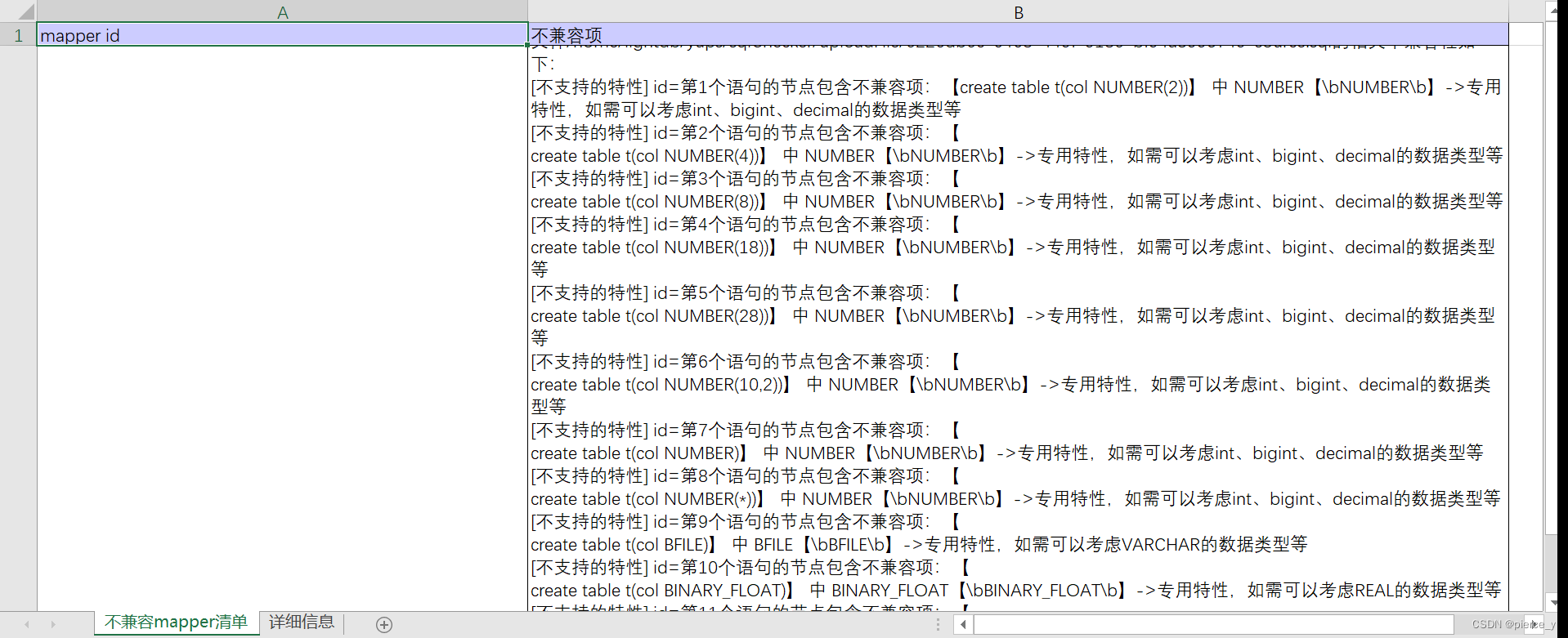

第一个sheet可以看出具体不兼容的SQL

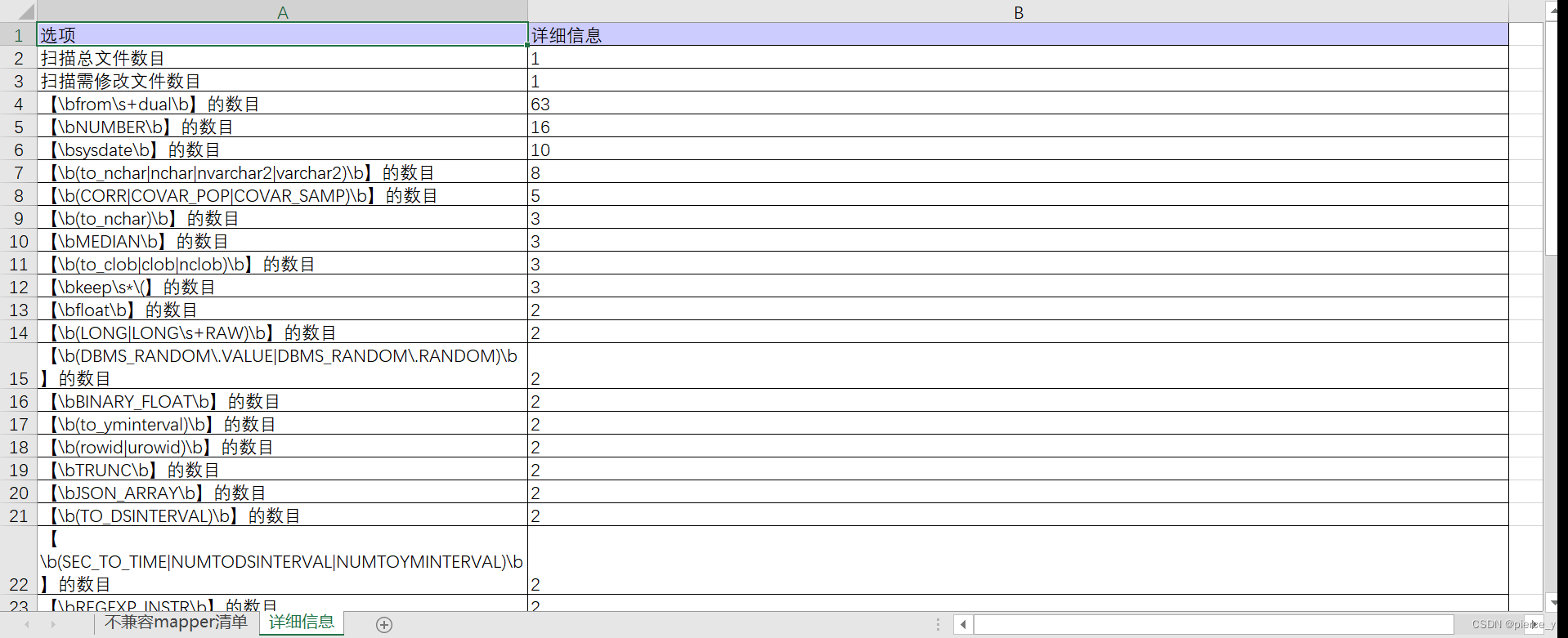

第二Sheet页可以看见,哪些不兼容的正则类型和数量

2650

2650

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?