这边使用虚拟机下载依赖配置环境以及模拟服务器各个节点,使用两个虚拟机模拟离线不联网环境的服务器,使用一个虚拟机联网下载依赖包,然后传入两个不能联网的虚拟机安装所有环境(我这边偷懒就用两个虚拟机中的一个联网下载安装包。再安装到两个虚拟机内了)。

文档参照:离线安装 (kubesphere.io)

一、虚拟机安装

VMware下载地址:vm17pro 下载

也可以根据你的情况来换不同版本,但是下载需要注册,后续可以去网上找免费版本或破解方法。

下载后点击安装可能提示重启,按照要求重启即可。

这一步更改安装位置。

其他配置可以全部默认,一直点击下一步直到安装成功。

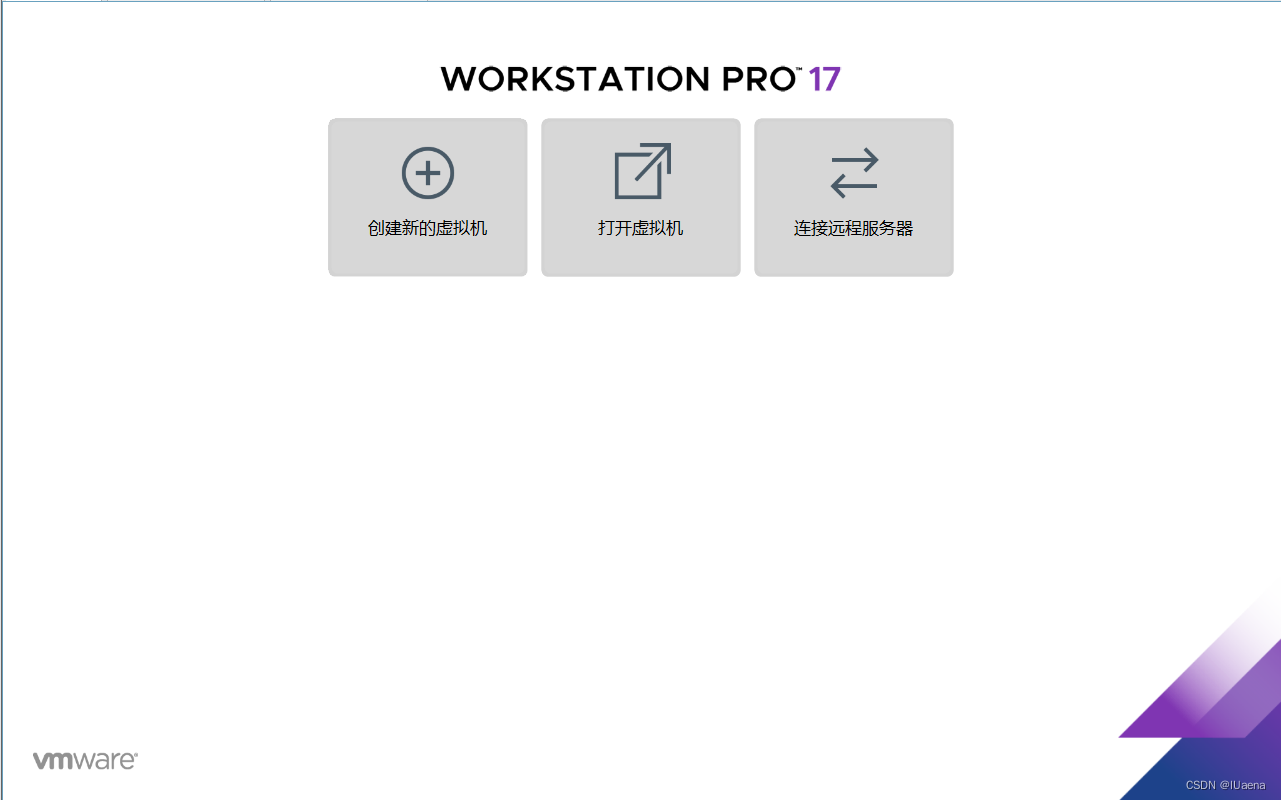

打开之后这个样子就是安装完成了,许可可以搜到然后输入

二、镜像下载安装

1、镜像下载

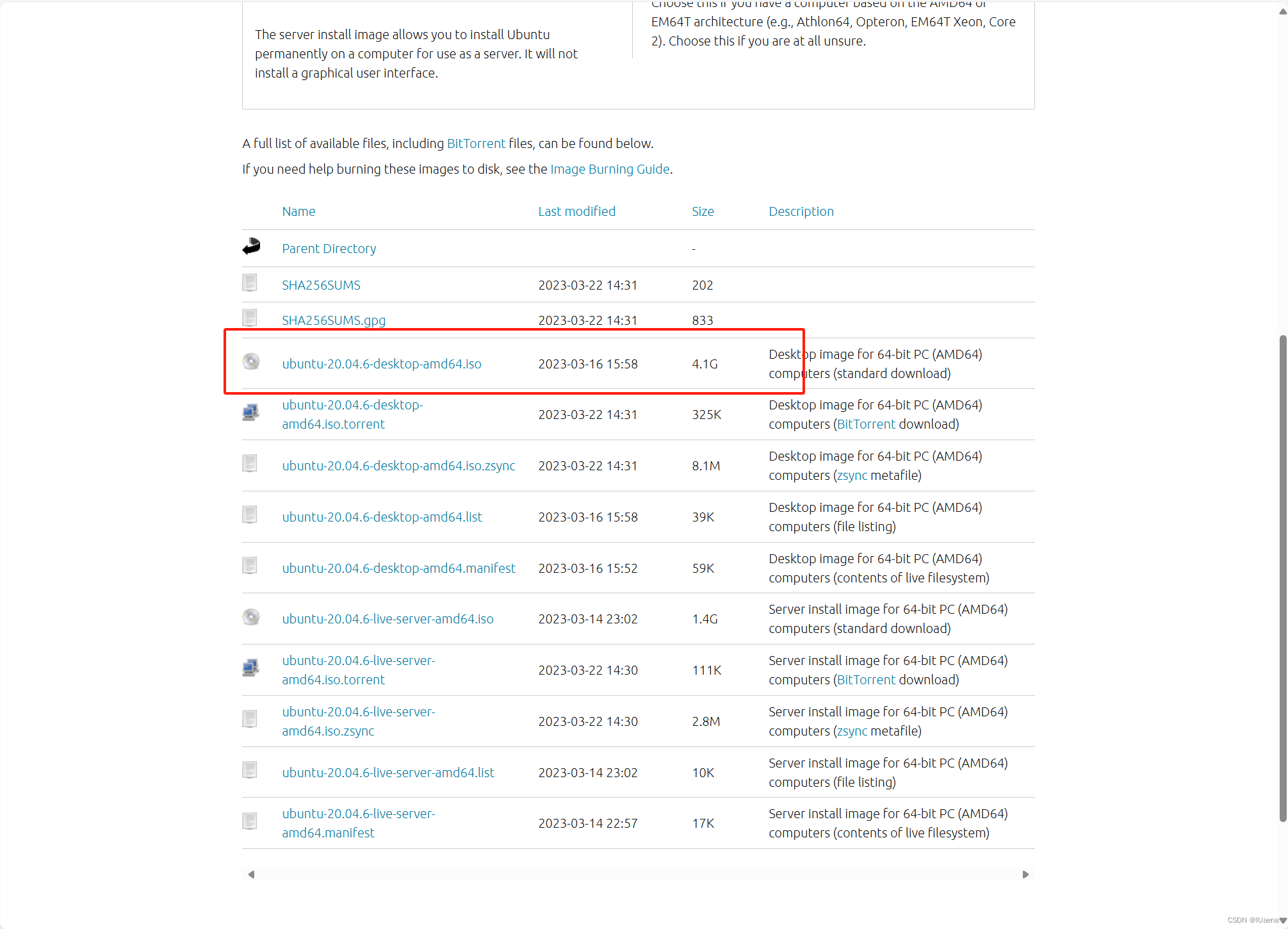

这边使用ubuntu2020.04并安装图形化界面来进行配置

大约4.1G,下载地址:

2、虚拟机安装

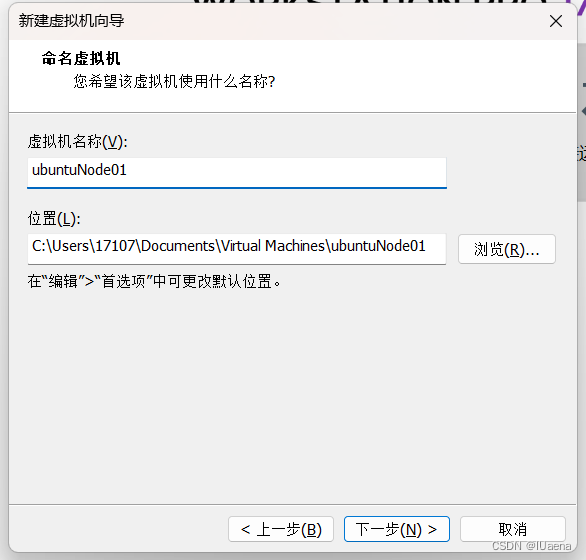

这边分别安装名为ubuntuNode01和ubuntuNode02的两个虚拟机模拟服务器两个节点来安装k8s集群和kubesphere服务。

点击创建新的虚拟机

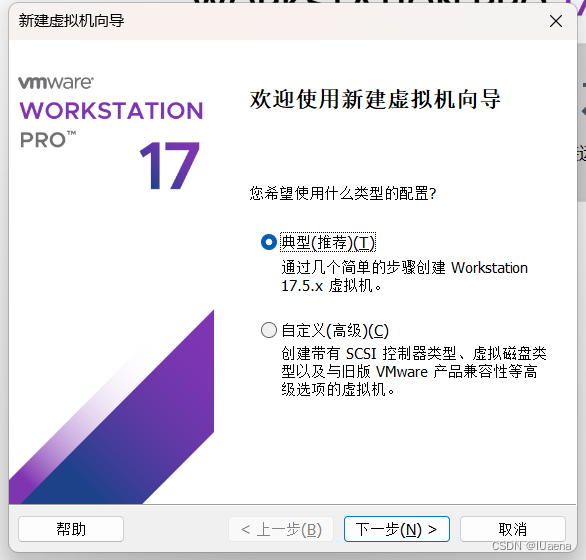

选择经典,点击下一步

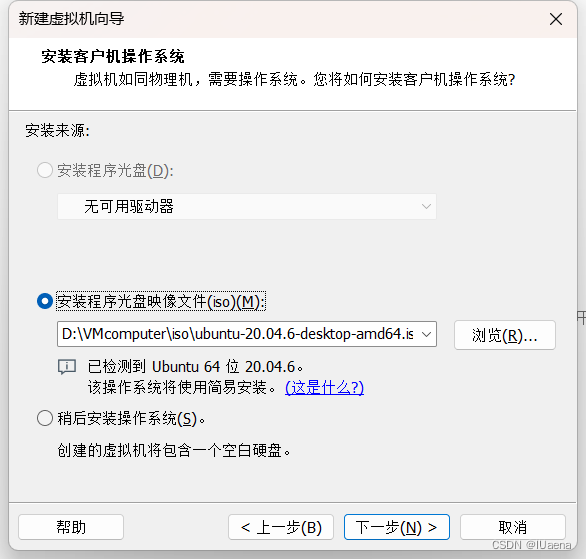

iso选择刚才下载的镜像并点击下一步

输入全名和用户名,密码可设置12345678,点击下一步

输入虚拟机名称并选择安装目录,点击下一步

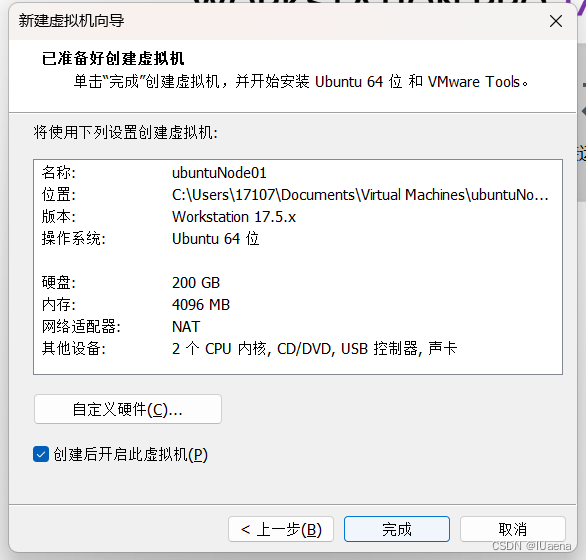

磁盘设置大小50G就可以了,但我虚拟机还有别的用处就设置了200G(磁盘选单个文件或者多个文件都可以,而且它也解释清楚了区别),点击下一步

点击自定义硬件

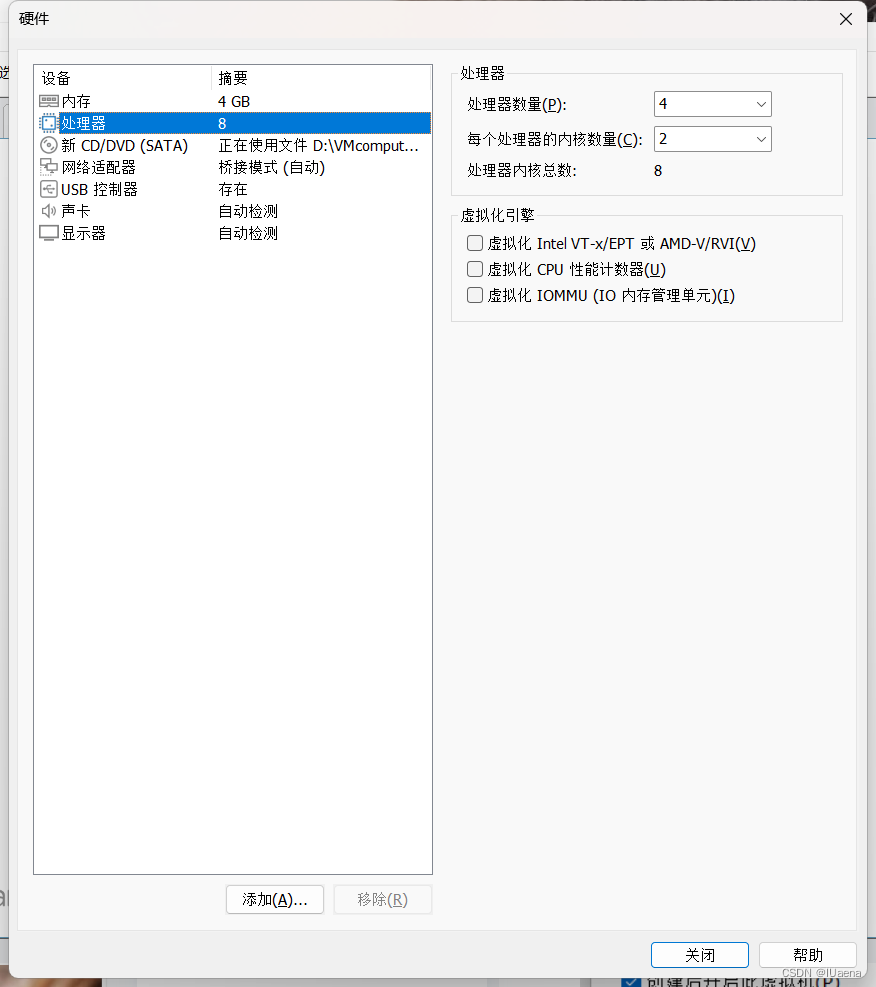

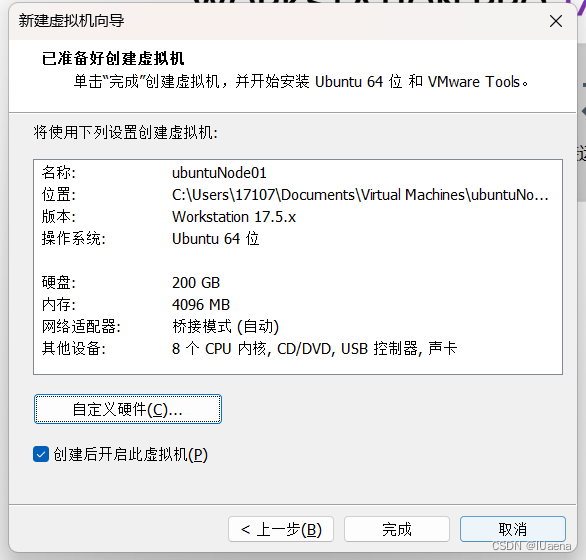

内存可以根据主机实际情况设置4-16G,网络选桥接,处理器选4*2就是八个,如下

点击关闭后再点完成即可等待创建虚拟机

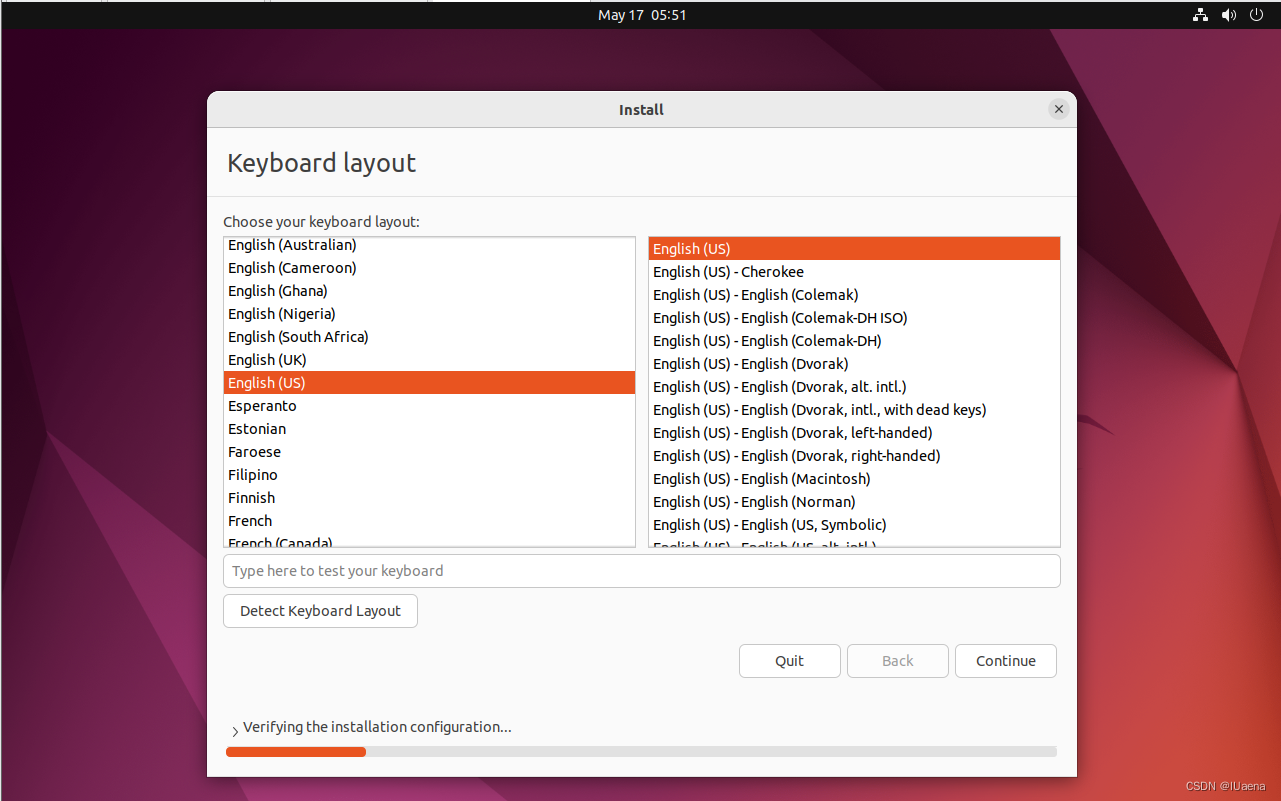

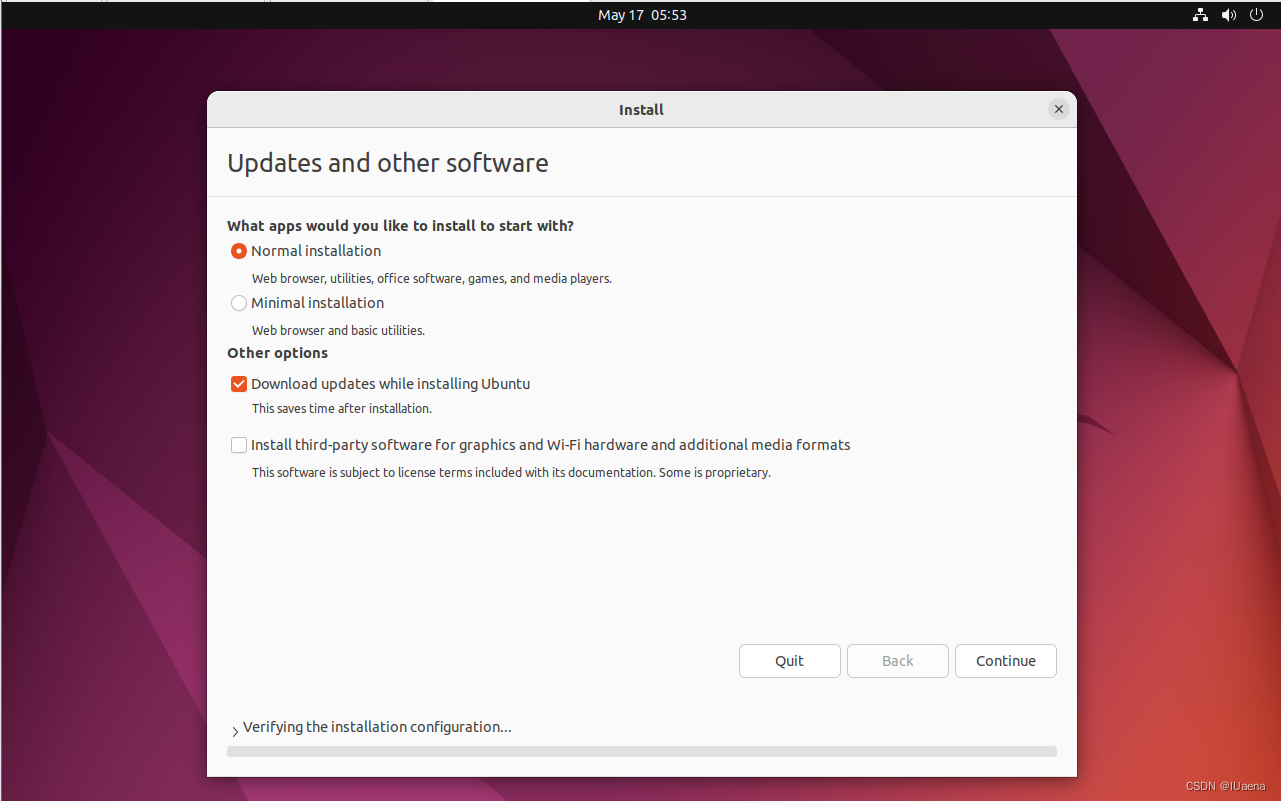

大约等一会就会出现(如果虚拟机平台报错可能是电脑不支持虚拟化或者未开启,若是虚拟化未开启不同的主板可能开启方式不同,可以搜一搜自己的主板如何开启),点击Continue

再次点击Continue

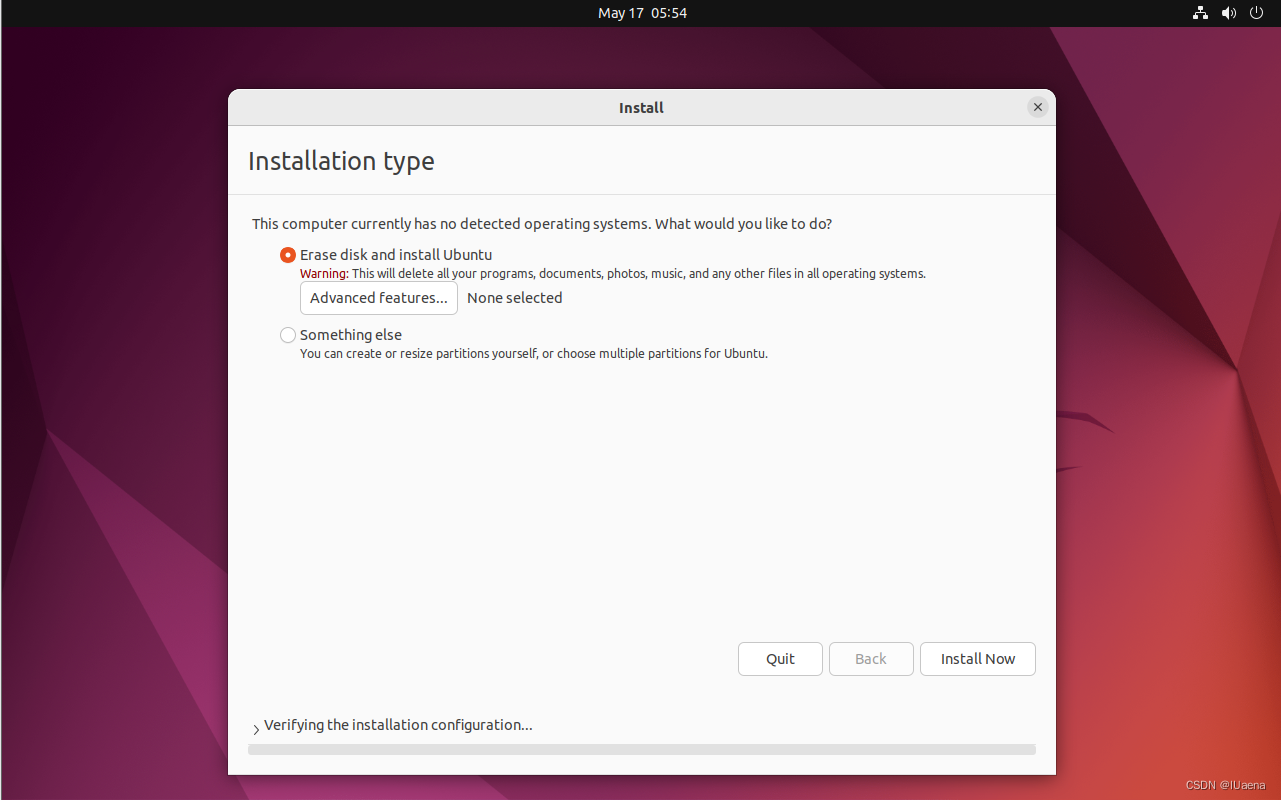

点击Install Now

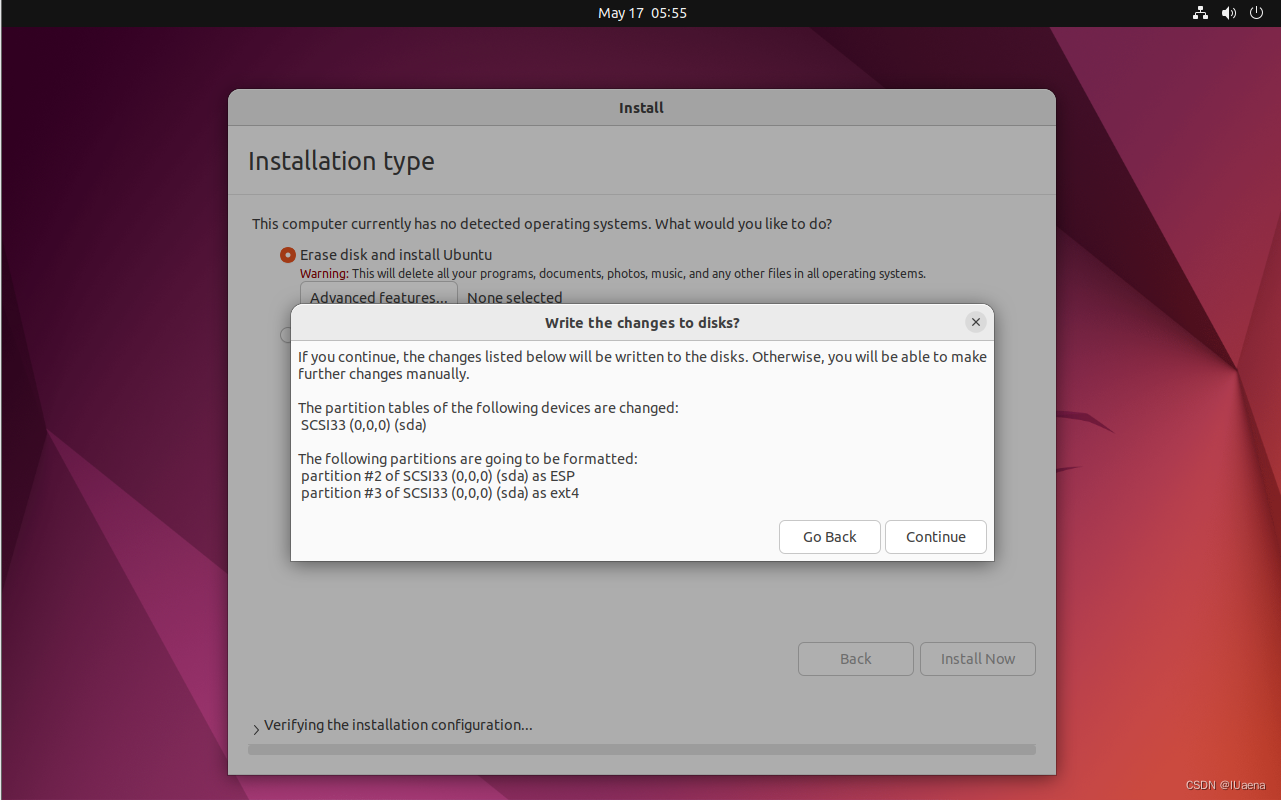

弹出窗口后点击Continue

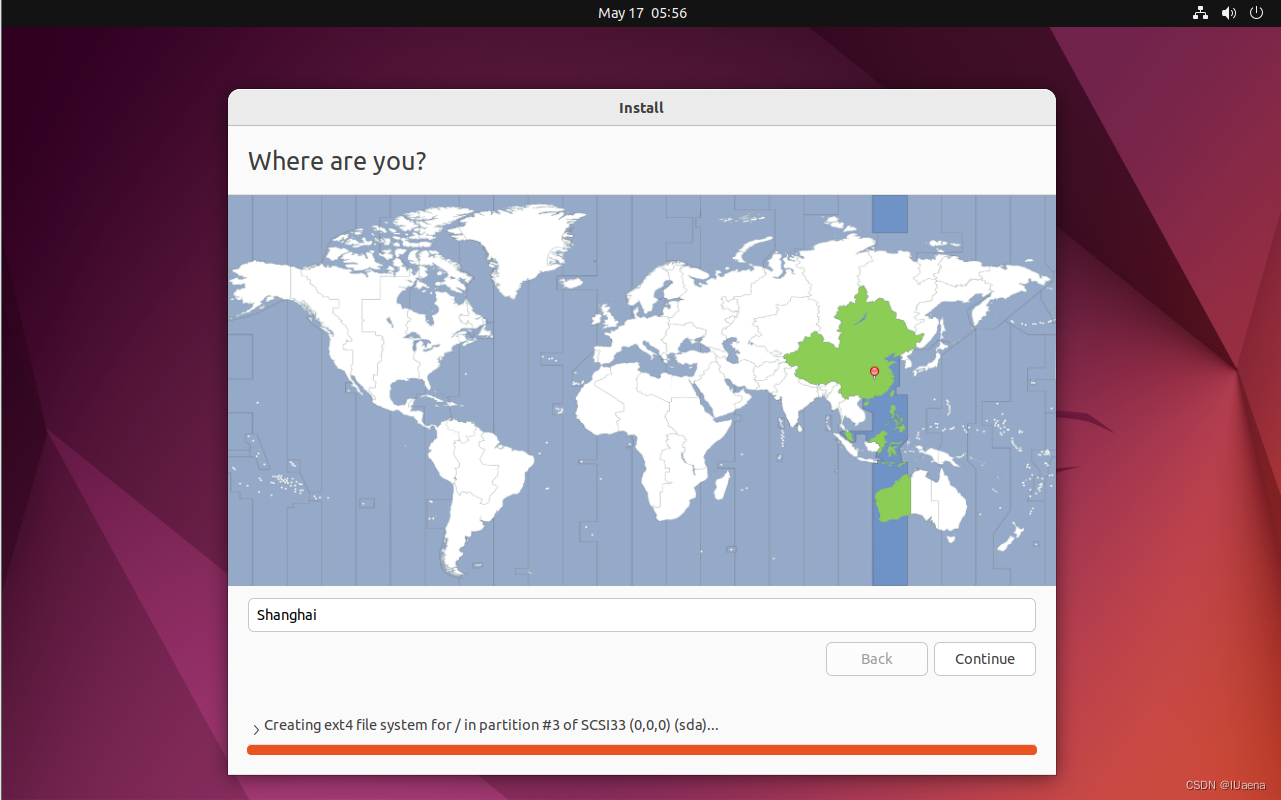

等待一会出现地图,点一下自己的大体位置,然后点Continue

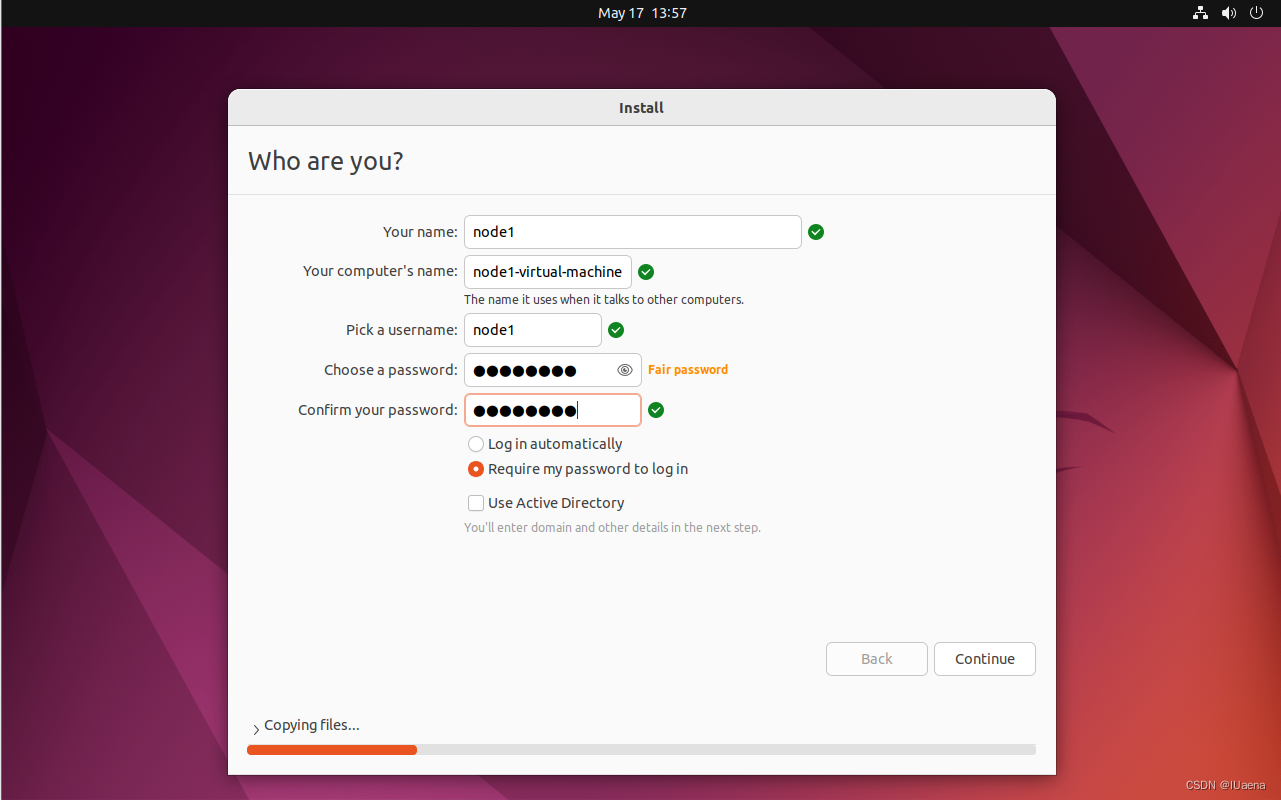

输入信息,密码依旧是12345678,然后点击Continue

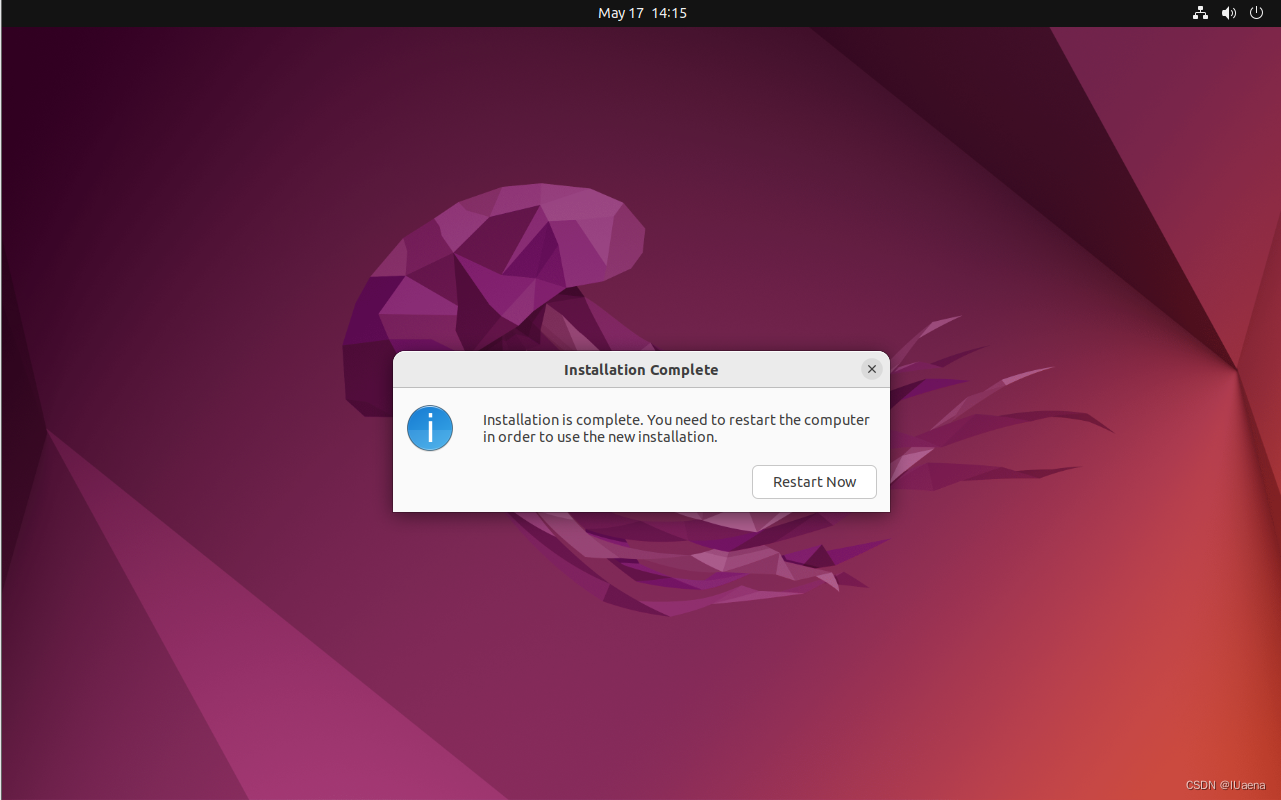

等待一段(很长)时间出现弹窗,点击Restart Now

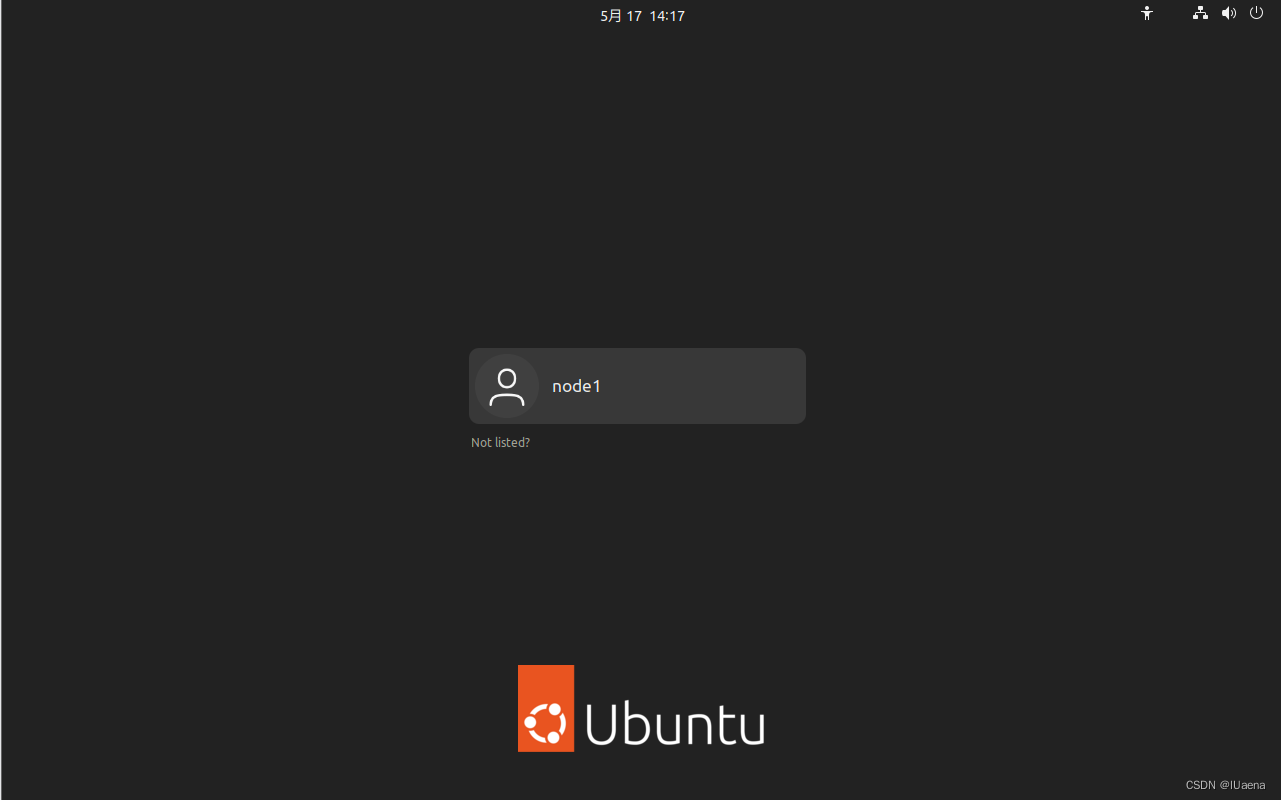

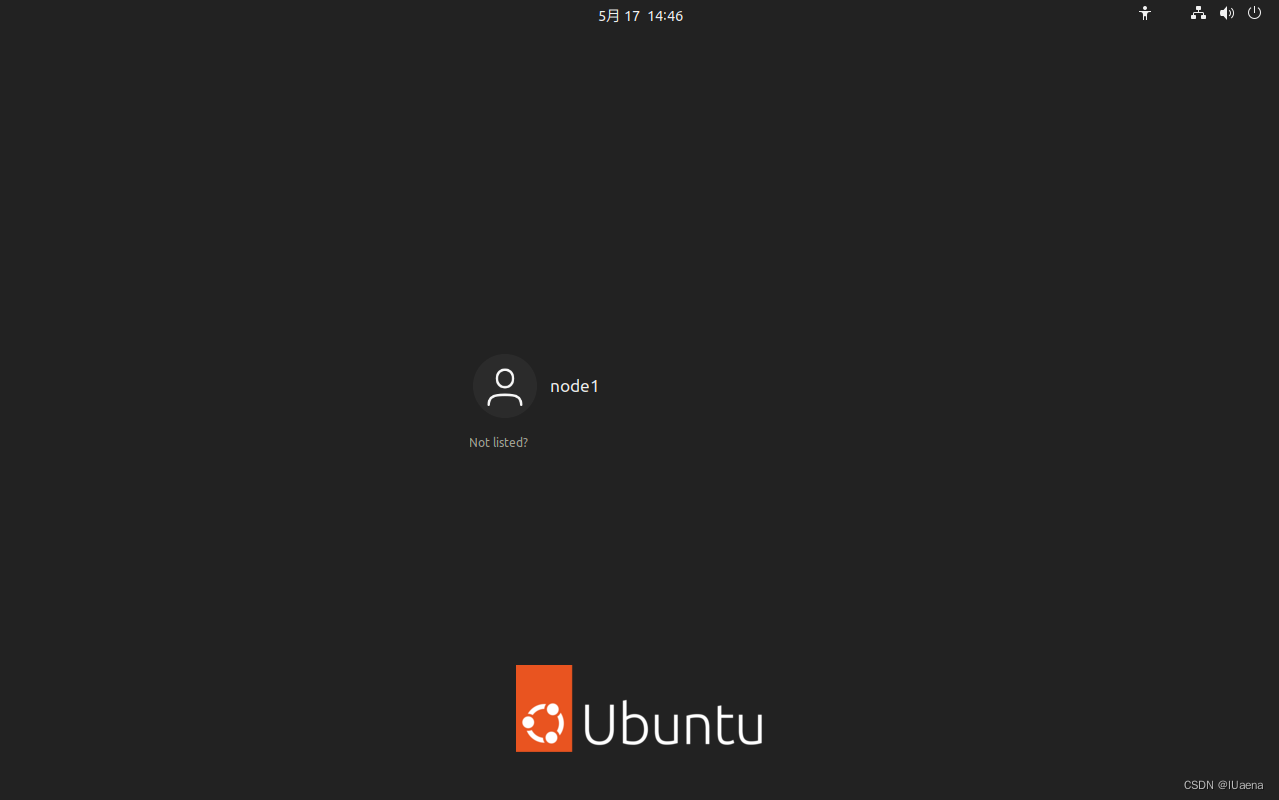

等待重启成功,点击用户输入12345678密码

安装成功,按照此步骤安装另一个虚拟机ubuntuNode2,名称是node1的地方也换成node2(方便区分)。

三、配置虚拟机

分为root用户开放登录、ssh配置、ip固定【记得两台虚拟机都要配置】

1、root用户登录

(1)重新设置密码,终端输入以下命令

sudo passwd root

然后输入12345678按回车,大约要重复三次

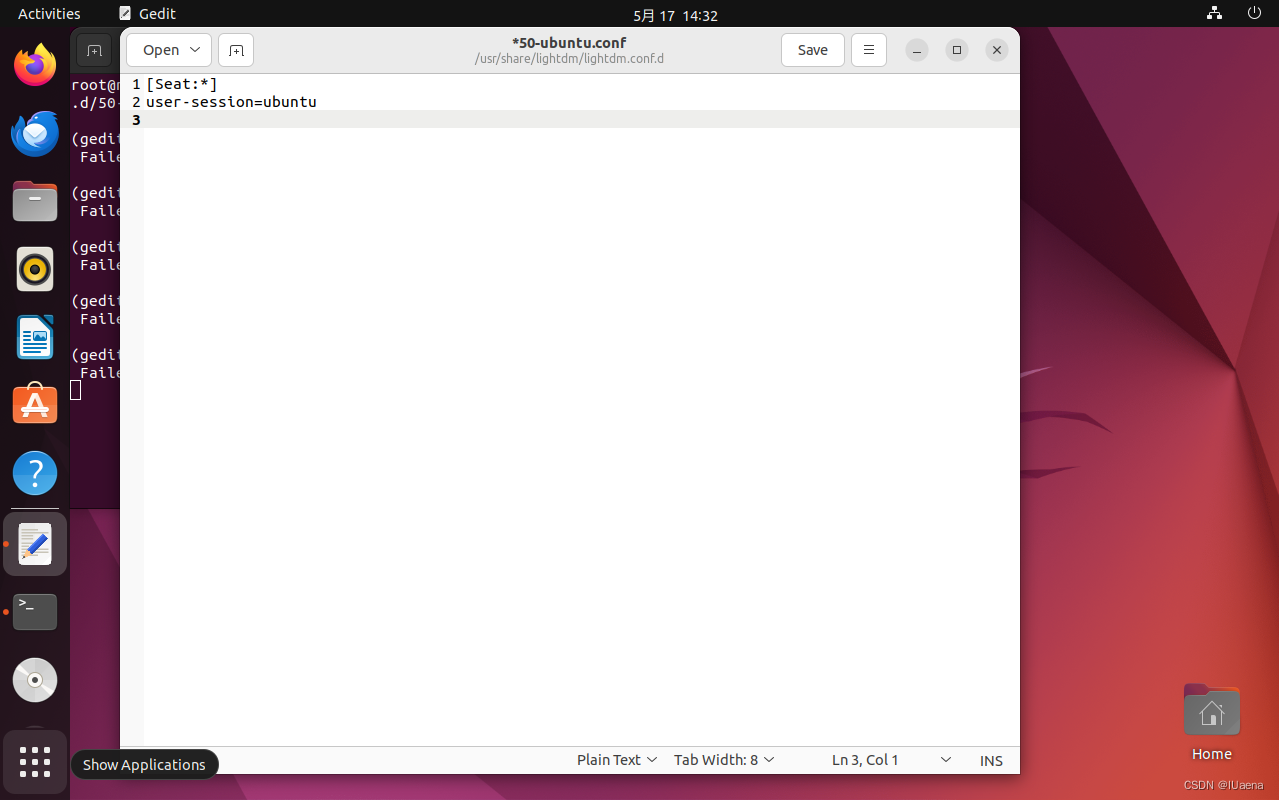

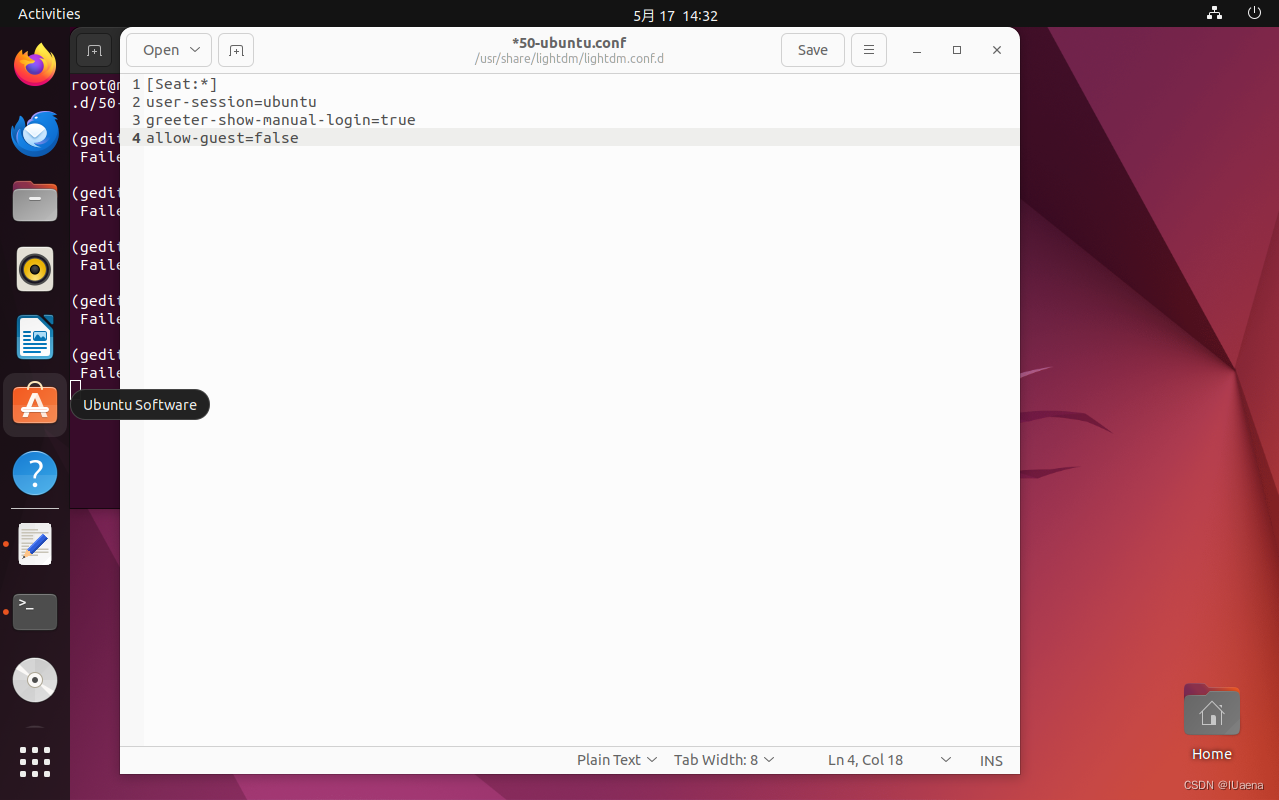

(2)修改50-ubuntu.conf配置文件,终端输入以下命令

sudo gedit /usr/share/lightdm/lightdm.conf.d/50-ubuntu.conf

在原有文件内容的基础上添加以下内容并保存

greeter-show-manual-login=true

allow-guest=false

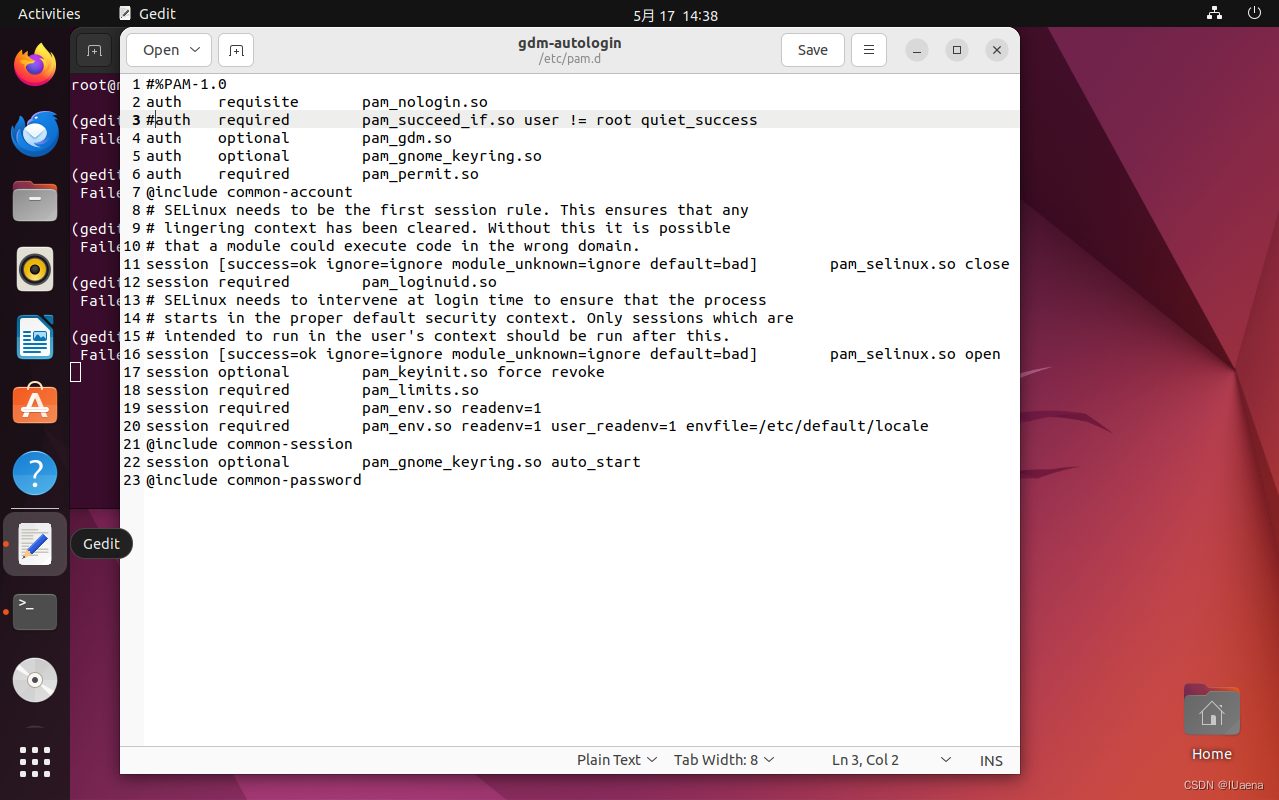

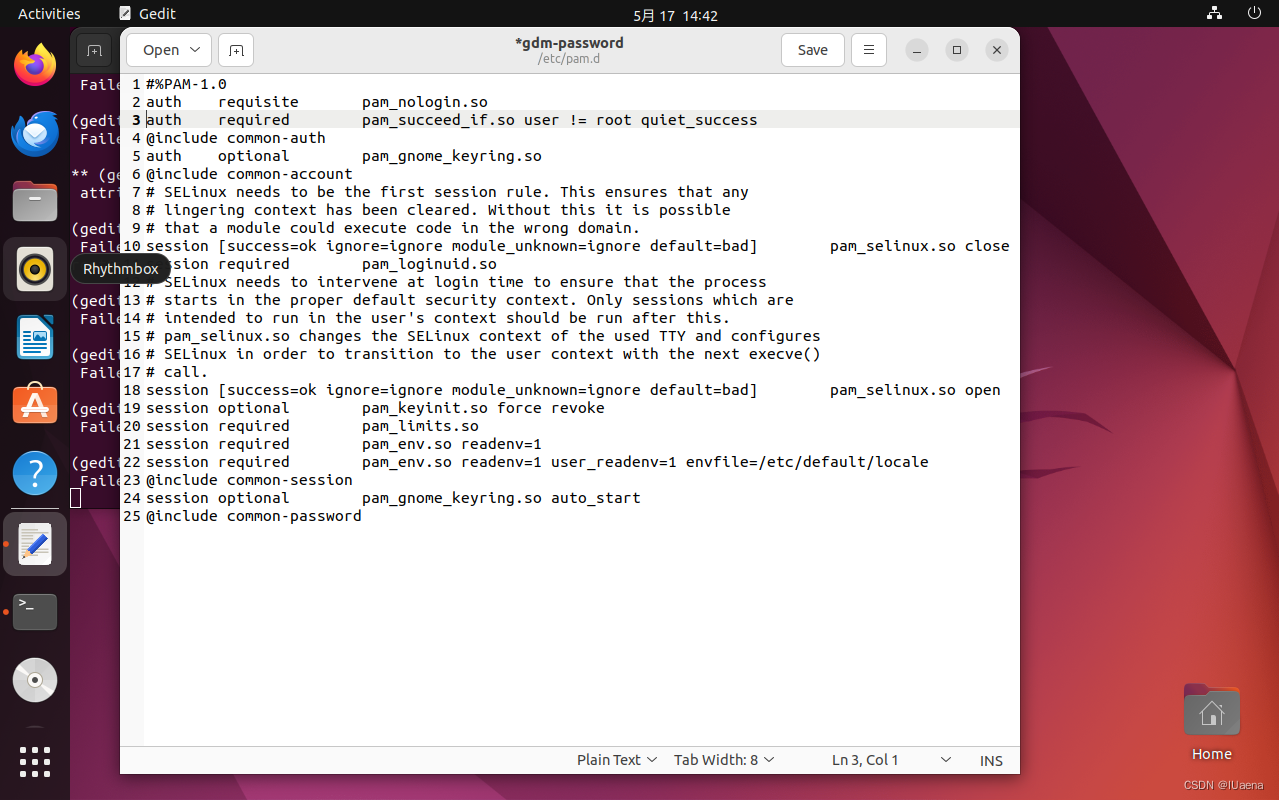

(3)修改gdm-autologin配置文件,终端输入以下命令

sudo gedit /etc/pam.d/gdm-autologin

注释掉第三行的内容并保存,也就是auth required pam_succeed_if.so user != root quiet_success

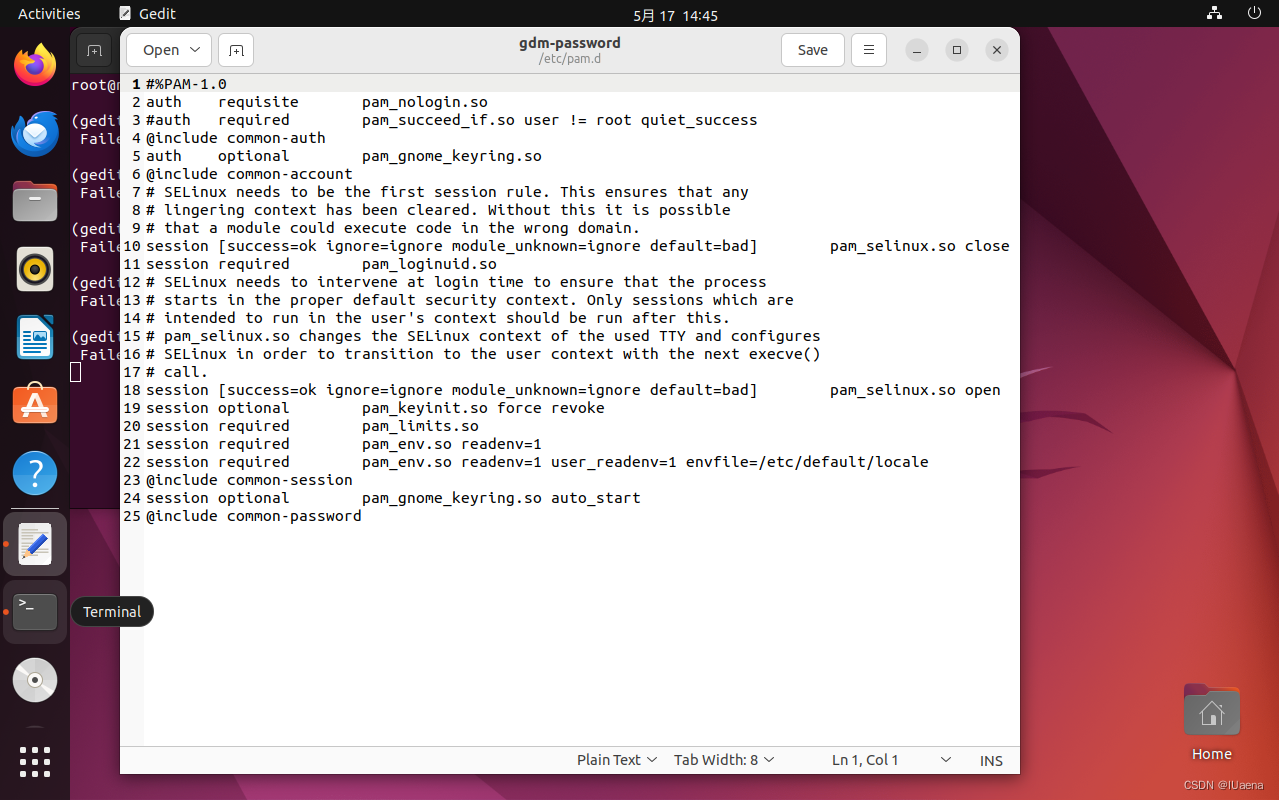

(3)修改gdm-password配置文件,终端输入以下命令

sudo gedit /etc/pam.d/gdm-password

注释掉第三行内容并保存,也就是auth required pam_succeed_if.so user != root quiet_success

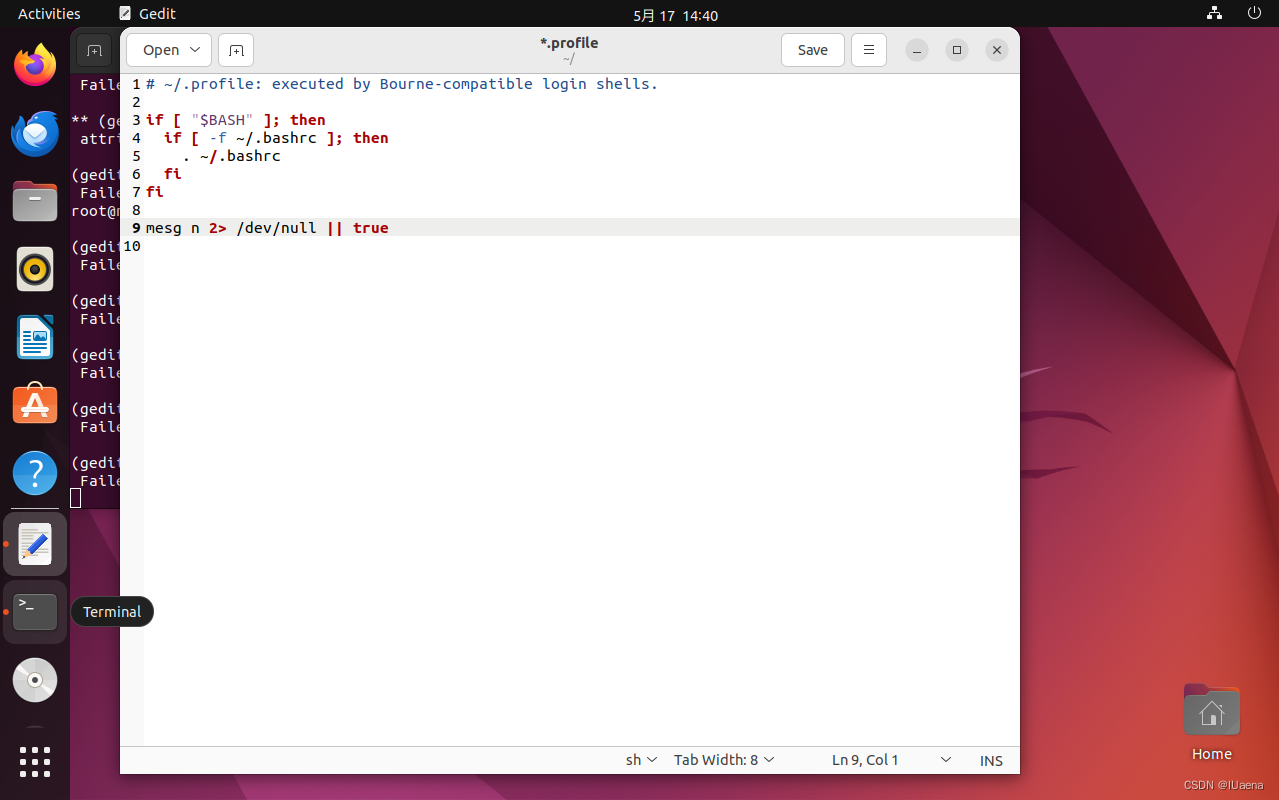

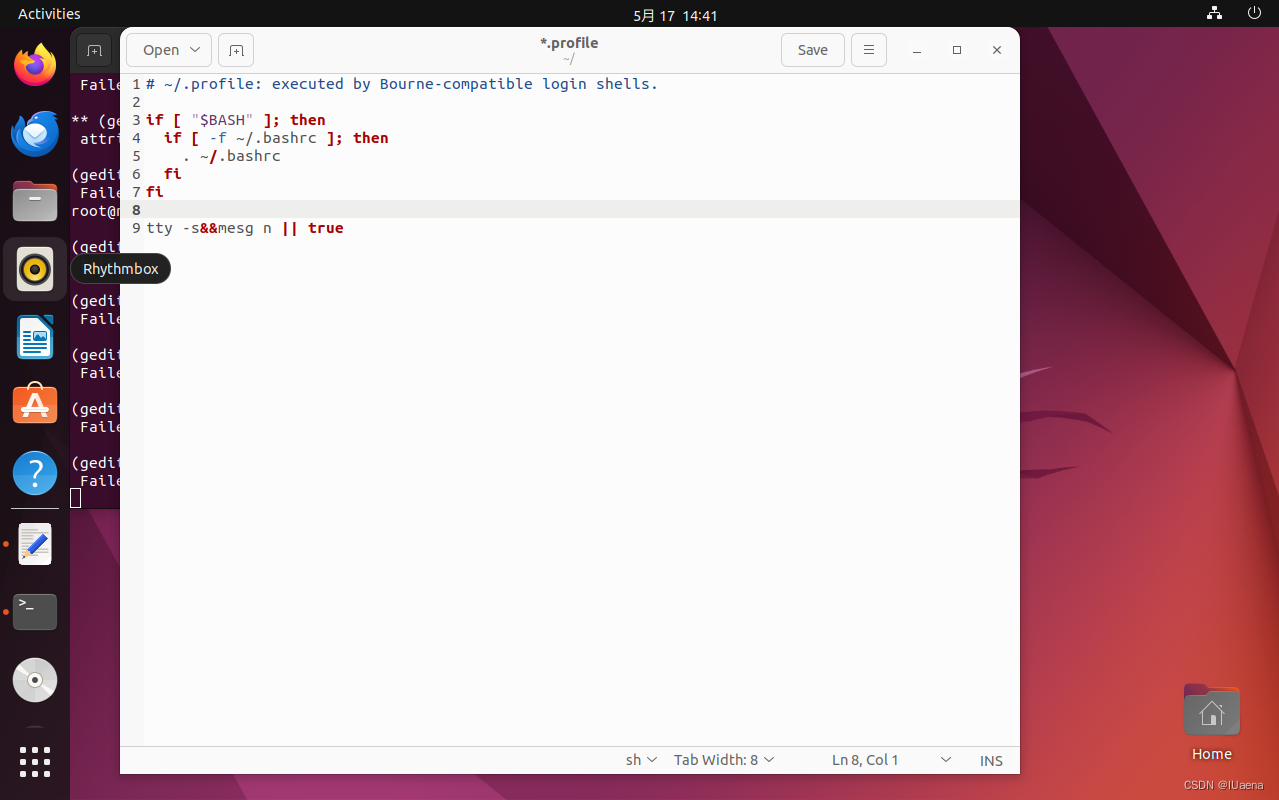

(4) 修改.profile配置文件,终端输入以下命令

sudo gedit /root/.profile

将最后一行替换为以下内容并保存

tty -s&&mesg n || true

然后终端输入reboot重启虚拟机

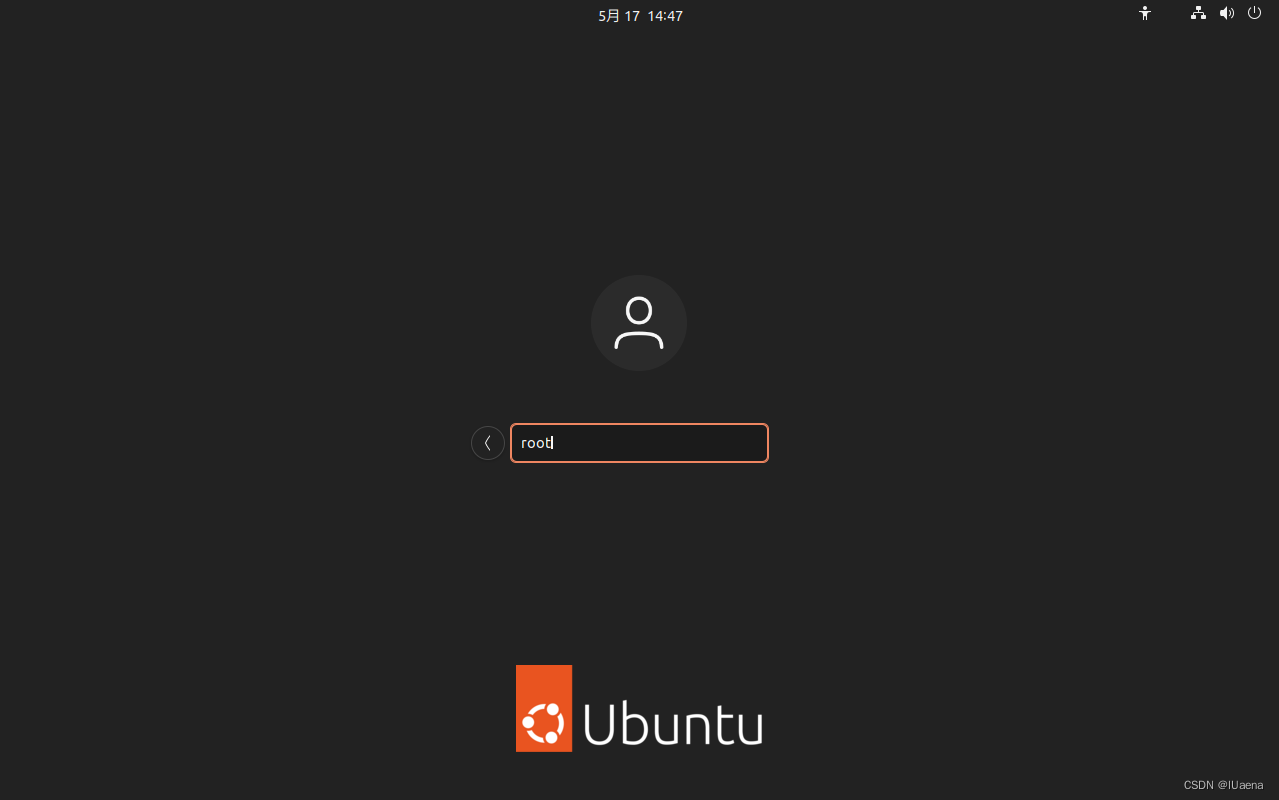

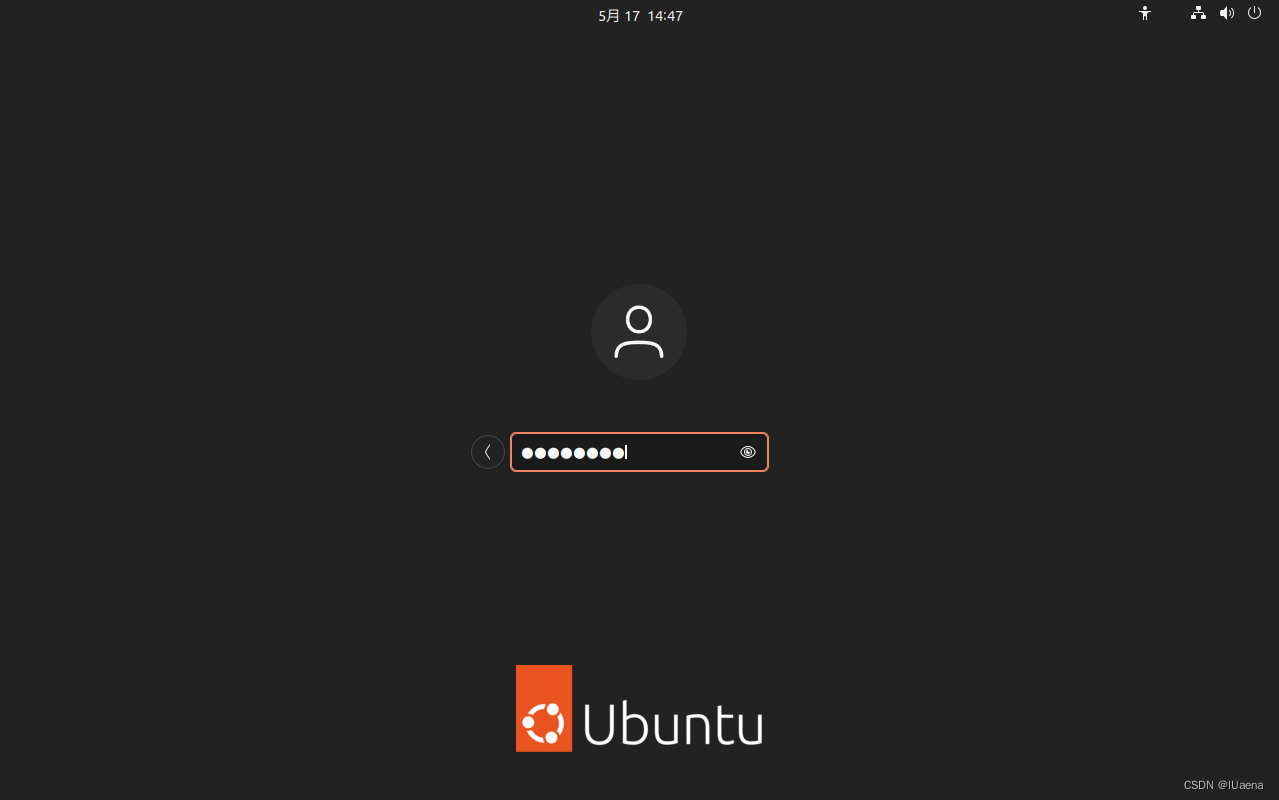

点击Not listed,然后输入用户名root,再输入密码12345678,再按回车

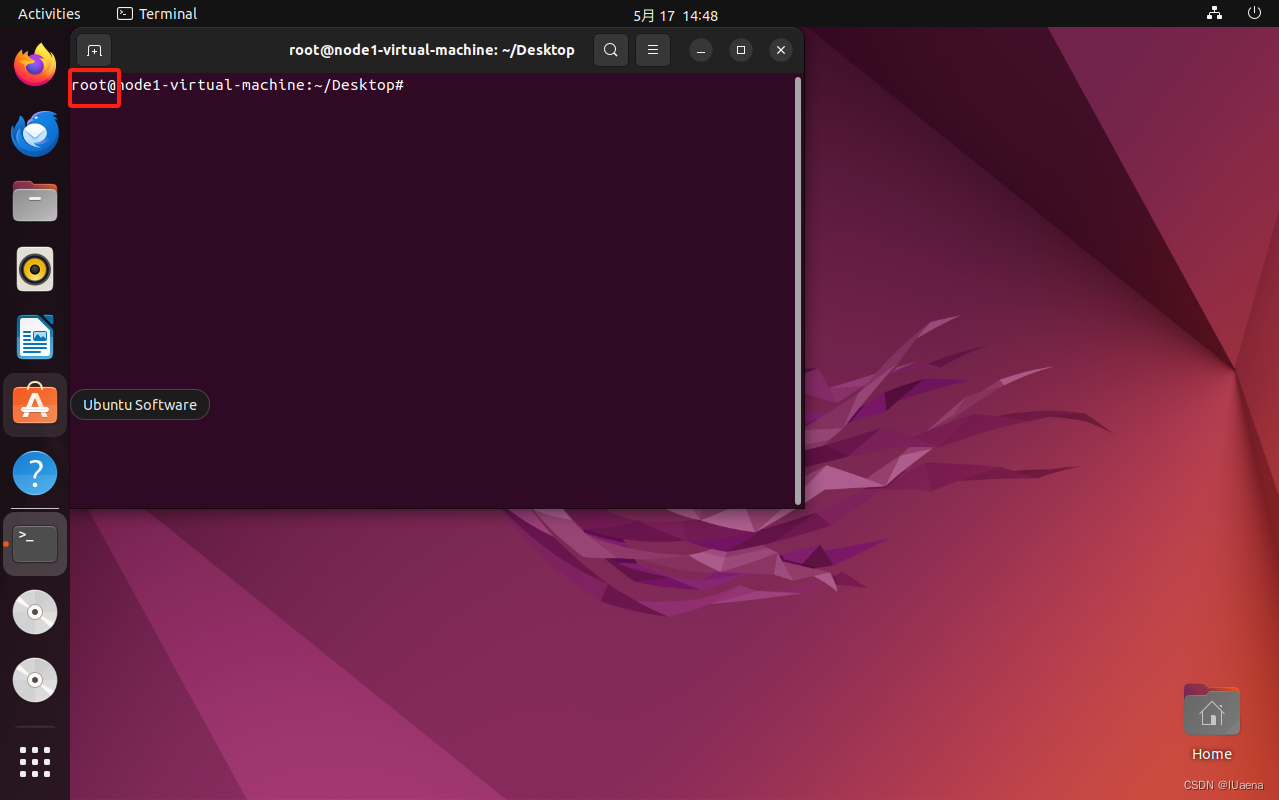

打开终端,发现左侧用户为root,即为配置成功

注意两台虚拟机都要配置

2、ssh配置

这里需要apt下载ssh服务,apt源感觉ubuntu的就很快,所以这边就没换

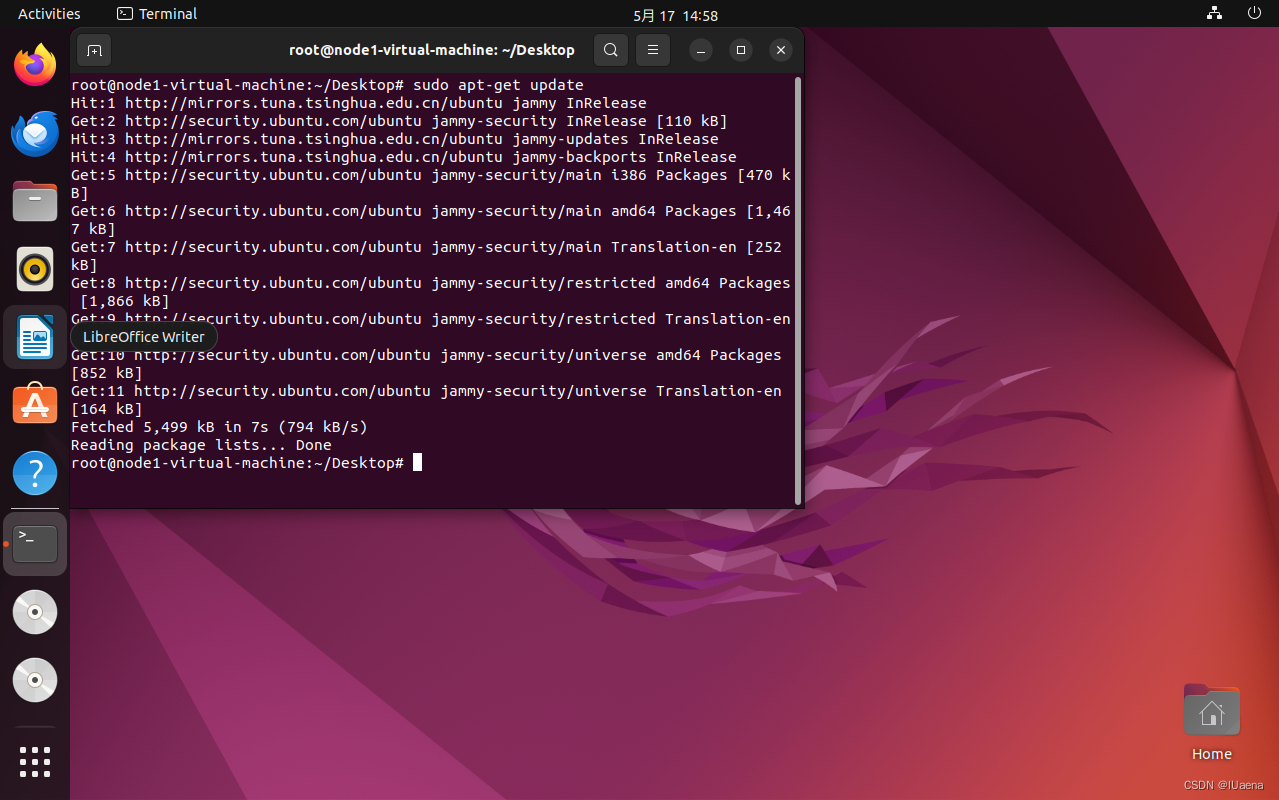

(1)输入以下命令更新apt

sudo apt-get update

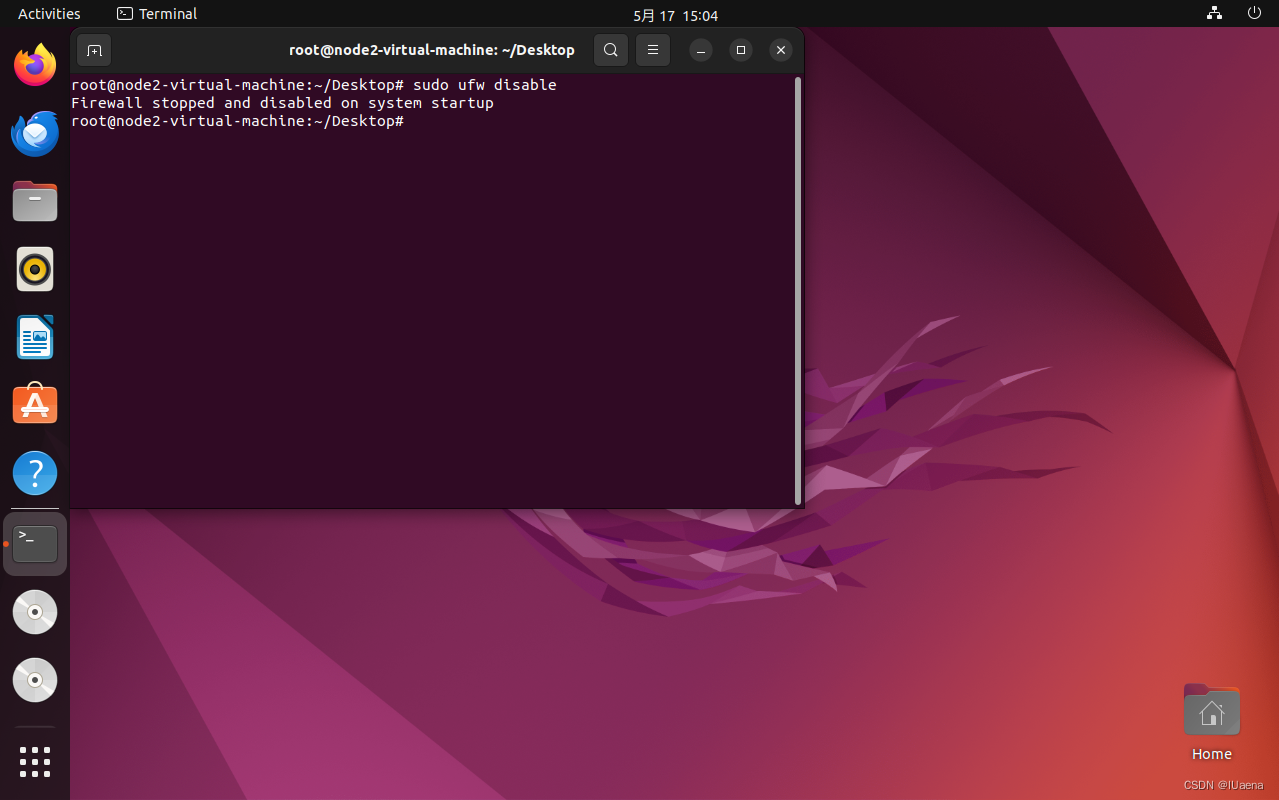

终端输入以下命令关闭防火墙

sudo ufw disable

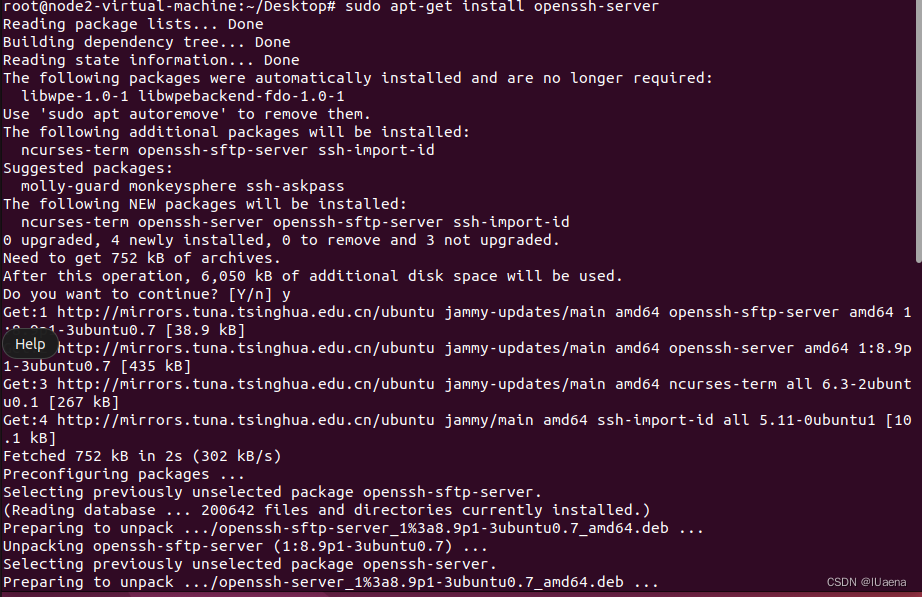

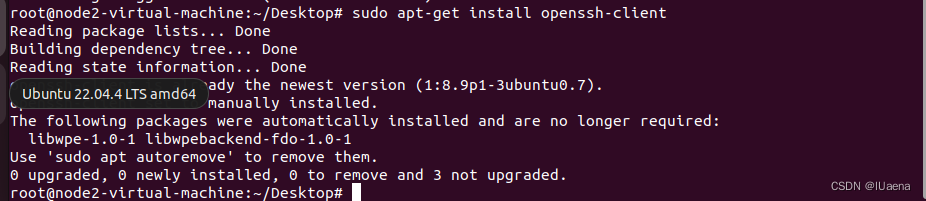

(2)终端分别输入以下两个命令下载ssh的客户端和服务端

sudo apt-get install openssh-server

sudo apt-get install openssh-client

(3)修改配置文件,终端输入以下命令

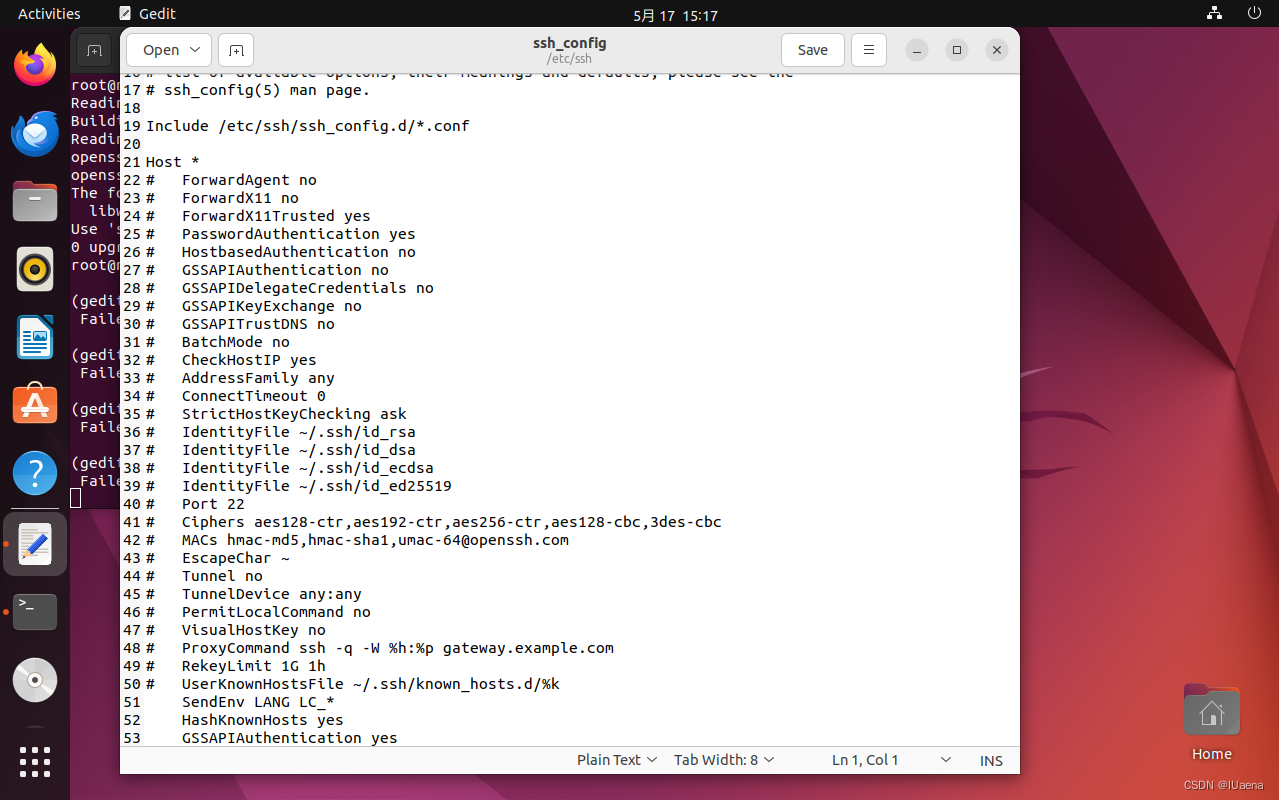

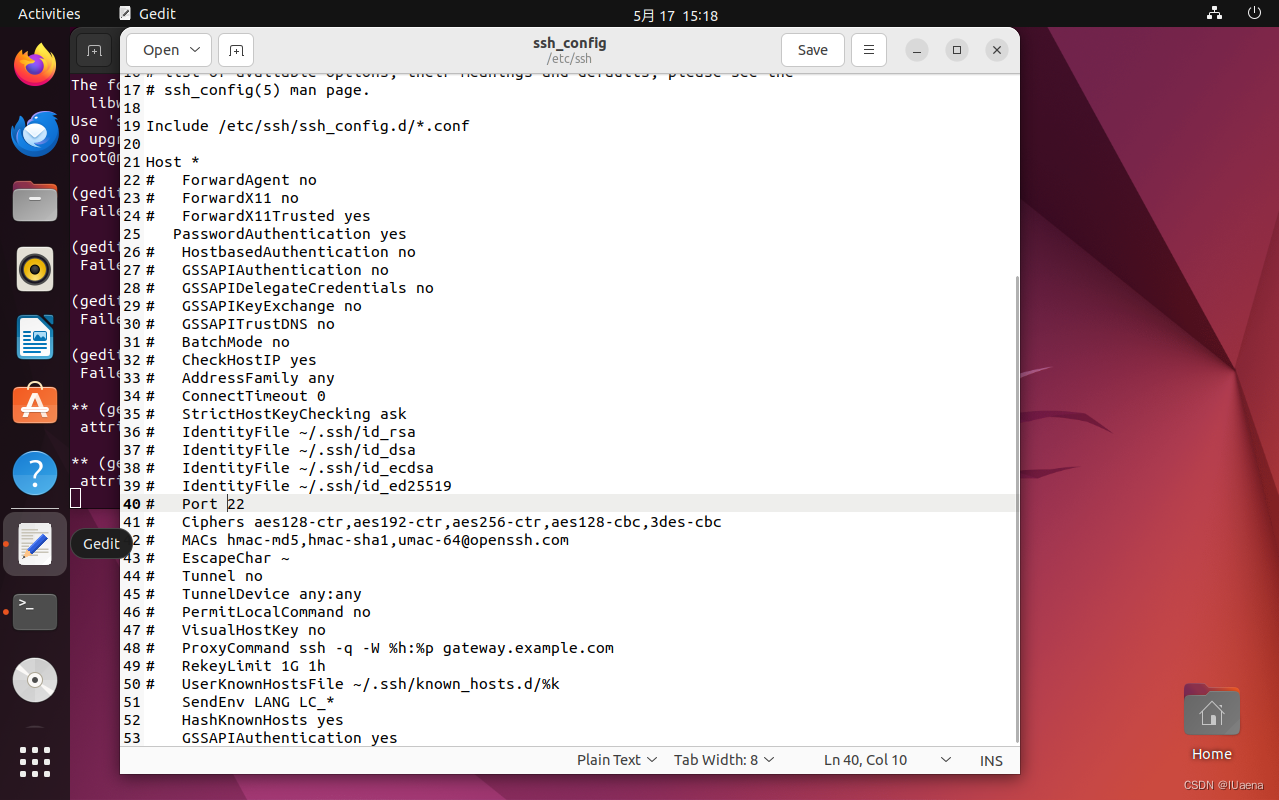

sudo gedit /etc/ssh/ssh_config

删除第25行的#号并保存,也就是PasswordAuthentication yes

(4)修改配置文件,终端输入以下命令

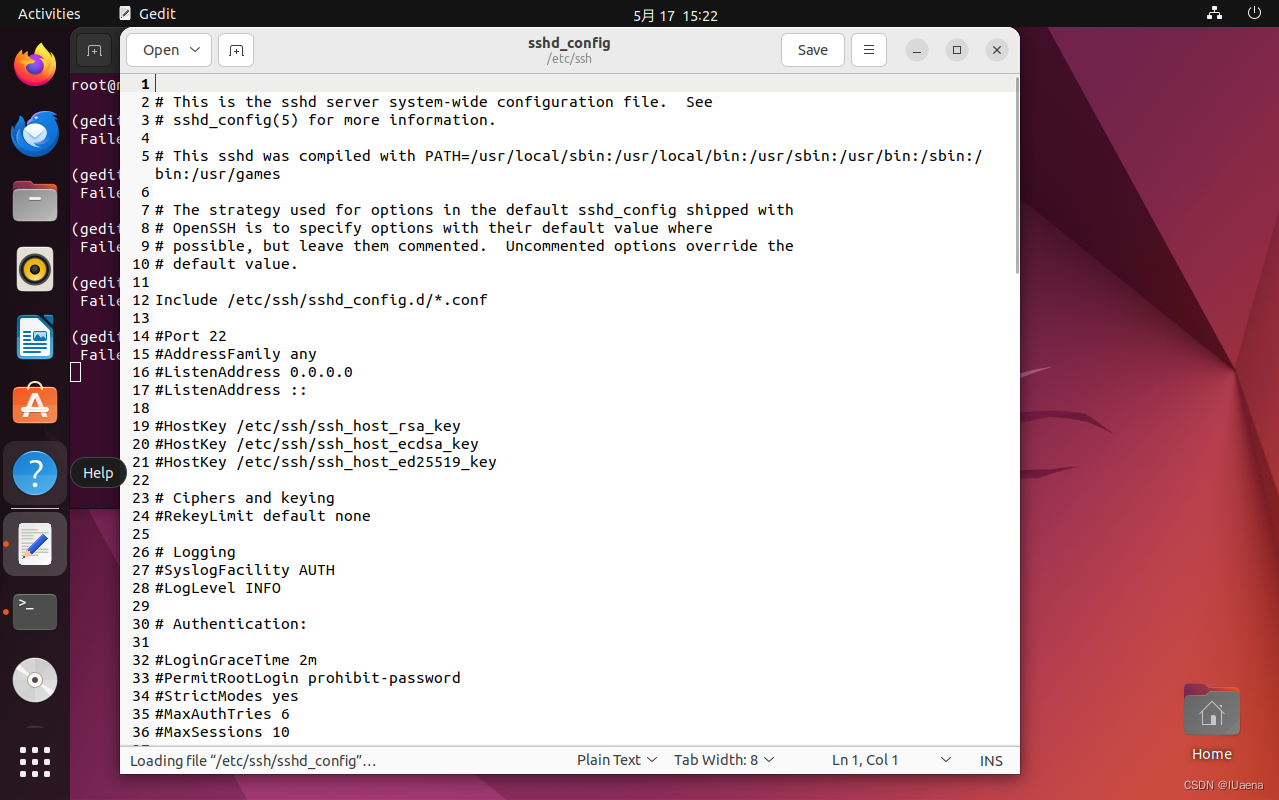

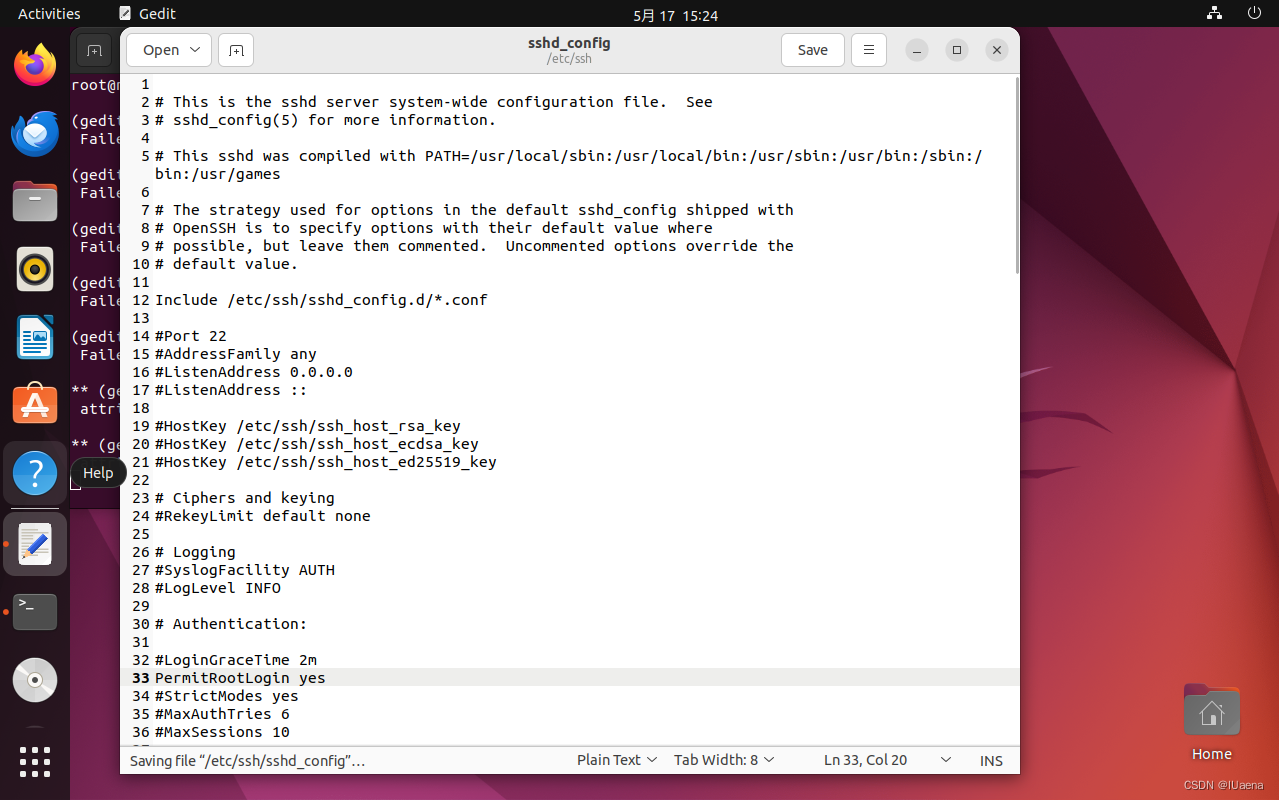

sudo gedit /etc/ssh/sshd_config

修改33行为以下内容,并保存

PermitRootLogin yes

(5)链接测试

首先终端输入reboot重启一下机器

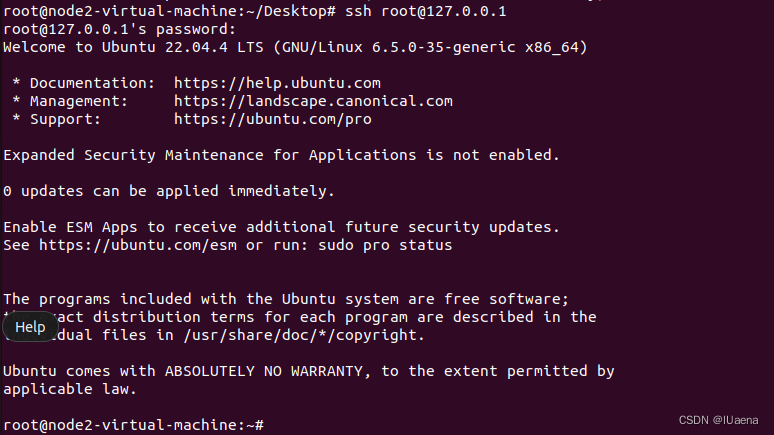

reboot机器重启成功后输入ssh root@127.0.0.1,连接本机测试。第一次链接可能要输入yes,输入yes回车后需要输入密码,密码就是机器密码12345678

ssh root@127.0.0.1出现以下信息即为链接成功

注意两台虚拟机都要配置

3、IP固定

这里要注意两台机器要固定不同的IP,可别一点不改的复制

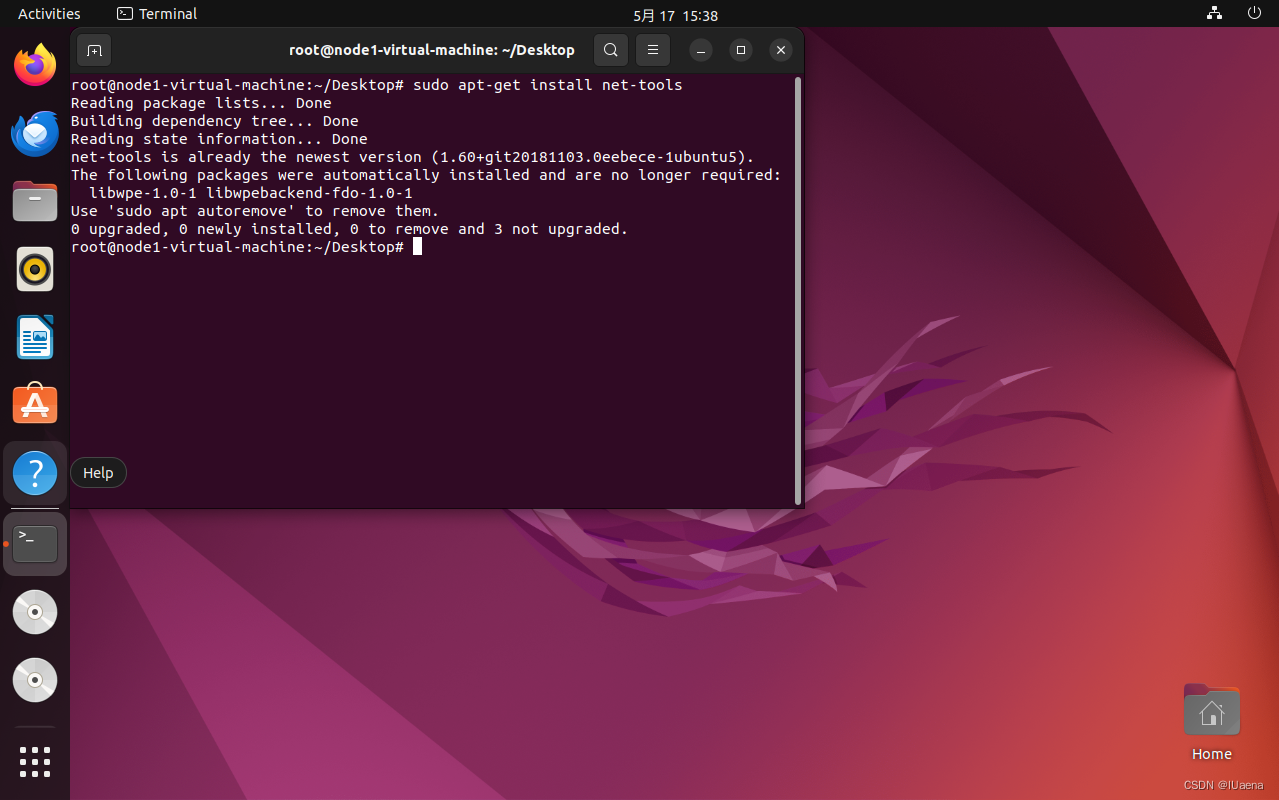

(1)使用ifconfig查看自动分配的ip,在终端中输入

sudo apt-get install net-tools

输入以下命令查看虚拟机IP,记住你的网段名称,我这里是ens33

ifconfig

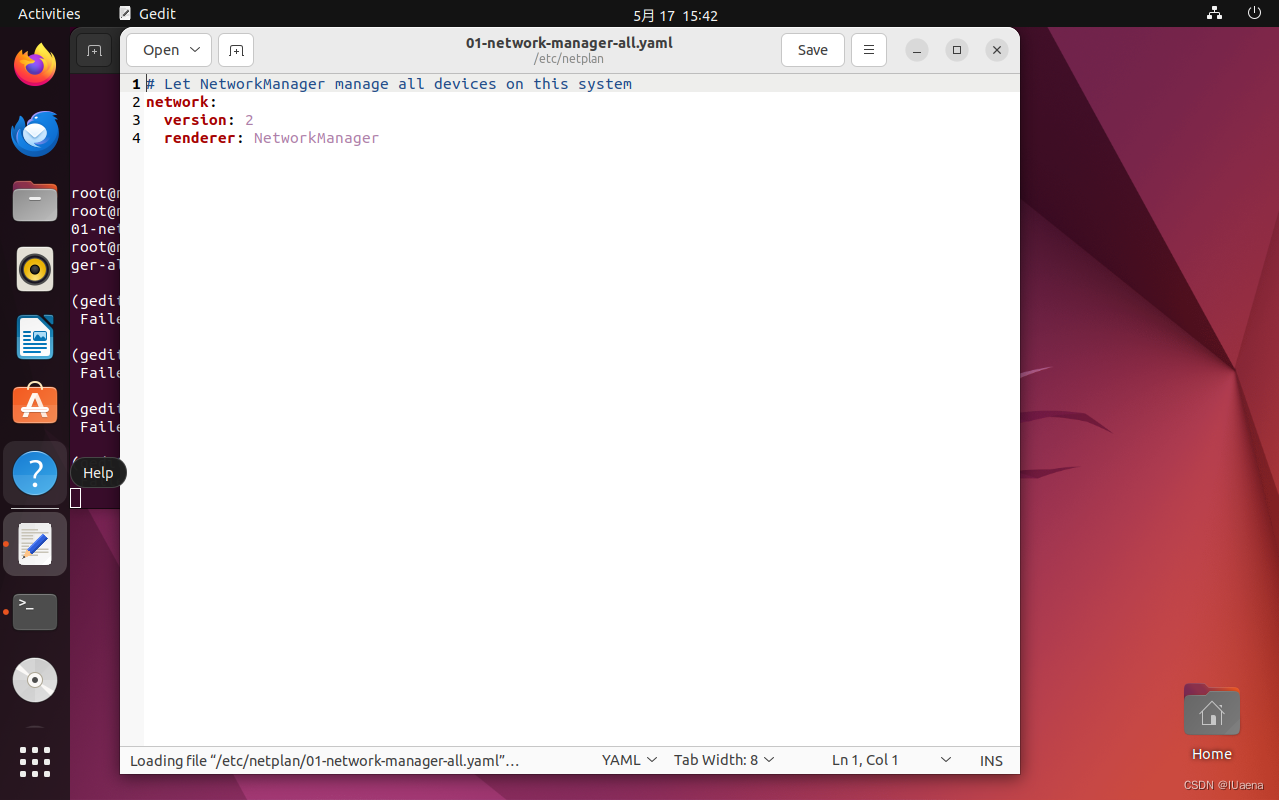

(2)修改配置文件,终端输入以下命令

sudo gedit /etc/netplan/01-network-manager-all.yaml

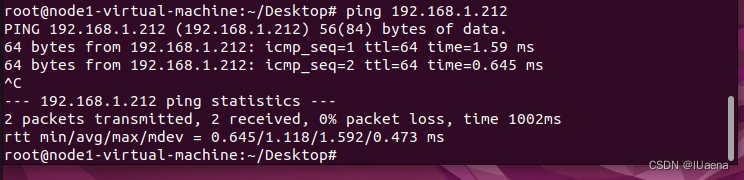

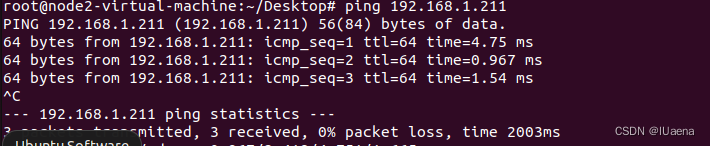

讲以下内容代替此文件内容,下面ens33换成自己ifconfig看到的网段名。ip选一个自己局域网内没被占用的就行。然后保存【两个虚拟机都要设置,我这边一个ip设置211,另一个设置212】

# This is the network config written by 'subiquity'

network:

ethernets:

ens33:

dhcp4: false

addresses: [192.168.1.211/24]

gateway4: 192.168.1.1

nameservers:

addresses: [114.114.114.114]

enp2s0:

dhcp4: true

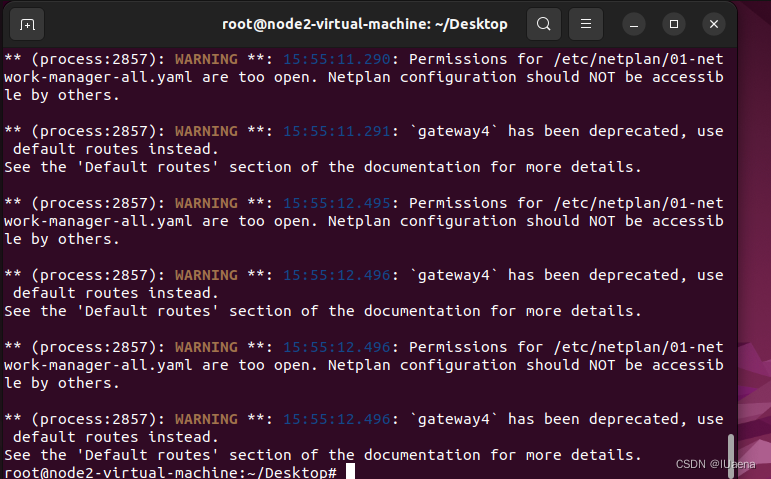

version: 2终端输入以下命令让配置生效

sudo netplan apply

互相ping一下看看是否可以ping通

四、整体环境安装

1、下载四个插件

apt-get install socat

apt-get install conntrack

apt-get install ebtables

apt-get install ipset

apt-get install curl两个机器都要下载

然后基本就是kubesphere官网的离线安装教程了,网址在这里(有些内容下载很慢,可以找个梯子):离线安装 (kubesphere.io)也可以选一个更低版本的装。

这边也跟着官网来一遍:

2、制品制作

制品只需要一个虚拟机制作就可以了

先在ubuntu内找个位置建一个文件夹,所有操作文件都放在里面:

cd /

mkdir kk

cd /kk然后下载kubeky,输入命令等待下载:

export KKZONE=cn

curl -sfL https://get-kk.kubesphere.io | VERSION=v3.0.7 sh -下载完成会自动解压然后是这样的

如果下载不下来可以下载我这里的kk,版本就是v3.0.7:v3.3的v3.0.7的kk资源

然后继续按照官网上说的

创建配置文件

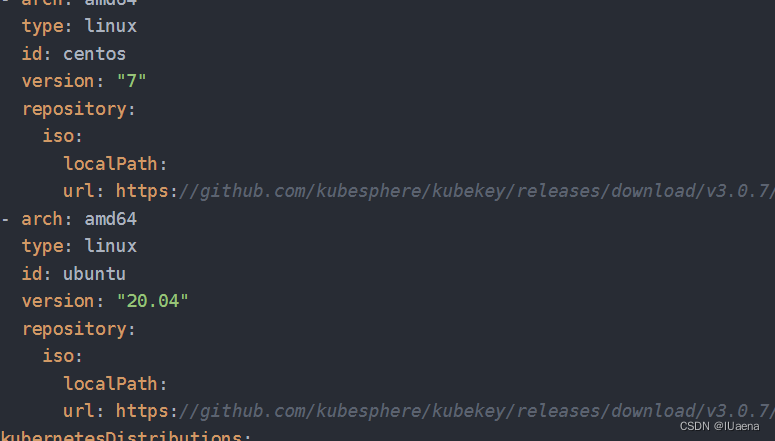

vim manifest.yaml

内容为

---

apiVersion: kubekey.kubesphere.io/v1alpha2

kind: Manifest

metadata:

name: sample

spec:

arches:

- amd64

operatingSystems:

- arch: amd64

type: linux

id: centos

version: "7"

repository:

iso:

localPath:

url: https://github.com/kubesphere/kubekey/releases/download/v3.0.7/centos7-rpms-amd64.iso

- arch: amd64

type: linux

id: ubuntu

version: "20.04"

repository:

iso:

localPath:

url: https://github.com/kubesphere/kubekey/releases/download/v3.0.7/ubuntu-20.04-debs-amd64.iso

kubernetesDistributions:

- type: kubernetes

version: v1.22.12

components:

helm:

version: v3.9.0

cni:

version: v0.9.1

etcd:

version: v3.4.13

## For now, if your cluster container runtime is containerd, KubeKey will add a docker 20.10.8 container runtime in the below list.

## The reason is KubeKey creates a cluster with containerd by installing a docker first and making kubelet connect the socket file of containerd which docker contained.

containerRuntimes:

- type: docker

version: 20.10.8

crictl:

version: v1.24.0

docker-registry:

version: "2"

harbor:

version: v2.5.3

docker-compose:

version: v2.2.2

images:

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-apiserver:v1.22.12

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controller-manager:v1.22.12

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-proxy:v1.22.12

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-scheduler:v1.22.12

- registry.cn-beijing.aliyuncs.com/kubesphereio/pause:3.5

- registry.cn-beijing.aliyuncs.com/kubesphereio/coredns:1.8.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/cni:v3.23.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-controllers:v3.23.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/node:v3.23.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/pod2daemon-flexvol:v3.23.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/typha:v3.23.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/flannel:v0.12.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/provisioner-localpv:3.3.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/linux-utils:3.3.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy:2.3

- registry.cn-beijing.aliyuncs.com/kubesphereio/nfs-subdir-external-provisioner:v4.0.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/k8s-dns-node-cache:1.15.12

- registry.cn-beijing.aliyuncs.com/kubesphereio/ks-installer:v3.3.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/ks-apiserver:v3.3.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/ks-console:v3.3.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/ks-controller-manager:v3.3.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/ks-upgrade:v3.3.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.22.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.21.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/kubectl:v1.20.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/kubefed:v0.8.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/tower:v0.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/minio:RELEASE.2019-08-07T01-59-21Z

- registry.cn-beijing.aliyuncs.com/kubesphereio/mc:RELEASE.2019-08-07T23-14-43Z

- registry.cn-beijing.aliyuncs.com/kubesphereio/snapshot-controller:v4.0.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/nginx-ingress-controller:v1.1.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/defaultbackend-amd64:1.4

- registry.cn-beijing.aliyuncs.com/kubesphereio/metrics-server:v0.4.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/redis:5.0.14-alpine

- registry.cn-beijing.aliyuncs.com/kubesphereio/haproxy:2.0.25-alpine

- registry.cn-beijing.aliyuncs.com/kubesphereio/alpine:3.14

- registry.cn-beijing.aliyuncs.com/kubesphereio/openldap:1.3.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/netshoot:v1.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/cloudcore:v1.9.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/iptables-manager:v1.9.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/edgeservice:v0.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/gatekeeper:v3.5.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/openpitrix-jobs:v3.3.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/devops-apiserver:ks-v3.3.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/devops-controller:ks-v3.3.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/devops-tools:ks-v3.3.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/ks-jenkins:v3.3.0-2.319.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/inbound-agent:4.10-2

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-base:v3.2.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-nodejs:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.1-jdk11

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-python:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.16

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.17

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.18

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-base:v3.2.2-podman

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-nodejs:v3.2.0-podman

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.0-podman

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-maven:v3.2.1-jdk11-podman

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-python:v3.2.0-podman

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.0-podman

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.16-podman

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.17-podman

- registry.cn-beijing.aliyuncs.com/kubesphereio/builder-go:v3.2.2-1.18-podman

- registry.cn-beijing.aliyuncs.com/kubesphereio/s2ioperator:v3.2.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/s2irun:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/s2i-binary:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java11-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java11-runtime:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java8-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/tomcat85-java8-runtime:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/java-11-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/java-8-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/java-8-runtime:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/java-11-runtime:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-8-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-6-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/nodejs-4-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/python-36-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/python-35-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/python-34-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/python-27-centos7:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/argocd:v2.3.3

- registry.cn-beijing.aliyuncs.com/kubesphereio/argocd-applicationset:v0.4.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/dex:v2.30.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/redis:6.2.6-alpine

- registry.cn-beijing.aliyuncs.com/kubesphereio/configmap-reload:v0.5.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus:v2.34.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-config-reloader:v0.55.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/prometheus-operator:v0.55.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.11.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-state-metrics:v2.5.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/node-exporter:v1.3.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/alertmanager:v0.23.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/thanos:v0.25.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/grafana:8.3.3

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-rbac-proxy:v0.8.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager-operator:v1.4.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/notification-manager:v1.4.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/notification-tenant-sidecar:v3.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/elasticsearch-curator:v5.7.6

- registry.cn-beijing.aliyuncs.com/kubesphereio/elasticsearch-oss:6.8.22

- registry.cn-beijing.aliyuncs.com/kubesphereio/fluentbit-operator:v0.13.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/docker:19.03

- registry.cn-beijing.aliyuncs.com/kubesphereio/fluent-bit:v1.8.11

- registry.cn-beijing.aliyuncs.com/kubesphereio/log-sidecar-injector:1.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/filebeat:6.7.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-operator:v0.4.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-exporter:v0.4.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-events-ruler:v0.4.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-auditing-operator:v0.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/kube-auditing-webhook:v0.2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/pilot:1.11.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/proxyv2:1.11.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-operator:1.27

- registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-agent:1.27

- registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-collector:1.27

- registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-query:1.27

- registry.cn-beijing.aliyuncs.com/kubesphereio/jaeger-es-index-cleaner:1.27

- registry.cn-beijing.aliyuncs.com/kubesphereio/kiali-operator:v1.38.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/kiali:v1.38

- registry.cn-beijing.aliyuncs.com/kubesphereio/busybox:1.31.1

- registry.cn-beijing.aliyuncs.com/kubesphereio/nginx:1.14-alpine

- registry.cn-beijing.aliyuncs.com/kubesphereio/wget:1.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/hello:plain-text

- registry.cn-beijing.aliyuncs.com/kubesphereio/wordpress:4.8-apache

- registry.cn-beijing.aliyuncs.com/kubesphereio/hpa-example:latest

- registry.cn-beijing.aliyuncs.com/kubesphereio/fluentd:v1.4.2-2.0

- registry.cn-beijing.aliyuncs.com/kubesphereio/perl:latest

- registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-productpage-v1:1.16.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-reviews-v1:1.16.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-reviews-v2:1.16.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-details-v1:1.16.2

- registry.cn-beijing.aliyuncs.com/kubesphereio/examples-bookinfo-ratings-v1:1.16.3

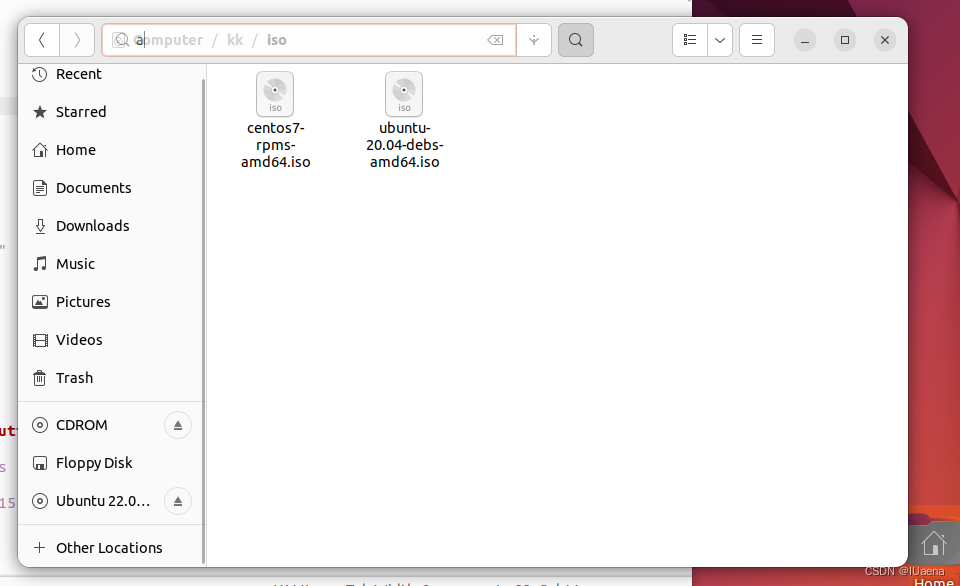

- registry.cn-beijing.aliyuncs.com/kubesphereio/scope:1.13.0但要注意,这里有两个github网址,如果直接用ubuntu下载速度超级慢,可以在主机复制网址下载之后放入虚拟机中。

下载好的文件在/kk目录中创建一个iso文件夹然后放入

下载好的文件在/kk目录中创建一个iso文件夹然后放入

manifest.yaml配置文件修改,将两个url的内容删除,localPath换为iso本地目录,然后保存

type: linux

id: centos

version: "7"

repository:

iso:

localPath: /kk/iso/centos7-rpms-amd64.iso

url:

- arch: amd64

type: linux

id: ubuntu

version: "20.04"

repository:

iso:

localPath: /kk/iso/ubuntu-20.04-debs-amd64.iso

url:

kubernetesDistributions:这里也提供一下两个iso:

kubesphere离线安装v3.3的ubuntu2020iso

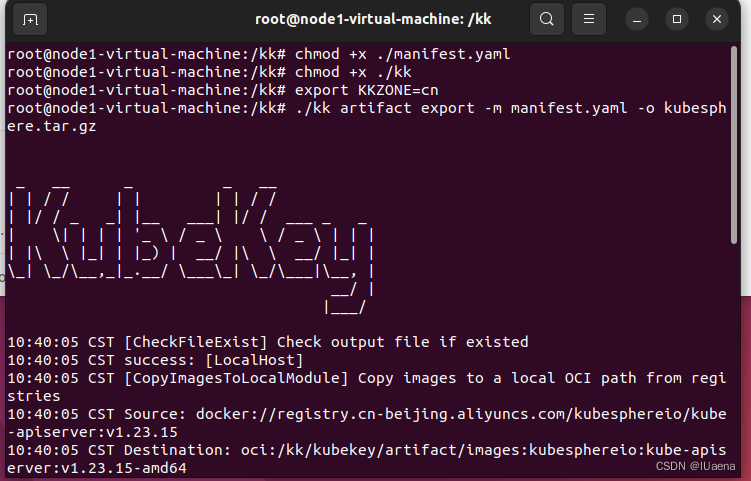

然后就可以下载了,执行以下命令

chmod +x ./manifest.yaml

chmod +x ./kk

export KKZONE=cn

./kk artifact export -m manifest.yaml -o kubesphere.tar.gz

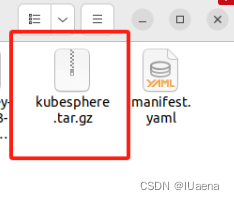

等待好长时间后就可以看到打包完成了

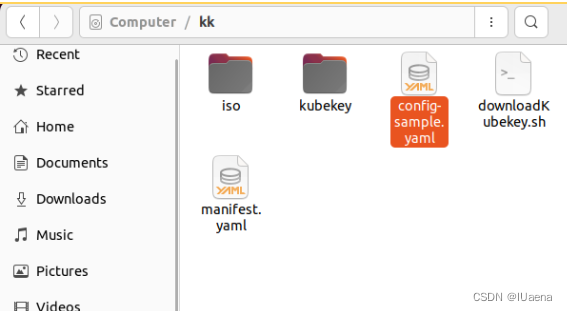

可以将kk文件和刚下载打包好的kubesphere.tar.gz移动到需要离线安装的集群机器上。然后进行kubesphere安装了。我这边就继续在下载机器上安装了。

3、离线安装集群

执行以下命令创建安装的配置文件

./kk create config --with-kubesphere v3.3.2 --with-kubernetes v1.22.12 -f config-sample.yaml

然后根据两个虚拟机集群的情况修改配置文件

gedit config-sample.yaml把圈起来的地方改成自己集群的配置

特别注意这一块

network:

plugin: calico

kubePodsCIDR: 10.233.64.0/18

kubeServiceCIDR: 10.233.0.0/18

multusCNI:

enabled: false

registry:

# 这里加一个type然后写harbor

type: harbor

privateRegistry: ""

namespaceOverride: ""

registryMirrors: []

insecureRegistries: []

addons: []保存文档后执行以下命令(确保要安装的机器都能ping通):

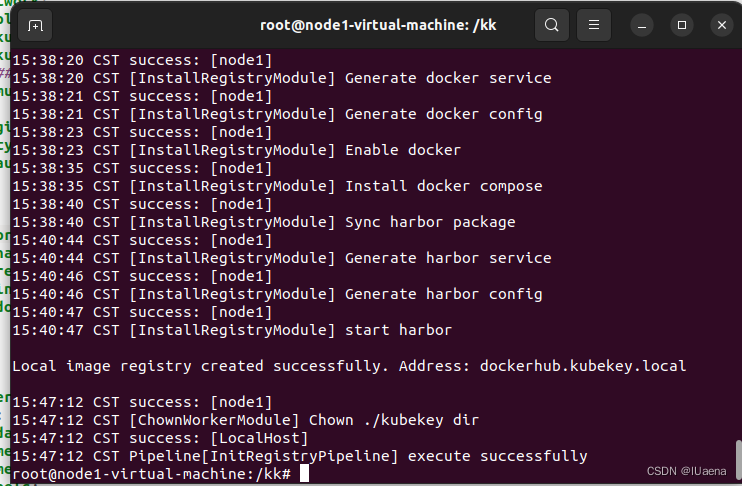

./kk init registry -f config-sample.yaml -a kubesphere.tar.gz

有可能执行会报错,多执行几次,成功是这样的:

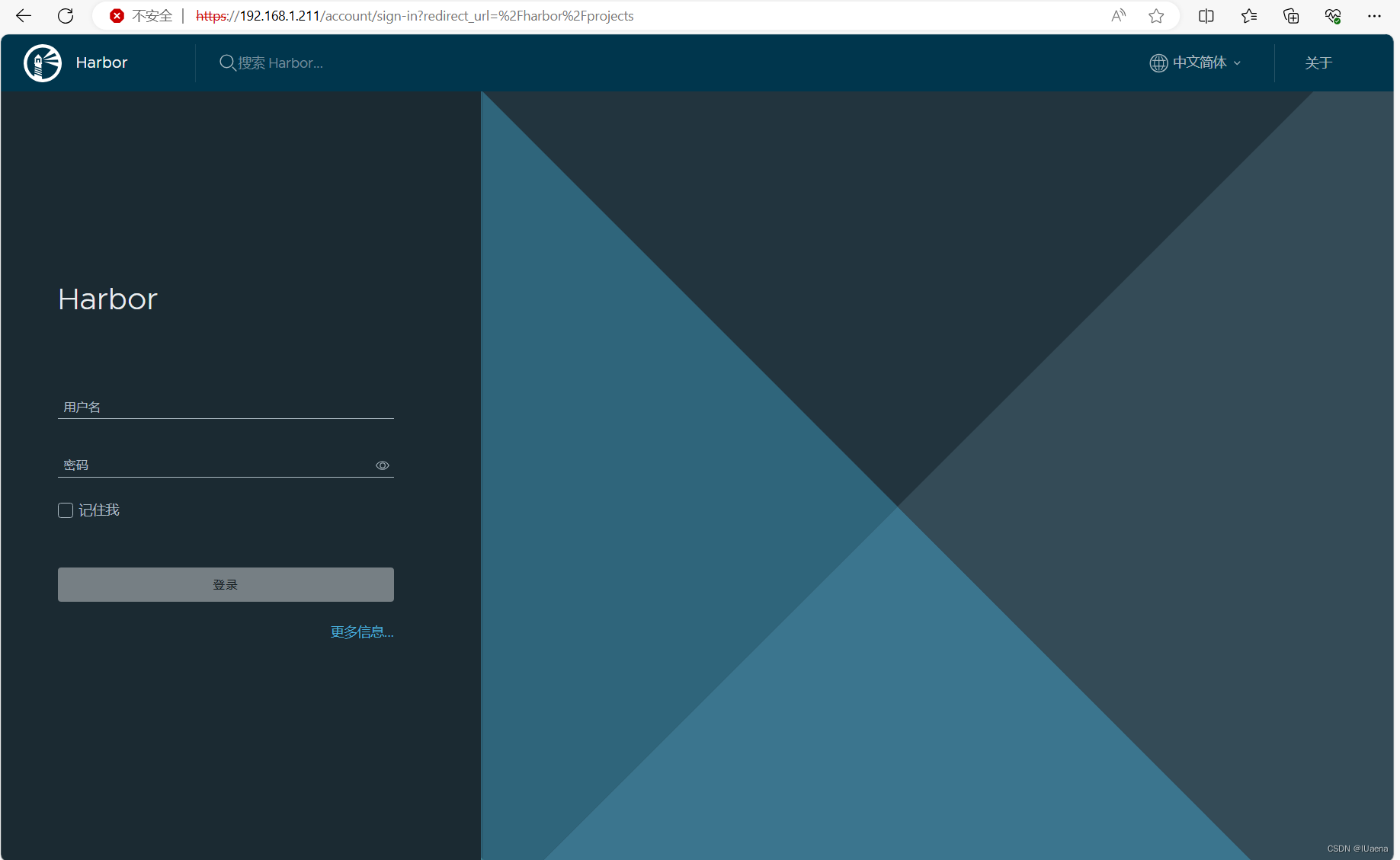

然后用直接访问harbor安装IP查看是否安装成功,我这边就是直接访问192.168.1.211,可能会报不安全,点直接访问就可以了

之后需要用脚本来设置harbor

mkdir /kk/harborSh

cd /kk/harborSh

vim create_project_harbor.sh

然后给sh脚本添加以下内容并保存

#!/usr/bin/env bash

# Copyright 2018 The KubeSphere Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

url="https://dockerhub.kubekey.local" #修改url的值为https://dockerhub.kubekey.local

user="admin"

passwd="Harbor12345"

harbor_projects=(library

kubesphereio

kubesphere

argoproj

calico

coredns

openebs

csiplugin

minio

mirrorgooglecontainers

osixia

prom

thanosio

jimmidyson

grafana

elastic

istio

jaegertracing

jenkins

weaveworks

openpitrix

joosthofman

nginxdemos

fluent

kubeedge

openpolicyagent

)

for project in "${harbor_projects[@]}"; do

echo "creating $project"

curl -u "${user}:${passwd}" -X POST -H "Content-Type: application/json" "${url}/api/v2.0/projects" -d "{ \"project_name\": \"${project}\", \"public\": true}" -k #curl命令末尾加上 -k

done然后赋予权限并执行脚本

chmod +x create_project_harbor.sh

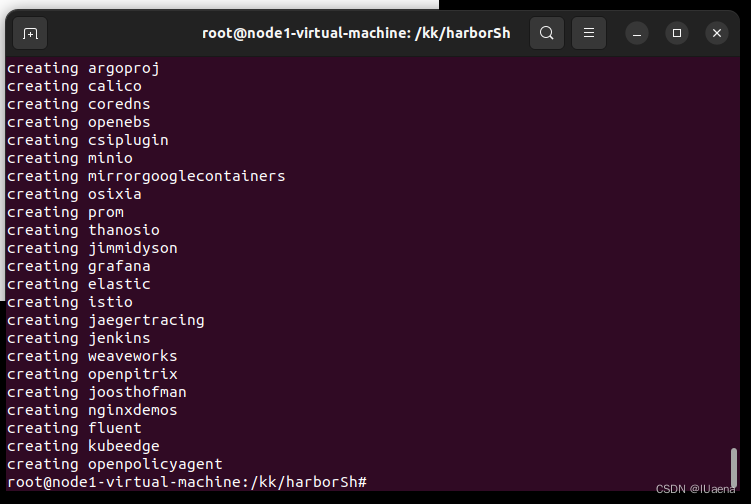

./create_project_harbor.sh

执行成功后可以看到

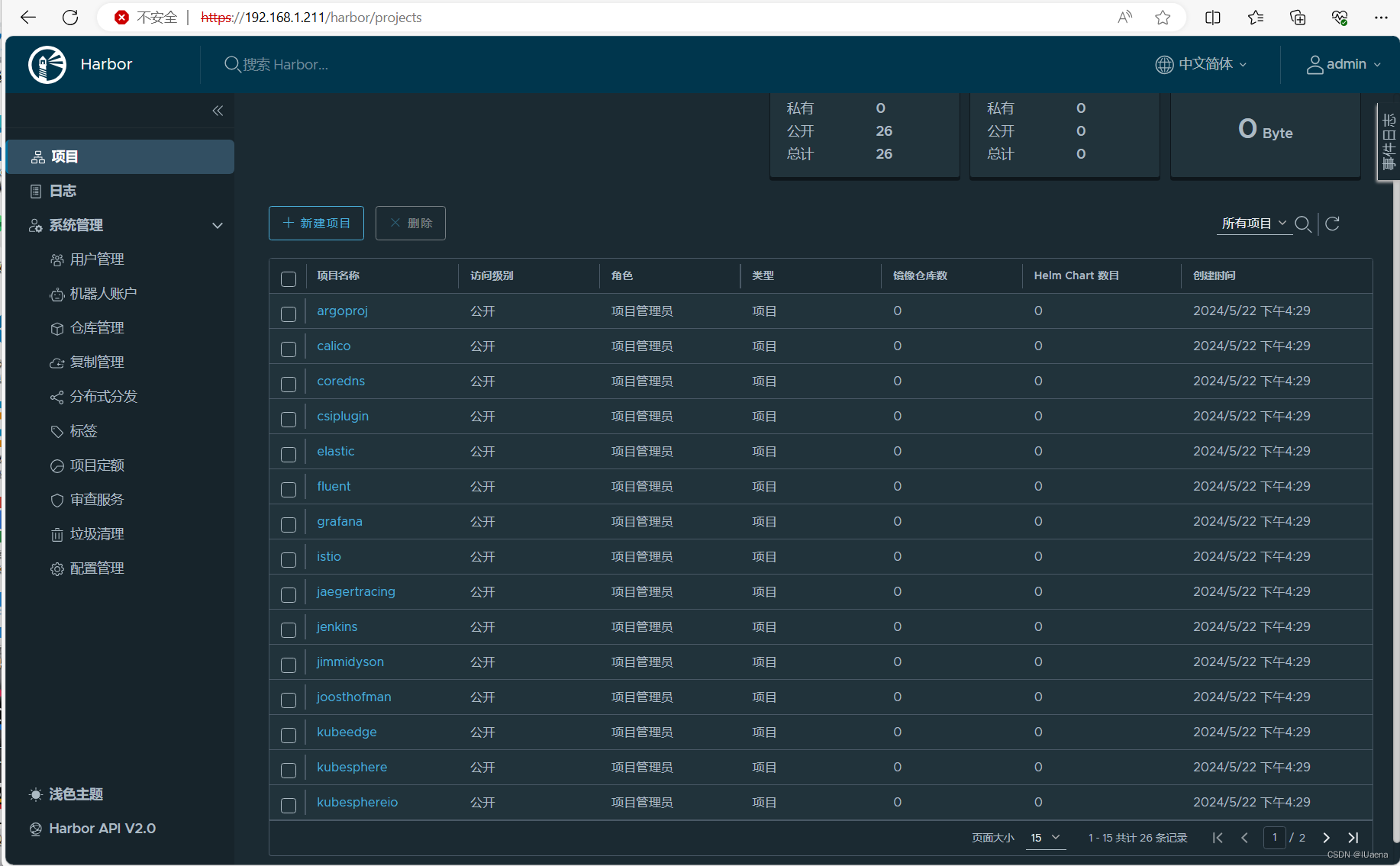

也可以登录harbor去看一看成功没成功,账号admin,密码Harbor12345,可以看到很多镜像已经添加成功了

再次执行以下命令修改集群配置文件,给namespaceOverride增加值

cd /kk

gedit config-sample.yaml

其他不用改,registry下面要添加下面的内容

network:

plugin: calico

kubePodsCIDR: 10.233.64.0/18

kubeServiceCIDR: 10.233.0.0/18

## multus support. https://github.com/k8snetworkplumbingwg/multus-cni

multusCNI:

enabled: false

registry:

type: harbor

auths:

"dockerhub.kubekey.local":

username: admin

password: Harbor12345

privateRegistry: "dockerhub.kubekey.local"

namespaceOverride: "kubesphereio"

registryMirrors: []

insecureRegistries: []

addons: []然后执行命令

./kk create cluster -f config-sample.yaml -a kubesphere.tar.gz --with-packages中途需要输入yes同意安装。

如果安装中途出现下载或者对应依赖包版本不正确的情况,那就看看报错信息是缺少什么依赖,然后在manifest.yaml文件中写入,然后重新制作制品再来安装。

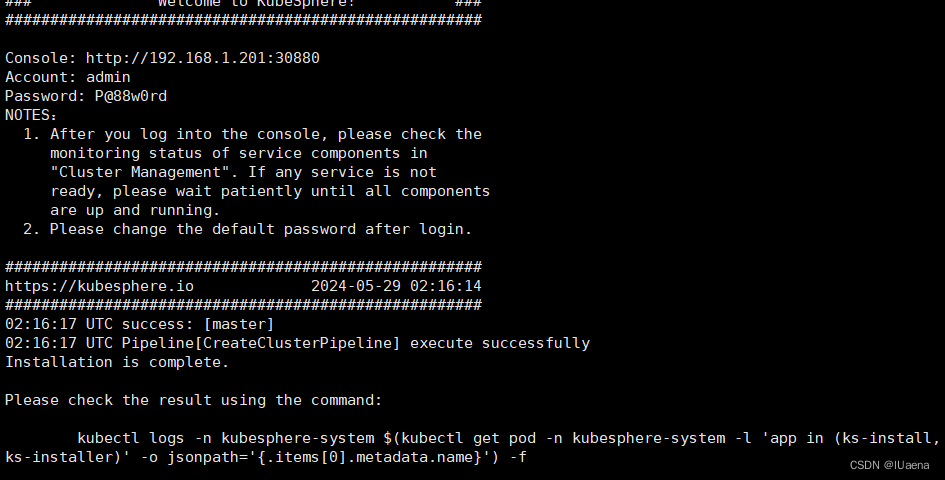

等待很长时间后

然后访问地址192.168.1.211:30880,出现网页,就是安装成功了。

账号admin,密码P@88w0rd,登录成功后是这样的

五、安装完成后配置调整

1、kubesphere链接harbor

将 nodelocaldns 解析都转发给 coredns

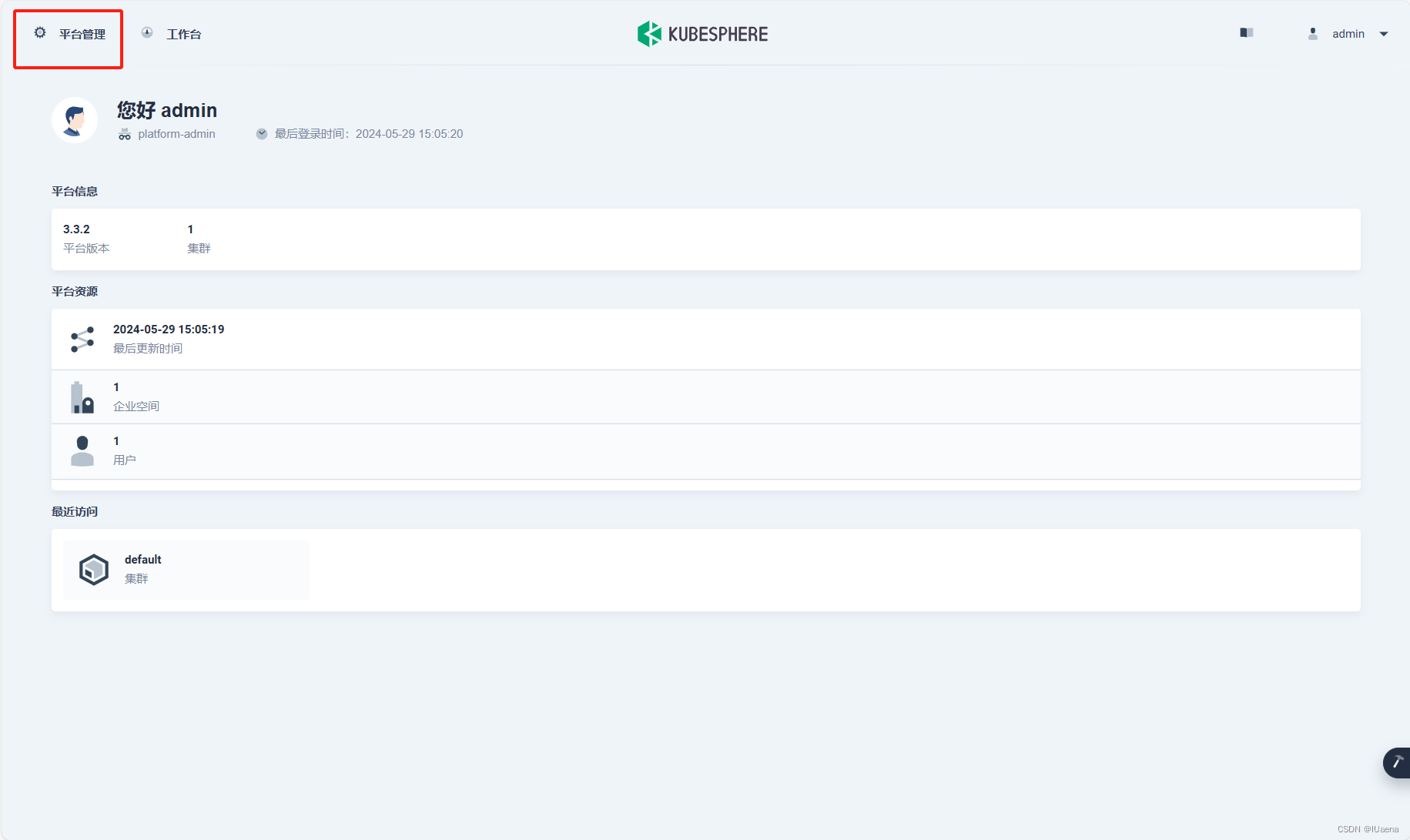

点击kubesphere平台的平台管理,然后点击集群管理

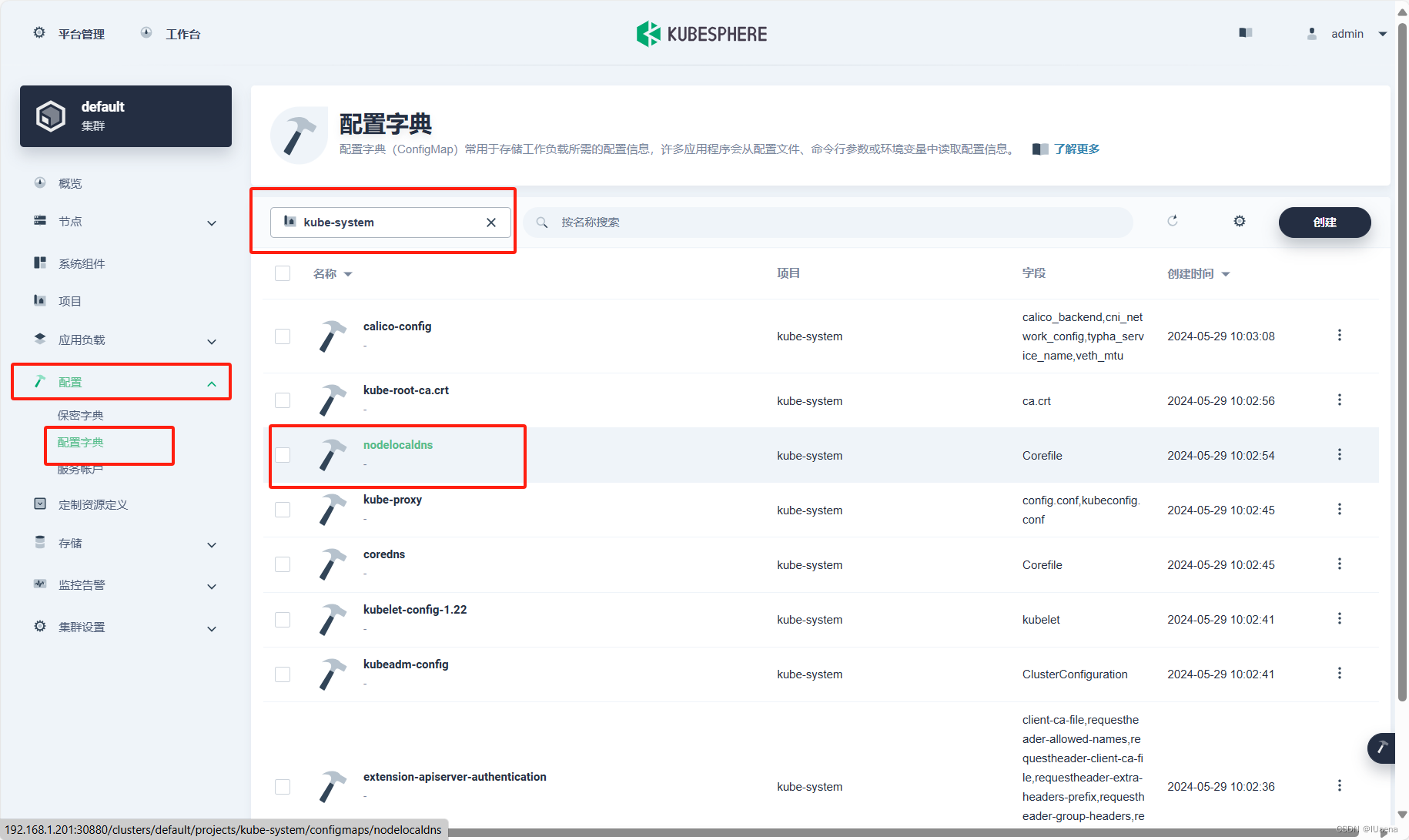

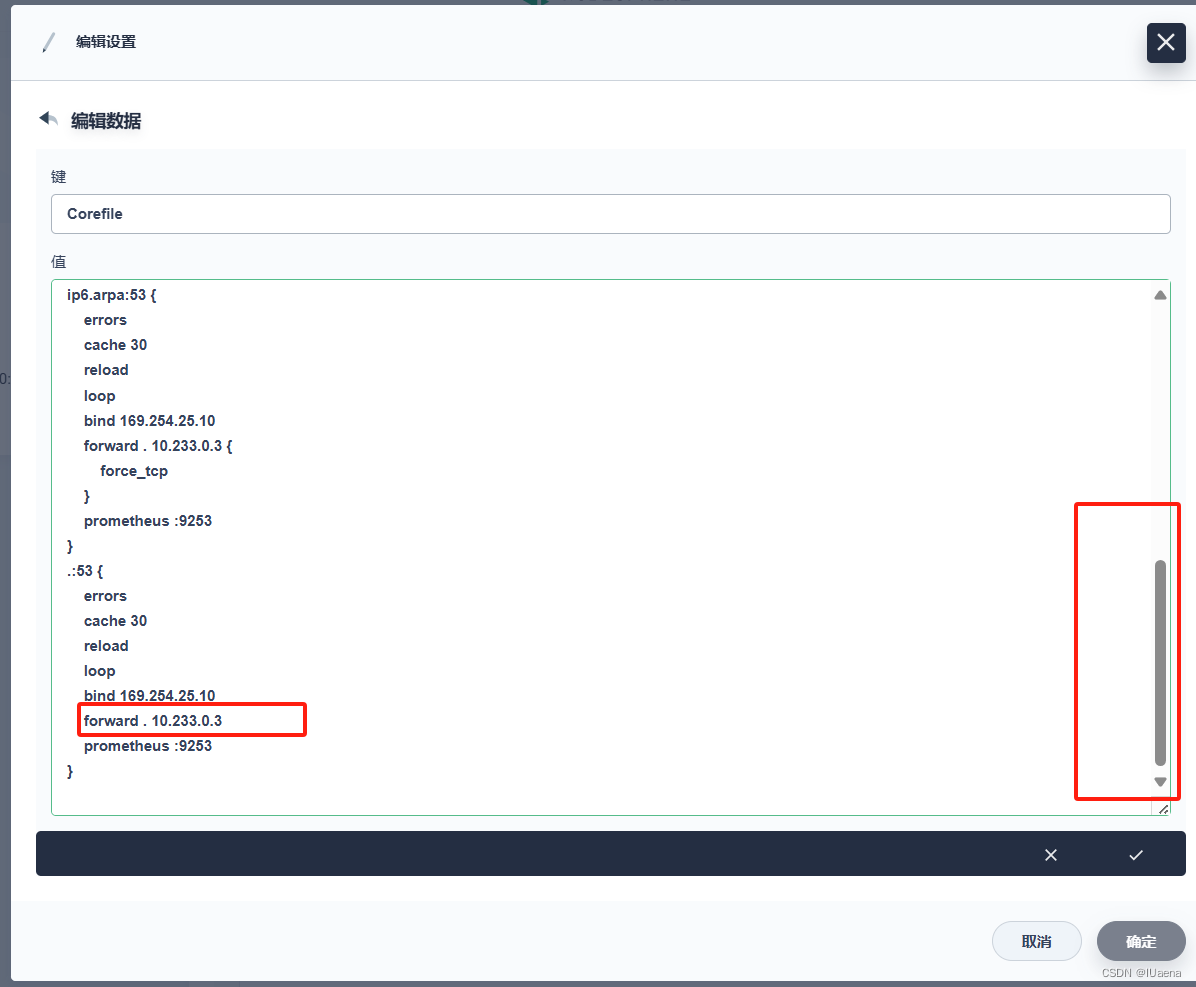

然后依次点击配置-配置字典-搜索kube-system-点击进入nodelocaldns

点击更多操作-编辑设置

点击修改图标

滚动到最下面,然后修改内容如下,将forward后面的内容修改为 . 10.233.0.3【注意空格】,然后点击确认

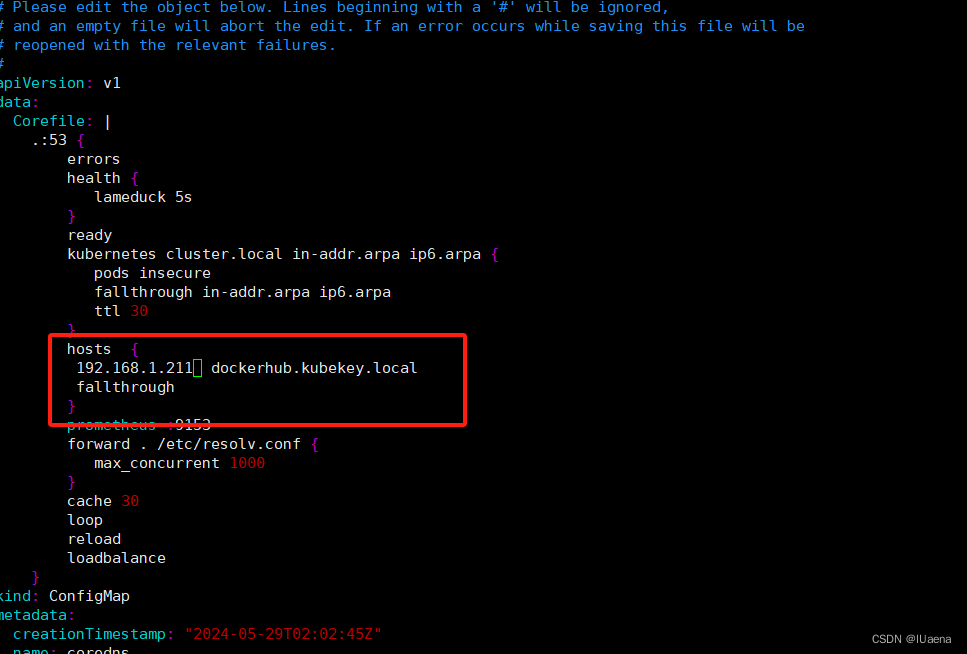

在 coredns 中添加主机记录

在你的安装harbor的机器中输入以下命令,我这边就是211下操作

kubectl edit cm coredns -n kube-system在这个位置加入红框中的内容并保存,ip地址就是harbor的地址,记得保存

hosts {

192.168.1.211 dockerhub.kubekey.local

fallthrough

}

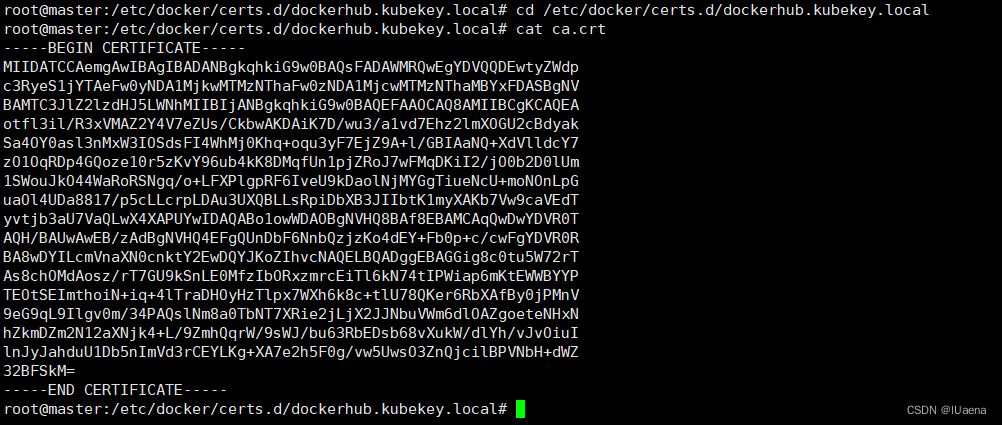

添加证书

在你的安装harbor的机器中输入以下命令,我这边就是211下操作

cd /etc/docker/certs.d/dockerhub.kubekey.local

cat ca.crt

然后将文本中的内容全部复制

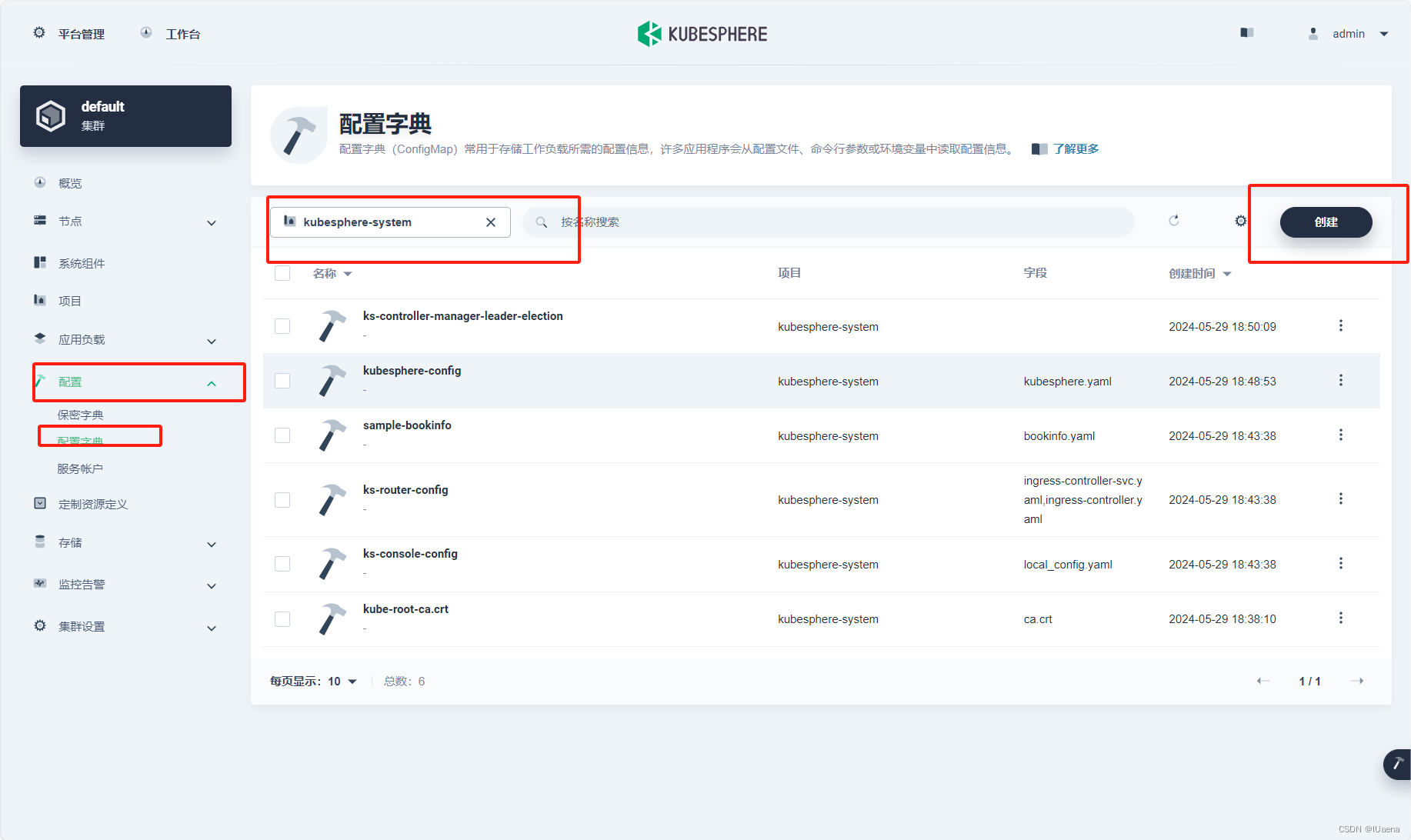

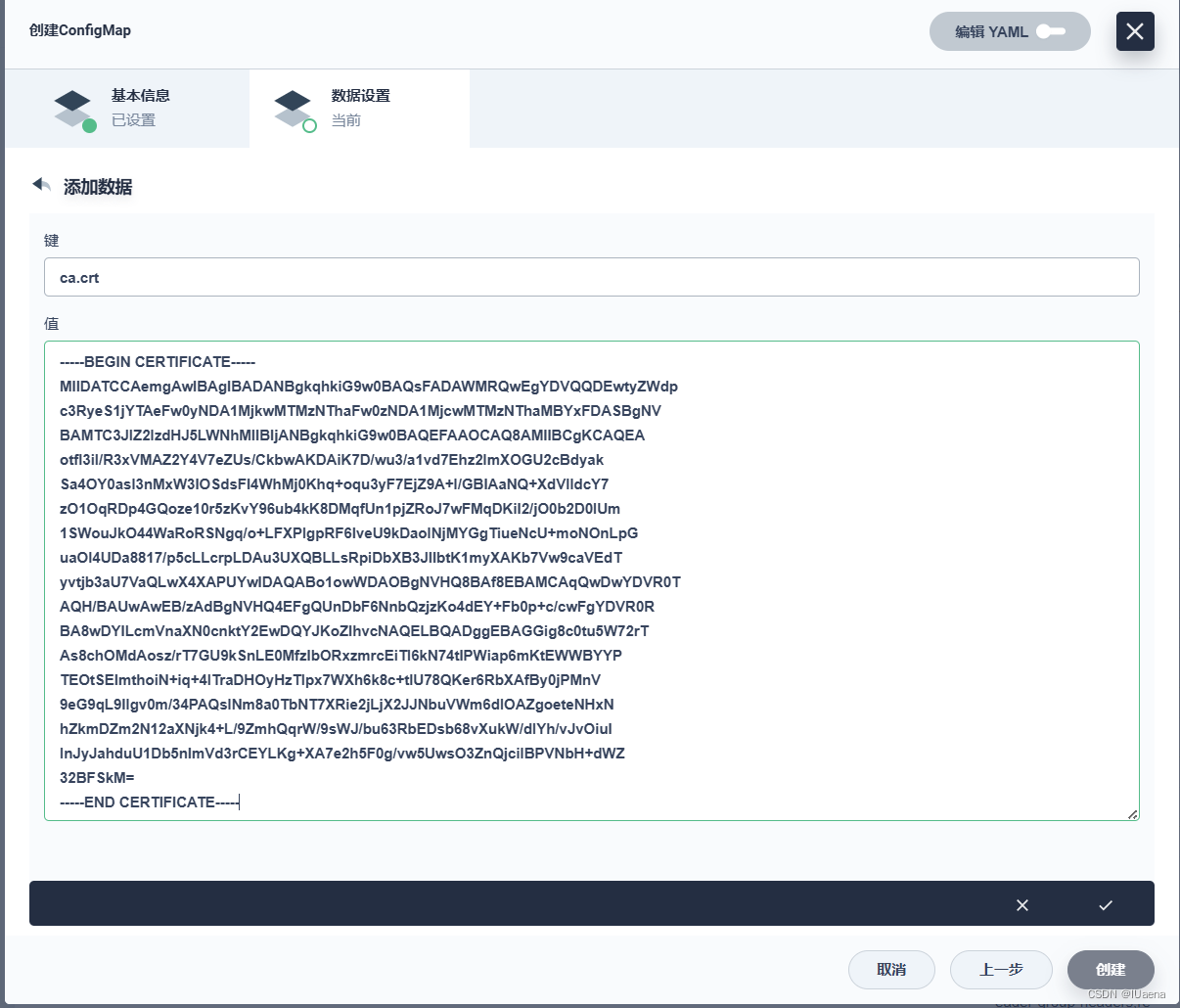

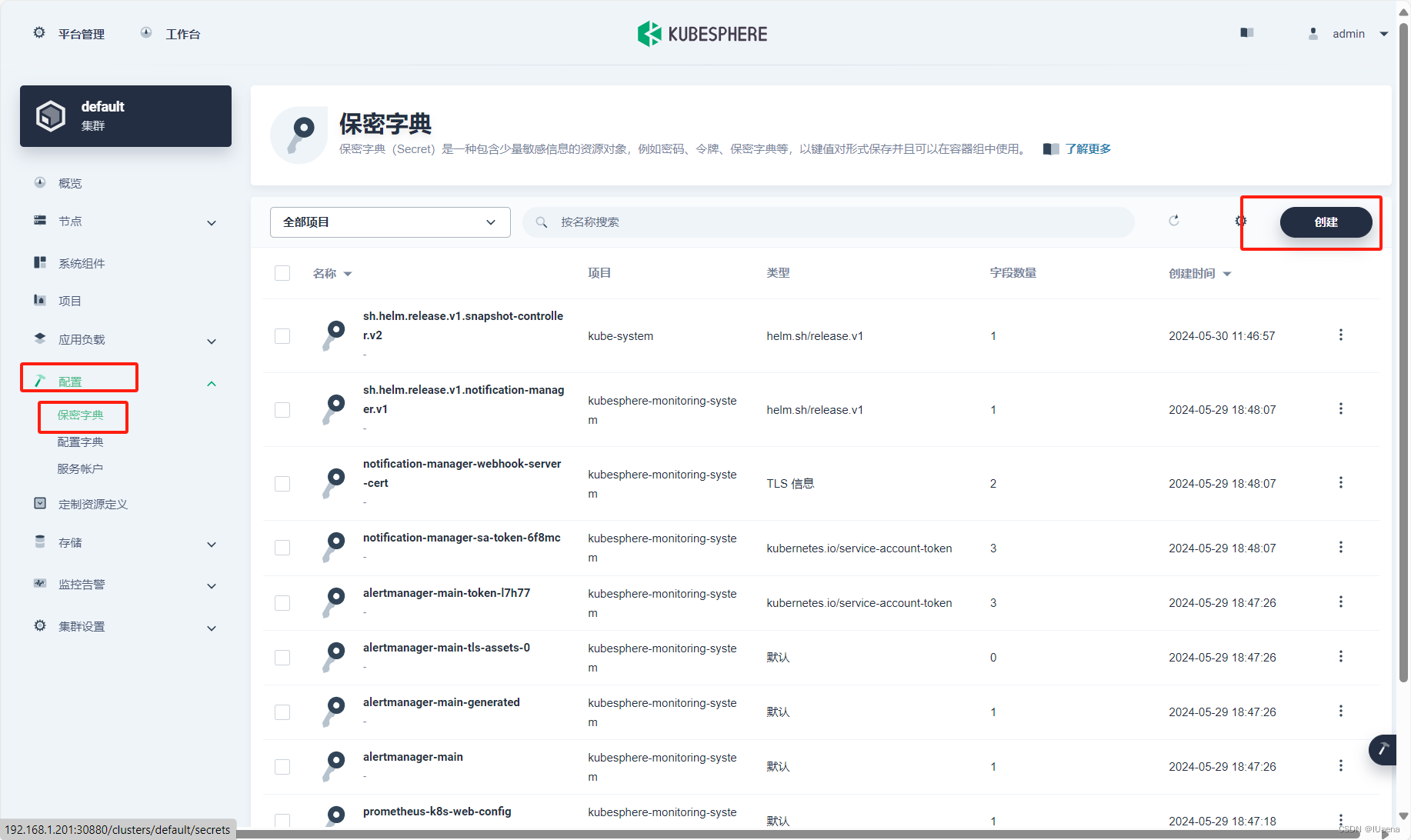

然后创建配置字典,点击配置-配置字典,搜索kubesphere-system-点击创建

然后输入对应信息点击下一步

harbor4shl-ca

kubesphere-system

然后名称设置为ca.crt,内容为刚才终端中复制的证书,点击创建对号然后点击创建

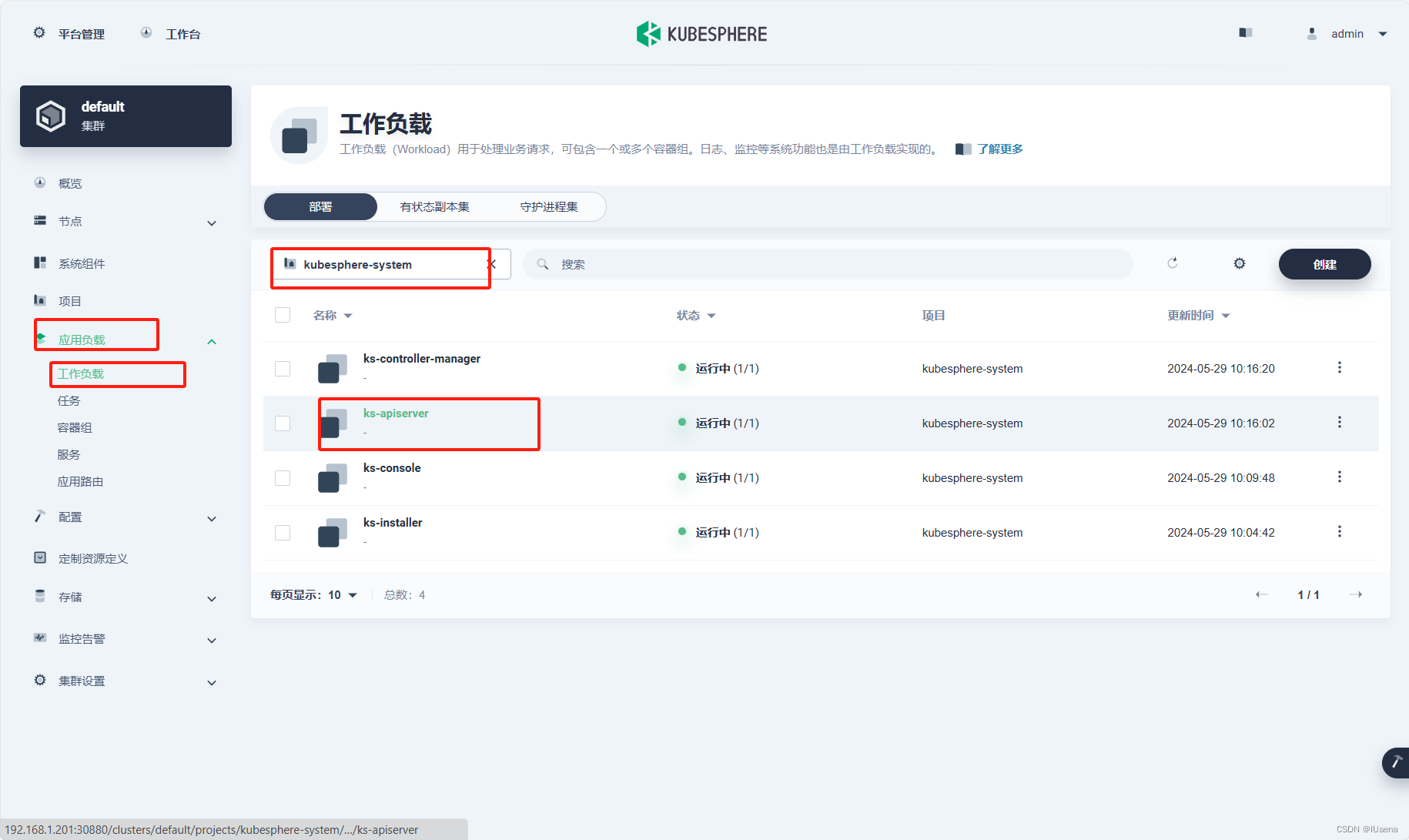

点击应用负载-工作负载-搜索kubesphere-system-点击ks-apiserver

点击更多操作-编辑设置

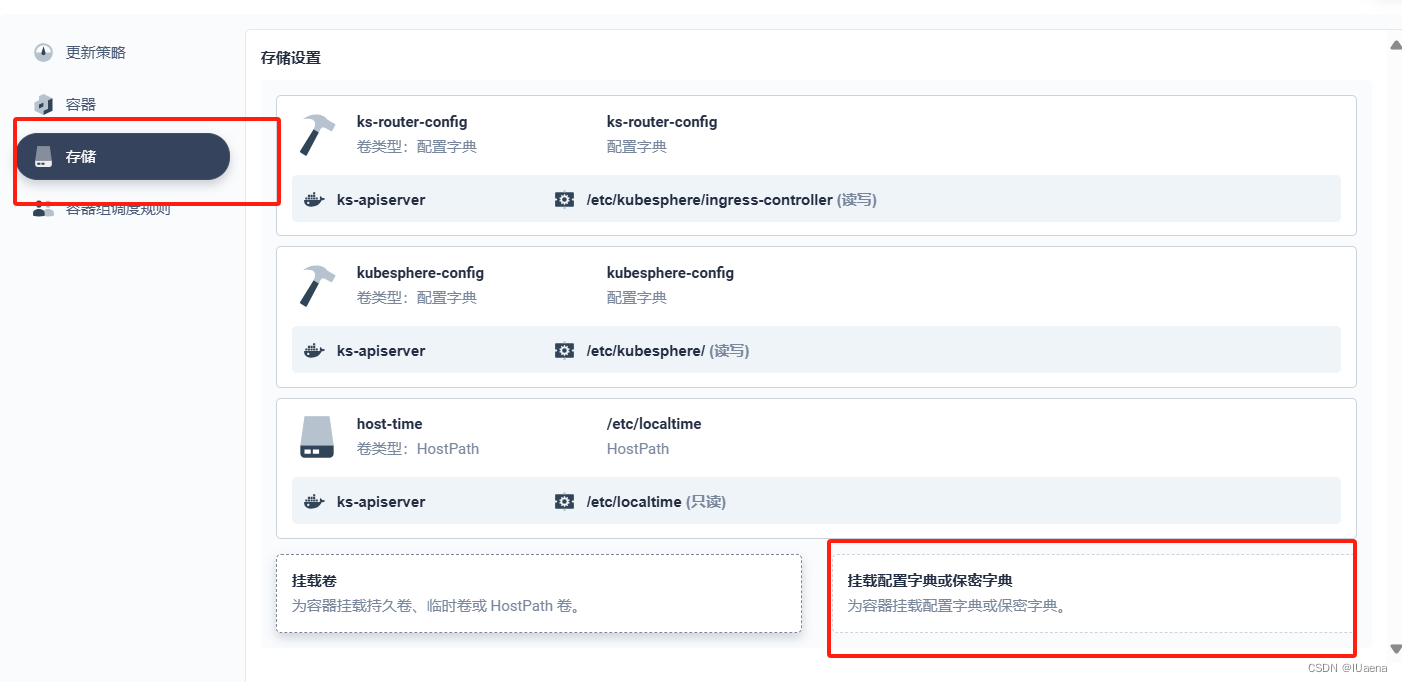

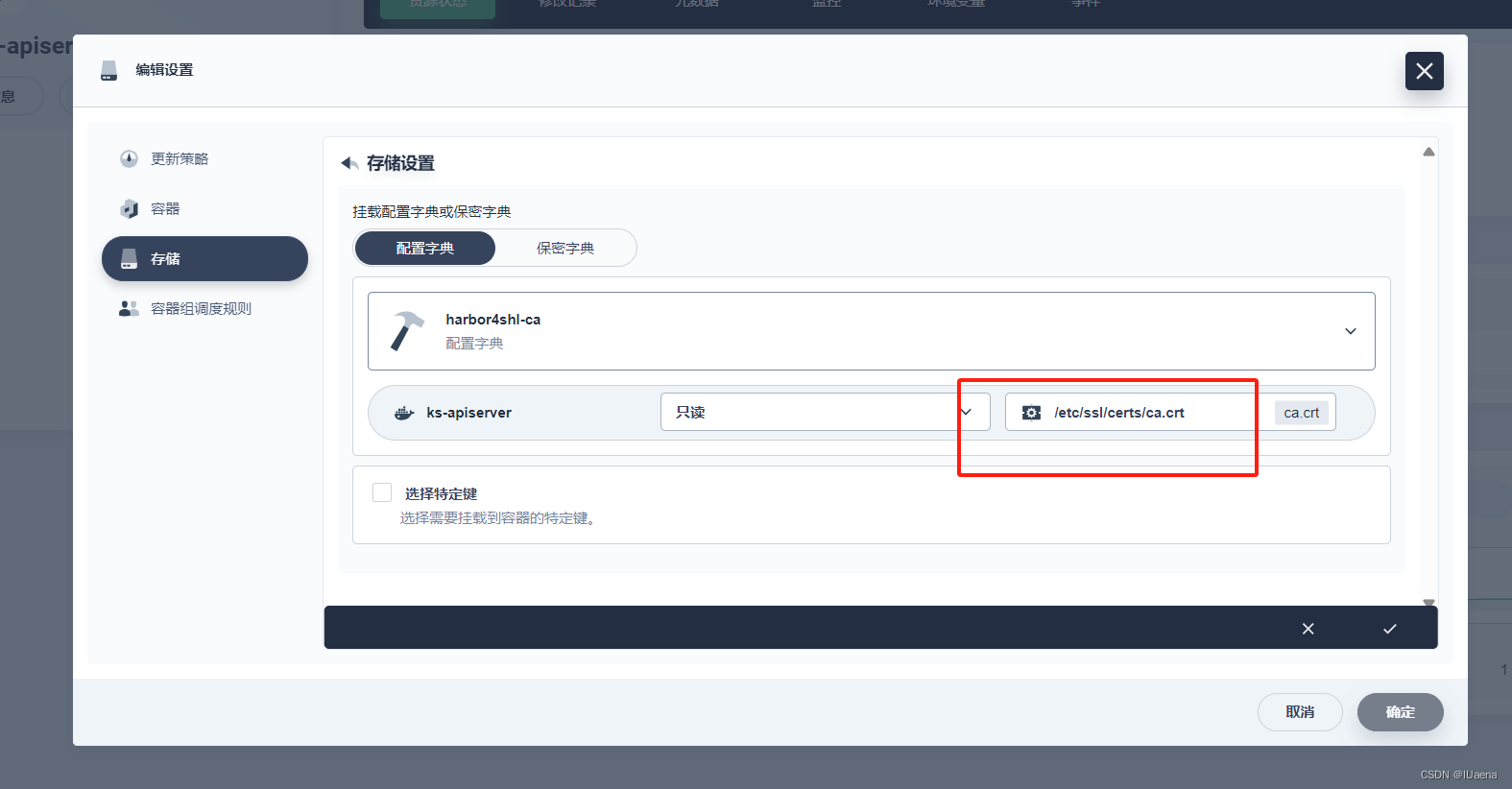

点击存储-挂载配置字典或保密字典

然后输入以下配置和选项

/etc/ssl/certs/ca.crt

点击对号,并点击确认

修改完后平台可能会掉线,然后等待平台重启再进入就行了

也可以去测试一下

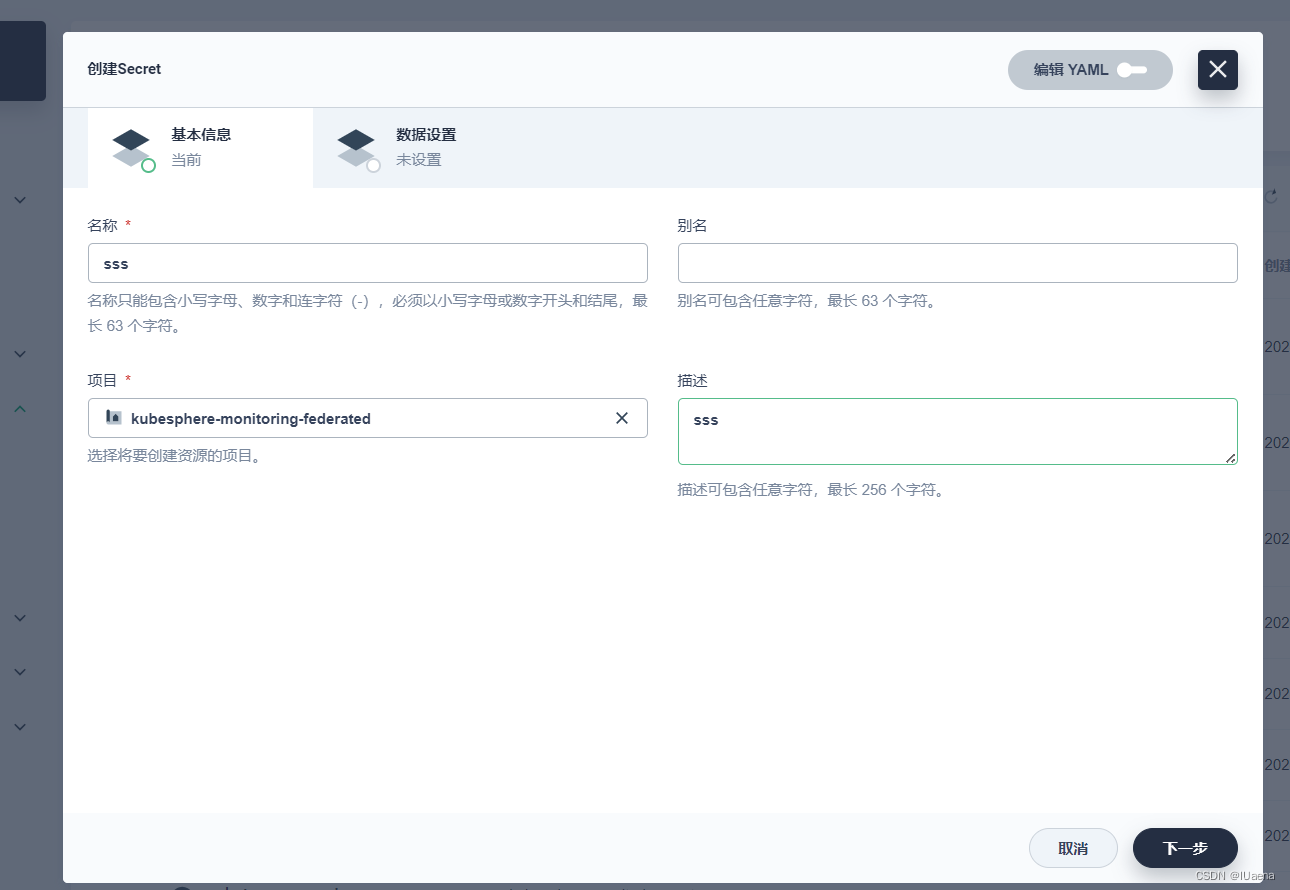

随便建一个保密字典,信息随便填然后点下一步

类型选择镜像服务信息,镜像服务地址选择https://,输入harbor地址,输入登录harbor的用户名和密码,然后点击验证。出现镜像服务验证通过即为成功。

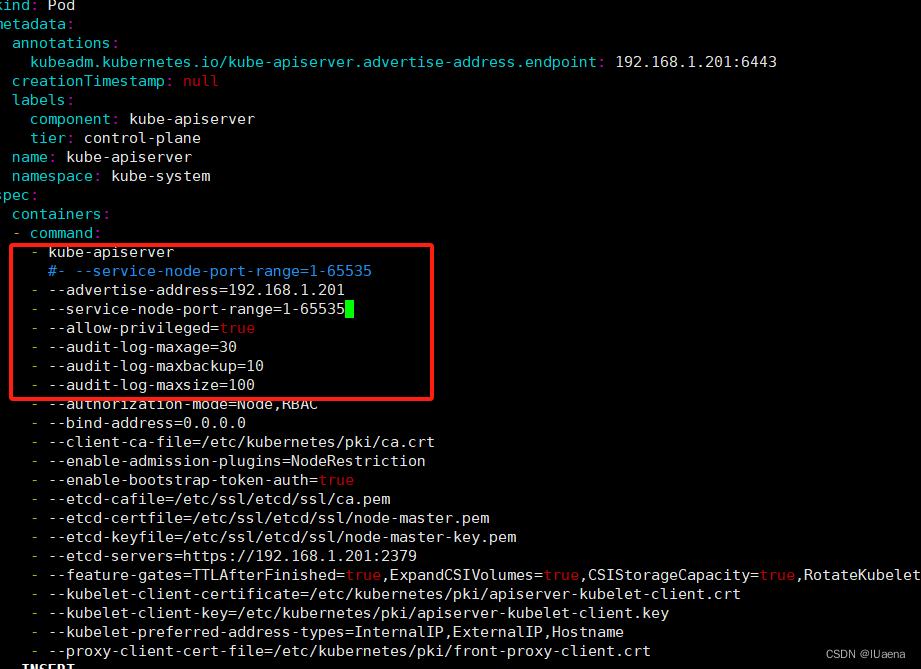

2、解除kubesphere端口号限制

kubesphere端口号默认限制了30000-32767,只要稍微配置就能解除了

在kubesphere部署的机器终端上输入命令

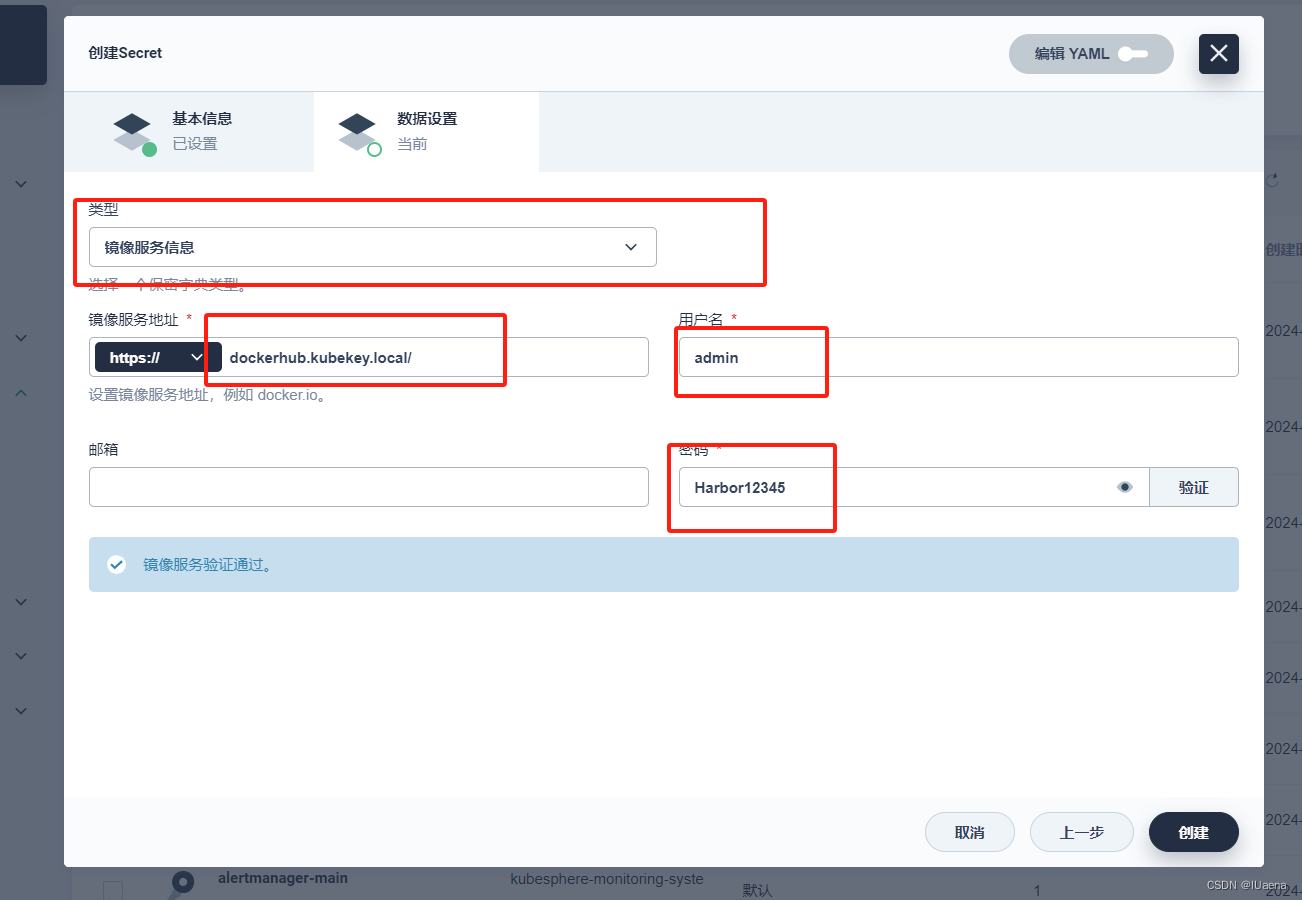

vim /etc/kubernetes/manifests/kube-apiserver.yaml在目标行插入以下字段并保存退出

- --service-node-port-range=1-65535

然后在终端重启一下服务让配置生效

systemctl restart kubelet.service到此kubesphere整体安装并配置就完成了,出错可以留言。

1366

1366

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?