```python
import torch
import torch.nn as nn
from torch.nn.init import constant_


class Conv2D3(nn.Module):
    """2D convolution (cross-correlation) with multiple input and output channels, written with explicit loops."""

    def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0):
        super(Conv2D3, self).__init__()
        weight_attr = constant_(torch.empty(size=(out_channels, in_channels, kernel_size, kernel_size)), val=1.0)
        bias_attr = constant_(torch.empty(size=(out_channels,)), val=0.0)
        # Convolution kernels: P groups of D two-dimensional kernels, shape [P, D, U, V]
        self.weight = nn.Parameter(weight_attr, requires_grad=True)
        # Biases: one per output channel, shape [P]
        self.bias = nn.Parameter(bias_attr, requires_grad=True)
        self.stride = stride
        self.padding = padding
        # Number of input channels (D)
        self.in_channels = in_channels
        # Number of output channels (P)
        self.out_channels = out_channels

    # Basic convolution of a single input channel with a single 2D kernel
    def single_forward(self, X, weight):
        """X: [B, M, N] (one channel of every sample in the batch); weight: [U, V]."""
        # Zero padding on both spatial dimensions
        new_X = torch.zeros([X.shape[0], X.shape[1] + 2 * self.padding, X.shape[2] + 2 * self.padding],
                            dtype=X.dtype, device=X.device)
        new_X[:, self.padding:X.shape[1] + self.padding, self.padding:X.shape[2] + self.padding] = X
        u, v = weight.shape
        # Output height and width
        output_h = (new_X.shape[1] - u) // self.stride + 1
        output_w = (new_X.shape[2] - v) // self.stride + 1
        output = torch.zeros([X.shape[0], output_h, output_w], dtype=X.dtype, device=X.device)
        for i in range(output_h):
            for j in range(output_w):
                # Multiply the current window by the kernel element-wise and sum over the window
                output[:, i, j] = torch.sum(
                    new_X[:, self.stride * i:self.stride * i + u, self.stride * j:self.stride * j + v] * weight,
                    dim=[1, 2])
        return output

    def forward(self, inputs):
        """
        Input:
        - inputs: input tensor, shape=[B, D, M, N]
        Parameters used (stored on the module):
        - self.weight: P groups of 2D kernels, shape=[P, D, U, V]
        - self.bias: P biases, shape=[P]
        Returns a tensor of shape [B, P, M', N'].
        """
        feature_maps = []
        # Run one multi-input-channel convolution per output channel
        for w, b in zip(self.weight, self.bias):  # P pairs (w, b); each iteration computes one feature map Z_p
            multi_outs = []
            # Convolve each input channel with its own kernel
            for i in range(self.in_channels):
                single = self.single_forward(inputs[:, i, :, :], w[i])
                multi_outs.append(single)
            # Stacking gives [D, B, M', N']; summing over dim 0 adds the per-channel results element-wise
            feature_map = torch.sum(torch.stack(multi_outs), dim=0) + b  # Z_p
            feature_maps.append(feature_map)
        # Stack all Z_p along dim 1 into a single [B, P, M', N'] tensor
        out = torch.stack(feature_maps, 1)
        return out
```
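The nested Python loops in `Conv2D3` are easy to follow but slow. A common alternative (im2col) gathers every kernel-sized window into a column with `torch.nn.functional.unfold` and replaces the loops with a single matrix multiplication. The function below is a minimal sketch of that idea, not part of the original code: the name `conv2d_unfold` is hypothetical, and it assumes integer `stride`/`padding` and `weight`/`bias` shaped like `Conv2D3.weight` ([P, D, U, V]) and a bias of shape [P].

```python
import torch
import torch.nn.functional as F


def conv2d_unfold(inputs, weight, bias, stride=1, padding=0):
    """Hypothetical im2col version of Conv2D3.forward.

    inputs: [B, D, M, N], weight: [P, D, U, V], bias: [P] -> returns [B, P, M', N'].
    """
    B, D, M, N = inputs.shape
    P, _, U, V = weight.shape
    # Output size, same formula as in single_forward
    out_h = (M + 2 * padding - U) // stride + 1
    out_w = (N + 2 * padding - V) // stride + 1
    # Each column holds one flattened D*U*V window: [B, D*U*V, out_h*out_w]
    cols = F.unfold(inputs, kernel_size=(U, V), padding=padding, stride=stride)
    # [P, D*U*V] @ [B, D*U*V, L] broadcasts to [B, P, L]; then add one bias per output channel
    out = weight.reshape(P, -1) @ cols + bias.reshape(1, P, 1)
    return out.reshape(B, P, out_h, out_w)


torch.manual_seed(0)
x = torch.randn(2, 3, 5, 5)
w = torch.randn(4, 3, 3, 3)
b = torch.randn(4)
y = conv2d_unfold(x, w, b, stride=2, padding=1)
print(y.shape)  # torch.Size([2, 4, 3, 3])
print(torch.allclose(y, F.conv2d(x, w, b, stride=2, padding=1), atol=1e-5))
```

The flattening order of `F.unfold` (channel first, then kernel rows and columns) matches `weight.reshape(P, -1)`, which is why one matrix product reproduces the triple sum over channels and window positions.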

```python
# Example input: one 5x5 image with 3 channels, shape [1, 3, 5, 5]
inputs = torch.tensor([[[[0,1,1,0,2], [2,2,2,2,1], [1,0,0,2,0], [0,1,1,0,0], [1,2,0,0,2]],
                        [[1,0,2,2,0], [0,0,0,2,0], [1,2,1,2,1], [1,0,0,0,0], [1,2,1,1,1]],
                        [[2,1,2,0,0], [1,0,0,1,0], [0,2,1,0,1], [0,1,2,2,2], [2,1,0,0,1]]]]).float()

conv2d = Conv2D3(in_channels=3, out_channels=2, kernel_size=3, stride=2, padding=1)
# Replace the default parameters with fixed values: kernels of shape [2, 3, 3, 3] and biases of shape [2]
conv2d.weight = torch.nn.parameter.Parameter(torch.tensor(
    [[[[-1,1,0],[0,1,0],[0,1,1]], [[-1,-1,0],[0,0,0],[0,-1,0]], [[0,0,-1],[0,1,0],[1,-1,-1]]],
     [[[1,1,-1],[-1,-1,1],[0,-1,1]], [[0,1,0],[-1,0,-1],[-1,1,0]], [[-1,0,0],[-1,0,1],[-1,0,0]]]]).float(),
    requires_grad=True)
conv2d.bias = torch.nn.parameter.Parameter(torch.tensor([1,0]).float(), requires_grad=True)
```
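With `kernel_size=3`, `stride=2` and `padding=1`, the output size follows from the same formula `single_forward` uses: padded input size minus kernel size, divided by the stride (rounding down), plus one. Writing $s$ for the stride, $\mathrm{pad}$ for the padding and $\tilde{X}$ for the zero-padded input, the 5×5 example gives:

$$
M' = \left\lfloor \frac{M + 2\,\mathrm{pad} - U}{s} \right\rfloor + 1 = \left\lfloor \frac{5 + 2 \cdot 1 - 3}{2} \right\rfloor + 1 = 3,
\qquad
N' = \left\lfloor \frac{N + 2\,\mathrm{pad} - V}{s} \right\rfloor + 1 = 3
$$

$$
Z_p[i, j] = b_p + \sum_{d=0}^{D-1} \sum_{u=0}^{U-1} \sum_{v=0}^{V-1} W_{p,d,u,v}\, \tilde{X}_{d,\, s i + u,\, s j + v}
$$

So with $B = 1$ and $P = 2$, the output should have shape [1, 2, 3, 3].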

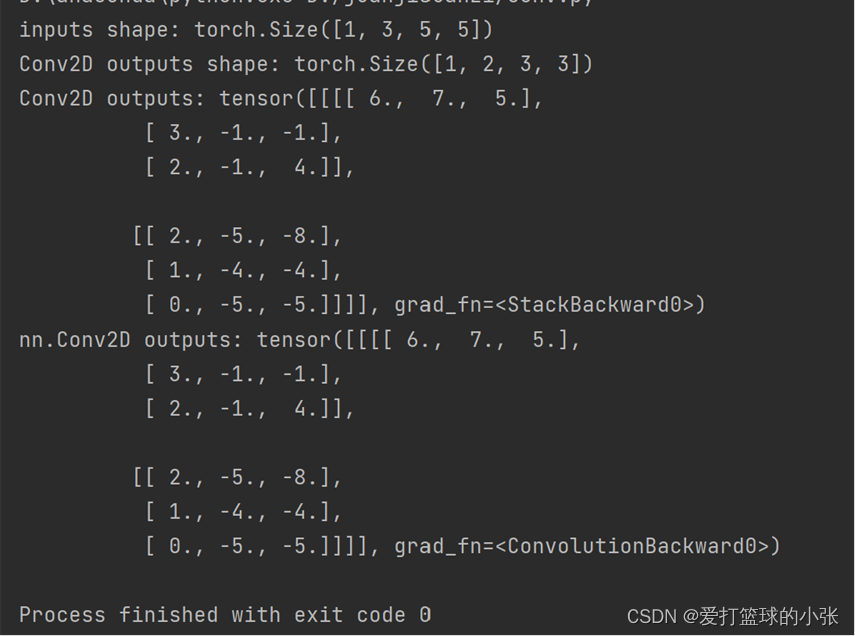

```python
print("inputs shape:", inputs.shape)
outputs = conv2d(inputs)
print("Conv2D outputs shape:", outputs.shape)

# Compare with the built-in PyTorch nn.Conv2d, loaded with the same kernels and biases
conv2d_paddle = nn.Conv2d(in_channels=3, out_channels=2, kernel_size=3, stride=2, padding=1)
conv2d_paddle.weight = torch.nn.parameter.Parameter(torch.tensor(
    [[[[-1,1,0],[0,1,0],[0,1,1]], [[-1,-1,0],[0,0,0],[0,-1,0]], [[0,0,-1],[0,1,0],[1,-1,-1]]],
     [[[1,1,-1],[-1,-1,1],[0,-1,1]], [[0,1,0],[-1,0,-1],[-1,1,0]], [[-1,0,0],[-1,0,1],[-1,0,0]]]]).float(),
    requires_grad=True)
conv2d_paddle.bias = torch.nn.parameter.Parameter(torch.tensor([1,0]).float(), requires_grad=True)
outputs_paddle = conv2d_paddle(inputs)

# Result of the custom operator
print('Conv2D outputs:', outputs)
# Result of the built-in nn.Conv2d
print('nn.Conv2D outputs:', outputs_paddle)
# The two results should agree numerically
print('Match:', torch.allclose(outputs, outputs_paddle))
```
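As a hand check of a single entry, the self-contained sketch below repeats the example data and recomputes the top-left element of the first output channel, `outputs[0, 0, 0, 0]`, by multiplying the zero-padded 3×3 window of each input channel by its kernel, summing, and adding the bias $b_0 = 1$. It uses `torch.nn.functional.pad` for the padding instead of the manual copy in `single_forward`; the variable names are illustrative only.

```python
import torch
import torch.nn.functional as F

# Same example data: input [1, 3, 5, 5], kernels [2, 3, 3, 3], biases [2]
inputs = torch.tensor([[[[0,1,1,0,2], [2,2,2,2,1], [1,0,0,2,0], [0,1,1,0,0], [1,2,0,0,2]],
                        [[1,0,2,2,0], [0,0,0,2,0], [1,2,1,2,1], [1,0,0,0,0], [1,2,1,1,1]],
                        [[2,1,2,0,0], [1,0,0,1,0], [0,2,1,0,1], [0,1,2,2,2], [2,1,0,0,1]]]]).float()
weight = torch.tensor(
    [[[[-1,1,0],[0,1,0],[0,1,1]], [[-1,-1,0],[0,0,0],[0,-1,0]], [[0,0,-1],[0,1,0],[1,-1,-1]]],
     [[[1,1,-1],[-1,-1,1],[0,-1,1]], [[0,1,0],[-1,0,-1],[-1,1,0]], [[-1,0,0],[-1,0,1],[-1,0,0]]]]).float()
bias = torch.tensor([1.0, 0.0])

# Zero-pad the single sample by 1 on every side: [3, 7, 7]
padded = F.pad(inputs[0], (1, 1, 1, 1))
# Output position (0, 0) reads padded rows 0..2 and columns 0..2
window = padded[:, 0:3, 0:3]
# Contribution of each input channel to output channel p = 0
per_channel = (window * weight[0]).sum(dim=(1, 2))
entry = per_channel.sum() + bias[0]
print(per_channel.tolist())  # [4.0, 0.0, 1.0]
print(entry.item())  # 6.0
print(F.conv2d(inputs, weight, bias, stride=2, padding=1)[0, 0, 0, 0].item())  # 6.0
```

Only the lower-right 2×2 part of each kernel overlaps real pixels at this position; the rest of the window is padding and contributes zero.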
