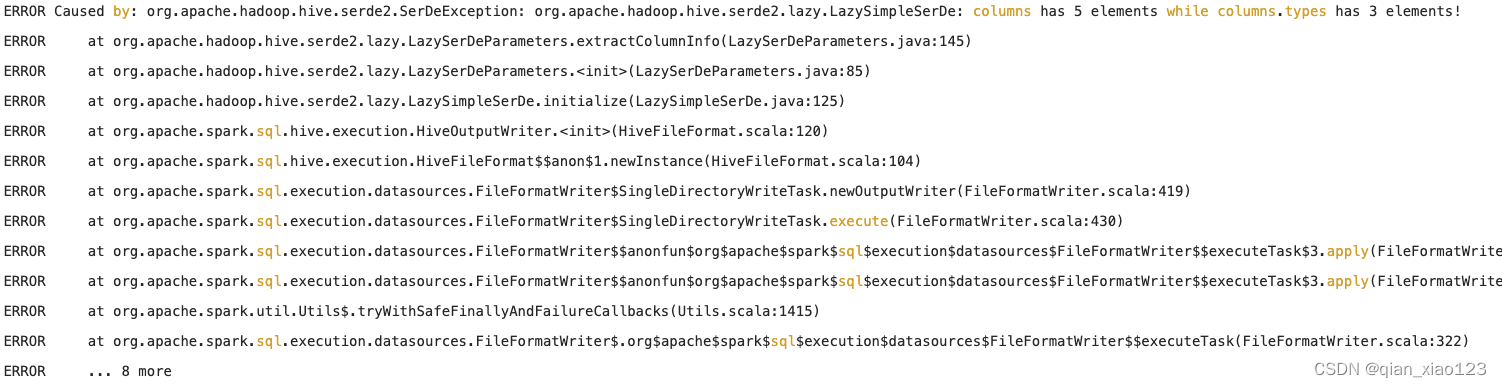

1. Caused by: org.apache.hadoop.hive.serde2.SerDeException: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe: columns has 4 elements while columns.types has 3 elements!

SQL:

insert overwrite directory 'xxx'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\001'

select

id ,

contract_id ,

date_format(update_time,'yyyy-MM-dd hh:mm:ss')

from (

Fix:

An expression column such as date_format(update_time,'yyyy-MM-dd hh:mm:ss') must be given an alias:

insert overwrite directory 'xxx'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\001'

select

id ,

contract_id ,

date_format(update_time,'yyyy-MM-dd hh:mm:ss') as update_time

from (

With the alias in place, the error no longer occurs.

--------------------------------------------------------------------------------------------------------------------------------

Spark 2.3: the problem occurs.

Source code of the part that fails:

public void extractColumnInfo() throws SerDeException {

String columnNameProperty = this.tableProperties.getProperty("columns");

String columnTypeProperty = this.tableProperties.getProperty("columns.types");

if (columnNameProperty != null && columnNameProperty.length() > 0) {

// The names are split on "," here, so an unaliased expression that contains "," adds one extra element per comma

this.columnNames = Arrays.asList(columnNameProperty.split(","));

} else {

this.columnNames = new ArrayList();

}

if (columnTypeProperty == null) {

StringBuilder sb = new StringBuilder();

for(int i = 0; i < this.columnNames.size(); ++i) {

if (i > 0) {

sb.append(":");

}

sb.append("string");

}

columnTypeProperty = sb.toString();

}

this.columnTypes = TypeInfoUtils.getTypeInfosFromTypeString(columnTypeProperty);

if (this.columnNames.size() != this.columnTypes.size()) {

// this is where the error is thrown

throw new SerDeException(this.serdeName + ": columns has " + this.columnNames.size() + " elements while columns.types has " + this.columnTypes.size() + " elements!");

}

}
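To make the mismatch concrete, here is a minimal sketch that repeats the two counting steps from the method above outside of Hive. The property values are assumptions about what the failing query produces (the exact auto-generated column name may differ), and the type list is split on ":" as a simplification of TypeInfoUtils.getTypeInfosFromTypeString, which gives the same count for primitive types.

```java
import java.util.Arrays;
import java.util.List;

class ColumnCountDemo {
    // Same operation as in extractColumnInfo(): a plain split on ",".
    static List<String> splitNames(String columns) {
        return Arrays.asList(columns.split(","));
    }

    // Simplification (assumption): primitive type names are separated by ":".
    static List<String> splitTypes(String columnTypes) {
        return Arrays.asList(columnTypes.split(":"));
    }

    public static void main(String[] args) {
        // Illustrative values only: the third column has no alias, so its
        // generated name is the expression text, which contains a comma.
        String columns = "id,contract_id,date_format(update_time, yyyy-MM-dd hh:mm:ss)";
        String types = "bigint:bigint:string";

        List<String> names = splitNames(columns);
        List<String> typeList = splitTypes(types);

        // Prints: names=4 types=3
        System.out.println("names=" + names.size() + " types=" + typeList.size());
        // The expression is cut into two fragments at its comma.
        System.out.println(names);
    }
}
```

The three selected columns turn into four names because the expression text is cut at its own comma, while the type list still has three entries.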

To verify that the "," split really is the cause, change the SQL as follows:

insert overwrite directory 'xxx'

ROW FORMAT DELIMITED FIELDS TERMINATED BY '\001'

select

id ,

contract_id ,

-- two commas

substr(update_time,1,10)

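from (

The query now fails with: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe: columns has 5 elements while columns.types has 3 elements!

This confirms the cause. With an alias, the column name becomes the alias, which contains no comma, so the split no longer produces extra elements.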
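The same reasoning can be turned into a small check that lists which output columns would need an alias. This is only a sketch: the class and method names are hypothetical, and the generated column name used for the substr expression is an assumption.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class AliasCheckDemo {
    // Number of elements a plain split on "," yields once the names are joined.
    static int splitCount(List<String> names) {
        return String.join(",", names).split(",").length;
    }

    // Names containing "," are the ones that get cut apart and need an alias.
    static List<String> needAlias(List<String> names) {
        List<String> result = new ArrayList<>();
        for (String name : names) {
            if (name.contains(",")) {
                result.add(name);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Assumed generated name for the unaliased substr(...) column.
        List<String> unaliased = Arrays.asList(
                "id", "contract_id", "substring(update_time, 1, 10)");
        List<String> aliased = Arrays.asList("id", "contract_id", "update_time");

        System.out.println(splitCount(unaliased)); // prints 5 (two commas, two extra elements)
        System.out.println(needAlias(unaliased));  // prints [substring(update_time, 1, 10)]
        System.out.println(splitCount(aliased));   // prints 3
    }
}
```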

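Spark 3.1: the problem does not occur. In this version of the method the column-name delimiter is read from the table properties (serdeConstants.COLUMN_NAME_DELIMITER) and only defaults to ",":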

/**

* Extracts and set column names and column types from the table properties

* @throws SerDeException

*/

public void extractColumnInfo() throws SerDeException {

// Read the configuration parameters

String columnNameProperty = tableProperties.getProperty(serdeConstants.LIST_COLUMNS);

// NOTE: if "columns.types" is missing, all columns will be of String type

String columnTypeProperty = tableProperties.getProperty(serdeConstants.LIST_COLUMN_TYPES);

// Parse the configuration parameters

String columnNameDelimiter = tableProperties.containsKey(serdeConstants.COLUMN_NAME_DELIMITER) ? tableProperties

.getProperty(serdeConstants.COLUMN_NAME_DELIMITER) : String.valueOf(SerDeUtils.COMMA);

if (columnNameProperty != null && columnNameProperty.length() > 0) {

columnNames = Arrays.asList(columnNameProperty.split(columnNameDelimiter));

} else {

columnNames = new ArrayList<String>();

}

if (columnTypeProperty == null) {

// Default type: all string

StringBuilder sb = new StringBuilder();

for (int i = 0; i < columnNames.size(); i++) {

if (i > 0) {

sb.append(":");

}

sb.append(serdeConstants.STRING_TYPE_NAME);

}

columnTypeProperty = sb.toString();

}

columnTypes = TypeInfoUtils.getTypeInfosFromTypeString(columnTypeProperty);

if (columnNames.size() != columnTypes.size()) {

throw new SerDeException(serdeName + ": columns has " + columnNames.size()

+ " elements while columns.types has " + columnTypes.size() + " elements!");

}

}
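A minimal sketch of the delimiter lookup in the newer method, written against java.util.Properties only. The key strings "columns" and "column.name.delimiter" are written out as I understand the Hive constants, and the ";" delimiter is a hypothetical value chosen for illustration, so treat all of them as assumptions. Pattern.quote is an addition of this sketch so that the delimiter is always matched literally.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Properties;
import java.util.regex.Pattern;

class DelimiterDemo {
    // Assumed literal values of serdeConstants.LIST_COLUMNS / COLUMN_NAME_DELIMITER.
    static final String LIST_COLUMNS = "columns";
    static final String COLUMN_NAME_DELIMITER = "column.name.delimiter";

    static List<String> columnNames(Properties props) {
        String names = props.getProperty(LIST_COLUMNS);
        // Fall back to "," only when no delimiter is configured.
        String delimiter = props.containsKey(COLUMN_NAME_DELIMITER)
                ? props.getProperty(COLUMN_NAME_DELIMITER)
                : ",";
        if (names == null || names.isEmpty()) {
            return new ArrayList<>();
        }
        return Arrays.asList(names.split(Pattern.quote(delimiter)));
    }

    public static void main(String[] args) {
        String expr = "date_format(update_time, yyyy-MM-dd hh:mm:ss)";

        // Default delimiter: the comma inside the expression splits it.
        Properties byComma = new Properties();
        byComma.setProperty(LIST_COLUMNS, "id,contract_id," + expr);
        System.out.println(columnNames(byComma).size()); // prints 4

        // Hypothetical non-comma delimiter: the expression stays in one piece.
        Properties bySemicolon = new Properties();
        bySemicolon.setProperty(LIST_COLUMNS, "id;contract_id;" + expr);
        bySemicolon.setProperty(COLUMN_NAME_DELIMITER, ";");
        System.out.println(columnNames(bySemicolon).size()); // prints 3
    }
}
```

When the writer of the properties picks a delimiter that cannot appear in a column name, a comma inside an expression no longer changes the element count.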
