1. MFS environment deployment

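# Create four virtual machines: server1, server2, server3 and server4. server1 is the master; server2, server3 and server4 are chunk servers (storage); the physical host acts as the client.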

Official site: https://moosefs.com/

## yum repository

[root@server1 ~]# curl "http://ppa.moosefs.com/MooseFS-3-el7.repo" > /etc/yum.repos.d/MooseFS.repo

[root@server1 ~]# cd /etc/yum.repos.d/

[root@server1 yum.repos.d]# ls

dvd.repo MooseFS.repo redhat.repo

[root@server1 yum.repos.d]# vim MooseFS.repo

[root@server1 yum.repos.d]# cat MooseFS.repo

[MooseFS]

name=MooseFS $releasever - $basearch

baseurl=http://ppa.moosefs.com/moosefs-3/yum/el7

gpgcheck=0

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-MooseFS

enabled=1

[root@server1 ~]# scp /etc/yum.repos.d/MooseFS.repo server2:/etc/yum.repos.d/

[root@server1 ~]# scp /etc/yum.repos.d/MooseFS.repo server3:/etc/yum.repos.d/

[root@server1 ~]# scp /etc/yum.repos.d/MooseFS.repo server4:/etc/yum.repos.d/

[root@server1 ~]# scp /etc/yum.repos.d/MooseFS.repo zhenji:/etc/yum.repos.d/

[root@server1 ~]# yum install moosefs-chunkserver -y

[root@server1 yum.repos.d]# yum install moosefs-master moosefs-cgi moosefs-cgiserv moosefs-cli -y

[root@server2 ~]# yum install moosefs-chunkserver -y

[root@server3 ~]# yum install moosefs-chunkserver -y

### Deploy server1

[root@server1 ~]# vim /etc/hosts

172.25.3.1 server1 mfsmaster
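The same hosts entry is added by hand on every node in this walkthrough. A minimal sketch of an idempotent version follows; the function name `ensure_mfsmaster_entry` is hypothetical, and the demo writes to a scratch file rather than /etc/hosts.

```shell
# Sketch: append "IP server1 mfsmaster" to a hosts file only if no
# non-comment line already names mfsmaster.
ensure_mfsmaster_entry() {
    hosts_file=$1
    ip=$2
    # Match "mfsmaster" as a whole word on a line that is not commented out.
    if grep -Eq '^[^#]*[[:space:]]mfsmaster([[:space:]]|$)' "$hosts_file" 2>/dev/null; then
        return 0
    fi
    printf '%s server1 mfsmaster\n' "$ip" >> "$hosts_file"
}

# Demo on a scratch file; pass /etc/hosts (as root) on a real node.
demo_hosts=$(mktemp)
ensure_mfsmaster_entry "$demo_hosts" 172.25.3.1
ensure_mfsmaster_entry "$demo_hosts" 172.25.3.1   # second call is a no-op
cat "$demo_hosts"
```

Running it twice leaves a single entry, so the same call can be repeated safely on server2, server3, server4 and the client.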

[root@server1 ~]# systemctl start moosefs-master.service

[root@server1 ~]# systemctl start moosefs-cgiserv.service

[root@server1 ~]# netstat -antlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:9419 0.0.0.0:* LISTEN 13647/mfsmaster

tcp 0 0 0.0.0.0:9420 0.0.0.0:* LISTEN 13647/mfsmaster

tcp 0 0 0.0.0.0:9421 0.0.0.0:* LISTEN 13647/mfsmaster

tcp 0 0 0.0.0.0:9425 0.0.0.0:* LISTEN 13674/python2
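The listing above shows mfsmaster on ports 9419 to 9421 and the CGI server on 9425. As a rough sketch, the check can be scripted by reading `netstat -antlp` style output on stdin; the function name `missing_master_ports` is an assumption made for this example.

```shell
# Sketch: report which of the expected MooseFS master ports have no LISTEN line.
missing_master_ports() {
    listing=$(cat)
    missing=""
    for port in 9419 9420 9421 9425; do
        if ! printf '%s\n' "$listing" | grep -Eq ":${port}[[:space:]].*LISTEN"; then
            missing="$missing $port"
        fi
    done
    # Print the missing ports without the leading space (empty line if none).
    printf '%s\n' "${missing# }"
}

# Usage on server1:
#   netstat -antlp | missing_master_ports
```

An empty line means all four ports are listening; otherwise the missing port numbers are printed.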

Web UI: http://172.25.3.1:9425/

## Deploy server2 and server3

[root@server2 ~]# vim /etc/hosts  ## mfsmaster is the master name the programs resolve by default

172.25.3.1 server1 mfsmaster

[root@server3 ~]# vim /etc/hosts

172.25.3.1 server1 mfsmaster

### Add a 10 GB virtual disk to both server2 and server3 so the data lives on a dedicated disk

### Create the partition

[root@server2 ~]# fdisk -l

Disk /dev/vdb: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

[root@server2 ~]# fdisk /dev/vdb

[root@server2 ~]# mkfs.xfs /dev/vdb1

[root@server2 ~]# blkid

/dev/vdb1: UUID="fc4da196-4a95-4c68-951e-1680e422fc3d" TYPE="xfs"

[root@server2 ~]# mkdir /mnt/chunk1

[root@server2 ~]# vim /etc/fstab

## Append an automatic mount entry at the end of the file

UUID="fc4da196-4a95-4c68-951e-1680e422fc3d" /mnt/chunk1 xfs defaults 0 0
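The fstab line above is copied by hand from the `blkid` output. A small sketch of deriving it instead is shown below; `fstab_entry_from_blkid` is a hypothetical helper, and it emits the UUID without quotes, which is the more common fstab form.

```shell
# Sketch: turn one line of blkid output into an /etc/fstab entry.
fstab_entry_from_blkid() {
    blkid_line=$1
    mount_point=$2
    # The leading space keeps PARTUUID="..." from being picked up by mistake.
    uuid=$(printf '%s\n' "$blkid_line" | sed -n 's/.* UUID="\([^"]*\)".*/\1/p')
    fstype=$(printf '%s\n' "$blkid_line" | sed -n 's/.* TYPE="\([^"]*\)".*/\1/p')
    [ -n "$uuid" ] && [ -n "$fstype" ] || return 1
    printf 'UUID=%s %s %s defaults 0 0\n' "$uuid" "$mount_point" "$fstype"
}

fstab_entry_from_blkid '/dev/vdb1: UUID="fc4da196-4a95-4c68-951e-1680e422fc3d" TYPE="xfs"' /mnt/chunk1
# prints: UUID=fc4da196-4a95-4c68-951e-1680e422fc3d /mnt/chunk1 xfs defaults 0 0
```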

[root@server2 ~]# mount -a

[root@server2 ~]# df

[root@server2 mfs]# chown mfs.mfs /mnt/chunk1/

[root@server2 ~]# systemctl start moosefs-chunkserver

[root@server2 ~]# netstat -antlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:9422 0.0.0.0:* LISTEN 13429/mfschunkserve

[root@server2 ~]# yum install -y lsof

[root@server2 ~]# cd /etc/mfs/

[root@server2 mfs]# ls

mfschunkserver.cfg mfschunkserver.cfg.sample mfshdd.cfg mfshdd.cfg.sample

[root@server2 mfs]# vim mfshdd.cfg

/mnt/chunk1

[root@server2 mfs]# systemctl restart moosefs-chunkserver.service

The web UI at http://172.25.3.1:9425/ now shows the mounted disk.

## Deploy server3

[root@server3 ~]# vim /etc/hosts

172.25.3.1 server1 mfsmaster

### Add a 10 GB virtual disk to both server2 and server3

[root@server3 ~]# fdisk -l

Disk /dev/vdb: 10.7 GB, 10737418240 bytes, 20971520 sectors

Units = sectors of 1 * 512 = 512 bytes

[root@server3 ~]# fdisk /dev/vdb  ## same steps as on server2

[root@server3 ~]# mkfs.xfs /dev/vdb1

[root@server3 ~]# blkid

/dev/vdb1: UUID="fc4da196-4a95-4c68-951e-1680e422fc3d" TYPE="xfs"

[root@server3 ~]# mkdir /mnt/chunk2

[root@server3 ~]# vim /etc/fstab

UUID="fc4da196-4a95-4c68-951e-1680e422fc3d" /mnt/chunk2 xfs defaults 0 0

[root@server3 ~]# vim /etc/fstab

[root@server3 ~]# mount -a

[root@server3 ~]# df

[root@server3 ~]# chown mfs.mfs /mnt/chunk2/

[root@server3 ~]# systemctl start moosefs-chunkserver

[root@server3 ~]# netstat -antlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:9422 0.0.0.0:* LISTEN 13429/mfschunkserve

[root@server3 ~]# yum install -y lsof

[root@server3 ~]# cd /etc/mfs/

[root@server3 mfs]# ls

mfschunkserver.cfg mfschunkserver.cfg.sample mfshdd.cfg mfshdd.cfg.sample

[root@server3 mfs]# vim mfshdd.cfg

/mnt/chunk2

[root@server3 mfs]# systemctl restart moosefs-chunkserver.service

The web UI at http://172.25.3.1:9425/ now shows the mounted disk.

Use the physical host as the client

[root@zhenji images]# yum install moosefs-client -y

[root@zhenji images]# mkdir /mnt/mfs

[root@zhenji images]# vim /etc/hosts

172.25.3.1 server1 mfsmaster

[root@zhenji images]# vim /etc/mfs/mfsmount.cfg

/mnt/mfs

[root@zhenji images]# mfsmount

[root@zhenji images]# df

mfsmaster:9421 20948992 590592 20358400 3% /mnt/mfs

[root@zhenji ~]# cd /mnt/mfs

[root@zhenji mfs]# ls

[root@zhenji mfs]# mkdir dir1

[root@zhenji mfs]# mkdir dir2

[root@zhenji mfs]# mfsgetgoal dir1

dir1: 2

[root@zhenji mfs]# mfsgetgoal dir2  ## two copies are kept, so data survives if one node goes down

dir2: 2

[root@zhenji mfs]# mfssetgoal -r 1 dir1  ## keep a single copy for dir1

[root@zhenji mfs]# cp /etc/passwd dir1/

[root@zhenji mfs]# cp /etc/fstab dir2

[root@zhenji mfs]# mfsfileinfo dir1/passwd  ## the client asks the master which chunk server holds passwd; the master returns the chunk server address (port 9422), and the client fetches passwd from that port

dir1/passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)

[root@zhenji mfs]# mfsfileinfo dir2/fstab

dir2/fstab:

chunk 0: 0000000000000002_00000001 / (id:2 ver:1)

copy 1: 172.25.3.3:9422 (status:VALID)

copy 2: 172.25.3.4:9422 (status:VALID)

[root@zhenji dir2]# dd if=/dev/zero of=bigfile bs=1M count=100

100+0 records in

100+0 records out

104857600 bytes (105 MB, 100 MiB) copied, 0.613293 s, 171 MB/s

[root@zhenji dir2]# mfsfileinfo bigfile  ## one chunk holds at most 64 MiB; a larger file is split across several chunks

bigfile:

chunk 0: 0000000000000003_00000001 / (id:3 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)

copy 2: 172.25.3.4:9422 (status:VALID)

chunk 1: 0000000000000004_00000001 / (id:4 ver:1)

copy 1: 172.25.3.3:9422 (status:VALID)

copy 2: 172.25.3.4:9422 (status:VALID)
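The two chunks reported for bigfile follow from the 64 MiB chunk limit. A quick sketch of the arithmetic, with `chunk_count` as a hypothetical helper name:

```shell
# Sketch: number of 64 MiB chunks needed for a file of the given size in bytes.
chunk_count() {
    size=$1
    chunk=$((64 * 1024 * 1024))
    # Integer ceiling division; an empty file needs no chunk.
    echo $(( (size + chunk - 1) / chunk ))
}

chunk_count 104857600   # the 100 MiB bigfile written with dd above; prints 2
```

100 MiB is 1.5625 times 64 MiB, so the file needs two chunks, matching chunk 0 and chunk 1 in the mfsfileinfo output.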

%%% Recovering deleted files from the trash

[root@zhenji ~]# cd /mnt/

[root@zhenji mnt]# mkdir mfsmeta/

[root@zhenji mnt]# cd mfsmeta/

[root@zhenji mfsmeta]# ls

[root@zhenji mfsmeta]# mfsmount -m /mnt/mfsmeta/

mfsmaster accepted connection with parameters: read-write,restricted_ip

[root@zhenji mfsmeta]# cd /mnt/mfsmeta/

[root@zhenji mfsmeta]# ls

sustained trash

[root@zhenji mfsmeta]# cd trash/

[root@zhenji trash]# ls

[root@zhenji trash]# ls|wc -l

4097

[root@zhenji trash]# find . -name '*passwd*'

./00B/0000000B|dir1|passwd

[root@zhenji trash]# cd 00B

[root@zhenji 00B]# ls

'0000000B|dir1|passwd' undel

[root@zhenji 00B]# mv '0000000B|dir1|passwd' undel

[root@zhenji 00B]# cd /mnt/mfs/dir1

[root@zhenji dir1]# ls

passwd

[root@zhenji dir1]# mfsfileinfo passwd

passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)
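The manual recovery above (find the entry under trash, move it into the neighbouring undel directory) can be sketched as a small function. This assumes the layout seen in this session, where each trash bucket such as 00B contains its own undel directory; `undelete_matching` is a hypothetical name.

```shell
# Sketch: restore trashed files whose name contains a pattern by moving each
# one into the "undel" directory next to it.
# meta_root is the directory where the meta filesystem is mounted (mfsmount -m).
undelete_matching() {
    meta_root=$1
    pattern=$2
    find "$meta_root/trash" -type f -name "*${pattern}*" ! -path '*/undel/*' |
    while IFS= read -r entry; do
        mv -- "$entry" "$(dirname -- "$entry")/undel/"
    done
}

# Usage on the client, after mfsmount -m /mnt/mfsmeta/:
#   undelete_matching /mnt/mfsmeta passwd
```

The pattern should be specific enough to match only the files that are meant to come back, since every match is restored.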

2. Storage Classes

1. Assign different labels

[root@server2 ~]# cd /etc/mfs/

[root@server2 mfs]# vim mfschunkserver.cfg

LABELS = A  ## set the label

[root@server2 mfs]# systemctl reload moosefs-chunkserver.service

[root@server3 ~]# cd /etc/mfs/

[root@server3 mfs]# vim mfschunkserver.cfg

LABELS = B  ## set the label

[root@server3 mfs]# systemctl reload moosefs-chunkserver.service

### Deploy the new server4

[root@server4 ~]# yum install moosefs-chunkserver -y

[root@server4 ~]# mkdir /mnt/chunk3

[root@server4 ~]# vim /etc/mfs/mfshdd.cfg

/mnt/chunk3

[root@server4 ~]# vim /etc/hosts

172.25.3.1 server1 mfsmaster

[root@server4 ~]# chown mfs.mfs /mnt/chunk3/

[root@server4 ~]# systemctl start moosefs-chunkserver

[root@server4 ~]# cd /etc/mfs/

[root@server4 mfs]# vim mfschunkserver.cfg

LABELS = A  ## set the label

[root@server4 mfs]# systemctl reload moosefs-chunkserver.service

The web UI at http://172.25.3.1:9425/ now shows the labels: server2 is A, server3 is B, server4 is A.

2. Create storage classes in MooseFS

[root@zhenji mfs]# mfsscadmin create 2A class_2A

storage class make class_2A: ok

[root@zhenji mfs]# mfsfileinfo dir2/fstab

dir2/fstab:

chunk 0: 0000000000000002_00000001 / (id:2 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)

copy 2: 172.25.3.3:9422 (status:VALID)

[root@zhenji mfs]# ls

dir1 dir2

[root@zhenji mfs]# cd dir2

[root@zhenji dir2]# mfssetsclass class_2A fstab

fstab: storage class: 'class_2A'

[root@zhenji dir2]# mfsfileinfo fstab  ### server2 is A, server3 is B, server4 is A, so the two A copies land on server2 and server4

fstab:

chunk 0: 0000000000000002_00000001 / (id:2 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)

copy 2: 172.25.3.4:9422 (status:VALID)

[root@zhenji dir2]# mfsscadmin create A,B classAB

storage class make classAB: ok

[root@zhenji dir2]# mfssetsclass classAB bigfile

[root@zhenji dir2]# mfsfileinfo bigfile  ### server2 is A, server3 is B, server4 is A, so the A,B copies land on server3+server4 or server2+server3

bigfile:

chunk 0: 0000000000000003_00000001 / (id:3 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)

copy 2: 172.25.3.3:9422 (status:VALID)

chunk 1: 0000000000000004_00000001 / (id:4 ver:1)

copy 1: 172.25.3.3:9422 (status:VALID)

copy 2: 172.25.3.4:9422 (status:VALID)

[root@server2 mfs]# vim mfschunkserver.cfg

LABELS = A S  ## set the labels

[root@server2 mfs]# systemctl reload moosefs-chunkserver.service

[root@server3 mfs]# vim mfschunkserver.cfg

LABELS = B S  ## set the labels

[root@server3 mfs]# systemctl reload moosefs-chunkserver.service

[root@server4 mfs]# vim mfschunkserver.cfg

LABELS = A H  ## set the labels; S = SSD (solid state), H = HDD (mechanical)

[root@server4 mfs]# systemctl reload moosefs-chunkserver.service

[root@zhenji dir2]# mfsfileinfo fstab

fstab:

chunk 0: 0000000000000002_00000001 / (id:2 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)

copy 2: 172.25.3.4:9422 (status:VALID)

[root@zhenji dir2]# cd ..

[root@zhenji mfs]# mfsfileinfo dir1/passwd

dir1/passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)

[root@zhenji mfs]# mfsscadmin create AS,BS class_ASBS

storage class make class_ASBS: ok

[root@zhenji mfs]# mfssetsclass class_ASBS dir2/fstab

dir2/fstab: storage class: 'class_ASBS'

[root@zhenji mfs]# mfsfileinfo dir2/fstab  ### server2 is A S, server3 is B S, server4 is A H, so the AS and BS copies land on server2 and server3

dir2/fstab:

chunk 0: 0000000000000002_00000001 / (id:2 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)

copy 2: 172.25.3.3:9422 (status:VALID)
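To reason about where a class such as AS,BS can place its copies, it helps to list which chunk servers carry every label of one term. The sketch below is plain shell and does not talk to MooseFS; the label table simply mirrors the LABELS values set in this walkthrough, and `servers_with_all_labels` is a hypothetical helper.

```shell
# Sketch: list chunk servers that carry every label in the given set.
label_table='server2 A S
server3 B S
server4 A H'

servers_with_all_labels() {
    printf '%s\n' "$label_table" | while read -r host labels; do
        ok=1
        for want in "$@"; do
            # Pad with spaces so each label is matched as a whole word.
            case " $labels " in
                *" $want "*) ;;
                *) ok=0 ;;
            esac
        done
        [ "$ok" -eq 1 ] && printf '%s\n' "$host"
    done
    return 0
}

servers_with_all_labels A S   # candidates for an "AS" copy: server2
servers_with_all_labels B S   # candidates for a "BS" copy: server3
```

This agrees with the mfsfileinfo output above, where the two copies of dir2/fstab sit on 172.25.3.2 and 172.25.3.3.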

[root@zhenji mfs]# mfsscadmin create BS,2A[S+H] class4

storage class make class4: ok

[root@zhenji mfs]# mfssetsclass class4 dir2/fstab

dir2/fstab: storage class: 'class4'

[root@zhenji mfs]# mfsfileinfo dir2/fstab

dir2/fstab:

chunk 0: 0000000000000002_00000001 / (id:2 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)

copy 2: 172.25.3.3:9422 (status:VALID)

copy 3: 172.25.3.4:9422 (status:VALID)

[root@server3 mfs]# vim mfschunkserver.cfg

LABELS = A B S H  ## set the labels

[root@server3 mfs]# systemctl reload moosefs-chunkserver.service

[root@zhenji mfs]# mfsscadmin create -C 2AS -K AS,BS -A AH,BH -d 30 class5

storage class make class5: ok

[root@zhenji mfs]# mfssetsclass class5 dir2/fstab

dir2/fstab: storage class: 'class5'

[root@zhenji mfs]# mfsfileinfo dir2/fstab

dir2/fstab:

chunk 0: 0000000000000002_00000001 / (id:2 ver:1)

copy 1: 172.25.3.2:9422 (status:VALID)

copy 2: 172.25.3.3:9422 (status:VALID)

3. High availability

## Use server1 and server4 as the pair: server1 exports the data, server4 receives it

[root@server4 ~]# yum install moosefs-master moosefs-cgi moosefs-cgiserv moosefs-cli -y

[root@server3 ~]# systemctl stop moosefs-chunkserver.service

[root@server3 ~]# vim /etc/fstab

#UUID="8460cd4a-fc34-426a-9336-9c304ce13c2c" /mnt/chunk2 xfs defaults 0 0

[root@server3 ~]# umount /mnt/chunk2/

[root@server3 ~]# fdisk /dev/vdb

Command (m for help): d

Command (m for help): p

Device Boot Start End Blocks Id System

Command (m for help): w

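[root@server3 ~]# mount /dev/vdb1 /mnt/  ## the partition can no longer be mounted

mount: special device /dev/vdb1 does not exist

## To recover at this point, recreate the partition table with the same boundaries and do not format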

[root@server3 ~]# fdisk /dev/vdb

Command (m for help): n

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-20971519, default 2048):

Last sector, +sectors or +size{K,M,G} (2048-20971519, default 20971519):

Command (m for help): p

Device Boot Start End Blocks Id System

/dev/vdb1 2048 20971519 10484736 83 Linux

Command (m for help): w

[root@server3 ~]# mount /dev/vdb1 /mnt/

[root@server3 ~]# df

/dev/vdb1 10474496 33168 10441328 1% /mnt

[root@server3 ~]# cd /mnt/

[root@server3 mnt]# ls  ## the data is all still there

[root@server3 ~]# umount /mnt/

[root@server3 ~]# dd if=/dev/zero of=/dev/vdb bs=512 count=1  ## zero the first sector (MBR and partition table); the rest of the disk is not touched
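A dd with bs=512 count=1 only overwrites the first 512-byte sector. The sketch below shows this on a scratch image file instead of a real disk; the 4 KiB size and the variable names are arbitrary choices for the demo.

```shell
# Sketch: show that "dd bs=512 count=1" clears only the first sector.
# conv=notrunc keeps the rest of a regular file in place (a block device is
# never truncated, so the flag is only needed for this file-based demo).
img=$(mktemp)

# Fill a 4 KiB image with non-zero bytes.
dd if=/dev/zero bs=4096 count=1 2>/dev/null | tr '\0' 'x' > "$img"

# Same command shape as above, pointed at the image.
dd if=/dev/zero of="$img" bs=512 count=1 conv=notrunc 2>/dev/null

# Count the bytes that are still non-zero: 4096 - 512 = 3584.
remaining=$(tr -d '\0' < "$img" | wc -c)
echo "non-zero bytes left: $((remaining))"
```

On a real disk this removes the partition table, which is why the partition device disappears while the filesystem data further in is still present.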

[root@server3 ~]# umount /mnt/chunk2/

1. target

[root@server3 ~]# yum install targetcli -y

[root@server3 ~]# systemctl start target.service

[root@server1 ~]# yum install iscsi-* -y

[root@server1 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:50f0e48b3ee7

[root@server3 ~]# targetcli

/> ls

/> cd /backstores/block

/backstores/block> create my_disk /dev/vdb

Created block storage object my_disk using /dev/vdb.

/backstores/block> cd /iscsi

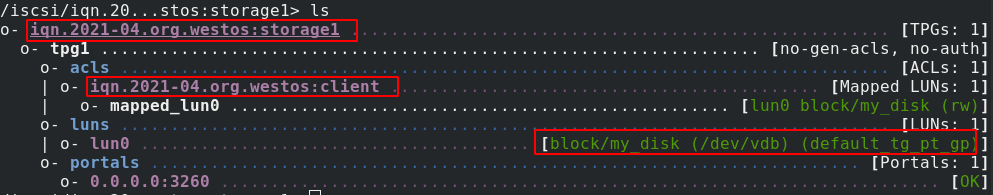

/iscsi> create iqn.2021-04.org.westos:storage1

/iscsi> ls

/iscsi> cd iqn.2021-04.org.westos:storage1

/iscsi/iqn.20...stos:storage1> cd tpg1/acls

/iscsi/iqn.20...ge1/tpg1/acls> create iqn.2021-04.org.westos:client

/iscsi/iqn.20...ge1/tpg1/acls> cd ..

/iscsi/iqn.20...storage1/tpg1> cd luns

/iscsi/iqn.20...ge1/tpg1/luns> create /backstores/block/my_disk

Created LUN 0.

Created LUN 0->0 mapping in node ACL iqn.2021-04.org.westos:client

/iscsi/iqn.20...ge1/tpg1/luns> ls

o- luns ................................................................................. [LUNs: 1]

o- lun0 ........................................... [block/my_disk (/dev/vdb) (default_tg_pt_gp)]

/iscsi/iqn.20...ge1/tpg1/luns> cd ..

/iscsi/iqn.20...storage1/tpg1> cd ..

/iscsi/iqn.20...stos:storage1> ls

[root@server1 ~]# vim /etc/iscsi/initiatorname.iscsi

[root@server1 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2021-04.org.westos:client

[root@server1 ~]# iscsiadm -m discovery -t st -p 172.25.3.3

[root@server1 ~]# iscsiadm -m node -l

[root@server1 ~]# fdisk -l

Disk /dev/sda: 10.7 GB, 10737418240 bytes, 20971520 sectors

[root@server4 ~]# yum install iscsi-* -y

[root@server4 ~]# vim /etc/iscsi/initiatorname.iscsi

[root@server4 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2021-04.org.westos:client

[root@server4 ~]# iscsiadm -m discovery -t st -p 172.25.3.3

[root@server4 ~]# iscsiadm -m node -l

[root@server4 ~]# fdisk -l

Disk /dev/sda: 10.7 GB, 10737418240 bytes, 20971520 sectors

### Create the partition on server1; server4 sees it after re-reading the partition table

[root@server1 ~]# fdisk /dev/sda

Command (m for help): n

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-20971519, default 2048):

Last sector, +sectors or +size{K,M,G} (2048-20971519, default 20971519):

Command (m for help): p

Device Boot Start End Blocks Id System

/dev/sda1 2048 20971519 10484736 83 Linux

Command (m for help): w

[root@server4 ~]# ll /dev/sda1

ls: cannot access /dev/sda1: No such file or directory

[root@server4 ~]# partprobe

[root@server4 ~]# ll /dev/sda1

brw-rw---- 1 root disk 8, 1 Apr 18 16:40 /dev/sda1

[root@server1 ~]# mkfs.xfs /dev/sda1

mkfs.xfs: /dev/sda1 appears to contain an existing filesystem (xfs).

mkfs.xfs: Use the -f option to force overwrite.

[root@server1 ~]# mkfs.xfs /dev/sda1 -f

[root@server1 ~]# mount /dev/sda1 /mnt/

[root@server1 ~]# df

/dev/sda1 10474496 32992 10441504 1% /mnt

[root@server1 ~]# cd /mnt/

[root@server1 mnt]# ls

[root@server1 mnt]# cp /etc/passwd .

[root@server1 mnt]# ls

passwd

[root@server1 mnt]# cd

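[root@server1 ~]# umount /mnt/  ## XFS is a local filesystem, not a network filesystem, so it must be unmounted on one node before it is mounted on the other; never mount it on both nodes at once while writing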

[root@server4 ~]# mount /dev/sda1 /mnt/

[root@server4 ~]# cd /mnt/

[root@server4 mnt]# ls  ## the file written on server1 is visible

passwd

[root@server4 ~]# umount /mnt/

[root@server1 ~]# mount /dev/sda1 /mnt/

[root@server1 ~]# cd /mnt/

[root@server1 mnt]# rm -fr passwd

2. MFS environment on server1 and server4

[root@server1 ~]# cd /var/lib/mfs/

[root@server1 mfs]# ls

changelog.0.mfs changelog.4.mfs metadata.crc metadata.mfs.back.1 stats.mfs

changelog.1.mfs changelog.5.mfs metadata.mfs.back metadata.mfs.empty

[root@server1 mfs]# cp -p * /mnt/

[root@server1 mfs]# df

/dev/sda1 10474496 38092 10436404 1% /mnt

[root@server1 mfs]# ll -d /mnt/

drwxr-xr-x 2 root root 213 Apr 20 15:47 /mnt/

[root@server1 mfs]# chown mfs.mfs /mnt/

[root@server1 mfs]# umount /mnt/

[root@server1 mfs]# ll /mnt/

total 0

[root@server1 mfs]# ll -d /mnt/

drwxr-xr-x. 2 root root 6 Dec 15 2017 /mnt/

[root@server1 mfs]# systemctl stop moosefs-master.service  ## the files in /var/lib/mfs/ differ between the running and stopped states: metadata.mfs.back while running, metadata.mfs after a clean stop

[root@server1 mfs]# cd /var/lib/mfs/

[root@server1 mfs]# ls

changelog.0.mfs changelog.4.mfs metadata.crc metadata.mfs.back.1 stats.mfs

changelog.1.mfs changelog.5.mfs metadata.mfs metadata.mfs.empty

[root@server1 mfs]# umount /mnt/

[root@server1 mfs]# mount /dev/sda1 /var/lib/mfs

[root@server1 mfs]# ll -d /var/lib/mfs

drwxr-xr-x 2 mfs mfs 213 Apr 20 15:47 /var/lib/mfs

[root@server1 mfs]# systemctl start moosefs-master

Job for moosefs-master.service failed because the control process exited with error code. See "systemctl status moosefs-master.service" and "journalctl -xe" for details.

[root@server1 mfs]# cd /var/lib/mfs/

[root@server1 mfs]# ls

[root@server1 mfs]# mv metadata.mfs.back metadata.mfs  ## rename metadata.mfs.back to the name the master expects after a clean stop

[root@server1 mfs]# systemctl start moosefs-master  ## now it starts

[root@server1 mfs]# systemctl stop moosefs-master  ## be sure to stop it again

[root@server1 ~]# umount /var/lib/mfs  ## unmount on server1 so the disk can be mounted on server4
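The rename step above is easy to forget when the metadata was copied while the master was running. A minimal sketch of a pre-start check follows; `prepare_metadata` is a hypothetical helper, not part of MooseFS.

```shell
# Sketch: make sure a metadata directory contains metadata.mfs before the
# master is started from it. If only metadata.mfs.back exists, rename it,
# as done by hand above.
prepare_metadata() {
    dir=$1
    if [ -f "$dir/metadata.mfs" ]; then
        echo "metadata.mfs already present"
    elif [ -f "$dir/metadata.mfs.back" ]; then
        mv -- "$dir/metadata.mfs.back" "$dir/metadata.mfs"
        echo "renamed metadata.mfs.back to metadata.mfs"
    else
        echo "no metadata file found in $dir" >&2
        return 1
    fi
}

# Usage on the node that currently has the shared disk mounted:
#   prepare_metadata /var/lib/mfs && systemctl start moosefs-master
```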

3. Pacemaker cluster

1) Passwordless SSH

[root@server1 ~]# ssh-keygen

[root@server1 ~]# ssh-copy-id server1

[root@server1 ~]# ssh-copy-id server2

[root@server1 ~]# ssh-copy-id server3

[root@server1 ~]# ssh-copy-id server4

2) Install the cluster software

[root@server1 ~]# systemctl start moosefs-master

[root@zhenji mfs]# umount /mnt/mfsmeta

[root@zhenji mfs]# cd

[root@zhenji ~]# umount /mnt/mfs

[root@server1 ~]# systemctl stop moosefs-master  ## be sure to stop it

[root@server1 ~]# vim /etc/yum.repos.d/dvd.repo

[root@server1 ~]# cat /etc/yum.repos.d/dvd.repo

[dvd]

name=rhel7.6

baseurl=http://10.4.17.74/rhel7.6

gpgcheck=0

[HighAvailability]

name=HighAvailability

baseurl=http://10.4.17.74/rhel7.6/addons/HighAvailability

gpgcheck=0

[root@server1 ~]# yum install -y pacemaker pcs psmisc policycoreutils-python

[root@server1 ~]# scp /etc/yum.repos.d/dvd.repo server4:/etc/yum.repos.d/dvd.repo

[root@server1 ~]# ssh server4 yum install -y pacemaker pcs psmisc policycoreutils-python

3) Authentication

[root@server1 ~]# systemctl enable --now pcsd.service

[root@server1 ~]# ssh server4 systemctl enable --now pcsd.service

[root@server1 ~]# echo westos | passwd --stdin hacluster

[root@server1 ~]# ssh server4 'echo westos | passwd --stdin hacluster'

[root@server1 ~]# grep hacluster /etc/shadow  ## the same password is set on both nodes

hacluster:$6$UIU6Ztum$ogHw5sX9.Hbzr1cm/f5Di8QQv8KwqoRwzrD68v2aSs2dz.QsZ36VOLFT8c7NDqhIuTnk7lUQ0PjIMs.kwOI31.:18737::::::

[root@server1 ~]# pcs cluster auth server1 server4  ## authenticate the nodes

[root@server1 ~]# pcs cluster setup --name mycluster server1 server4  ## create the cluster

[root@server1 ~]# pcs cluster start --all

[root@server1 ~]# pcs status

Cluster name: mycluster

WARNINGS:

No stonith devices and stonith-enabled is not false

[root@server1 ~]# crm_verify -LV  ## show configuration errors

error: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

error: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

error: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

Errors found during check: config not valid

[root@server1 ~]# pcs property set stonith-enabled=false

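[root@server1 ~]# pcs status  ## the hot-standby cluster is now set up

[root@server1 ~]# pcs resource describe ocf:heartbeat:IPaddr2  ## one advantage of Pacemaker: it runs periodic health checks (every 10s by default for this agent)

[root@server1 ~]# pcs resource create vip ocf:heartbeat:IPaddr2 ip=10.4.17.100 cidr_netmask=32 op monitor interval=30s

[root@server1 ~]# ip addr  ## 10.4.17.100 should be listed

[root@server1 ~]# pcs node standby

[root@server1 ~]# pcs status  ## once server1 is in standby, server4 takes over; when server1 comes back the resource does not fail back, which avoids the cost of another switch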

[root@server1 ~]# pcs cluster enable --all

[root@server1 ~]# pcs resource agents ocf:heartbeat

[root@server1 ~]# pcs resource describe ocf:heartbeat:Filesystem

[root@server1 ~]# pcs resource create mfsdata ocf:heartbeat:Filesystem device=/dev/sda1 directory=/var/lib/mfs fstype=xfs op monitor interval=60s  ## resource agent; device; mount point; filesystem type; monitor interval

[root@server1 ~]# pcs resource create mfsmaster systemd:moosefs-master op monitor interval=60s

[root@server1 ~]# pcs resource group add mfsgroup vip mfsdata mfsmaster  ### a group keeps the resources on one node and starts them in the listed order

[root@server1 ~]# pcs status  ### the resources show Started on server1

[root@server3 ~]# vim /etc/mfs/mfshdd.cfg

[root@server3 ~]# systemctl restart moosefs-chunkserver

[root@server4 ~]# vim /usr/lib/systemd/system/moosefs-master.service

## change the ExecStart argument from start to -a so the master restores its metadata automatically after an unclean stop

ExecStart=/usr/sbin/mfsmaster -a

[root@server4 ~]# systemctl daemon-reload

[root@server1 ~]# vim /usr/lib/systemd/system/moosefs-master.service

ExecStart=/usr/sbin/mfsmaster -a

[root@server1 ~]# systemctl daemon-reload

[root@server1 ~]# systemctl daemon-reload

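### With this change, if the active node server1 suffers a kernel crash (echo c > /proc/sysrq-trigger), server4 takes over normally after running pcs resource cleanup mfsmaster

%% After a kernel crash server1 can only be recovered by cutting its power. server4 takes over, but the risk is that server1 comes back up on its own. The next step sets up fencing, which works like a power switch and can power off server1.

4. fence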

[root@zhenji ~]# yum install fence-virtd fence-virtd-libvirt fence-virtd-multicast -y

[root@zhenji ~]# rpm -qa|grep fence

fence-virtd-multicast-0.3.2-13.el7.x86_64

libxshmfence-1.3-2.el8.x86_64

fence-virtd-0.3.2-13.el7.x86_64

fence-virtd-libvirt-0.3.2-13.el7.x86_64

[root@zhenji ~]# fence_virtd -c  ## resulting settings are shown below; only the interface (br0) has to be typed in, the rest are defaults

port = "1229";

family = "ipv4";

interface = "br0";

address = "225.0.0.12";

key_file = "/etc/cluster/fence_xvm.key";

[root@zhenji ~]# cd /etc/cluster

bash: cd: /etc/cluster: No such file or directory

[root@zhenji ~]# mkdir /etc/cluster

[root@zhenji ~]# cd /etc/cluster

[root@zhenji cluster]# touch fence_xvm.key  ### the key that clients use to reach the fence daemon; it must be in this directory and must exist before fence_virtd is started

[root@zhenji cluster]# ll

total 0

-rw-r--r-- 1 root root 0 Apr 20 21:49 fence_xvm.key

[root@zhenji cluster]# dd if=/dev/urandom of=fence_xvm.key bs=128 count=1

[root@zhenji cluster]# systemctl start fence_virtd.service

[root@zhenji cluster]# netstat -anulp|grep :1229

udp 0 0 0.0.0.0:1229 0.0.0.0:* 34260/fence_virtd
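The key steps above (create the directory, write 128 random bytes) can be wrapped in one function. This is a sketch only; `make_fence_key` is a hypothetical name, and restricting the file to its owner is an extra precaution that the original session does not take.

```shell
# Sketch: create a 128-byte random key file, readable by its owner only.
make_fence_key() {
    key_file=$1
    mkdir -p "$(dirname -- "$key_file")"
    # umask 077 makes a newly created file owner-only from the start.
    ( umask 077 && dd if=/dev/urandom of="$key_file" bs=128 count=1 2>/dev/null )
    chmod 600 "$key_file"
    # Succeed only if the key has the expected length.
    [ "$(( $(wc -c < "$key_file") ))" -eq 128 ]
}

# Usage on the physical host, before starting fence_virtd:
#   make_fence_key /etc/cluster/fence_xvm.key
```

The same file then has to be copied unchanged to every cluster node, since both sides must hold an identical key.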

[root@server1 ~]# yum install fence-virt -y

[root@server4 ~]# yum install fence-virt -y

[root@server4 ~]# stonith_admin -I

fence_xvm

fence_virt

2 devices found

[root@server1 ~]# mkdir /etc/cluster

[root@server4 ~]# mkdir /etc/cluster

[root@zhenji cluster]# scp fence_xvm.key server1:/etc/cluster

[root@zhenji cluster]# scp fence_xvm.key server4:/etc/cluster

[root@zhenji cluster]# virsh list

Id Name State

----------------------------------------------------

7 demo1 running

8 demo2 running

9 demo3 running

10 demo4 running

## Create the fence device and map cluster node names to VM names

[root@server1 cluster]# pcs stonith create vmfence fence_xvm pcmk_host_map="server1:demo1;server4:demo4" op monitor interval=60s
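The pcmk_host_map value is a semicolon-separated list of node:vm pairs. With more nodes it is easy to mistype, so here is a small sketch that assembles it from arguments; `build_host_map` is a hypothetical helper.

```shell
# Sketch: join "node:vm" pairs with ';' into a pcmk_host_map value.
build_host_map() {
    map=""
    for pair in "$@"; do
        # Add a ';' separator only when map already holds an entry.
        map="${map:+$map;}$pair"
    done
    printf '%s\n' "$map"
}

build_host_map server1:demo1 server4:demo4
# prints: server1:demo1;server4:demo4
```

The node names are the cluster host names and the VM names are those listed by virsh list on the physical host.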

[root@server1 cluster]# pcs property set stonith-enabled=true

[root@server1 cluster]# pcs status

Online: [ server1 server4 ]

Resource Group: mfsgroup

vip (ocf::heartbeat:IPaddr2): Started server4

mfsdata (ocf::heartbeat:Filesystem): Started server4

mfsmaster (systemd:moosefs-master): Started server4

vmfence (stonith:fence_xvm): Started server1
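When testing failover it is handy to ask which node currently runs a given resource. The sketch below parses status lines of the shape shown above from stdin; `resource_node` is a hypothetical helper, and it assumes the "name (agent): Started node" layout of this pcs version.

```shell
# Sketch: print the node on which a named resource is reported as Started.
resource_node() {
    name=$1
    awk -v res="$name" '
        $1 == res {
            for (i = 2; i < NF; i++)
                if ($i == "Started") { print $(i + 1); exit }
        }'
}

# Usage on a cluster node:
#   pcs status | resource_node mfsmaster
```

Fed the status output above, it reports server4 for mfsmaster and server1 for vmfence.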

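### Now if the active node server4 suffers a kernel crash (echo c > /proc/sysrq-trigger), server1 takes over normally, the crashed server4 is rebooted automatically by the fence device, and vmfence then shows Started server4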
