Inexplicably ran into… a pod container failing to start: Back-off restarting failed container

A ZooKeeper (zk) cluster is deployed as a StatefulSet with NFS-backed PVs, and its pod stays in Pending:

```
[root@hadoop03 k8s]# kubectl get pods
NAME   READY   STATUS    RESTARTS   AGE
zk-0   0/1     Pending   0          16s
[root@hadoop03 k8s]# kubectl describe pod zk-0
Name:           zk-0
Namespace:      default
Priority:       0
Node:           <none>
Labels:         app=zk
                controller-revision-hash=zk-84975cc754
                statefulset.kubernetes.io/pod-name=zk-0
Annotations:    <none>
Status:         Pending
IP:
IPs:            <none>
Controlled By:  StatefulSet/zk
Containers:
  kubernetes-zookeeper:
    Image:       kubebiz/zookeeper
    Ports:       2181/TCP, 2888/TCP, 3888/TCP
    Host Ports:  0/TCP, 0/TCP, 0/TCP
    Command:
      sh
      -c
      start-zookeeper --servers=3 --data_dir=/var/lib/zookeeper/data --data_log_dir=/var/lib/zookeeper/data/log --conf_dir=/opt/zookeeper/conf --client_port=2181 --election_port=3888 --server_port=2888 --tick_time=2000 --init_limit=10 --sync_limit=5 --heap=512M --max_client_cnxns=60 --snap_retain_count=3 --purge_interval=12 --max_session_timeout=40000 --min_session_timeout=4000 --log_level=INFO
    Requests:
      cpu:        100m
      memory:     512Mi
    Liveness:     exec [sh -c zookeeper-ready 2181] delay=10s timeout=5s period=10s #success=1 #failure=3
    Readiness:    exec [sh -c zookeeper-ready 2181] delay=10s timeout=5s period=10s #success=1 #failure=3
    Environment:  <none>
    Mounts:
      /var/lib/zookeeper from datadir (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-8xqxv (ro)
Conditions:
  Type           Status
  PodScheduled   False
Volumes:
  datadir:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  datadir-zk-0
    ReadOnly:   false
  kube-api-access-8xqxv:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   Burstable
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason            Age                From               Message
  ----     ------            ----               ----               -------
  Warning  FailedScheduling  24s (x2 over 25s)  default-scheduler  0/1 nodes are available: 1 pod has unbound immediate PersistentVolumeClaims.
```
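The scheduler event means the pod cannot be placed until its claim datadir-zk-0 is bound to a PV. To list the claims that are stuck, you can filter the output of `kubectl get pvc`. The helper name `pending_pvcs` below is hypothetical. The sketch assumes the default column layout, where STATUS is the second column (so it does not handle `-A`, which adds a NAMESPACE column first):

```shell
# pending_pvcs: read `kubectl get pvc` output (header line included) on stdin
# and print the NAME of each claim whose STATUS column is "Pending".
# Hypothetical helper; assumes STATUS is the second whitespace-separated field.
# Empty VOLUME/CAPACITY columns of a Pending claim do not shift $2.
pending_pvcs() {
  awk 'NR > 1 && $2 == "Pending" { print $1 }'
}

# Usage on a live cluster (not run here):
#   kubectl get pvc | pending_pvcs
#   kubectl get pvc | pending_pvcs | xargs -r -n1 kubectl describe pvc
```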

The StatefulSet manifest, zookeeper.yaml:

```
[root@hadoop03 k8s]# cat zookeeper.yaml
apiVersion: v1
kind: Service
metadata:
  name: zk-hs
  labels:
    app: zk
spec:
  ports:
  - port: 2888
    name: server
  - port: 3888
    name: leader-election
  clusterIP: None
  selector:
    app: zk
---
apiVersion: v1
kind: Service
metadata:
  name: zk-cs
  labels:
    app: zk
spec:
  ports:
  - port: 2181
    name: client
  selector:
    app: zk
---
apiVersion: policy/v1
kind: PodDisruptionBudget
metadata:
  name: zk-pdb
spec:
  selector:
    matchLabels:
      app: zk
  maxUnavailable: 1
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: zk
spec:
  selector:
    matchLabels:
      app: zk
  serviceName: zk-hs
  replicas: 3
  updateStrategy:
    type: RollingUpdate
  podManagementPolicy: OrderedReady
  template:
    metadata:
      labels:
        app: zk
    spec:
      #affinity:
      #  podAffinity:
      #    requiredDuringSchedulingIgnoredDuringExecution:
      #      - labelSelector:
      #          matchExpressions:
      #            - key: "app"
      #              operator: In
      #              values:
      #              - zk
      #        topologyKey: "kubernetes.io/hostname"
      containers:
      - name: kubernetes-zookeeper
        imagePullPolicy: Always
        image: "kubebiz/zookeeper"
        resources:
          requests:
            memory: "512Mi"
            cpu: "0.1"
        ports:
        - containerPort: 2181
          name: client
        - containerPort: 2888
          name: server
        - containerPort: 3888
          name: leader-election
        command:
        - sh
        - -c
        - "start-zookeeper \
          --servers=3 \
          --data_dir=/var/lib/zookeeper/data \
          --data_log_dir=/var/lib/zookeeper/data/log \
          --conf_dir=/opt/zookeeper/conf \
          --client_port=2181 \
          --election_port=3888 \
          --server_port=2888 \
          --tick_time=2000 \
          --init_limit=10 \
          --sync_limit=5 \
          --heap=512M \
          --max_client_cnxns=60 \
          --snap_retain_count=3 \
          --purge_interval=12 \
          --max_session_timeout=40000 \
          --min_session_timeout=4000 \
          --log_level=INFO"
        readinessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        livenessProbe:
          exec:
            command:
            - sh
            - -c
            - "zookeeper-ready 2181"
          initialDelaySeconds: 10
          timeoutSeconds: 5
        volumeMounts:
        - name: datadir
          mountPath: /var/lib/zookeeper
      securityContext:
        runAsUser: 1000
        fsGroup: 1000
  volumeClaimTemplates:
  - metadata:
      name: datadir
      annotations:
        volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"   ### must match the StorageClass metadata.name; the PVC is created in the pod's namespace
    spec:
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 1Gi
```

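The volumeClaimTemplates rely on dynamic provisioning through the managed-nfs-storage StorageClass. An unbound PVC therefore points at that StorageClass and its NFS provisioner. Here are the StorageClass, the NFS provisioner, and a test PVC: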

```
[root@hadoop03 NFS]# cat nfs-class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs   ### must equal PROVISIONER_NAME in the provisioner Deployment
parameters:
  archiveOnDelete: "false"
```


######### NFS provisioner #########

```
[root@hadoop03 NFS]# cat nfs-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: nfs-client
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs   ### must equal "provisioner" in the StorageClass
            - name: NFS_SERVER
              value: 192.168.153.103
            - name: NFS_PATH
              value: /nfs/data
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.153.103
            path: /nfs/data
[root@hadoop03 NFS]#
```
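The `###` markers flag the single value that ties the two manifests together. The StorageClass `provisioner` field must equal the provisioner Deployment's `PROVISIONER_NAME` environment variable. If they differ, no provisioner picks up the PVCs and they stay Pending.

Below is a rough awk sketch that compares the two values. The helper names are hypothetical. It assumes the plain layout used above: a top-level `provisioner:` key, and `value:` on the line right after `name: PROVISIONER_NAME`. It is no substitute for a real YAML parser.

```shell
# sc_provisioner FILE: print the value of the top-level "provisioner:" key
# (double quotes stripped, trailing "### ..." comment ignored).
sc_provisioner() {
  awk '/^provisioner:/ { gsub(/"/, "", $2); print $2; exit }' "$1"
}

# deploy_provisioner_name FILE: print the "value:" that follows the
# "name: PROVISIONER_NAME" line (assumes it is on the next line or soon after).
deploy_provisioner_name() {
  awk 'found && /value:/ { gsub(/"/, "", $2); print $2; exit }
       /name: PROVISIONER_NAME/ { found = 1 }' "$1"
}

# check_provisioner_match SC_FILE DEPLOYMENT_FILE: return 0 when both agree.
check_provisioner_match() {
  local sc dep
  sc=$(sc_provisioner "$1")
  dep=$(deploy_provisioner_name "$2")
  if [ -n "$sc" ] && [ "$sc" = "$dep" ]; then
    echo "OK: provisioner '$sc' matches PROVISIONER_NAME"
  else
    echo "MISMATCH: StorageClass provisioner='$sc', PROVISIONER_NAME='$dep'" >&2
    return 1
  fi
}

# Usage (not run here):
#   check_provisioner_match nfs-class.yaml nfs-deployment.yaml
```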

######### pvc #########

```
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-sc-pvc
  annotations:
    volume.beta.kubernetes.io/storage-class: "managed-nfs-storage"   ### StorageClass metadata.name
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
```

##################

```
[root@hadoop03 k8s]# kubectl get pod -n nfs-client
NAME                                      READY   STATUS             RESTARTS        AGE
nfs-client-provisioner-764f44f754-gww47   0/1     CrashLoopBackOff   7 (2m22s ago)   17h
test-pod                                  0/1     Completed          0               17h
```

```
[root@hadoop03 k8s]# kubectl describe pod nfs-client-provisioner-764f44f754-htndd -n nfs-client
Name:         nfs-client-provisioner-764f44f754-htndd
Namespace:    nfs-client
Priority:     0
Node:         hadoop03/192.168.153.103
Start Time:   Fri, 19 Nov 2021 09:56:49 +0800
Labels:       app=nfs-client-provisioner
              pod-template-hash=764f44f754
Annotations:  <none>
Status:       Running
IP:           10.244.0.8
IPs:
  IP:           10.244.0.8
Controlled By:  ReplicaSet/nfs-client-provisioner-764f44f754
Containers:
  nfs-client-provisioner:
    Container ID:   docker://7fe4e55942d2f104c56ce092800eb7270b23f6f237f7b3a29ad7e974cd756bbd
    Image:          quay.io/external_storage/nfs-client-provisioner:latest
    Image ID:       docker-pullable://quay.io/external_storage/nfs-client-provisioner@sha256:022ea0b0d69834b652a4c53655d78642ae23f0324309097be874fb58d09d2919
    Port:           <none>
    Host Port:      <none>
    State:          Terminated
      Reason:       Error
      Exit Code:    255
      Started:      Fri, 19 Nov 2021 09:57:29 +0800
      Finished:     Fri, 19 Nov 2021 09:57:33 +0800
    Last State:     Terminated
      Reason:       Error
      Exit Code:    255
      Started:      Fri, 19 Nov 2021 09:57:09 +0800
      Finished:     Fri, 19 Nov 2021 09:57:12 +0800
    Ready:          False
    Restart Count:  2
    Environment:
      PROVISIONER_NAME:  fuseim.pri/ifs
      NFS_SERVER:        192.168.153.103
      NFS_PATH:          /nfs/data
    Mounts:
      /persistentvolumes from nfs-client-root (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-vkwdg (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             False
  ContainersReady   False
  PodScheduled      True
Volumes:
  nfs-client-root:
    Type:      NFS (an NFS mount that lasts the lifetime of a pod)
    Server:    192.168.153.103
    Path:      /nfs/data
    ReadOnly:  false
  kube-api-access-vkwdg:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason     Age                From               Message
  ----     ------     ----               ----               -------
  Normal   Scheduled  51s                default-scheduler  Successfully assigned nfs-client/nfs-client-provisioner-764f44f754-htndd to hadoop03
  Normal   Pulled     43s                kubelet            Successfully pulled image "quay.io/external_storage/nfs-client-provisioner:latest" in 6.395868709s
  Normal   Pulled     33s                kubelet            Successfully pulled image "quay.io/external_storage/nfs-client-provisioner:latest" in 4.951636594s
  Normal   Pulling    16s (x3 over 49s)  kubelet            Pulling image "quay.io/external_storage/nfs-client-provisioner:latest"
  Normal   Pulled     12s                kubelet            Successfully pulled image "quay.io/external_storage/nfs-client-provisioner:latest" in 4.671464188s
  Normal   Created    11s (x3 over 42s)  kubelet            Created container nfs-client-provisioner
  Normal   Started    11s (x3 over 42s)  kubelet            Started container nfs-client-provisioner
  Warning  BackOff    6s (x2 over 27s)   kubelet            Back-off restarting failed container
```

#######################

```
[root@hadoop03 k8s]# kubectl logs nfs-client-provisioner-764f44f754-htndd -n nfs-client
F1119 01:58:09.702588       1 provisioner.go:180] Error getting server version: Get https://10.1.0.1:443/version?timeout=32s: dial tcp 10.1.0.1:443: connect: no route to host
[root@hadoop03 k8s]#
```
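The provisioner exits because it cannot reach the API server at 10.1.0.1:443. In a kubeadm cluster that address is normally the ClusterIP of the `kubernetes` Service in the `default` namespace, which you can confirm with `kubectl get svc kubernetes`. To check the symptom from the node, you can extract the endpoint from the log line and try a TCP connection to it.

Both helpers below are hypothetical. `tcp_probe` relies on bash's `/dev/tcp` and on coreutils `timeout`. A probe from the node only approximates the path a pod's traffic takes.

```shell
# api_endpoint_from_log: read log lines on stdin and print HOST:PORT taken
# from the "dial tcp HOST:PORT:" fragment of each matching line.
api_endpoint_from_log() {
  sed -n 's/.*dial tcp \([0-9.]*:[0-9]*\):.*/\1/p'
}

# tcp_probe HOST PORT: succeed if a TCP connection opens within 3 seconds.
tcp_probe() {
  local host=$1 port=$2
  if timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
    echo "reachable: $host:$port"
  else
    echo "unreachable: $host:$port" >&2
    return 1
  fi
}

# Usage on the affected node (not run here):
#   ep=$(kubectl logs nfs-client-provisioner-764f44f754-htndd -n nfs-client | api_endpoint_from_log | head -n 1)
#   tcp_probe "${ep%:*}" "${ep##*:}"
```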

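Fix: the provisioner cannot reach the API server's Service IP (10.1.0.1:443, "no route to host"), so it keeps crashing and no PV is ever created for datadir-zk-0. The following resolved it: stop Docker and kubelet, flush the node's iptables rules, then start both services again.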

```
[root@hadoop03 k8s]# service docker stop
Redirecting to /bin/systemctl stop docker.service
Warning: Stopping docker.service, but it can still be activated by:
  docker.socket
[root@hadoop03 k8s]# systemctl stop docker.socket
[root@hadoop03 k8s]# service kubelet stop
Redirecting to /bin/systemctl stop kubelet.service
[root@hadoop03 k8s]# iptables --flush
[root@hadoop03 k8s]# iptables -tnat --flush
[root@hadoop03 k8s]# service docker start
Redirecting to /bin/systemctl start docker.service
[root@hadoop03 k8s]# service kubelet start
Redirecting to /bin/systemctl start kubelet.service
[root@hadoop03 k8s]#
```

```
[root@hadoop03 k8s]# kubectl get pod -n nfs-client
NAME                                      READY   STATUS      RESTARTS       AGE
nfs-client-provisioner-764f44f754-htndd   1/1     Running     8 (2m7s ago)   14m
test-pod                                  0/1     Completed   0              17h
```

#########

```
[root@hadoop03 NFS]# kubectl get pod
NAME   READY   STATUS    RESTARTS   AGE
zk-0   1/1     Running   0          54m
zk-1   1/1     Running   0          21m
zk-2   1/1     Running   0          19m
[root@hadoop03 NFS]#
[root@hadoop03 NFS]# kubectl get pvc
NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
datadir-zk-0   Bound    pvc-39361e04-7a61-4a6e-863f-018f73ed1557   1Gi        RWO            managed-nfs-storage   54m
datadir-zk-1   Bound    pvc-daaf2112-cbdd-4da5-90d3-662cace5b0ab   1Gi        RWO            managed-nfs-storage   21m
datadir-zk-2   Bound    pvc-4964f707-e4de-4db0-8d4e-45a5b0c29b60   1Gi        RWO            managed-nfs-storage   20m
test-sc-pvc    Bound    pvc-d6d17ca7-8b05-4068-bcdc-0f4443196274   1Gi        RWX            managed-nfs-storage   47m
[root@hadoop03 NFS]#
[root@hadoop03 NFS]# kubectl get pv
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                   STORAGECLASS          REASON   AGE
pvc-39361e04-7a61-4a6e-863f-018f73ed1557   1Gi        RWO            Delete           Bound    default/datadir-zk-0    managed-nfs-storage            22m
pvc-4964f707-e4de-4db0-8d4e-45a5b0c29b60   1Gi        RWO            Delete           Bound    default/datadir-zk-2    managed-nfs-storage            19m
pvc-a8158cfe-5950-4ef5-b90c-0941a8fa082c   1Mi        RWX            Delete           Bound    nfs-client/test-claim   managed-nfs-storage            17h
pvc-d6d17ca7-8b05-4068-bcdc-0f4443196274   1Gi        RWX            Delete           Bound    default/test-sc-pvc     managed-nfs-storage            22m
pvc-daaf2112-cbdd-4da5-90d3-662cace5b0ab   1Gi        RWO            Delete           Bound    default/datadir-zk-1    managed-nfs-storage            21m
```

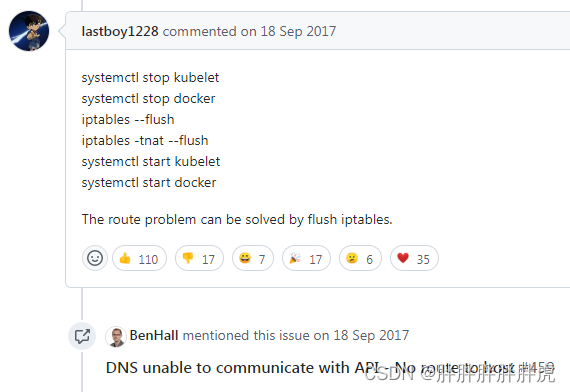

Follow-up

Kubernetes master HA

With a highly available control plane, run the following on every master node:

```
systemctl stop kubelet
systemctl stop docker
iptables --flush
iptables -tnat --flush
systemctl start docker
systemctl start kubelet
```
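With several masters, you can push the same sequence to each one over ssh. The sketch below assumes root ssh access and uses placeholder host names (master1, master2, ...). It follows the steps of the single-node fix: it also stops docker.socket and starts Docker before kubelet. By default it only prints the commands it would run.

Note that `iptables --flush` removes every rule in the filter table, and `-t nat --flush` every rule in the nat table, not only the Kubernetes ones. Docker and kube-proxy are expected to recreate their own rules, but any hand-written rules are lost.

```shell
# reset_iptables_on HOST...: run the stop / flush / start sequence on each
# host over ssh. Hypothetical helper; host names are placeholders.
# DRY_RUN defaults to 1, which only prints the ssh commands.
reset_iptables_on() {
  local host
  # Stop kubelet and Docker (docker.socket too, so it cannot re-activate
  # Docker), flush the filter and nat tables, then start Docker before kubelet.
  local steps='systemctl stop kubelet && systemctl stop docker.socket docker && iptables --flush && iptables -t nat --flush && systemctl start docker && systemctl start kubelet'
  for host in "$@"; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "ssh root@$host '$steps'"
    else
      ssh "root@$host" "$steps" || { echo "failed on $host" >&2; return 1; }
    fi
  done
}

# Usage (placeholder hosts):
#   reset_iptables_on master1 master2 master3            # print only
#   DRY_RUN=0 reset_iptables_on master1 master2 master3  # actually run
```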

Reference: https://github.com/kubernetes/kubeadm/issues/193#issuecomment

Further reading: https://tencentcloudcontainerteam.github.io/2019/12/15/no-route-to-host/
