Notes

>>>>> GlusterFS distributed storage system

https://www.cnblogs.com/wsnbba/p/10203870.html

Log location: /var/log/glusterfs/ by default (glusterd's own log is glusterd.log in that directory)

Troubleshooting notes

1. Startup error: glusterd fails to start after installing with the commands below

yum install centos-release-gluster

yum install -y glusterfs glusterfs-server glusterfs-fuse glusterfs-rdma

[2022-01-09 08:08:00.562893 +0000] E [rpc-transport.c:282:rpc_transport_load] 0-rpc-transport: /usr/lib64/glusterfs/9.4/rpc-transport/socket.so: symbol SSL_CTX_get0_param, version libssl.so.10 not defined in file libssl.so.10 with link time reference

[2022-01-09 08:08:00.562923 +0000] W [rpc-transport.c:286:rpc_transport_load] 0-rpc-transport: volume 'socket.management': transport-type 'socket' is not valid or not found on this machine

[2022-01-09 08:08:00.562931 +0000] W [rpcsvc.c:1987:rpcsvc_create_listener] 0-rpc-service: cannot create listener, initing the transport failed

[2022-01-09 08:08:00.562939 +0000] E [MSGID: 106244] [glusterd.c:1843:init] 0-management: creation of listener failed

[2022-01-09 08:08:00.562956 +0000] E [MSGID: 101019] [xlator.c:643:xlator_init] 0-management: Initialization of volume failed. review your volfile again. [{name=management}]

[2022-01-09 08:08:00.562964 +0000] E [MSGID: 101066] [graph.c:425:glusterfs_graph_init] 0-management: initializing translator failed

[2022-01-09 08:08:00.562971 +0000] E [MSGID: 101176] [graph.c:777:glusterfs_graph_activate] 0-graph: init failed

[2022-01-09 08:08:00.563226 +0000] W [glusterfsd.c:1429:cleanup_and_exit] (-->/usr/sbin/glusterd(glusterfs_volumes_init+0xaa) [0x7f2648baf7da] -->/usr/sbin/glusterd(glusterfs_process_volfp+0x236) [0x7f2648baf716] -->/usr/sbin/glusterd(cleanup_and_exit+0x6b) [0x7f2648baea6b] ) 0-: received signum (-1), shutting down

[2022-01-09 08:08:24.173041 +0000] I [MSGID: 100030] [glusterfsd.c:2683:main] 0-/usr/sbin/glusterd: Started running version [{arg=/usr/sbin/glusterd}, {version=9.4}, {cmdlinestr=/usr/sbin/glusterd -p /var/run/glusterd.pid --log-level INFO}]

[2022-01-09 08:08:24.175748 +0000] I [glusterfsd.c:2418:daemonize] 0-glusterfs: Pid of current running process is 308

[2022-01-09 08:08:24.185943 +0000] I [MSGID: 106478] [glusterd.c:1474:init] 0-management: Maximum allowed open file descriptors set to 65536

[2022-01-09 08:08:24.186239 +0000] I [MSGID: 106479] [glusterd.c:1550:init] 0-management: Using /var/lib/glusterd as working directory

[2022-01-09 08:08:24.186260 +0000] I [MSGID: 106479] [glusterd.c:1556:init] 0-management: Using /var/run/gluster as pid file working directory

[2022-01-09 08:08:24.193259 +0000] E [rpc-transport.c:282:rpc_transport_load] 0-rpc-transport: /usr/lib64/glusterfs/9.4/rpc-transport/socket.so: symbol SSL_CTX_get0_param, version libssl.so.10 not defined in file libssl.so.10 with link time reference

[2022-01-09 08:08:24.193299 +0000] W [rpc-transport.c:286:rpc_transport_load] 0-rpc-transport: volume 'socket.management': transport-type 'socket' is not valid or not found on this machine

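Fix (presumably because installing openssl-devel also upgrades openssl-libs to a release whose libssl.so.10 exports SSL_CTX_get0_param):

yum -y install openssl-devel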

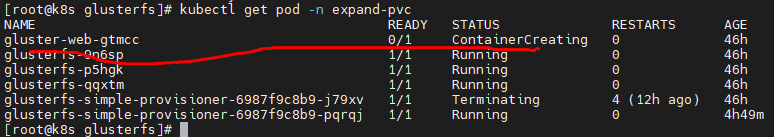
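To pull errors like these out of a long glusterd.log quickly, a small filter helps. Below is a minimal Python sketch, assuming the line layout seen in the excerpt above ([timestamp] LEVEL [optional MSGID] [file:line:function] message); parse_lines and errors are my own hypothetical helper names, not part of any GlusterFS tooling.

```python
import re
from typing import Iterable, Iterator, List, NamedTuple, Optional

# Assumed glusterd.log line layout (taken from the excerpt above):
# [2022-01-09 08:08:00.562939 +0000] E [MSGID: 106244] [glusterd.c:1843:init] 0-management: ...
LOG_RE = re.compile(
    r"^\[(?P<ts>[^\]]+)\]\s+(?P<level>[A-Z])\s+"
    r"(?:\[MSGID:\s*(?P<msgid>\d+)\]\s+)?"
    r"\[(?P<src>[^\]]+)\]\s+(?P<msg>.*)$"
)


class LogEntry(NamedTuple):
    timestamp: str
    level: str            # single letter: E, W, I, ...
    msgid: Optional[str]  # present only on lines that carry [MSGID: n]
    source: str           # file.c:line:function
    message: str


def parse_lines(lines: Iterable[str]) -> Iterator[LogEntry]:
    """Yield a LogEntry for every line that matches the layout; skip the rest."""
    for line in lines:
        m = LOG_RE.match(line.strip())
        if m:
            yield LogEntry(m["ts"], m["level"], m["msgid"], m["src"], m["msg"])


def errors(lines: Iterable[str], levels: Iterable[str] = ("E",)) -> List[LogEntry]:
    """Keep only entries whose level letter is in `levels` (errors by default)."""
    wanted = set(levels)
    return [e for e in parse_lines(lines) if e.level in wanted]
```

Assuming the default log path, errors(open("/var/log/glusterfs/glusterd.log")) would list the E-level lines shown above, including the rpc_transport_load failure and the MSGID 106244 listener error; pass levels=("E", "W") to include warnings as well.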

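2. Pod PVC error

line 60: type 'features/utime' is not valid or not found on this machine

This most likely means the GlusterFS client (glusterfs-fuse) on the Kubernetes node is older than the server and lacks the features/utime translator referenced in the server's volfile; bringing the client up to the server's version is the likely fix.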

3. Client mount fails

[hadoop@hadoop03 glusterfs]$ sudo mount.glusterfs hadoop01:/data /mnt/

Mounting glusterfs on /mnt/ failed.

[hadoop@hadoop03 glusterfs]$ sudo gluster volume info

Volume Name: test-glusterfs-volume

Type: Distribute

Volume ID: 099c37f3-adf4-4a38-bace-c0a4996dc437

Status: Started

Snapshot Count: 0

Number of Bricks: 3

Transport-type: tcp

Bricks:

Brick1: hadoop01:/data

Brick2: hadoop02:/data

Brick3: hadoop03:/data

Options Reconfigured:

storage.fips-mode-rchecksum: on

transport.address-family: inet

nfs.disable: on

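Um... it turns out you mount the volume (test-glusterfs-volume), not a brick's storage path (/data). Noting it down anyway. The working command is:

sudo mount.glusterfs hadoop01:/test-glusterfs-volume /mnt/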
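To avoid repeating this mistake, the mount source can be derived from the gluster volume info output instead of typed by hand. A minimal Python sketch, assuming the output covers a single volume; parse_volume_info and mount_command are hypothetical helper names.

```python
from typing import Any, Dict, List


def parse_volume_info(text: str) -> Dict[str, Any]:
    """Turn one volume's `gluster volume info` output into a dict.

    "Key: value" lines become entries; Brick1, Brick2, ... are collected
    in order under "Bricks". Header lines without a value are skipped.
    """
    info: Dict[str, Any] = {"Bricks": []}
    for raw in text.splitlines():
        key, sep, value = raw.strip().partition(":")
        if not sep:
            continue
        key, value = key.strip(), value.strip()
        if key.startswith("Brick") and key[len("Brick"):].isdigit():
            info["Bricks"].append(value)  # e.g. "hadoop01:/data"
        elif value:
            info[key] = value
    return info


def mount_command(info: Dict[str, Any], mountpoint: str, server: str = "") -> List[str]:
    """Build a mount argv whose source is SERVER:/VOLUME_NAME, never a brick path."""
    if not server:
        # Any node in the trusted pool can hand out the volfile; use the first brick's host.
        server = info["Bricks"][0].split(":", 1)[0]
    return ["mount", "-t", "glusterfs", f"{server}:/{info['Volume Name']}", mountpoint]
```

For the volume above, " ".join(mount_command(info, "/mnt/")) gives mount -t glusterfs hadoop01:/test-glusterfs-volume /mnt/. The brick path (hadoop01:/data) only matters on the server side.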

4. GlusterFS pod in k8s fails to start

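The pod is configured with hostNetwork: true, so check whether the glusterfs (glusterd) service is already running on the host itself; the two presumably compete for the same ports. Once glusterd on the host is stopped, the pod starts.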
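With hostNetwork: true the pod shares the host's network namespace, so a glusterd already running on the host presumably holds the management port 24007 before the pod's glusterd can bind it. A quick pre-check before applying the DaemonSet, as a minimal Python sketch; port_in_use is a hypothetical helper that only tests whether something accepts TCP connections on the port.

```python
import socket

GLUSTERD_PORT = 24007  # glusterd's management port


def port_in_use(port: int, host: str = "127.0.0.1", timeout: float = 1.0) -> bool:
    """Return True if something on host accepts TCP connections on port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success instead of raising an exception.
        return sock.connect_ex((host, port)) == 0
```

If port_in_use(GLUSTERD_PORT) is True on a storage node, stop the host service first (systemctl stop glusterd, plus systemctl disable glusterd so it stays off after a reboot).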

4.1 Another cause

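GlusterFS had previously been installed on the host, and the files under /var/lib/gluster were never removed. When I later ran kubectl apply -f glusterfs-daemonset.yaml, the pod mounted that path and failed with confusing errors. Deleting the contents of /var/lib/gluster and re-running kubectl apply -f glusterfs-daemonset.yaml fixed it.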
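The cleanup step above as a cautious Python sketch. Assumptions: the leftover state lives in glusterd's working directory, which the log in section 1 reports as /var/lib/glusterd, and clear_directory is a hypothetical helper that empties a directory but keeps the directory itself, doing a dry run by default.

```python
import shutil
from pathlib import Path
from typing import List


def clear_directory(path: str, dry_run: bool = True) -> List[str]:
    """Remove everything inside `path` but keep `path` itself.

    With dry_run=True (the default) nothing is deleted; the function only
    returns the entries that would be removed.
    """
    targets = sorted(Path(path).iterdir())
    if not dry_run:
        for entry in targets:
            if entry.is_dir() and not entry.is_symlink():
                shutil.rmtree(entry)
            else:
                entry.unlink()  # regular files and symlinks (the link, not its target)
    return [str(entry) for entry in targets]
```

This wipes the node's GlusterFS identity (glusterd.info), its peer list and its volume definitions, so only call it with dry_run=False on a node whose old state you really want to discard.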

5. GlusterFS input/output error

https://docs.rackspace.com/support/how-to/glusterfs-troubleshooting/

https://cloud.tencent.com/developer/article/1101876

0-glusterfs: failed to set volfile server: File exists

https://stackoverflow.com/questions/60683873/0-glusterfs-failed-to-set-volfile-server-file-exists

[root@k8s-storage-02 /]# gluster volume info vol_ed8913602dbbc0510a6ecf8ad3bf941b

Volume Name: vol_ed8913602dbbc0510a6ecf8ad3bf941b

Type: Replicate

Volume ID: 7f334d29-70fc-49e8-aec3-d74b470865df

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: 10.0.2.45:/var/lib/heketi/mounts/vg_094f45cfcd0ff5815c3914723876c69e/brick_5a398d6130306007bd2c72d0cf53d947/brick

Brick2: 10.0.2.46:/var/lib/heketi/mounts/vg_1f3f18f36dae1a1d968713e583b7972a/brick_44590fc606e77a08c405fe789abbe514/brick

Brick3: 10.0.2.44:/var/lib/heketi/mounts/vg_a04ab1f3fafb5b88709b6354df22ea3e/brick_5a423d1cdee47f93f0f3a62b7a329ef2/brick

Options Reconfigured:

user.heketi.id: ed8913602dbbc0510a6ecf8ad3bf941b

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

[root@k8s-storage-02 /]# gluster volume status vol_ed8913602dbbc0510a6ecf8ad3bf941b

Status of volume: vol_ed8913602dbbc0510a6ecf8ad3bf941b

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick 10.0.2.45:/var/lib/heketi/mounts/vg_094f45cfcd0ff5815c3914723876c69e/brick_5a398d6130306007bd2c72d0cf53d947/brick N/A N/A N N/A

Brick 10.0.2.44:/var/lib/heketi/mounts/vg_a04ab1f3fafb5b88709b6354df22ea3e/brick_5a423d1cdee47f93f0f3a62b7a329ef2/brick 49531 0 Y 4117

Self-heal Daemon on localhost N/A N/A N N/A

Self-heal Daemon on k8s-storage-01 N/A N/A N N/A

Task Status of Volume vol_ed8913602dbbc0510a6ecf8ad3bf941b

------------------------------------------------------------------------------

There are no active volume tasks

Running the same status command again gave identical output (the brick on 10.0.2.45 and both self-heal daemons offline), so the volume was stopped and started again:

[root@k8s-storage-02 /]# gluster volume stop vol_ed8913602dbbc0510a6ecf8ad3bf941b

Stopping volume will make its data inaccessible. Do you want to continue? (y/n) y

volume stop: vol_ed8913602dbbc0510a6ecf8ad3bf941b: success

[root@k8s-storage-02 /]# gluster volume status vol_ed8913602dbbc0510a6ecf8ad3bf941b

Volume vol_ed8913602dbbc0510a6ecf8ad3bf941b is not started

[root@k8s-storage-02 /]# gluster volume start vol_ed8913602dbbc0510a6ecf8ad3bf941b

volume start: vol_ed8913602dbbc0510a6ecf8ad3bf941b: success

[root@k8s-storage-02 /]# gluster volume status vol_ed8913602dbbc0510a6ecf8ad3bf941b

Status of volume: vol_ed8913602dbbc0510a6ecf8ad3bf941b

Gluster process TCP Port RDMA Port Online Pid

------------------------------------------------------------------------------

Brick 10.0.2.45:/var/lib/heketi/mounts/vg_094f45cfcd0ff5815c3914723876c69e/brick_5a398d6130306007bd2c72d0cf53d947/brick 49167 0 Y 75428

Brick 10.0.2.44:/var/lib/heketi/mounts/vg_a04ab1f3fafb5b88709b6354df22ea3e/brick_5a423d1cdee47f93f0f3a62b7a329ef2/brick 49531 0 Y 76051

Self-heal Daemon on localhost N/A N/A Y 75449

Self-heal Daemon on k8s-storage-01 N/A N/A N N/A

Task Status of Volume vol_ed8913602dbbc0510a6ecf8ad3bf941b

------------------------------------------------------------------------------

There are no active volume tasks
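After the restart both bricks and the local self-heal daemon report Online = Y, while the self-heal daemon on k8s-storage-01 still shows N. Because gluster wraps long brick paths over several lines in this output, eyeballing it is error-prone. The minimal Python sketch below (parse_status and offline are hypothetical helpers) rejoins wrapped brick names and lists every process whose Online column is N; it assumes the single-volume layout shown above.

```python
import re
from typing import List, NamedTuple

# A table row ends with: TCP port, RDMA port, Online (Y/N), Pid.
ROW_RE = re.compile(
    r"^(?P<name>.*?)\s+(?P<tcp>\S+)\s+(?P<rdma>\S+)\s+(?P<online>[YN])\s+(?P<pid>\S+)$"
)


class Process(NamedTuple):
    name: str
    tcp_port: str
    rdma_port: str
    online: bool
    pid: str


def parse_status(text: str) -> List[Process]:
    """Parse the process table of `gluster volume status VOLNAME` (single volume)."""
    rows: List[Process] = []
    in_table = False
    pending = ""  # leading pieces of a brick name that gluster wrapped onto extra lines
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("Task Status"):
            break  # the task section follows the process table
        if line.startswith("---"):
            in_table = True
            continue
        if not in_table:
            continue
        m = ROW_RE.match(line)
        if m is None:
            pending += line  # wrapped name fragment; only the last piece carries the columns
            continue
        rows.append(Process(pending + m["name"], m["tcp"], m["rdma"],
                            m["online"] == "Y", m["pid"]))
        pending = ""
    return rows


def offline(rows: List[Process]) -> List[str]:
    """Names of processes whose Online column is N."""
    return [p.name for p in rows if not p.online]
```

Fed the first status output above, it reports the 10.0.2.45 brick and both self-heal daemons as offline, which is what prompted the stop/start.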

6. GlusterFS failure simulation

https://my.oschina.net/u/4321476/blog/3826738

7. GlusterFS usage summary

https://blog.51cto.com/nosmoking/1710133

8. GlusterFS small-file optimization

https://blog.csdn.net/xiaofei0859/article/details/53467336

9. How read and write requests are processed in GlusterFS

https://www.cnblogs.com/chaozhu/p/6402000.html

Common commands

gluster volume info

gluster volume info <volume_name>

[root@k8s 00]# gluster volume info vol_d4c379a9fd02de7a9bc1a59ac3ece02b

Volume Name: vol_d4c379a9fd02de7a9bc1a59ac3ece02b

Type: Replicate

Volume ID: 19558c08-c2b9-4500-a2e6-2c73660f9fc5

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 2 = 2

Transport-type: tcp

Bricks:

Brick1: 10.0.2.35:/var/lib/heketi/mounts/vg_f47e1d26a2086bb2121f00512259f0e0/brick_00313ded6f8f323b6eea103d84b6f43b/brick

Brick2: 10.0.2.33:/var/lib/heketi/mounts/vg_250a0c9d90faa2ad4b39b9dbe6c98866/brick_3bd9d75ae41f4c845408f4d06dca4641/brick

Options Reconfigured:

user.heketi.id: d4c379a9fd02de7a9bc1a59ac3ece02b

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

Volume options

gluster volume set VOLNAME OPTION PARAMETER

Option reference: https://rajeshjoseph.gitbooks.io/test-guide/content/cluster/chap-Managing_Gluster_Volumes.html
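Options that differ from the defaults appear under "Options Reconfigured" in gluster volume info. A minimal Python sketch (parse_options and set_commands are hypothetical helper names) that reads that section and emits one gluster volume set VOLNAME OPTION PARAMETER command per option whose value differs from a desired setting:

```python
from typing import Dict, List


def parse_options(volume_info: str) -> Dict[str, str]:
    """Return the pairs under "Options Reconfigured:" (expects output for one volume)."""
    options: Dict[str, str] = {}
    in_options = False
    for raw in volume_info.splitlines():
        line = raw.strip()
        if line == "Options Reconfigured:":
            in_options = True
        elif in_options and ":" in line:
            key, _, value = line.partition(":")
            options[key.strip()] = value.strip()
    return options


def set_commands(volume: str, current: Dict[str, str], desired: Dict[str, str]) -> List[List[str]]:
    """One `gluster volume set VOLNAME OPTION PARAMETER` argv per option that differs."""
    return [
        ["gluster", "volume", "set", volume, key, value]
        for key, value in sorted(desired.items())
        if current.get(key) != value
    ]
```

Because "Options Reconfigured" only lists changed options, an option missing there may already have the desired default, in which case the generated command is merely redundant; gluster volume get VOLNAME all shows every effective value.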

View the extended attributes of a replica brick with getfattr (here on the brick root):

[root@k8s 00]# getfattr -m . -d -e hex /var/lib/heketi/mounts/vg_f47e1d26a2086bb2121f00512259f0e0/brick_00313ded6f8f323b6eea103d84b6f43b/brick

getfattr: Removing leading '/' from absolute path names

# file: var/lib/heketi/mounts/vg_f47e1d26a2086bb2121f00512259f0e0/brick_00313ded6f8f323b6eea103d84b6f43b/brick

trusted.gfid=0x00000000000000000000000000000001

trusted.glusterfs.volume-id=0x19558c08c2b94500a2e62c73660f9fc5

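The trusted.glusterfs.volume-id xattr on the brick root is the volume's UUID in hex, so it should match the Volume ID reported by gluster volume info (19558c08-c2b9-4500-a2e6-2c73660f9fc5 above), and the brick root's trusted.gfid is the fixed root GFID ending in 01. A minimal Python sketch for checking this from getfattr -e hex output; parse_getfattr and xattr_to_uuid are hypothetical helpers.

```python
import uuid
from typing import Dict


def parse_getfattr(text: str) -> Dict[str, str]:
    """Collect name=value pairs from `getfattr -d -e hex` output, skipping # comments."""
    attrs: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line and not line.startswith("#") and "=" in line:
            name, _, value = line.partition("=")
            attrs[name] = value
    return attrs


def xattr_to_uuid(hex_value: str) -> uuid.UUID:
    """Convert a hex-encoded xattr such as 0x19558c08... into a UUID."""
    if hex_value.lower().startswith("0x"):
        hex_value = hex_value[2:]
    return uuid.UUID(hex=hex_value)
```

On the storage node itself, os.getxattr(brick_path, "trusted.glusterfs.volume-id") returns the raw 16 bytes (root is needed for trusted.* attributes), which uuid.UUID(bytes=...) converts directly.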

heketi-cli commands

[root@k8s kubernetes]# heketi-cli --user=admin --secret='My Secret' --server=http://10.1.241.85:8080 volume info d4c379a9fd02de7a9bc1a59ac3ece02b

Name: vol_d4c379a9fd02de7a9bc1a59ac3ece02b

Size: 1

Volume Id: d4c379a9fd02de7a9bc1a59ac3ece02b

Cluster Id: 563efc153a15e62560fd0afedd3a61fd

Mount: 10.0.2.35:vol_d4c379a9fd02de7a9bc1a59ac3ece02b

Mount Options: backup-volfile-servers=10.0.2.34,10.0.2.33

Block: false

Free Size: 0

Reserved Size: 0

Block Hosting Restriction: (none)

Block Volumes: []

Durability Type: replicate

Distribute Count: 1

Replica Count: 2

Snapshot Factor: 1.00

Heketi issues

[root@k8s kubernetes]# heketi-cli --user=admin --secret='My Secret' --server=http://10.1.241.85:8080 volume list

Error: Invalid JWT token: Token used before issued (client and server clocks may differ)

Cause: the system clocks of the heketi server and of the host running heketi-cli differ.

Note: with multiple masters, the clocks on all master hosts must match (keep them synchronized, e.g. with NTP).
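Why the clocks matter, as far as I understand it: heketi-cli authenticates with a JWT it signs itself, and the token's iat (issued-at) claim carries the client's current time, so a server whose clock is behind sees an iat in the future and rejects the token with exactly this message. The Python sketch below is not heketi's code; it only decodes a token's payload and applies that same "issued in the future" check. jwt_claims and issued_in_future are hypothetical names.

```python
import base64
import json
import time
from typing import Any, Dict, Optional


def jwt_claims(token: str) -> Dict[str, Any]:
    """Decode the payload (middle part) of a JWT without verifying its signature."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # JWTs strip base64url padding; put it back
    return json.loads(base64.urlsafe_b64decode(payload))


def issued_in_future(token: str, now: Optional[float] = None, leeway: float = 0) -> bool:
    """True if the token's iat is later than `now` (+ leeway): the issuer's clock runs ahead."""
    if now is None:
        now = time.time()
    iat = jwt_claims(token).get("iat")
    return iat is not None and iat > now + leeway
```

The practical fix remains synchronizing the clocks (for example with chronyd or ntpd) on the heketi server and on every host that runs heketi-cli.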

GlusterFS blog bookmarks

1. Dynamic storage management with GlusterFS in a k8s cluster, and expanding the GlusterFS cluster with Heketi

https://www.cnblogs.com/dukuan/p/9954094.html

2. >> Deploying a GlusterFS distributed storage cluster on CentOS 7

https://www.cnblogs.com/kevingrace/p/8743812.html

3. GlusterFS in detail

https://czero000.github.io/tags/glusterfs/

4. GlusterFS introduction and usage

https://www.cnblogs.com/netonline/p/9107859.html

5. Understanding GlusterFS in depth: data rebalancing

http://blog.itpub.net/31547898/viewspace-2168800/

6. My view on storage

https://blog.csdn.net/baidu_17173809/category_5942909.html

7. Resetting and recovering a GlusterFS node

http://www.javashuo.com/article/p-dgyyueka-ho.html

8. Add and remove GlusterFS servers

https://docs.rackspace.com/support/how-to/add-and-remove-glusterfs-servers

9. Recover from a failed server in a GlusterFS array

https://docs.rackspace.com/support/how-to/recover-from-a-failed-server-in-a-glusterfs-array

10. Introduction to the GlusterFS distributed file system

11. GlusterFS multi-node performance testing

http://www.doc88.com/p-7018698209799.html

https://cloud.tencent.com/developer/article/1104136

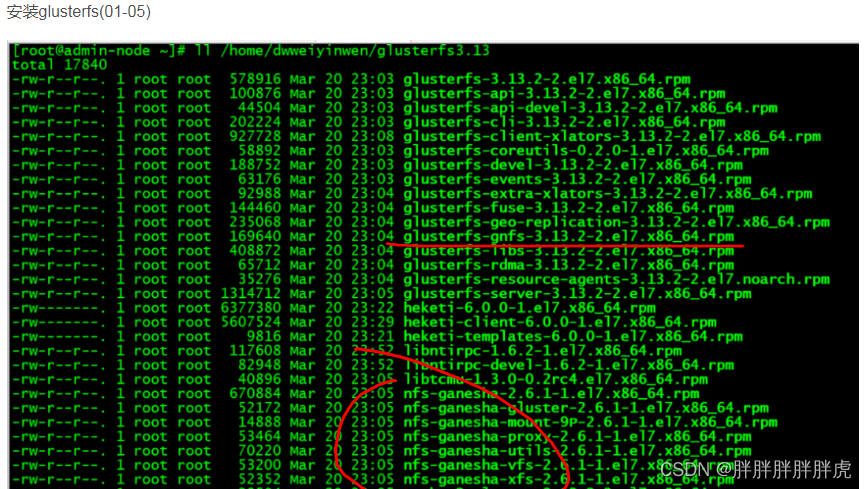

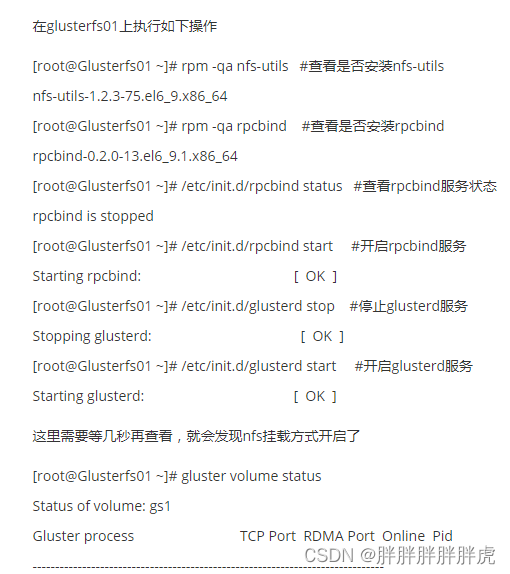

GlusterFS + NFS

Providing storage to k8s through GlusterFS + NFS + a Service

Using nfs-ganesha so that NFS clients can mount a GlusterFS volume

[root@hadoop02 /]# gluster volume info

Volume Name: vol_distributed

Type: Distribute

Volume ID: 9ef58dda-ea8e-45ae-91e0-dc3d9ce277e6

Status: Started

Snapshot Count: 0

Number of Bricks: 1

Transport-type: tcp

Bricks:

Brick1: 192.168.153.102:/glusterfs/vol1

Options Reconfigured:

transport.address-family: inet

storage.fips-mode-rchecksum: on

nfs.disable: on

NFS

—>>> https://www.modb.pro/db/396465

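gluster volume set <volume_name> nfs.disable off

(This enables Gluster's built-in NFS server. When exporting through nfs-ganesha instead, leave nfs.disable on, since the two NFS servers cannot run on the same node at the same time.)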

nfs-ganesha

cat /etc/ganesha/ganesha.conf

EXPORT{

Export_Id = 1 ; # Export ID unique to each export

Path = "/gv0"; # Path of the volume to be exported. Eg: "/test_volume"

FSAL {

name = GLUSTER;

hostname = "${ip}"; #one of the nodes in the trusted pool

volume = "gv0"; # Volume name. Eg: "test_volume"

}

Access_type = RW; # Access permissions

Squash = No_root_squash; # To enable/disable root squashing

Disable_ACL = TRUE; # To enable/disable ACL

Pseudo = "/gv0_pseudo"; # NFSv4 pseudo path for this export. Eg: "/test_volume_pseudo"

Protocols = "3","4" ; # NFS protocols supported

Transports = "UDP","TCP" ; # Transport protocols supported

SecType = "sys"; # Security flavors supported

}
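When exporting several volumes, the EXPORT block can be generated rather than copied by hand. A minimal Python sketch that renders the same options as the sample above for one volume, following its naming pattern (Path "/<volume>", Pseudo "/<volume>_pseudo"); ganesha_export is a hypothetical helper, and Export_Id has to be unique per export, as the sample's comment says.

```python
from typing import Sequence


def _quoted(items: Sequence[str]) -> str:
    """Render ("3", "4") as "3","4", the way the sample config lists values."""
    return ",".join(f'"{item}"' for item in items)


def ganesha_export(export_id: int, volume: str, hostname: str,
                   protocols: Sequence[str] = ("3", "4"),
                   transports: Sequence[str] = ("UDP", "TCP")) -> str:
    """Return an EXPORT block for one GlusterFS volume, mirroring the sample config."""
    lines = [
        "EXPORT {",
        f"    Export_Id = {export_id};",
        f'    Path = "/{volume}";',
        "    FSAL {",
        "        name = GLUSTER;",
        f'        hostname = "{hostname}";',  # any node in the trusted pool
        f'        volume = "{volume}";',
        "    }",
        "    Access_type = RW;",
        "    Squash = No_root_squash;",
        "    Disable_ACL = TRUE;",
        f'    Pseudo = "/{volume}_pseudo";',
        f"    Protocols = {_quoted(protocols)};",
        f"    Transports = {_quoted(transports)};",
        '    SecType = "sys";',
        "}",
    ]
    return "\n".join(lines)
```

For example, ganesha_export(1, "vol_distributed", "192.168.153.102") produces an export for the distributed volume shown earlier; append the result to /etc/ganesha/ganesha.conf and restart the nfs-ganesha service.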

GlusterFS vs. CephFS performance comparison

https://blog.csdn.net/bjchenxu/article/details/107036274

https://blog.csdn.net/elvishehai/article/details/107222257

Blog series

https://rajeshjoseph.gitbooks.io/test-guide/content/

GlusterFS tutorial, chapter 12: GlusterFS volume management

https://www.hellodemos.com/hello-glusterfs/glusterfs-demos.html

1. 五光十色 (cnblogs blog)

https://www.cnblogs.com/wuhg/category/1356968.html

2. 散尽浮华 (cnblogs blog)

https://www.cnblogs.com/kevingrace/p/8709544.html

3. glusterfs

https://www.cnblogs.com/wuhg/category/1356968.html

4. Dr. Liu Aigui

https://blog.csdn.net/liuaigui/article/details/17331557

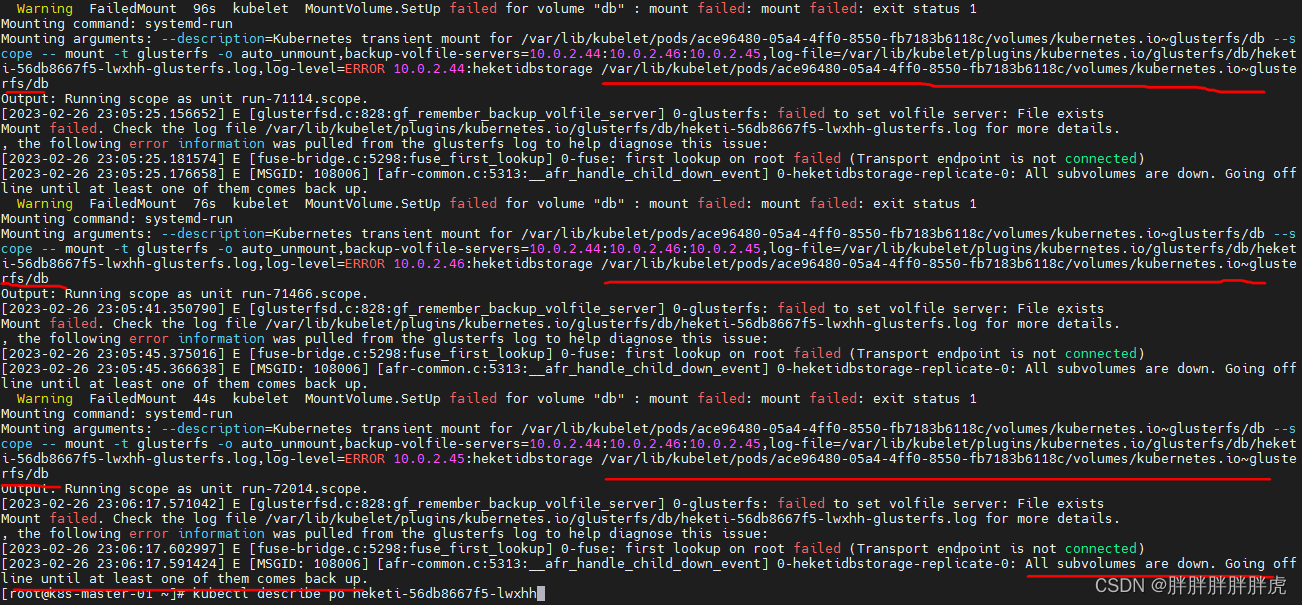

Troubleshooting notes

Heketi fails to start

All subvolumes are down. Going offline until at least one of them comes back up.

GlusterFS client log for the db volume: /var/lib/kubelet/plugins/kubernetes.io/glusterfs/db/heketi-56db8667f5-lwxhh-glusterfs.log

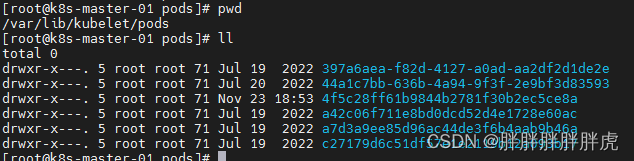

Mount directory of the pod's db volume: /var/lib/kubelet/pods/ace96480-05a4-4ff0-8550-fb7183b6118c/volumes/kubernetes.io~glusterfs/db
