vhost user协议的控制和数据通道

所有的控制信息通过UNIX套接口(控制通道)交互。包括为进行直接内存访问而交换的内存映射信息,以及当数据填入virtio队列后需要出发的kick事件和中断信息。在Neutron中此UNIX套接口命名为vhuxxxxxxxx-xx;

数据通道事实上由内存直接访问实现。客户机中的virtio-net驱动分配一部分内存用于virtio的队列。virtio标准定义了此队列的结构。QEMU通过控制通道将此部分内存的地址共享给OVS DPDK。DPDK自身映射一个相同标准的virtio队列结构到此内存上,藉此来读写客户机巨页内存中的virtio队列。直接内存访问的实现需要在OVS DPDK和QEMU之间使用巨页内存。如果QEMU设置正确,但是没有配置巨页内存,OVS DPDK将不能访问QEMU的内存,二者也就不能交换数据报文。如果用户忘记了请求客户机巨页内存,nova将通过宏数据通知用户。

当OVS DPDK向客户机发送数据包时,这些数据包在OVS DPDK的统计里面显示为接口vhuxxxxxxxx-xx的发送Tx流量。在客户机中,显示为接收Rx流量。

当客户机向OVS DPDK发送数据包时,这些数据包在客户机中显示为发送Tx流量,而在OVS DPDK中显示为接口vhuxxxxxxxx-xx的接收Rx流量。

客户机并没有硬件的统计计数。ethtool工具的-s选项未实现。所有的底层统计计数只能使用OVS的命令显示(ovs-vsctl list get interfave vhuxxxxxxxx-xx statistics),因此显示的数据都是基于OVS DPDK的视角。

虽然数据包可通过共享内存传输,但是还需要一种方法告知对端数据包已经拷贝到virtio队列中。通过vhost user套接口vhuxxxxxxxx-xx实现的控制通道可用来完成通知(kicking)对方的功能。通知必然有代价。首先,需要一个写套接口的系统调用;之后对端需要处理一个中断操作。所以,接收双方都会在控制通道上消耗时间。

为避免控制通道的通知消耗,OpenvSwitch和QEMU都可以设置特殊标志以告知对方其不愿接收中断。尽管如此,只有在采用临时或者固定查询virtio队列方式时才能使用不接收中断的功能。

为客户机的性能考虑其本身可采用DPDK处理数据包。尽管Linux内核采用轮询处理和中断相结合的NAPI机制,但是产生的中断数量仍然很多。OVS DPDK以非常高的速率发送数据包到客户机。同时,QEMU的virtio队列的收发缓存数被限制在了默认的256与最大1024之间。结果,客户机必须以非常快的速度处理数据包。理想的实现就是使用DPDK的PMD驱动不停的轮询客户机端口进行数据包处理。

vhost user协议标准

Vhost-user协议

Copyright (c) 2014 Virtual Open Systems Sarl.

This work is licensed under the terms of the GNU GPL, version 2 or later.

See the COPYING file in the top-level directory.

此协议旨在补充实现在Linux内核中的vhost的ioctl接口。

实现了与同一宿主机中的用户进程交互建立virtqueue队列的控制平面。通过UNIX套接口消息中的附加数据字段来共享文件描述符。

协议定义了通信的两端:主和从。主时要共享其virtqueues队列的进程,即QEMU。从为virtqueues队列的消费者。

当前实现中QEMU作为主,从为运行在用户空间的软件交换机,如Snabbswitch。

主和从在通信时都可以作为客户端(主动连接)或者服务端(监听)。

vhost user协议由两方组成:

- 主方 - QEMU

- 从方 - Open vSwitch或者其它软件交换机

vhost user各方都可运行在2种模式下:

- vhostuser-client - QEMU作为服务端,软件交换机作为客户端

- vhostuser - 软件交换机作为服务端,QEMU作为客户端。

vhost user实现基于内核的vhost架构,将所有特性实现在用户空间。

当QEMU客户机启动时,它将所有的客户机内存分配为共享的巨页内存。其操作系统的半虚拟化驱动virtio将保留这些巨页内存的一部分用作virtio环形缓存。这样OVS DPDK将可以直接读写客户机的virtio环形缓存。OVS DPDK和QEMU可通过此保留的内存空间交换网络数据包。

用户空间进程接收到客户机预先分配的共享内存文件描述符后,可直接存取与之关联的客户机内存空间中的vrings环结构。(http://www.virtualopensystems.com/en/solutions/guides/snabbswitch-qemu/).

参见以下的VM虚拟机,模式为vhostuser:

$ /usr/libexec/qemu-kvm

-name guest=instance-00000028,debug-threads=on-S

-object secret,id=masterKey0,format=raw,file=/var/lib/libvirt/qemu/domain-58-instance-00000028/master-key.aes-machine pc-i440fx-rhel7.4.0,accel=kvm,usb=off,dump-guest-core=off

-cpu Skylake-Client,ss=on,hypervisor=on,tsc_adjust=on,pdpe1gb=on,mpx=off,xsavec=off,xgetbv1=off-m 2048

-realtime mlock=off

-smp 8,sockets=4,cores=1,threads=2

-object memory-backend-file,id=ram-node0,prealloc=yes,mem-path=/dev/hugepages/libvirt/qemu/58-instance-00000028,share=yes,size=1073741824,host-nodes=0,policy=bind-numa node,nodeid=0,cpus=0-3,memdev=ram-node0

-object memory-backend-file,id=ram-node1,prealloc=yes,mem-path=/dev/hugepages/libvirt/qemu/58-instance-00000028,share=yes,size=1073741824,host-nodes=1,policy=bind-numa node,nodeid=1,cpus=4-7,memdev=ram-node1

-uuid 48888226-7b6b-415c-bcf7-b278ba0bca62 -smbios type=1,manufacturer=Red Hat,product=OpenStack Compute,version=14.1.0-3.el7ost,serial=3d5e138a-8193-41e4-ac95-de9bfc1a3ef1,uuid=48888226-7b6b-415c-bcf7-b278ba0bca62,family=Virtual Machine

-no-user-config

-nodefaults

-chardev socket,id=charmonitor,path=/var/lib/libvirt/qemu/domain-58-instance-00000028/monitor.sock,server,nowait-mon chardev=charmonitor,id=monitor,mode=control

-rtc base=utc,driftfix=slew

-global kvm-pit.lost_tick_policy=delay

-no-hpet

-no-shutdown

-boot strict=on

-device piix3-usb-uhci,id=usb,bus=pci.0,addr=0x1.0x2

-drive file=/var/lib/nova/instances/48888226-7b6b-415c-bcf7-b278ba0bca62/disk,format=qcow2,if=none,id=drive-virtio-disk0,cache=none

-device virtio-blk-pci,scsi=off,bus=pci.0,addr=0x4,drive=drive-virtio-disk0,id=virtio-disk0,bootindex=1

-chardev socket,id=charnet0,path=/var/run/openvswitch/vhuc26fd3c6-4b

-netdev vhost-user,chardev=charnet0,queues=8,id=hostnet0

-device virtio-net-pci,mq=on,vectors=18,netdev=hostnet0,id=net0,mac=fa:16:3e:52:30:73,bus=pci.0,addr=0x3

-add-fd set=0,fd=33 -chardev file,id=charserial0,path=/dev/fdset/0,append=on

-device isa-serial,chardev=charserial0,id=serial0 -chardev pty,id=charserial1

-device isa-serial,chardev=charserial1,id=serial1

-device usb-tablet,id=input0,bus=usb.0,port=1

-vnc 172.16.2.10:1 -k en-us

-device cirrus-vga,id=video0,bus=pci.0,addr=0x2

-device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x5

-msg timestamp=on

指定QEMU从巨页池中分配内存,并设置为共享内存。

-object memory-backend-file,id=ram-node0,prealloc=yes,mem-path=/dev/hugepages/libvirt/qemu/58-instance-00000028,share=yes,size=1073741824,host-nodes=0,policy=bind

-numa node,nodeid=0,cpus=0-3,memdev=ram-node0

-object memory-backend-file,id=ram-node1,prealloc=yes,mem-path=/dev/hugepages/libvirt/qemu/58-instance-00000028,share=yes,size=1073741824,host-nodes=1,policy=bind

尽管如此,简单的拷贝数据包到对方的缓存中还不足够。另外,vhost user协议使用一个UNIX套接口(vhu[a-f0-9-])处理vswitch和QEMU之间的通信,包括在初始化过程中,和数据包拷贝到共享内存的virtio环中需要通知对方时。所以两者的交互包括基于控制通道(vhu)的创建操作和通知机制,与拷贝数据包的数据通道(直接内存访问)。

所述virtio机制要能工作,我们需要建立一个接口来初始化共享内存区域和交换event事件描述符。UNIX套接口提供的API接口可实现此要求。此套接口可用于初始化用户空间virtio传输(vhost-user),特别是:

- 初始化时确定Vrings,并且放入两个进程间的共享内存中;

- 使用eventfd映射到Vring事件。这样就可与QEMU/KVM中的实现相兼容,KVM可以关联客户机系统中virtio_pci驱动所触发事件与宿主机的eventfd(ioventfd和irqfd)文件描述符。

在两个进程间共享文件描述符与在一个进程和内核直接不相同。前者需要在UNIX套接口的sendmsg系统调用中设置SCM_RIGHTS标志。

vhostuser模式下,OVS创建vhu套接口,QEMU主动进行连接。vhostuser client模式下,QEMU创建vhu套接口,OVS进行连接。

在上面创建的vhostuser模式客户机实例中,指示QEMU连接一个类型为vhost-user的netdev到套接口/var/run/openvswitch/vhuc26fd3c6-4b:

-chardev socket,id=charnet0,path=/var/run/openvswitch/vhuc26fd3c6-4b \

-netdev vhost-user,chardev=charnet0,queues=8,id=hostnet0 \

-device virtio-net-pci,mq=on,vectors=18, \

netdev=hostnet0,id=net0,mac=fa:16:3e:52:30:73,bus=pci.0,addr=0x3

使用lsof命令显示此套接口为OVS所创建:

[root@overcloud-0 ~]# lsof -nn | grep vhuc26fd3c6-4b | awk '{print $1}' | uniq

当一方拷贝一个数据报文到共享内存的virtio环中时,另一方有两种选择:

- 类似(e.g. Linux kernel’s NAPI)或者 (e.g. DPDK’s PMD)的轮询队列,不需要通知就可取得新的数据报文;

- 非队列轮询,必须得到新报文到达的通知。

针对第二种情况,可通过独立的vhu套接口控制通道发送通知到客户机。通过交换eventfd文件描述符数据,控制通道可在用户空间实现中断。套接口的写操作要求系统调用,必将引起PMDs花费时间在内核空间。客户机可通过设置VRING_AVAIL_F_NO_INTERRUPT标志关闭控制通道中断通知。否则,当Open vSwitch网virtio环中填入新数据包时,将发送中断通知到客户机。

详情可参加此博客文章:http://blog.vmsplice.net/2011/09/qemu-internals-vhost-architecture.html

用户空间的vhost接口

vhost架构的一个惊人的特性是其并没有绑定在KVM上。其仅是一个用户空间接口并不依赖于KVM内核模块。这意味着其它的用户空间程序,比如libpcap,如果要获得高性能I/O接口,理论上也可以使用vhost设备。

当客户机通知宿主机其已在virtqueue中填入了数据时,需要通知vhost的工作进程有数据要进行处理(对于内核的virtio-net驱动,vhost工作进程为一个内核线程,名称为vhost-$pid,其中pid为QEMU的进程号)。既然vhost不依赖于KVM内核模块,二者就不能直接通信。所以vhost实例创建了一个eventfd文件描述符,提供给vhost工作进程去监听。KVM内核模块的ioeventfd特性可将一个eventfd文件描述符关联到一个特殊的客户机I/O操作上。QEMU用户空间在硬件寄存器VIRTIO_PCI_QUEUE_NOTIFY的I/O访问上注册了virtqueue的通知ioeventfd。当客户机写VIRTIO_PCI_QUEUE_NOTIFY寄存器时将会发送virtqueue队列通知,vhost工作进程将接收到KVM内核模块通过ioeventfd发来的通知。

在vhost工作进程需要发送中断到客户机的反向路径上使用相同的方式。vhost通过写一个“call”文件描述符去通知客户机。KVM内核模块的另一个特性irqfd中断描述符可使eventfd出发客户机中断。QEMU用户空间为virtio的PCI设备中断注册了一个irqfd文件描述符,并将此irqfd交于vhost实例。vhost工作进程即可通过此“call”文件描述符去中断客户机。

最终,vhost实例仅了解到客户机的内存映射、kick通知eventfd文件描述符和call中断文件描述符。

更多细节,参考Linux内核中内核相关代码:

drivers/vhost/vhost.c - 通用vhost驱动代码

drivers/vhost/net.c - vhost-net网络设备驱动代码

virt/kvm/eventfd.c - ioeventfd事件和irqfd中断文件描述符实现

QEMU初始化vhost实例的用户空间代码:

hw/vhost.c - 通用vhost初始化代码

hw/vhost_net.c - vhost-net网络设备初始化代码

数据通道-直接内存访问

virtqueue的内存映射

virtio官方标准定义了virtqueue的结构。

virtqueues

2.4 Virtqueues

virtio设备的大数据传输机制命名为virtqueue虚拟队列。每个设备可以有多个virtqueues,也可以没有

virtqueue队列。16位的队列大小参数指定了队列内成员的数量,也限定了队列的总大小。

每个virtqueue队列有三个部分组成:

Descriptor Table - 描述符表

Available Ring - 可用环

Used Ring - 已用环

virtio标志精确的定义了描述符表、可用环和已用环的结构。例如,可用环的定义:

http://docs.oasis-open.org/virtio/virtio/v1.0/virtio-v1.0.html

virtq_avail

2.4.6 virtqueue可用环结构

struct virtq_avail {

#define VIRTQ_AVAIL_F_NO_INTERRUPT 1

le16 flags;

le16 idx;

le16 ring[ /* Queue Size */ ];

le16 used_event; /* Only if VIRTIO_F_EVENT_IDX */

};

驱动程序使用可用环提供发送缓存给设备。其中每个环项指向一个描述符链的开头。可用环只能由驱动程序写,由设备读。

idx成员指示驱动程序将下一个描述符入口项放在了ring成员的哪个位置(不超过队列长度)。其从0开始增加。

传统的标准[Virtio PCI Draft]将此结构定义为vring_avail,将宏定义命名为

VRING_AVAIL_F_NO_INTERRUPT,但是本质结构都还是相同的。

DPDK的virtio标准实现代码,其也是使用传统virtio标准中的结构定义:

dpdk-18.08/drivers/net/virtio/virtio_ring.h

vring、vring_desc、vring_avail、vring_used

/* VirtIO ring descriptors: 16 bytes.

* These can chain together via "next".

*/

struct vring_desc {

uint64_t addr; /* Address (guest-physical). */

uint32_t len; /* Length. */

uint16_t flags; /* The flags as indicated above. */

uint16_t next; /* We chain unused descriptors via this. */

};

struct vring_avail {

uint16_t flags;

uint16_t idx;

uint16_t ring[0];

};

/* id is a 16bit index. uint32_t is used here for ids for padding reasons. */

struct vring_used_elem {

/* Index of start of used descriptor chain. */

uint32_t id;

/* Total length of the descriptor chain which was written to. */

uint32_t len;

};

struct vring_used {

uint16_t flags;

volatile uint16_t idx;

struct vring_used_elem ring[0];

};

struct vring {

unsigned int num;

struct vring_desc *desc;

struct vring_avail *avail;

struct vring_used *used;

};

dpdk-18.08/lib/librte_vhost/vhost.h

vhost_virtqueue

/**

* Structure contains variables relevant to RX/TX virtqueues.

*/

struct vhost_virtqueue {

union {

struct vring_desc *desc;

struct vring_packed_desc *desc_packed;

};

union {

struct vring_avail *avail;

struct vring_packed_desc_event *driver_event;

};

union {

struct vring_used *used;

struct vring_packed_desc_event *device_event;

};

uint32_t size;

uint16_t last_avail_idx;

uint16_t last_used_idx;

/* Last used index we notify to front end. */

uint16_t signalled_used;

bool signalled_used_valid;

#define VIRTIO_INVALID_EVENTFD (-1)

#define VIRTIO_UNINITIALIZED_EVENTFD (-2)

/* Backend value to determine if device should started/stopped */

int backend;

int enabled;

int access_ok;

rte_spinlock_t access_lock;

/* Used to notify the guest (trigger interrupt) */

int callfd;

/* Currently unused as polling mode is enabled */

int kickfd;

/* Physical address of used ring, for logging */

uint64_t log_guest_addr;

uint16_t nr_zmbuf;

uint16_t zmbuf_size;

uint16_t last_zmbuf_idx;

struct zcopy_mbuf *zmbufs;

struct zcopy_mbuf_list zmbuf_list;

union {

struct vring_used_elem *shadow_used_split;

struct vring_used_elem_packed *shadow_used_packed;

};

uint16_t shadow_used_idx;

struct vhost_vring_addr ring_addrs;

struct batch_copy_elem *batch_copy_elems;

uint16_t batch_copy_nb_elems;

bool used_wrap_counter;

bool avail_wrap_counter;

struct log_cache_entry log_cache[VHOST_LOG_CACHE_NR];

uint16_t log_cache_nb_elem;

rte_rwlock_t iotlb_lock;

rte_rwlock_t iotlb_pending_lock;

struct rte_mempool *iotlb_pool;

TAILQ_HEAD(, vhost_iotlb_entry) iotlb_list;

int iotlb_cache_nr;

TAILQ_HEAD(, vhost_iotlb_entry) iotlb_pending_list;

} __rte_cache_aligned;

内存映射完成之后,DPDK就可像客户机的virtio-net驱动一样直接操作其共享内存中的同一结构了。

控制通道-UNIX套接口

QEMU与DPDK通过vhost user套接口交换消息。

DPDK与QEMU的通信遵照标准的vhost-user协议。

消息类型如下:

dpdk-18.08/lib/librte_vhost/vhost_user.h

VhostUserRequest

typedef enum VhostUserRequest {

VHOST_USER_NONE = 0,

VHOST_USER_GET_FEATURES = 1,

VHOST_USER_SET_FEATURES = 2,

VHOST_USER_SET_OWNER = 3,

VHOST_USER_RESET_OWNER = 4,

VHOST_USER_SET_MEM_TABLE = 5,

VHOST_USER_SET_LOG_BASE = 6,

VHOST_USER_SET_LOG_FD = 7,

VHOST_USER_SET_VRING_NUM = 8,

VHOST_USER_SET_VRING_ADDR = 9,

VHOST_USER_SET_VRING_BASE = 10,

VHOST_USER_GET_VRING_BASE = 11,

VHOST_USER_SET_VRING_KICK = 12,

VHOST_USER_SET_VRING_CALL = 13,

VHOST_USER_SET_VRING_ERR = 14,

VHOST_USER_GET_PROTOCOL_FEATURES = 15,

VHOST_USER_SET_PROTOCOL_FEATURES = 16,

VHOST_USER_GET_QUEUE_NUM = 17,

VHOST_USER_SET_VRING_ENABLE = 18,

VHOST_USER_SEND_RARP = 19,

VHOST_USER_NET_SET_MTU = 20,

VHOST_USER_SET_SLAVE_REQ_FD = 21,

VHOST_USER_IOTLB_MSG = 22,

VHOST_USER_CRYPTO_CREATE_SESS = 26,

VHOST_USER_CRYPTO_CLOSE_SESS = 27,

VHOST_USER_MAX = 28

} VhostUserRequest;

VhostUserMsg

typedef struct VhostUserMsg {

union {

uint32_t master; /* a VhostUserRequest value */

uint32_t slave; /* a VhostUserSlaveRequest value*/

} request;

#define VHOST_USER_VERSION_MASK 0x3

#define VHOST_USER_REPLY_MASK (0x1 << 2)

#define VHOST_USER_NEED_REPLY (0x1 << 3)

uint32_t flags;

uint32_t size; /* the following payload size */

union {

#define VHOST_USER_VRING_IDX_MASK 0xff

#define VHOST_USER_VRING_NOFD_MASK (0x1<<8)

uint64_t u64;

struct vhost_vring_state state;

struct vhost_vring_addr addr;

VhostUserMemory memory;

VhostUserLog log;

struct vhost_iotlb_msg iotlb;

VhostUserCryptoSessionParam crypto_session;

VhostUserVringArea area;

} payload;

int fds[VHOST_MEMORY_MAX_NREGIONS];

} __attribute((packed)) VhostUserMsg;

DPDK使用如下函数处理接收到的消息:

dpdk-18.08/lib/librte_vhost/vhost_user.c

vhost_user_msg_handler()

int

vhost_user_msg_handler(int vid, int fd)

{

struct virtio_net *dev;

struct VhostUserMsg msg;

struct rte_vdpa_device *vdpa_dev;

int did = -1;

int ret;

int unlock_required = 0;

uint32_t skip_master = 0;

dev = get_device(vid);

if (dev == NULL)

return -1;

if (!dev->notify_ops) {

dev->notify_ops = vhost_driver_callback_get(dev->ifname);

if (!dev->notify_ops) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to get callback ops for driver %s\n",

dev->ifname);

return -1;

}

}

ret = read_vhost_message(fd, &msg);

if (ret <= 0 || msg.request.master >= VHOST_USER_MAX) {

if (ret < 0)

RTE_LOG(ERR, VHOST_CONFIG,

"vhost read message failed\n");

else if (ret == 0)

RTE_LOG(INFO, VHOST_CONFIG,

"vhost peer closed\n");

else

RTE_LOG(ERR, VHOST_CONFIG,

"vhost read incorrect message\n");

return -1;

}

ret = 0;

if (msg.request.master != VHOST_USER_IOTLB_MSG)

RTE_LOG(INFO, VHOST_CONFIG, "read message %s\n",

vhost_message_str[msg.request.master]);

else

RTE_LOG(DEBUG, VHOST_CONFIG, "read message %s\n",

vhost_message_str[msg.request.master]);

ret = vhost_user_check_and_alloc_queue_pair(dev, &msg);

if (ret < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to alloc queue\n");

return -1;

}

/*

* Note: we don't lock all queues on VHOST_USER_GET_VRING_BASE

* and VHOST_USER_RESET_OWNER, since it is sent when virtio stops

* and device is destroyed. destroy_device waits for queues to be

* inactive, so it is safe. Otherwise taking the access_lock

* would cause a dead lock.

*/

switch (msg.request.master) {

case VHOST_USER_SET_FEATURES:

case VHOST_USER_SET_PROTOCOL_FEATURES:

case VHOST_USER_SET_OWNER:

case VHOST_USER_SET_MEM_TABLE:

case VHOST_USER_SET_LOG_BASE:

case VHOST_USER_SET_LOG_FD:

case VHOST_USER_SET_VRING_NUM:

case VHOST_USER_SET_VRING_ADDR:

case VHOST_USER_SET_VRING_BASE:

case VHOST_USER_SET_VRING_KICK:

case VHOST_USER_SET_VRING_CALL:

case VHOST_USER_SET_VRING_ERR:

case VHOST_USER_SET_VRING_ENABLE:

case VHOST_USER_SEND_RARP:

case VHOST_USER_NET_SET_MTU:

case VHOST_USER_SET_SLAVE_REQ_FD:

vhost_user_lock_all_queue_pairs(dev);

unlock_required = 1;

break;

default:

break;

}

if (dev->extern_ops.pre_msg_handle) {

uint32_t need_reply;

ret = (*dev->extern_ops.pre_msg_handle)(dev->vid,

(void *)&msg, &need_reply, &skip_master);

if (ret < 0)

goto skip_to_reply;

if (need_reply)

send_vhost_reply(fd, &msg);

if (skip_master)

goto skip_to_post_handle;

}

switch (msg.request.master) {

case VHOST_USER_GET_FEATURES:

msg.payload.u64 = vhost_user_get_features(dev);

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_FEATURES:

ret = vhost_user_set_features(dev, msg.payload.u64);

break;

case VHOST_USER_GET_PROTOCOL_FEATURES:

vhost_user_get_protocol_features(dev, &msg);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_PROTOCOL_FEATURES:

ret = vhost_user_set_protocol_features(dev, msg.payload.u64);

break;

case VHOST_USER_SET_OWNER:

ret = vhost_user_set_owner();

break;

case VHOST_USER_RESET_OWNER:

ret = vhost_user_reset_owner(dev);

break;

case VHOST_USER_SET_MEM_TABLE:

ret = vhost_user_set_mem_table(&dev, &msg);

break;

case VHOST_USER_SET_LOG_BASE:

ret = vhost_user_set_log_base(dev, &msg);

if (ret)

goto skip_to_reply;

/* it needs a reply */

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_LOG_FD:

close(msg.fds[0]);

RTE_LOG(INFO, VHOST_CONFIG, "not implemented.\n");

break;

case VHOST_USER_SET_VRING_NUM:

ret = vhost_user_set_vring_num(dev, &msg);

break;

case VHOST_USER_SET_VRING_ADDR:

ret = vhost_user_set_vring_addr(&dev, &msg);

break;

case VHOST_USER_SET_VRING_BASE:

ret = vhost_user_set_vring_base(dev, &msg);

break;

case VHOST_USER_GET_VRING_BASE:

ret = vhost_user_get_vring_base(dev, &msg);

if (ret)

goto skip_to_reply;

msg.size = sizeof(msg.payload.state);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_VRING_KICK:

ret = vhost_user_set_vring_kick(&dev, &msg);

break;

case VHOST_USER_SET_VRING_CALL:

vhost_user_set_vring_call(dev, &msg);

break;

case VHOST_USER_SET_VRING_ERR:

if (!(msg.payload.u64 & VHOST_USER_VRING_NOFD_MASK))

close(msg.fds[0]);

RTE_LOG(INFO, VHOST_CONFIG, "not implemented\n");

break;

case VHOST_USER_GET_QUEUE_NUM:

msg.payload.u64 = (uint64_t)vhost_user_get_queue_num(dev);

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_VRING_ENABLE:

ret = vhost_user_set_vring_enable(dev, &msg);

break;

case VHOST_USER_SEND_RARP:

ret = vhost_user_send_rarp(dev, &msg);

break;

case VHOST_USER_NET_SET_MTU:

ret = vhost_user_net_set_mtu(dev, &msg);

break;

case VHOST_USER_SET_SLAVE_REQ_FD:

ret = vhost_user_set_req_fd(dev, &msg);

break;

case VHOST_USER_IOTLB_MSG:

ret = vhost_user_iotlb_msg(&dev, &msg);

break;

default:

ret = -1;

break;

}

skip_to_post_handle:

if (!ret && dev->extern_ops.post_msg_handle) {

uint32_t need_reply;

ret = (*dev->extern_ops.post_msg_handle)(

dev->vid, (void *)&msg, &need_reply);

if (ret < 0)

goto skip_to_reply;

if (need_reply)

send_vhost_reply(fd, &msg);

}

skip_to_reply:

if (unlock_required)

vhost_user_unlock_all_queue_pairs(dev);

if (msg.flags & VHOST_USER_NEED_REPLY) {

msg.payload.u64 = !!ret;

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

} else if (ret) {

RTE_LOG(ERR, VHOST_CONFIG,

"vhost message handling failed.\n");

return -1;

}

if (!(dev->flags & VIRTIO_DEV_RUNNING) && virtio_is_ready(dev)) {

dev->flags |= VIRTIO_DEV_READY;

if (!(dev->flags & VIRTIO_DEV_RUNNING)) {

if (dev->dequeue_zero_copy) {

RTE_LOG(INFO, VHOST_CONFIG,

"dequeue zero copy is enabled\n");

}

if (dev->notify_ops->new_device(dev->vid) == 0)

dev->flags |= VIRTIO_DEV_RUNNING;

}

}

did = dev->vdpa_dev_id;

vdpa_dev = rte_vdpa_get_device(did);

if (vdpa_dev && virtio_is_ready(dev) &&

!(dev->flags & VIRTIO_DEV_VDPA_CONFIGURED) &&

msg.request.master == VHOST_USER_SET_VRING_ENABLE) {

if (vdpa_dev->ops->dev_conf)

vdpa_dev->ops->dev_conf(dev->vid);

dev->flags |= VIRTIO_DEV_VDPA_CONFIGURED;

if (vhost_user_host_notifier_ctrl(dev->vid, true) != 0) {

RTE_LOG(INFO, VHOST_CONFIG,

"(%d) software relay is used for vDPA, performance may be low.\n",

dev->vid);

}

}

return 0;

}

还有dpdk-18.08/lib/librte_vhost/vhost_user.c:

read_vhost_message()

/* return bytes# of read on success or negative val on failure. */

static int

read_vhost_message(int sockfd, struct VhostUserMsg *msg)

{

int ret;

ret = read_fd_message(sockfd, (char *)msg, VHOST_USER_HDR_SIZE,

msg->fds, VHOST_MEMORY_MAX_NREGIONS);

if (ret <= 0)

return ret;

if (msg->size) {

if (msg->size > sizeof(msg->payload)) {

RTE_LOG(ERR, VHOST_CONFIG,

"invalid msg size: %d\n", msg->size);

return -1;

}

ret = read(sockfd, &msg->payload, msg->size);

if (ret <= 0)

return ret;

if (ret != (int)msg->size) {

RTE_LOG(ERR, VHOST_CONFIG,

"read control message failed\n");

return -1;

}

}

return ret;

}

read_fd_message()

/* return bytes# of read on success or negative val on failure. */

int

read_fd_message(int sockfd, char *buf, int buflen, int *fds, int fd_num)

{

struct iovec iov;

struct msghdr msgh;

size_t fdsize = fd_num * sizeof(int);

char control[CMSG_SPACE(fdsize)];

struct cmsghdr *cmsg;

int got_fds = 0;

int ret;

memset(&msgh, 0, sizeof(msgh));

iov.iov_base = buf;

iov.iov_len = buflen;

msgh.msg_iov = &iov;

msgh.msg_iovlen = 1;

msgh.msg_control = control;

msgh.msg_controllen = sizeof(control);

ret = recvmsg(sockfd, &msgh, 0);

if (ret <= 0) {

RTE_LOG(ERR, VHOST_CONFIG, "recvmsg failed\n");

return ret;

}

if (msgh.msg_flags & (MSG_TRUNC | MSG_CTRUNC)) {

RTE_LOG(ERR, VHOST_CONFIG, "truncted msg\n");

return -1;

}

for (cmsg = CMSG_FIRSTHDR(&msgh); cmsg != NULL;

cmsg = CMSG_NXTHDR(&msgh, cmsg)) {

if ((cmsg->cmsg_level == SOL_SOCKET) &&

(cmsg->cmsg_type == SCM_RIGHTS)) {

got_fds = (cmsg->cmsg_len - CMSG_LEN(0)) / sizeof(int);

memcpy(fds, CMSG_DATA(cmsg), got_fds * sizeof(int));

break;

}

}

/* Clear out unused file descriptors */

while (got_fds < fd_num)

fds[got_fds++] = -1;

return ret;

}

DPDK向外发送消息使用如下函数

dpdk-18.08/lib/librte_vhost/vhost_user.c

send_vhost_message()

static int

send_vhost_message(int sockfd, struct VhostUserMsg *msg, int *fds, int fd_num)

{

if (!msg)

return 0;

return send_fd_message(sockfd, (char *)msg,

VHOST_USER_HDR_SIZE + msg->size, fds, fd_num);

}

send_fd_message()

int

send_fd_message(int sockfd, char *buf, int buflen, int *fds, int fd_num)

{

struct iovec iov;

struct msghdr msgh;

size_t fdsize = fd_num * sizeof(int);

char control[CMSG_SPACE(fdsize)];

struct cmsghdr *cmsg;

int ret;

memset(&msgh, 0, sizeof(msgh));

iov.iov_base = buf;

iov.iov_len = buflen;

msgh.msg_iov = &iov;

msgh.msg_iovlen = 1;

if (fds && fd_num > 0) {

msgh.msg_control = control;

msgh.msg_controllen = sizeof(control);

cmsg = CMSG_FIRSTHDR(&msgh);

if (cmsg == NULL) {

RTE_LOG(ERR, VHOST_CONFIG, "cmsg == NULL\n");

errno = EINVAL;

return -1;

}

cmsg->cmsg_len = CMSG_LEN(fdsize);

cmsg->cmsg_level = SOL_SOCKET;

cmsg->cmsg_type = SCM_RIGHTS;

memcpy(CMSG_DATA(cmsg), fds, fdsize);

} else {

msgh.msg_control = NULL;

msgh.msg_controllen = 0;

}

do {

ret = sendmsg(sockfd, &msgh, MSG_NOSIGNAL);

} while (ret < 0 && errno == EINTR);

if (ret < 0) {

RTE_LOG(ERR, VHOST_CONFIG, "sendmsg error\n");

return ret;

}

return ret;

}

QEMU与之相对应的接收函数为:

qemu-3.0.0/contrib/libvhost-user/libvhost-user.c

vu_process_message()

static bool

vu_process_message(VuDev *dev, VhostUserMsg *vmsg)

{

int do_reply = 0;

/* Print out generic part of the request. */

DPRINT("================ Vhost user message ================\n");

DPRINT("Request: %s (%d)\n", vu_request_to_string(vmsg->request),

vmsg->request);

DPRINT("Flags: 0x%x\n", vmsg->flags);

DPRINT("Size: %d\n", vmsg->size);

if (vmsg->fd_num) {

int i;

DPRINT("Fds:");

for (i = 0; i < vmsg->fd_num; i++) {

DPRINT(" %d", vmsg->fds[i]);

}

DPRINT("\n");

}

if (dev->iface->process_msg &&

dev->iface->process_msg(dev, vmsg, &do_reply)) {

return do_reply;

}

switch (vmsg->request) {

case VHOST_USER_GET_FEATURES:

return vu_get_features_exec(dev, vmsg);

case VHOST_USER_SET_FEATURES:

return vu_set_features_exec(dev, vmsg);

case VHOST_USER_GET_PROTOCOL_FEATURES:

return vu_get_protocol_features_exec(dev, vmsg);

case VHOST_USER_SET_PROTOCOL_FEATURES:

return vu_set_protocol_features_exec(dev, vmsg);

case VHOST_USER_SET_OWNER:

return vu_set_owner_exec(dev, vmsg);

case VHOST_USER_RESET_OWNER:

return vu_reset_device_exec(dev, vmsg);

case VHOST_USER_SET_MEM_TABLE:

return vu_set_mem_table_exec(dev, vmsg);

case VHOST_USER_SET_LOG_BASE:

return vu_set_log_base_exec(dev, vmsg);

case VHOST_USER_SET_LOG_FD:

return vu_set_log_fd_exec(dev, vmsg);

case VHOST_USER_SET_VRING_NUM:

return vu_set_vring_num_exec(dev, vmsg);

case VHOST_USER_SET_VRING_ADDR:

return vu_set_vring_addr_exec(dev, vmsg);

case VHOST_USER_SET_VRING_BASE:

return vu_set_vring_base_exec(dev, vmsg);

case VHOST_USER_GET_VRING_BASE:

return vu_get_vring_base_exec(dev, vmsg);

case VHOST_USER_SET_VRING_KICK:

return vu_set_vring_kick_exec(dev, vmsg);

case VHOST_USER_SET_VRING_CALL:

return vu_set_vring_call_exec(dev, vmsg);

case VHOST_USER_SET_VRING_ERR:

return vu_set_vring_err_exec(dev, vmsg);

case VHOST_USER_GET_QUEUE_NUM:

return vu_get_queue_num_exec(dev, vmsg);

case VHOST_USER_SET_VRING_ENABLE:

return vu_set_vring_enable_exec(dev, vmsg);

case VHOST_USER_SET_SLAVE_REQ_FD:

return vu_set_slave_req_fd(dev, vmsg);

case VHOST_USER_GET_CONFIG:

return vu_get_config(dev, vmsg);

case VHOST_USER_SET_CONFIG:

return vu_set_config(dev, vmsg);

case VHOST_USER_NONE:

break;

case VHOST_USER_POSTCOPY_ADVISE:

return vu_set_postcopy_advise(dev, vmsg);

case VHOST_USER_POSTCOPY_LISTEN:

return vu_set_postcopy_listen(dev, vmsg);

case VHOST_USER_POSTCOPY_END:

return vu_set_postcopy_end(dev, vmsg);

default:

vmsg_close_fds(vmsg);

vu_panic(dev, "Unhandled request: %d", vmsg->request);

}

return false;

}

QEMU对应的消息发送函数:

qemu-3.0.0/hw/virtio/vhost-user.c

vhost_user_write()

/* most non-init callers ignore the error */

static int vhost_user_write(struct vhost_dev *dev, VhostUserMsg *msg,

int *fds, int fd_num)

{

struct vhost_user *u = dev->opaque;

CharBackend *chr = u->user->chr;

int ret, size = VHOST_USER_HDR_SIZE + msg->hdr.size;

/*

* For non-vring specific requests, like VHOST_USER_SET_MEM_TABLE,

* we just need send it once in the first time. For later such

* request, we just ignore it.

*/

if (vhost_user_one_time_request(msg->hdr.request) && dev->vq_index != 0) {

msg->hdr.flags &= ~VHOST_USER_NEED_REPLY_MASK;

return 0;

}

if (qemu_chr_fe_set_msgfds(chr, fds, fd_num) < 0) {

error_report("Failed to set msg fds.");

return -1;

}

ret = qemu_chr_fe_write_all(chr, (const uint8_t *) msg, size);

if (ret != size) {

error_report("Failed to write msg."

" Wrote %d instead of %d.", ret, size);

return -1;

}

return 0;

}

DPDK UNIX套接口的注册和消息交互

neutron控制Open vSwitch创建一个名称为vhuxxxxxxxx-xx的接口。在OVS内部,此名称保存在netdev结构体的成员name中(netdev->name)。

当创建vhost user接口时,Open vSwitch控制DPDK注册一个新的vhost-user UNIX套接口。套接口的路径为vhost_sock_dir加netdev->name加设备的dev->vhost_id。

通过设置RTE_VHOST_USER_CLIENT标志,OVS可请求创建vhost user套接口的客户端模式。

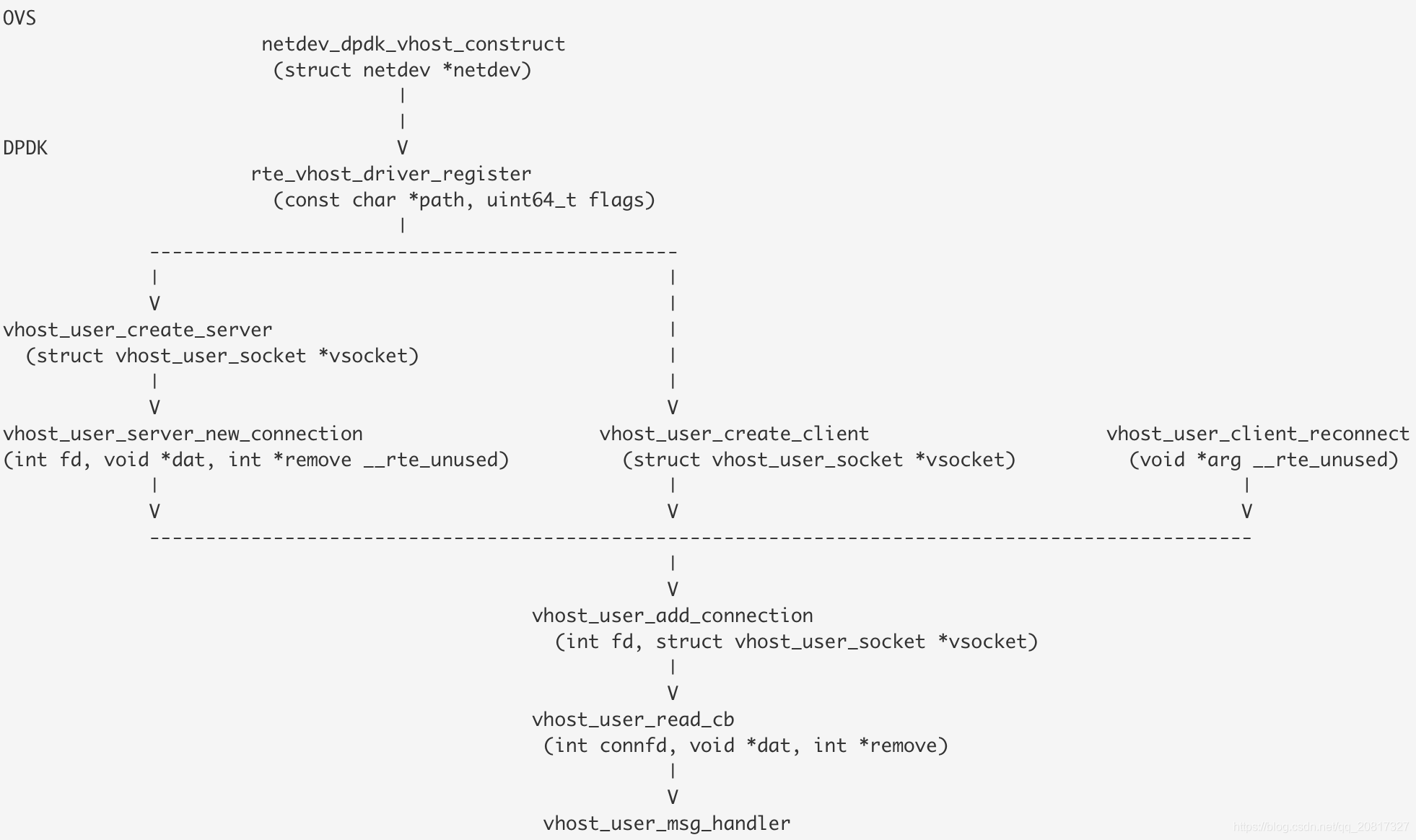

OVS函数netdev_dpdk_vhost_construct调用DPDK的rte_vhost_driver_register函数,其又调用vhost_user_create_server或者vhost_user_create_client函数创建套接口。默认使用前者创建服务端模式的套接口,如果设置了RTE_VHOST_USER_CLIENT标志,创建客户端模式套接口。

相关的函数调用关系如下:

netdev_dpdk_vhost_construct定义在文件openvswitch-2.9.2/lib/netdev-dpdk.c

netdev_dpdk_vhost_construct()

static int

netdev_dpdk_vhost_construct(struct netdev *netdev)

{

struct netdev_dpdk *dev = netdev_dpdk_cast(netdev);

const char *name = netdev->name;

int err;

/* 'name' is appended to 'vhost_sock_dir' and used to create a socket in

* the file system. '/' or '\' would traverse directories, so they're not

* acceptable in 'name'. */

if (strchr(name, '/') || strchr(name, '\\')) {

VLOG_ERR("\"%s\" is not a valid name for a vhost-user port. "

"A valid name must not include '/' or '\\'",

name);

return EINVAL;

}

ovs_mutex_lock(&dpdk_mutex);

/* Take the name of the vhost-user port and append it to the location where

* the socket is to be created, then register the socket.

*/

snprintf(dev->vhost_id, sizeof dev->vhost_id, "%s/%s",

dpdk_get_vhost_sock_dir(), name);

dev->vhost_driver_flags &= ~RTE_VHOST_USER_CLIENT;

err = rte_vhost_driver_register(dev->vhost_id, dev->vhost_driver_flags);

if (err) {

VLOG_ERR("vhost-user socket device setup failure for socket %s\n",

dev->vhost_id);

goto out;

} else {

fatal_signal_add_file_to_unlink(dev->vhost_id);

VLOG_INFO("Socket %s created for vhost-user port %s\n",

dev->vhost_id, name);

}

err = rte_vhost_driver_callback_register(dev->vhost_id,

&virtio_net_device_ops);

if (err) {

VLOG_ERR("rte_vhost_driver_callback_register failed for vhost user "

"port: %s\n", name);

goto out;

}

err = rte_vhost_driver_disable_features(dev->vhost_id,

1ULL << VIRTIO_NET_F_HOST_TSO4

| 1ULL << VIRTIO_NET_F_HOST_TSO6

| 1ULL << VIRTIO_NET_F_CSUM);

if (err) {

VLOG_ERR("rte_vhost_driver_disable_features failed for vhost user "

"port: %s\n", name);

goto out;

}

err = rte_vhost_driver_start(dev->vhost_id);

if (err) {

VLOG_ERR("rte_vhost_driver_start failed for vhost user "

"port: %s\n", name);

goto out;

}

err = vhost_common_construct(netdev);

if (err) {

VLOG_ERR("vhost_common_construct failed for vhost user "

"port: %s\n", name);

}

out:

ovs_mutex_unlock(&dpdk_mutex);

VLOG_WARN_ONCE("dpdkvhostuser ports are considered deprecated; "

"please migrate to dpdkvhostuserclient ports.");

return err;

}

netdev_dpdk_vhost_construct函数调用rte_vhost_driver_register。以下代码均定义在dpdk-18.08/lib/librte_vhost/socket.c

rte_vhost_driver_register()

/*

* Register a new vhost-user socket; here we could act as server

* (the default case), or client (when RTE_VHOST_USER_CLIENT) flag

* is set.

*/

int

rte_vhost_driver_register(const char *path, uint64_t flags)

{

int ret = -1;

struct vhost_user_socket *vsocket;

if (!path)

return -1;

pthread_mutex_lock(&vhost_user.mutex);

if (vhost_user.vsocket_cnt == MAX_VHOST_SOCKET) {

RTE_LOG(ERR, VHOST_CONFIG,

"error: the number of vhost sockets reaches maximum\n");

goto out;

}

vsocket = malloc(sizeof(struct vhost_user_socket));

if (!vsocket)

goto out;

memset(vsocket, 0, sizeof(struct vhost_user_socket));

vsocket->path = strdup(path);

if (vsocket->path == NULL) {

RTE_LOG(ERR, VHOST_CONFIG,

"error: failed to copy socket path string\n");

vhost_user_socket_mem_free(vsocket);

goto out;

}

TAILQ_INIT(&vsocket->conn_list);

ret = pthread_mutex_init(&vsocket->conn_mutex, NULL);

if (ret) {

RTE_LOG(ERR, VHOST_CONFIG,

"error: failed to init connection mutex\n");

goto out_free;

}

vsocket->dequeue_zero_copy = flags & RTE_VHOST_USER_DEQUEUE_ZERO_COPY;

/*

* Set the supported features correctly for the builtin vhost-user

* net driver.

*

* Applications know nothing about features the builtin virtio net

* driver (virtio_net.c) supports, thus it's not possible for them

* to invoke rte_vhost_driver_set_features(). To workaround it, here

* we set it unconditionally. If the application want to implement

* another vhost-user driver (say SCSI), it should call the

* rte_vhost_driver_set_features(), which will overwrite following

* two values.

*/

vsocket->use_builtin_virtio_net = true;

vsocket->supported_features = VIRTIO_NET_SUPPORTED_FEATURES;

vsocket->features = VIRTIO_NET_SUPPORTED_FEATURES;

/* Dequeue zero copy can't assure descriptors returned in order */

if (vsocket->dequeue_zero_copy) {

vsocket->supported_features &= ~(1ULL << VIRTIO_F_IN_ORDER);

vsocket->features &= ~(1ULL << VIRTIO_F_IN_ORDER);

}

if (!(flags & RTE_VHOST_USER_IOMMU_SUPPORT)) {

vsocket->supported_features &= ~(1ULL << VIRTIO_F_IOMMU_PLATFORM);

vsocket->features &= ~(1ULL << VIRTIO_F_IOMMU_PLATFORM);

}

if ((flags & RTE_VHOST_USER_CLIENT) != 0) {

vsocket->reconnect = !(flags & RTE_VHOST_USER_NO_RECONNECT);

if (vsocket->reconnect && reconn_tid == 0) {

if (vhost_user_reconnect_init() != 0)

goto out_mutex;

}

} else {

vsocket->is_server = true;

}

ret = create_unix_socket(vsocket);

if (ret < 0) {

goto out_mutex;

}

vhost_user.vsockets[vhost_user.vsocket_cnt++] = vsocket;

pthread_mutex_unlock(&vhost_user.mutex);

return ret;

out_mutex:

if (pthread_mutex_destroy(&vsocket->conn_mutex)) {

RTE_LOG(ERR, VHOST_CONFIG,

"error: failed to destroy connection mutex\n");

}

out_free:

vhost_user_socket_mem_free(vsocket);

out:

pthread_mutex_unlock(&vhost_user.mutex);

return ret;

}

create_unix_socket()

static int

create_unix_socket(struct vhost_user_socket *vsocket)

{

int fd;

struct sockaddr_un *un = &vsocket->un;

fd = socket(AF_UNIX, SOCK_STREAM, 0);

if (fd < 0)

return -1;

RTE_LOG(INFO, VHOST_CONFIG, "vhost-user %s: socket created, fd: %d\n",

vsocket->is_server ? "server" : "client", fd);

if (!vsocket->is_server && fcntl(fd, F_SETFL, O_NONBLOCK)) {

RTE_LOG(ERR, VHOST_CONFIG,

"vhost-user: can't set nonblocking mode for socket, fd: "

"%d (%s)\n", fd, strerror(errno));

close(fd);

return -1;

}

memset(un, 0, sizeof(*un));

un->sun_family = AF_UNIX;

strncpy(un->sun_path, vsocket->path, sizeof(un->sun_path));

un->sun_path[sizeof(un->sun_path) - 1] = '\0';

vsocket->socket_fd = fd;

return 0;

}

netdev_dpdk_vhost_construct函数调用rte_vhost_driver_start。定义在dpdk-18.08/lib/librte_vhost/socket.c

rte_vhost_driver_start()

int

rte_vhost_driver_start(const char *path)

{

struct vhost_user_socket *vsocket;

static pthread_t fdset_tid;

pthread_mutex_lock(&vhost_user.mutex);

vsocket = find_vhost_user_socket(path);

pthread_mutex_unlock(&vhost_user.mutex);

if (!vsocket)

return -1;

if (fdset_tid == 0) {

/**

* create a pipe which will be waited by poll and notified to

* rebuild the wait list of poll.

*/

if (fdset_pipe_init(&vhost_user.fdset) < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to create pipe for vhost fdset\n");

return -1;

}

int ret = rte_ctrl_thread_create(&fdset_tid,

"vhost-events", NULL, fdset_event_dispatch,

&vhost_user.fdset);

if (ret != 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to create fdset handling thread");

fdset_pipe_uninit(&vhost_user.fdset);

return -1;

}

}

if (vsocket->is_server)

return vhost_user_start_server(vsocket);

else

return vhost_user_start_client(vsocket);

}

vhost_user_create_server调用vhost_user_server_new_connection:

以下的3个函数调用vhost_user_add_connection:

vhost_user_server_new_connection()

static void

vhost_user_server_new_connection(int fd, void *dat, int *remove __rte_unused)

{

struct vhost_user_socket *vsocket = dat;

fd = accept(fd, NULL, NULL);

if (fd < 0)

return;

RTE_LOG(INFO, VHOST_CONFIG, "new vhost user connection is %d\n", fd);

vhost_user_add_connection(fd, vsocket);

}

vhost_user_client_reconnect()

static void *

vhost_user_client_reconnect(void *arg __rte_unused)

{

int ret;

struct vhost_user_reconnect *reconn, *next;

while (1) {

pthread_mutex_lock(&reconn_list.mutex);

/*

* An equal implementation of TAILQ_FOREACH_SAFE,

* which does not exist on all platforms.

*/

for (reconn = TAILQ_FIRST(&reconn_list.head);

reconn != NULL; reconn = next) {

next = TAILQ_NEXT(reconn, next);

ret = vhost_user_connect_nonblock(reconn->fd,

(struct sockaddr *)&reconn->un,

sizeof(reconn->un));

if (ret == -2) {

close(reconn->fd);

RTE_LOG(ERR, VHOST_CONFIG,

"reconnection for fd %d failed\n",

reconn->fd);

goto remove_fd;

}

if (ret == -1)

continue;

RTE_LOG(INFO, VHOST_CONFIG,

"%s: connected\n", reconn->vsocket->path);

vhost_user_add_connection(reconn->fd, reconn->vsocket);

remove_fd:

TAILQ_REMOVE(&reconn_list.head, reconn, next);

free(reconn);

}

pthread_mutex_unlock(&reconn_list.mutex);

sleep(1);

}

return NULL;

}

vhost_user_start_client()

static int

vhost_user_start_client(struct vhost_user_socket *vsocket)

{

int ret;

int fd = vsocket->socket_fd;

const char *path = vsocket->path;

struct vhost_user_reconnect *reconn;

ret = vhost_user_connect_nonblock(fd, (struct sockaddr *)&vsocket->un,

sizeof(vsocket->un));

if (ret == 0) {

vhost_user_add_connection(fd, vsocket);

return 0;

}

RTE_LOG(WARNING, VHOST_CONFIG,

"failed to connect to %s: %s\n",

path, strerror(errno));

if (ret == -2 || !vsocket->reconnect) {

close(fd);

return -1;

}

RTE_LOG(INFO, VHOST_CONFIG, "%s: reconnecting...\n", path);

reconn = malloc(sizeof(*reconn));

if (reconn == NULL) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to allocate memory for reconnect\n");

close(fd);

return -1;

}

reconn->un = vsocket->un;

reconn->fd = fd;

reconn->vsocket = vsocket;

pthread_mutex_lock(&reconn_list.mutex);

TAILQ_INSERT_TAIL(&reconn_list.head, reconn, next);

pthread_mutex_unlock(&reconn_list.mutex);

return 0;

}

vhost_user_connect_nonblock()

static int

vhost_user_connect_nonblock(int fd, struct sockaddr *un, size_t sz)

{

int ret, flags;

ret = connect(fd, un, sz);

if (ret < 0 && errno != EISCONN)

return -1;

flags = fcntl(fd, F_GETFL, 0);

if (flags < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"can't get flags for connfd %d\n", fd);

return -2;

}

if ((flags & O_NONBLOCK) && fcntl(fd, F_SETFL, flags & ~O_NONBLOCK)) {

RTE_LOG(ERR, VHOST_CONFIG,

"can't disable nonblocking on fd %d\n", fd);

return -2;

}

return 0;

}

vhost_user_start_server()

static int

vhost_user_start_server(struct vhost_user_socket *vsocket)

{

int ret;

int fd = vsocket->socket_fd;

const char *path = vsocket->path;

/*

* bind () may fail if the socket file with the same name already

* exists. But the library obviously should not delete the file

* provided by the user, since we can not be sure that it is not

* being used by other applications. Moreover, many applications form

* socket names based on user input, which is prone to errors.

*

* The user must ensure that the socket does not exist before

* registering the vhost driver in server mode.

*/

ret = bind(fd, (struct sockaddr *)&vsocket->un, sizeof(vsocket->un));

if (ret < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to bind to %s: %s; remove it and try again\n",

path, strerror(errno));

goto err;

}

RTE_LOG(INFO, VHOST_CONFIG, "bind to %s\n", path);

ret = listen(fd, MAX_VIRTIO_BACKLOG);

if (ret < 0)

goto err;

ret = fdset_add(&vhost_user.fdset, fd, vhost_user_server_new_connection,

NULL, vsocket);

if (ret < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to add listen fd %d to vhost server fdset\n",

fd);

goto err;

}

return 0;

err:

close(fd);

return -1;

}

vhost_user_add_connection()

static void

vhost_user_add_connection(int fd, struct vhost_user_socket *vsocket)

{

int vid;

size_t size;

struct vhost_user_connection *conn;

int ret;

if (vsocket == NULL)

return;

conn = malloc(sizeof(*conn));

if (conn == NULL) {

close(fd);

return;

}

vid = vhost_new_device();

if (vid == -1) {

goto err;

}

size = strnlen(vsocket->path, PATH_MAX);

vhost_set_ifname(vid, vsocket->path, size);

vhost_set_builtin_virtio_net(vid, vsocket->use_builtin_virtio_net);

vhost_attach_vdpa_device(vid, vsocket->vdpa_dev_id);

if (vsocket->dequeue_zero_copy)

vhost_enable_dequeue_zero_copy(vid);

RTE_LOG(INFO, VHOST_CONFIG, "new device, handle is %d\n", vid);

if (vsocket->notify_ops->new_connection) {

ret = vsocket->notify_ops->new_connection(vid);

if (ret < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to add vhost user connection with fd %d\n",

fd);

goto err;

}

}

conn->connfd = fd;

conn->vsocket = vsocket;

conn->vid = vid;

ret = fdset_add(&vhost_user.fdset, fd, vhost_user_read_cb,

NULL, conn);

if (ret < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to add fd %d into vhost server fdset\n",

fd);

if (vsocket->notify_ops->destroy_connection)

vsocket->notify_ops->destroy_connection(conn->vid);

goto err;

}

pthread_mutex_lock(&vsocket->conn_mutex);

TAILQ_INSERT_TAIL(&vsocket->conn_list, conn, next);

pthread_mutex_unlock(&vsocket->conn_mutex);

fdset_pipe_notify(&vhost_user.fdset);

return;

err:

free(conn);

close(fd);

}

vhost_user_add_connection接下来执行vhost_user_read_cb函数,其又调用vhost_user_msg_handler函数处理接收到的消息。

vhost_user_read_cb()

static void

vhost_user_read_cb(int connfd, void *dat, int *remove)

{

struct vhost_user_connection *conn = dat;

struct vhost_user_socket *vsocket = conn->vsocket;

int ret;

ret = vhost_user_msg_handler(conn->vid, connfd);

if (ret < 0) {

close(connfd);

*remove = 1;

vhost_destroy_device(conn->vid);

if (vsocket->notify_ops->destroy_connection)

vsocket->notify_ops->destroy_connection(conn->vid);

pthread_mutex_lock(&vsocket->conn_mutex);

TAILQ_REMOVE(&vsocket->conn_list, conn, next);

pthread_mutex_unlock(&vsocket->conn_mutex);

free(conn);

if (vsocket->reconnect) {

create_unix_socket(vsocket);

vhost_user_start_client(vsocket);

}

}

}

dpdk-18.08/lib/librte_vhost/vhost_user.c

vhost_user_msg_handler()

int

vhost_user_msg_handler(int vid, int fd)

{

struct virtio_net *dev;

struct VhostUserMsg msg;

struct rte_vdpa_device *vdpa_dev;

int did = -1;

int ret;

int unlock_required = 0;

uint32_t skip_master = 0;

dev = get_device(vid);

if (dev == NULL)

return -1;

if (!dev->notify_ops) {

dev->notify_ops = vhost_driver_callback_get(dev->ifname);

if (!dev->notify_ops) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to get callback ops for driver %s\n",

dev->ifname);

return -1;

}

}

ret = read_vhost_message(fd, &msg);

if (ret <= 0 || msg.request.master >= VHOST_USER_MAX) {

if (ret < 0)

RTE_LOG(ERR, VHOST_CONFIG,

"vhost read message failed\n");

else if (ret == 0)

RTE_LOG(INFO, VHOST_CONFIG,

"vhost peer closed\n");

else

RTE_LOG(ERR, VHOST_CONFIG,

"vhost read incorrect message\n");

return -1;

}

ret = 0;

if (msg.request.master != VHOST_USER_IOTLB_MSG)

RTE_LOG(INFO, VHOST_CONFIG, "read message %s\n",

vhost_message_str[msg.request.master]);

else

RTE_LOG(DEBUG, VHOST_CONFIG, "read message %s\n",

vhost_message_str[msg.request.master]);

ret = vhost_user_check_and_alloc_queue_pair(dev, &msg);

if (ret < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to alloc queue\n");

return -1;

}

/*

* Note: we don't lock all queues on VHOST_USER_GET_VRING_BASE

* and VHOST_USER_RESET_OWNER, since it is sent when virtio stops

* and device is destroyed. destroy_device waits for queues to be

* inactive, so it is safe. Otherwise taking the access_lock

* would cause a dead lock.

*/

switch (msg.request.master) {

case VHOST_USER_SET_FEATURES:

case VHOST_USER_SET_PROTOCOL_FEATURES:

case VHOST_USER_SET_OWNER:

case VHOST_USER_SET_MEM_TABLE:

case VHOST_USER_SET_LOG_BASE:

case VHOST_USER_SET_LOG_FD:

case VHOST_USER_SET_VRING_NUM:

case VHOST_USER_SET_VRING_ADDR:

case VHOST_USER_SET_VRING_BASE:

case VHOST_USER_SET_VRING_KICK:

case VHOST_USER_SET_VRING_CALL:

case VHOST_USER_SET_VRING_ERR:

case VHOST_USER_SET_VRING_ENABLE:

case VHOST_USER_SEND_RARP:

case VHOST_USER_NET_SET_MTU:

case VHOST_USER_SET_SLAVE_REQ_FD:

vhost_user_lock_all_queue_pairs(dev);

unlock_required = 1;

break;

default:

break;

}

if (dev->extern_ops.pre_msg_handle) {

uint32_t need_reply;

ret = (*dev->extern_ops.pre_msg_handle)(dev->vid,

(void *)&msg, &need_reply, &skip_master);

if (ret < 0)

goto skip_to_reply;

if (need_reply)

send_vhost_reply(fd, &msg);

if (skip_master)

goto skip_to_post_handle;

}

switch (msg.request.master) {

case VHOST_USER_GET_FEATURES:

msg.payload.u64 = vhost_user_get_features(dev);

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_FEATURES:

ret = vhost_user_set_features(dev, msg.payload.u64);

break;

case VHOST_USER_GET_PROTOCOL_FEATURES:

vhost_user_get_protocol_features(dev, &msg);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_PROTOCOL_FEATURES:

ret = vhost_user_set_protocol_features(dev, msg.payload.u64);

break;

case VHOST_USER_SET_OWNER:

ret = vhost_user_set_owner();

break;

case VHOST_USER_RESET_OWNER:

ret = vhost_user_reset_owner(dev);

break;

case VHOST_USER_SET_MEM_TABLE:

ret = vhost_user_set_mem_table(&dev, &msg);

break;

case VHOST_USER_SET_LOG_BASE:

ret = vhost_user_set_log_base(dev, &msg);

if (ret)

goto skip_to_reply;

/* it needs a reply */

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_LOG_FD:

close(msg.fds[0]);

RTE_LOG(INFO, VHOST_CONFIG, "not implemented.\n");

break;

case VHOST_USER_SET_VRING_NUM:

ret = vhost_user_set_vring_num(dev, &msg);

break;

case VHOST_USER_SET_VRING_ADDR:

ret = vhost_user_set_vring_addr(&dev, &msg);

break;

case VHOST_USER_SET_VRING_BASE:

ret = vhost_user_set_vring_base(dev, &msg);

break;

case VHOST_USER_GET_VRING_BASE:

ret = vhost_user_get_vring_base(dev, &msg);

if (ret)

goto skip_to_reply;

msg.size = sizeof(msg.payload.state);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_VRING_KICK:

ret = vhost_user_set_vring_kick(&dev, &msg);

break;

case VHOST_USER_SET_VRING_CALL:

vhost_user_set_vring_call(dev, &msg);

break;

case VHOST_USER_SET_VRING_ERR:

if (!(msg.payload.u64 & VHOST_USER_VRING_NOFD_MASK))

close(msg.fds[0]);

RTE_LOG(INFO, VHOST_CONFIG, "not implemented\n");

break;

case VHOST_USER_GET_QUEUE_NUM:

msg.payload.u64 = (uint64_t)vhost_user_get_queue_num(dev);

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_VRING_ENABLE:

ret = vhost_user_set_vring_enable(dev, &msg);

break;

case VHOST_USER_SEND_RARP:

ret = vhost_user_send_rarp(dev, &msg);

break;

case VHOST_USER_NET_SET_MTU:

ret = vhost_user_net_set_mtu(dev, &msg);

break;

case VHOST_USER_SET_SLAVE_REQ_FD:

ret = vhost_user_set_req_fd(dev, &msg);

break;

case VHOST_USER_IOTLB_MSG:

ret = vhost_user_iotlb_msg(&dev, &msg);

break;

default:

ret = -1;

break;

}

skip_to_post_handle:

if (!ret && dev->extern_ops.post_msg_handle) {

uint32_t need_reply;

ret = (*dev->extern_ops.post_msg_handle)(

dev->vid, (void *)&msg, &need_reply);

if (ret < 0)

goto skip_to_reply;

if (need_reply)

send_vhost_reply(fd, &msg);

}

skip_to_reply:

if (unlock_required)

vhost_user_unlock_all_queue_pairs(dev);

if (msg.flags & VHOST_USER_NEED_REPLY) {

msg.payload.u64 = !!ret;

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

} else if (ret) {

RTE_LOG(ERR, VHOST_CONFIG,

"vhost message handling failed.\n");

return -1;

}

if (!(dev->flags & VIRTIO_DEV_RUNNING) && virtio_is_ready(dev)) {

dev->flags |= VIRTIO_DEV_READY;

if (!(dev->flags & VIRTIO_DEV_RUNNING)) {

if (dev->dequeue_zero_copy) {

RTE_LOG(INFO, VHOST_CONFIG,

"dequeue zero copy is enabled\n");

}

if (dev->notify_ops->new_device(dev->vid) == 0)

dev->flags |= VIRTIO_DEV_RUNNING;

}

}

did = dev->vdpa_dev_id;

vdpa_dev = rte_vdpa_get_device(did);

if (vdpa_dev && virtio_is_ready(dev) &&

!(dev->flags & VIRTIO_DEV_VDPA_CONFIGURED) &&

msg.request.master == VHOST_USER_SET_VRING_ENABLE) {

if (vdpa_dev->ops->dev_conf)

vdpa_dev->ops->dev_conf(dev->vid);

dev->flags |= VIRTIO_DEV_VDPA_CONFIGURED;

if (vhost_user_host_notifier_ctrl(dev->vid, true) != 0) {

RTE_LOG(INFO, VHOST_CONFIG,

"(%d) software relay is used for vDPA, performance may be low.\n",

dev->vid);

}

}

return 0;

}

virtio告知DPDK共享内存的virtio queues内存地址

DPDK使用函数vhost_user_set_vring_addr将virtio的描述符、已用环和可用环地址转化为DPDK自身的地址空间。

dpdk-18.08/lib/librte_vhost/vhost_user.c

vhost_user_set_vring_addr()

/*

* The virtio device sends us the desc, used and avail ring addresses.

* This function then converts these to our address space.

*/

static int

vhost_user_set_vring_addr(struct virtio_net **pdev, VhostUserMsg *msg)

{

struct vhost_virtqueue *vq;

struct vhost_vring_addr *addr = &msg->payload.addr;

struct virtio_net *dev = *pdev;

if (dev->mem == NULL)

return -1;

/* addr->index refers to the queue index. The txq 1, rxq is 0. */

vq = dev->virtqueue[msg->payload.addr.index];

/*

* Rings addresses should not be interpreted as long as the ring is not

* started and enabled

*/

memcpy(&vq->ring_addrs, addr, sizeof(*addr));

vring_invalidate(dev, vq);

if (vq->enabled && (dev->features &

(1ULL << VHOST_USER_F_PROTOCOL_FEATURES))) {

dev = translate_ring_addresses(dev, msg->payload.addr.index);

if (!dev)

return -1;

*pdev = dev;

}

return 0;

}

只有在通过控制通道vhu套接口接收到VHOST_USER_SET_VRING_ADDR类型消息时,设置内存地址。

dpdk-18.08/lib/librte_vhost/vhost_user.c

vhost_user_msg_handler()

int

vhost_user_msg_handler(int vid, int fd)

{

struct virtio_net *dev;

struct VhostUserMsg msg;

struct rte_vdpa_device *vdpa_dev;

int did = -1;

int ret;

int unlock_required = 0;

uint32_t skip_master = 0;

dev = get_device(vid);

if (dev == NULL)

return -1;

if (!dev->notify_ops) {

dev->notify_ops = vhost_driver_callback_get(dev->ifname);

if (!dev->notify_ops) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to get callback ops for driver %s\n",

dev->ifname);

return -1;

}

}

ret = read_vhost_message(fd, &msg);

if (ret <= 0 || msg.request.master >= VHOST_USER_MAX) {

if (ret < 0)

RTE_LOG(ERR, VHOST_CONFIG,

"vhost read message failed\n");

else if (ret == 0)

RTE_LOG(INFO, VHOST_CONFIG,

"vhost peer closed\n");

else

RTE_LOG(ERR, VHOST_CONFIG,

"vhost read incorrect message\n");

return -1;

}

ret = 0;

if (msg.request.master != VHOST_USER_IOTLB_MSG)

RTE_LOG(INFO, VHOST_CONFIG, "read message %s\n",

vhost_message_str[msg.request.master]);

else

RTE_LOG(DEBUG, VHOST_CONFIG, "read message %s\n",

vhost_message_str[msg.request.master]);

ret = vhost_user_check_and_alloc_queue_pair(dev, &msg);

if (ret < 0) {

RTE_LOG(ERR, VHOST_CONFIG,

"failed to alloc queue\n");

return -1;

}

/*

* Note: we don't lock all queues on VHOST_USER_GET_VRING_BASE

* and VHOST_USER_RESET_OWNER, since it is sent when virtio stops

* and device is destroyed. destroy_device waits for queues to be

* inactive, so it is safe. Otherwise taking the access_lock

* would cause a dead lock.

*/

switch (msg.request.master) {

case VHOST_USER_SET_FEATURES:

case VHOST_USER_SET_PROTOCOL_FEATURES:

case VHOST_USER_SET_OWNER:

case VHOST_USER_SET_MEM_TABLE:

case VHOST_USER_SET_LOG_BASE:

case VHOST_USER_SET_LOG_FD:

case VHOST_USER_SET_VRING_NUM:

case VHOST_USER_SET_VRING_ADDR:

case VHOST_USER_SET_VRING_BASE:

case VHOST_USER_SET_VRING_KICK:

case VHOST_USER_SET_VRING_CALL:

case VHOST_USER_SET_VRING_ERR:

case VHOST_USER_SET_VRING_ENABLE:

case VHOST_USER_SEND_RARP:

case VHOST_USER_NET_SET_MTU:

case VHOST_USER_SET_SLAVE_REQ_FD:

vhost_user_lock_all_queue_pairs(dev);

unlock_required = 1;

break;

default:

break;

}

if (dev->extern_ops.pre_msg_handle) {

uint32_t need_reply;

ret = (*dev->extern_ops.pre_msg_handle)(dev->vid,

(void *)&msg, &need_reply, &skip_master);

if (ret < 0)

goto skip_to_reply;

if (need_reply)

send_vhost_reply(fd, &msg);

if (skip_master)

goto skip_to_post_handle;

}

switch (msg.request.master) {

case VHOST_USER_GET_FEATURES:

msg.payload.u64 = vhost_user_get_features(dev);

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_FEATURES:

ret = vhost_user_set_features(dev, msg.payload.u64);

break;

case VHOST_USER_GET_PROTOCOL_FEATURES:

vhost_user_get_protocol_features(dev, &msg);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_PROTOCOL_FEATURES:

ret = vhost_user_set_protocol_features(dev, msg.payload.u64);

break;

case VHOST_USER_SET_OWNER:

ret = vhost_user_set_owner();

break;

case VHOST_USER_RESET_OWNER:

ret = vhost_user_reset_owner(dev);

break;

case VHOST_USER_SET_MEM_TABLE:

ret = vhost_user_set_mem_table(&dev, &msg);

break;

case VHOST_USER_SET_LOG_BASE:

ret = vhost_user_set_log_base(dev, &msg);

if (ret)

goto skip_to_reply;

/* it needs a reply */

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_LOG_FD:

close(msg.fds[0]);

RTE_LOG(INFO, VHOST_CONFIG, "not implemented.\n");

break;

case VHOST_USER_SET_VRING_NUM:

ret = vhost_user_set_vring_num(dev, &msg);

break;

case VHOST_USER_SET_VRING_ADDR:

ret = vhost_user_set_vring_addr(&dev, &msg);

break;

case VHOST_USER_SET_VRING_BASE:

ret = vhost_user_set_vring_base(dev, &msg);

break;

case VHOST_USER_GET_VRING_BASE:

ret = vhost_user_get_vring_base(dev, &msg);

if (ret)

goto skip_to_reply;

msg.size = sizeof(msg.payload.state);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_VRING_KICK:

ret = vhost_user_set_vring_kick(&dev, &msg);

break;

case VHOST_USER_SET_VRING_CALL:

vhost_user_set_vring_call(dev, &msg);

break;

case VHOST_USER_SET_VRING_ERR:

if (!(msg.payload.u64 & VHOST_USER_VRING_NOFD_MASK))

close(msg.fds[0]);

RTE_LOG(INFO, VHOST_CONFIG, "not implemented\n");

break;

case VHOST_USER_GET_QUEUE_NUM:

msg.payload.u64 = (uint64_t)vhost_user_get_queue_num(dev);

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

break;

case VHOST_USER_SET_VRING_ENABLE:

ret = vhost_user_set_vring_enable(dev, &msg);

break;

case VHOST_USER_SEND_RARP:

ret = vhost_user_send_rarp(dev, &msg);

break;

case VHOST_USER_NET_SET_MTU:

ret = vhost_user_net_set_mtu(dev, &msg);

break;

case VHOST_USER_SET_SLAVE_REQ_FD:

ret = vhost_user_set_req_fd(dev, &msg);

break;

case VHOST_USER_IOTLB_MSG:

ret = vhost_user_iotlb_msg(&dev, &msg);

break;

default:

ret = -1;

break;

}

skip_to_post_handle:

if (!ret && dev->extern_ops.post_msg_handle) {

uint32_t need_reply;

ret = (*dev->extern_ops.post_msg_handle)(

dev->vid, (void *)&msg, &need_reply);

if (ret < 0)

goto skip_to_reply;

if (need_reply)

send_vhost_reply(fd, &msg);

}

skip_to_reply:

if (unlock_required)

vhost_user_unlock_all_queue_pairs(dev);

if (msg.flags & VHOST_USER_NEED_REPLY) {

msg.payload.u64 = !!ret;

msg.size = sizeof(msg.payload.u64);

send_vhost_reply(fd, &msg);

} else if (ret) {

RTE_LOG(ERR, VHOST_CONFIG,

"vhost message handling failed.\n");

return -1;

}

if (!(dev->flags & VIRTIO_DEV_RUNNING) && virtio_is_ready(dev)) {

dev->flags |= VIRTIO_DEV_READY;

if (!(dev->flags & VIRTIO_DEV_RUNNING)) {

if (dev->dequeue_zero_copy) {

RTE_LOG(INFO, VHOST_CONFIG,

"dequeue zero copy is enabled\n");

}

if (dev->notify_ops->new_device(dev->vid) == 0)

dev->flags |= VIRTIO_DEV_RUNNING;

}

}

did = dev->vdpa_dev_id;

vdpa_dev = rte_vdpa_get_device(did);

if (vdpa_dev && virtio_is_ready(dev) &&

!(dev->flags & VIRTIO_DEV_VDPA_CONFIGURED) &&

msg.request.master == VHOST_USER_SET_VRING_ENABLE) {

if (vdpa_dev->ops->dev_conf)

vdpa_dev->ops->dev_conf(dev->vid);

dev->flags |= VIRTIO_DEV_VDPA_CONFIGURED;

if (vhost_user_host_notifier_ctrl(dev->vid, true) != 0) {

RTE_LOG(INFO, VHOST_CONFIG,

"(%d) software relay is used for vDPA, performance may be low.\n",

dev->vid);

}

}

return 0;

}

实际上,QEMU中有一个与DPDK的消息处理函数类型的处理函数。

qemu-3.0.0/contrib/libvhost-user/libvhost-user.c

vu_process_message()

static bool

vu_process_message(VuDev *dev, VhostUserMsg *vmsg)

{

int do_reply = 0;

/* Print out generic part of the request. */

DPRINT("================ Vhost user message ================\n");

DPRINT("Request: %s (%d)\n", vu_request_to_string(vmsg->request),

vmsg->request);

DPRINT("Flags: 0x%x\n", vmsg->flags);

DPRINT("Size: %d\n", vmsg->size);

if (vmsg->fd_num) {

int i;

DPRINT("Fds:");

for (i = 0; i < vmsg->fd_num; i++) {

DPRINT(" %d", vmsg->fds[i]);

}

DPRINT("\n");

}

if (dev->iface->process_msg &&

dev->iface->process_msg(dev, vmsg, &do_reply)) {

return do_reply;

}

switch (vmsg->request) {

case VHOST_USER_GET_FEATURES:

return vu_get_features_exec(dev, vmsg);

case VHOST_USER_SET_FEATURES:

return vu_set_features_exec(dev, vmsg);

case VHOST_USER_GET_PROTOCOL_FEATURES:

return vu_get_protocol_features_exec(dev, vmsg);

case VHOST_USER_SET_PROTOCOL_FEATURES:

return vu_set_protocol_features_exec(dev, vmsg);

case VHOST_USER_SET_OWNER:

return vu_set_owner_exec(dev, vmsg);

case VHOST_USER_RESET_OWNER:

return vu_reset_device_exec(dev, vmsg);

case VHOST_USER_SET_MEM_TABLE:

return vu_set_mem_table_exec(dev, vmsg);

case VHOST_USER_SET_LOG_BASE:

return vu_set_log_base_exec(dev, vmsg);

case VHOST_USER_SET_LOG_FD:

return vu_set_log_fd_exec(dev, vmsg);

case VHOST_USER_SET_VRING_NUM:

return vu_set_vring_num_exec(dev, vmsg);

case VHOST_USER_SET_VRING_ADDR:

return vu_set_vring_addr_exec(dev, vmsg);

case VHOST_USER_SET_VRING_BASE:

return vu_set_vring_base_exec(dev, vmsg);

case VHOST_USER_GET_VRING_BASE:

return vu_get_vring_base_exec(dev, vmsg);

case VHOST_USER_SET_VRING_KICK:

return vu_set_vring_kick_exec(dev, vmsg);

case VHOST_USER_SET_VRING_CALL:

return vu_set_vring_call_exec(dev, vmsg);

case VHOST_USER_SET_VRING_ERR:

return vu_set_vring_err_exec(dev, vmsg);

case VHOST_USER_GET_QUEUE_NUM:

return vu_get_queue_num_exec(dev, vmsg);

case VHOST_USER_SET_VRING_ENABLE:

return vu_set_vring_enable_exec(dev, vmsg);

case VHOST_USER_SET_SLAVE_REQ_FD:

return vu_set_slave_req_fd(dev, vmsg);

case VHOST_USER_GET_CONFIG:

return vu_get_config(dev, vmsg);

case VHOST_USER_SET_CONFIG:

return vu_set_config(dev, vmsg);

case VHOST_USER_NONE:

break;

case VHOST_USER_POSTCOPY_ADVISE:

return vu_set_postcopy_advise(dev, vmsg);

case VHOST_USER_POSTCOPY_LISTEN:

return vu_set_postcopy_listen(dev, vmsg);

case VHOST_USER_POSTCOPY_END:

return vu_set_postcopy_end(dev, vmsg);

default:

vmsg_close_fds(vmsg);

vu_panic(dev, "Unhandled request: %d", vmsg->request);

}

return false;

}

显然,QEMU中需要有函数通过UNIX套接口发送内存地址信息到DPDK中。

qemu-3.0.0/hw/virtio/vhost-user.c

vhost_user_set_vring_addr()

static int vhost_user_set_vring_addr(struct vhost_dev *dev,

struct vhost_vring_addr *addr)

{

VhostUserMsg msg = {

.hdr.request = VHOST_USER_SET_VRING_ADDR,

.hdr.flags = VHOST_USER_VERSION,

.payload.addr = *addr,

.hdr.size = sizeof(msg.payload.addr),

};

if (vhost_user_write(dev, &msg, NULL, 0) < 0) {

return -1;

}

return 0;

}

OVS DPDK发送数据包到客户机与发送丢包

OVS DPDK中向客户机发送数据包的函数为__netdev_dpdk_vhost_send,位于文件openvswitch-2.9.2/lib/netdev-dpdk.c。

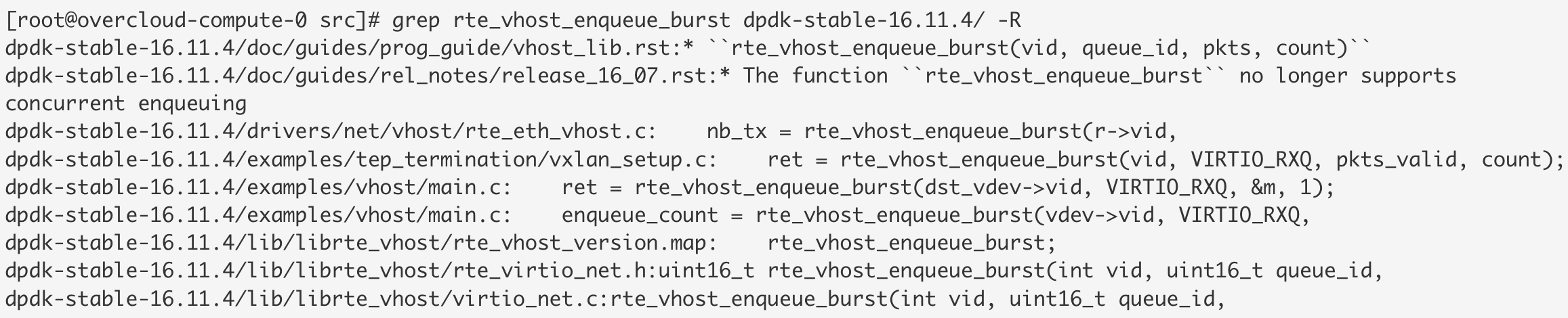

rte_vhost_enqueue_burst()

OVS发送程序,在空间用完后,仍会尝试发送VHOST_ENQ_RETRY_NUM (默认8)次。如果在第一次尝试发送中,没有任何数据包发送成功(无数据包写入共享内存的环中),或者超过了VHOST_ENQ_RETRY_NUM宏限定的次数,剩余的数据包将被丢弃(批量发送最大可由32个数据包组成)。

uint16_t

rte_vhost_enqueue_burst(int vid, uint16_t queue_id,

struct rte_mbuf **pkts, uint16_t count)

{

struct virtio_net *dev = get_device(vid);

if (!dev)

return 0;

if (unlikely(!(dev->flags & VIRTIO_DEV_BUILTIN_VIRTIO_NET))) {

RTE_LOG(ERR, VHOST_DATA,

"(%d) %s: built-in vhost net backend is disabled.\n",

dev->vid, __func__);

return 0;

}

return virtio_dev_rx(dev, queue_id, pkts, count);

}

客户机接收中断处理

当OVS DPDK将新的数据包填入virtio环中时,有以下两种情形:

- 客户机没有在轮询其队列,需要告知其新数据包的到达;

- 客户机正在轮询队列,不需要告知新数据包的到达。

如果客户机使用Linux内核网络协议栈,内核中负责接收报文的NAPI机制混合使用中断和轮询模式。客户机OS开始工作在中断模式,一直到第一个中断进来。此时,CPU快速响应中断,调度内核软中断ksoftirqd线程处理,同时禁止后续中断。

ksoftirqd运行时,尝试处理尽可能多的数据包,但是不能超出netdev_budget限定的数量。如果队列中还有更多的数据包,ksoftirqd线程将重新调度自身,继续处理数据包,直到没有可用的数据包为止。此过程中一直是轮询处理,中断处于关闭状态。处理完数据包之后,ksoftirqd线程停止轮询,重新打开中断,等待下一个数据包到来的中断发生。

当客户机轮询时,CPU的caches高速缓存利用率非常高,避免了额外的延时。宿主机和客户机中合适的进程在运行,进一步降低了延时。另外的,宿主机发送中断IRQ到客户机时,需要对UNIX套接口写操作(系统调用),非常耗时,增加了额外的延时和开销。

作为NFV应用的一部分,客户机中运行DPDK的优势在于其PMD驱动处理流量的方式。PMD驱动工作在轮询模式,关闭了系统中断,OVS DPDK不再需要给客户机发送中断通知。OVS DPDK节省了写UNIX套接口的操作,不在需要执行内核系统调用。OVS DPDK得以一直运行在用户空间,客户机也可以省去处理由控制通道而来的中断,快速运行。

如果没有设置VRING_AVAIL_F_NO_INTERRUPT标志,表明客户机可以接收中断。到客户机的中断通过callfd和操作系统的eventfd组件实现。

客户机的OS可以启用或禁用中断。当客户机禁用virtio接口的中断时,virtio-net驱动通过宏VRING_AVAIL_F_NO_INTERRUPT实现。此宏在DPDK和QEMU中都有定义:

[root@overcloud-0 SOURCES]# grep VRING_AVAIL_F_NO_INTERRUPT -R | grep def

dpdk-18.08/drivers/net/virtio/virtio_ring.h:#define VRING_AVAIL_F_NO_INTERRUPT 1

dpdk-18.08/drivers/crypto/virtio/virtio_ring.h:#define VRING_AVAIL_F_NO_INTERRUPT 1

[root@overcloud-0 qemu]# grep AVAIL_F_NO_INTERRUPT -R -i | grep def

qemu-3.0.0/include/standard-headers/linux/virtio_ring.h:#define VRING_AVAIL_F_NO_INTERRUPT 1

qemu-3.0.0/roms/seabios/src/hw/virtio-ring.h:#define VRING_AVAIL_F_NO_INTERRUPT 1

qemu-3.0.0/roms/ipxe/src/include/ipxe/virtio-ring.h:#define VRING_AVAIL_F_NO_INTERRUPT 1

qemu-3.0.0/roms/seabios-hppa/src/hw/virtio-ring.h:#define VRING_AVAIL_F_NO_INTERRUPT 1

qemu-3.0.0/roms/SLOF/lib/libvirtio/virtio.h:#define VRING_AVAIL_F_NO_INTERRUPT 1

一旦vq->avail->flags中的VRING_AVAIL_F_NO_INTERRUPT标志位设置,指示DPDK不要发送中断到客户机。dpdk-18.08/lib/librte_vhost/vhost.h

vhost_vring_call_split()

static __rte_always_inline void

vhost_vring_call_split(struct virtio_net *dev, struct vhost_virtqueue *vq)

{

/* Flush used->idx update before we read avail->flags. */

rte_smp_mb();

/* Don't kick guest if we don't reach index specified by guest. */

if (dev->features & (1ULL << VIRTIO_RING_F_EVENT_IDX)) {

uint16_t old = vq->signalled_used;

uint16_t new = vq->last_used_idx;

VHOST_LOG_DEBUG(VHOST_DATA, "%s: used_event_idx=%d, old=%d, new=%d\n",

__func__,

vhost_used_event(vq),

old, new);

if (vhost_need_event(vhost_used_event(vq), new, old)

&& (vq->callfd >= 0)) {

vq->signalled_used = vq->last_used_idx;

eventfd_write(vq->callfd, (eventfd_t) 1);

}

} else {

/* Kick the guest if necessary. */

if (!(vq->avail->flags & VRING_AVAIL_F_NO_INTERRUPT)

&& (vq->callfd >= 0))

eventfd_write(vq->callfd, (eventfd_t)1);

}

}

如前所述,PMD驱动不需要执行写UNIX套接口的系统调用了。

OVS DPDK发送数据包到客户机-代码详情

__netdev_dpdk_vhost_send()

static void

__netdev_dpdk_vhost_send(struct netdev *netdev, int qid,

struct dp_packet **pkts, int cnt)

{

struct netdev_dpdk *dev = netdev_dpdk_cast(netdev);

struct rte_mbuf **cur_pkts = (struct rte_mbuf **) pkts;

unsigned int total_pkts = cnt;

unsigned int dropped = 0;

int i, retries = 0;

int vid = netdev_dpdk_get_vid(dev);

qid = dev->tx_q[qid % netdev->n_txq].map;

if (OVS_UNLIKELY(vid < 0 || !dev->vhost_reconfigured || qid < 0

|| !(dev->flags & NETDEV_UP))) {

rte_spinlock_lock(&dev->stats_lock);

dev->stats.tx_dropped+= cnt;

rte_spinlock_unlock(&dev->stats_lock);

goto out;

}

rte_spinlock_lock(&dev->tx_q[qid].tx_lock);

cnt = netdev_dpdk_filter_packet_len(dev, cur_pkts, cnt);

/* Check has QoS has been configured for the netdev */

cnt = netdev_dpdk_qos_run(dev, cur_pkts, cnt, true);

dropped = total_pkts - cnt;

do {

int vhost_qid = qid * VIRTIO_QNUM + VIRTIO_RXQ;

unsigned int tx_pkts;

tx_pkts = rte_vhost_enqueue_burst(vid, vhost_qid, cur_pkts, cnt);

if (OVS_LIKELY(tx_pkts)) {

/* Packets have been sent.*/

cnt -= tx_pkts;

/* Prepare for possible retry.*/

cur_pkts = &cur_pkts[tx_pkts];

} else {

/* No packets sent - do not retry.*/

break;

}

} while (cnt && (retries++ <= VHOST_ENQ_RETRY_NUM));

rte_spinlock_unlock(&dev->tx_q[qid].tx_lock);

rte_spinlock_lock(&dev->stats_lock);

netdev_dpdk_vhost_update_tx_counters(&dev->stats, pkts, total_pkts,

cnt + dropped);

rte_spinlock_unlock(&dev->stats_lock);

out:

for (i = 0; i < total_pkts - dropped; i++) {

dp_packet_delete(pkts[i]);

}

}

rte_vhost_enqueue_burst函数来自于DPDK的vhost库。

dpdk-18.08/lib/librte_vhost/rte_vhost.h

rte_vhost_enqueue_burst()

/**

* This function adds buffers to the virtio devices RX virtqueue. Buffers can

* be received from the physical port or from another virtual device. A packet

* count is returned to indicate the number of packets that were successfully

* added to the RX queue.

* @param vid

* vhost device ID

* @param queue_id

* virtio queue index in mq case

* @param pkts

* array to contain packets to be enqueued

* @param count

* packets num to be enqueued

* @return

* num of packets enqueued

*/

uint16_t rte_vhost_enqueue_burst(int vid, uint16_t queue_id,

struct rte_mbuf **pkts, uint16_t count);

dpdk-18.08/lib/librte_vhost/virtio_net.c

uint16_t

rte_vhost_enqueue_burst(int vid, uint16_t queue_id,

struct rte_mbuf **pkts, uint16_t count)

{

struct virtio_net *dev = get_device(vid);

if (!dev)

return 0;

if (unlikely(!(dev->flags & VIRTIO_DEV_BUILTIN_VIRTIO_NET))) {

RTE_LOG(ERR, VHOST_DATA,

"(%d) %s: built-in vhost net backend is disabled.\n",

dev->vid, __func__);

return 0;

}

return virtio_dev_rx(dev, queue_id, pkts, count);

}

virtio_dev_rx_packed函数和virtio_dev_rx_split函数都将数据包发送到客户机,并根据设置决定是否发送中断通知(write系统调用)。

dpdk-18.08/lib/librte_vhost/virtio_net.c

virtio_dev_rx()

static __rte_always_inline uint32_t

virtio_dev_rx(struct virtio_net *dev, uint16_t queue_id,

struct rte_mbuf **pkts, uint32_t count)

{

struct vhost_virtqueue *vq;

uint32_t nb_tx = 0;

VHOST_LOG_DEBUG(VHOST_DATA, "(%d) %s\n", dev->vid, __func__);

if (unlikely(!is_valid_virt_queue_idx(queue_id, 0, dev->nr_vring))) {

RTE_LOG(ERR, VHOST_DATA, "(%d) %s: invalid virtqueue idx %d.\n",

dev->vid, __func__, queue_id);

return 0;

}

vq = dev->virtqueue[queue_id];

rte_spinlock_lock(&vq->access_lock);

if (unlikely(vq->enabled == 0))

goto out_access_unlock;

if (dev->features & (1ULL << VIRTIO_F_IOMMU_PLATFORM))

vhost_user_iotlb_rd_lock(vq);

if (unlikely(vq->access_ok == 0))

if (unlikely(vring_translate(dev, vq) < 0))

goto out;

count = RTE_MIN((uint32_t)MAX_PKT_BURST, count);

if (count == 0)

goto out;

if (vq_is_packed(dev))

nb_tx = virtio_dev_rx_packed(dev, vq, pkts, count);

else

nb_tx = virtio_dev_rx_split(dev, vq, pkts, count);

out:

if (dev->features & (1ULL << VIRTIO_F_IOMMU_PLATFORM))

vhost_user_iotlb_rd_unlock(vq);

out_access_unlock:

rte_spinlock_unlock(&vq->access_lock);

return nb_tx;

}

在virtio_dev_rx函数中:

count = RTE_MIN((uint32_t)MAX_PKT_BURST, count);

if (count == 0)

goto out;

发送数据包数量设置为MAX_PKT_BURST宏与空闲项数量(count)两者中的较小值。

最后,根据发送的数据包数量增加已用索引的值。

virtio_dev_rx_packed()

tatic __rte_always_inline uint32_t

virtio_dev_rx_packed(struct virtio_net *dev, struct vhost_virtqueue *vq,

struct rte_mbuf **pkts, uint32_t count)

{

uint32_t pkt_idx = 0;

uint16_t num_buffers;

struct buf_vector buf_vec[BUF_VECTOR_MAX];

for (pkt_idx = 0; pkt_idx < count; pkt_idx++) {

uint32_t pkt_len = pkts[pkt_idx]->pkt_len + dev->vhost_hlen;

uint16_t nr_vec = 0;

uint16_t nr_descs = 0;

if (unlikely(reserve_avail_buf_packed(dev, vq,

pkt_len, buf_vec, &nr_vec,

&num_buffers, &nr_descs) < 0)) {

VHOST_LOG_DEBUG(VHOST_DATA,

"(%d) failed to get enough desc from vring\n",

dev->vid);

vq->shadow_used_idx -= num_buffers;

break;

}

rte_prefetch0((void *)(uintptr_t)buf_vec[0].buf_addr);

VHOST_LOG_DEBUG(VHOST_DATA, "(%d) current index %d | end index %d\n",

dev->vid, vq->last_avail_idx,

vq->last_avail_idx + num_buffers);

if (copy_mbuf_to_desc(dev, vq, pkts[pkt_idx],

buf_vec, nr_vec,

num_buffers) < 0) {

vq->shadow_used_idx -= num_buffers;

break;

}

vq->last_avail_idx += nr_descs;

if (vq->last_avail_idx >= vq->size) {

vq->last_avail_idx -= vq->size;

vq->avail_wrap_counter ^= 1;

}

}

do_data_copy_enqueue(dev, vq);

if (likely(vq->shadow_used_idx)) {

flush_shadow_used_ring_packed(dev, vq);

vhost_vring_call_packed(dev, vq);

}

return pkt_idx;

}

virtio_dev_rx_split()

static __rte_always_inline uint32_t

virtio_dev_rx_split(struct virtio_net *dev, struct vhost_virtqueue *vq,

struct rte_mbuf **pkts, uint32_t count)

{

uint32_t pkt_idx = 0;

uint16_t num_buffers;

struct buf_vector buf_vec[BUF_VECTOR_MAX];

uint16_t avail_head;

rte_prefetch0(&vq->avail->ring[vq->last_avail_idx & (vq->size - 1)]);

avail_head = *((volatile uint16_t *)&vq->avail->idx);

for (pkt_idx = 0; pkt_idx < count; pkt_idx++) {

uint32_t pkt_len = pkts[pkt_idx]->pkt_len + dev->vhost_hlen;

uint16_t nr_vec = 0;

if (unlikely(reserve_avail_buf_split(dev, vq,

pkt_len, buf_vec, &num_buffers,

avail_head, &nr_vec) < 0)) {

VHOST_LOG_DEBUG(VHOST_DATA,

"(%d) failed to get enough desc from vring\n",

dev->vid);

vq->shadow_used_idx -= num_buffers;

break;

}

rte_prefetch0((void *)(uintptr_t)buf_vec[0].buf_addr);

VHOST_LOG_DEBUG(VHOST_DATA, "(%d) current index %d | end index %d\n",

dev->vid, vq->last_avail_idx,

vq->last_avail_idx + num_buffers);

if (copy_mbuf_to_desc(dev, vq, pkts[pkt_idx],

buf_vec, nr_vec,

num_buffers) < 0) {

vq->shadow_used_idx -= num_buffers;

break;

}

vq->last_avail_idx += num_buffers;

}

do_data_copy_enqueue(dev, vq);

if (likely(vq->shadow_used_idx)) {

flush_shadow_used_ring_split(dev, vq);

vhost_vring_call_split(dev, vq);

}

return pkt_idx;

}

数据包通过函数copy_mbuf_to_desc拷贝到客户机的内存中。最后,根据配置决定是否发送中断通知,参见函数vhost_vring_call_split和vhost_vring_call_packed。

vhost_vring_call_split()

static __rte_always_inline void

vhost_vring_call_split(struct virtio_net *dev, struct vhost_virtqueue *vq)

{

/* Flush used->idx update before we read avail->flags. */

rte_smp_mb();

/* Don't kick guest if we don't reach index specified by guest. */

if (dev->features & (1ULL << VIRTIO_RING_F_EVENT_IDX)) {

uint16_t old = vq->signalled_used;

uint16_t new = vq->last_used_idx;

VHOST_LOG_DEBUG(VHOST_DATA, "%s: used_event_idx=%d, old=%d, new=%d\n",

__func__,

vhost_used_event(vq),

old, new);

if (vhost_need_event(vhost_used_event(vq), new, old)

&& (vq->callfd >= 0)) {

vq->signalled_used = vq->last_used_idx;

eventfd_write(vq->callfd, (eventfd_t) 1);

}

} else {

/* Kick the guest if necessary. */

if (!(vq->avail->flags & VRING_AVAIL_F_NO_INTERRUPT)

&& (vq->callfd >= 0))

eventfd_write(vq->callfd, (eventfd_t)1);

}

}

vhost_vring_call_packed()

static __rte_always_inline void

vhost_vring_call_packed(struct virtio_net *dev, struct vhost_virtqueue *vq)

{

uint16_t old, new, off, off_wrap;

bool signalled_used_valid, kick = false;

/* Flush used desc update. */

rte_smp_mb();

if (!(dev->features & (1ULL << VIRTIO_RING_F_EVENT_IDX))) {

if (vq->driver_event->flags !=

VRING_EVENT_F_DISABLE)

kick = true;

goto kick;

}

old = vq->signalled_used;

new = vq->last_used_idx;

vq->signalled_used = new;

signalled_used_valid = vq->signalled_used_valid;

vq->signalled_used_valid = true;

if (vq->driver_event->flags != VRING_EVENT_F_DESC) {

if (vq->driver_event->flags != VRING_EVENT_F_DISABLE)

kick = true;

goto kick;

}

if (unlikely(!signalled_used_valid)) {

kick = true;

goto kick;

}

rte_smp_rmb();

off_wrap = vq->driver_event->off_wrap;

off = off_wrap & ~(1 << 15);

if (new <= old)

old -= vq->size;