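Kafka is a common message broker in our projects. Some use it to collect logs, others to pass messages for asynchronous business processing. When Kafka carries business logic, you have to decide whether that business requires reliable message delivery. This post records a demo of using Kafka in Spring Boot and shows how to write the configuration.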

I. Dependencies

```xml
<!-- kafka -->
<dependency>
    <groupId>org.springframework.kafka</groupId>
    <artifactId>spring-kafka</artifactId>
</dependency>
```

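Note:

The spring-kafka dependency transitively includes kafka-clients, and the kafka-clients version should ideally match the Kafka broker version. Otherwise version incompatibilities can cause all kinds of connection problems; the issue log at the end of this post describes one I ran into with mismatched versions.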

II. Producer

1. YAML configuration

```yaml
# Custom configuration, not part of Spring Boot's auto-configuration
mq:
  kafka:
    producer:
      clusters: 192.168.240.42:9092,192.168.240.43:9092,192.168.240.44:9092
      topic: KAFKA_TEST_TOPIC
```

2. Bind the YAML configuration above, together with some Kafka producer parameters

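```java
import java.util.Map;

import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Configuration;

@Data
@Configuration
@ConfigurationProperties("mq.kafka.producer")
public class KafkaProducerProperties {

    /**
     * Additional producer settings, copied into the producer config as-is
     */
    private Map<String, String> other;

    /**
     * Cluster (bootstrap server) addresses
     */
    private String clusters;

    /**
     * 0: the producer does not wait for any response from the cluster, so delivery is not guaranteed.
     *    Lowest safety, highest throughput.
     * 1: a send succeeds once the leader acknowledges it; only the leader is guaranteed to have the record.
     * -1 or all: a send succeeds only after every in-sync (ISR) follower has replicated the record
     *    from the leader, so the leader and all in-sync replicas have it. Highest safety, lowest throughput.
     */
    private String acks = "all";

    /**
     * Number of retries
     */
    private int retries = 0;

    /**
     * Records for the same partition are grouped into one batch up to this size, in bytes
     */
    private String batchSize = "16384";

    /**
     * Send delay: if a batch has not reached batchSize, wait up to lingerMs before sending it.
     * A batch that reaches batchSize is sent immediately.
     */
    private String lingerMs = "1";

    /**
     * Total buffer memory in bytes; when the buffer is full, max.block.ms limits how long
     * send() and partitionsFor() block
     */
    private String bufferMemory = "33554432";

    /**
     * Maximum size of a request, in bytes (max.request.size)
     */
    private String maxRequestSize = "5048576";

    /**
     * Compression type: none, gzip, snappy, lz4, or zstd
     */
    private String compressionType = "gzip";

    /**
     * Key serializer: a class implementing org.apache.kafka.common.serialization.Serializer,
     * e.g. StringSerializer
     */
    private String keySerializerClass = "org.apache.kafka.common.serialization.StringSerializer";

    /**
     * Value serializer: a class implementing org.apache.kafka.common.serialization.Serializer,
     * e.g. StringSerializer
     */
    private String valueSerializerClass = "org.apache.kafka.common.serialization.StringSerializer";
}
```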
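The acks comment above boils down to how many replicas must hold a record before a send counts as successful. As a rough illustration only, the sketch below models that rule in plain Java; AcksModel and requiredAcks are hypothetical names, not Kafka APIs, and the model assumes the in-sync replica count includes the leader.

```java
import java.util.Locale;

/** Simplified, hypothetical model of the producer acks setting (not Kafka's implementation). */
public final class AcksModel {

    private AcksModel() {
    }

    /**
     * Returns how many replicas must have the record before the send counts as complete.
     *
     * @param acks    the acks value: "0", "1", "-1" or "all"
     * @param isrSize number of in-sync replicas, including the leader
     */
    public static int requiredAcks(String acks, int isrSize) {
        switch (acks.trim().toLowerCase(Locale.ROOT)) {
            case "0":
                return 0; // fire and forget: no acknowledgement awaited
            case "1":
                return 1; // only the leader must have written the record
            case "-1":
            case "all":
                return isrSize; // leader plus every in-sync follower
            default:
                throw new IllegalArgumentException("Unsupported acks value: " + acks);
        }
    }

    public static void main(String[] args) {
        for (String acks : new String[] {"0", "1", "all"}) {
            System.out.println("acks=" + acks + " -> " + requiredAcks(acks, 3) + " of 3 replicas");
        }
    }
}
```

With acks=all, the broker-side min.insync.replicas setting additionally decides whether a write is rejected when too few replicas are in sync; this model ignores that.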

3. Kafka configuration class

```java
import java.util.HashMap;
import java.util.Map;

import org.apache.kafka.clients.producer.ProducerConfig;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.annotation.EnableKafka;
import org.springframework.kafka.core.DefaultKafkaProducerFactory;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.core.ProducerFactory;

@Configuration
@EnableKafka
public class KafkaConfig {

    @Autowired
    private KafkaProducerProperties producerProperties;

    @Bean
    public ProducerFactory<Integer, String> producerFactory() {
        return new DefaultKafkaProducerFactory<>(producerConfigs());
    }

    @Bean
    public Map<String, Object> producerConfigs() {
        Map<String, Object> props = new HashMap<>(16);
        props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, producerProperties.getClusters());
        props.put(ProducerConfig.ACKS_CONFIG, producerProperties.getAcks());
        props.put(ProducerConfig.RETRIES_CONFIG, producerProperties.getRetries());
        props.put(ProducerConfig.BATCH_SIZE_CONFIG, producerProperties.getBatchSize());
        props.put(ProducerConfig.LINGER_MS_CONFIG, producerProperties.getLingerMs());
        props.put(ProducerConfig.BUFFER_MEMORY_CONFIG, producerProperties.getBufferMemory());
        props.put(ProducerConfig.MAX_REQUEST_SIZE_CONFIG, producerProperties.getMaxRequestSize());
        props.put(ProducerConfig.COMPRESSION_TYPE_CONFIG, producerProperties.getCompressionType());
        props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, producerProperties.getKeySerializerClass());
        props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, producerProperties.getValueSerializerClass());
        // Extra settings from mq.kafka.producer.other
        if (producerProperties.getOther() != null) {
            props.putAll(producerProperties.getOther());
        }
        return props;
    }

    @Bean
    public KafkaTemplate<Integer, String> kafkaTemplate() {
        return new KafkaTemplate<>(producerFactory());
    }
}
```

Note: this configuration class mainly builds a KafkaTemplate from the settings above; other components inject this kafkaTemplate to send messages to Kafka.

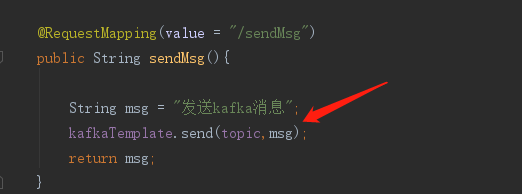
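Because producerConfigs() applies the other map with putAll after all the typed fields, any key set under mq.kafka.producer.other overrides the typed field that maps to the same Kafka setting. A minimal plain-Java sketch of that precedence follows; ConfigMerge is a hypothetical class, and plain key strings stand in for the ProducerConfig constants (for example, ProducerConfig.ACKS_CONFIG is "acks").

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical sketch of the precedence used by producerConfigs(): typed fields first, "other" last. */
public final class ConfigMerge {

    private ConfigMerge() {
    }

    public static Map<String, Object> merge(Map<String, Object> typed, Map<String, String> other) {
        Map<String, Object> props = new HashMap<>(typed);
        if (other != null) {
            // putAll runs last, so a key present in both maps ends up with the "other" value
            props.putAll(other);
        }
        return props;
    }

    public static void main(String[] args) {
        Map<String, Object> typed = new HashMap<>();
        typed.put("acks", "all");
        typed.put("retries", 0);

        Map<String, String> other = new HashMap<>();
        other.put("acks", "1");            // overrides the typed acks value
        other.put("max.block.ms", "5000"); // a key without a typed field is simply added

        System.out.println(merge(typed, other));
    }
}
```

This makes other a convenient escape hatch for settings the properties class does not model, but it also means a stray entry there silently wins over the typed defaults.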

4. Sending Kafka messages

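```java
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RestController;

@RestController
@RequestMapping(value = "/product")
public class ProductController {

    @Value("${mq.kafka.producer.topic}")
    private String topic;

    @Autowired
    private KafkaTemplate<Integer, String> kafkaTemplate;

    @RequestMapping(value = "/sendMsg")
    public String sendMsg() {
        String msg = "发送kafka消息"; // "send a Kafka message"
        // send() is asynchronous: it does not wait for the broker's acknowledgement
        kafkaTemplate.send(topic, msg);
        return msg;
    }
}
```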

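Note:

Spring Boot 2.0 already provides Kafka auto-configuration through application.properties, so you don't have to write factory classes and inject the Kafka settings yourself as I did above.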

5. Spring Boot 2.0 Kafka auto-configuration

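```properties
#============== kafka ===================
# Kafka server addresses; for a cluster, separate multiple addresses with commas
spring.kafka.bootstrap-servers=127.0.0.1:9092

#=============== producer =======================
# Number of retries when a write fails. When the leader fails, a replica takes over as leader,
# and writes may fail during the switch. With retries=0 the producer never resends, so there are
# no duplicates; with retries > 0 it resends once the replica has fully become leader, so the
# message is not lost (but may be written twice).
spring.kafka.producer.retries=0
# Maximum size of one batch per partition, in bytes; the producer accumulates records and sends them together
spring.kafka.producer.batch-size=16384
# Total memory (bytes) for records waiting to be sent; when it is full, send() blocks for up to max.block.ms
spring.kafka.producer.buffer-memory=33554432
# Allowed values: all, -1, 0, 1
spring.kafka.producer.acks=1
# Serializers for the message key and value
spring.kafka.producer.key-serializer=org.apache.kafka.common.serialization.StringSerializer
spring.kafka.producer.value-serializer=org.apache.kafka.common.serialization.StringSerializer
```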
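The batch-size and linger settings interact: a partition's batch is sent once it fills up or once linger.ms has passed, whichever comes first. The sketch below is a deliberately simplified model of that rule, not Kafka's actual RecordAccumulator logic (which also sends on flush, close, or memory pressure); BatchTrigger and readyToSend are hypothetical names.

```java
/** Hypothetical, simplified model of when a producer batch becomes sendable (not Kafka's RecordAccumulator). */
public final class BatchTrigger {

    private BatchTrigger() {
    }

    /**
     * @param batchBytes bytes already accumulated in the partition's batch
     * @param batchSize  batch.size, in bytes
     * @param waitedMs   milliseconds since the first record entered the batch
     * @param lingerMs   linger.ms
     */
    public static boolean readyToSend(int batchBytes, int batchSize, long waitedMs, long lingerMs) {
        boolean full = batchBytes >= batchSize;  // batch is full: send immediately
        boolean lingered = waitedMs >= lingerMs; // waited long enough: send a partial batch
        return full || lingered;
    }

    public static void main(String[] args) {
        System.out.println(readyToSend(16384, 16384, 0, 1)); // true: full batch
        System.out.println(readyToSend(200, 16384, 0, 1));   // false: neither condition met yet
        System.out.println(readyToSend(200, 16384, 1, 1));   // true: linger.ms elapsed
    }
}
```

A larger linger.ms trades a little latency for fuller, better-compressed batches; with a tiny value, most batches leave well before reaching batch.size.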

III. Consumer

1. Add the YAML configuration to the consumer service

```yaml
# Custom configuration
mq:
  kafka:
    consumer:
      groupId: kafka-server
      clusters: 192.168.240.42:9092,192.168.240.43:9092,192.168.240.44:9092
      topic: KAFKA_TEST_TOPIC
```

2. Bind the configuration above, together with the Kafka consumer settings

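```java
import java.util.Map;

import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.context.annotation.Configuration;

@Data
@Configuration
@ConfigurationProperties(prefix = "mq.kafka.consumer")
public class KafkaConsumerProperties {

    private Map<String, String> other;            // additional consumer settings, copied as-is

    private String clusters;                       // injected from the YAML configuration

    private String groupId;

    private boolean enableAutoCommit = true;       // whether offsets are committed automatically

    private String sessionTimeoutMs = "120000";

    private String requestTimeoutMs = "160000";

    private String fetchMaxWaitMs = "5000";

    private String autoOffsetReset = "earliest";

    private String maxPollRecords = "10";          // maximum number of records returned by one poll

    private String maxPartitionFetch = "5048576";  // max.partition.fetch.bytes

    private String keyDeserializerClass = "org.apache.kafka.common.serialization.StringDeserializer";

    private String valueDeserializerClass = "org.apache.kafka.common.serialization.StringDeserializer";
}
```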

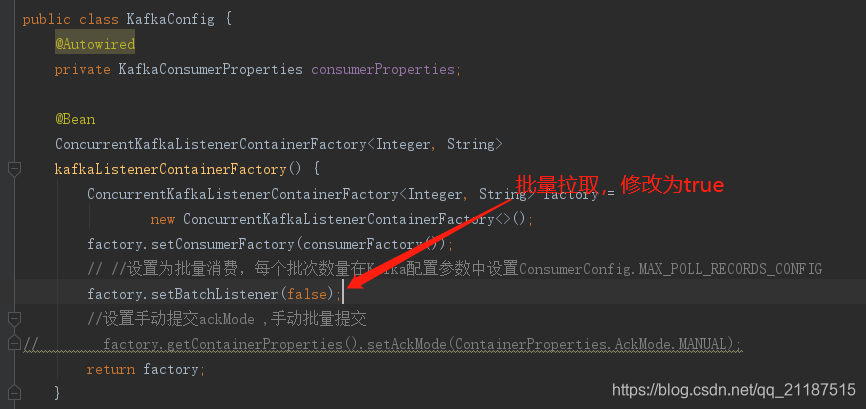
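max.poll.records only caps how many records one poll() returns, so with the batch listener enabled a backlog reaches the listener in chunks of at most that size. The plain-Java sketch below imitates that chunking for illustration; PollBatches and chunk are hypothetical names, and real polls can return fewer records depending on what has already been fetched.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical illustration: with max.poll.records=N, a backlog reaches a batch listener in chunks of at most N. */
public final class PollBatches {

    private PollBatches() {
    }

    public static <T> List<List<T>> chunk(List<T> backlog, int maxPollRecords) {
        if (maxPollRecords <= 0) {
            throw new IllegalArgumentException("maxPollRecords must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int from = 0; from < backlog.size(); from += maxPollRecords) {
            int to = Math.min(from + maxPollRecords, backlog.size());
            // copy so each batch is independent of the backlog list
            batches.add(new ArrayList<>(backlog.subList(from, to)));
        }
        return batches;
    }

    public static void main(String[] args) {
        List<Integer> backlog = new ArrayList<>();
        for (int i = 0; i < 25; i++) {
            backlog.add(i);
        }
        // 25 waiting records with max.poll.records=10 -> batches of 10, 10 and 5
        for (List<Integer> batch : chunk(backlog, 10)) {
            System.out.println("batch size " + batch.size());
        }
    }
}
```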

3. KafkaConfig configuration class

```java
import java.util.HashMap;
import java.util.Map;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.annotation.EnableKafka;
import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
import org.springframework.kafka.core.ConsumerFactory;
import org.springframework.kafka.core.DefaultKafkaConsumerFactory;

@Configuration
@EnableKafka
public class KafkaConfig {

    @Autowired
    private KafkaConsumerProperties consumerProperties;

    @Bean
    ConcurrentKafkaListenerContainerFactory<Integer, String> kafkaListenerContainerFactory() {
        ConcurrentKafkaListenerContainerFactory<Integer, String> factory =
                new ConcurrentKafkaListenerContainerFactory<>();
        factory.setConsumerFactory(consumerFactory());
        // Enable batch consumption; the batch size is capped by ConsumerConfig.MAX_POLL_RECORDS_CONFIG
        factory.setBatchListener(true);
        // Manual ackMode, offsets committed in a batch after ack.acknowledge();
        // only takes effect when enableAutoCommit is false
        // factory.getContainerProperties().setAckMode(ContainerProperties.AckMode.MANUAL);
        return factory;
    }

    @Bean
    public ConsumerFactory<Integer, String> consumerFactory() {
        return new DefaultKafkaConsumerFactory<>(consumerConfigs());
    }

    @Bean
    public Map<String, Object> consumerConfigs() {
        Map<String, Object> props = new HashMap<>(16);
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, consumerProperties.getClusters());
        props.put(ConsumerConfig.GROUP_ID_CONFIG, consumerProperties.getGroupId());
        props.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, consumerProperties.isEnableAutoCommit());
        props.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, consumerProperties.getSessionTimeoutMs());
        props.put(ConsumerConfig.REQUEST_TIMEOUT_MS_CONFIG, consumerProperties.getRequestTimeoutMs());
        props.put(ConsumerConfig.FETCH_MAX_WAIT_MS_CONFIG, consumerProperties.getFetchMaxWaitMs());
        props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, consumerProperties.getAutoOffsetReset());
        props.put(ConsumerConfig.MAX_POLL_RECORDS_CONFIG, consumerProperties.getMaxPollRecords());
        props.put(ConsumerConfig.MAX_PARTITION_FETCH_BYTES_CONFIG, consumerProperties.getMaxPartitionFetch());
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, consumerProperties.getKeyDeserializerClass());
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, consumerProperties.getValueDeserializerClass());
        // Extra settings from mq.kafka.consumer.other
        if (consumerProperties.getOther() != null) {
            props.putAll(consumerProperties.getOther());
        }
        return props;
    }
}
```

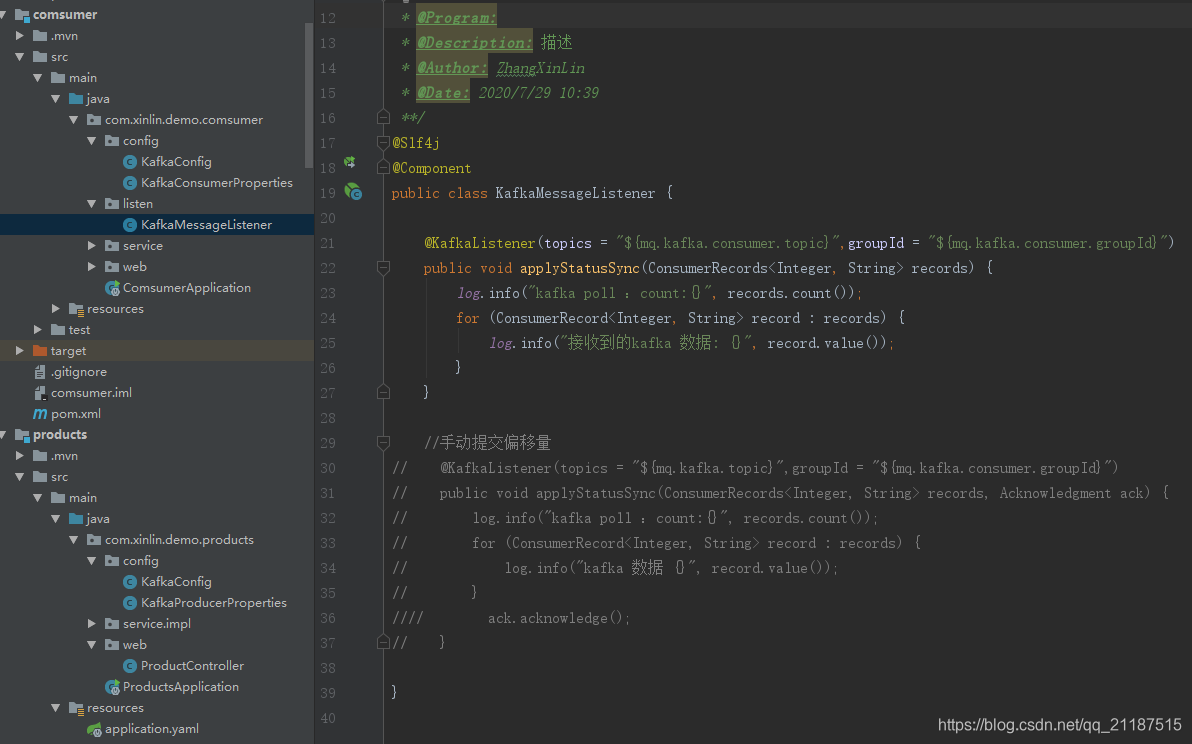

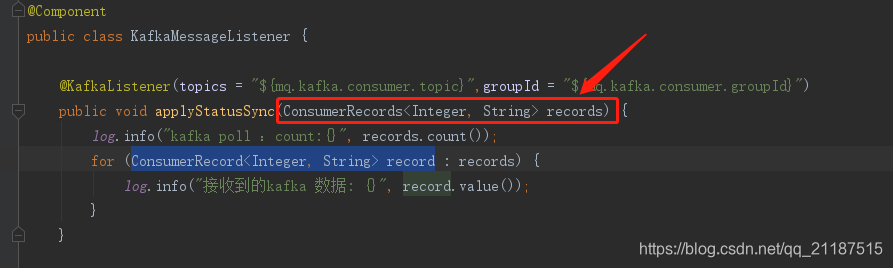

4. Use @KafkaListener to listen to a topic on the Kafka server

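```java
import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

@Slf4j
@Component
public class KafkaMessageListener {

    // Batch listener: requires factory.setBatchListener(true)
    @KafkaListener(topics = "${mq.kafka.consumer.topic}", groupId = "${mq.kafka.consumer.groupId}")
    public void applyStatusSync(ConsumerRecords<Integer, String> records) {
        log.info("kafka poll :count:{}", records.count());
        for (ConsumerRecord<Integer, String> record : records) {
            log.info("Received Kafka data {}", record.value());
        }
    }

    // Manual offset commit: use this instead of the method above, together with AckMode.MANUAL and
    // enableAutoCommit = false (needs import org.springframework.kafka.support.Acknowledgment)
    // @KafkaListener(topics = "${mq.kafka.consumer.topic}", groupId = "${mq.kafka.consumer.groupId}")
    // public void applyStatusSync(ConsumerRecords<Integer, String> records, Acknowledgment ack) {
    //     log.info("kafka poll :count:{}", records.count());
    //     for (ConsumerRecord<Integer, String> record : records) {
    //         log.info("Received Kafka data {}", record.value());
    //     }
    //     ack.acknowledge();
    // }
}
```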

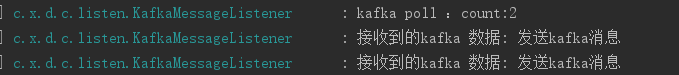
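With manual acknowledgement, what finally gets committed is, per partition, the offset of the next record to read, i.e. the last processed offset plus one. The sketch below computes those values from a processed batch in plain Java; CommitOffsets and Processed are hypothetical stand-ins for ConsumerRecord and the container's commit logic, not spring-kafka classes.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical sketch: the per-partition offsets a consumer should commit after processing a batch. */
public final class CommitOffsets {

    private CommitOffsets() {
    }

    /** A processed record reduced to the fields that matter for committing (hypothetical type). */
    public static final class Processed {
        final int partition;
        final long offset;

        public Processed(int partition, long offset) {
            this.partition = partition;
            this.offset = offset;
        }
    }

    public static Map<Integer, Long> offsetsToCommit(Iterable<Processed> batch) {
        Map<Integer, Long> commits = new TreeMap<>();
        for (Processed r : batch) {
            // Kafka commits the offset of the NEXT record to read, hence offset + 1
            commits.merge(r.partition, r.offset + 1, Long::max);
        }
        return commits;
    }

    public static void main(String[] args) {
        List<Processed> batch = Arrays.asList(
                new Processed(7, 0), new Processed(7, 1), new Processed(3, 41));
        System.out.println(offsetsToCommit(batch)); // {3=42, 7=2}
    }
}
```

Committing only after the whole batch is processed gives at-least-once delivery: if the service crashes before ack.acknowledge(), the batch is delivered again, so processing should tolerate duplicates.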

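IV. Testing

1. Start both the producer service and the consumer service.

2. Once both services are up, call the producer's send-message endpoint (/product/sendMsg).

3. The consumer log shows the message received from Kafka, so everything works.

4. Project layout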

V. Issues encountered

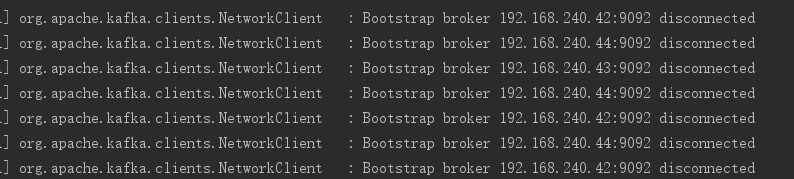

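1. On Spring Boot 1.5.10 I added spring-kafka with the same configuration as above, but after startup the application could never connect to Kafka and kept logging Bootstrap broker 192.168.xxx.xx:9092 disconnected.

Cause: Spring Boot 1.5.10 ships kafka-clients 0.10.1.1, and this older client could not connect to our Kafka broker. It is a compatibility problem caused by mismatched kafka-clients and broker versions.

Fix: exclude kafka-clients from the spring-kafka dependency with an exclusion, add kafka-clients 0.10.2.1 explicitly, and restart. The application then connected to the Kafka broker successfully.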

2. The consumer's listener was pulling messages, but kept reporting a message conversion exception:

```
MessageConversionException: Cannot handle message; nested exception is org.springframework.messaging.converter.MessageConversionException: Cannot convert from [java.lang.String] to [org.apache.kafka.clients.consumer.ConsumerRecords]
Bean [com.xinlin.demo.comsumer.listen.KafkaMessageListener@1f43cab7]; nested exception is org.springframework.messaging.converter.MessageConversionException: Cannot handle message; nested exception is org.springframework.messaging.converter.MessageConversionException: Cannot convert from [java.lang.String] to [org.apache.kafka.clients.consumer.ConsumerRecords] for GenericMessage [payload=发送kafka消息, headers={kafka_offset=0, kafka_consumer=org.apache.kafka.clients.consumer.KafkaConsumer@416f4587, kafka_timestampType=CREATE_TIME, kafka_receivedMessageKey=null, kafka_receivedPartitionId=7, kafka_receivedTopic=KAFKA_TEST_TOPIC, kafka_receivedTimestamp=1596119315437}], failedMessage=GenericMessage [payload=发送kafka消息, headers={kafka_offset=0, kafka_consumer=org.apache.kafka.clients.consumer.KafkaConsumer@416f4587, kafka_timestampType=CREATE_TIME, kafka_receivedMessageKey=null, kafka_receivedPartitionId=7, kafka_receivedTopic=KAFKA_TEST_TOPIC, kafka_receivedTimestamp=1596119315437}]; nested exception is org.springframework.messaging.converter.MessageConversionException: Cannot handle message; nested exception is org.springframework.messaging.converter.MessageConversionException: Cannot convert from [java.lang.String] to [org.apache.kafka.clients.consumer.ConsumerRecords] for GenericMessage [payload=发送kafka消息, headers={kafka_offset=0, kafka_consumer=org.apache.kafka.clients.consumer.KafkaConsumer@416f4587, kafka_timestampType=CREATE_TIME, kafka_receivedMessageKey=null, kafka_receivedPartitionId=7, kafka_receivedTopic=KAFKA_TEST_TOPIC, kafka_receivedTimestamp=1596119315437}], failedMessage=GenericMessage [payload=发送kafka消息, headers={kafka_offset=0, kafka_consumer=org.apache.kafka.clients.consumer.KafkaConsumer@416f4587, kafka_timestampType=CREATE_TIME, kafka_receivedMessageKey=null, kafka_receivedPartitionId=7, kafka_receivedTopic=KAFKA_TEST_TOPIC, kafka_receivedTimestamp=1596119315437}]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.decorateException(KafkaMessageListenerContainer.java:1327) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.invokeErrorHandler(KafkaMessageListenerContainer.java:1316) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.doInvokeRecordListener(KafkaMessageListenerContainer.java:1232) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.doInvokeWithRecords(KafkaMessageListenerContainer.java:1203) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.invokeRecordListener(KafkaMessageListenerContainer.java:1123) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.invokeListener(KafkaMessageListenerContainer.java:938) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.pollAndInvoke(KafkaMessageListenerContainer.java:751) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.run(KafkaMessageListenerContainer.java:700) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_162]
at java.util.concurrent.FutureTask.run(FutureTask.java:266) [na:1.8.0_162]
at java.lang.Thread.run(Thread.java:748) [na:1.8.0_162]
Caused by: org.springframework.messaging.converter.MessageConversionException: Cannot handle message; nested exception is org.springframework.messaging.converter.MessageConversionException: Cannot convert from [java.lang.String] to [org.apache.kafka.clients.consumer.ConsumerRecords] for GenericMessage [payload=发送kafka消息, headers={kafka_offset=0, kafka_consumer=org.apache.kafka.clients.consumer.KafkaConsumer@416f4587, kafka_timestampType=CREATE_TIME, kafka_receivedMessageKey=null, kafka_receivedPartitionId=7, kafka_receivedTopic=KAFKA_TEST_TOPIC, kafka_receivedTimestamp=1596119315437}], failedMessage=GenericMessage [payload=发送kafka消息, headers={kafka_offset=0, kafka_consumer=org.apache.kafka.clients.consumer.KafkaConsumer@416f4587, kafka_timestampType=CREATE_TIME, kafka_receivedMessageKey=null, kafka_receivedPartitionId=7, kafka_receivedTopic=KAFKA_TEST_TOPIC, kafka_receivedTimestamp=1596119315437}]
at org.springframework.kafka.listener.adapter.MessagingMessageListenerAdapter.invokeHandler(MessagingMessageListenerAdapter.java:292) ~[spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.adapter.RecordMessagingMessageListenerAdapter.onMessage(RecordMessagingMessageListenerAdapter.java:79) ~[spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.adapter.RecordMessagingMessageListenerAdapter.onMessage(RecordMessagingMessageListenerAdapter.java:50) ~[spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.doInvokeOnMessage(KafkaMessageListenerContainer.java:1278) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.invokeOnMessage(KafkaMessageListenerContainer.java:1261) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
at org.springframework.kafka.listener.KafkaMessageListenerContainer$ListenerConsumer.doInvokeRecordListener(KafkaMessageListenerContainer.java:1222) [spring-kafka-2.2.8.RELEASE.jar:2.2.8.RELEASE]
```

Cause: this error can actually have two causes.

1) Batch consumption is disabled with

factory.setBatchListener(false);

but the listener takes ConsumerRecords<Integer, String> records, i.e. a whole batch, which fails. Either change the parameter to a single record, ConsumerRecord<Integer, String> record, or enable batch consumption:

factory.setBatchListener(true);

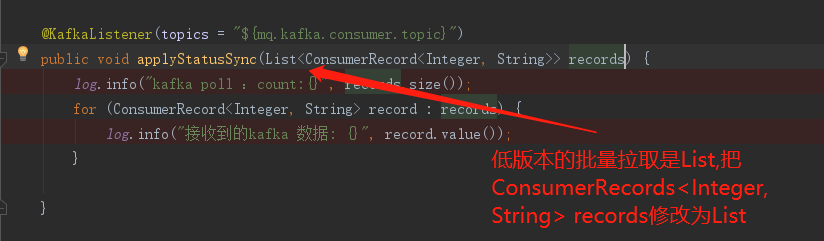

2) Besides the cause above, the spring-kafka version may be too old: in older versions a batch listener does not receive ConsumerRecords<Integer, String> records but List<ConsumerRecord<Integer, String>> records.

With Spring Boot 2.1.7 there was no problem, because it brings spring-kafka 2.2.8, whereas Spring Boot 1.5.10 brings spring-kafka 1.1.7.

3. Configuring manual ack differs slightly between Spring Boot 1.5 and Spring Boot 2.1

```java
// Spring Boot 1.5:
// manual ackMode, offsets committed in a batch
factory.getContainerProperties().setAckMode(AbstractMessageListenerContainer.AckMode.MANUAL);

// Spring Boot 2.1:
// manual ackMode, offsets committed in a batch
factory.getContainerProperties().setAckMode(ContainerProperties.AckMode.MANUAL);
```

Note:

The only difference is where the enum is declared: AbstractMessageListenerContainer.AckMode.MANUAL versus ContainerProperties.AckMode.MANUAL.
