Preface

This article draws on many other blog posts. It mainly pulls them together and adds fixes for a few tricky problems.

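Prerequisites

1. Install the JDK (including configuring the environment variables and so on).

2. If you plan to use Spring Boot, it is best to also install IntelliJ IDEA or STS, along with Maven.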

Walkthrough

Installing ZooKeeper

1. Download ZooKeeper from the official website. This article uses version 3.6.1:

https://mirror.bit.edu.cn/apache/zookeeper/zookeeper-3.6.1/apache-zookeeper-3.6.1-bin.tar.gz

2. After the download finishes, extract the archive to a folder of your choice.

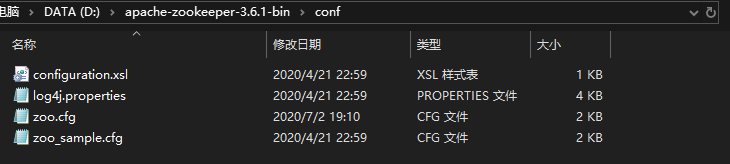

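3. Then open the conf folder inside it.

It contains a file named zoo_sample.cfg. Make a copy of it and rename the copy to zoo.cfg.

Edit zoo.cfg and replace the existing dataDir=/tmp/zookeeper line with the two lines below. Use forward slashes (or doubled backslashes), because a single backslash is treated as an escape character in this file:

```properties
dataDir=D:/apache-zookeeper-3.6.1-bin/data
dataLogDir=D:/apache-zookeeper-3.6.1-bin/log
```

Then create the data and log folders in the ZooKeeper directory (D:\apache-zookeeper-3.6.1-bin) so that they match the paths above.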
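Why the slashes matter: as far as I know, ZooKeeper reads zoo.cfg with java.util.Properties, and Properties.load treats a backslash as an escape character, silently dropping it in front of an ordinary letter. The stdlib-only sketch below (the class name ZooCfgPathDemo is just for illustration) loads three spellings of the dataDir line and shows what a program reading the file would actually get:

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Shows what java.util.Properties makes of a zoo.cfg-style dataDir line.
class ZooCfgPathDemo {

    // Parses properties text and returns the value stored under the given key.
    static String load(String text, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            // StringReader does not really fail, but load() declares IOException.
            throw new UncheckedIOException(e);
        }
        return props.getProperty(key);
    }

    public static void main(String[] args) {
        // The Java literal "\\" is one backslash: this is the file text D:\apache-zookeeper-3.6.1-bin\data
        String single = load("dataDir=D:\\apache-zookeeper-3.6.1-bin\\data", "dataDir");
        // File text with doubled backslashes: D:\\apache-zookeeper-3.6.1-bin\\data
        String doubled = load("dataDir=D:\\\\apache-zookeeper-3.6.1-bin\\\\data", "dataDir");
        // Forward slashes are not escapes, and Windows accepts them as path separators.
        String forward = load("dataDir=D:/apache-zookeeper-3.6.1-bin/data", "dataDir");
        System.out.println(single);  // backslashes swallowed: D:apache-zookeeper-3.6.1-bindata
        System.out.println(doubled); // D:\apache-zookeeper-3.6.1-bin\data
        System.out.println(forward); // D:/apache-zookeeper-3.6.1-bin/data
    }
}
```

With single backslashes the value no longer points at the folders created above, which is why the configuration uses forward slashes.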

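4. You can now start ZooKeeper by double-clicking zkServer.cmd in the bin directory.

5. Alternatively, set up environment variables for ZooKeeper so that you can start it by typing zkServer directly in cmd:

ZOOKEEPER_HOME: D:\apache-zookeeper-3.6.1-bin (the ZooKeeper directory)

Path: append ;%ZOOKEEPER_HOME%\bin; to the existing value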

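6. With zkServer running, double-click zkCli.cmd. If it connects and shows a prompt such as [zk: localhost:2181(CONNECTED) 0], the installation was successful.

Don't close the cmd windows yet; they are needed for the tests that follow.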

Installing Kafka

1. Download Kafka:

https://mirror.bit.edu.cn/apache/kafka/2.5.0/kafka_2.12-2.5.0.tgz

2. After extracting it to a folder, open the config folder and find server.properties.

3. Open the file in Notepad, find log.dirs, and change it to log.dirs=./logs

4. Open cmd, cd into the Kafka directory (for example D:\kafka_2.12-2.5.0), and run:

```bat
.\bin\windows\kafka-server-start.bat .\config\server.properties
```

Keep this cmd window open as well for the tests that follow.

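Testing Kafka

1. cd into D:\kafka_2.12-2.5.0\bin\windows.

2. Create a new topic with the following command:

```bat
kafka-topics.bat --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic test
```

The --zookeeper option still works in Kafka 2.5 but is deprecated; --bootstrap-server localhost:9092 can be used instead, and it is the only option from Kafka 3.0 onward.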

3. Start a producer.

Open a new cmd window, cd into D:\kafka_2.12-2.5.0\bin\windows as well, and run:

```bat
kafka-console-producer.bat --broker-list localhost:9092 --topic test
```

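4. Start a consumer.

Open another cmd window, cd into D:\kafka_2.12-2.5.0\bin\windows as well, and run:

```bat
kafka-console-consumer.bat --bootstrap-server localhost:9092 --topic test --from-beginning
```

Note: the --from-beginning option can be omitted. With it, the consumer reads the topic from the earliest available message; without it, only messages produced after the consumer starts are shown. Messages typed into the producer window should then appear in the consumer window.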


5. To list the existing topics:

```bat
kafka-topics.bat --zookeeper localhost:2181 --list
```

Integrating with Spring Boot

1. Required dependencies (no versions are listed, so the project is assumed to inherit them from spring-boot-starter-parent):

```xml
<dependencies>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>
    <dependency>
        <groupId>org.projectlombok</groupId>
        <artifactId>lombok</artifactId>
        <optional>true</optional>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
        <scope>test</scope>
    </dependency>
    <!-- The next two are already pulled in by spring-boot-starter-web; listing them is harmless -->
    <dependency>
        <groupId>com.fasterxml.jackson.core</groupId>
        <artifactId>jackson-annotations</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework</groupId>
        <artifactId>spring-web</artifactId>
    </dependency>
</dependencies>
```

2. The application.properties configuration is as follows:

```properties
# Set the port explicitly: ZooKeeper 3.5+ starts an AdminServer on port 8080 by default, so the app moves to 8088.
# Keep comments on their own line; .properties files do not support trailing comments.
server.port=8088

#============== kafka ===================
# Not read by the Java code below; the Kafka clients only need the broker address
kafka.consumer.zookeeper.connect=127.0.0.1:2181
kafka.consumer.servers=127.0.0.1:9092
kafka.consumer.enable.auto.commit=true
kafka.consumer.session.timeout=6000
kafka.consumer.auto.commit.interval=100
kafka.consumer.auto.offset.reset=latest
kafka.consumer.topic=test
kafka.consumer.group.id=test
kafka.consumer.concurrency=10

kafka.producer.servers=127.0.0.1:9092
kafka.producer.retries=0
kafka.producer.batch.size=4096
kafka.producer.linger=1
kafka.producer.buffer.memory=40960

kafka.topic.default=test
```
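The server.port comment has to sit on its own line. As far as I know, both java.util.Properties and Spring Boot's own properties loader only treat # as a comment at the start of a line, so a trailing "# ..." becomes part of the value. The stdlib-only sketch below uses java.util.Properties as a stand-in for Spring Boot's loader (an assumption; the class and method names are illustrative) to show what server.port=8088 #... would actually contain:

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Demonstrates that a trailing "#..." is NOT a comment in .properties syntax.
// java.util.Properties stands in for Spring Boot's loader, assumed to follow the same rule.
class InlineCommentDemo {

    // Loads properties text and returns the value stored under key.
    static String load(String text, String key) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props.getProperty(key);
    }

    // True if the value could be bound to an int port number.
    static boolean isValidPort(String value) {
        try {
            int port = Integer.parseInt(value);
            return port > 0 && port <= 65535;
        } catch (NumberFormatException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        String trailing = load("server.port=8088 #ZooKeeper uses 8080", "server.port");
        String ownLine = load("# ZooKeeper uses 8080\nserver.port=8088", "server.port");
        System.out.println("[" + trailing + "] valid port: " + isValidPort(trailing)); // [8088 #ZooKeeper uses 8080] valid port: false
        System.out.println("[" + ownLine + "] valid port: " + isValidPort(ownLine));   // [8088] valid port: true
    }
}
```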

3. Detailed code

1. Two configuration classes:

```java
import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.serialization.StringDeserializer;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.annotation.EnableKafka;
import org.springframework.kafka.config.ConcurrentKafkaListenerContainerFactory;
import org.springframework.kafka.config.KafkaListenerContainerFactory;
import org.springframework.kafka.core.ConsumerFactory;
import org.springframework.kafka.core.DefaultKafkaConsumerFactory;
import org.springframework.kafka.listener.ConcurrentMessageListenerContainer;

import java.util.HashMap;
import java.util.Map;

@Configuration
@EnableKafka // enables detection of @KafkaListener methods
public class KafkaConsumerConfig {

    @Value("${kafka.consumer.servers}")
    private String servers;

    @Value("${kafka.consumer.enable.auto.commit}")
    private boolean enableAutoCommit;

    @Value("${kafka.consumer.session.timeout}")
    private String sessionTimeout;

    @Value("${kafka.consumer.auto.commit.interval}")
    private String autoCommitInterval;

    @Value("${kafka.consumer.group.id}")
    private String groupId;

    @Value("${kafka.consumer.auto.offset.reset}")
    private String autoOffsetReset;

    @Value("${kafka.consumer.concurrency}")
    private int concurrency;

    // Referenced by @KafkaListener(containerFactory = "kafkaListenerContainerFactory")
    @Bean
    public KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String, String>> kafkaListenerContainerFactory() {
        ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();
        factory.setConsumerFactory(consumerFactory());
        // Number of consumer threads; threads beyond the topic's partition count stay idle
        factory.setConcurrency(concurrency);
        factory.getContainerProperties().setPollTimeout(1500);
        return factory;
    }

    private ConsumerFactory<String, String> consumerFactory() {
        // Plain strings for key and value, matching the console producer and the
        // StringSerializer used on the producer side
        return new DefaultKafkaConsumerFactory<>(
                consumerConfigs(),
                new StringDeserializer(),
                new StringDeserializer());
    }

    private Map<String, Object> consumerConfigs() {
        Map<String, Object> propsMap = new HashMap<>();
        propsMap.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, servers);
        propsMap.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, enableAutoCommit);
        propsMap.put(ConsumerConfig.AUTO_COMMIT_INTERVAL_MS_CONFIG, autoCommitInterval);
        propsMap.put(ConsumerConfig.SESSION_TIMEOUT_MS_CONFIG, sessionTimeout);
        propsMap.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
        propsMap.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
        propsMap.put(ConsumerConfig.GROUP_ID_CONFIG, groupId);
        propsMap.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, autoOffsetReset);
        return propsMap;
    }
}
```

```java
import java.util.HashMap;
import java.util.Map;

import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.common.serialization.StringSerializer;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.kafka.core.DefaultKafkaProducerFactory;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.core.ProducerFactory;

@Configuration
public class KafkaProducerConfig {

    @Value("${kafka.producer.servers}")
    private String servers;

    @Value("${kafka.producer.retries}")
    private int retries;

    @Value("${kafka.producer.batch.size}")
    private int batchSize;

    @Value("${kafka.producer.linger}")
    private int linger;

    @Value("${kafka.producer.buffer.memory}")
    private int bufferMemory;

    public Map<String, Object> producerConfigs() {
        Map<String, Object> props = new HashMap<>();
        props.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, servers);
        props.put(ProducerConfig.RETRIES_CONFIG, retries);
        props.put(ProducerConfig.BATCH_SIZE_CONFIG, batchSize);       // max bytes per partition batch
        props.put(ProducerConfig.LINGER_MS_CONFIG, linger);           // ms to wait for more records before sending
        props.put(ProducerConfig.BUFFER_MEMORY_CONFIG, bufferMemory); // total bytes for records not yet sent
        props.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
        props.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
        return props;
    }

    public ProducerFactory<String, String> producerFactory() {
        // Send keys and values as plain strings so the console consumer and the
        // StringDeserializer on the consumer side read them unchanged
        return new DefaultKafkaProducerFactory<>(producerConfigs(),
                new StringSerializer(),
                new StringSerializer());
    }

    @Bean
    public KafkaTemplate<String, String> kafkaTemplate() {
        return new KafkaTemplate<>(producerFactory());
    }
}
```
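A note on kafka.consumer.concurrency=10: the ConcurrentMessageListenerContainer starts 10 consumers in group test, but Kafka hands each partition of a topic to at most one consumer in a group. The test topic created earlier has a single partition, so 9 of those consumers never receive anything. The sketch below is a simplified model of the range-style strategy that Kafka 2.5 consumers use by default; it is my own illustration (class and method names are hypothetical), not Kafka's RangeAssignor source, and in real Kafka which member gets which partition depends on the sorted member IDs:

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model of range-style partition assignment for a single topic.
// Illustration only, not Kafka's RangeAssignor: real Kafka orders members by member ID.
class RangeAssignmentSketch {

    // Returns, for each consumer index, the partition numbers that consumer would own.
    static List<List<Integer>> assign(int partitions, int consumers) {
        List<List<Integer>> result = new ArrayList<>();
        int perConsumer = partitions / consumers; // every consumer gets at least this many
        int extra = partitions % consumers;       // the first `extra` consumers get one more
        int next = 0;
        for (int c = 0; c < consumers; c++) {
            int count = perConsumer + (c < extra ? 1 : 0);
            List<Integer> owned = new ArrayList<>();
            for (int i = 0; i < count; i++) {
                owned.add(next++);
            }
            result.add(owned);
        }
        return result;
    }

    // Counts consumers that end up with no partition at all.
    static long idleConsumers(int partitions, int consumers) {
        return assign(partitions, consumers).stream().filter(List::isEmpty).count();
    }

    public static void main(String[] args) {
        // kafka.consumer.concurrency=10 against the single-partition "test" topic
        System.out.println(assign(1, 10));
        System.out.println("idle consumers: " + idleConsumers(1, 10)); // idle consumers: 9
    }
}
```

To put the extra threads to work, either create the topic with more partitions (for example --partitions 10) or lower kafka.consumer.concurrency to the partition count.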

2. Producer

```java
import lombok.extern.slf4j.Slf4j;
import org.apache.kafka.clients.producer.RecordMetadata;
import org.springframework.kafka.support.SendResult;
import org.springframework.lang.Nullable;
import org.springframework.util.concurrent.ListenableFutureCallback;

// Logs the outcome of an asynchronous send. ListenableFutureCallback matches spring-kafka 2.x,
// where KafkaTemplate.send returns a ListenableFuture.
@Slf4j
public class ProducerCallback implements ListenableFutureCallback<SendResult<String, String>> {

    private final long startTime;
    private final String key;
    private final String message;

    public ProducerCallback(long startTime, String key, String message) {
        this.startTime = startTime;
        this.key = key;
        this.message = message;
    }

    @Override
    public void onSuccess(@Nullable SendResult<String, String> result) {
        if (result == null) {
            return;
        }
        long elapsedTime = System.currentTimeMillis() - startTime;
        RecordMetadata metadata = result.getRecordMetadata();
        if (metadata != null) {
            log.info("message(key = {}, message = {}) sent to partition({}) with offset({}) in {} ms",
                    key, message, metadata.partition(), metadata.offset(), elapsedTime);
        }
    }

    @Override
    public void onFailure(Throwable ex) {
        // Report through the logger (with stack trace) instead of printing to stderr
        log.error("failed to send message(key = {}, message = {})", key, message, ex);
    }
}
```
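ListenableFutureCallback is the spring-kafka 2.x style. In spring-kafka 3.x, KafkaTemplate.send returns a CompletableFuture instead, so the same success and failure handling moves into whenComplete. The stdlib-only sketch below shows that shape; fakeSend is a hypothetical stand-in for KafkaTemplate.send that returns a made-up offset rather than a real SendResult, and the class name is illustrative:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;

// Stdlib-only sketch of CompletableFuture-style send callbacks (the spring-kafka 3.x shape).
// fakeSend is HYPOTHETICAL: it stands in for KafkaTemplate.send and is not a Kafka API.
class CompletableCallbackSketch {

    // Pretend send: fails for an empty message, otherwise "succeeds" at a made-up offset.
    static CompletableFuture<Long> fakeSend(String key, String message) {
        if (message.isEmpty()) {
            CompletableFuture<Long> failed = new CompletableFuture<>();
            failed.completeExceptionally(new IllegalArgumentException("empty message"));
            return failed;
        }
        return CompletableFuture.completedFuture(42L);
    }

    // Same role as ProducerCallback: record the outcome once the future completes.
    static CompletableFuture<Long> sendWithLogging(String key, String message, List<String> log) {
        long startTime = System.currentTimeMillis();
        return fakeSend(key, message).whenComplete((offset, ex) -> {
            if (ex != null) {
                log.add("send failed: " + ex.getMessage());
            } else {
                long elapsed = System.currentTimeMillis() - startTime;
                log.add("message(key = " + key + ", message = " + message + ") sent with offset("
                        + offset + ") in " + elapsed + " ms");
            }
        });
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        sendWithLogging("key", "hello", log);
        sendWithLogging("key", "", log); // returned future is failed; only the log is inspected
        log.forEach(System.out::println);
    }
}
```

In real spring-kafka 3.x code the equivalent would be kafkaTemplate.send(record).whenComplete((result, ex) -> ...), where result is a SendResult carrying the RecordMetadata.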

```java
import org.apache.kafka.clients.producer.ProducerRecord;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.kafka.core.KafkaTemplate;
import org.springframework.kafka.support.SendResult;
import org.springframework.stereotype.Component;
import org.springframework.util.concurrent.ListenableFuture;

@Component
public class SimpleProducer {

    @Autowired
    @Qualifier("kafkaTemplate")
    private KafkaTemplate<String, String> kafkaTemplate;

    // Fire-and-forget send without a key
    public void send(String topic, String message) {
        kafkaTemplate.send(topic, message);
    }

    // Keyed send; ProducerCallback logs partition, offset and latency once the send completes
    public void send(String topic, String key, String entity) {
        ProducerRecord<String, String> record = new ProducerRecord<>(topic, key, entity);
        long startTime = System.currentTimeMillis();
        ListenableFuture<SendResult<String, String>> future = kafkaTemplate.send(record);
        future.addCallback(new ProducerCallback(startTime, key, entity));
    }
}
```

3. Consumer

```java
import lombok.extern.slf4j.Slf4j;
import org.springframework.kafka.annotation.KafkaListener;
import org.springframework.stereotype.Component;

@Slf4j
@Component
public class SimpleConsumer {

    // Listens on the topic named by kafka.topic.default, using the container factory from KafkaConsumerConfig
    @KafkaListener(topics = "${kafka.topic.default}", containerFactory = "kafkaListenerContainerFactory")
    public void receive(String message) {
        log.info(message);
    }
}
```

4. Controller

```java
import com.zy.kafkatest.producer.SimpleProducer;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Value;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestMethod;
import org.springframework.web.bind.annotation.RestController;

import java.util.HashMap;

@Slf4j
@RestController
@RequestMapping("/kafka")
public class ProduceController {

    @Autowired
    private SimpleProducer simpleProducer;

    @Value("${kafka.topic.default}")
    private String topic;

    // Simple liveness check: GET /kafka/hello
    @RequestMapping(value = "/hello", method = RequestMethod.GET, produces = {"application/json"})
    public String sendKafka() {
        return "200";
    }

    // POST /kafka/send with the message as the raw request body
    @RequestMapping(value = "/send", method = RequestMethod.POST, produces = {"application/json"})
    public HashMap<String, Object> sendKafka(@RequestBody String message) {
        HashMap<String, Object> modelMap = new HashMap<>();
        try {
            log.info("Kafka message = {}", message);
            // send() is asynchronous: reaching the next line only means the record was handed
            // to the producer; the actual result is logged by ProducerCallback
            simpleProducer.send(topic, "key", message);
            log.info("Sent to Kafka.");
            modelMap.put("Sent to Kafka", "success");
            return modelMap;
        } catch (Exception e) {
            log.error("Failed to send to Kafka", e);
            modelMap.put("Failed to send to Kafka", "fail");
            return modelMap;
        }
    }
}
```
