quick-start-guide

Preface

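This article follows the quick-start-guide section of the official Hudi documentation: https://hudi.apache.org/docs/quick-start-guide (following the documentation verbatim did not work for me; I ran into a few small pitfalls along the way).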

Strictly follow the jar version constraints:

| Hudi | Supported Spark 3 version |
|---|---|
| 0.10.0 | 3.1.x (default build), 3.0.x |
| 0.7.0 - 0.9.0 | 3.0.x |
| 0.6.0 and prior | not supported |
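The table above can be encoded as a small lookup so that a script fails fast on a mismatched pair. This is only a sketch: the names `supported_spark3_lines` and `is_supported` are made up for illustration, and treating a patch release such as 0.10.1 like 0.10.0 is an assumption that the table itself does not state.

```python
from typing import List


def supported_spark3_lines(hudi_version: str) -> List[str]:
    """Return the Spark 3 release lines listed in the table for a Hudi version.

    Assumption: patch releases (e.g. 0.10.1) follow their minor line (0.10.0).
    """
    major, minor = (int(part) for part in hudi_version.split(".")[:2])
    line = (major, minor)
    if line == (0, 10):
        return ["3.1", "3.0"]  # 3.1.x is the default build
    if (0, 7) <= line <= (0, 9):
        return ["3.0"]
    if line <= (0, 6):
        return []  # Spark 3 is not supported
    raise ValueError(f"Hudi {hudi_version} is not covered by the table")


def is_supported(hudi_version: str, spark_version: str) -> bool:
    """Check a concrete Spark version such as '3.1.2' against the table."""
    spark_line = ".".join(spark_version.split(".")[:2])
    return spark_line in supported_spark3_lines(hudi_version)


if __name__ == "__main__":
    # The combination used in this article: Hudi 0.10.1 on Spark 3.1.2.
    print(is_supported("0.10.1", "3.1.2"))
```

Versions newer than the ones in the table raise an error instead of guessing, because the table says nothing about them.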

Spark shell configuration

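This step follows the instructions on the official site. The only catch was that I had not downloaded the jars beforehand, which led to a minor pitfall.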
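The launch command used in this section can be assembled from the three version numbers, which makes it harder to mix up the Hudi bundle and the spark-avro package. The following is a minimal sketch; the function name `pyspark_hudi_command` is hypothetical, and the artifact naming pattern is copied only from the command used in this article (Hudi 0.10.1, Spark 3.1.2, Scala 2.12), so it is an assumption for any other release.

```python
import shlex
from typing import List


def pyspark_hudi_command(hudi_version: str = "0.10.1",
                         spark_version: str = "3.1.2",
                         scala_version: str = "2.12") -> List[str]:
    """Build the pyspark argument list with the Hudi bundle and spark-avro.

    Assumption: the bundle is named hudi-spark<spark>-bundle_<scala>, as in
    the command shown in this article; other releases may use other names.
    """
    packages = ",".join([
        f"org.apache.hudi:hudi-spark{spark_version}-bundle_{scala_version}:{hudi_version}",
        f"org.apache.spark:spark-avro_{scala_version}:{spark_version}",
    ])
    return [
        "pyspark",
        "--packages", packages,
        "--conf", "spark.serializer=org.apache.spark.serializer.KryoSerializer",
    ]


if __name__ == "__main__":
    # Print a shell-ready command line (shlex.join needs Python 3.8+).
    print(shlex.join(pyspark_hudi_command()))
```

Returning a list rather than a string keeps the arguments usable with `subprocess.run` without further quoting.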

Starting pyspark with the Hudi environment fails

ERROR INFO:

(venv) gavin@GavindeMacBook-Pro test % which python3.8

/Users/gavin/PycharmProjects/pythonProject/venv/bin/python3.8

(venv) gavin@GavindeMacBook-Pro test % export PYSPARK_PYTHON=$(which python3.8)

(venv) gavin@GavindeMacBook-Pro test % echo ${PYSPARK_PYTHON}

/Users/gavin/PycharmProjects/pythonProject/venv/bin/python3.8

(venv) gavin@GavindeMacBook-Pro test % pyspark --packages org.apache.hudi:hudi-spark3.1.2-bundle_2.12:0.10.1,org.apache.spark:spark-avro_2.12:3.1.2 --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer'

Python 3.8.9 (default, Oct 26 2021, 07:25:54)

[Clang 13.0.0 (clang-1300.0.29.30)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

:: loading settings :: url = jar:file:/Users/gavin/PycharmProjects/pythonProject/venv/lib/python3.8/site-packages/pyspark/jars/ivy-2.4.0.jar!/org/apache/ivy/core/settings/ivysettings.xml

Ivy Default Cache set to: /Users/gavin/.ivy2/cache

The jars for the packages stored in: /Users/gavin/.ivy2/jars

org.apache.hudi#hudi-spark3.1.2-bundle_2.12 added as a dependency

org.apache.spark#spark-avro_2.12 added as a dependency

:: resolving dependencies :: org.apache.spark#spark-submit-parent-311b5800-4168-498b-987f-f714233cf50c;1.0

confs: [default]

:: resolution report :: resolve 614199ms :: artifacts dl 0ms

:: modules in use:

---------------------------------------------------------------------

| | modules || artifacts |

| conf | number| search|dwnlded|evicted|| number|dwnlded|

---------------------------------------------------------------------

| default | 2 | 0 | 0 | 0 || 0 | 0 |

---------------------------------------------------------------------

:: problems summary ::

:::: WARNINGS

module not found: org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1

==== local-m2-cache: tried

file:/Users/gavin/.m2/repository/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.pom

-- artifact org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1!hudi-spark3.1.2-bundle_2.12.jar:

file:/Users/gavin/.m2/repository/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.jar

==== local-ivy-cache: tried

/Users/gavin/.ivy2/local/org.apache.hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/ivys/ivy.xml

-- artifact org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1!hudi-spark3.1.2-bundle_2.12.jar:

/Users/gavin/.ivy2/local/org.apache.hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/jars/hudi-spark3.1.2-bundle_2.12.jar

==== central: tried

https://repo1.maven.org/maven2/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.pom

-- artifact org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1!hudi-spark3.1.2-bundle_2.12.jar:

https://repo1.maven.org/maven2/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.jar

==== spark-packages: tried

https://repos.spark-packages.org/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.pom

-- artifact org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1!hudi-spark3.1.2-bundle_2.12.jar:

https://repos.spark-packages.org/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.jar

module not found: org.apache.spark#spark-avro_2.12;3.1.2

==== local-m2-cache: tried

file:/Users/gavin/.m2/repository/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.pom

-- artifact org.apache.spark#spark-avro_2.12;3.1.2!spark-avro_2.12.jar:

file:/Users/gavin/.m2/repository/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.jar

==== local-ivy-cache: tried

/Users/gavin/.ivy2/local/org.apache.spark/spark-avro_2.12/3.1.2/ivys/ivy.xml

-- artifact org.apache.spark#spark-avro_2.12;3.1.2!spark-avro_2.12.jar:

/Users/gavin/.ivy2/local/org.apache.spark/spark-avro_2.12/3.1.2/jars/spark-avro_2.12.jar

==== central: tried

https://repo1.maven.org/maven2/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.pom

-- artifact org.apache.spark#spark-avro_2.12;3.1.2!spark-avro_2.12.jar:

https://repo1.maven.org/maven2/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.jar

==== spark-packages: tried

https://repos.spark-packages.org/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.pom

-- artifact org.apache.spark#spark-avro_2.12;3.1.2!spark-avro_2.12.jar:

https://repos.spark-packages.org/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.jar

::::::::::::::::::::::::::::::::::::::::::::::

:: UNRESOLVED DEPENDENCIES ::

::::::::::::::::::::::::::::::::::::::::::::::

:: org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1: not found

:: org.apache.spark#spark-avro_2.12;3.1.2: not found

::::::::::::::::::::::::::::::::::::::::::::::

:::: ERRORS

Server access error at url https://repo1.maven.org/maven2/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.pom (java.net.ConnectException: Operation timed out (Connection timed out))

Server access error at url https://repo1.maven.org/maven2/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.jar (java.net.ConnectException: Operation timed out (Connection timed out))

Server access error at url https://repos.spark-packages.org/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.pom (java.net.ConnectException: Operation timed out (Connection timed out))

Server access error at url https://repos.spark-packages.org/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.jar (java.net.ConnectException: Operation timed out (Connection timed out))

Server access error at url https://repo1.maven.org/maven2/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.pom (java.net.ConnectException: Operation timed out (Connection timed out))

Server access error at url https://repo1.maven.org/maven2/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.jar (java.net.ConnectException: Operation timed out (Connection timed out))

Server access error at url https://repos.spark-packages.org/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.pom (java.net.ConnectException: Operation timed out (Connection timed out))

Server access error at url https://repos.spark-packages.org/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.jar (java.net.ConnectException: Operation timed out (Connection timed out))

:: USE VERBOSE OR DEBUG MESSAGE LEVEL FOR MORE DETAILS

Exception in thread "main" java.lang.RuntimeException: [unresolved dependency: org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1: not found, unresolved dependency: org.apache.spark#spark-avro_2.12;3.1.2: not found]

at org.apache.spark.deploy.SparkSubmitUtils$.resolveMavenCoordinates(SparkSubmit.scala:1429)

at org.apache.spark.deploy.DependencyUtils$.resolveMavenDependencies(DependencyUtils.scala:54)

at org.apache.spark.deploy.SparkSubmit.prepareSubmitEnvironment(SparkSubmit.scala:308)

at org.apache.spark.deploy.SparkSubmit.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:894)

at org.apache.spark.deploy.SparkSubmit.doRunMain$1(SparkSubmit.scala:180)

at org.apache.spark.deploy.SparkSubmit.submit(SparkSubmit.scala:203)

at org.apache.spark.deploy.SparkSubmit.doSubmit(SparkSubmit.scala:90)

at org.apache.spark.deploy.SparkSubmit$$anon$2.doSubmit(SparkSubmit.scala:1039)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:1048)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

Traceback (most recent call last):

File "/Users/gavin/PycharmProjects/pythonProject/venv/lib/python3.8/site-packages/pyspark/python/pyspark/shell.py", line 35, in <module>

SparkContext._ensure_initialized() # type: ignore

File "/Users/gavin/PycharmProjects/pythonProject/venv/lib/python3.8/site-packages/pyspark/context.py", line 331, in _ensure_initialized

SparkContext._gateway = gateway or launch_gateway(conf)

File "/Users/gavin/PycharmProjects/pythonProject/venv/lib/python3.8/site-packages/pyspark/java_gateway.py", line 108, in launch_gateway

raise Exception("Java gateway process exited before sending its port number")

Exception: Java gateway process exited before sending its port number

>>> exit()

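The log shows that the resolver also looks for the jars in the local Maven repository, so I used Maven to download the two required jars (the matching Spark version is 3.1.2):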

<dependency>

<groupId>org.apache.hudi</groupId>

<artifactId>hudi-spark3.1.2-bundle_2.12</artifactId>

<version>0.10.1</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-avro_2.12</artifactId>

<version>3.1.2</version>

</dependency>

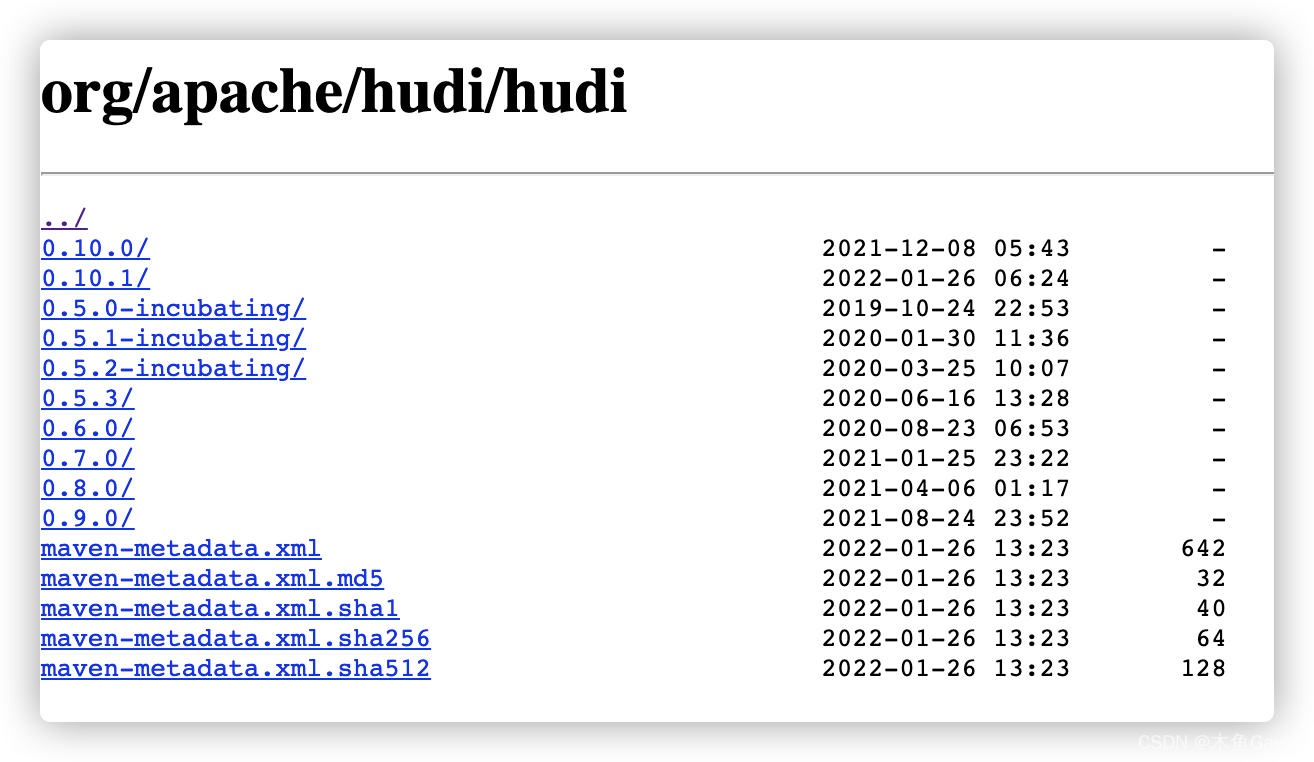
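The coordinates in the dependency entries above can be read straight out of the failed startup log. The sketch below extracts them from the `unresolved dependency` entries; the function name `unresolved_coordinates` and the regular expression are illustrative assumptions based only on the log format shown in this article.

```python
import re
from typing import List

# Matches entries such as
# "unresolved dependency: org.apache.spark#spark-avro_2.12;3.1.2: not found"
_UNRESOLVED = re.compile(
    r"unresolved dependency: ([\w.\-]+)#([\w.\-]+);([\w.\-]+): not found"
)


def unresolved_coordinates(log_text: str) -> List[str]:
    """Return group:artifact:version for each unresolved dependency, in order."""
    seen: List[str] = []
    for group, artifact, version in _UNRESOLVED.findall(log_text):
        coordinate = f"{group}:{artifact}:{version}"
        if coordinate not in seen:  # the same entry can appear more than once
            seen.append(coordinate)
    return seen


if __name__ == "__main__":
    # The exception line from the failed startup in this article.
    line = ('Exception in thread "main" java.lang.RuntimeException: '
            "[unresolved dependency: org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1: not found, "
            "unresolved dependency: org.apache.spark#spark-avro_2.12;3.1.2: not found]")
    for coordinate in unresolved_coordinates(line):
        print(coordinate)
```

Each printed coordinate maps one-to-one onto the groupId, artifactId, and version of a Maven dependency entry.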

You can also download them directly from the website:

Hudi jar download:

https://repo1.maven.org/maven2/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/

https://repo1.maven.org/maven2/org/apache/hudi/hudi/

Once Maven has finished downloading the jars, you can see them in the local repository: /Users/gavin/.m2/repository/org/apache/hudi/…
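Before retrying pyspark, it can help to confirm that both jars really are in the local repository. The sketch below builds the expected file path from Maven coordinates, using the same layout that the `local-m2-cache` lines of the error log print; the function name `local_m2_jar` is hypothetical, and the default repository location `~/.m2/repository` is an assumption that holds only if Maven has not been configured otherwise.

```python
from pathlib import Path


def local_m2_jar(group: str, artifact: str, version: str,
                 repo: Path = Path.home() / ".m2" / "repository") -> Path:
    """Return where a jar is expected inside a local Maven repository.

    Layout: <repo>/<group with dots as directories>/<artifact>/<version>/<artifact>-<version>.jar
    """
    return repo.joinpath(*group.split("."), artifact, version,
                         f"{artifact}-{version}.jar")


if __name__ == "__main__":
    # The two packages required in this article.
    required = [
        ("org.apache.hudi", "hudi-spark3.1.2-bundle_2.12", "0.10.1"),
        ("org.apache.spark", "spark-avro_2.12", "3.1.2"),
    ]
    for group, artifact, version in required:
        jar = local_m2_jar(group, artifact, version)
        status = "found" if jar.is_file() else "missing"
        print(f"{status}: {jar}")
```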

pyspark with Hudi starts successfully

(venv) gavin@GavindeMacBook-Pro test % pyspark --packages org.apache.hudi:hudi-spark3.1.2-bundle_2.12:0.10.1,org.apache.spark:spark-avro_2.12:3.1.2 --conf 'spark.serializer=org.apache.spark.serializer.KryoSerializer'

Python 3.8.9 (default, Oct 26 2021, 07:25:54)

[Clang 13.0.0 (clang-1300.0.29.30)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

22/03/01 10:20:28 WARN Utils: Your hostname, GavindeMacBook-Pro.local resolves to a loopback address: 127.0.0.1; using 192.168.24.227 instead (on interface en0)

22/03/01 10:20:28 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

:: loading settings :: url = jar:file:/Users/gavin/PycharmProjects/pythonProject/venv/lib/python3.8/site-packages/pyspark/jars/ivy-2.4.0.jar!/org/apache/ivy/core/settings/ivysettings.xml

Ivy Default Cache set to: /Users/gavin/.ivy2/cache

The jars for the packages stored in: /Users/gavin/.ivy2/jars

org.apache.hudi#hudi-spark3.1.2-bundle_2.12 added as a dependency

org.apache.spark#spark-avro_2.12 added as a dependency

:: resolving dependencies :: org.apache.spark#spark-submit-parent-9a87dae7-3c6a-4133-838b-c7050b1d8b89;1.0

confs: [default]

found org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1 in local-m2-cache

found org.apache.spark#spark-avro_2.12;3.1.2 in local-m2-cache

found org.spark-project.spark#unused;1.0.0 in local-m2-cache

downloading file:/Users/gavin/.m2/repository/org/apache/hudi/hudi-spark3.1.2-bundle_2.12/0.10.1/hudi-spark3.1.2-bundle_2.12-0.10.1.jar ...

[SUCCESSFUL ] org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1!hudi-spark3.1.2-bundle_2.12.jar (54ms)

downloading file:/Users/gavin/.m2/repository/org/apache/spark/spark-avro_2.12/3.1.2/spark-avro_2.12-3.1.2.jar ...

[SUCCESSFUL ] org.apache.spark#spark-avro_2.12;3.1.2!spark-avro_2.12.jar (2ms)

downloading file:/Users/gavin/.m2/repository/org/spark-project/spark/unused/1.0.0/unused-1.0.0.jar ...

[SUCCESSFUL ] org.spark-project.spark#unused;1.0.0!unused.jar (2ms)

:: resolution report :: resolve 6622ms :: artifacts dl 62ms

:: modules in use:

org.apache.hudi#hudi-spark3.1.2-bundle_2.12;0.10.1 from local-m2-cache in [default]

org.apache.spark#spark-avro_2.12;3.1.2 from local-m2-cache in [default]

org.spark-project.spark#unused;1.0.0 from local-m2-cache in [default]

---------------------------------------------------------------------

| | modules || artifacts |

| conf | number| search|dwnlded|evicted|| number|dwnlded|

---------------------------------------------------------------------

| default | 3 | 3 | 3 | 0 || 3 | 3 |

---------------------------------------------------------------------

:: retrieving :: org.apache.spark#spark-submit-parent-9a87dae7-3c6a-4133-838b-c7050b1d8b89

confs: [default]

3 artifacts copied, 0 already retrieved (38092kB/67ms)

22/03/01 10:20:35 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Welcome to

____ __

/ __/__ ___ _____/ /__

_\ \/ _ \/ _ `/ __/ '_/

/__ / .__/\_,_/_/ /_/\_\ version 3.1.2

/_/

Using Python version 3.8.9 (default, Oct 26 2021 07:25:54)

Spark context Web UI available at http://192.168.24.227:4040

Spark context available as 'sc' (master = local[*], app id = local-1646101237379).

SparkSession available as 'spark'.

>>>

Code approach (IDEA)

Inserting data (the table is created if it does not exist; upsert)

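This example demonstrates an "upsert" write, that is, "update the record if a matching one exists, otherwise insert it". Which write operation is used is controlled by the parameter "hoodie.datasource.write.operation"; see https://hudi.apache.org/docs/configurations for a description of the parameter.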
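Since the write operation is just one entry in the options dictionary, it can be turned into a function parameter. The sketch below is an illustration, not part of the official guide: the helper name `hudi_write_options` is hypothetical, and the keys and field names (`uuid`, `partitionpath`, `ts`) are taken from this article's write example. Other operation values such as `insert` or `bulk_insert` should be checked against the configurations page for the Hudi release in use.

```python
from typing import Dict


def hudi_write_options(table_name: str, operation: str = "upsert") -> Dict[str, object]:
    """Build the Hudi write options used in this article for a given operation."""
    return {
        'hoodie.table.name': table_name,
        'hoodie.datasource.write.recordkey.field': 'uuid',
        'hoodie.datasource.write.partitionpath.field': 'partitionpath',
        'hoodie.datasource.write.table.name': table_name,
        # This single entry decides how incoming rows are written.
        'hoodie.datasource.write.operation': operation,
        'hoodie.datasource.write.precombine.field': 'ts',
        'hoodie.upsert.shuffle.parallelism': 2,
        'hoodie.insert.shuffle.parallelism': 2,
    }


if __name__ == "__main__":
    options = hudi_write_options("hudi_trips_cow")
    print(options['hoodie.datasource.write.operation'])
```

The resulting dictionary can be passed to a DataFrame writer with `options(**hudi_write_options(...))`.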

Based on the official documentation (https://hudi.apache.org/docs/quick-start-guide), we get the following code:

import pyspark

if __name__ == '__main__':
    builder = pyspark.sql.SparkSession.builder.appName("MyApp") \
        .config("spark.jars", "/Users/gavin/.ivy2/cache/org.apache.hudi/hudi-spark3.1.2-bundle_2.12/jars/hudi-spark3.1.2-bundle_2.12-0.10.1.jar,"
                              "/Users/gavin/.ivy2/cache/org.apache.spark/spark-avro_2.12/jars/spark-avro_2.12-3.1.2.jar") \
        .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

    spark = builder.getOrCreate()
    sc = spark.sparkContext

    # pyspark
    tableName = "hudi_trips_cow"
    basePath = "file:///tmp/hudi_trips_cow"
    dataGen = sc._jvm.org.apache.hudi.QuickstartUtils.DataGenerator()

    # pyspark
    inserts = sc._jvm.org.apache.hudi.QuickstartUtils.convertToStringList(dataGen.generateInserts(10))
    df = spark.read.json(spark.sparkContext.parallelize(inserts, 2))

    hudi_options = {
        'hoodie.table.name': tableName,
        'hoodie.datasource.write.recordkey.field': 'uuid',
        'hoodie.datasource.write.partitionpath.field': 'partitionpath',
        'hoodie.datasource.write.table.name': tableName,
        'hoodie.datasource.write.operation': 'upsert',
        'hoodie.datasource.write.precombine.field': 'ts',
        'hoodie.upsert.shuffle.parallelism': 2,
        'hoodie.insert.shuffle.parallelism': 2
    }

    df.write.format("hudi"). \
        options(**hudi_options). \
        mode("overwrite"). \
        save(basePath)

PS: the inserted rows are sample data generated by "org.apache.hudi.QuickstartUtils.DataGenerator()" (this is what the official code does; see the query section for the actual data).

Running the code produces the following directory structure (a textbook partitioned-table layout):

gavin@GavindeMacBook-Pro apache % tree /tmp/hudi_trips_cow
/tmp/hudi_trips_cow
├── americas
│   ├── brazil
│   │   └── sao_paulo
│   │       └── 6f82f351-9994-459d-a20c-77baa91ad323-0_0-27-31_20220301105108074.parquet
│   └── united_states
│       └── san_francisco
│           └── 52a5ee08-9376-4954-bb8f-f7f519b8b40e-0_1-33-32_20220301105108074.parquet
└── asia
    └── india
        └── chennai
            └── 2f5b659d-3738-48ca-b590-bbce52e98642-0_2-33-33_20220301105108074.parquet

8 directories, 3 files
gavin@GavindeMacBook-Pro apache %
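The same layout can be inspected from Python without the `tree` command. The sketch below walks a table's base directory and groups the parquet files by partition path; the function name `partitions_with_files` is hypothetical, and it assumes the table lives on the local filesystem (as with `/tmp/hudi_trips_cow` here) and that data files end in `.parquet`.

```python
from pathlib import Path
from typing import Dict, List


def partitions_with_files(base_path: str) -> Dict[str, List[str]]:
    """Map each partition path (relative to base_path) to its parquet file names."""
    base = Path(base_path)
    result: Dict[str, List[str]] = {}
    for parquet in sorted(base.rglob("*.parquet")):
        relative = parquet.relative_to(base)
        if ".hoodie" in relative.parts:
            continue  # skip anything under the metadata directory
        partition = relative.parent.as_posix()
        result.setdefault(partition, []).append(parquet.name)
    return result


if __name__ == "__main__":
    # Local path of the table written in this article (without the file:// scheme).
    for partition, files in partitions_with_files("/tmp/hudi_trips_cow").items():
        print(partition, len(files))
```

For the table above, the keys would be the three partition paths, such as `americas/brazil/sao_paulo`, which are the values of the `partitionpath` field.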

Extras

Besides the basic data files, Hudi also keeps a hidden metadata directory, ".hoodie"; its contents will be covered separately:

gavin@GavindeMacBook-Pro hudi_trips_cow % ll -a

total 0

drwxr-xr-x 5 gavin wheel 160 Mar 1 10:51 .

drwxrwxrwt 10 root wheel 320 Mar 1 11:26 ..

drwxr-xr-x 13 gavin wheel 416 Mar 1 10:51 .hoodie

drwxr-xr-x 4 gavin wheel 128 Mar 1 10:51 americas

drwxr-xr-x 3 gavin wheel 96 Mar 1 10:51 asia

gavin@GavindeMacBook-Pro hudi_trips_cow % tree .hoodie

.hoodie

├── 20220301105108074.commit

├── 20220301105108074.commit.requested

├── 20220301105108074.inflight

├── archived

└── hoodie.properties
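The file names in `.hoodie` start with the same 17-digit value that appears in the parquet file names and in the `_hoodie_commit_time` column. The sketch below splits such a name into a timestamp and the remaining suffix. It is only an illustration: the function name `parse_timeline_file` is hypothetical, and the `yyyyMMddHHmmssSSS` reading of the digits is an assumption inferred from the names shown in this article.

```python
from datetime import datetime
from typing import Tuple


def parse_timeline_file(name: str) -> Tuple[datetime, str]:
    """Split '<17-digit instant>.<suffix>' into (timestamp, suffix).

    Assumption: the instant is yyyyMMddHHmmssSSS (millisecond precision).
    """
    instant, _, suffix = name.partition(".")
    if len(instant) != 17 or not instant.isdigit():
        raise ValueError(f"not a timeline file name: {name}")
    moment = datetime.strptime(instant[:14], "%Y%m%d%H%M%S")
    # The last three digits are milliseconds; datetime stores microseconds.
    moment = moment.replace(microsecond=int(instant[14:]) * 1000)
    return moment, suffix


if __name__ == "__main__":
    for name in ["20220301105108074.commit",
                 "20220301105108074.commit.requested",
                 "20220301105108074.inflight"]:
        moment, suffix = parse_timeline_file(name)
        print(moment.isoformat(timespec="milliseconds"), suffix)
```

Names that do not start with such a value, for example `hoodie.properties`, raise a `ValueError` and can simply be skipped by the caller.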

Querying data (the current version of the data)

Query code

import pyspark

if __name__ == '__main__':
    builder = pyspark.sql.SparkSession.builder.appName("MyApp") \
        .config("spark.jars",
                "/Users/gavin/.ivy2/cache/org.apache.hudi/hudi-spark3.1.2-bundle_2.12/jars/hudi-spark3.1.2-bundle_2.12-0.10.1.jar,"
                "/Users/gavin/.ivy2/cache/org.apache.spark/spark-avro_2.12/jars/spark-avro_2.12-3.1.2.jar") \
        .config("spark.serializer", "org.apache.spark.serializer.KryoSerializer")

    spark = builder.getOrCreate()
    sc = spark.sparkContext

    basePath = "file:///tmp/hudi_trips_cow"

    # pyspark
    tripsSnapshotDF = spark. \
        read. \
        format("hudi"). \
        load(basePath)
    # load(basePath) use "/partitionKey=partitionValue" folder structure for Spark auto partition discovery

    count = tripsSnapshotDF.count()
    # Prints the total number of rows in the table (message text is in Chinese)
    print(f'========hudi_trips_snapshot 表中共计[{count}]条数据')
    # Prints "the table schema is as follows:" before the schema
    print('表结构如下:')
    tripsSnapshotDF.printSchema()
    tripsSnapshotDF.show()

    # Register a temp view so the snapshot can be queried with SQL
    tripsSnapshotDF.createOrReplaceTempView("hudi_trips_snapshot")
    spark.sql("select fare, begin_lon, begin_lat, ts from hudi_trips_snapshot where fare > 20.0").show()
    spark.sql(
        "select _hoodie_commit_time, _hoodie_record_key, _hoodie_partition_path, rider, driver, fare from hudi_trips_snapshot").show()

Query results

/Users/gavin/PycharmProjects/pythonProject/venv/bin/python /Users/gavin/PycharmProjects/pythonProject/venv/spark/hudi/basic_query.py

22/03/01 11:18:26 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

22/03/01 11:18:27 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

========hudi_trips_snapshot 表中共计[10]条数据

表结构如下:

root

|-- _hoodie_commit_time: string (nullable = true)

|-- _hoodie_commit_seqno: string (nullable = true)

|-- _hoodie_record_key: string (nullable = true)

|-- _hoodie_partition_path: string (nullable = true)

|-- _hoodie_file_name: string (nullable = true)

|-- begin_lat: double (nullable = true)

|-- begin_lon: double (nullable = true)

|-- driver: string (nullable = true)

|-- end_lat: double (nullable = true)

|-- end_lon: double (nullable = true)

|-- fare: double (nullable = true)

|-- rider: string (nullable = true)

|-- ts: long (nullable = true)

|-- uuid: string (nullable = true)

|-- partitionpath: string (nullable = true)

+-------------------+--------------------+--------------------+----------------------+--------------------+-------------------+-------------------+----------+-------------------+-------------------+------------------+---------+-------------+--------------------+--------------------+

|_hoodie_commit_time|_hoodie_commit_seqno| _hoodie_record_key|_hoodie_partition_path| _hoodie_file_name| begin_lat| begin_lon| driver| end_lat| end_lon| fare| rider| ts| uuid| partitionpath|

+-------------------+--------------------+--------------------+----------------------+--------------------+-------------------+-------------------+----------+-------------------+-------------------+------------------+---------+-------------+--------------------+--------------------+

| 20220301105108074|20220301105108074...|c4340e2c-efd2-4a9...| americas/united_s...|52a5ee08-9376-495...|0.21624150367601136|0.14285051259466197|driver-213| 0.5890949624813784| 0.0966823831927115| 93.56018115236618|rider-213|1645736908516|c4340e2c-efd2-4a9...|americas/united_s...|

| 20220301105108074|20220301105108074...|67ee90ec-d7b8-477...| americas/united_s...|52a5ee08-9376-495...| 0.5731835407930634| 0.4923479652912024|driver-213|0.08988581780930216|0.42520899698713666| 64.27696295884016|rider-213|1645565690012|67ee90ec-d7b8-477...|americas/united_s...|

| 20220301105108074|20220301105108074...|91703076-f580-49f...| americas/united_s...|52a5ee08-9376-495...|0.11488393157088261| 0.6273212202489661|driver-213| 0.7454678537511295| 0.3954939864908973| 27.79478688582596|rider-213|1646031306513|91703076-f580-49f...|americas/united_s...|

| 20220301105108074|20220301105108074...|96a7571e-1e54-4bc...| americas/united_s...|52a5ee08-9376-495...| 0.8742041526408587| 0.7528268153249502|driver-213| 0.9197827128888302| 0.362464770874404|19.179139106643607|rider-213|1645796169470|96a7571e-1e54-4bc...|americas/united_s...|

| 20220301105108074|20220301105108074...|3723b4ac-8841-4cd...| americas/united_s...|52a5ee08-9376-495...| 0.1856488085068272| 0.9694586417848392|driver-213|0.38186367037201974|0.25252652214479043| 33.92216483948643|rider-213|1646085368961|3723b4ac-8841-4cd...|americas/united_s...|

| 20220301105108074|20220301105108074...|b3bf0b93-768d-4be...| americas/brazil/s...|6f82f351-9994-459...| 0.6100070562136587| 0.8779402295427752|driver-213| 0.3407870505929602| 0.5030798142293655| 43.4923811219014|rider-213|1645868768394|b3bf0b93-768d-4be...|americas/brazil/s...|

| 20220301105108074|20220301105108074...|7e195e8d-c6df-4fd...| americas/brazil/s...|6f82f351-9994-459...| 0.4726905879569653|0.46157858450465483|driver-213| 0.754803407008858| 0.9671159942018241|34.158284716382845|rider-213|1645602479789|7e195e8d-c6df-4fd...|americas/brazil/s...|

| 20220301105108074|20220301105108074...|3409ecd2-02c2-40c...| americas/brazil/s...|6f82f351-9994-459...| 0.0750588760043035|0.03844104444445928|driver-213|0.04376353354538354| 0.6346040067610669| 66.62084366450246|rider-213|1645621352954|3409ecd2-02c2-40c...|americas/brazil/s...|

| 20220301105108074|20220301105108074...|60903a00-3fdc-45d...| asia/india/chennai|2f5b659d-3738-48c...| 0.651058505660742| 0.8192868687714224|driver-213|0.20714896002914462|0.06224031095826987| 41.06290929046368|rider-213|1645503078006|60903a00-3fdc-45d...| asia/india/chennai|

| 20220301105108074|20220301105108074...|22d1507b-7d02-402...| asia/india/chennai|2f5b659d-3738-48c...| 0.40613510977307| 0.5644092139040959|driver-213| 0.798706304941517|0.02698359227182834|17.851135255091155|rider-213|1645948641664|22d1507b-7d02-402...| asia/india/chennai|

+-------------------+--------------------+--------------------+----------------------+--------------------+

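This document explains in detail how to work with data in Apache Hudi, including configuring Hudi in a pyspark environment; inserting, querying, and updating data; time-travel queries; incremental queries; deleting data; and handling common errors. It uses examples to demonstrate Hudi's upsert and append modes and includes an FAQ.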
