Reposted from: https://blog.csdn.net/hutongling/article/details/77053920

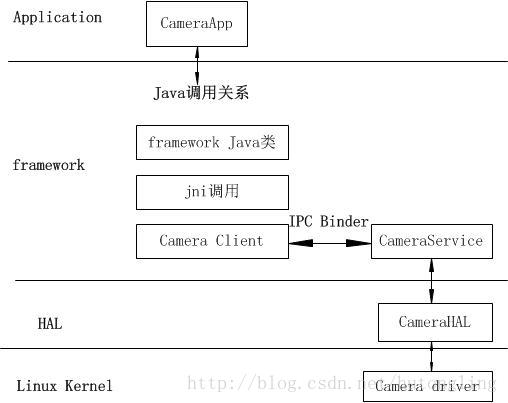

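From top to bottom, the Android camera stack is divided into four layers:

Application layer

Framework layer

HAL (Hardware Abstraction Layer)

Kernel layer

Application developers mostly work in the Application and Framework layers. Vendors that need their own customizations modify the HAL layer to integrate them. Ordinary developers rarely touch the Kernel layer.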

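A simplified diagram of the camera framework:

The APP layer calls the framework layer's Java classes. The framework layer contains more than Java classes: it also includes the JNI calls and the camera client, which obtains the lower-level service over Binder IPC before the call enters the HAL layer.

Within the framework layer, the Java framework calls the Native framework through JNI. The Native side acts as the client and only exposes the calling interface to the application above it, while the actual work is implemented by the server (CameraService). Client and server communicate through Binder. Taken on its own, the camera's C/S architecture looks like this: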
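The client/server split described above can be sketched in plain Java. This is only an analogy under stated assumptions: no real Binder or JNI is involved, and `CameraServiceSketch`, `LocalCameraService`, `CameraClientSketch` and the package name `com.example.app` are hypothetical names invented for the illustration. The point is that the client only forwards calls through an interface while the service owns the actual logic.

```java
import java.util.HashMap;
import java.util.Map;

class ClientServerDemo {
    // Wires a client to a service and opens camera "0" through the interface.
    static String run() {
        CameraServiceSketch service = new LocalCameraService();      // "server" side
        CameraClientSketch client = new CameraClientSketch(service); // "client" side
        return client.open("0");
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}

// Hypothetical service contract; in the real stack this boundary is crossed via Binder.
interface CameraServiceSketch {
    String connectDevice(String cameraId, String packageName);
}

// Hypothetical server: it owns the state and the actual connection logic.
class LocalCameraService implements CameraServiceSketch {
    private final Map<String, String> owners = new HashMap<>();

    @Override
    public String connectDevice(String cameraId, String packageName) {
        owners.put(cameraId, packageName); // remember which package holds the camera
        return "device-" + cameraId;       // stand-in for a device handle
    }
}

// Hypothetical client: it exposes a calling interface and forwards to the service.
class CameraClientSketch {
    private final CameraServiceSketch service;

    CameraClientSketch(CameraServiceSketch service) {
        this.service = service;
    }

    String open(String cameraId) {
        return service.connectDevice(cameraId, "com.example.app");
    }
}
```

Because the client depends only on the interface, the implementation behind it can live in another process, which is the role Binder plays in the real framework.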

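For the Android camera there are three key operations: initialize, preview, and takePicture.

The Android source I downloaded is version 7.1.1, which is fairly recent, so this analysis may differ from write-ups based on older versions.

The analysis starts from the Application layer.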

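1. Camera initialization

Camera2 initialization differs from Camera 1.0. This article uses the built-in Camera2 app to analyze the Camera 2.0 initialization process. The first thing started is CameraActivity, which extends QuickActivity. You will notice that CameraActivity does not override onCreate or the other lifecycle methods. That is because the template method pattern is used: QuickActivity's onCreate calls onCreateTasks (and likewise for the other lifecycle methods), so to follow onCreate you only need to read onCreateTasks.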
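The template method arrangement can be sketched as follows. This is a minimal illustration, not the Camera2 source: `BaseActivitySketch` and `CameraActivitySketch` are hypothetical stand-ins for QuickActivity and CameraActivity, and the `log` list exists only so the call order can be observed.

```java
import java.util.ArrayList;
import java.util.List;

class TemplateMethodDemo {
    // Creates the subclass, drives the lifecycle entry point, returns the call order.
    static List<String> run() {
        CameraActivitySketch activity = new CameraActivitySketch();
        activity.onCreate();
        return activity.log;
    }

    public static void main(String[] args) {
        System.out.println(run());
    }
}

// Hypothetical stand-in for QuickActivity: owns the lifecycle entry point.
abstract class BaseActivitySketch {
    protected final List<String> log = new ArrayList<>();

    // The template method: final in this sketch, so subclasses cannot reorder the steps.
    public final void onCreate() {
        log.add("base:onCreate");
        onCreateTasks();
    }

    // The hook that subclasses fill in.
    protected abstract void onCreateTasks();
}

// Hypothetical stand-in for CameraActivity: overrides only the hook.
class CameraActivitySketch extends BaseActivitySketch {
    @Override
    protected void onCreateTasks() {
        log.add("camera:onCreateTasks");
    }
}
```

The base class fixes when the hook runs, so reading the subclass's hook is enough to understand what happens at creation time.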

CameraActivity.java is located under packages/apps/Camera2/src/com/android/camera/

//CameraActivity.java

@Override

public void onCreateTasks(Bundle state) {

Profile profile = mProfiler.create("CameraActivity.onCreateTasks").start();

…

try {

//① Initialize the mOneCameraOpener and mOneCameraManager objects

mOneCameraOpener = OneCameraModule.provideOneCameraOpener(

mFeatureConfig, mAppContext,mActiveCameraDeviceTracker,

ResolutionUtil.getDisplayMetrics(this));

mOneCameraManager = OneCameraModule.provideOneCameraManager();

} catch (OneCameraException e) {...}

…

//② Set up the module information

ModulesInfo.setupModules(mAppContext, mModuleManager, mFeatureConfig);

…

//③ Initialize the current module

mCurrentModule.init(this, isSecureCamera(), isCaptureIntent());

…

}

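The code above breaks down into three steps:

① Initialize the mOneCameraOpener and mOneCameraManager objects

② Set up the module information

③ Initialize the current module

(1) Step one: initialize the OneCameraOpener and OneCameraManager objects. Initializing the camera requires opening it and then managing the opened camera, so mOneCameraOpener and mOneCameraManager are initialized here. Both objects are created in OneCameraModule.java.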

OneCameraModule.java is located under packages/apps/Camera2/src/com/android/camera/one/

//OneCameraModule.java

public static OneCameraOpener provideOneCameraOpener(OneCameraFeatureConfig featureConfig,Context context,ActiveCameraDeviceTracker activeCameraDeviceTracker,DisplayMetrics displayMetrics) throws OneCameraException {

Optional<OneCameraOpener> manager = Camera2OneCameraOpenerImpl.create(

featureConfig, context, activeCameraDeviceTracker, displayMetrics);

...

return manager.get();

}

public static OneCameraManager provideOneCameraManager() throws OneCameraException {

Optional<Camera2OneCameraManagerImpl> camera2HwManager = Camera2OneCameraManagerImpl.create();

if (camera2HwManager.isPresent()) {

return camera2HwManager.get();

}

...

}

It calls Camera2OneCameraOpenerImpl.create() to obtain a OneCameraOpener object, and Camera2OneCameraManagerImpl.create() to obtain a OneCameraManager object.

//Camera2OneCameraOpenerImpl.java

public static Optional<OneCameraOpener> create(OneCameraFeatureConfig featureConfig,Context context,ActiveCameraDeviceTracker activeCameraDeviceTracker,DisplayMetrics displayMetrics) {

...

OneCameraOpener oneCameraOpener = new Camera2OneCameraOpenerImpl(featureConfig,context,cameraManager,activeCameraDeviceTracker,displayMetrics);

return Optional.of(oneCameraOpener);

}

//Camera2OneCameraManagerImpl.java

public static Optional<Camera2OneCameraManagerImpl> create() {

...

Camera2OneCameraManagerImpl hardwareManager =

new Camera2OneCameraManagerImpl(cameraManager);

return Optional.of(hardwareManager);

}

(2) Set up the module information, i.e. ModulesInfo.setupModules(mAppContext, mModuleManager, mFeatureConfig);. This step matters because it registers a series of modules according to the configuration:

//ModulesInfo.java

public static void setupModules(Context context, ModuleManager moduleManager,OneCameraFeatureConfig config) {

...

//Register the Photo module

registerPhotoModule(moduleManager, photoModuleId, SettingsScopeNamespaces.PHOTO,config.isUsingCaptureModule());

//Make it the default module

moduleManager.setDefaultModuleIndex(photoModuleId);

//Register the Video module

registerVideoModule(moduleManager, res.getInteger(R.integer.camera_mode_video),SettingsScopeNamespaces.VIDEO);

if (PhotoSphereHelper.hasLightCycleCapture(context)) {//If LightCycle capture is available

//Register the wide-angle (panorama) module

registerWideAngleModule(moduleManager, res.getInteger(

R.integer.camera_mode_panorama),SettingsScopeNamespaces

.PANORAMA);

//Register the Photo Sphere module

registerPhotoSphereModule(moduleManager,res.getInteger(

R.integer.camera_mode_photosphere),

SettingsScopeNamespaces.PANORAMA);

}

//If refocus capture is available

if (RefocusHelper.hasRefocusCapture(context)) {

//Register the refocus module

registerRefocusModule(moduleManager, res.getInteger(

R.integer.camera_mode_refocus),

SettingsScopeNamespaces.REFOCUS);

}

//If Gcam (HDR+) is available as a separate module

if (GcamHelper.hasGcamAsSeparateModule(config)) {

//Register the Gcam module

registerGcamModule(moduleManager, res.getInteger(

R.integer.camera_mode_gcam),SettingsScopeNamespaces.PHOTO,

config.getHdrPlusSupportLevel(OneCamera.Facing.BACK));

}

int imageCaptureIntentModuleId = res.getInteger(

R.integer.camera_mode_capture_intent);

registerCaptureIntentModule(moduleManager,

imageCaptureIntentModuleId,SettingsScopeNamespaces.PHOTO,

config.isUsingCaptureModule());

}

The Camera app can take both photos and videos. Only the registration of the photo module is analyzed here.

private static void registerPhotoModule(ModuleManager moduleManager, final int moduleId,

final String namespace, final boolean enableCaptureModule) {

moduleManager.registerModule(new ModuleManager.ModuleAgent() {

...

public ModuleController createModule(AppController app, Intent intent) {

Log.v(TAG, "EnableCaptureModule = " + enableCaptureModule);

return enableCaptureModule ? new CaptureModule(app) : new PhotoModule(app);

}

});

}

As the code shows, the key is the ModuleAgent passed to moduleManager.registerModule. Its createModule method evaluates enableCaptureModule ? new CaptureModule(app) : new PhotoModule(app) to decide whether a CaptureModule or a PhotoModule is created for the photo mode.
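The registration idea can be sketched in isolation: the registry stores a factory per module id and only builds the module when asked. This is a simplified illustration, assuming a much smaller interface than the real ModuleManager. All `*Sketch` types and the id value `0` are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

class ModuleRegistryDemo {
    // Registers one factory for the photo mode and asks the registry to create it.
    static String run(boolean enableCaptureModule) {
        ModuleRegistrySketch registry = new ModuleRegistrySketch();
        final int photoModuleId = 0; // hypothetical id, not the real resource value
        registry.register(photoModuleId,
                () -> enableCaptureModule ? new CaptureModuleSketch() : new PhotoModuleSketch());
        return registry.create(photoModuleId).name();
    }

    public static void main(String[] args) {
        System.out.println(run(true));
        System.out.println(run(false));
    }
}

interface ModuleSketch {
    String name();
}

class CaptureModuleSketch implements ModuleSketch {
    public String name() { return "CaptureModule"; }
}

class PhotoModuleSketch implements ModuleSketch {
    public String name() { return "PhotoModule"; }
}

// Hypothetical counterpart of ModuleAgent: a factory for one module.
interface ModuleAgentSketch {
    ModuleSketch createModule();
}

// Hypothetical counterpart of ModuleManager: maps module ids to factories.
class ModuleRegistrySketch {
    private final Map<Integer, ModuleAgentSketch> agents = new HashMap<>();

    void register(int moduleId, ModuleAgentSketch agent) {
        if (agents.containsKey(moduleId)) {
            throw new IllegalArgumentException("Module already registered: " + moduleId);
        }
        agents.put(moduleId, agent);
    }

    ModuleSketch create(int moduleId) {
        ModuleAgentSketch agent = agents.get(moduleId);
        if (agent == null) {
            throw new IllegalStateException("No module registered for id " + moduleId);
        }
        return agent.createModule(); // the module is built lazily, on demand
    }
}
```

Registering factories rather than instances means no module is constructed until the user actually switches to it.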

(3) Initialize: mCurrentModule.init();

//CaptureModule.java

public void init(CameraActivity activity, boolean isSecureCamera, boolean

isCaptureIntent) {

...

HandlerThread thread = new HandlerThread("CaptureModule.mCameraHandler");

thread.start();

mCameraHandler = new Handler(thread.getLooper());

//Get the OneCameraOpener object created in step one

mOneCameraOpener = mAppController.getCameraOpener();

...

//Get the OneCameraManager object

mOneCameraManager = OneCameraModule.provideOneCameraManager();

...

//Create the CaptureModule UI

mUI = new CaptureModuleUI(activity, mAppController.

getModuleLayoutRoot(), mUIListener);

//Set the preview status listener

mAppController.setPreviewStatusListener(mPreviewStatusListener);

synchronized (mSurfaceTextureLock) {

//Get the SurfaceTexture

mPreviewSurfaceTexture = mAppController.getCameraAppUI()

.getSurfaceTexture();

}

}

init first obtains the OneCameraOpener and OneCameraManager objects and then installs the preview status listener. The listener is the part worth analyzing:

//CaptureModule.java

private final PreviewStatusListener mPreviewStatusListener = new

PreviewStatusListener() {

...

public void onSurfaceTextureAvailable(SurfaceTexture surface,

int width, int height) {

updatePreviewTransform(width, height, true);

synchronized (mSurfaceTextureLock) {

mPreviewSurfaceTexture = surface;

}

//Open the camera

reopenCamera();

}

public boolean onSurfaceTextureDestroyed(SurfaceTexture surface) {

Log.d(TAG, "onSurfaceTextureDestroyed");

synchronized (mSurfaceTextureLock) {

mPreviewSurfaceTexture = null;

}

//Close the camera

closeCamera();

return true;

}

public void onSurfaceTextureSizeChanged(SurfaceTexture surface,

int width, int height) {

//Update the preview buffer size

updatePreviewBufferSize();

}

...

};

When the SurfaceTexture becomes available, reopenCamera() is called to open the camera. Next, reopenCamera():

private void reopenCamera() {

if (mPaused) {

return;

}

AsyncTask.THREAD_POOL_EXECUTOR.execute(new Runnable() {

@Override

public void run() {

closeCamera();

if(!mAppController.isPaused()) {

//Open the camera and start the preview

openCameraAndStartPreview();

}

}

});

}

Next, openCameraAndStartPreview():

//CaptureModule.java

private void openCameraAndStartPreview() {

...

if (mOneCameraOpener == null) {

...

//Release the CameraOpenCloseLock

mCameraOpenCloseLock.release();

mAppController.getFatalErrorHandler().onGenericCameraAccessFailure();

guard.stop("No OneCameraManager");

return;

}

// Derive objects necessary for camera creation.

MainThread mainThread = MainThread.create();

//Find the CameraId to open

CameraId cameraId = mOneCameraManager.findFirstCameraFacing(mCameraFacing);

...

//Open the camera

mOneCameraOpener.open(cameraId, captureSetting, mCameraHandler,

mainThread, imageRotationCalculator, mBurstController,

mSoundPlayer,new OpenCallback() {

public void onFailure() {

//Handle the failure

...

}

@Override

public void onCameraClosed() {

...

}

@Override

public void onCameraOpened(@Nonnull final OneCamera camera) {

Log.d(TAG, "onCameraOpened: " + camera);

mCamera = camera;

if (mAppController.isPaused()) {

onFailure();

return;

}

...

mMainThread.execute(new Runnable() {

@Override

public void run() {

//Notify the UI that the camera has changed

mAppController.getCameraAppUI().onChangeCamera();

//Enable the camera button

mAppController.getButtonManager().enableCameraButton();

}

});

//The camera is now open; start the preview

camera.startPreview(new Surface(getPreviewSurfaceTexture()),

new CaptureReadyCallback() {

@Override

public void onSetupFailed() {

...

}

@Override

public void onReadyForCapture() {

//Release the lock

mCameraOpenCloseLock.release();

mMainThread.execute(new Runnable() {

@Override

public void run() {

...

onPreviewStarted();

...

onReadyStateChanged(true);

//Register CaptureModule as the ready-state listener

mCamera.setReadyStateChangedListener(

CaptureModule.this);

mUI.initializeZoom(mCamera.getMaxZoom());

mCamera.setFocusStateListener(

CaptureModule.this);

}

});

}

});

}

}, mAppController.getFatalErrorHandler());

guard.stop("mOneCameraOpener.open()");

}

}

This mainly calls Camera2OneCameraOpenerImpl.open() to open the camera and defines the callbacks that handle the result. On failure it releases mCameraOpenCloseLock and pauses mAppController. On success it notifies the UI and starts the camera preview (camera.startPreview() is called inside the OpenCallback), passing a CaptureReadyCallback that defines the preview callbacks: onSetupFailed for a failed setup and onReadyForCapture once the camera is ready to capture. Next, the open() call itself:
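The lock-and-callback shape of this flow can be sketched as below. It is a deliberately simplified model, assuming a synchronous opener so that the result is deterministic. The real code runs asynchronously on handler threads. `CameraOpenerSketch` and `OpenCallbackSketch` are hypothetical names, and using a `Semaphore` for the open/close lock is an assumption of this sketch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Semaphore;

class OpenFlowDemo {
    // Acquires the open/close lock, opens the camera, and releases the lock on either outcome.
    static List<String> run(boolean openSucceeds) {
        List<String> events = new ArrayList<>();
        Semaphore openCloseLock = new Semaphore(1);
        CameraOpenerSketch opener = new CameraOpenerSketch(openSucceeds);

        if (!openCloseLock.tryAcquire()) {
            events.add("busy"); // another open or close is still in flight
            return events;
        }
        opener.open(new OpenCallbackSketch() {
            @Override
            public void onCameraOpened(String camera) {
                events.add("opened:" + camera);
                events.add("previewStarted"); // stands in for camera.startPreview(...)
                openCloseLock.release();      // released once the preview is ready
            }

            @Override
            public void onFailure() {
                events.add("failed");
                openCloseLock.release();      // released on failure as well
            }
        });
        events.add("permits:" + openCloseLock.availablePermits());
        return events;
    }

    public static void main(String[] args) {
        System.out.println(run(true));
        System.out.println(run(false));
    }
}

// Hypothetical callback contract, reduced to the two outcomes discussed above.
interface OpenCallbackSketch {
    void onCameraOpened(String camera);
    void onFailure();
}

// Hypothetical opener that reports its result through the callback.
class CameraOpenerSketch {
    private final boolean succeed;

    CameraOpenerSketch(boolean succeed) {
        this.succeed = succeed;
    }

    void open(OpenCallbackSketch callback) {
        if (succeed) {
            callback.onCameraOpened("camera0");
        } else {
            callback.onFailure();
        }
    }
}
```

Whichever callback fires, the lock ends up released, so a later open or close attempt is not blocked by an earlier failure.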

//Camera2OneCameraOpenerImpl.java

public void open(

...

mActiveCameraDeviceTracker.onCameraOpening(cameraKey);

//Open the camera: this calls openCamera on the framework's CameraManager class and enters the framework layer

mCameraManager.openCamera(cameraKey.getValue(),

new CameraDevice.StateCallback() {

private boolean isFirstCallback = true;

...

public void onOpened(CameraDevice device) {

//Only handle the first invocation of this callback

if (isFirstCallback) {

isFirstCallback = false;

try {

CameraCharacteristics characteristics = mCameraManager

.getCameraCharacteristics(device.getId());

...

//Create the OneCamera object

OneCamera oneCamera = OneCameraCreator.create(device,

characteristics, mFeatureConfig, captureSetting,

mDisplayMetrics, mContext, mainThread,

imageRotationCalculator, burstController, soundPlayer,

fatalErrorHandler);

if (oneCamera != null) {

//If oneCamera is not null, invoke onCameraOpened (analyzed later)

openCallback.onCameraOpened(oneCamera);

} else {

...

openCallback.onFailure();

}

} catch (CameraAccessException e) {

openCallback.onFailure();

} catch (OneCameraAccessException e) {

Log.d(TAG, "Could not create OneCamera", e);

openCallback.onFailure();

}

}

}

}, handler);

...

}

open() then calls CameraManager.openCamera to open the camera, which moves initialization into the framework layer.

(CameraManager is the bridge between the App layer and the framework layer.)

Sequence diagram of App-layer initialization:

2. Framework-layer initialization

CameraManager.openCamera, called above, is the entry point into the framework layer. Here is the openCamera method:

//CameraManager.java

public void openCamera(@NonNull String cameraId,

@NonNull final CameraDevice.StateCallback callback, @Nullable Handler handler)

throws CameraAccessException {

openCameraForUid(cameraId, callback, handler, USE_CALLING_UID);

}

openCamera calls openCameraForUid:

//CameraManager.java

public void openCameraForUid(@NonNull String cameraId,

@NonNull final CameraDevice.StateCallback callback, @Nullable Handler handler,

int clientUid)

throws CameraAccessException {

...

openCameraDeviceUserAsync(cameraId, callback, handler, clientUid);

}

openCameraForUid in turn calls openCameraDeviceUserAsync:

private CameraDevice openCameraDeviceUserAsync(String cameraId,

CameraDevice.StateCallback callback, Handler handler, final int uid)

throws CameraAccessException {

CameraCharacteristics characteristics = getCameraCharacteristics(cameraId);

CameraDevice device = null;

synchronized (mLock) {

ICameraDeviceUser cameraUser = null;

android.hardware.camera2.impl.CameraDeviceImpl deviceImpl =

new android.hardware.camera2.impl.CameraDeviceImpl(

cameraId,

callback,

handler,

characteristics);

ICameraDeviceCallbacks callbacks = deviceImpl.getCallbacks();

...

try {

if (supportsCamera2ApiLocked(cameraId)) {

ICameraService cameraService = CameraManagerGlobal.get().getCameraService();

...

cameraUser = cameraService.connectDevice(callbacks, id,

mContext.getOpPackageName(), uid);

} else {

// Use legacy camera implementation for HAL1 devices

Log.i(TAG, "Using legacy camera HAL.");

cameraUser = CameraDeviceUserShim.connectBinderShim(callbacks, id);

}

} catch (ServiceSpecificException e) {

...

} catch (RemoteException e) {

...

}

...

deviceImpl.setRemoteDevice(cameraUser);

device = deviceImpl;

}

return device;

}

The most important job of this method is to obtain CameraService through Binder and then connect to the specific device through it: cameraUser = cameraService.connectDevice(callbacks, id, mContext.getOpPackageName(), uid);

//CameraService.cpp

Status CameraService::connectDevice(

const sp<hardware::camera2::ICameraDeviceCallbacks>& cameraCb,

int cameraId,

const String16& clientPackageName,

int clientUid,

/*out*/

sp<hardware::camera2::ICameraDeviceUser>* device) {

ATRACE_CALL();

Status ret = Status::ok();

String8 id = String8::format("%d", cameraId);

sp<CameraDeviceClient> client = nullptr;

ret = connectHelper<hardware::camera2::ICameraDeviceCallbacks,CameraDeviceClient>(cameraCb, id,

CAMERA_HAL_API_VERSION_UNSPECIFIED, clientPackageName,

clientUid, USE_CALLING_PID, API_2,

/*legacyMode*/ false, /*shimUpdateOnly*/ false,

/*out*/client);

if(!ret.isOk()) {

logRejected(id, getCallingPid(), String8(clientPackageName),

ret.toString8());

return ret;

}

*device = client;

return ret;

}

connectDevice calls connectHelper:

//CameraService.h

template<class CALLBACK, class CLIENT>

binder::Status CameraService::connectHelper(const sp<CALLBACK>& cameraCb, const String8& cameraId,

int halVersion, const String16& clientPackageName, int clientUid, int clientPid,

apiLevel effectiveApiLevel, bool legacyMode, bool shimUpdateOnly,

/*out*/sp<CLIENT>& device) {

binder::Status ret = binder::Status::ok();

String8 clientName8(clientPackageName);

int originalClientPid = 0;

ALOGI("CameraService::connect call (PID %d \"%s\", camera ID %s) for HAL version %s and "

"Camera API version %d", clientPid, clientName8.string(), cameraId.string(),

(halVersion == -1) ? "default" : std::to_string(halVersion).c_str(),

static_cast<int>(effectiveApiLevel));

sp<CLIENT> client = nullptr;

{

// Acquire mServiceLock and prevent other clients from connecting

std::unique_ptr<AutoConditionLock> lock =

AutoConditionLock::waitAndAcquire(mServiceLockWrapper, DEFAULT_CONNECT_TIMEOUT_NS);

if (lock == nullptr) {

ALOGE("CameraService::connect (PID %d) rejected (too many other clients connecting)."

, clientPid);

return STATUS_ERROR_FMT(ERROR_MAX_CAMERAS_IN_USE,

"Cannot open camera %s for \"%s\" (PID %d): Too many other clients connecting",

cameraId.string(), clientName8.string(), clientPid);

}

// Enforce client permissions and do basic sanity checks

if(!(ret = validateConnectLocked(cameraId, clientName8,

/*inout*/clientUid, /*inout*/clientPid, /*out*/originalClientPid)).isOk()) {

return ret;

}

// Check the shim parameters after acquiring lock, if they have already been updated and

// we were doing a shim update, return immediately

if (shimUpdateOnly) {

auto cameraState = getCameraState(cameraId);

if (cameraState != nullptr) {

if (!cameraState->getShimParams().isEmpty()) return ret;

}

}

status_t err;

sp<BasicClient> clientTmp = nullptr;

std::shared_ptr<resource_policy::ClientDescriptor<String8, sp<BasicClient>>> partial;

if ((err = handleEvictionsLocked(cameraId, originalClientPid, effectiveApiLevel,

IInterface::asBinder(cameraCb), clientName8, /*out*/&clientTmp,

/*out*/&partial)) != NO_ERROR) {

switch (err) {

case -ENODEV:

return STATUS_ERROR_FMT(ERROR_DISCONNECTED,

"No camera device with ID \"%s\" currently available",

cameraId.string());

case -EBUSY:

return STATUS_ERROR_FMT(ERROR_CAMERA_IN_USE,

"Higher-priority client using camera, ID \"%s\" currently unavailable",

cameraId.string());

default:

return STATUS_ERROR_FMT(ERROR_INVALID_OPERATION,

"Unexpected error %s (%d) opening camera \"%s\"",

strerror(-err), err, cameraId.string());

}

}

if (clientTmp.get() != nullptr) {

// Handle special case for API1 MediaRecorder where the existing client is returned

device = static_cast<CLIENT*>(clientTmp.get());

return ret;

}

// give flashlight a chance to close devices if necessary.

mFlashlight->prepareDeviceOpen(cameraId);

// TODO: Update getDeviceVersion + HAL interface to use strings for Camera IDs

int id = cameraIdToInt(cameraId);

if (id == -1) {

ALOGE("%s: Invalid camera ID %s, cannot get device version from HAL.", __FUNCTION__,

cameraId.string());

return STATUS_ERROR_FMT(ERROR_ILLEGAL_ARGUMENT,

"Bad camera ID \"%s\" passed to camera open", cameraId.string());

}

int facing = -1;

int deviceVersion = getDeviceVersion(id, /*out*/&facing);

sp<BasicClient> tmp = nullptr;

if(!(ret = makeClient(this, cameraCb, clientPackageName, id, facing, clientPid,

clientUid, getpid(), legacyMode, halVersion, deviceVersion, effectiveApiLevel,

/*out*/&tmp)).isOk()) {

return ret;

}

client = static_cast<CLIENT*>(tmp.get());

LOG_ALWAYS_FATAL_IF(client.get() == nullptr, "%s: CameraService in invalid state",

__FUNCTION__);

if ((err = client->initialize(mModule)) != OK) {

ALOGE("%s: Could not initialize client from HAL module.", __FUNCTION__);

// Errors could be from the HAL module open call or from AppOpsManager

switch(err) {

case BAD_VALUE:

return STATUS_ERROR_FMT(ERROR_ILLEGAL_ARGUMENT,

"Illegal argument to HAL module for camera \"%s\"", cameraId.string());

case -EBUSY:

return STATUS_ERROR_FMT(ERROR_CAMERA_IN_USE,

"Camera \"%s\" is already open", cameraId.string());

case -EUSERS:

return STATUS_ERROR_FMT(ERROR_MAX_CAMERAS_IN_USE,

"Too many cameras already open, cannot open camera \"%s\"",

cameraId.string());

case PERMISSION_DENIED:

return STATUS_ERROR_FMT(ERROR_PERMISSION_DENIED,

"No permission to open camera \"%s\"", cameraId.string());

case -EACCES:

return STATUS_ERROR_FMT(ERROR_DISABLED,

"Camera \"%s\" disabled by policy", cameraId.string());

case -ENODEV:

default:

return STATUS_ERROR_FMT(ERROR_INVALID_OPERATION,

"Failed to initialize camera \"%s\": %s (%d)", cameraId.string(),

strerror(-err), err);

}

}

// Update shim parameters for legacy clients

if (effectiveApiLevel == API_1) {

// Assume we have always received a Client subclass for API1

sp<Client> shimClient = reinterpret_cast<Client*>(client.get());

String8 rawParams = shimClient->getParameters();

CameraParameters params(rawParams);

auto cameraState = getCameraState(cameraId);

if (cameraState != nullptr) {

cameraState->setShimParams(params);

} else {

ALOGE("%s: Cannot update shim parameters for camera %s, no such device exists.",

__FUNCTION__, cameraId.string());

}

}

if (shimUpdateOnly) {

// If only updating legacy shim parameters, immediately disconnect client

mServiceLock.unlock();

client->disconnect();

mServiceLock.lock();

} else {

// Otherwise, add client to active clients list

finishConnectLocked(client, partial);

}

} // lock is destroyed, allow further connect calls

// Important: release the mutex here so the client can call back into the service from its

// destructor (can be at the end of the call)

device = client;

return ret;

}

connectHelper calls makeClient:

//CameraService.cpp

Status CameraService::makeClient(const sp<CameraService>& cameraService,

const sp<IInterface>& cameraCb, const String16& packageName, int cameraId,

int facing, int clientPid, uid_t clientUid, int servicePid, bool legacyMode,

int halVersion, int deviceVersion, apiLevel effectiveApiLevel,

/*out*/sp<BasicClient>* client) {

if (halVersion < 0 || halVersion == deviceVersion) {

// Default path: HAL version is unspecified by caller, create CameraClient

// based on device version reported by the HAL.

switch(deviceVersion) {

case CAMERA_DEVICE_API_VERSION_1_0:

if (effectiveApiLevel == API_1) { // Camera1 API route

sp<ICameraClient> tmp = static_cast<ICameraClient*>(cameraCb.get());

*client = new CameraClient(cameraService, tmp, packageName, cameraId, facing,

clientPid, clientUid, getpid(), legacyMode);

} else { // Camera2 API route

ALOGW("Camera using old HAL version: %d", deviceVersion);

return STATUS_ERROR_FMT(ERROR_DEPRECATED_HAL,

"Camera device \"%d\" HAL version %d does not support camera2 API",

cameraId, deviceVersion);

}

break;

case CAMERA_DEVICE_API_VERSION_3_0:

case CAMERA_DEVICE_API_VERSION_3_1:

case CAMERA_DEVICE_API_VERSION_3_2:

case CAMERA_DEVICE_API_VERSION_3_3:

case CAMERA_DEVICE_API_VERSION_3_4:

if (effectiveApiLevel == API_1) { // Camera1 API route

sp<ICameraClient> tmp = static_cast<ICameraClient*>(cameraCb.get());

*client = new Camera2Client(cameraService, tmp, packageName, cameraId, facing,

clientPid, clientUid, servicePid, legacyMode);

} else { // Camera2 API route

sp<hardware::camera2::ICameraDeviceCallbacks> tmp =

static_cast<hardware::camera2::ICameraDeviceCallbacks*>(cameraCb.get());

*client = new CameraDeviceClient(cameraService, tmp, packageName, cameraId,

facing, clientPid, clientUid, servicePid);

}

break;

default:

// Should not be reachable

ALOGE("Unknown camera device HAL version: %d", deviceVersion);

return STATUS_ERROR_FMT(ERROR_INVALID_OPERATION,

"Camera device \"%d\" has unknown HAL version %d",

cameraId, deviceVersion);

}

} else {

// A particular HAL version is requested by caller. Create CameraClient

// based on the requested HAL version.

if (deviceVersion > CAMERA_DEVICE_API_VERSION_1_0 &&

halVersion == CAMERA_DEVICE_API_VERSION_1_0) {

// Only support higher HAL version device opened as HAL1.0 device.

sp<ICameraClient> tmp = static_cast<ICameraClient*>(cameraCb.get());

*client = new CameraClient(cameraService, tmp, packageName, cameraId, facing,

clientPid, clientUid, servicePid, legacyMode);

} else {

// Other combinations (e.g. HAL3.x open as HAL2.x) are not supported yet.

ALOGE("Invalid camera HAL version %x: HAL %x device can only be"

" opened as HAL %x device", halVersion, deviceVersion,

CAMERA_DEVICE_API_VERSION_1_0);

return STATUS_ERROR_FMT(ERROR_ILLEGAL_ARGUMENT,

"Camera device \"%d\" (HAL version %d) cannot be opened as HAL version %d",

cameraId, deviceVersion, halVersion);

}

}

return Status::ok();

}

Because Camera API 2.0 is in use, makeClient creates client = new CameraDeviceClient();

After creation, CameraDeviceClient's initialize method must be called (covered below).
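The selection logic in makeClient can be condensed into a small decision function. The sketch below follows the branches of the listing above but returns names instead of constructing clients. The numeric version constants are illustrative values chosen for the sketch, and `chooseClient` and `ApiLevel` are hypothetical names.

```java
class MakeClientDemo {
    // Illustrative version codes; treat the exact numbers as assumptions of this sketch.
    static final int HAL_1_0 = 0x100;
    static final int HAL_3_0 = 0x300;
    static final int HAL_3_2 = 0x302;
    static final int HAL_3_4 = 0x304;
    static final int UNSPECIFIED = -1;

    enum ApiLevel { API_1, API_2 }

    // Returns the client class name the listing would pick, or the error it would report.
    static String chooseClient(int halVersion, int deviceVersion, ApiLevel api) {
        if (halVersion < 0 || halVersion == deviceVersion) {
            // Default path: the caller did not request a specific HAL version.
            if (deviceVersion == HAL_1_0) {
                return api == ApiLevel.API_1 ? "CameraClient" : "ERROR_DEPRECATED_HAL";
            }
            if (deviceVersion >= HAL_3_0 && deviceVersion <= HAL_3_4) {
                return api == ApiLevel.API_1 ? "Camera2Client" : "CameraDeviceClient";
            }
            return "ERROR_INVALID_OPERATION"; // unknown device version
        }
        // A specific HAL version was requested: only "open a newer device as HAL 1.0" is allowed.
        if (deviceVersion > HAL_1_0 && halVersion == HAL_1_0) {
            return "CameraClient";
        }
        return "ERROR_ILLEGAL_ARGUMENT";
    }

    public static void main(String[] args) {
        System.out.println(chooseClient(UNSPECIFIED, HAL_3_2, ApiLevel.API_2));
        System.out.println(chooseClient(UNSPECIFIED, HAL_3_2, ApiLevel.API_1));
        System.out.println(chooseClient(UNSPECIFIED, HAL_1_0, ApiLevel.API_2));
    }
}
```

With an unspecified HAL version, a 3.x device and the camera2 API, the function lands on CameraDeviceClient, which is the path this article follows.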

//CameraDeviceClient.cpp

CameraDeviceClient::CameraDeviceClient(const sp<CameraService>& cameraService,

const sp<hardware::camera2::ICameraDeviceCallbacks>& remoteCallback,

const String16& clientPackageName,

int cameraId,

int cameraFacing,

int clientPid,

uid_t clientUid,

int servicePid) :

Camera2ClientBase(cameraService, remoteCallback, clientPackageName,

cameraId, cameraFacing, clientPid, clientUid, servicePid),

mInputStream(),

mStreamingRequestId(REQUEST_ID_NONE),

mRequestIdCounter(0) {

ATRACE_CALL();

ALOGI("CameraDeviceClient %d: Opened", cameraId);

}

The constructor calls the Camera2ClientBase constructor, which performs its own initialization:

template <typename TClientBase>

Camera2ClientBase<TClientBase>::Camera2ClientBase(

const sp<CameraService>& cameraService,

const sp<TCamCallbacks>& remoteCallback,

const String16& clientPackageName,

int cameraId,

int cameraFacing,

int clientPid,

uid_t clientUid,

int servicePid):

TClientBase(cameraService, remoteCallback, clientPackageName,

cameraId, cameraFacing, clientPid, clientUid, servicePid),

mSharedCameraCallbacks(remoteCallback),

mDeviceVersion(cameraService->getDeviceVersion(cameraId)),

mDeviceActive(false)

{

ALOGI("Camera %d: Opened. Client: %s (PID %d, UID %d)", cameraId,

String8(clientPackageName).string(), clientPid, clientUid);

mInitialClientPid = clientPid;

mDevice = new Camera3Device(cameraId);

LOG_ALWAYS_FATAL_IF(mDevice == 0, "Device should never be NULL here.");

}

It creates mDevice = new Camera3Device();

Camera3Device::Camera3Device(int id):

mId(id),

mIsConstrainedHighSpeedConfiguration(false),

mHal3Device(NULL),

mStatus(STATUS_UNINITIALIZED),

mStatusWaiters(0),

mUsePartialResult(false),

mNumPartialResults(1),

mTimestampOffset(0),

mNextResultFrameNumber(0),

mNextReprocessResultFrameNumber(0),

mNextShutterFrameNumber(0),

mNextReprocessShutterFrameNumber(0),

mListener(NULL)

{

ATRACE_CALL();

camera3_callback_ops::notify = &sNotify;

camera3_callback_ops::process_capture_result = &sProcessCaptureResult;

ALOGV("%s: Created device for camera %d", __FUNCTION__, id);

}

After the CameraDeviceClient object is created, initialize is called on it:

status_t CameraDeviceClient::initialize(CameraModule *module)

{

ATRACE_CALL();

status_t res;

res = Camera2ClientBase::initialize(module);

if (res != OK) {

return res;

}

String8 threadName;

mFrameProcessor = new FrameProcessorBase(mDevice);

threadName = String8::format("CDU-%d-FrameProc", mCameraId);

mFrameProcessor->run(threadName.string());

mFrameProcessor->registerListener(FRAME_PROCESSOR_LISTENER_MIN_ID,

FRAME_PROCESSOR_LISTENER_MAX_ID,

/*listener*/this,

/*sendPartials*/true);

return OK;

}

This in turn calls Camera2ClientBase::initialize(module):

template <typename TClientBase>

status_t Camera2ClientBase<TClientBase>::initialize(CameraModule *module) {

ATRACE_CALL();

ALOGV("%s: Initializing client for camera %d", __FUNCTION__,

TClientBase::mCameraId);

status_t res;

// Verify ops permissions

res = TClientBase::startCameraOps();

if (res != OK) {

return res;

}

if (mDevice == NULL) {

ALOGE("%s: Camera %d: No device connected",

__FUNCTION__, TClientBase::mCameraId);

return NO_INIT;

}

res = mDevice->initialize(module);

if (res != OK) {

ALOGE("%s: Camera %d: unable to initialize device: %s (%d)",

__FUNCTION__, TClientBase::mCameraId, strerror(-res), res);

return res;

}

wp<CameraDeviceBase::NotificationListener> weakThis(this);

res = mDevice->setNotifyCallback(weakThis);

return OK;

}

Camera2ClientBase::initialize in turn calls Camera3Device::initialize:
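The delegation chain (client, then client base, then device) with early return on error can be sketched in Java as follows. The listings in this section are C++; this is only a structural analogy. `DeviceClientSketch`, `ClientBaseSketch` and `DeviceSketch` are hypothetical names, and the status codes are illustrative values.

```java
class InitChainDemo {
    static final int OK = 0;
    static final int NO_INIT = -19; // illustrative error code for "no device connected"

    // Builds a client with or without a device and returns the initialization status.
    static int run(boolean withDevice) {
        DeviceClientSketch client =
                new DeviceClientSketch(withDevice ? new DeviceSketch() : null);
        return client.initialize();
    }

    public static void main(String[] args) {
        System.out.println(run(true));
        System.out.println(run(false));
    }
}

// Hypothetical stand-in for the device object at the bottom of the chain.
class DeviceSketch {
    boolean initialized;

    int initialize() {
        initialized = true;
        return InitChainDemo.OK;
    }
}

// Hypothetical stand-in for the client base: validates, then delegates to the device.
class ClientBaseSketch {
    protected final DeviceSketch device;

    ClientBaseSketch(DeviceSketch device) {
        this.device = device;
    }

    int initialize() {
        if (device == null) {
            return InitChainDemo.NO_INIT;
        }
        return device.initialize();
    }
}

// Hypothetical stand-in for the concrete client: base first, then its own setup.
class DeviceClientSketch extends ClientBaseSketch {
    boolean frameProcessorStarted;

    DeviceClientSketch(DeviceSketch device) {
        super(device);
    }

    @Override
    int initialize() {
        int res = super.initialize();
        if (res != InitChainDemo.OK) {
            return res; // propagate the first error unchanged
        }
        frameProcessorStarted = true; // stands in for starting the frame processor thread
        return InitChainDemo.OK;
    }
}
```

Each level does its own work only after the level below has succeeded, so a failure deep in the chain surfaces at the top with the original status code.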

status_t Camera3Device::initialize(CameraModule *module)

{

ATRACE_CALL();

Mutex::Autolock il(mInterfaceLock);

Mutex::Autolock l(mLock);

ALOGV("%s: Initializing device for camera %d", __FUNCTION__, mId);

if (mStatus != STATUS_UNINITIALIZED) {

CLOGE("Already initialized!");

return INVALID_OPERATION;

}

/** Open HAL device */

status_t res;

String8 deviceName = String8::format("%d", mId);

camera3_device_t *device;

ATRACE_BEGIN("camera3->open");

res = module->open(deviceName.string(),

reinterpret_cast<hw_device_t**>(&device));

ATRACE_END();

if (res != OK) {

SET_ERR_L("Could not open camera: %s (%d)", strerror(-res), res);

return res;

}

/** Cross-check device version */

if (device->common.version < CAMERA_DEVICE_API_VERSION_3_0) {

SET_ERR_L("Could not open camera: "

"Camera device should be at least %x, reports %x instead",

CAMERA_DEVICE_API_VERSION_3_0,

device->common.version);

device->common.close(&device->common);

return BAD_VALUE;

}

camera_info info;

res = module->getCameraInfo(mId, &info);

if (res != OK) return res;

if (info.device_version != device->common.version) {

SET_ERR_L("HAL reporting mismatched camera_info version (%x)"

" and device version (%x).",

info.device_version, device->common.version);

device->common.close(&device->common);

return BAD_VALUE;

}

/** Initialize device with callback functions */

ATRACE_BEGIN("camera3->initialize");

res = device->ops->initialize(device, this);

ATRACE_END();

if (res != OK) {

SET_ERR_L("Unable to initialize HAL device: %s (%d)",

strerror(-res), res);

device->common.close(&device->common);

return BAD_VALUE;

}

/** Start up status tracker thread */

mStatusTracker = new StatusTracker(this);

res = mStatusTracker->run(String8::format("C3Dev-%d-Status", mId).string());

if (res != OK) {

SET_ERR_L("Unable to start status tracking thread: %s (%d)",

strerror(-res), res);

device->common.close(&device->common);

mStatusTracker.clear();

return res;

}

/** Register in-flight map to the status tracker */

mInFlightStatusId = mStatusTracker->addComponent();

/** Create buffer manager */

mBufferManager = new Camera3BufferManager();

bool aeLockAvailable = false;

camera_metadata_ro_entry aeLockAvailableEntry;

res = find_camera_metadata_ro_entry(info.static_camera_characteristics,

ANDROID_CONTROL_AE_LOCK_AVAILABLE, &aeLockAvailableEntry);

if (res == OK && aeLockAvailableEntry.count > 0) {

aeLockAvailable = (aeLockAvailableEntry.data.u8[0] ==

ANDROID_CONTROL_AE_LOCK_AVAILABLE_TRUE);

}

/** Start up request queue thread */

mRequestThread = new RequestThread(this, mStatusTracker, device, aeLockAvailable);

res = mRequestThread->run(String8::format("C3Dev-%d-ReqQueue", mId).string());

if (res != OK) {

SET_ERR_L("Unable to start request queue thread: %s (%d)",

strerror(-res), res);

device->common.close(&device->common);

mRequestThread.clear();

return res;

}

mPreparerThread = new PreparerThread();

/** Everything is good to go */

mDeviceVersion = device->common.version;

mDeviceInfo = info.static_camera_characteristics;

mHal3Device = device;

// Determine whether we need to derive sensitivity boost values for older devices.

// If post-RAW sensitivity boost range is listed, so should post-raw sensitivity control

// be listed (as the default value 100)

if (mDeviceVersion < CAMERA_DEVICE_API_VERSION_3_4 &&

mDeviceInfo.exists(ANDROID_CONTROL_POST_RAW_SENSITIVITY_BOOST_RANGE)) {

mDerivePostRawSensKey = true;

}

internalUpdateStatusLocked(STATUS_UNCONFIGURED);

mNextStreamId = 0;

mDummyStreamId = NO_STREAM;

mNeedConfig = true;

mPauseStateNotify = false;

// Measure the clock domain offset between camera and video/hw_composer

camera_metadata_entry timestampSource =

mDeviceInfo.find(ANDROID_SENSOR_INFO_TIMESTAMP_SOURCE);

if (timestampSource.count > 0 && timestampSource.data.u8[0] ==

ANDROID_SENSOR_INFO_TIMESTAMP_SOURCE_REALTIME) {

mTimestampOffset = getMonoToBoottimeOffset();

}

// Will the HAL be sending in early partial result metadata?

if (mDeviceVersion >= CAMERA_DEVICE_API_VERSION_3_2) {

camera_metadata_entry partialResultsCount =

mDeviceInfo.find(ANDROID_REQUEST_PARTIAL_RESULT_COUNT);

if (partialResultsCount.count > 0) {

mNumPartialResults = partialResultsCount.data.i32[0];

mUsePartialResult = (mNumPartialResults > 1);

}

} else {

camera_metadata_entry partialResultsQuirk =

mDeviceInfo.find(ANDROID_QUIRKS_USE_PARTIAL_RESULT);

if (partialResultsQuirk.count > 0 && partialResultsQuirk.data.u8[0] == 1) {

mUsePartialResult = true;

}

}

camera_metadata_entry configs =

mDeviceInfo.find(ANDROID_SCALER_AVAILABLE_STREAM_CONFIGURATIONS);

for (uint32_t i = 0; i < configs.count; i += 4) {

if (configs.data.i32[i] == HAL_PIXEL_FORMAT_IMPLEMENTATION_DEFINED &&

configs.data.i32[i + 3] ==

ANDROID_SCALER_AVAILABLE_STREAM_CONFIGURATIONS_INPUT) {

mSupportedOpaqueInputSizes.add(Size(configs.data.i32[i + 1],

configs.data.i32[i + 2]));

}

}

return OK;

}
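The last loop in the listing above reads ANDROID_SCALER_AVAILABLE_STREAM_CONFIGURATIONS as a flat int32 array in which every four consecutive values form one entry (format, width, height, direction), which is why it advances by 4. The following is only a minimal sketch of that stride-4 walk in plain C. The names `FMT_OPAQUE`, `DIR_INPUT`, `size2d_t` and `collect_input_sizes` and the constant values are placeholders invented for the illustration, not the Android definitions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Placeholder constants for this sketch; the real ones come from Android headers. */
enum { FMT_OPAQUE = 34, FMT_OTHER = 35 };
enum { DIR_OUTPUT = 0, DIR_INPUT = 1 };

typedef struct {
    int32_t width;
    int32_t height;
} size2d_t;

/*
 * Walks a flat array of (format, width, height, direction) tuples and copies
 * the sizes of opaque input entries into out. Returns how many were stored.
 */
size_t collect_input_sizes(const int32_t *cfg, size_t count,
                           size2d_t *out, size_t out_cap)
{
    assert(cfg != NULL && out != NULL);
    size_t n = 0;
    /* "i + 3 < count" skips a trailing, incomplete tuple instead of reading past it. */
    for (size_t i = 0; i + 3 < count && n < out_cap; i += 4) {
        if (cfg[i] == FMT_OPAQUE && cfg[i + 3] == DIR_INPUT) {
            out[n].width = cfg[i + 1];
            out[n].height = cfg[i + 2];
            n++;
        }
    }
    return n;
}
```

Unlike the original loop, the sketch also guards against an array whose length is not a multiple of four; whether the framework can ever see such an array is not something the listing tells us.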


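The initialization of Camera3Device has three important steps:

First: module->open() opens the camera module;

Second: device->ops->initialize() initializes the HAL layer;

Third: mRequestThread->run() starts the request queue thread.

At this point the initialization of the framework layer is complete; what follows is the initialization of the HAL layer.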
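The three steps, together with the failure handling visible in the listing (the device is closed again when the request thread cannot be started), amount to a staged initialization with rollback. Below is a minimal sketch of that pattern in plain C. `fake_device_t`, the `step_*` functions and `device_initialize` are hypothetical names made up for the illustration and do not exist in Camera3Device.cpp.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical device record used only for this sketch. */
typedef struct {
    bool opened;
    bool hal_ready;
    bool thread_running;
} fake_device_t;

/* Each step returns 0 on success or a negative error code. */
typedef int (*step_fn)(fake_device_t *dev);

int step_open(fake_device_t *d)         { d->opened = true; return 0; }
int step_hal_init(fake_device_t *d)     { d->hal_ready = true; return 0; }
int step_start_thread(fake_device_t *d) { d->thread_running = true; return 0; }
int step_fail(fake_device_t *d)         { (void)d; return -19; }

/* Undoes everything a successful open may have set up. */
void device_close(fake_device_t *d)
{
    d->opened = false;
    d->hal_ready = false;
    d->thread_running = false;
}

/* Runs open, HAL init and thread start in order; closes the device on a late failure. */
int device_initialize(fake_device_t *dev, step_fn open_fn,
                      step_fn init_fn, step_fn thread_fn)
{
    assert(dev != NULL);
    int res = open_fn(dev);
    if (res != 0) {
        return res;              /* nothing was opened, nothing to undo */
    }
    res = init_fn(dev);
    if (res != 0) {
        device_close(dev);
        return res;
    }
    res = thread_fn(dev);
    if (res != 0) {
        device_close(dev);       /* same idea as device->common.close() above */
        return res;
    }
    return 0;
}
```

Passing `step_fail` as the third step shows the rollback: the call returns the error code and the device record ends up closed again.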

Sequence diagram of the framework-layer initialization:

3. Initialization of the HAL layer

The call to device->ops->initialize in Camera3Device::initialize (Camera3Device.cpp) enters the HAL-layer initialization:

//QCamera3HardwareInterface.cpp

int QCamera3HardwareInterface::initialize(const struct camera3_device *device,

const camera3_callback_ops_t *callback_ops)

{

LOGD("E");

QCamera3HardwareInterface *hw =

reinterpret_cast<QCamera3HardwareInterface *>(device->priv);

if (!hw) {

LOGE("NULL camera device");

return -ENODEV;

}

int rc = hw->initialize(callback_ops);

LOGD("X");

return rc;

}

This static wrapper forwards to the member function QCamera3HardwareInterface::initialize(callback_ops):

//QCamera3HardwareInterface.cpp

int QCamera3HardwareInterface::initialize(

const struct camera3_callback_ops *callback_ops)

{

ATRACE_CALL();

int rc;

LOGI("E :mCameraId = %d mState = %d", mCameraId, mState);

pthread_mutex_lock(&mMutex);

// Validate current state

switch (mState) {

case OPENED:

/* valid state */

break;

default:

LOGE("Invalid state %d", mState);

rc = -ENODEV;

goto err1;

}

rc = initParameters();

if (rc < 0) {

LOGE("initParamters failed %d", rc);

goto err1;

}

mCallbackOps = callback_ops;

mChannelHandle = mCameraHandle->ops->add_channel(

mCameraHandle->camera_handle, NULL, NULL, this);

if (mChannelHandle == 0) {

LOGE("add_channel failed");

rc = -ENOMEM;

pthread_mutex_unlock(&mMutex);

return rc;

}

pthread_mutex_unlock(&mMutex);

mCameraInitialized = true;

mState = INITIALIZED;

LOGI("X");

return 0;

err1:

pthread_mutex_unlock(&mMutex);

return rc;

}

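Two steps in this function matter most:

First: initParameters() initializes the parameters;

Second: the add_channel method of mm_camera_interface.c is called through mCameraHandle->ops.

Start with the first: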

int QCamera3HardwareInterface::initParameters()

{

int rc = 0;

//Allocate Set Param Buffer

mParamHeap = new QCamera3HeapMemory(1);

rc = mParamHeap->allocate(sizeof(metadata_buffer_t));

if(rc != OK) {

rc = NO_MEMORY;

LOGE("Failed to allocate SETPARM Heap memory");

delete mParamHeap;

mParamHeap = NULL;

return rc;

}

//Map memory for parameters buffer

rc = mCameraHandle->ops->map_buf(mCameraHandle->camera_handle,

CAM_MAPPING_BUF_TYPE_PARM_BUF,

mParamHeap->getFd(0),

sizeof(metadata_buffer_t),

(metadata_buffer_t *) DATA_PTR(mParamHeap,0));

if(rc < 0) {

LOGE("failed to map SETPARM buffer");

rc = FAILED_TRANSACTION;

mParamHeap->deallocate();

delete mParamHeap;

mParamHeap = NULL;

return rc;

}

mParameters = (metadata_buffer_t *) DATA_PTR(mParamHeap,0);

mPrevParameters = (metadata_buffer_t *)malloc(sizeof(metadata_buffer_t));

return rc;

}


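mParamHeap is created as a QCamera3HeapMemory. QCamera3HeapMemory is defined in QCamera3Mem.cpp as a subclass of QCamera3Memory. Its constructor argument is the number of frames (buffers) it may hold, so the count can be controlled here; the constructor caps it at MM_CAMERA_MAX_NUM_FRAMES.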

//QCamera3Mem.cpp

QCamera3HeapMemory::QCamera3HeapMemory(uint32_t maxCnt)

: QCamera3Memory()

{

mMaxCnt = MIN(maxCnt, MM_CAMERA_MAX_NUM_FRAMES);

for (uint32_t i = 0; i < mMaxCnt; i ++)

mPtr[i] = NULL;

}
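The constructor above clamps the requested count to a fixed upper bound and then clears only the slots it will actually use. A minimal C sketch of the same idea follows. `MAX_FRAMES`, `heap_mem_t` and `heap_mem_init` are hypothetical names, and the value 8 is an arbitrary stand-in for MM_CAMERA_MAX_NUM_FRAMES, whose real value is not shown in this article.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MAX_FRAMES 8u  /* arbitrary stand-in for MM_CAMERA_MAX_NUM_FRAMES */

typedef struct {
    uint32_t max_cnt;        /* number of usable slots after clamping */
    void *ptr[MAX_FRAMES];   /* one pointer per buffer */
} heap_mem_t;

/* Clamps the requested buffer count and clears the slots that will be used. */
void heap_mem_init(heap_mem_t *m, uint32_t requested)
{
    assert(m != NULL);
    m->max_cnt = requested < MAX_FRAMES ? requested : MAX_FRAMES;
    for (uint32_t i = 0; i < m->max_cnt; i++) {
        m->ptr[i] = NULL;
    }
}
```

With a request of 1, as in initParameters(), exactly one slot is prepared; an oversized request is silently reduced to the cap.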

Next, the parameter buffer is mapped through map_buf:

rc = mCameraHandle->ops->map_buf(mCameraHandle->camera_handle,

CAM_MAPPING_BUF_TYPE_PARM_BUF,

mParamHeap->getFd(0),

sizeof(metadata_buffer_t),

(metadata_buffer_t *) DATA_PTR(mParamHeap,0));

mParameters is then set to point at the start of the mParamHeap buffer, so the parameters can be read and written through mParameters.
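The pattern here is: allocate one buffer, map it so that it can be shared, then treat the start of the buffer as a typed parameter structure, while a second, private copy (mPrevParameters) is kept on the ordinary heap. The sketch below imitates this with an anonymous shared `mmap` region, which is an assumption made so the example runs on its own; the HAL instead passes the heap's file descriptor to map_buf. `params_t`, `param_store_t` and both functions are hypothetical.

```c
#define _DEFAULT_SOURCE   /* exposes MAP_ANONYMOUS on glibc in strict modes */
#include <assert.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>

/* Hypothetical parameter block; metadata_buffer_t in the HAL is far larger. */
typedef struct {
    int exposure;
    int iso;
} params_t;

typedef struct {
    params_t *current;   /* typed view of the mapped buffer */
    params_t *previous;  /* private heap copy of the last applied values */
} param_store_t;

/* Maps the shared buffer and allocates the private copy; returns 0 or -1. */
int param_store_init(param_store_t *s)
{
    assert(s != NULL);
    s->current = NULL;
    s->previous = NULL;
    void *mem = mmap(NULL, sizeof(params_t), PROT_READ | PROT_WRITE,
                     MAP_SHARED | MAP_ANONYMOUS, -1, 0);
    if (mem == MAP_FAILED) {
        return -1;
    }
    params_t *prev = malloc(sizeof(params_t));
    if (prev == NULL) {            /* checked here; the listing above does not check */
        munmap(mem, sizeof(params_t));
        return -1;
    }
    memset(mem, 0, sizeof(params_t));
    memset(prev, 0, sizeof(params_t));
    s->current = (params_t *)mem;  /* pointer to the start of the mapping */
    s->previous = prev;
    return 0;
}

/* Releases both buffers and resets the pointers. */
void param_store_destroy(param_store_t *s)
{
    assert(s != NULL);
    if (s->current != NULL) {
        munmap(s->current, sizeof(params_t));
    }
    free(s->previous);
    s->current = NULL;
    s->previous = NULL;
}
```

Writing `s.current->iso = 400` changes the mapped buffer directly, which is the sense in which parameters are controlled through mParameters.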

Now the second step: calling the add_channel method of mm_camera_interface.c.

In mm_camera_interface.c, find the mm_camera_ops table, look up the function that add_channel is mapped to, mm_camera_intf_add_channel, and step into it:

//mm_camera_interface.c

static uint32_t mm_camera_intf_add_channel(uint32_t camera_handle,

mm_camera_channel_attr_t *attr,

mm_camera_buf_notify_t channel_cb,

void *userdata)

{

uint32_t ch_id = 0;

mm_camera_obj_t * my_obj = NULL;

CDBG("%s :E camera_handler = %d", __func__, camera_handle);

pthread_mutex_lock(&g_intf_lock);

my_obj = mm_camera_util_get_camera_by_handler(camera_handle);

if(my_obj) {

pthread_mutex_lock(&my_obj->cam_lock);

pthread_mutex_unlock(&g_intf_lock);

ch_id = mm_camera_add_channel(my_obj, attr, channel_cb, userdata);

} else {

pthread_mutex_unlock(&g_intf_lock);

}

CDBG("%s :X ch_id = %d", __func__, ch_id);

return ch_id;

}

mm_camera_intf_add_channel in turn calls mm_camera_add_channel in mm_camera.c:

//mm_camera.c

uint32_t mm_camera_add_channel(mm_camera_obj_t *my_obj,

mm_camera_channel_attr_t *attr,

mm_camera_buf_notify_t channel_cb,

void *userdata)

{

mm_channel_t *ch_obj = NULL;

uint8_t ch_idx = 0;

uint32_t ch_hdl = 0;

for(ch_idx = 0; ch_idx < MM_CAMERA_CHANNEL_MAX; ch_idx++) {

if (MM_CHANNEL_STATE_NOTUSED == my_obj->ch[ch_idx].state) {

ch_obj = &my_obj->ch[ch_idx];

break;

}

}

if (NULL != ch_obj) {

/* initialize channel obj */

memset(ch_obj, 0, sizeof(mm_channel_t));

ch_hdl = mm_camera_util_generate_handler(ch_idx);

ch_obj->my_hdl = ch_hdl;

ch_obj->state = MM_CHANNEL_STATE_STOPPED;

ch_obj->cam_obj = my_obj;

pthread_mutex_init(&ch_obj->ch_lock, NULL);

mm_channel_init(ch_obj, attr, channel_cb, userdata);

}

pthread_mutex_unlock(&my_obj->cam_lock);

return ch_hdl;

}
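mm_camera_add_channel scans a fixed array for an unused slot, resets it, derives a handle from the slot index and returns 0 when no slot is free, which is exactly the value QCamera3HardwareInterface::initialize tests for. A minimal sketch of that slot-plus-handle scheme follows. The handle layout (a serial number in the upper bits, the slot index in the low byte) is an assumption made for the illustration; the body of mm_camera_util_generate_handler is not shown in this article. All names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define CHANNEL_MAX 4   /* hypothetical; stands in for MM_CAMERA_CHANNEL_MAX */

typedef enum { CH_NOTUSED = 0, CH_STOPPED } ch_state_t;

typedef struct {
    uint32_t handle;
    ch_state_t state;
} channel_t;

typedef struct {
    channel_t ch[CHANNEL_MAX];
    uint32_t next_serial;   /* incremented on every allocation */
} camera_t;

/* Assumed layout: serial in the upper bits, slot index in the low byte.
 * The serial starts at 1, so a valid handle is never 0 (wrap-around is ignored here). */
static uint32_t make_handle(camera_t *cam, uint8_t idx)
{
    return (++cam->next_serial << 8) | idx;
}

/* Claims the first unused slot and returns its handle, or 0 if none is free. */
uint32_t add_channel(camera_t *cam)
{
    assert(cam != NULL);
    for (uint8_t idx = 0; idx < CHANNEL_MAX; idx++) {
        if (cam->ch[idx].state == CH_NOTUSED) {
            channel_t *c = &cam->ch[idx];
            memset(c, 0, sizeof(*c));
            c->handle = make_handle(cam, idx);
            c->state = CH_STOPPED;
            return c->handle;
        }
    }
    return 0;
}

/* Recovers the slot index from a handle. */
uint8_t handle_to_index(uint32_t handle)
{
    return (uint8_t)(handle & 0xffu);
}
```

Keeping the index inside the handle lets later calls find the slot without searching, while the serial part makes a stale handle from a reused slot distinguishable from the current one.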

mm_camera_add_channel in turn calls mm_channel_init in mm_camera_channel.c:

//mm_camera_channel.c

int32_t mm_channel_init(mm_channel_t *my_obj,

mm_camera_channel_attr_t *attr,

mm_camera_buf_notify_t channel_cb,

void *userdata)

{

int32_t rc = 0;

my_obj->bundle.super_buf_notify_cb = channel_cb;

my_obj->bundle.user_data = userdata;

if (NULL != attr) {

my_obj->bundle.superbuf_queue.attr = *attr;

}

CDBG("%s : Launch data poll thread in channel open", __func__);

snprintf(my_obj->threadName, THREAD_NAME_SIZE, "DataPoll");

mm_camera_poll_thread_launch(&my_obj->poll_thread[0],

MM_CAMERA_POLL_TYPE_DATA);

/* change state to stopped state */

my_obj->state = MM_CHANNEL_STATE_STOPPED;

return rc;

}

mm_channel_init then calls mm_camera_poll_thread_launch() in mm_camera_thread.c:

//mm_camera_thread.c

int32_t mm_camera_poll_thread_launch(mm_camera_poll_thread_t * poll_cb,

mm_camera_poll_thread_type_t poll_type)

{

int32_t rc = 0;

size_t i = 0, cnt = 0;

poll_cb->poll_type = poll_type;

//Initialize poll_fds

cnt = sizeof(poll_cb->poll_fds) / sizeof(poll_cb->poll_fds[0]);

for (i = 0; i < cnt; i++) {

poll_cb->poll_fds[i].fd = -1;

}

//Initialize poll_entries

cnt = sizeof(poll_cb->poll_entries) / sizeof(poll_cb->poll_entries[0]);

for (i = 0; i < cnt; i++) {

poll_cb->poll_entries[i].fd = -1;

}

//Initialize pipe fds

poll_cb->pfds[0] = -1;

poll_cb->pfds[1] = -1;

rc = pipe(poll_cb->pfds);

if(rc < 0) {

CDBG_ERROR("%s: pipe open rc=%d\n", __func__, rc);

return -1;

}

poll_cb->timeoutms = -1; /* Infinite seconds */

CDBG("%s: poll_type = %d, read fd = %d, write fd = %d timeout = %d",

__func__, poll_cb->poll_type,

poll_cb->pfds[0], poll_cb->pfds[1],poll_cb->timeoutms);

pthread_mutex_init(&poll_cb->mutex, NULL);

pthread_cond_init(&poll_cb->cond_v, NULL);

/* launch the thread */

pthread_mutex_lock(&poll_cb->mutex);

poll_cb->status = 0;

pthread_create(&poll_cb->pid, NULL, mm_camera_poll_thread, (void *)poll_cb);

if(!poll_cb->status) {

pthread_cond_wait(&poll_cb->cond_v, &poll_cb->mutex);

}

if (!poll_cb->threadName) {

pthread_setname_np(poll_cb->pid, "CAM_poll");

} else {

pthread_setname_np(poll_cb->pid, poll_cb->threadName);

}

pthread_mutex_unlock(&poll_cb->mutex);

CDBG("%s: End",__func__);

return rc;

}


This function initializes poll_fds, poll_entries and the pipe fds, and then calls pthread_create(.., mm_camera_poll_thread, ..) to start the thread.

//mm_camera_thread.c

static void *mm_camera_poll_thread(void *data)

{

prctl(PR_SET_NAME, (unsigned long)"mm_cam_poll_th", 0, 0, 0);

mm_camera_poll_thread_t *poll_cb = (mm_camera_poll_thread_t *)data;

/* add pipe read fd into poll first */

poll_cb->poll_fds[poll_cb->num_fds++].fd = poll_cb->pfds[0];

mm_camera_poll_sig_done(poll_cb);

mm_camera_poll_set_state(poll_cb, MM_CAMERA_POLL_TASK_STATE_POLL);

return mm_camera_poll_fn(poll_cb);

}
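mm_camera_poll_thread_launch holds poll_cb->mutex, creates the thread and waits on cond_v until status is set; the new thread calls mm_camera_poll_sig_done, which presumably sets status and signals the condition (its body is not shown in this article). This launch handshake can be sketched as follows. `worker_t` and the three functions are hypothetical. The sketch differs from the listing in two ways: it waits in a `while` loop, the usual way to tolerate spurious wake-ups, and it checks the result of `pthread_create`.

```c
#include <assert.h>
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

typedef struct {
    pthread_t tid;
    pthread_mutex_t mutex;
    pthread_cond_t cond;
    bool ready;       /* set by the worker once its setup is finished */
    int setup_value;  /* state the launcher wants to rely on afterwards */
} worker_t;

/* Thread entry: do the setup, then tell the launcher it may continue. */
static void *worker_main(void *arg)
{
    worker_t *w = (worker_t *)arg;
    pthread_mutex_lock(&w->mutex);
    w->setup_value = 42;
    w->ready = true;
    pthread_cond_signal(&w->cond);
    pthread_mutex_unlock(&w->mutex);
    return NULL;
}

/* Starts the worker and returns only after it has signalled readiness. */
int worker_launch(worker_t *w)
{
    assert(w != NULL);
    pthread_mutex_init(&w->mutex, NULL);
    pthread_cond_init(&w->cond, NULL);
    w->ready = false;
    w->setup_value = 0;

    pthread_mutex_lock(&w->mutex);
    if (pthread_create(&w->tid, NULL, worker_main, w) != 0) {
        pthread_mutex_unlock(&w->mutex);
        return -1;
    }
    while (!w->ready) {                       /* loop guards against spurious wake-ups */
        pthread_cond_wait(&w->cond, &w->mutex);
    }
    pthread_mutex_unlock(&w->mutex);
    return 0;
}

/* Waits for the worker to end and releases the synchronization objects. */
void worker_join(worker_t *w)
{
    assert(w != NULL);
    pthread_join(w->tid, NULL);
    pthread_mutex_destroy(&w->mutex);
    pthread_cond_destroy(&w->cond);
}
```

Because the launcher takes the mutex before creating the thread, the worker cannot signal before the launcher is either waiting or about to test `ready`, so the wake-up cannot be lost.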

mm_camera_poll_thread finally returns mm_camera_poll_fn(poll_cb), which runs the poll loop:

//mm_camera_thread.c

static void *mm_camera_poll_fn(mm_camera_poll_thread_t *poll_cb)

{

int rc = 0, i;

if (NULL == poll_cb) {

CDBG_ERROR("%s: poll_cb is NULL!\n", __func__);

return NULL;

}

CDBG("%s: poll type = %d, num_fd = %d poll_cb = %p\n",

__func__, poll_cb->poll_type, poll_cb->num_fds,poll_cb);

do {

for(i = 0; i < poll_cb->num_fds; i++) {

poll_cb->poll_fds[i].events = POLLIN|POLLRDNORM|POLLPRI;

}

rc = poll(poll_cb->poll_fds, poll_cb->num_fds, poll_cb->timeoutms);

if(rc > 0) {

if ((poll_cb->poll_fds[0].revents & POLLIN) &&

(poll_cb->poll_fds[0].revents & POLLRDNORM)) {

/* if we have data on pipe, we only process pipe in this iteration */

CDBG("%s: cmd received on pipe\n", __func__);

mm_camera_poll_proc_pipe(poll_cb);

} else {

for(i=1; i<poll_cb->num_fds; i++) {

/* Checking for ctrl events */

if ((poll_cb->poll_type == MM_CAMERA_POLL_TYPE_EVT) &&

(poll_cb->poll_fds[i].revents & POLLPRI)) {

CDBG("%s: mm_camera_evt_notify\n", __func__);

if (NULL != poll_cb->poll_entries[i-1].notify_cb) {

poll_cb->poll_entries[i-1].notify_cb(poll_cb->poll_entries[i-1].user_data);

}

}

if ((MM_CAMERA_POLL_TYPE_DATA == poll_cb->poll_type) &&

(poll_cb->poll_fds[i].revents & POLLIN) &&

(poll_cb->poll_fds[i].revents & POLLRDNORM)) {

CDBG("%s: mm_stream_data_notify\n", __func__);

if (NULL != poll_cb->poll_entries[i-1].notify_cb) {

poll_cb->poll_entries[i-1].notify_cb(poll_cb->poll_entries[i-1].user_data);

}

}

}

}

} else {

/* in error case sleep 10 us and then continue. hard coded here */

usleep(10);

continue;

}

} while ((poll_cb != NULL) && (poll_cb->state == MM_CAMERA_POLL_TASK_STATE_POLL));

return NULL;

}
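The loop above polls a control pipe in slot 0 together with the data fds and, when the pipe is readable, handles only the pipe in that iteration. The following self-contained sketch shows the same structure with one control pipe and one data pipe. Everything in it is hypothetical: the `poller_t` type, the one-byte `CMD_EXIT` command, and the extra acknowledgement pipe, which exists only so that a caller can wait until a data byte has been handled. It also retries immediately after a failed `poll` instead of sleeping for 10 µs.

```c
#include <assert.h>
#include <poll.h>
#include <pthread.h>
#include <stddef.h>
#include <unistd.h>

#define CMD_EXIT 'x'   /* hypothetical one-byte command */

typedef struct {
    int cmd_pipe[2];   /* control pipe: [0] is polled, [1] is written by callers */
    int data_pipe[2];  /* stands in for a stream fd that delivers data */
    int ack_pipe[2];   /* lets a caller wait until a data byte was handled */
    int data_events;   /* notifications handled; read only after poller_stop */
    pthread_t tid;
} poller_t;

static void *poll_loop(void *arg)
{
    poller_t *p = (poller_t *)arg;
    struct pollfd fds[2];
    fds[0].fd = p->cmd_pipe[0];
    fds[1].fd = p->data_pipe[0];
    for (;;) {
        fds[0].events = POLLIN;
        fds[1].events = POLLIN;
        fds[0].revents = 0;
        fds[1].revents = 0;
        if (poll(fds, 2, -1) <= 0) {
            continue;                        /* interrupted: poll again */
        }
        if (fds[0].revents & POLLIN) {       /* control pipe takes priority */
            char cmd = 0;
            if (read(fds[0].fd, &cmd, 1) == 1 && cmd == CMD_EXIT) {
                break;
            }
            continue;
        }
        if (fds[1].revents & POLLIN) {       /* the "data notify" path */
            char byte = 0;
            if (read(fds[1].fd, &byte, 1) == 1) {
                p->data_events++;
                if (write(p->ack_pipe[1], &byte, 1) != 1) {
                    break;
                }
            }
        }
    }
    return NULL;
}

/* Creates the pipes and the thread. A fuller version would close fds on failure. */
int poller_start(poller_t *p)
{
    assert(p != NULL);
    p->data_events = 0;
    if (pipe(p->cmd_pipe) < 0 || pipe(p->data_pipe) < 0 || pipe(p->ack_pipe) < 0) {
        return -1;
    }
    return pthread_create(&p->tid, NULL, poll_loop, p) == 0 ? 0 : -1;
}

/* Sends one data byte and blocks until the poll thread has echoed it back. */
int poller_send_data(poller_t *p, char byte)
{
    char echoed = 0;
    if (write(p->data_pipe[1], &byte, 1) != 1) return -1;
    if (read(p->ack_pipe[0], &echoed, 1) != 1) return -1;
    return echoed;
}

/* Wakes the thread through the control pipe, joins it and closes all fds. */
int poller_stop(poller_t *p)
{
    char cmd = CMD_EXIT;
    if (write(p->cmd_pipe[1], &cmd, 1) != 1) return -1;
    pthread_join(p->tid, NULL);
    for (int i = 0; i < 2; i++) {
        close(p->cmd_pipe[i]);
        close(p->data_pipe[i]);
        close(p->ack_pipe[i]);
    }
    return p->data_events;
}
```

The control pipe is what makes an infinite poll timeout workable: another thread can always interrupt the wait by writing a byte, whether to stop the loop as here or, in the HAL, to have the set of polled fds updated.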

At this point the initialization of the HAL layer is complete.

Sequence diagram of the HAL-layer initialization:

————————————————

Copyright notice: this is an original article by CSDN blogger "hutongling", published under the CC 4.0 BY-SA license. When reposting, please include a link to the original source and this notice.

Original link: https://blog.csdn.net/hutongling/article/details/77053920
