[root@dba_bigdata_node2 192.168.10.12 ~]# sed -i 's/#ClientAliveInterval 0/ClientAliveInterval 60/g' /etc/ssh/sshd_config

[root@dba_bigdata_node2 192.168.10.12 ~]# sed -i 's/#ClientAliveCountMax 3/ClientAliveCountMax 60/g' /etc/ssh/sshd_config

[root@dba_bigdata_node2 192.168.10.12 ~]# systemctl daemon-reload && systemctl restart sshd && systemctl status sshd

● sshd.service - OpenSSH server daemon

Loaded: loaded (/usr/lib/systemd/system/sshd.service; enabled; vendor preset: enabled)

Active: active (running) since Fri 2022-09-09 14:07:43 CST; 5ms ago

Docs: man:sshd(8)

man:sshd_config(5)

Main PID: 4449 (sshd)

CGroup: /system.slice/sshd.service

└─4449 /usr/sbin/sshd -D

Sep 09 14:07:43 dba_bigdata_node2 systemd[1]: Starting OpenSSH server daemon...

Sep 09 14:07:43 dba_bigdata_node2 sshd[4449]: Server listening on 0.0.0.0 port 22.

Sep 09 14:07:43 dba_bigdata_node2 sshd[4449]: Server listening on :: port 22.

Sep 09 14:07:43 dba_bigdata_node2 systemd[1]: Started OpenSSH server daemon.
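With both values set to 60, sshd sends a keepalive probe after 60 seconds without data from the client and closes the session after 60 unanswered probes, i.e. after roughly 60 × 60 = 3600 seconds (one hour) of unresponsiveness. The sketch below computes that figure from a config file. The function name `sshd_alive_timeout` is hypothetical; it assumes plain integer values and does not interpret `Match` blocks.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original steps).
# Prints ClientAliveInterval * ClientAliveCountMax in seconds for a config file.
# Like sshd, it keeps the FIRST value of each keyword; commented lines are skipped.
# Unset keywords fall back to the OpenSSH defaults (interval 0, count 3).
sshd_alive_timeout() {
  local file=${1:-/etc/ssh/sshd_config}
  awk '
    BEGIN { interval = 0; count = 3 }
    tolower($1) == "clientaliveinterval" && !seen_i { interval = $2; seen_i = 1 }
    tolower($1) == "clientalivecountmax" && !seen_c { count = $2; seen_c = 1 }
    END { print interval * count }
  ' "$file"
}

# Usage: after the two sed edits above this should print 3600.
# sshd_alive_timeout /etc/ssh/sshd_config
```

An interval of 0 (the default) disables the probes entirely, which is why the function prints 0 for an unmodified file.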

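2.1.6 Change the Yum repositories

Point yum at the Alibaba Cloud or USTC mirror:

Alibaba Cloud: baseurl=http://mirrors.cloud.aliyuncs.com/alinux

USTC: baseurl=https://mirrors.ustc.edu.cn/centos

The following command switches CentOS-Base.repo to the USTC mirror and keeps a .bak copy: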

sed -e 's|^mirrorlist=|#mirrorlist=|g' -e 's|^#baseurl=http://mirror.centos.org/centos|baseurl=https://mirrors.ustc.edu.cn/centos|g' -i.bak /etc/yum.repos.d/CentOS-Base.repo
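To confirm the rewrite took effect, a small sketch can list the active (uncommented) baseurl of each repository section. The helper `repo_baseurls` is hypothetical; a section that prints nothing has neither an active mirrorlist nor an active baseurl, and a URL still pointing at mirror.centos.org means the substitution did not match.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<section> <baseurl>" for every uncommented
# baseurl= line in a .repo file.
repo_baseurls() {
  awk '
    /^\[/      { section = substr($0, 2, index($0, "]") - 2); next }
    /^baseurl=/ { print section, substr($0, 9) }   # 9 = first char after "baseurl="
  ' "${1:-/etc/yum.repos.d/CentOS-Base.repo}"
}

# Usage: every line should now show mirrors.ustc.edu.cn.
# repo_baseurls /etc/yum.repos.d/CentOS-Base.repo
```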

Rebuild the cache:

yum clean all && yum makecache

Install the EPEL repository:

yum install -y epel-release

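Upgrade the system packages (for example with yum -y update).

2.1.7 Install the Python environment

yum install python2-devel libffi-devel openssl-devel libselinux-python -y

yum install python-pip -y

Configure the Tsinghua pip mirror to speed up downloads: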

mkdir ~/.pip

cat > ~/.pip/pip.conf << EOF

[global]

index-url = https://pypi.tuna.tsinghua.edu.cn/simple

[install]

trusted-host=pypi.tuna.tsinghua.edu.cn

EOF

Upgrade pip, staying below 21.0 (pip 21.0 dropped Python 2 support), then install pbr:

pip install --upgrade "pip < 21.0"

pip install pbr
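The `< 21.0` pin matters because CentOS 7 runs these tools on Python 2.7, which pip 21.0 and later no longer support. A sketch of a version comparison for checking the installed pip; `version_lt` is a hypothetical helper that relies on GNU `sort -V`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) if version $1 sorts strictly before
# version $2. Relies on GNU coreutils "sort -V" (present on CentOS 7).
version_lt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Usage sketch: warn if the installed pip is not below 21.0.
# pip_version=$(pip --version | awk '{print $2}')
# version_lt "$pip_version" 21.0 || echo "pip $pip_version is too new for Python 2"
```

`sort -V` compares numeric components individually, so 9.0.3 correctly sorts before 21.0, which a plain string comparison would get wrong.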

2.2 Time synchronization

2.2.1 Install the chrony service

[root@dba_bigdata_node1 192.168.10.11 ~]# yum -y install chrony

2.2.2 Point chrony at the Alibaba Cloud NTP servers

[root@dba_bigdata_node1 192.168.10.11 ~]# cp /etc/chrony.conf{,.bak}

[root@dba_bigdata_node1 192.168.10.11 ~]# echo "

server ntp1.aliyun.com iburst

server ntp2.aliyun.com iburst

server ntp6.aliyun.com iburst

stratumweight 0

driftfile /var/lib/chrony/drift

rtcsync

makestep 10 3

bindcmdaddress 127.0.0.1

bindcmdaddress ::1

keyfile /etc/chrony.keys

commandkey 1

generatecommandkey

noclientlog

logchange 0.5

logdir /var/log/chrony

">/etc/chrony.conf

2.2.3 Start the service

systemctl enable chronyd && systemctl restart chronyd && systemctl status chronyd
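A quick check that the chrony.conf written above lists the intended servers. `chrony_servers` is a hypothetical helper that prints the host of every uncommented `server` or `pool` line; for the file above it should print ntp1, ntp2 and ntp6.aliyun.com.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list NTP sources configured in a chrony.conf.
# Commented lines start with "#", so their first field never equals
# "server" or "pool" and they are skipped automatically.
chrony_servers() {
  awk '$1 == "server" || $1 == "pool" { print $2 }' "${1:-/etc/chrony.conf}"
}

# Usage: chrony_servers /etc/chrony.conf
```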

2.2.4 Check the chrony sources and sync the clock once with ntpdate

[root@dba_bigdata_node1 192.168.10.11 ~]# chronyc sources -v

[root@dba_bigdata_node1 192.168.10.11 ~]# yum install ntpdate

[root@dba_bigdata_node1 192.168.10.11 ~]# ntpdate ntp1.aliyun.com

# Write the system time to the hardware clock

[root@dba_bigdata_node1 192.168.10.11 ~]# hwclock -w
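In the `chronyc sources` output, the line starting with `^*` is the source chronyd is currently synchronised to (`^` = server, `*` = current best). A sketch that extracts it, for example for a simple health check; `chrony_selected_source` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "chronyc sources" output on stdin and print the
# source marked "^*". Exits non-zero when no source has been selected yet.
chrony_selected_source() {
  awk '$1 == "^*" { print $2; found = 1 } END { exit !found }'
}

# Usage: chronyc sources | chrony_selected_source || echo "chrony not synchronised yet"
```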

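2.2.5 Configure a cron job

Run crontab -e and add the following entries (the default PATH for user crontabs usually does not include /usr/sbin, so ntpdate is called by its full path):

0 */1 * * * /usr/sbin/ntpdate ntp1.aliyun.com > /dev/null 2>&1; /sbin/hwclock -w
0 */1 * * * /usr/sbin/ntpdate ntp2.aliyun.com > /dev/null 2>&1; /sbin/hwclock -w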
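To add such entries without the interactive editor (for example on all three nodes), one option is to pipe `crontab -l` through a filter that appends the line exactly once and feed the result back to `crontab -`. A sketch under that assumption; `merge_cron_line` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read an existing crontab on stdin, drop any identical
# copy of LINE, and print the result with LINE appended exactly once.
merge_cron_line() {
  local line=$1
  grep -vxF -- "$line" || true   # grep exits 1 when nothing is left to print
  printf '%s\n' "$line"
}

# Usage sketch (idempotent: running it twice still leaves one copy):
# entry='0 */1 * * * /usr/sbin/ntpdate ntp1.aliyun.com > /dev/null 2>&1; /sbin/hwclock -w'
# crontab -l 2>/dev/null | merge_cron_line "$entry" | crontab -
```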

2.2 Docker-ce

2.2.1 Configure the yum repository

Add the docker-ce repository and point it at a mirror in China (Tsinghua):

wget -O /etc/yum.repos.d/docker-ce.repo https://download.docker.com/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo

2.2.2 Remove any existing Docker packages

[root@dba_bigdata_node1 192.168.10.11 ~]# yum remove docker docker-common docker-selinux docker-engine -y

Loaded plugins: fastestmirror

No Match for argument: docker

No Match for argument: docker-common

No Match for argument: docker-selinux

No Match for argument: docker-engine

No Packages marked for removal

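2.2.3 Install Docker

Install Docker CE from the repository configured above, for example:

yum install -y docker-ce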

2.2.4 Configure Docker registry mirrors in China

mkdir -p /etc/docker/

cat > /etc/docker/daemon.json << EOF

{

"registry-mirrors": [

"https://registry.docker-cn.com",

"http://hub-mirror.c.163.com",

"https://docker.mirrors.ustc.edu.cn"

],

"insecure-registries": ["172.16.0.200:5000","192.168.10.11:4000"]

}

EOF

Here 172.16.0.200:5000 is another private Docker registry; several private registries can be listed at once.

2.2.5 Enable shared mount propagation for Docker

mkdir -p /etc/systemd/system/docker.service.d
cat >> /etc/systemd/system/docker.service.d/kolla.conf << EOF
[Service]
MountFlags=shared
EOF

2.2.6 Start the Docker service

systemctl daemon-reload && systemctl enable docker && systemctl restart docker && systemctl status docker

Check the Docker information:

[root@dba_bigdata_node1 192.168.10.11 ~]# docker info

Client:

Context: default

Debug Mode: false

Plugins:

app: Docker App (Docker Inc., v0.9.1-beta3)

buildx: Docker Buildx (Docker Inc., v0.8.2-docker)

scan: Docker Scan (Docker Inc., v0.17.0)

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 20.10.17

Storage Driver: overlay2

2.2.7 Docker registry

# Pull the registry image

docker pull registry:latest

Create the configuration directory and file:

mkdir -p /etc/docker/registry

[root@dba_bigdata_node1 192.168.10.11 kolla]# vim /etc/docker/registry/config.yml

version: 0.1
log:
  fields:
    service: registry
storage:
  cache:
    blobdescriptor: inmemory
  filesystem:
    rootdirectory: /var/lib/registry
http:
  addr: :5000
  headers:
    X-Content-Type-Options: [nosniff]
    Access-Control-Allow-Origin: ['*']
    Access-Control-Allow-Methods: ['*']
    Access-Control-Max-Age: [1728000]
health:
  storagedriver:
    enabled: true
    interval: 10s
    threshold: 3

Start the internal registry:

docker run -d --name registry -p 4000:5000 -v /etc/docker/registry/config.yml:/etc/docker/registry/config.yml registry:latest
[root@dba_bigdata_node1 192.168.10.11 kolla]# docker ps
CONTAINER ID   IMAGE             COMMAND                  CREATED         STATUS        PORTS   NAMES
7db4f7e1abbf   registry:latest   "/entrypoint.sh /etc…"   2 seconds ago   Up 1 second           registry

Update the internal registry address in the insecure-registries entry:

[root@dba_bigdata_node1 192.168.10.11 kolla]# vim /etc/docker/daemon.json
"insecure-registries": ["172.16.212.241:5000","192.168.10.11:4000"]

Restart Docker, then start the registry container again:

[root@dba_bigdata_node1 192.168.10.11 kolla]# systemctl restart docker
[root@dba_bigdata_node1 192.168.10.11 kolla]# docker start registry

Check the Docker information (Insecure Registries section):

[root@dba_bigdata_node1 192.168.10.11 kolla]# docker info
Insecure Registries:
 172.16.212.241:5000
 192.168.10.11:5000
 127.0.0.0/8

Test whether the registry works:

# List the local Docker images
[root@dba_bigdata_node1 192.168.10.11 kolla]# docker images
REPOSITORY   TAG      IMAGE ID       CREATED       SIZE
registry     latest   3a0f7b0a13ef   4 weeks ago   24.1MB
# Retag the image for the internal registry
[root@dba_bigdata_node1 192.168.10.11 kolla]# docker tag registry 192.168.10.11:4000/registry
# Push the local image to the internal registry
[root@dba_bigdata_node1 192.168.10.11 kolla]# docker push 192.168.10.11:4000/registry
Using default tag: latest
The push refers to repository [192.168.10.11:4000/registry]
73130e341eaf: Pushed
692a418a42be: Pushed
d3db20e71506: Pushed
145b66c455f7: Pushed
994393dc58e7: Pushed
latest: digest: sha256:29f25d3b41a11500cc8fc4e19206d483833a68543c30aefc8c145c8b1f0b1450 size: 1363

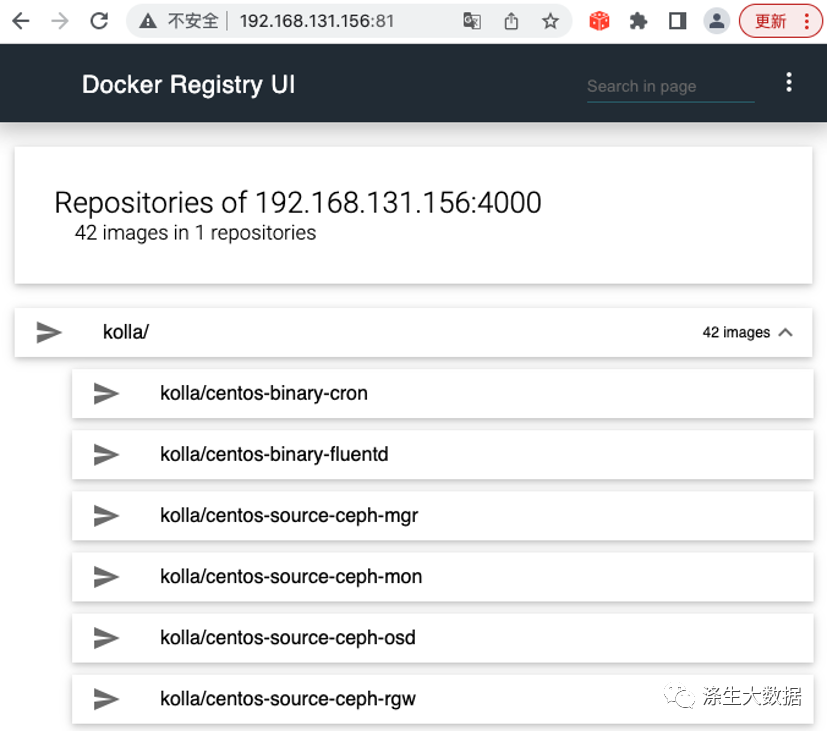

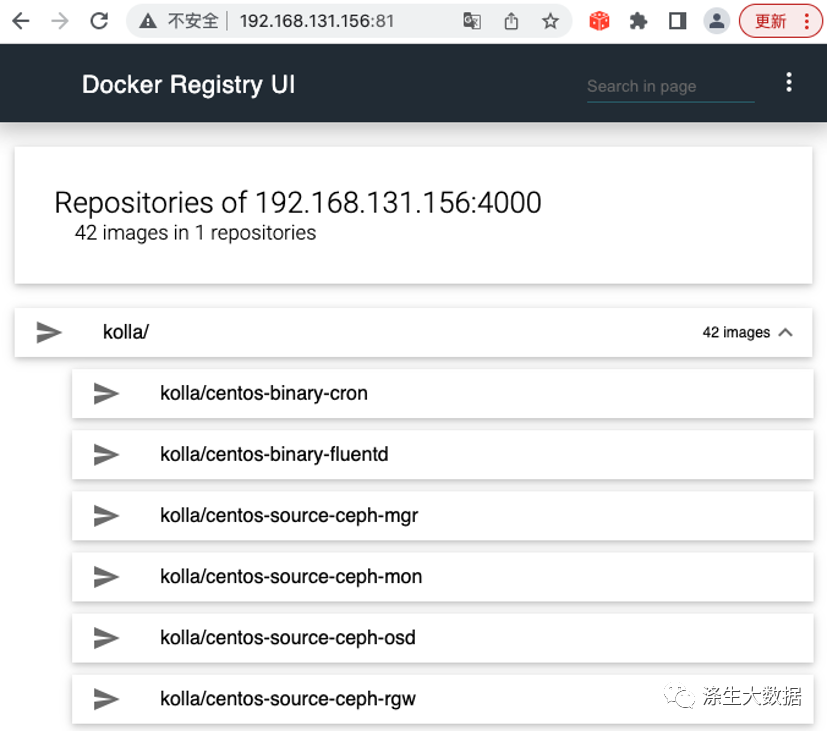

2.2.8 Docker registry UI

Set up a Docker registry UI (behind an nginx proxy) to browse the Docker images in a web page.

# Create the docker-registry-ui container

docker run -p 81:80 --name registry-ui -e REGISTRY_URL="http://192.168.10.11:5000" -d 172.16.212.241:5000/docker-registry-ui:latest

Access URL: http://192.168.10.11:81/, where the details of each Docker image can be browsed.

2.3 Ansible

2.3.1 Install Ansible

Ansible only needs to be installed and configured on node1.

[root@dba_bigdata_node1 192.168.10.11 ~]# yum install -y ansible

2.3.2 Configure the Ansible defaults

Disable host key checking, enable pipelining and raise forks to 100:

sed -i 's/#host_key_checking = False/host_key_checking = False/g' /etc/ansible/ansible.cfg
sed -i 's/#pipelining = False/pipelining = True/g' /etc/ansible/ansible.cfg
sed -i 's/#forks = 5/forks = 100/g' /etc/ansible/ansible.cfg
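The fixed sed patterns above only match if the commented default is spelled exactly that way; the stock ansible.cfg may align values with extra spaces (e.g. `#forks          = 5`), in which case the substitution silently does nothing. A more tolerant sketch, using a hypothetical helper `set_cfg_option`, that sets a key whether or not it is commented out and regardless of spacing. It only edits existing lines and never appends a missing key.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "key = value" in an INI-style file such as
# /etc/ansible/ansible.cfg. Matches "key=...", "#key = ..." or ";key = ...".
set_cfg_option() {
  local file=$1 key=$2 value=$3 tmp
  tmp=$(mktemp) || return 1
  sed -E "s|^[#;]?[[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file" > "$tmp" &&
    cat "$tmp" > "$file"   # write back in place, keeping the file's owner and mode
  rm -f "$tmp"
}

# Usage:
# set_cfg_option /etc/ansible/ansible.cfg host_key_checking False
# set_cfg_option /etc/ansible/ansible.cfg pipelining True
# set_cfg_option /etc/ansible/ansible.cfg forks 100
```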

2.3.3 Configure the Ansible hosts

[root@dba_bigdata_node1 192.168.10.11 ~]# echo "dba_bigdata_node[1:3]" >>/etc/ansible/hosts
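Ansible expands the numeric range in `dba_bigdata_node[1:3]` into three host names, each of which must resolve from node1 (for example via /etc/hosts). The sketch below mimics that expansion for simple integer ranges only; `expand_host_range` is hypothetical and does not handle zero-padded or alphabetic ranges. `ansible all --list-hosts` shows Ansible's own expansion.

```shell
#!/usr/bin/env bash
# Hypothetical sketch: expand "prefix[start:end]suffix" into one host per line.
# Plain integers only; a pattern without a range is printed unchanged.
expand_host_range() {
  local pattern=$1 re='^(.*)\[([0-9]+):([0-9]+)](.*)$' i
  if [[ $pattern =~ $re ]]; then
    local prefix=${BASH_REMATCH[1]} start=${BASH_REMATCH[2]}
    local end=${BASH_REMATCH[3]} suffix=${BASH_REMATCH[4]}
    for (( i = 10#$start; i <= 10#$end; i++ )); do
      printf '%s%d%s\n' "$prefix" "$i" "$suffix"
    done
  else
    printf '%s\n' "$pattern"
  fi
}

# Usage: expand_host_range 'dba_bigdata_node[1:3]'
```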

2.3.4 Verify the installation

[root@dba_bigdata_node1 192.168.10.11 ~]# ansible all -m ping
dba_bigdata_node1 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
dba_bigdata_node3 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
dba_bigdata_node2 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}

2.4 Ansible Kolla

The following installation steps only need to be run on node1.

2.4.1 Install kolla-ansible

[root@dba_bigdata_node1 192.168.10.11 ~]# pip install kolla-ansible==9.4.0

If the installation fails, upgrade setuptools and pip, pin pbr, and try again:

pip install --upgrade setuptools
pip install --upgrade pip
pip install pbr==5.6.0

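2.4.2 Copy the default configuration

Copy the example configuration to /etc/kolla and the inventory files to the current directory:

mkdir -p /etc/kolla
chown $USER:$USER /etc/kolla
cp -r /usr/share/kolla-ansible/etc_examples/kolla/* /etc/kolla
cp /usr/share/kolla-ansible/ansible/inventory/* .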

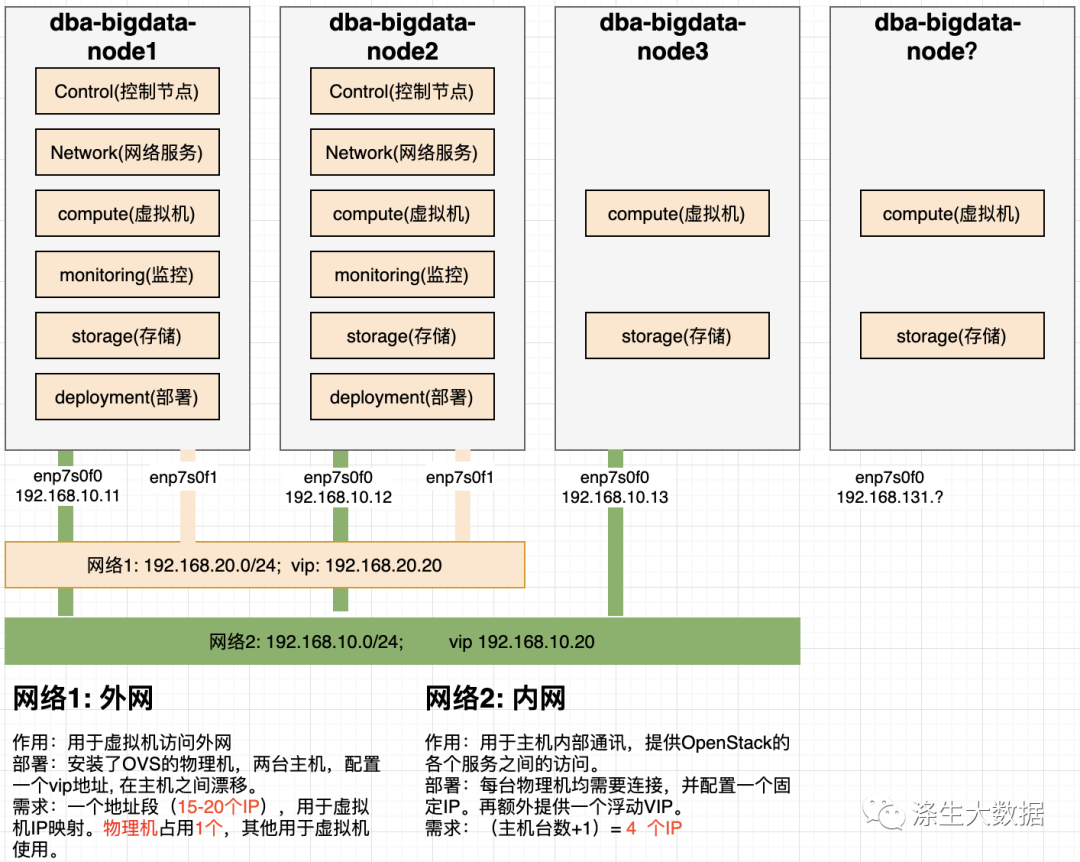

2.4.3 Configure the inventory

Edit /root/multinode:

# These initial groups are the only groups required to be modified. The
# additional groups are for more control of the environment.
[control]
# These hostname must be resolvable from your deployment host
dba_bigdata_node1
dba_bigdata_node2
dba_bigdata_node3

# The above can also be specified as follows:
#control[01:03]     ansible_user=kolla

# The network nodes are where your l3-agent and loadbalancers will run
# This can be the same as a host in the control group
[network]
dba_bigdata_node1
dba_bigdata_node2
dba_bigdata_node3

[compute]
dba_bigdata_node1
dba_bigdata_node2
dba_bigdata_node3

[monitoring]
dba_bigdata_node1
dba_bigdata_node2
dba_bigdata_node3

# When compute nodes and control nodes use different interfaces,
# you need to comment out "api_interface" and other interfaces from the globals.yml
# and specify like below:
#compute01 neutron_external_interface=eth0 api_interface=em1 storage_interface=em1 tunnel_interface=em1

[storage]
dba_bigdata_node1
dba_bigdata_node2
dba_bigdata_node3

[deployment]
dba_bigdata_node1
dba_bigdata_node2
dba_bigdata_node3
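To double-check which hosts end up in each group before running kolla-ansible, a small awk sketch can read the INI inventory. `inventory_group_hosts` is a hypothetical helper and not Ansible's parser: `:children`/`:vars` sections and host ranges are not expanded. For the authoritative answer, `ansible -i /root/multinode control --list-hosts` asks Ansible itself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the hosts listed directly under [GROUP] in an
# INI-style inventory. Comments and blank lines are skipped; host variables
# after the name (e.g. "ansible_user=kolla") are dropped.
inventory_group_hosts() {
  local file=$1 group=$2
  awk -v want="[$group]" '
    /^[ \t]*(#|$)/ { next }                      # comment or blank line
    /^\[/          { in_group = ($1 == want); next }
    in_group       { print $1 }
  ' "$file"
}

# Usage: inventory_group_hosts /root/multinode control
```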

2.4.4 Configure the Kolla deployment settings

vim /etc/kolla/globals.yml

[root@dba_bigdata_node1 192.168.10.11 kolla]# cat globals.yml | egrep -v '^#|^$'
---
kolla_base_distro: "centos"
kolla_install_type: "source"
openstack_release: "train"
node_custom_config: "/etc/kolla/config"
kolla_internal_vip_address: "192.168.10.20"
docker_namespace: "kolla"
network_interface: "enp7s0f0"
api_interface: "{{ network_interface }}"
storage_interface: "{{ network_interface }}"
cluster_interface: "{{ network_interface }}"
tunnel_interface: "{{ network_interface }}"
dns_interface: "{{ network_interface }}"
neutron_external_interface: "enp7s0f1"
neutron_plugin_agent: "openvswitch"
neutron_tenant_network_types: "vxlan,vlan,flat"
keepalived_virtual_router_id: "51"
enable_haproxy: "yes"
enable_mariadb: "yes"
enable_memcached: "yes"
enable_ceilometer: "yes"
enable_cinder: "yes"
enable_cinder_backup: "yes"
enable_cinder_backend_lvm: "yes"
enable_gnocchi: "yes"
enable_heat: "yes"
enable_neutron_dvr: "yes"
enable_neutron_qos: "yes"
enable_neutron_agent_ha: "yes"
enable_neutron_provider_networks: "yes"
enable_nova_ssh: "yes"
cinder_volume_group: "cinder-volumes"
nova_compute_virt_type: "qemu"
nova_console: "novnc"
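Kolla-ansible reads these settings as YAML; for quick checks in shell scripts, a sketch like the following prints a single top-level value. `globals_get` is a hypothetical helper that only understands one-line `key: "value"` entries like those above; it is not a YAML parser.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the value of top-level KEY from a flat YAML file
# (default /etc/kolla/globals.yml). Commented lines are ignored because they
# do not start with "KEY:"; surrounding double quotes are stripped.
globals_get() {
  local key=$1 file=${2:-/etc/kolla/globals.yml}
  awk -v k="$key" '
    index($0, k ":") == 1 {
      v = substr($0, length(k) + 2)     # text after "KEY:"
      sub(/^[ \t]+/, "", v); sub(/[ \t]+$/, "", v)
      gsub(/^"|"$/, "", v)
      print v
      exit
    }
  ' "$file"
}

# Usage: globals_get network_interface    # enp7s0f0 for the file above
```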

Generate random passwords:

kolla-genpwd
[root@dba_bigdata_node1 192.168.10.11 kolla]# cat /etc/kolla/passwords.yml
.....
zfssa_iscsi_password: XL8XeUdxaowGT9HUsOnGpxJyalvlm9YV6xNvZZx0
zun_database_password: lhxgYCRaiN4ACvFgISIbgimGxkUe1z3pVgU0CYxd
zun_keystone_password: ZDNuGXjOUyme28dUX1fWKbXdDDvItAxxtjYRwiUx

Change the admin login password:

sed -i 's/^keystone_admin_password.*/keystone_admin_password: 123456/' /etc/kolla/passwords.yml

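2.4.5 LVM disk

When Ceph was used for the disks, instances could not be created, so LVM is used instead.

Create the physical volume:

pvcreate /dev/sdb

Create the volume group:

vgcreate cinder-volumes /dev/sdb

Check that they were created: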

[root@dba-bigdata-node1 192.168.10.11 /]# vgs
  VG             #PV #LV #SN Attr   VSize  VFree
  centos           1   3   0 wz--n-  1.63t       0
  cinder-volumes   1   2   0 wz--n- <3.82t <195.21g
[root@dba-bigdata-node1 192.168.10.11 /]# pvs
  PV         VG             Fmt  Attr PSize  PFree
  /dev/sda2  centos         lvm2 a--   1.63t       0
  /dev/sdb   cinder-volumes lvm2 a--  <3.82t <195.21g
[root@dba-bigdata-node1 192.168.10.11 /]# lvs
  LV                                          VG             Attr       LSize   Pool                Origin Data%  Meta%  Move Log Cpy%Sync Convert
  home                                        centos         -wi-ao----   1.58t
  root                                        centos         -wi-ao----  50.00g
  swap                                        centos         -wi-ao----   4.00g
  cinder-volumes-pool                         cinder-volumes twi-aotz--  <3.63t                            0.00   10.41
  volume-beb1939a-b444-4ea0-b326-86f924fe3a50 cinder-volumes Vwi-a-tz-- 100.00g cinder-volumes-pool        0.00

Switch the Kolla configuration (globals.yml) to LVM:

enable_cinder: "yes"
enable_cinder_backup: "yes"
enable_cinder_backend_lvm: "yes"
cinder_volume_group: "cinder-volumes"

2.4.6 Configure Nova

mkdir -p /etc/kolla/config/nova
cat >> /etc/kolla/config/nova/nova-compute.conf << EOF
[libvirt]
virt_type = qemu
cpu_mode = none
EOF

2.4.7 Do not create a new volume by default when launching instances

mkdir -p /etc/kolla/config/horizon/
cat >> /etc/kolla/config/horizon/custom_local_settings << EOF
LAUNCH_INSTANCE_DEFAULTS = {'create_volume': False,}
EOF

2.4.8 Create the Ceph configuration

cat >> /etc/kolla/config/ceph.conf << EOF
[global]
osd pool default size = 3
osd pool default min size = 2
mon_clock_drift_allowed = 2
osd_pool_default_pg_num = 8
osd_pool_default_pgp_num = 8
mon clock drift warn backoff = 30
EOF

2.3 OpenStack installation

2.3.1 Pull the Docker images

Download the Docker images to every node in advance to speed up the installation:

kolla-ansible -i /root/multinode pull

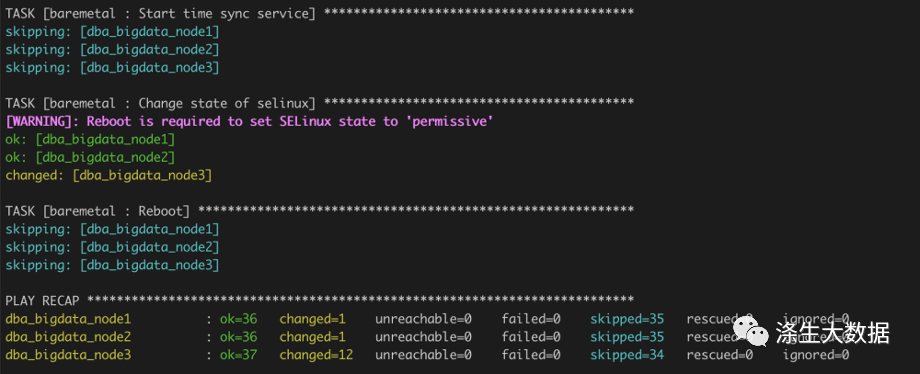

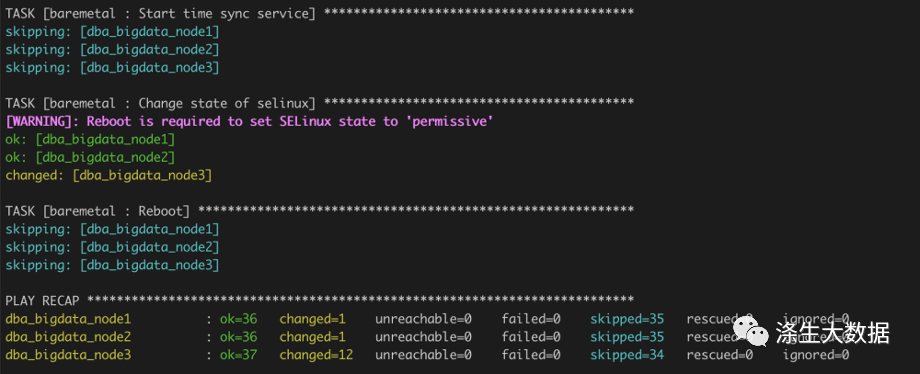

2.3.2 Install dependencies on every node

kolla-ansible -i /root/multinode bootstrap-servers

2.3.3 Run the pre-deployment checks

kolla-ansible -i /root/multinode prechecks

2.3.4 Deploy

Before deploying, pull all the required Docker images onto every host to speed up the deployment:

kolla-ansible -i /root/multinode pull

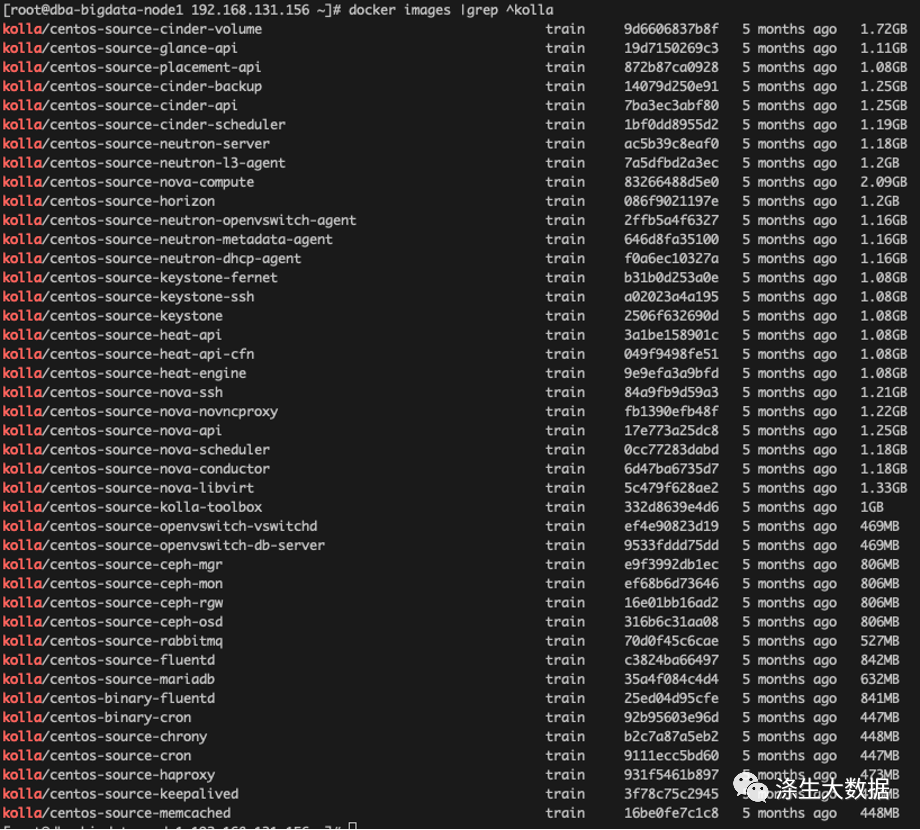

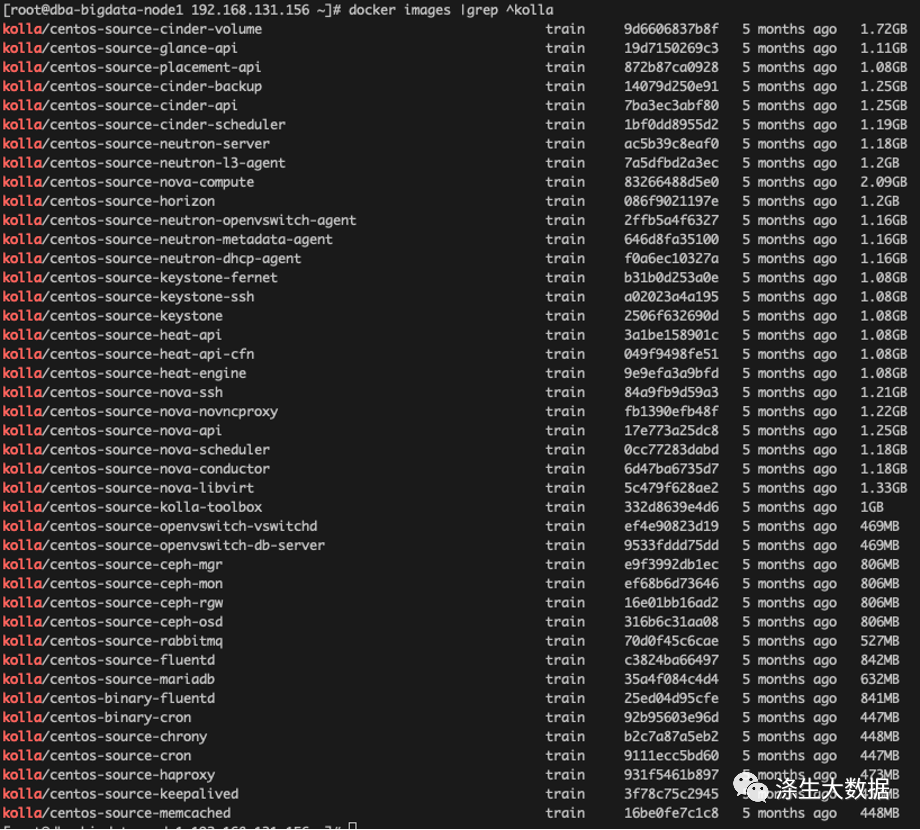

Check the images on each host with docker images.

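To reuse these images later, push them to the private Docker registry. First retag them (docker tag SOURCE:TAG TARGET:TAG). The command below only prints one docker tag command per kolla image; append | sh to execute them: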

docker images |grep ^kolla|grep 'train ' |awk '{print "docker tag "$1 ":" $2 " 192.168.10.11:4000/" $1 ":" $2 }'

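Push the local images to the private registry (again, the command only prints the docker push commands; append | sh to run them):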

docker images |grep ^kolla|grep 'train ' |awk '{print "docker push 192.168.10.11:4000/" $1 ":" $2 }'
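Because the two awk commands above only print `docker tag` and `docker push` commands, nothing happens until their output is piped to a shell. A sketch that emits both commands per image, so the list can be reviewed first and then run with `| sh`. `kolla_registry_cmds` is a hypothetical name; it matches only repositories under `kolla/` with an exact tag, which avoids accidentally catching tags that merely start with "train".

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "docker images" output on stdin and print the
# docker tag + docker push commands that copy every kolla/* image with the
# given tag into REGISTRY. Nothing is executed; review, then pipe to sh.
kolla_registry_cmds() {
  local registry=$1 tag=${2:-train}
  awk -v reg="$registry" -v tag="$tag" '
    $1 ~ /^kolla\// && $2 == tag {
      src = $1 ":" $2
      printf "docker tag %s %s/%s\n", src, reg, src
      printf "docker push %s/%s\n", reg, src
    }
  '
}

# Usage sketch:
# docker images | kolla_registry_cmds 192.168.10.11:4000 train        # review
# docker images | kolla_registry_cmds 192.168.10.11:4000 train | sh   # run
```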

Check that the push completed:

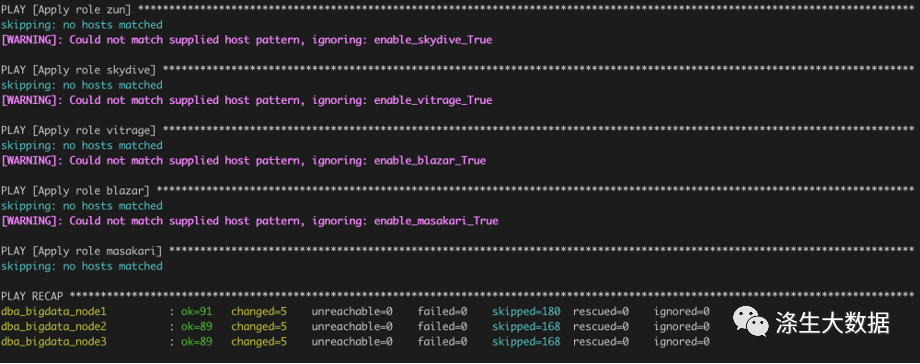

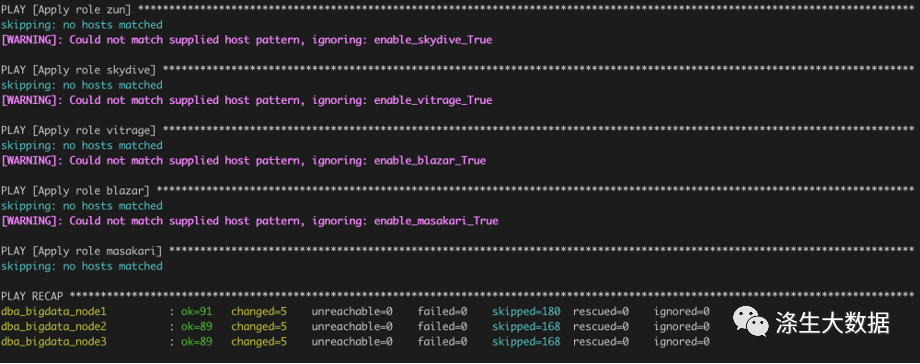

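Run the actual deployment; it takes about half an hour:

kolla-ansible -i /root/multinode deploy

2.3.5 Post-deployment initialization

[root@dba-bigdata-node1 192.168.10.11 ~]# kolla-ansible -i multinode post-deploy

This generates, on the local host, the environment variables needed by the command-line client: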

[root@dba-bigdata-node1 192.168.10.11 ~]# cat /etc/kolla/admin-openrc.sh
# Clear any old environment that may conflict.
for key in $( set | awk '{FS="="} /^OS_/ {print $1}' ); do unset $key ; done
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=admin
export OS_TENANT_NAME=admin
export OS_USERNAME=admin
export OS_PASSWORD='4rfv$RFV094'
export OS_AUTH_URL=http://192.168.10.20:35357/v3
export OS_INTERFACE=internal
export OS_ENDPOINT_TYPE=internalURL
export OS_IDENTITY_API_VERSION=3
export OS_REGION_NAME=RegionOne
export OS_AUTH_PLUGIN=password

Install the OpenStack command-line client:

yum install centos-release-openstack-train -y
yum makecache fast
yum install python-openstackclient -y

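Edit the initialization script /usr/share/kolla-ansible/init-runonce so that the external network matches your environment, then load the admin credentials and run it (. /etc/kolla/admin-openrc.sh, then /usr/share/kolla-ansible/init-runonce). The variables to edit are:

ENABLE_EXT_NET=${ENABLE_EXT_NET:-1}
EXT_NET_CIDR=${EXT_NET_CIDR:-'10.0.2.0/24'}
EXT_NET_RANGE=${EXT_NET_RANGE:-'start=10.0.2.150,end=10.0.2.199'}
EXT_NET_GATEWAY=${EXT_NET_GATEWAY:-'10.0.2.1'}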
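The external network that init-runonce creates should describe the network actually reachable through `neutron_external_interface` (enp7s0f1 here), and the allocation range and gateway have to lie inside `EXT_NET_CIDR`. A pure-bash sketch to check that before running the script; `ip_to_int` and `ip_in_cidr` are hypothetical helpers, IPv4 only, with no validation of malformed input.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for an IPv4 sanity check (no input validation).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if address $1 lies inside CIDR block $2 (e.g. 10.0.2.0/24).
ip_in_cidr() {
  local ip=$1 net=${2%/*} bits=${2#*/} mask
  mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Usage with the values above:
# ip_in_cidr 10.0.2.150 10.0.2.0/24 && ip_in_cidr 10.0.2.199 10.0.2.0/24 &&
#   ip_in_cidr 10.0.2.1 10.0.2.0/24 && echo "range and gateway fit the CIDR"
```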

Open the OpenStack dashboard in a browser at http://192.168.10.20.
