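CentOS 7 + OpenStack + KVM

- Introduction to OpenStack

OpenStack is a free, open-source cloud management platform released under the Apache License. It was started jointly by NASA (the National Aeronautics and Space Administration) and Rackspace, and it is built from several major components that work together.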

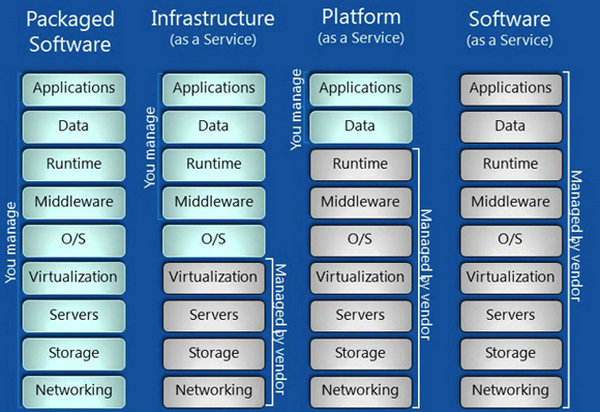

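OpenStack supports almost every type of cloud environment. The project aims to provide a cloud management platform that is simple to deploy, massively scalable, feature-rich, and standardized. Through a set of complementary services, each exposing an API for integration, OpenStack delivers an Infrastructure as a Service (IaaS) solution. Besides IaaS, the other mainstream service models are Platform as a Service (PaaS) and Software as a Service (SaaS); the figure shows how the three differ:

OpenStack is an open-source project that provides software for building and managing public and private clouds. Its community includes more than 130 companies and 1,350 developers, all of whom use OpenStack as a common front end for IaaS resources.

The project's primary goals are to simplify cloud deployment and to make clouds scale well. This article provides the guidance needed to set up and manage your own public or private cloud with OpenStack.

OpenStack helps service providers and enterprises build cloud infrastructure services (IaaS) similar to Amazon EC2 and S3.

OpenStack originally consisted of two main modules, Nova and Swift. Nova, developed by NASA, deploys virtual servers and handles compute workloads; Swift, developed by Rackspace, is a distributed cloud storage module. The two can be used together or separately.

Besides strong backing from Rackspace and NASA, OpenStack has received contributions and support from heavyweights such as Dell, Citrix, Cisco, and Canonical. It has grown very quickly and looks set to displace Eucalyptus, another leading open-source cloud platform.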

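- OpenStack Core Components

OpenStack covers networking, virtualization, operating systems, servers, and more. It is a cloud platform under active development whose projects are classified, by maturity and importance, as core projects, incubated projects, supporting projects, and related projects. Each project has its own committee and project technical lead, and the classification is not fixed: an incubated project can be promoted to a core project as it matures and gains importance. As of the Icehouse release there are 10 core projects (OpenStack services), listed below.

Compute: Nova. A set of controllers that manages the whole life cycle of virtual machine instances for individual users or groups and provides virtual servers on demand. It handles creating, starting, shutting down, suspending, pausing, resizing, migrating, rebooting, and destroying VMs, and sets specifications such as CPU and memory.

Object Storage: Swift. A large-scale, scalable object store with built-in redundancy and high fault tolerance. It stores and retrieves files, can hold images for Glance, and provides volume backup for Cinder.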

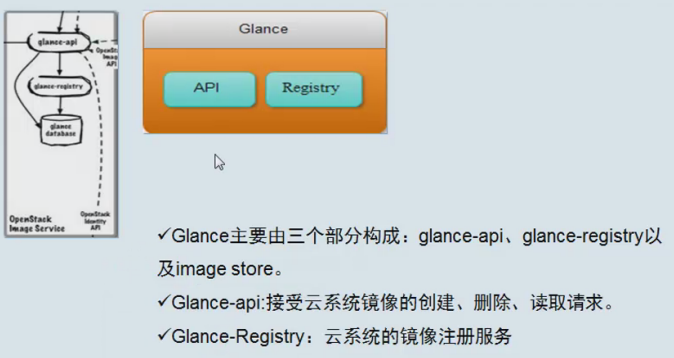

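Image Service: Glance. A system for discovering and retrieving virtual machine images. It supports many image formats (AKI, AMI, ARI, ISO, QCOW2, Raw, VDI, VHD, VMDK) and can create and upload images, delete images, and edit basic image information.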

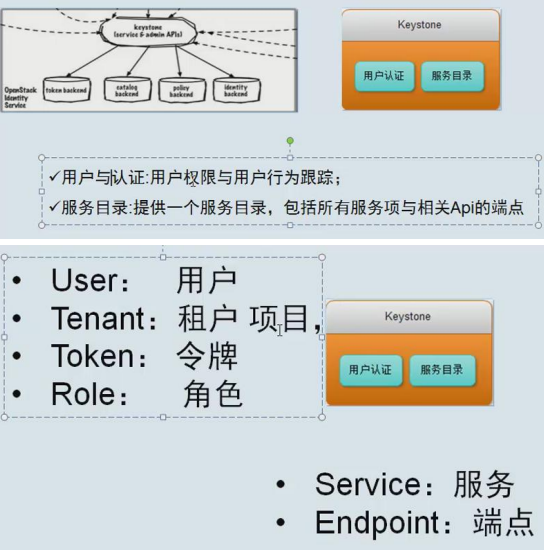

Identity Service: Keystone. Provides authentication, service rules, and service tokens for the other OpenStack services, and manages Domains, Projects, Users, Groups, and Roles.

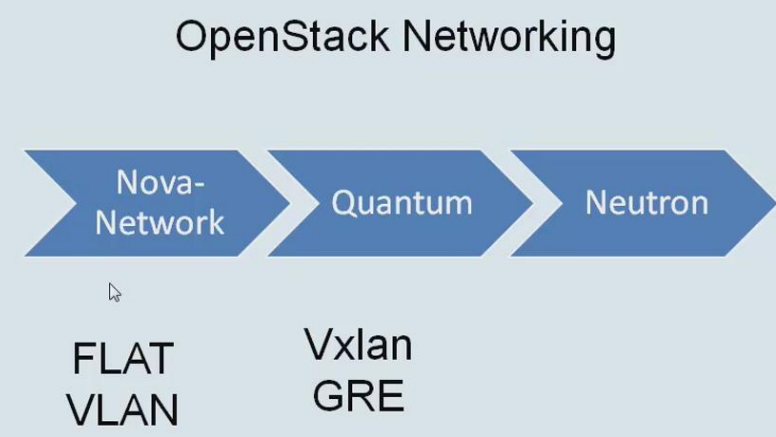

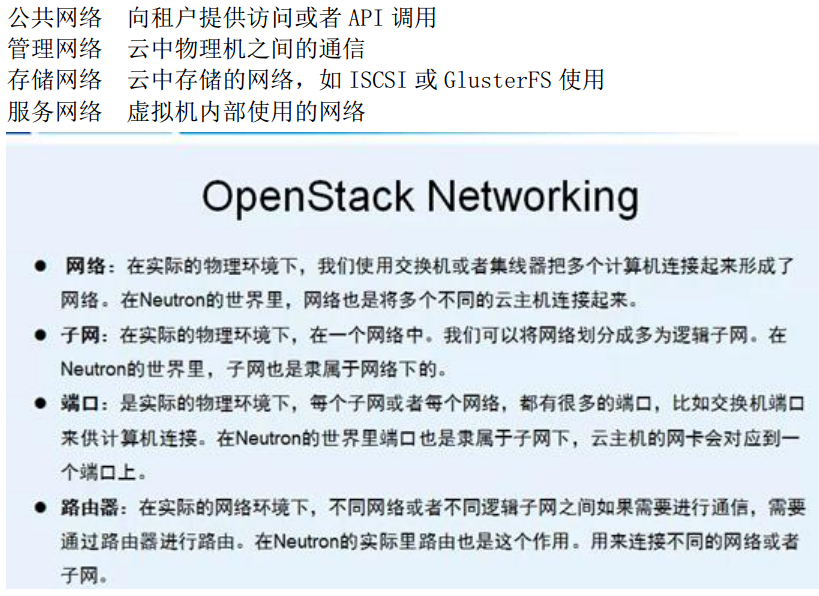

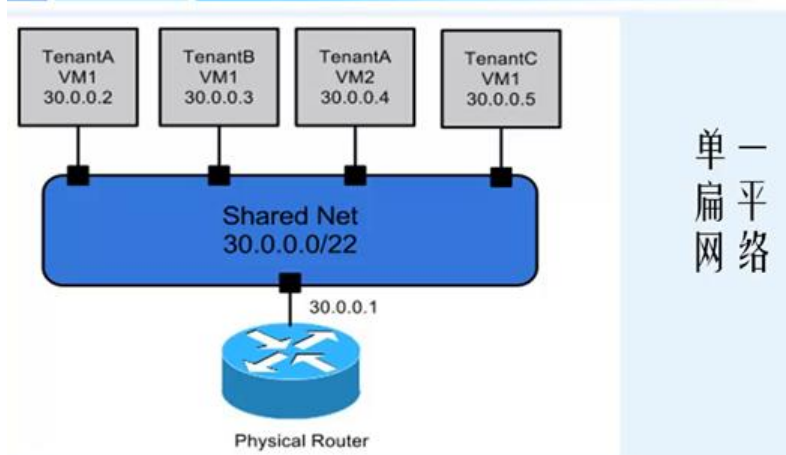

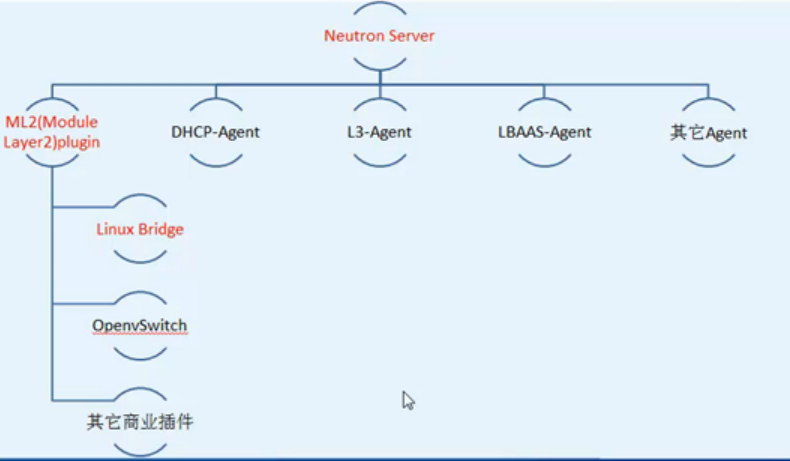

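Networking and address management (Network): Neutron. Provides network virtualization for the cloud and network connectivity for the other OpenStack services. Through its API, users can define Networks, Subnets, and Routers and configure DHCP, DNS, load balancing, and L3 services. Supported network types include GRE and VLAN. Its plug-in architecture supports many mainstream network vendors and technologies, such as Open vSwitch.

Block Storage: Cinder. Provides persistent block storage to running instances. Its plug-in driver architecture simplifies creating and managing block devices, for example creating and deleting volumes and attaching them to or detaching them from instances.

Dashboard (UI): Horizon. The web management portal for the OpenStack services. It simplifies common operations such as launching instances, assigning IP addresses, and configuring access control.

Metering: Ceilometer. Works like a funnel, collecting almost every event that occurs inside OpenStack and supplying that data to billing, monitoring, and other services.

Orchestration: Heat. Provides template-based coordinated deployment that automates provisioning of the cloud infrastructure runtime environment (compute, storage, and network resources).

Database Service: Trove. Provides scalable and reliable relational and non-relational database engines as a service within an OpenStack environment.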

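- Preparing the OpenStack Environment

Operating system version: CentOS Linux release 7.3.1611

| Host | IP address | Role |
| --- | --- | --- |
| node1 | 10.150.150.239 | Controller node |
| node2 | 192.168.1.121 | Compute node |
| node3 | 192.168.1.122 | Compute node (optional) |

The controller node manages the compute nodes; virtual machines are created on the compute nodes.

The figure shows the services configured on the controller node:

The figure shows the services configured on the compute nodes: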

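- Hosts and Firewall Settings

Apply the following configuration on node1 and node2:

    cat >/etc/hosts <<EOF
    127.0.0.1 localhost localhost.localdomain
    #103.27.60.52 mirror.centos.org
    66.241.106.180 mirror.centos.org
    10.150.150.239 node1
    192.168.238.153 node2
    192.168.238.154 node3
    EOF
    # Disable SELinux. Edit /etc/selinux/config directly: /etc/sysconfig/selinux
    # is a symlink to it, and sed -i would replace the link with a regular file.
    sed -i '/SELINUX/s/enforcing/disabled/g' /etc/selinux/config
    setenforce 0
    systemctl stop firewalld.service
    systemctl disable firewalld.service
    # Keep the controller and compute nodes time-synchronized
    ntpdate cn.pool.ntp.org
    # Set the host name from the /etc/hosts entry that matches this machine's IP
    hostname $(grep "$(ifconfig | grep broadcast | awk '{print $2}')" /etc/hosts | awk '{print $2}'); su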
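The host-name step above looks up this machine's IP in /etc/hosts with grep, which matches substrings: a pattern such as 192.168.1.12 would also hit a line for 192.168.1.121. A minimal sketch of an exact-match lookup follows. The function name `lookup_host_name` and the 10.0.0.x addresses are illustrative assumptions, and the demo reads a scratch file rather than the real /etc/hosts.

```shell
# Hypothetical helper: print the first host name mapped to an exact IPv4
# address in a hosts-format file. Comparing the whole first field means
# commented-out entries ("#10.0.0.9 ...") and longer addresses never match.
lookup_host_name() {
    hosts_file=$1
    ip=$2
    awk -v ip="$ip" '$1 == ip { print $2; exit }' "$hosts_file"
}

# Demo against a scratch file so the real /etc/hosts is not touched.
demo_hosts=$(mktemp)
cat >"$demo_hosts" <<'EOF'
127.0.0.1 localhost localhost.localdomain
#10.0.0.9 node9
10.0.0.1 node1
10.0.0.12 node12
EOF
lookup_host_name "$demo_hosts" 10.0.0.1
```

On a real node the result could be passed to the hostname command, as the original one-liner does.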

- Installing OpenStack Services

Install the following services on node1, the controller node:

Create the databases on node1:

    create database keystone;
    grant all privileges on keystone.* to 'keystone'@'localhost' identified by 'keystone';
    grant all privileges on keystone.* to 'keystone'@'%' identified by 'keystone';
    grant all privileges on keystone.* to 'keystone'@'node1' identified by 'keystone';
    create database glance;
    grant all privileges on glance.* to 'glance'@'localhost' identified by 'glance';
    grant all privileges on glance.* to 'glance'@'%' identified by 'glance';
    grant all privileges on glance.* to 'glance'@'node1' identified by 'glance';
    create database nova;
    grant all privileges on nova.* to 'nova'@'localhost' identified by 'nova';
    grant all privileges on nova.* to 'nova'@'%' identified by 'nova';
    grant all privileges on nova.* to 'nova'@'node1' identified by 'nova';
    create database nova_api;
    grant all privileges on nova_api.* to 'nova'@'localhost' identified by 'nova';
    grant all privileges on nova_api.* to 'nova'@'%' identified by 'nova';
    grant all privileges on nova_api.* to 'nova'@'node1' identified by 'nova';
    create database nova_cell0;
    grant all privileges on nova_cell0.* to 'nova'@'localhost' identified by 'nova';
    grant all privileges on nova_cell0.* to 'nova'@'%' identified by 'nova';
    grant all privileges on nova_cell0.* to 'nova'@'node1' identified by 'nova';
    create database neutron;
    grant all privileges on neutron.* to 'neutron'@'localhost' identified by 'neutron';
    grant all privileges on neutron.* to 'neutron'@'%' identified by 'neutron';
    grant all privileges on neutron.* to 'neutron'@'node1' identified by 'neutron';
    create database cinder;
    grant all privileges on cinder.* to 'cinder'@'localhost' identified by 'cinder';
    grant all privileges on cinder.* to 'cinder'@'%' identified by 'cinder';
    grant all privileges on cinder.* to 'cinder'@'node1' identified by 'cinder';
    flush privileges;
    exit
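The statements above repeat one pattern per database: create it, then grant access from localhost, from any host, and from node1. As a sketch only, the same SQL can be generated with a small shell function. `gen_grants` is a hypothetical helper, and it uses `create database if not exists` (an assumption, so that re-running is harmless); the output is printed for review rather than sent to the server.

```shell
# Hypothetical helper: print the CREATE/GRANT statements for one service.
# Arguments: database name, account name, password.
gen_grants() {
    db=$1
    user=$2
    pass=$3
    echo "create database if not exists $db;"
    for host in localhost % node1; do
        echo "grant all privileges on $db.* to '$user'@'$host' identified by '$pass';"
    done
}

# Same databases and accounts as in the listing above.
gen_all_grants() {
    gen_grants keystone   keystone keystone
    gen_grants glance     glance   glance
    gen_grants nova       nova     nova
    gen_grants nova_api   nova     nova
    gen_grants nova_cell0 nova     nova
    gen_grants neutron    neutron  neutron
    gen_grants cinder     cinder   cinder
    echo "flush privileges;"
}

gen_all_grants
```

After checking the output, it could be piped into the mysql client on node1. Using the service name as its own password mirrors the tutorial and is only suitable for a lab.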

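- Configuring the MQ Service

MQ stands for Message Queue. A message queue is an application-to-application communication method: applications communicate by writing messages (application-specific data) to a queue and reading them from it, with no dedicated connection linking them.

- Introduction to Message Queues

Message-queue middleware is an important component of distributed systems. It addresses application coupling, asynchronous messaging, and traffic peak shaving, and helps build architectures that are high-performance, highly available, scalable, and eventually consistent. Mainstream message queues include ActiveMQ, RabbitMQ, ZeroMQ, Kafka, MetaMQ, and RocketMQ.

Messaging means that programs communicate by sending data in messages rather than by calling each other directly; direct calls are typical of techniques such as remote procedure calls (RPC).

Queuing means that applications communicate through queues, which removes the requirement that the sending and receiving applications run at the same time. RabbitMQ is a complete, reusable enterprise messaging system built on AMQP and released under the open-source Mozilla Public License (MPL).

OpenStack's architecture requires a message queue for communication between its modules. Message validation, message transformation, and message routing decouple the modules as much as possible: a client does not need to know where the server is or whether it is currently running; it only needs to send its message through the queue.

RabbitMQ suits large-scale systems with flexible, easily extended topologies. It keeps message delivery timely across modules, nodes, and processes, and supports the scale-out deployment, elastic expansion, flexible architecture, and information-security needs of an OpenStack cloud.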

-

-

- Rabbitmq应用场景

-

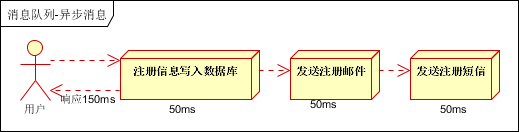

1)MQ异步信息场景:

MQ应用的场合非常的多,例如某个论坛网站,用户注册信息后,需要发注册邮件和注册短信,如图所示:

将注册信息写入数据库成功后,发送注册邮件,再发送注册短信。以上三个任务全部完成后,返回给客户端,总共花费时间为150MS。

引入消息队列后,将不是必须的业务逻辑,异步处理。改造后的架构如下:

用户的响应时间相当于是注册信息写入数据库的时间,50毫秒。注册邮件,发送短信写入消息队列后,直接返回,因此写入消息队列的速度很快,基本可以忽略,因此用户的响应时间可能是50毫秒。

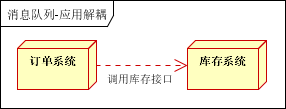

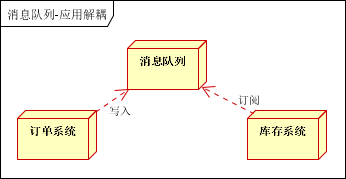

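2) Application decoupling:

After a user places an order, the order system must notify the inventory system. Traditionally the order system calls the inventory system's API directly. The drawback is that if the inventory system is unreachable, the stock deduction fails and the order fails with it.

With a message queue the flow becomes:

Order system: after the user places an order, the order system persists it, writes a message to the queue, and tells the user the order succeeded.

Inventory system: subscribes to order messages, receives them by pull or push, and updates the stock according to the order details.

If the inventory system is down when the order is placed, ordering still works: once the order system has written to the queue it no longer cares about the later steps. The order system and the inventory system are decoupled.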

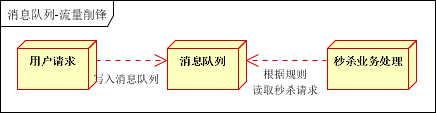

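3) Traffic peak shaving:

Peak shaving is another common use of message queues, especially in flash sales and group-buying events, where a sudden surge of traffic can bring the application down. The usual remedy is to put a message queue in front of the application. This caps the number of participants and keeps a short burst of traffic from overwhelming the application.

The server writes each incoming user request to the message queue first. If the queue length exceeds the maximum, the request is discarded or redirected to an error page. The flash-sale service then processes the requests held in the queue.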
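The admission rule just described (accept a request while the queue has room, reject it once the queue is full) can be sketched as a toy model in plain shell. This is not how RabbitMQ is driven in practice; the scratch-file queue, the limit of 3, and the names `enqueue` and `dequeue` are all assumptions made for illustration.

```shell
# Toy model of peak shaving: a bounded FIFO queue kept in a scratch file.
QUEUE_FILE=$(mktemp)
QUEUE_MAX=3   # hypothetical cap on waiting requests

# enqueue REQUEST: accept the request if there is room, otherwise reject it.
enqueue() {
    depth=$(($(wc -l <"$QUEUE_FILE")))
    if [ "$depth" -ge "$QUEUE_MAX" ]; then
        echo "rejected: $1"
        return 1
    fi
    echo "$1" >>"$QUEUE_FILE"
    echo "queued: $1"
}

# dequeue: print and remove the oldest request.
dequeue() {
    [ -s "$QUEUE_FILE" ] || return 1
    head -n 1 "$QUEUE_FILE"
    tail -n +2 "$QUEUE_FILE" >"$QUEUE_FILE.tmp" && mv "$QUEUE_FILE.tmp" "$QUEUE_FILE"
}

# Five requests arrive in a burst; only the first three are admitted.
for user in u1 u2 u3 u4 u5; do
    enqueue "$user" || true
done

# The back-end service later takes the oldest request off the queue.
dequeue
```

The burst never reaches the back end directly: it only ever sees requests at the pace at which it calls `dequeue`.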

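- Installing and Configuring RabbitMQ

Start the service, which listens on TCP port 5672 by default, and add the user that the OpenStack services use to communicate.

    systemctl enable rabbitmq-server.service
    systemctl start rabbitmq-server.service
    rabbitmqctl add_user openstack openstack
    rabbitmqctl set_permissions openstack ".*" ".*" ".*"
    rabbitmq-plugins list
    rabbitmq-plugins enable rabbitmq_management
    systemctl restart rabbitmq-server.service
    lsof -i :15672

Open the RabbitMQ management UI at http://10.150.150.239:15672, as shown in the figure:

Log in with the default user name and password (guest/guest), add the openstack user to a group, and test the login.

Once that is done, log in with the openstack user and its password, as shown in the figure: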

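- Testing RabbitMQ Messaging

With the message server deployed, you can publish and subscribe to simple messages. A complete RabbitMQ message flow involves:

- The producer, the application that publishes messages;

- The queue, a buffer that stores messages;

- The consumer, the application that receives messages.

The core idea of the RabbitMQ messaging model is that a producer never sends a message directly to a queue; it does not even know whether the message has been delivered to one. The producer only sends the message to an exchange. An exchange is simple: it receives messages from producers on one side and pushes them into queues on the other.

The exchange must know what to do with each message it receives: push it to one specific queue, push it to several queues, or discard it.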
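To make the routing decision concrete, here is a toy model of a direct-style exchange written in plain shell. It does not use RabbitMQ or its API; the file-backed queues, the function names (`bind_queue`, `publish`, `consume`), and the routing keys are all hypothetical. The producer only ever calls `publish`, and the "exchange" decides which queues receive a copy.

```shell
# Toy model: bindings and queues are plain files in a scratch directory.
WORKDIR=$(mktemp -d)
BINDINGS="$WORKDIR/bindings"   # one "<routing_key> <queue>" pair per line
: >"$BINDINGS"

# bind_queue QUEUE ROUTING_KEY: create the queue and bind it to a key.
bind_queue() {
    echo "$2 $1" >>"$BINDINGS"
    : >>"$WORKDIR/queue.$1"
}

# publish ROUTING_KEY MESSAGE: copy the message into every queue bound to
# the key and print how many queues received it (0 means it was dropped).
publish() {
    key=$1
    msg=$2
    delivered=0
    while read -r bound_key queue; do
        if [ "$bound_key" = "$key" ]; then
            echo "$msg" >>"$WORKDIR/queue.$queue"
            delivered=$((delivered + 1))
        fi
    done <"$BINDINGS"
    echo "$delivered"
}

# consume QUEUE: print the messages buffered in a queue.
consume() {
    cat "$WORKDIR/queue.$1"
}

bind_queue mail  user.registered
bind_queue sms   user.registered
bind_queue stock order.created

publish user.registered "welcome alice"   # reaches two queues
publish unknown.key "nobody listens"      # no binding, so it is discarded
consume mail
```

The three outcomes from the paragraph above all appear here: `order.created` maps to a single queue, `user.registered` fans out to two, and an unbound key is dropped.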

- Configuring the Keystone Identity Service

Configure the Keystone service:

    # Make memcached listen on all interfaces
    sed -i 's/OPTIONS=.*/OPTIONS="-l 0.0.0.0"/' /etc/sysconfig/memcached
    systemctl enable memcached
    systemctl start memcached
    # Keystone
    cp /etc/keystone/keystone.conf{,.bak}   # back up the default configuration
    Keys=$(openssl rand -hex 10)            # generate a random token
    echo $Keys
    echo "keystone $Keys" >>~/openstack.log
    echo "
    [DEFAULT]
    admin_token = $Keys
    verbose = true
    [database]
    connection = mysql+pymysql://keystone:keystone@node1/keystone
    [token]
    provider = fernet
    driver = memcache
    [memcache]
    servers = node1:11211
    " >/etc/keystone/keystone.conf
    # Initialize the Keystone database
    su -s /bin/sh -c "keystone-manage db_sync" keystone
    # Check that the tables were created
    mysql -h node1 -ukeystone -pkeystone -e "use keystone;show tables;"
    # Initialize the key repositories
    keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
    keystone-manage credential_setup --keystone-user keystone --keystone-group keystone
    # Set the admin (management) user and its password
    keystone-manage bootstrap --bootstrap-password admin \
      --bootstrap-admin-url http://node1:35357/v3/ \
      --bootstrap-internal-url http://node1:5000/v3/ \
      --bootstrap-public-url http://node1:5000/v3/ \
      --bootstrap-region-id RegionOne
    # Apache configuration
    cp /etc/httpd/conf/httpd.conf{,.bak}
    echo "ServerName node1" >>/etc/httpd/conf/httpd.conf
    ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/
    # Start Apache HTTP and enable it at boot
    systemctl enable httpd.service
    systemctl restart httpd.service
    netstat -antp | egrep ':5000|:35357|:80'

Create the Keystone users. First set the admin user's environment variables, which the user-creation commands rely on:

    echo "
    export OS_PROJECT_DOMAIN_NAME=default
    export OS_USER_DOMAIN_NAME=default
    export OS_PROJECT_NAME=admin
    export OS_USERNAME=admin
    export OS_PASSWORD=admin
    export OS_AUTH_URL=http://node1:35357/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2
    " >admin-openstack.sh
    # Check that the script works
    source admin-openstack.sh
    openstack token issue
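The admin and demo environment scripts in this section differ only in user name, password, project, and auth URL. A minimal sketch of a generator for them follows. `write_openrc` is a hypothetical helper name, and restricting the file to mode 600 is an added assumption on the grounds that it stores a password in plain text. The demo writes into a scratch directory.

```shell
# Hypothetical helper: write an OpenStack environment script.
# Arguments: output file, user name, password, project, auth URL.
write_openrc() {
    cat >"$1" <<EOF
export OS_PROJECT_DOMAIN_NAME=default
export OS_USER_DOMAIN_NAME=default
export OS_PROJECT_NAME=$4
export OS_USERNAME=$2
export OS_PASSWORD=$3
export OS_AUTH_URL=$5
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
    chmod 600 "$1"   # the file holds a plain-text password
}

# Demo in a scratch directory, with the values used in this tutorial.
rc_dir=$(mktemp -d)
write_openrc "$rc_dir/admin-openstack.sh" admin admin admin http://node1:35357/v3
. "$rc_dir/admin-openstack.sh"
echo "$OS_USERNAME@$OS_AUTH_URL"
```

Calling the helper again with demo, demo, demo, and http://node1:5000/v3 would produce the equivalent of demo-openstack.sh.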

Create the demo project, with an ordinary user, its password, and a role:

    openstack project create --domain default --description "Demo Project" demo
    openstack user create --domain default --password=demo demo
    openstack role create user
    openstack role add --project demo --user demo user
    # Environment script for the demo user
    echo "
    export OS_PROJECT_DOMAIN_NAME=default
    export OS_USER_DOMAIN_NAME=default
    export OS_PROJECT_NAME=demo
    export OS_USERNAME=demo
    export OS_PASSWORD=demo
    export OS_AUTH_URL=http://node1:5000/v3
    export OS_IDENTITY_API_VERSION=3
    export OS_IMAGE_API_VERSION=2
    " >demo-openstack.sh
    # Check that the script works
    source demo-openstack.sh
    openstack token issue

The output of openstack token issue is as follows:

Create the service project, then create the glance, nova, and neutron users and grant them the admin role:

    openstack project create --domain default --description "Service Project" service
    openstack user create --domain default --password=glance glance
    openstack role add --project service --user glance admin
    openstack user create --domain default --password=nova nova
    openstack role add --project service --user nova admin
    openstack user create --domain default --password=neutron neutron
    openstack role add --project service --user neutron admin

To view the endpoints, run: openstack endpoint list

- Configuring the Glance Image Service

Register the service in Keystone: create the glance service entity and its API endpoints (public, internal, admin).

    source admin-openstack.sh || { echo "Load the admin-openstack.sh environment script created earlier"; exit; }
    openstack service create --name glance --description "OpenStack Image" image
    openstack endpoint create --region RegionOne image public http://node1:9292
    openstack endpoint create --region RegionOne image internal http://node1:9292
    openstack endpoint create --region RegionOne image admin http://node1:9292

Write the configuration files /etc/glance/glance-api.conf and /etc/glance/glance-registry.conf as follows:

    # Glance configuration
    cp /etc/glance/glance-api.conf{,.bak}
    cp /etc/glance/glance-registry.conf{,.bak}
    # Images are stored in /var/lib/glance/images/ by default
    Imgdir=/var/lib/glance/images/
    echo "#
    [database]
    connection = mysql+pymysql://glance:glance@node1/glance
    [keystone_authtoken]
    auth_uri = http://node1:5000/v3
    auth_url = http://node1:35357/v3
    memcached_servers = node1:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = glance
    password = glance
    [paste_deploy]
    flavor = keystone
    [glance_store]
    stores = file,http
    default_store = file
    filesystem_store_datadir = $Imgdir
    #">/etc/glance/glance-api.conf
    ##############################
    echo "#
    [database]
    connection = mysql+pymysql://glance:glance@node1/glance
    [keystone_authtoken]
    auth_uri = http://node1:5000/v3
    auth_url = http://node1:35357/v3
    memcached_servers = node1:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = glance
    password = glance
    [paste_deploy]
    flavor = keystone
    #">/etc/glance/glance-registry.conf

Sync the database and check it:

    su -s /bin/sh -c "glance-manage db_sync" glance
    mysql -h node1 -u glance -pglance -e "use glance;show tables;"
    # Start the services and enable them at boot
    systemctl enable openstack-glance-api openstack-glance-registry
    systemctl start openstack-glance-api openstack-glance-registry
    #systemctl restart openstack-glance-api openstack-glance-registry
    netstat -antp | egrep '9292|9191'

Download an image and upload it to Glance:

    # Test image; the download is sometimes slow
    wget http://download.cirros-cloud.net/0.3.5/cirros-0.3.5-x86_64-disk.img
    # Upload the image to the image service using the qcow2 disk format and
    # the bare container format, and make it publicly visible
    source admin-openstack.sh
    openstack image create "cirros" \
      --file cirros-0.3.5-x86_64-disk.img \
      --disk-format qcow2 --container-format bare \
      --public
    # Check that the upload succeeded
    openstack image list
    #glance image-list
    ls $Imgdir
    # Delete an image
    #glance image-delete <image-id>
    #wget http://cloud.centos.org/centos/7/images/CentOS-7-x86_64-GenericCloud.qcow2
    #glance image-create --name "CentOS-7-x86_64" --file CentOS-7-x86_64-GenericCloud.qcow2 --disk-format qcow2 --container-format bare --visibility public

Verify the result:

    glance image-list
    ll /var/lib/glance/images/

- Configuring Nova on the Controller Node

Register the Nova and Placement services in Keystone:

    # The Nova databases, users, and credentials were created earlier
    source admin-openstack.sh || { echo "Load the admin-openstack.sh environment script created earlier"; exit; }
    # Register in Keystone: create the nova service and API endpoints
    # (the nova user was created earlier)
    openstack service create --name nova --description "OpenStack Compute" compute
    openstack endpoint create --region RegionOne compute public http://node1:8774/v2.1
    openstack endpoint create --region RegionOne compute internal http://node1:8774/v2.1
    openstack endpoint create --region RegionOne compute admin http://node1:8774/v2.1
    # Create the placement user, service, and API endpoints
    openstack user create --domain default --password=placement placement
    openstack role add --project service --user placement admin
    openstack service create --name placement --description "Placement API" placement
    openstack endpoint create --region RegionOne placement public http://node1:8778
    openstack endpoint create --region RegionOne placement internal http://node1:8778
    openstack endpoint create --region RegionOne placement admin http://node1:8778
    #openstack endpoint delete id

Configure the Nova controller services:

    echo '#
    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    transport_url = rabbit://openstack:openstack@node1
    my_ip = 10.150.150.239
    use_neutron = True
    firewall_driver = nova.virt.firewall.NoopFirewallDriver
    [api_database]
    connection = mysql+pymysql://nova:nova@node1/nova_api
    [database]
    connection = mysql+pymysql://nova:nova@node1/nova
    [api]
    auth_strategy = keystone
    [keystone_authtoken]
    auth_uri = http://node1:5000
    auth_url = http://node1:35357
    memcached_servers = node1:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = nova
    password = nova
    [vnc]
    enabled = true
    vncserver_listen = $my_ip
    vncserver_proxyclient_address = $my_ip
    [glance]
    api_servers = http://node1:9292
    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp
    [placement]
    os_region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://node1:35357/v3
    username = placement
    password = placement
    [scheduler]
    discover_hosts_in_cells_interval = 300
    #'>/etc/nova/nova.conf
    echo "
    #Placement API
    <Directory /usr/bin>
      <IfVersion >= 2.4>
        Require all granted
      </IfVersion>
      <IfVersion < 2.4>
        Order allow,deny
        Allow from all
      </IfVersion>
    </Directory>
    ">>/etc/httpd/conf.d/00-nova-placement-api.conf
    systemctl restart httpd
    sleep 2
    # Sync the databases
    su -s /bin/sh -c "nova-manage api_db sync" nova
    su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
    su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
    su -s /bin/sh -c "nova-manage db sync" nova
    # Check the data
    nova-manage cell_v2 list_cells
    mysql -h node1 -u nova -pnova -e "use nova_api;show tables;"
    mysql -h node1 -u nova -pnova -e "use nova;show tables;"
    mysql -h node1 -u nova -pnova -e "use nova_cell0;show tables;"
    # Enable at boot
    systemctl enable openstack-nova-api.service \
      openstack-nova-consoleauth.service openstack-nova-scheduler.service \
      openstack-nova-conductor.service openstack-nova-novncproxy.service
    # Start the services
    systemctl restart openstack-nova-api.service \
      openstack-nova-consoleauth.service openstack-nova-scheduler.service \
      openstack-nova-conductor.service openstack-nova-novncproxy.service
    # List the nodes
    #nova service-list
    openstack catalog list
    nova-status upgrade check
    openstack compute service list
    #nova-manage cell_v2 delete_cell --cell_uuid b736f4f4-2a67-4e60-952a-14b5a68b0f79
    #
    # Discover compute nodes; run this whenever a compute node is added
    #su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Run openstack host list to view the host nodes:

- Configuring Nova on the Compute Node

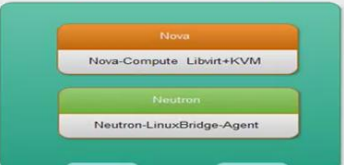

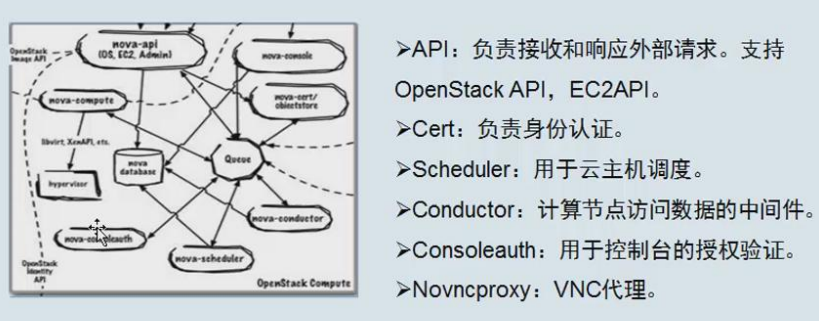

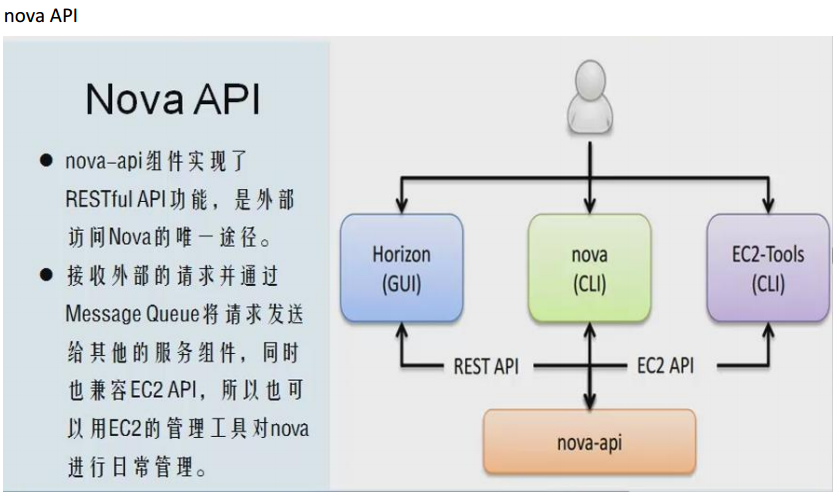

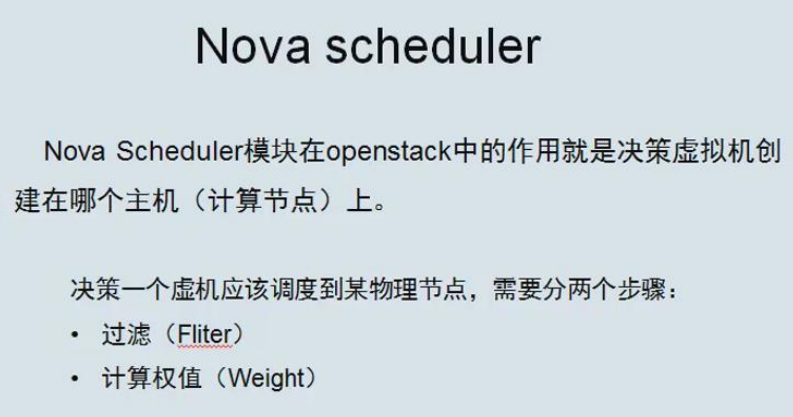

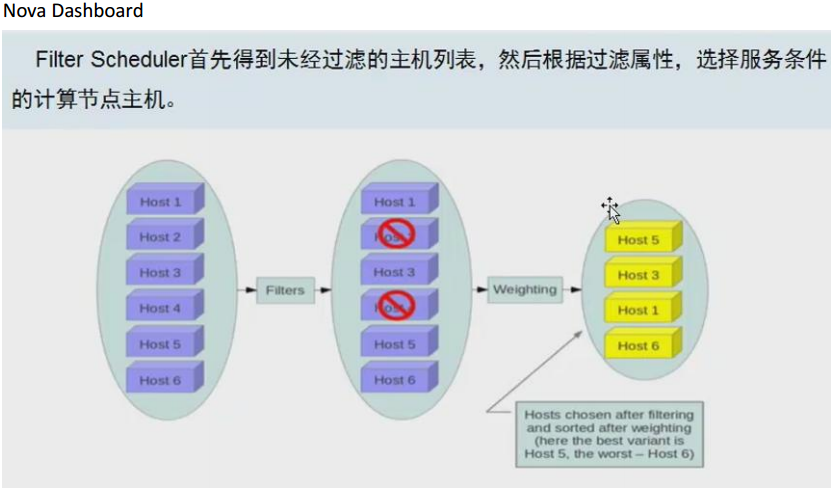

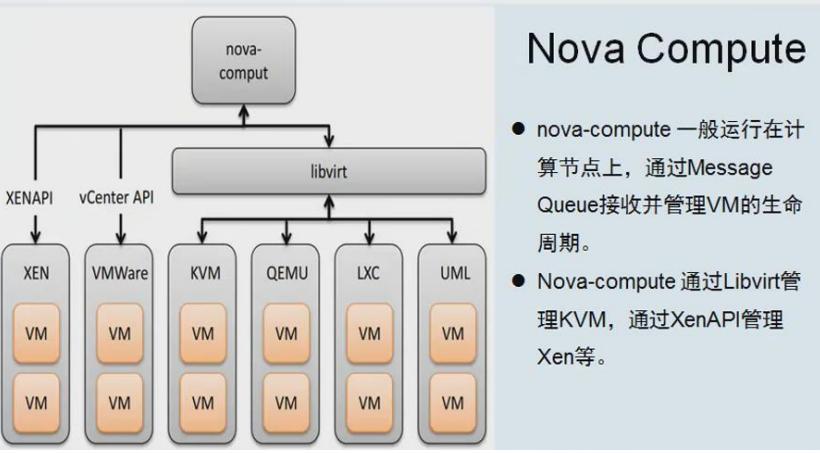

The figure gives an overview of nova-compute:

Configuration steps for node2, the compute node:

    mv /etc/yum.repos.d/CentOS-Base.repo{,.bak}
    wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
    yum install centos-release-openstack-pike -y
    yum install python-openstackclient openstack-selinux -y
    yum install python-openstackclient python2-PyMySQL -y
    yum install openstack-utils -y
    # Nova
    yum install -y openstack-nova-compute
    yum install -y python-openstackclient openstack-selinux
    # Neutron
    yum install -y openstack-neutron-linuxbridge ebtables ipset
    # Cinder
    yum install -y openstack-cinder python-cinderclient targetcli python-oslo-policy
    # Update QEMU
    yum install -y centos-release-qemu-ev.noarch
    yum install qemu-kvm qemu-img -y

Then write the nova.conf configuration file:

    # Set the Nova instance path (disk image files)
    Vdir=/var/lib/nova
    VHD=$Vdir/instances
    mkdir -p $VHD
    chown -R nova:nova $Vdir
    # Use QEMU or KVM; KVM acceleration requires hardware support
    [[ `egrep -c '(vmx|svm)' /proc/cpuinfo` = 0 ]] && { Kvm=qemu; } || { Kvm=kvm; }
    echo "Using $Kvm"
    VncProxy=10.150.150.239   # external IP address of the VNC proxy
    #
    # Nova configuration
    /usr/bin/cp /etc/nova/nova.conf{,.$(date +%s).bak}
    #egrep -v '^$|#' /etc/nova/nova.conf
    echo '#
    [DEFAULT]
    instances_path='$VHD'
    enabled_apis = osapi_compute,metadata
    transport_url = rabbit://openstack:openstack@node1
    my_ip = 192.168.1.121
    use_neutron = True
    firewall_driver = nova.virt.firewall.NoopFirewallDriver
    [api_database]
    connection = mysql+pymysql://nova:nova@node1/nova_api
    [database]
    connection = mysql+pymysql://nova:nova@node1/nova
    [api]
    auth_strategy = keystone
    [keystone_authtoken]
    auth_uri = http://node1:5000
    auth_url = http://node1:35357
    memcached_servers = node1:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = nova
    password = nova
    [vnc]
    enabled = true
    vncserver_listen = 0.0.0.0
    vncserver_proxyclient_address = $my_ip
    novncproxy_base_url = http://'$VncProxy':6080/vnc_auto.html
    [glance]
    api_servers = http://node1:9292
    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp
    [placement]
    os_region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://node1:35357/v3
    username = placement
    password = placement
    [libvirt]
    virt_type = '$Kvm'
    #'>/etc/nova/nova.conf
    #sed -i 's#node1:6080#10.150.150.239:6080#' /etc/nova/nova.conf
    # Start the compute node services
    systemctl enable libvirtd.service openstack-nova-compute.service
    systemctl restart libvirtd.service openstack-nova-compute.service
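The script above picks kvm when /proc/cpuinfo lists the vmx (Intel) or svm (AMD) CPU flag and falls back to qemu otherwise. A minimal sketch of the same check as a reusable function follows. `detect_virt_type` is a hypothetical name, and the optional file argument is an assumption added so the logic can be exercised against sample files instead of the real /proc/cpuinfo.

```shell
# Hypothetical helper: choose the libvirt virt_type from CPU flags.
# Argument: path to a cpuinfo-format file (defaults to /proc/cpuinfo).
detect_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    if grep -Eq '(vmx|svm)' "$cpuinfo" 2>/dev/null; then
        echo kvm     # hardware virtualization flag present
    else
        echo qemu    # no flag (or unreadable file): plain emulation
    fi
}

# Demo with two made-up flag lines.
cpu_with=$(mktemp)
cpu_without=$(mktemp)
printf 'flags\t\t: fpu vme vmx sse2\n' >"$cpu_with"
printf 'flags\t\t: fpu vme sse2\n' >"$cpu_without"
echo "with vmx flag:    $(detect_virt_type "$cpu_with")"
echo "without VT flags: $(detect_virt_type "$cpu_without")"
```

In the configuration script the result would be assigned as Kvm=$(detect_virt_type) before nova.conf is written.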

- Testing the OpenStack Nodes

The controller node and compute node are now configured. Test from the compute node with the following command:

    openstack host list

- Configuring Neutron on the Controller Node

Register the Neutron service:

    source admin-openstack.sh
    # Create the Neutron service entity and API endpoints
    openstack service create --name neutron --description "OpenStack Networking" network
    openstack endpoint create --region RegionOne network public http://node1:9696
    openstack endpoint create --region RegionOne network internal http://node1:9696
    openstack endpoint create --region RegionOne network admin http://node1:9696

Configure Neutron with the following commands:

    # Back up the Neutron configuration
    cp /etc/neutron/neutron.conf{,.bak2}
    cp /etc/neutron/plugins/ml2/ml2_conf.ini{,.bak}
    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
    cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}
    cp /etc/neutron/dhcp_agent.ini{,.bak}
    cp /etc/neutron/metadata_agent.ini{,.bak}
    cp /etc/neutron/l3_agent.ini{,.bak}
    # Configure Neutron
    echo '
    [DEFAULT]
    nova_metadata_ip = node1
    metadata_proxy_shared_secret = metadata
    #'>/etc/neutron/metadata_agent.ini
    #
    echo '
    #
    [neutron]
    url = http://node1:9696
    auth_url = http://node1:35357
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = neutron
    service_metadata_proxy = true
    metadata_proxy_shared_secret = metadata
    #'>>/etc/nova/nova.conf
    #
    echo '#
    [ml2]
    tenant_network_types =
    type_drivers = vlan,flat
    mechanism_drivers = linuxbridge
    extension_drivers = port_security
    [ml2_type_flat]
    flat_networks = provider
    [securitygroup]
    enable_ipset = True
    #vlan
    # [ml2_type_vlan]
    # network_vlan_ranges = provider:3001:4000
    #'>/etc/neutron/plugins/ml2/ml2_conf.ini
    # eth0 is the name of the network interface
    echo '#
    [linux_bridge]
    physical_interface_mappings = provider:eth0
    [vxlan]
    enable_vxlan = false
    #local_ip = 10.150.150.239
    #l2_population = true
    [agent]
    prevent_arp_spoofing = True
    [securitygroup]
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
    enable_security_group = True
    #'>/etc/neutron/plugins/ml2/linuxbridge_agent.ini
    #
    echo '#
    [DEFAULT]
    interface_driver = linuxbridge
    dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
    enable_isolated_metadata = true
    #'>/etc/neutron/dhcp_agent.ini
    #
    echo '
    [DEFAULT]
    core_plugin = ml2
    service_plugins =
    #service_plugins = trunk
    #service_plugins = router
    allow_overlapping_ips = true
    transport_url = rabbit://openstack:openstack@node1
    auth_strategy = keystone
    notify_nova_on_port_status_changes = true
    notify_nova_on_port_data_changes = true
    [keystone_authtoken]
    auth_uri = http://node1:5000
    auth_url = http://node1:35357
    memcached_servers = node1:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = neutron
    [nova]
    auth_url = http://node1:35357
    auth_plugin = password
    project_domain_id = default
    user_domain_id = default
    region_name = RegionOne
    project_name = service
    username = nova
    password = nova
    [database]
    connection = mysql+pymysql://neutron:neutron@node1:3306/neutron
    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp
    #'>/etc/neutron/neutron.conf
    #
    echo '
    [DEFAULT]
    interface_driver = linuxbridge
    #'>/etc/neutron/l3_agent.ini
    # Sync the database
    su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron
    # Check the data
    mysql -h node1 -u neutron -pneutron -e "use neutron;show tables;"
    # Restart the related service
    systemctl restart openstack-nova-api.service
    # Start Neutron
    systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service
    systemctl restart neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

List the network agents. The controller node should show three agent IDs and each compute node one:

    openstack network agent list

- Configuring Neutron on the Compute Node

Configure Neutron by running the following commands:

    # Configuration
    cp /etc/neutron/neutron.conf{,.bak}
    echo '#
    [DEFAULT]
    auth_strategy = keystone
    transport_url = rabbit://openstack:openstack@node1
    [keystone_authtoken]
    auth_uri = http://node1:5000
    auth_url = http://node1:35357
    memcached_servers = node1:11211
    auth_plugin = password
    project_domain_id = default
    user_domain_id = default
    project_name = service
    username = neutron
    password = neutron
    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp
    #'>/etc/neutron/neutron.conf
    #
    echo '
    #
    [neutron]
    url = http://node1:9696
    auth_url = http://node1:35357
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = neutron
    #'>>/etc/nova/nova.conf
    #
    cp /etc/neutron/plugins/ml2/linuxbridge_agent.ini{,.bak}
    echo '
    [linux_bridge]
    physical_interface_mappings = provider:eth0
    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
    [vxlan]
    enable_vxlan = false
    # local_ip = 192.168.1.121
    # l2_population = true
    #'>/etc/neutron/plugins/ml2/linuxbridge_agent.ini
    # Restart the related service
    systemctl restart openstack-nova-compute.service
    # Start Neutron
    systemctl enable neutron-linuxbridge-agent.service
    systemctl start neutron-linuxbridge-agent.service

- Creating the Bridge Network on the Controller Node

Check the controller and compute node information, as shown in the figure:

    openstack network agent list

Then create the key pair, availability zones, flavor, security rules, network, and a test instance:

    source admin-openstack.sh
    # List the nodes
    nova service-list
    openstack catalog list
    nova-status upgrade check
    #openstack compute service list
    openstack network agent list
    ###------------------------
    # Create a key pair
    ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa
    nova keypair-add --pub-key ~/.ssh/id_dsa.pub mykey
    nova keypair-list   # list the key pairs
    # Create availability zones (a zone is a set of compute nodes)
    nova aggregate-create Jfedu01 Jfedu01
    nova aggregate-create Jfedu02 Jfedu02
    nova aggregate-list
    # Add hosts
    nova aggregate-add-host Jfedu01 node2
    #nova aggregate-add-host Jfedu02 node3
    # Create an instance flavor
    openstack flavor create --id 1 --vcpus 1 --ram 512 --disk 10 m1.nano
    # Security group rules
    openstack security group rule create --proto icmp default
    openstack security group rule create --proto tcp --dst-port 22 'default'
    openstack security group rule create --proto tcp --dst-port 80 'default'
    ###------------------------
    # Create the virtual network
    openstack network create --share --external \
      --provider-physical-network provider \
      --provider-network-type flat flat-net
    # Create the subnet
    openstack subnet create --network flat-net \
      --allocation-pool start=192.168.1.150,end=192.168.1.200 \
      --dns-nameserver 8.8.8.8 --gateway 192.168.1.254 --subnet-range 192.168.1.0/24 \
      sub_flat-net
    # ip netns
    # systemctl restart network
    #
    # On a host with a single IP, creating the network may interrupt
    # connectivity; wait a moment or reboot
    # List the networks
    openstack network list
    # neutron net-list
    # neutron subnet-list
    # Available flavors
    openstack flavor list
    # Available images
    openstack image list
    # Available security groups
    openstack security group list
    # Available networks
    openstack network list
    # m1.nano is the flavor, net-id takes the network ID, Jfedu01 is the availability zone
    # Create the virtual machine kvm01-cirros
    NET=`openstack network list|grep 'flat-net'|awk '{print $2}'`
    echo $NET
    nova boot --flavor m1.nano --image cirros \
      --nic net-id=$NET \
      --security-group default --key-name mykey \
      --availability-zone Jfedu01 \
      kvm01-cirros
    # Check the server list
    openstack server list
    # URL of the instance's virtual console
    openstack console url show kvm01-cirros
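The NET lookup above filters the table with grep 'flat-net', which matches any row containing that string; a second network named, say, flat-net2 would yield two IDs. A minimal sketch of an exact-name lookup follows. `network_id_by_name` is a hypothetical helper that reads the table on stdin, and the sample table with its IDs is made up for the demonstration.

```shell
# Hypothetical helper: read `openstack network list` table output on stdin
# and print the ID of the network whose Name column equals $1 exactly.
network_id_by_name() {
    awk -F'|' -v name="$1" '
        {
            # Trim the padding around the ID ($2) and Name ($3) columns.
            gsub(/^[ \t]+|[ \t]+$/, "", $2)
            gsub(/^[ \t]+|[ \t]+$/, "", $3)
        }
        $3 == name { print $2; exit }
    '
}

# Made-up sample output with two similarly named networks.
sample_table='+--------------------------------------+-----------+---------+
| ID                                   | Name      | Subnets |
+--------------------------------------+-----------+---------+
| 11111111-aaaa-4aaa-8aaa-111111111111 | flat-net2 | s2      |
| 22222222-bbbb-4bbb-8bbb-222222222222 | flat-net  | s1      |
+--------------------------------------+-----------+---------+'

printf '%s\n' "$sample_table" | network_id_by_name flat-net
```

On the controller this would be used as NET=$(openstack network list | network_id_by_name flat-net).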

When a virtual machine is created, the Neutron dhcp-agent assigns its IP address automatically. You can also specify a fixed IP at creation time.
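As a sketch of the fixed-IP variant: nova boot accepts a v4-fixed-ip key inside --nic next to net-id. The helper below only prints the command (a dry run) so it can be reviewed first. `boot_cmd_with_fixed_ip` is a hypothetical name, the network ID and address in the demo are placeholders, and the other options repeat the values used earlier in this tutorial.

```shell
# Hypothetical helper: print (do not run) a nova boot command that pins the
# instance to a fixed IPv4 address.
# Arguments: instance name, network ID, fixed IPv4 address.
boot_cmd_with_fixed_ip() {
    printf 'nova boot --flavor m1.nano --image cirros --nic net-id=%s,v4-fixed-ip=%s --security-group default --key-name mykey --availability-zone Jfedu01 %s\n' \
        "$2" "$3" "$1"
}

# Placeholder network ID and an address from the tutorial's subnet.
boot_cmd_with_fixed_ip kvm02-cirros 22222222-bbbb-4bbb-8bbb-222222222222 192.168.1.160
```

The address has to belong to the subnet created earlier (192.168.1.0/24) and must not already be in use.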

- Configuring the Dashboard on the Controller Node

The Dashboard web interface lets you manage OpenStack. Deploy it as follows:

    # OpenStack dashboard configuration
    cp /etc/openstack-dashboard/local_settings{,.bak}
    #egrep -v '#|^$' /etc/openstack-dashboard/local_settings   # show the default configuration
    Setfiles=/etc/openstack-dashboard/local_settings
    sed -i 's#_member_#user#g' $Setfiles
    sed -i 's#OPENSTACK_HOST = "127.0.0.1"#OPENSTACK_HOST = "node1"#' $Setfiles
    # Allow access from all hosts
    sed -i "/ALLOWED_HOSTS/cALLOWED_HOSTS = ['*', ]" $Setfiles
    # Uncomment the memcached cache settings and comment out the default cache
    # (plain -i here: "-in" would be read as -i with the backup suffix "n")
    sed -i '153,158s/#//' $Setfiles
    sed -i '160,164s/.*/#&/' $Setfiles
    sed -i 's#UTC#Asia/Shanghai#g' $Setfiles
    sed -i 's#%s:5000/v2.0#%s:5000/v3#' $Setfiles
    sed -i '/ULTIDOMAIN_SUPPORT/cOPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True' $Setfiles
    sed -i "s@^#OPENSTACK_KEYSTONE_DEFAULT@OPENSTACK_KEYSTONE_DEFAULT@" $Setfiles
    echo '
    #set
    OPENSTACK_API_VERSIONS = {
        "identity": 3,
        "image": 2,
        "volume": 2,
    }
    #'>>$Setfiles
    #
    systemctl restart httpd
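Because these sed commands edit local_settings in place, it can help to rehearse the substitutions on a scratch copy first. The sketch below applies four of them to a four-line stand-in file and shows the difference. The stand-in content and the function name `apply_dashboard_edits` are assumptions for illustration, not the real file shipped with the package.

```shell
# Hypothetical helper: apply a subset of the substitutions to FILE,
# keeping the untouched original as FILE.orig.
apply_dashboard_edits() {
    sed -i.orig \
        -e 's#OPENSTACK_HOST = "127.0.0.1"#OPENSTACK_HOST = "node1"#' \
        -e 's#%s:5000/v2.0#%s:5000/v3#' \
        -e 's#_member_#user#g' \
        -e 's#UTC#Asia/Shanghai#g' \
        "$1"
}

# Stand-in for a few lines of local_settings (made up for this demo).
settings=$(mktemp)
cat >"$settings" <<'EOF'
OPENSTACK_HOST = "127.0.0.1"
OPENSTACK_KEYSTONE_URL = "http://%s:5000/v2.0" % OPENSTACK_HOST
OPENSTACK_KEYSTONE_DEFAULT_ROLE = "_member_"
TIME_ZONE = "UTC"
EOF

apply_dashboard_edits "$settings"
# diff exits non-zero when the files differ, which is expected here.
diff "$settings.orig" "$settings" || true
```

The line-number-based edits (153 to 158 and 160 to 164) depend on the exact file shipped with the package, so they are best checked against the real file before running them.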

- OpenStack GUI

Open http://10.150.150.239/dashboard/, as shown in the figure:

Log in as demo, or as admin (the administrator).
